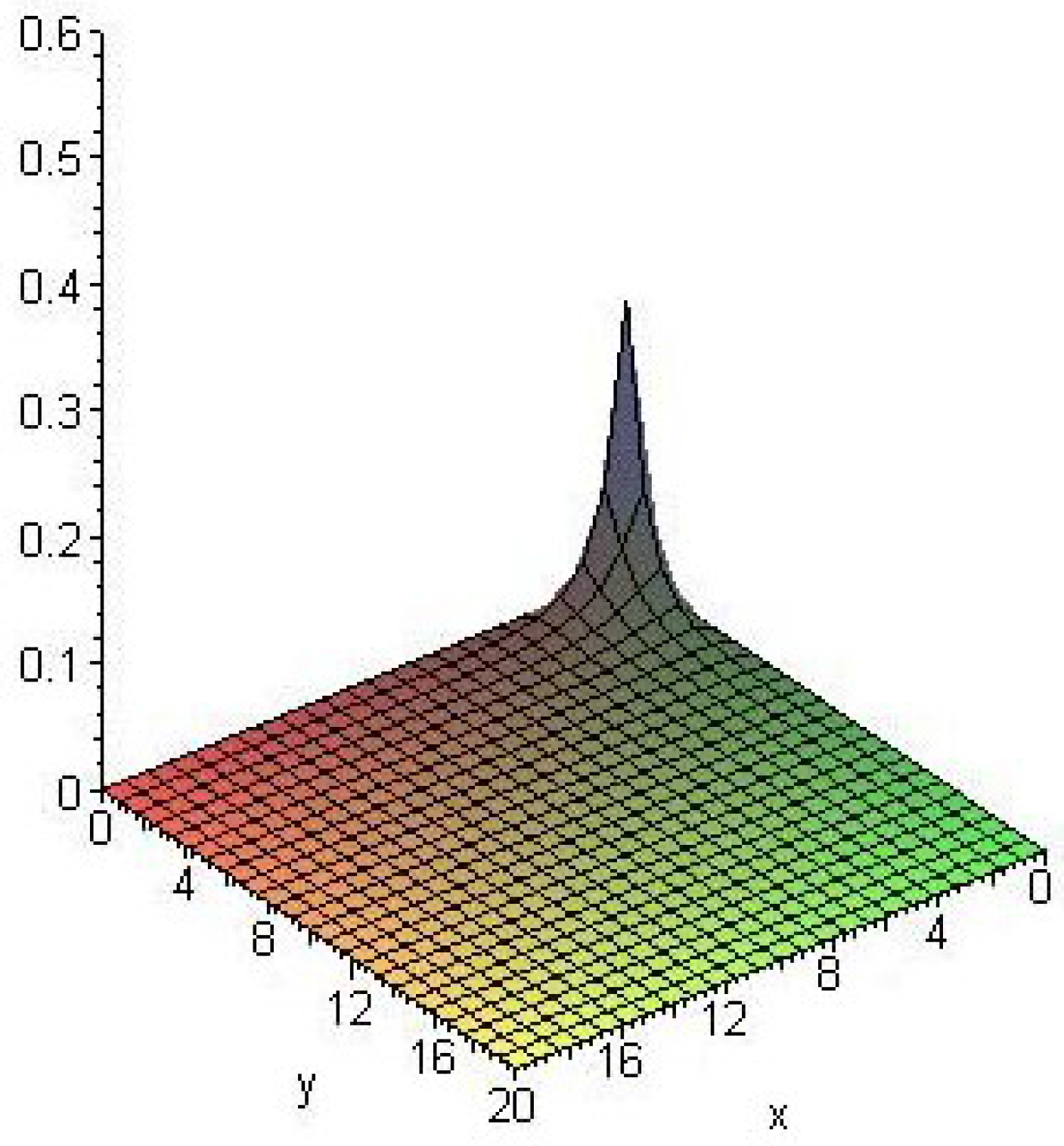

Multivariate Extended Gamma Distribution

Abstract

1. Introduction

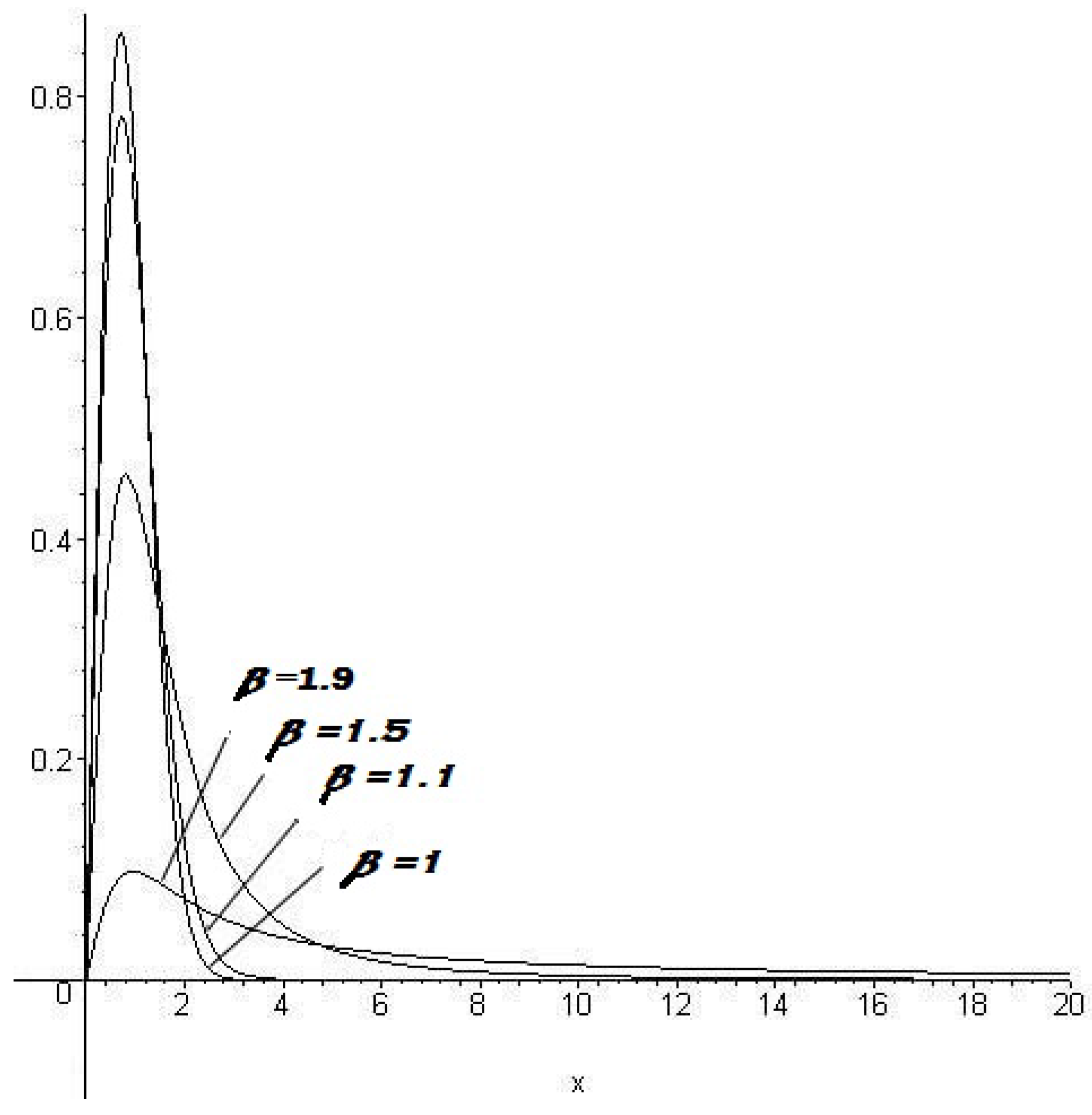

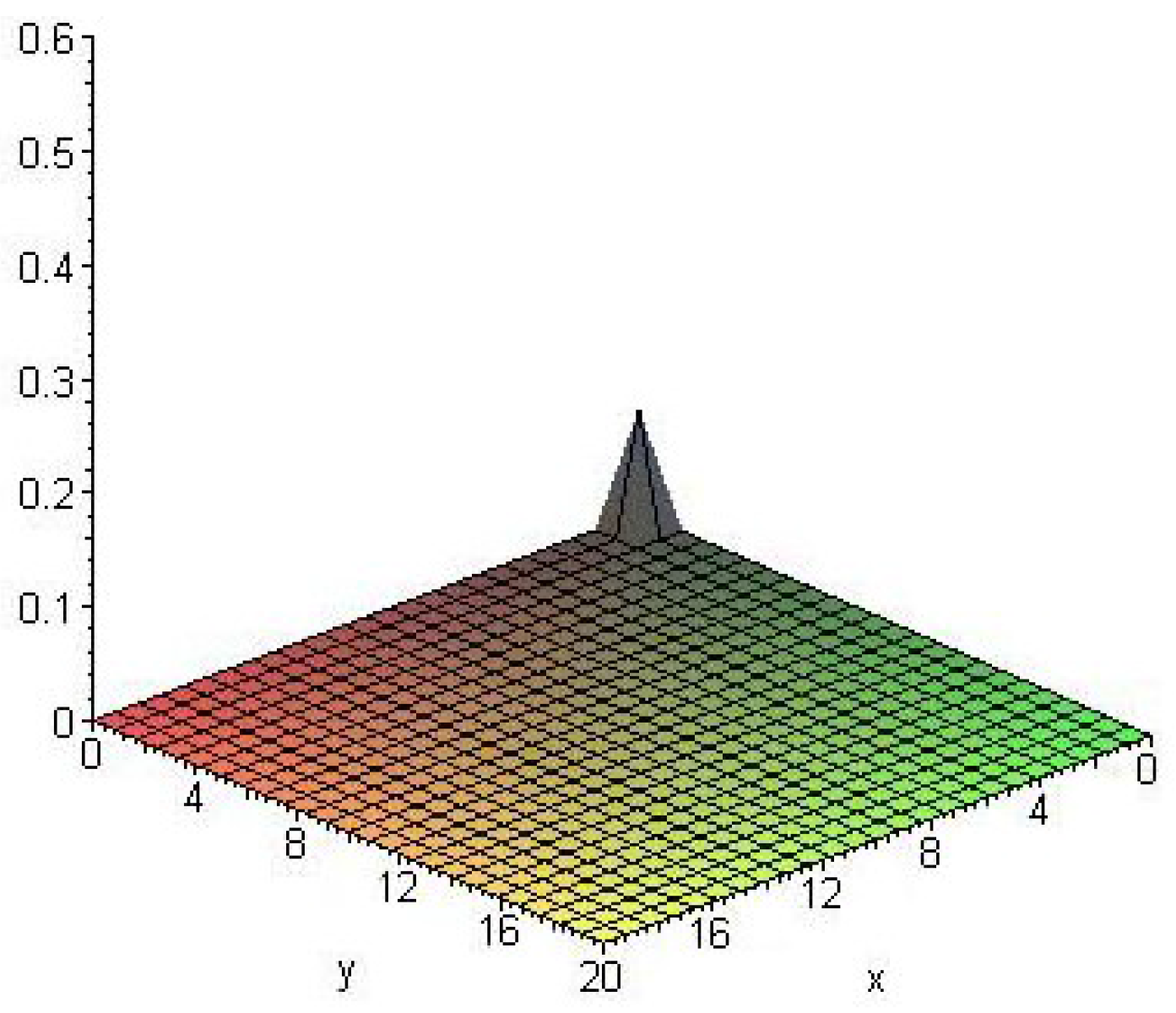

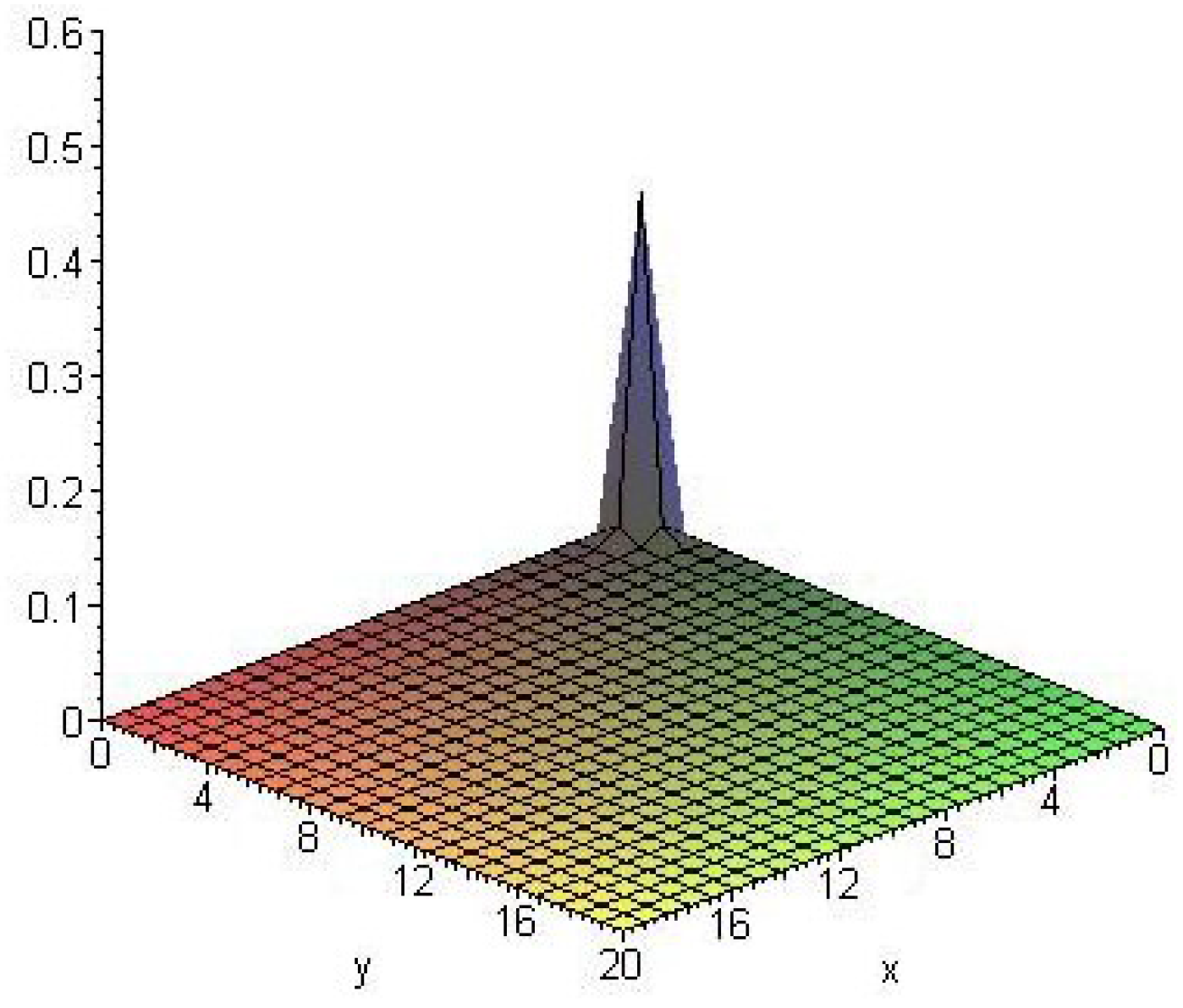

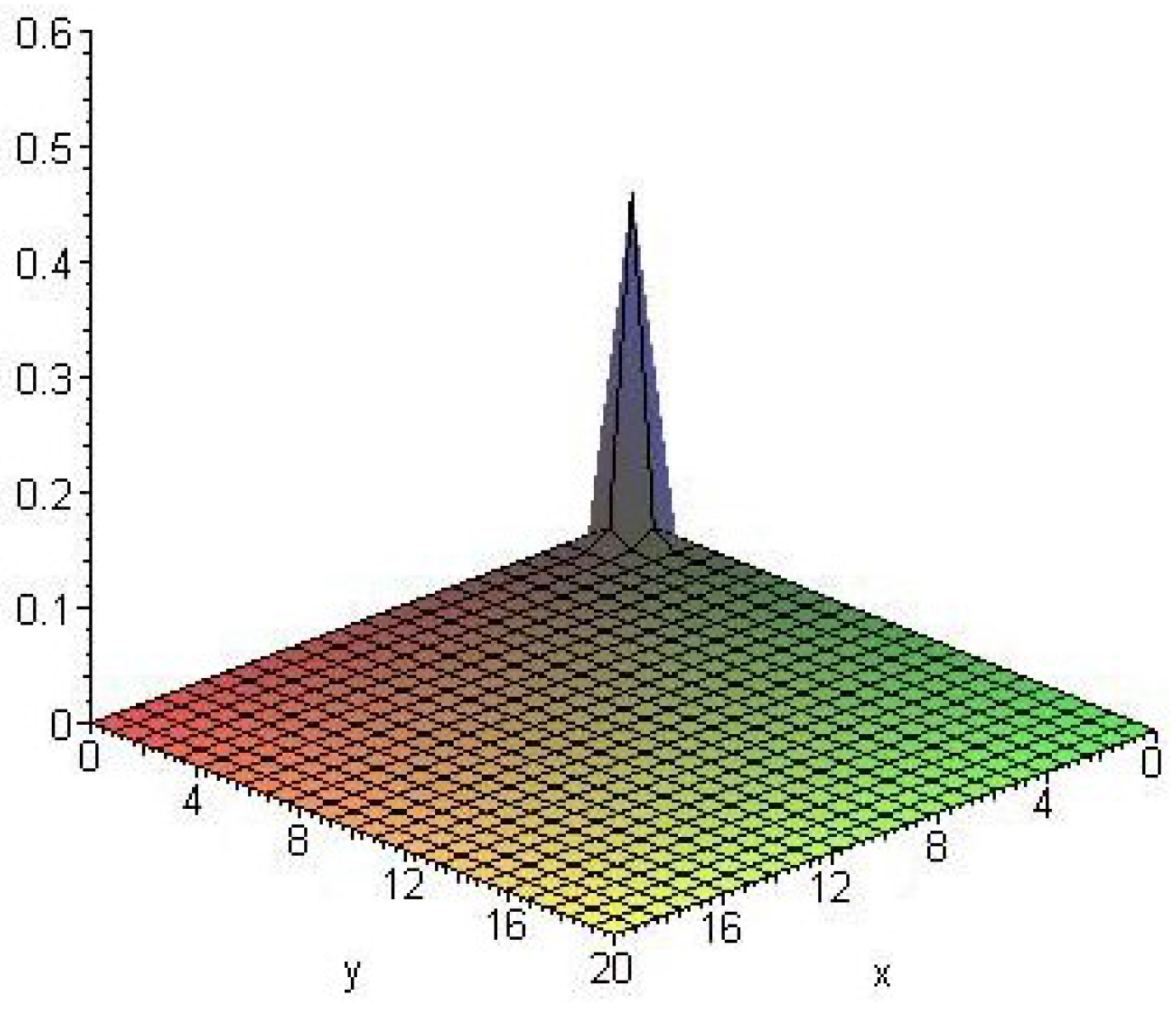

2. Multivariate Extended Gamma

Special Cases and Limiting Cases

- When → 1, (3) reduces to the joint density of independently distributed generalized gamma variables. This includes multivariate analogues of the gamma, exponential, chi-square, Weibull, Maxwell-Boltzmann, Rayleigh, and related models.

- If , (3) is identical to the type-2 beta density.
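The first limiting case can be illustrated numerically. The sketch below is not the paper's equation (3), which is not reproduced in this text; it assumes the standard univariate generalized gamma form f(x) = c x^(γ-1) exp(-a x^δ) for x > 0, with c = δ a^(γ/δ) / Γ(γ/δ). The names `gen_gamma_pdf`, `joint_pdf`, and `special_cases`, and the parameter values, are hypothetical and chosen only for illustration.

```python
import math

def gen_gamma_pdf(x, gamma_, a, delta):
    """Assumed generalized gamma density c * x**(gamma_-1) * exp(-a * x**delta)."""
    if x <= 0:
        return 0.0
    # Normalizing constant: delta * a**(gamma_/delta) / Gamma(gamma_/delta)
    c = delta * a ** (gamma_ / delta) / math.gamma(gamma_ / delta)
    return c * x ** (gamma_ - 1) * math.exp(-a * x ** delta)

def joint_pdf(xs, params):
    """Joint density of independent components: the product of the marginals."""
    return math.prod(gen_gamma_pdf(x, *p) for x, p in zip(xs, params))

# Hypothetical parameter choices (gamma_, a, delta) that recover familiar models.
special_cases = {
    "exponential (rate 2)": (1.0, 2.0, 1.0),
    "gamma (shape 3, rate 2)": (3.0, 2.0, 1.0),
    "chi-square (4 d.f.)": (2.0, 0.5, 1.0),
    "Weibull (shape 1.5, scale 1)": (1.5, 1.0, 1.5),
    "Rayleigh (sigma 1)": (2.0, 0.5, 2.0),
    "Maxwell-Boltzmann (scale 1)": (3.0, 0.5, 2.0),
}

for name, p in special_cases.items():
    print(f"{name}: f(1.0) = {gen_gamma_pdf(1.0, *p):.6f}")
```

Under this assumed parametrization, δ = 1 gives the gamma family (including the exponential and chi-square), γ = δ gives the Weibull, and δ = 2 with γ = 2 or γ = 3 gives the Rayleigh and Maxwell-Boltzmann densities respectively. A multivariate analogue with independent components is then obtained by passing one parameter triple per component to `joint_pdf`.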

3. Marginal Density

Normalizing Constant

4. Joint Product Moment and Structural Representations

4.1. Variance-Covariance Matrix

4.2. Normalizing Constant

5. Regression Type Models and Limiting Approaches

Best Predictor

6. Multivariate Extended Gamma When

7. Conclusions

Acknowledgments

Conflicts of Interest

© 2017 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Joseph, D.P. Multivariate Extended Gamma Distribution. Axioms 2017, 6, 11. https://doi.org/10.3390/axioms6020011
