Multi-Attribute Decision-Making Method Based on Neutrosophic Soft Rough Information

Abstract

1. Introduction

2. Construction of Soft Rough Neutrosophic Sets

- (i)

- (ii)

- (iii)

- (iv)

- (v)

- (vi)

- (vii)

- (viii)

- (i)

- By definition of SRNS, we have where Hence, .

- (ii)

- (iii)

- It can be easily proved by Definition 1.

- (iv)

- Let be an NS on M, then

- Let be an NS on then

3. Construction of Neutrosophic Soft Rough Sets

- (i)

- (ii)

- (iii)

- (iv)

- (v)

- (vi)

- (vii)

- (viii)

- (i)

- (ii)

- Now, consider

- (iii)

- It can be easily proven by Definition 3.

- (iv)

- (vii)

- (i)

- ,

- (ii)

- (i)

- By Definition 3 and definition of difference of two NSs, for all

- (ii)

- By Definition 3 and definition of difference of two NSs, for all

- (i)

- (ii)

- (i)

- Since ∅ is a null NS on M, Now, Since is full NS on , for all , and this implies Thus,

- (ii)

- Since is an NSAS and is a serial neutrosophic soft relation, then, for each , there exists , such that and . The UNSRA and LNSRA operators , and of an NS C can be defined as: Clearly, for all Thus, . ☐

- Let be an NS on M, then

- Let be an NS on then

- Hence, is NSRR.

- (i)

- (ii)

- (i)

- (ii)

4. Application

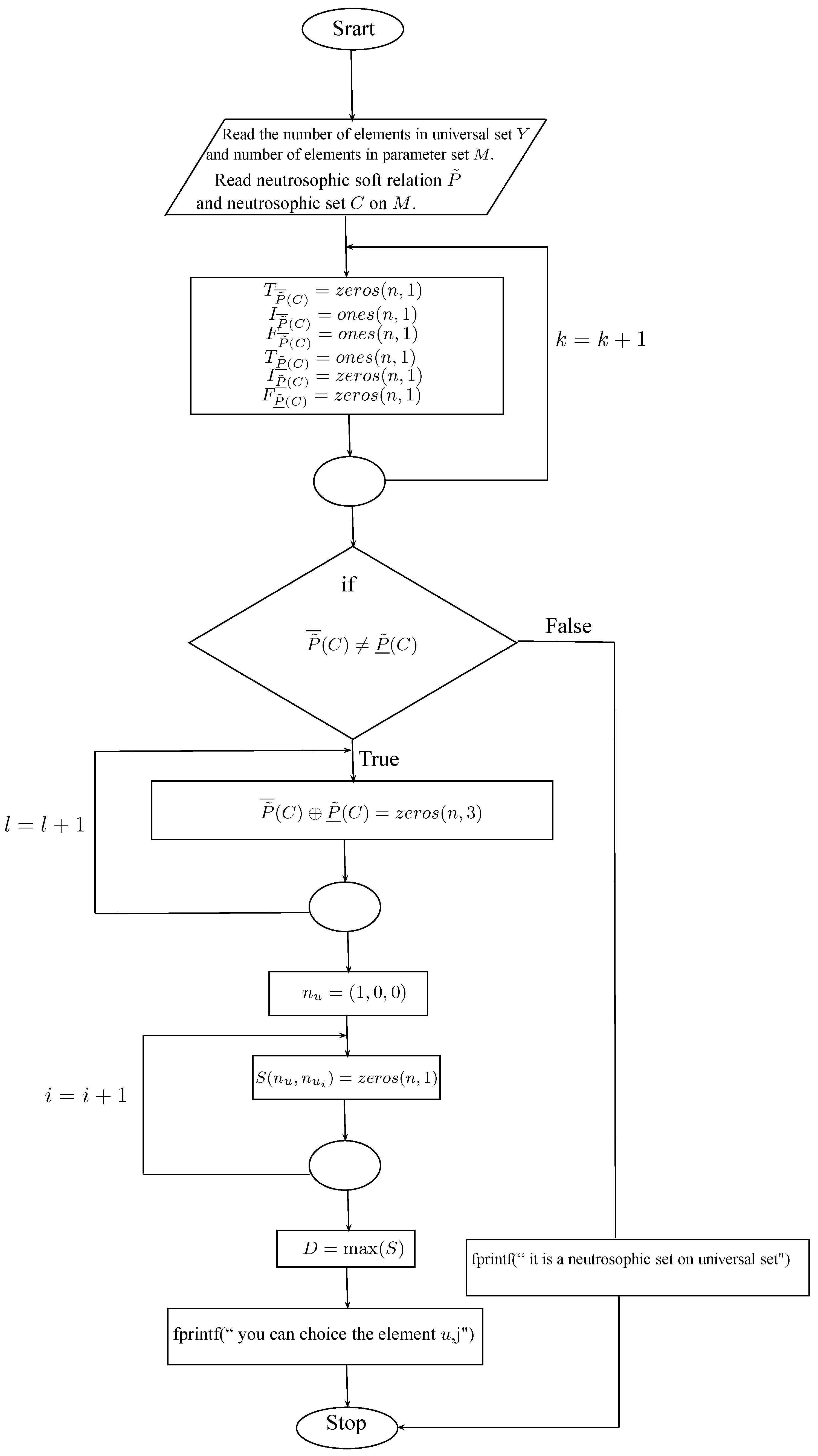

| Algorithm 1: Algorithm for selection of the most suitable objects |

| 1. Begin |

| 2. Input the number of elements in universal set . |

| 3. Input the number of elements in parameter set . |

| 4. Input a neutrosophic soft relation from Y to M. |

| 5. Input an NS C on M. |

| 6. if |

| 7. fprintf('size of neutrosophic soft relation from universal set to parameter set is not correct, it should be of order x') |

| 8. error('Dimension of neutrosophic soft relation on vertex set is not correct.') |

| 9. end |

| 10. if |

| 11. fprintf('size of NS on parameter set is not correct, it should be of order %dx3;', m) |

| 12. error('Dimension of NS on parameter set is not correct.') |

| 13. end |

| 14. ; |

| 15. ; |

| 16. ; |

| 17. ; |

| 18. ; |

| 19. ; |

| 20. if |

| 21. if |

| 22. if |

| 23. if |

| 24. for |

| 25. for |

| 26. j=3*k-2; |

| 27. ; |

| 28. ; |

| 29. ; |

| 30. ; |

| 31. ; |

| 32. ; |

| 33. end |

| 34. end |

| 35. |

| 36. |

| 37. if |

| 38. fprintf('it is a neutrosophic set on universal set.') |

| 39. else |

| 40. fprintf('it is an NSRS on universal set.') |

| 41. ; |

| 42. for i=1:n |

| 43. ; |

| 44. ; |

| 45. ; |

| 46. end |

| 47. ; |

| 48. ; |

| 49. for i=1:n |

| 50. ; |

| 51. end |

| 52. |

| 53. D=max(S); |

| 54. l=0; |

| 55. m=zeros(n,1); |

| 56. D2=zeros(n,1); |

| 57. for j=1:n |

| 58. if S(j,1)==D |

| 59. l=l+1; |

| 60. D2(j,1)=S(j,1); |

| 61. m(j)=j; |

| 62. end |

| 63. end |

| 64. for |

| 65. if |

| 66. fprintf('you can choose the element ', j) |

| 67. end |

| 68. end |

| 69. end |

| 70. end |

| 71. end |

| 72. end |

| 73. end |

| 74. End |
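The flow of Algorithm 1 (validate dimensions, compute both approximations, combine them, score each object, report every object that attains the maximum) can be sketched in Python. This is a minimal sketch under stated assumptions, not the authors' implementation: the approximation formulas, the ring sum, and the score T + I − F are commonly used forms assumed here because the corresponding formulas are not legible in the listing, and all function names are hypothetical. The relation is stored as an n × m grid of (T, I, F) triples rather than the n × 3m matrix suggested by the index `j=3*k-2`.

```python
from typing import List, Tuple

Triple = Tuple[float, float, float]  # (truth, indeterminacy, falsity)


def upper_approx(R: List[List[Triple]], C: List[Triple]) -> List[Triple]:
    """Assumed upper approximation: T = max-min, I and F = min-max over parameters."""
    out = []
    for row in R:
        t = max(min(r[0], c[0]) for r, c in zip(row, C))
        i = min(max(r[1], c[1]) for r, c in zip(row, C))
        f = min(max(r[2], c[2]) for r, c in zip(row, C))
        out.append((t, i, f))
    return out


def lower_approx(R: List[List[Triple]], C: List[Triple]) -> List[Triple]:
    """Assumed lower approximation (dual form of the upper approximation)."""
    out = []
    for row in R:
        t = min(max(r[2], c[0]) for r, c in zip(row, C))
        i = max(min(1.0 - r[1], c[1]) for r, c in zip(row, C))
        f = max(min(r[0], c[2]) for r, c in zip(row, C))
        out.append((t, i, f))
    return out


def ring_sum(A: List[Triple], B: List[Triple]) -> List[Triple]:
    """Assumed ring sum: probabilistic sum on T, product on I and F."""
    return [(a[0] + b[0] - a[0] * b[0], a[1] * b[1], a[2] * b[2])
            for a, b in zip(A, B)]


def select_objects(R: List[List[Triple]], C: List[Triple]):
    """Return (scores, winners); winners are 0-based indices with maximal score."""
    m = len(C)
    if any(len(row) != m for row in R):
        raise ValueError("each row of the relation must have one triple per parameter")
    if any(len(c) != 3 for c in C):
        raise ValueError("the NS on the parameter set must be of order m x 3")
    combined = ring_sum(upper_approx(R, C), lower_approx(R, C))
    scores = [t + i - f for t, i, f in combined]  # assumed score function
    best = max(scores)
    # Exact equality mirrors the S(j,1)==D test in the listing.
    winners = [j for j, s in enumerate(scores) if s == best]
    return scores, winners


# Toy data (hypothetical): two objects, two parameters.
R = [[(0.9, 0.1, 0.1), (0.2, 0.5, 0.8)],
     [(0.3, 0.4, 0.6), (0.4, 0.5, 0.5)]]
C = [(0.8, 0.2, 0.1), (0.5, 0.5, 0.5)]
scores, winners = select_objects(R, C)
print(winners)
```

On this toy data the first object (index 0) has the larger score, so it is the only one reported. Returning a list of winners rather than a single index keeps ties visible, which matches the loop in steps 57 to 68 that can print more than one element.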

5. Conclusions and Future Directions

Author Contributions

Conflicts of Interest

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Akram, M.; Shahzadi, S.; Smarandache, F. Multi-Attribute Decision-Making Method Based on Neutrosophic Soft Rough Information. Axioms 2018, 7, 19. https://doi.org/10.3390/axioms7010019