Approximate Methods for the Generation of Dark Matter Halo Catalogs in the Age of Precision Cosmology

Abstract

1. Introduction

2. Foundations of Approximate Methods

2.1. Perturbation Theories

2.2. The Need for Smoothing

2.3. Press and Schechter and Its Extensions

2.4. Ellipsoidal Collapse

2.5. Halo Bias

3. Approximate Methods in the 1990s

3.1. Lognormal Model

3.2. Adhesion Theory

3.3. Extensions of ZA

3.4. Truncated Zeldovich Approximation and Beyond

3.5. Reconstruction of Initial Conditions

4. The Age of Precision Cosmology

4.1. Recent Development of the Foundations

4.2. The Universal Mass Function

4.3. Lagrangian Methods to Produce Mock Catalogs

4.3.1. PINOCCHIO

4.3.2. PTHALOS

4.3.3. Methods Based on the Particle-Mesh Scheme

4.4. Bias-Based Methods

4.4.1. PATCHY

4.4.2. EZmocks

4.4.3. Halogen

5. Comparison of Methods

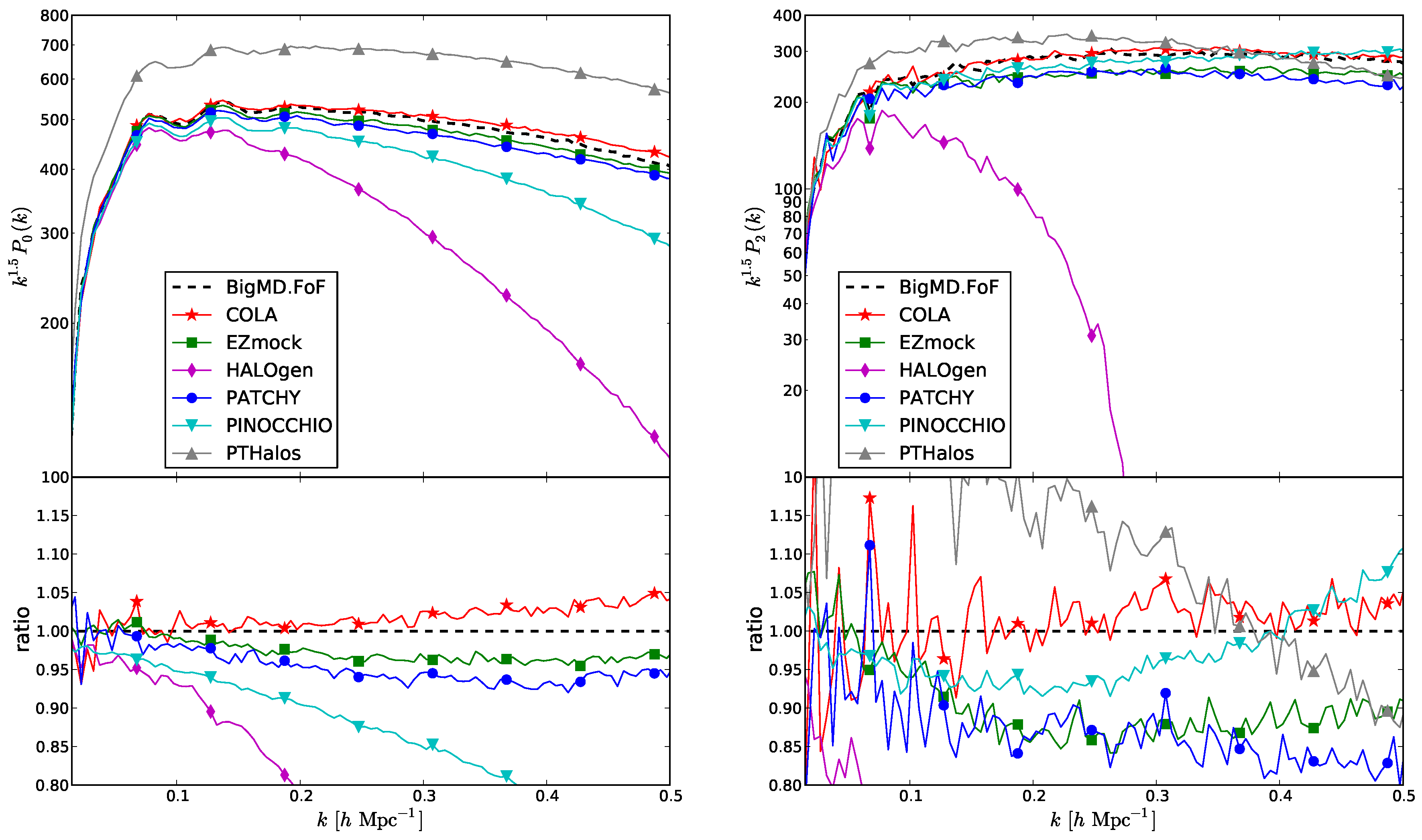

5.1. The nIFTy Comparison Project

5.2. Generating Displacement Fields of Halos without Worrying about Particle Assignments to Halos

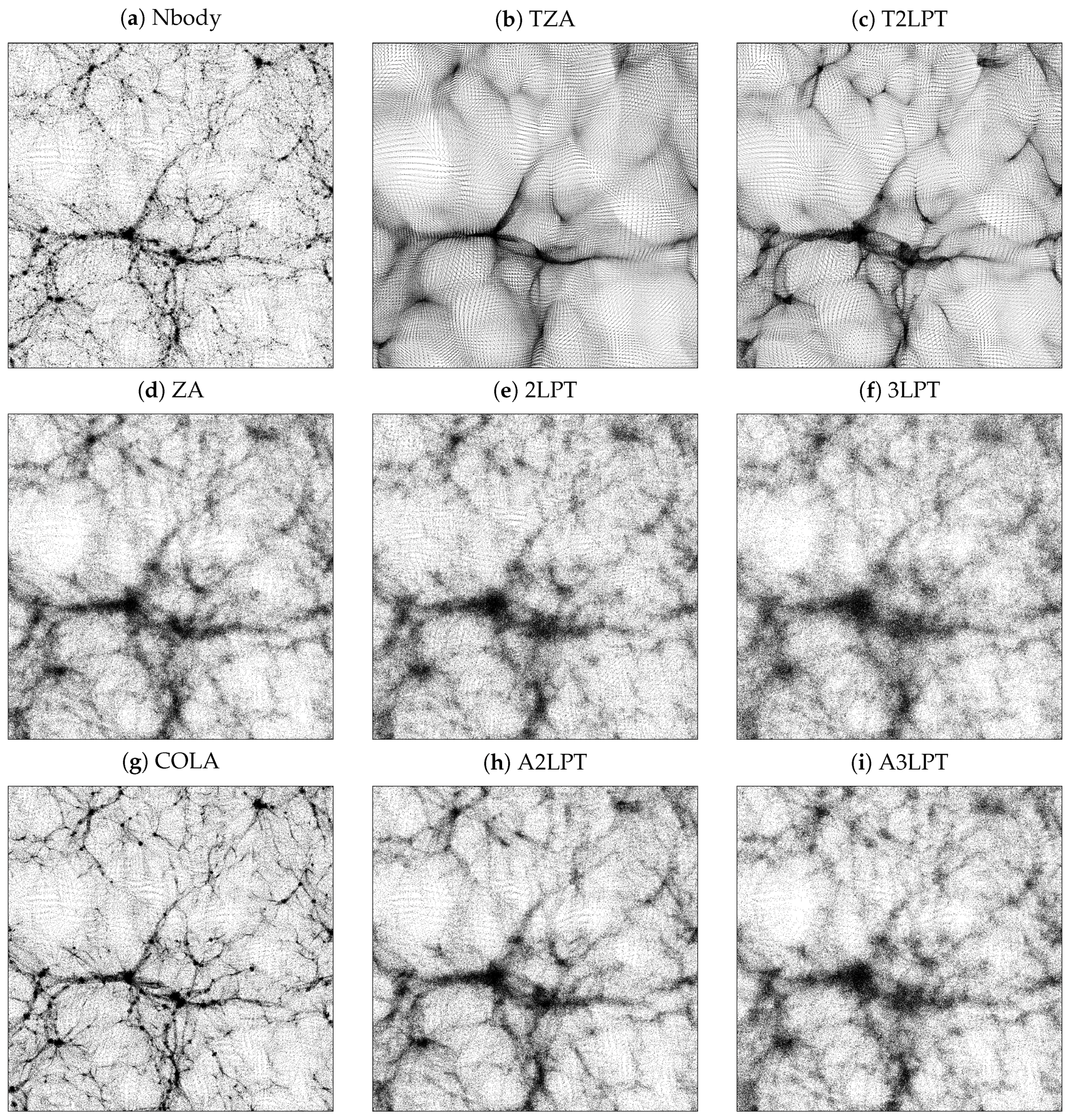

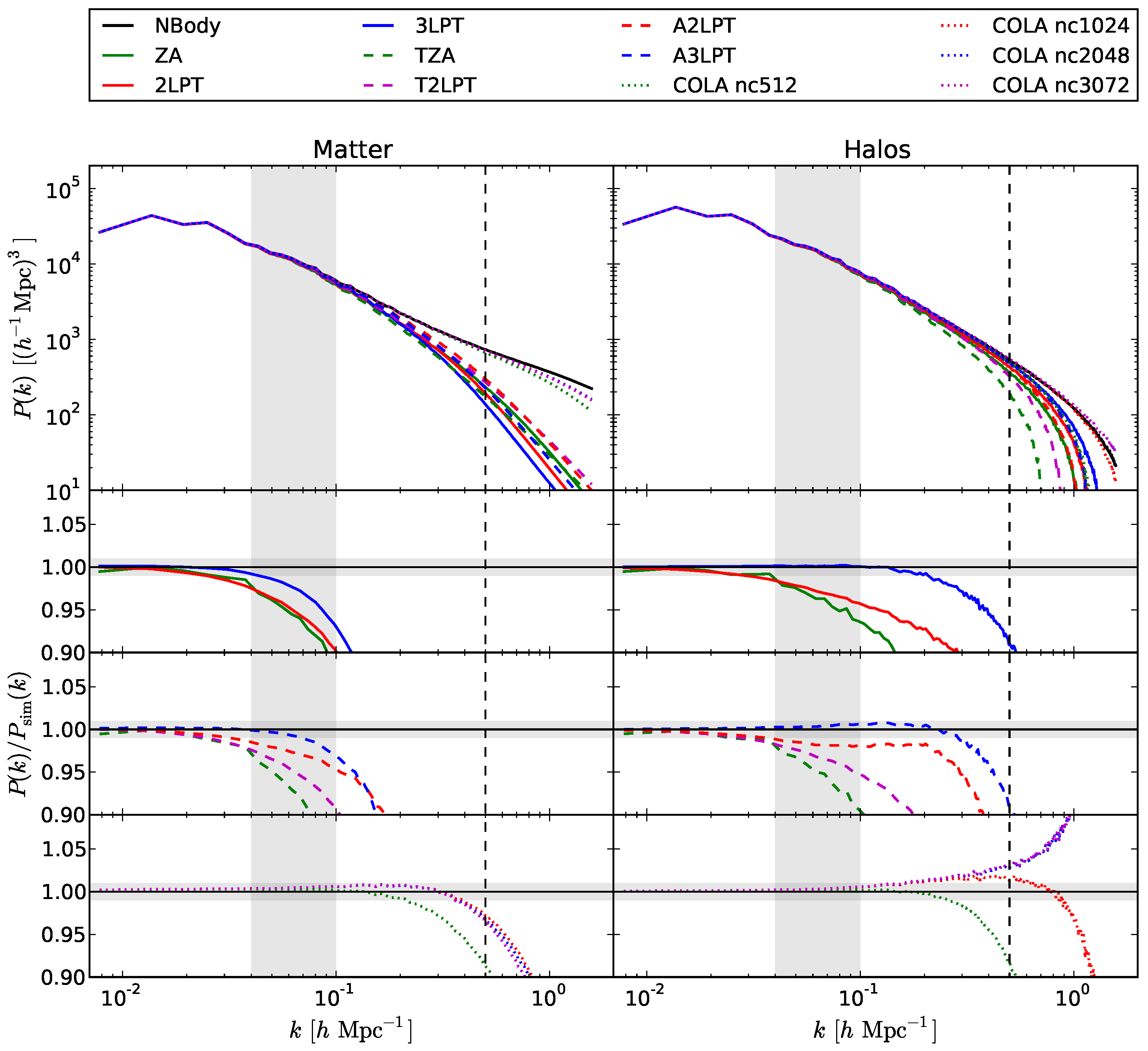

- (1) For the matter power spectrum, the wavenumber at which power drops below a % level does not exceed 0.2 h Mpc⁻¹ for any model except COLA, which can reach 0.8 h Mpc⁻¹ for all meshes but the coarsest one. Moreover, going from lower to higher orders provides only a modest improvement, and this is true for all LPT flavours. This quantifies the trend, noticed in Figure 2, that higher LPT orders may give better convergence before orbit crossing, but they add to the spreading of the multi-stream region.

- (2) All approximate methods are able to recover the halo power spectrum at a higher wavenumber than the matter power spectrum. For the straight LPT series, power drops below a % level at k ∼0.13, and h Mpc⁻¹ for the three orders, showing that the improvement achieved going to higher orders is significant.

- (3) No advantage is found by adopting the truncated LPT scheme. As noticed in Section 3.4, in the original paper of Coles et al. [102] the advantage of the truncated scheme was found to be good for a power spectrum of positive or flat slope ≥ but marginal for a slope , that is shallower than the slope of a ΛCDM spectrum at k ∼0.5.

- (4) Augmentation contributes to the improvement of the LPT series. This trend is more apparent for the halo power spectrum, in which case the improvement in going from 2LPT to A2LPT is of the same order as the improvement in going from 2LPT to 3LPT. A3LPT is only marginally better than 3LPT.

- (5) COLA gives percent accurate results for the matter power spectrum up to k ∼0.5 h Mpc⁻¹, and is 10% accurate up to h Mpc⁻¹. Very good results are obtained for halos, where some excess power at the 2% level is found at , but 10% accuracy is achieved up to h Mpc⁻¹. Results are stable with the grid dimension, with the exception of the coarsest grid that gives poor performance; for halos, finer grids give some excess of power, the grid gives the best performance. Clearly, finer grids are needed by COLA to allow proper identification of halos, but not to achieve better accuracy in their clustering.

6. Concluding Remarks

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| BAO | baryonic acoustic oscillations |
| CDM | cold dark matter |
| DM | dark matter |
| EPT | Eulerian Perturbation Theory |
| FFT | fast Fourier transform |
| FoF | friends-of-friends |
| LPT | Lagrangian Perturbation Theory |
| 2LPT | 2nd-order LPT |
| 3LPT | 3rd-order LPT |
| ALPT | augmented LPT |
| PDF | probability distribution function |
| SAM | semi-analytic model |
| ZA | Zeldovich approximation |

References

- Coles, P.; Lucchin, F. Cosmology: The Origin and Evolution of Cosmic Structure, 2nd ed.; Wiley: Hoboken, NJ, USA, 2002; p. 512.

- Mo, H.; van den Bosch, F.C.; White, S. Galaxy Formation and Evolution; Cambridge University Press: Cambridge, UK, 2010.

- Planck Collaboration; Ade, P.A.R.; Aghanim, N.; Alves, M.I.R.; Armitage-Caplan, C.; Arnaud, M.; Ashdown, M.; Atrio-Barandela, F.; Aumont, J.; Aussel, H.; et al. Planck 2013 results. I. Overview of products and scientific results. Astron. Astrophys. 2014, 571, A1.

- Buchert, T. Toward physical cosmology: Focus on inhomogeneous geometry and its non-perturbative effects. Class. Quantum Gravity 2011, 28, 164007.

- Springel, V. The cosmological simulation code GADGET-2. Mon. Not. R. Astron. Soc. 2005, 364, 1105–1134.

- Hockney, R.W.; Eastwood, J.W. Computer Simulation Using Particles; McGraw-Hill: New York, NY, USA, 1981.

- Springel, V.; White, S.D.M.; Jenkins, A.; Frenk, C.S.; Yoshida, N.; Gao, L.; Navarro, J.; Thacker, R.; Croton, D.; Helly, J.; et al. Simulations of the formation, evolution and clustering of galaxies and quasars. Nature 2005, 435, 629–636.

- Alimi, J.M.; Bouillot, V.; Rasera, Y.; Reverdy, V.; Corasaniti, P.S.; Balmes, I.; Requena, S.; Delaruelle, X.; Richet, J.N. DEUS Full Observable ΛCDM Universe Simulation: The Numerical Challenge. 2012; arXiv:1206.2838.

- Angulo, R.E.; Springel, V.; White, S.D.M.; Jenkins, A.; Baugh, C.M.; Frenk, C.S. Scaling relations for galaxy clusters in the Millennium-XXL simulation. Mon. Not. R. Astron. Soc. 2012, 426, 2046–2062.

- Watson, W.A.; Iliev, I.T.; Diego, J.M.; Gottlöber, S.; Knebe, A.; Martínez-González, E.; Yepes, G. Statistics of extreme objects in the Juropa Hubble Volume simulation. Mon. Not. R. Astron. Soc. 2014, 437, 3776–3786.

- Heitmann, K.; Frontiere, N.; Sewell, C.; Habib, S.; Pope, A.; Finkel, H.; Rizzi, S.; Insley, J.; Bhattacharya, S. The Q continuum simulation: Harnessing the power of GPU accelerated supercomputers. Astrophys. J. Suppl. 2015, 219, 34.

- Skillman, S.W.; Warren, M.S.; Turk, M.J.; Wechsler, R.H.; Holz, D.E.; Sutter, P.M. Dark Sky Simulations: Early Data Release. 2014; arXiv:1407.2600.

- Kim, J.; Park, C.; L’Huillier, B.; Hong, S.E. Horizon Run 4 simulation: Coupled evolution of galaxies and large-scale structures of the Universe. J. Korean Astron. Soc. 2015, 48, 213–228.

- Potter, D.; Stadel, J.; Teyssier, R. PKDGRAV3: Beyond Trillion Particle Cosmological Simulations for the Next Era of Galaxy Surveys. 2016; arXiv:1609.08621.

- Heitmann, K.; White, M.; Wagner, C.; Habib, S.; Higdon, D. The Coyote Universe. I. Precision determination of the nonlinear matter power spectrum. Astrophys. J. 2010, 715, 104–121.

- Reed, D.S.; Smith, R.E.; Potter, D.; Schneider, A.; Stadel, J.; Moore, B. Towards an accurate mass function for precision cosmology. Mon. Not. R. Astron. Soc. 2013, 431, 1866–1882.

- Schneider, A.; Teyssier, R.; Potter, D.; Stadel, J.; Onions, J.; Reed, D.S.; Smith, R.E.; Springel, V.; Pearce, F.R.; Scoccimarro, R. Matter Power Spectrum and the Challenge of Percent Accuracy. 2015; arXiv:1503.05920.

- Lynden-Bell, D. Statistical mechanics of violent relaxation in stellar systems. Mon. Not. R. Astron. Soc. 1967, 136, 101–121.

- Saslaw, W.C. Gravitational Physics of Stellar and Galactic Systems; Cambridge University Press: Cambridge, UK, 1985.

- Navarro, J.F.; Frenk, C.S.; White, S.D.M. The structure of cold dark matter halos. Astrophys. J. 1996, 462, 563–575.

- Hinshaw, G.; Larson, D.; Komatsu, E.; Spergel, D.N.; Bennett, C.L.; Dunkley, J.; Nolta, M.R.; Halpern, M.; Hill, R.S.; Odegard, N.; et al. Nine-year Wilkinson Microwave Anisotropy Probe (WMAP) observations: Cosmological parameter results. Astrophys. J. Suppl. 2013, 208, 19.

- Planck Collaboration; Ade, P.A.R.; Aghanim, N.; Arnaud, M.; Ashdown, M.; Aumont, J.; Baccigalupi, C.; Banday, A.J.; Barreiro, R.B.; Bartlett, J.G.; et al. Planck 2015 Results. XIII. Cosmological Parameters. 2015; arXiv:1502.01589.

- Frieman, J. Dark Energy Survey Collaboration. In Proceedings of the 221st Meeting of the American Astronomical Society, Long Beach, CA, USA, 6–10 January 2013.

- Dawson, K.S.; Kneib, J.P.; Percival, W.J.; Alam, S.; Albareti, F.D.; Anderson, S.F.; Armengaud, E.; Aubourg, É.; Bailey, S.; Bautista, J.E.; et al. The SDSS-IV extended baryon oscillation spectroscopic survey: Overview and early data. Astron. J. 2016, 151, 44.

- Levi, M.; Bebek, C.; Beers, T.; Blum, R.; Cahn, R.; Eisenstein, D.; Flaugher, B.; Honscheid, K.; Kron, R.; Lahav, O.; et al. The DESI Experiment, a Whitepaper for Snowmass 2013. 2013; arXiv:1308.0847.

- LSST Science Collaboration; Abell, P.A.; Allison, J.; Anderson, S.F.; Andrew, J.R.; Angel, J.R.P.; Armus, L.; Arnett, D.; Asztalos, S.J.; Axelrod, T.S.; et al. LSST Science Book. Version 2.0. 2009; arXiv:0912.0201.

- Laureijs, R.; Amiaux, J.; Arduini, S.; Auguères, J.; Brinchmann, J.; Cole, R.; Cropper, M.; Dabin, C.; Duvet, L.; Ealet, A.; et al. Euclid Definition Study Report. 2011; arXiv:1110.3193.

- Green, J.; Schechter, P.; Baltay, C.; Bean, R.; Bennett, D.; Brown, R.; Conselice, C.; Donahue, M.; Fan, X.; Gaudi, B.S.; et al. Wide-Field InfraRed Survey Telescope (WFIRST) Final Report. 2012; arXiv:1208.4012.

- Kitaura, F.S.; Enßlin, T.A. Bayesian reconstruction of the cosmological large-scale structure: Methodology, inverse algorithms and numerical optimization. Mon. Not. R. Astron. Soc. 2008, 389, 497–544.

- Jasche, J.; Kitaura, F.S.; Wandelt, B.D.; Enßlin, T.A. Bayesian power-spectrum inference for large-scale structure data. Mon. Not. R. Astron. Soc. 2010, 406, 60–85.

- Baugh, C.M. A primer on hierarchical galaxy formation: the semi-analytical approach. Rep. Prog. Phys. 2006, 69, 3101–3156.

- Benson, A.J. Galaxy formation theory. Phys. Rep. 2010, 495, 33–86.

- Somerville, R.S.; Davé, R. Physical models of galaxy formation in a cosmological framework. Ann. Rev. Astron. Astrophys. 2015, 53, 51–113.

- Peebles, P.J.E. The Large-Scale Structure of the Universe; Princeton University Press: Princeton, NJ, USA, 1980.

- Buchert, T.; Ehlers, J. Averaging inhomogeneous Newtonian cosmologies. Astron. Astrophys. 1997, 320, 1–7.

- Bernardeau, F.; Colombi, S.; Gaztañaga, E.; Scoccimarro, R. Large-scale structure of the Universe and cosmological perturbation theory. Phys. Rep. 2002, 367, 1–248.

- Carlson, J.; White, M.; Padmanabhan, N. Critical look at cosmological perturbation theory techniques. Phys. Rev. D 2009, 80, 043531.

- Zel’dovich, Y.B. Gravitational instability: An approximate theory for large density perturbations. Astron. Astrophys. 1970, 5, 84–89.

- Shandarin, S.F.; Zeldovich, Y.B. The large-scale structure of the universe: Turbulence, intermittency, structures in a self-gravitating medium. Rev. Mod. Phys. 1989, 61, 185–220.

- Buchert, T. A class of solutions in Newtonian cosmology and the pancake theory. Astron. Astrophys. 1989, 223, 9–24.

- Moutarde, F.; Alimi, J.M.; Bouchet, F.R.; Pellat, R.; Ramani, A. Precollapse scale invariance in gravitational instability. Astrophys. J. 1991, 382, 377–381.

- Bouchet, F.R.; Juszkiewicz, R.; Colombi, S.; Pellat, R. Weakly nonlinear gravitational instability for arbitrary Omega. Astrophys. J. Lett. 1992, 394, L5–L8.

- Bouchet, F.R.; Colombi, S.; Hivon, E.; Juszkiewicz, R. Perturbative Lagrangian approach to gravitational instability. Astron. Astrophys. 1995, 296, 575.

- Buchert, T. Lagrangian theory of gravitational instability of Friedman-Lemaitre cosmologies and the ‘Zel’dovich approximation’. Mon. Not. R. Astron. Soc. 1992, 254, 729–737.

- Buchert, T.; Ehlers, J. Lagrangian theory of gravitational instability of Friedman-Lemaitre cosmologies—Second-order approach: An improved model for non-linear clustering. Mon. Not. R. Astron. Soc. 1993, 264, 375–387.

- Buchert, T. Lagrangian theory of gravitational instability of Friedman-Lemaitre cosmologies—A generic third-order model for nonlinear clustering. Mon. Not. R. Astron. Soc. 1994, 267, 811–820.

- Buchert, T. Lagrangian perturbation theory—A key-model for large-scale structure. Astron. Astrophys. 1993, 267, L51–L54.

- Catelan, P. Lagrangian dynamics in non-flat universes and non-linear gravitational evolution. Mon. Not. R. Astron. Soc. 1995, 276, 115–124.

- Buchert, T. Lagrangian perturbation approach to the formation of large-scale structure. In Dark Matter in the Universe; Bonometto, S., Primack, J.R., Provenzale, A., Eds.; IOS Press: Amsterdam, The Netherlands, 1996.

- Bouchet, F.R. Introductory overview of eulerian and Lagrangian perturbation theories. In Dark Matter in the Universe; Bonometto, S., Primack, J.R., Provenzale, A., Eds.; IOS Press: Amsterdam, The Netherlands, 1996; p. 565.

- Ehlers, J.; Buchert, T. Newtonian cosmology in Lagrangian formulation: Foundations and perturbation theory. Gen. Relativ. Gravit. 1997, 29, 733–764.

- Crocce, M.; Pueblas, S.; Scoccimarro, R. Transients from initial conditions in cosmological simulations. Mon. Not. R. Astron. Soc. 2006, 373, 369–381.

- Scoccimarro, R. Transients from initial conditions: A perturbative analysis. Mon. Not. R. Astron. Soc. 1998, 299, 1097–1118.

- Angulo, R.E.; Hahn, O.; Ludlow, A.; Bonoli, S. Earth-Mass Haloes and the Emergence of NFW Density Profiles. 2016; arXiv:1604.03131.

- Ishiyama, T. Hierarchical formation of dark matter halos and the free streaming scale. Astrophys. J. 2014, 788, 27.

- Press, W.H.; Schechter, P. Formation of galaxies and clusters of galaxies by self-similar gravitational condensation. Astrophys. J. 1974, 187, 425–438.

- Doroshkevich, A.G. Momentum and mass distribution functions for newly generated cosmic objects. Astrophysics 1967, 3, 175–188.

- Efstathiou, G.; Frenk, C.S.; White, S.D.M.; Davis, M. Gravitational clustering from scale-free initial conditions. Mon. Not. R. Astron. Soc. 1988, 235, 715–748.

- Epstein, R.I. Proto-galactic perturbations. Mon. Not. R. Astron. Soc. 1983, 205, 207–229.

- Peacock, J.A.; Heavens, A.F. Alternatives to the Press-Schechter cosmological mass function. Mon. Not. R. Astron. Soc. 1990, 243, 133–143.

- Bond, J.R.; Cole, S.; Efstathiou, G.; Kaiser, N. Excursion set mass functions for hierarchical Gaussian fluctuations. Astrophys. J. 1991, 379, 440–460.

- Monaco, P. The Cosmological Mass Function. Fundam. Cosm. Phys. 2008, 19, 157–317.

- Zentner, A.R. The excursion set theory of halo mass functions, halo clustering, and halo growth. Int. J. Mod. Phys. D 2007, 16, 763–816.

- Bower, R.G. The evolution of groups of galaxies in the Press-Schechter formalism. Mon. Not. R. Astron. Soc. 1991, 248, 332–352.

- Lacey, C.; Cole, S. Merger rates in hierarchical models of galaxy formation. Mon. Not. R. Astron. Soc. 1993, 262, 627–649.

- Sheth, R.K.; Lemson, G. The forest of merger history trees associated with the formation of dark matter haloes. Mon. Not. R. Astron. Soc. 1999, 305, 946–956.

- Somerville, R.S.; Kolatt, T.S. How to plant a merger tree. Mon. Not. R. Astron. Soc. 1999, 305, 1–14.

- Van den Bosch, F.C. The universal mass accretion history of cold dark matter haloes. Mon. Not. R. Astron. Soc. 2002, 331, 98–110.

- Sheth, R.K.; Tormen, G. Large-scale bias and the peak background split. Mon. Not. R. Astron. Soc. 1999, 308, 119–126.

- Sheth, R.K.; Tormen, G. An excursion set model of hierarchical clustering: Ellipsoidal collapse and the moving barrier. Mon. Not. R. Astron. Soc. 2002, 329, 61–75.

- Monaco, P. Dynamics in the Cosmological Mass Function (or, why does the Press & Schechter work?). In Observational Cosmology: The Development of Galaxy Systems; Giuricin, G., Mezzetti, M., Salucci, P., Eds.; Astronomical Society of the Pacific Conference Series; Astronomical Society of the Pacific: San Francisco, CA, USA, 1999; Volume 176, p. 186.

- Sheth, R.K. Random walks and the additive coagulation equation. Mon. Not. R. Astron. Soc. 1998, 295, 869–872.

- Adler, R.J. The Geometry of Random Fields; SIAM-Society for Industrial and Applied Mathematics: Philadelphia, PA, USA, 1981.

- Doroshkevich, A.G. The space structure of perturbations and the origin of rotation of galaxies in the theory of fluctuation. Astrofizika 1970, 6, 591–600.

- Peacock, J.A.; Heavens, A.F. The statistics of maxima in primordial density perturbations. Mon. Not. R. Astron. Soc. 1985, 217, 805–820.

- Bardeen, J.M.; Bond, J.R.; Kaiser, N.; Szalay, A.S. The statistics of peaks of Gaussian random fields. Astrophys. J. 1986, 304, 15–61.

- Kerscher, M.; Buchert, T.; Futamase, T. On the abundance of collapsed objects. Astrophys. J. Lett. 2001, 558, L79–L82.

- Bond, J.R.; Myers, S.T. The peak-patch picture of cosmic catalogs. I. Algorithms. Astrophys. J. Suppl. 1996, 103, 1.

- Nadkarni-Ghosh, S.; Singhal, A. Phase space dynamics of triaxial collapse: Joint density-velocity evolution. Mon. Not. R. Astron. Soc. 2016, 457, 2773–2789.

- Monaco, P. The mass function of cosmic structures with nonspherical collapse. Astrophys. J. 1995, 447, 23.

- Monaco, P. A Lagrangian dynamical theory for the mass function of cosmic structures—I. Dynamics. Mon. Not. R. Astron. Soc. 1997, 287, 753–770.

- Hahn, O.; Porciani, C.; Carollo, C.M.; Dekel, A. Properties of dark matter haloes in clusters, filaments, sheets and voids. Mon. Not. R. Astron. Soc. 2007, 375, 489–499.

- Cooray, A.; Sheth, R. Halo models of large scale structure. Phys. Rep. 2002, 372, 1–129.

- Kaiser, N. On the spatial correlations of Abell clusters. Astrophys. J. Lett. 1984, 284, L9–L12.

- Bagla, J.S. Evolution of galaxy clustering. Mon. Not. R. Astron. Soc. 1998, 299, 417–424.

- Mo, H.J.; White, S.D.M. An analytic model for the spatial clustering of dark matter haloes. Mon. Not. R. Astron. Soc. 1996, 282, 347–361.

- Sheth, R.K.; Mo, H.J.; Tormen, G. Ellipsoidal collapse and an improved model for the number and spatial distribution of dark matter haloes. Mon. Not. R. Astron. Soc. 2001, 323, 1–12.

- Jing, Y.P. Accurate fitting formula for the two-point correlation function of dark matter halos. Astrophys. J. Lett. 1998, 503, L9–L13.

- Fry, J.N.; Gaztanaga, E. Biasing and hierarchical statistics in large-scale structure. Astrophys. J. 1993, 413, 447–452.

- Chan, K.C.; Scoccimarro, R.; Sheth, R.K. Gravity and large-scale nonlocal bias. Phys. Rev. D 2012, 85, 083509.

- Sheth, R.K.; Chan, K.C.; Scoccimarro, R. Nonlocal Lagrangian bias. Phys. Rev. D 2013, 87, 083002.

- Dekel, A.; Lahav, O. Stochastic nonlinear galaxy biasing. Astrophys. J. 1999, 520, 24–34.

- Sahni, V.; Coles, P. Approximation methods for non-linear gravitational clustering. Phys. Rep. 1995, 262, 1–135.

- Coles, P.; Jones, B. A lognormal model for the cosmological mass distribution. Mon. Not. R. Astron. Soc. 1991, 248, 1–13.

- Kofman, L.A.; Shandarin, S.F. Theory of adhesion for the large-scale structure of the universe. Nature 1988, 334, 129–131.

- Gurbatov, S.N.; Saichev, A.I.; Shandarin, S.F. The large-scale structure of the universe in the frame of the model equation of non-linear diffusion. Mon. Not. R. Astron. Soc. 1989, 236, 385–402.

- Kofman, L.; Pogosyan, D.; Shandarin, S.F.; Melott, A.L. Coherent structures in the universe and the adhesion model. Astrophys. J. 1992, 393, 437–449.

- Buchert, T.; Dominguez, A. Modeling multi-stream flow in collisionless matter: Approximations for large-scale structure beyond shell-crossing. Astron. Astrophys. 1998, 335, 395–402.

- Menci, N. An Eulerian perturbation approach to large-scale structures: Extending the adhesion approximation. Mon. Not. R. Astron. Soc. 2002, 330, 907–919.

- Matarrese, S.; Lucchin, F.; Moscardini, L.; Saez, D. A frozen-flow approximation to the evolution of large-scale structures in the Universe. Mon. Not. R. Astron. Soc. 1992, 259, 437–452.

- Bagla, J.S.; Padmanabhan, T. Nonlinear evolution of density perturbations using the approximate constancy of the gravitational potential. Mon. Not. R. Astron. Soc. 1994, 266, 227.

- Coles, P.; Melott, A.L.; Shandarin, S.F. Testing approximations for non-linear gravitational clustering. Mon. Not. R. Astron. Soc. 1993, 260, 765–776.

- Melott, A.L.; Buchert, T.; Weiß, A.G. Testing higher-order Lagrangian perturbation theory against numerical simulations. 2: Hierarchical models. Astron. Astrophys. 1995, 294, 345–365.

- Melott, A.L. Comparison of dynamical approximation schemes for nonlinear gravitational clustering. Astrophys. J. Lett. 1994, 426, L19–L22.

- Borgani, S.; Coles, P.; Moscardini, L. Cluster correlations in the Zel’dovich approximation. Mon. Not. R. Astron. Soc. 1994, 271, 223.

- Nusser, A.; Dekel, A. Tracing large-scale fluctuations back in time. Astrophys. J. 1992, 391, 443–452.

- Peebles, P.J.E. Tracing galaxy orbits back in time. Astrophys. J. Lett. 1989, 344, L53–L56.

- Keselman, A.; Nusser, A. Performance Study of Lagrangian Methods: Reconstruction of Large Scale Peculiar Velocities and Baryonic Acoustic Oscillations. 2016; arXiv:1609.03576.

- Monaco, P.; Efstathiou, G. Reconstruction of cosmological initial conditions from galaxy redshift catalogues. Mon. Not. R. Astron. Soc. 1999, 308, 763–779.

- Mohayaee, R.; Mathis, H.; Colombi, S.; Silk, J. Reconstruction of primordial density fields. Mon. Not. R. Astron. Soc. 2006, 365, 939–959.

- Mohayaee, R.; Frisch, U.; Matarrese, S.; Sobolevskii, A. Back to the primordial Universe by a Monge-Ampère-Kantorovich optimization scheme. Astron. Astrophys. 2003, 406, 393–401.

- Hoffman, Y.; Ribak, E. Constrained realizations of Gaussian fields—A simple algorithm. Astrophys. J. Lett. 1991, 380, L5–L8.

- L’Huillier, B.; Park, C.; Kim, J. Effects of the initial conditions on cosmological N-body simulations. New Astron. 2014, 30, 79–88.

- Garrison, L.H.; Eisenstein, D.J.; Ferrer, D.; Metchnik, M.V.; Pinto, P.A. Improving Initial Conditions for Cosmological N-Body Simulations. 2016; arXiv:1605.02333.

- Pope, A.C.; Szapudi, I. Shrinkage estimation of the power spectrum covariance matrix. Mon. Not. R. Astron. Soc. 2008, 389, 766–774.

- Schneider, M.D.; Cole, S.; Frenk, C.S.; Szapudi, I. Fast generation of ensembles of cosmological n-body simulations via mode resampling. Astrophys. J. 2011, 737, 11.

- Percival, W.J.; Ross, A.J.; Sánchez, A.G.; Samushia, L.; Burden, A.; Crittenden, R.; Cuesta, A.J.; Magana, M.V.; Manera, M.; Beutler, F.; et al. The clustering of Galaxies in the SDSS-III Baryon Oscillation Spectroscopic Survey: Including covariance matrix errors. Mon. Not. R. Astron. Soc. 2014, 439, 2531–2541.

- Paz, D.J.; Sánchez, A.G. Improving the precision matrix for precision cosmology. Mon. Not. R. Astron. Soc. 2015, 454, 4326–4334.

- Kalus, B.; Percival, W.J.; Samushia, L. Cosmological parameter inference from galaxy clustering: The effect of the posterior distribution of the power spectrum. Mon. Not. R. Astron. Soc. 2016, 455, 2573–2581.

- Pearson, D.W.; Samushia, L. Estimating the power spectrum covariance matrix with fewer mock samples. Mon. Not. R. Astron. Soc. 2016, 457, 993–999.

- O’Connell, R.; Eisenstein, D.; Vargas, M.; Ho, S.; Padmanabhan, N. Large Covariance Matrices: Smooth Models from the 2-Point Correlation Function. 2015; arXiv:1510.01740.

- Padmanabhan, N.; White, M.; Zhou, H.H.; O’Connell, R. Estimating Sparse Precision Matrices. 2015; arXiv:1512.01241.

- Angulo, R.E.; Pontzen, A. Cosmological N-body simulations with suppressed variance. Mon. Not. R. Astron. Soc. 2016, 462, L1–L5.

- Schäfer, J.; Strimmer, K. A Shrinkage approach to large-scale covariance matrix estimation and implications for functional genomics. Stat. Appl. Genet. Mol. Biol. 2005, 4, 32.

- De la Torre, S.; Guzzo, L.; Peacock, J.A.; Branchini, E.; Iovino, A.; Granett, B.R.; Abbas, U.; Adami, C.; Arnouts, S.; Bel, J.; et al. The VIMOS Public Extragalactic Redshift Survey (VIPERS). Galaxy clustering and redshift-space distortions at z ∼ 0.8 in the first data release. Astron. Astrophys. 2013, 557, A54.

- White, M. The Zel’dovich approximation. Mon. Not. R. Astron. Soc. 2014, 439, 3630–3640.

- White, M. Reconstruction within the Zeldovich approximation. Mon. Not. R. Astron. Soc. 2015, 450, 3822–3828.

- Eisenstein, D.J.; Seo, H.J.; Sirko, E.; Spergel, D.N. Improving cosmological distance measurements by reconstruction of the baryon acoustic peak. Astrophys. J. 2007, 664, 675–679.

- Padmanabhan, N.; White, M.; Cohn, J.D. Reconstructing baryon oscillations: A Lagrangian theory perspective. Phys. Rev. D 2009, 79, 063523.

- Padmanabhan, N.; Xu, X.; Eisenstein, D.J.; Scalzo, R.; Cuesta, A.J.; Mehta, K.T.; Kazin, E. A 2 per cent distance to z = 0.35 by reconstructing baryon acoustic oscillations—I. Methods and application to the Sloan Digital Sky Survey. Mon. Not. R. Astron. Soc. 2012, 427, 2132–2145.

- Burden, A.; Percival, W.J.; Howlett, C. Reconstruction in Fourier space. Mon. Not. R. Astron. Soc. 2015, 453, 456–468.

- McCullagh, N.; Szalay, A.S. Nonlinear behavior of Baryon Acoustic Oscillations from the Zel’dovich approximation using a non-fourier perturbation approach. Astrophys. J. 2012, 752, 21.

- Rampf, C.; Buchert, T. Lagrangian perturbations and the matter bispectrum I: Fourth-order model for non-linear clustering. J. Cosmol. Astropart. Phys. 2012, 6, 021.

- Tatekawa, T. Fourth-order perturbative equations in Lagrangian perturbation theory for a cosmological dust fluid. Prog. Theor. Exp. Phys. 2013, 2013, 013E03.

- Leclercq, F.; Jasche, J.; Gil-Marín, H.; Wandelt, B. One-point remapping of Lagrangian perturbation theory in the mildly non-linear regime of cosmic structure formation. J. Cosmol. Astropart. Phys. 2013, 11, 048.

- Nadkarni-Ghosh, S.; Chernoff, D.F. Modelling non-linear evolution using Lagrangian perturbation theory re-expansions. Mon. Not. R. Astron. Soc. 2013, 431, 799–823. [Google Scholar] [CrossRef]

- Bartelmann, M. Trajectories of point particles in cosmology and the Zel’dovich approximation. Phys. Rev. D 2015, 91, 083524. [Google Scholar] [CrossRef]

- Tassev, S. Lagrangian or Eulerian; real or Fourier? Not all approaches to large-scale structure are created equal. J. Cosmol. Astropart. Phys. 2014, 6, 008. [Google Scholar] [CrossRef] [PubMed]

- Sugiyama, N.S. Using Lagrangian perturbation theory for precision cosmology. Astrophys. J. 2014, 788, 63. [Google Scholar] [CrossRef]

- Vlah, Z.; Seljak, U.; Baldauf, T. Lagrangian perturbation theory at one loop order: Successes, failures, and improvements. Phys. Rev. D 2015, 91, 023508. [Google Scholar] [CrossRef]

- Carrasco, J.J.M.; Hertzberg, M.P.; Senatore, L. The effective field theory of cosmological large scale structures. J. High Energy Phys. 2012, 9, 82. [Google Scholar] [CrossRef]

- Porto, R.A.; Senatore, L.; Zaldarriaga, M. The Lagrangian-space Effective Field Theory of large scale structures. J. Cosmol. Astropart. Phys. 2014, 5, 022. [Google Scholar] [CrossRef]

- Baldauf, T.; Schaan, E.; Zaldarriaga, M. On the reach of perturbative methods for dark matter density fields. J. Cosmol. Astropart. Phys. 2016, 3, 007. [Google Scholar] [CrossRef] [PubMed]

- Kitaura, F.S.; Heß, S. Cosmological structure formation with augmented Lagrangian perturbation theory. Mon. Not. R. Astron. Soc. 2013, 435, L78–L82. [Google Scholar] [CrossRef]

- Bernardeau, F. The nonlinear evolution of rare events. Astrophys. J. 1994, 427, 51–71. [Google Scholar] [CrossRef]

- Neyrinck, M.C. Quantifying distortions of the Lagrangian dark-matter mesh in cosmology. Mon. Not. R. Astron. Soc. 2013, 428, 141–153. [Google Scholar] [CrossRef]

- Chan, K.C. Helmholtz decomposition of the Lagrangian displacement. Phys. Rev. D 2014, 89, 083515. [Google Scholar] [CrossRef]

- Neyrinck, M.C. Truthing the stretch: Non-perturbative cosmological realizations with multiscale spherical collapse. Mon. Not. R. Astron. Soc. 2016, 455, L11–L15. [Google Scholar] [CrossRef]

- Cole, S.; Helly, J.; Frenk, C.S.; Parkinson, H. The statistical properties of Λ cold dark matter halo formation. Mon. Not. R. Astron. Soc. 2008, 383, 546–556. [Google Scholar] [CrossRef]

- Parkinson, H.; Cole, S.; Helly, J. Generating dark matter halo merger trees. Mon. Not. R. Astron. Soc. 2008, 383, 557–564. [Google Scholar] [CrossRef] [Green Version]

- Maggiore, M.; Riotto, A. The halo mass function from excursion set theory. I. Gaussian fluctuations with non-markovian dependence on the smoothing scale. Astrophys. J. 2010, 711, 907–927. [Google Scholar] [CrossRef]

- Maggiore, M.; Riotto, A. The Halo mass function from Excursion Set Theory. II. The Diffusing Barrier. Astrophys. J. 2010, 717, 515–525. [Google Scholar] [CrossRef]

- Farahi, A.; Benson, A.J. Excursion set theory for correlated random walks. Mon. Not. R. Astron. Soc. 2013, 433, 3428–3439.

- Manrique, A.; Salvador-Sole, E. The confluent system formalism. I. The mass function of objects in the peak model. Astrophys. J. 1995, 453, 6.

- Juan, E.; Salvador-Solé, E.; Domènech, G.; Manrique, A. Fixing a rigorous formalism for the accurate analytic derivation of halo properties. Mon. Not. R. Astron. Soc. 2014, 439, 719–724.

- Paranjape, A.; Sheth, R.K. Peaks theory and the excursion set approach. Mon. Not. R. Astron. Soc. 2012, 426, 2789–2796.

- Paranjape, A.; Sheth, R.K.; Desjacques, V. Excursion set peaks: A self-consistent model of dark halo abundances and clustering. Mon. Not. R. Astron. Soc. 2013, 431, 1503–1512.

- Musso, M.; Sheth, R.K. One step beyond: The excursion set approach with correlated steps. Mon. Not. R. Astron. Soc. 2012, 423, L102–L106.

- Paranjape, A.; Sefusatti, E.; Chan, K.C.; Desjacques, V.; Monaco, P.; Sheth, R.K. Bias deconstructed: Unravelling the scale dependence of halo bias using real-space measurements. Mon. Not. R. Astron. Soc. 2013, 436, 449–459.

- Ludlow, A.D.; Borzyszkowski, M.; Porciani, C. The formation of CDM haloes—I. Collapse thresholds and the ellipsoidal collapse model. Mon. Not. R. Astron. Soc. 2014, 445, 4110–4123.

- Borzyszkowski, M.; Ludlow, A.D.; Porciani, C. The formation of cold dark matter haloes—II. Collapse time and tides. Mon. Not. R. Astron. Soc. 2014, 445, 4124–4136.

- Baldauf, T.; Seljak, U.; Senatore, L.; Zaldarriaga, M. Galaxy bias and non-linear structure formation in general relativity. J. Cosmol. Astropart. Phys. 2011, 10, 031.

- McDonald, P. Clustering of dark matter tracers: Renormalizing the bias parameters. Phys. Rev. D 2006, 74, 103512.

- Gil-Marín, H.; Verde, L.; Noreña, J.; Cuesta, A.J.; Samushia, L.; Percival, W.J.; Wagner, C.; Manera, M.; Schneider, D.P. The power spectrum and bispectrum of SDSS DR11 BOSS galaxies—II. Cosmological interpretation. Mon. Not. R. Astron. Soc. 2015, 452, 1914–1921.

- Kitaura, F.S. The initial conditions of the Universe from constrained simulations. Mon. Not. R. Astron. Soc. 2013, 429, L84–L88.

- Kitaura, F.S.; Erdoğdu, P.; Nuza, S.E.; Khalatyan, A.; Angulo, R.E.; Hoffman, Y.; Gottlöber, S. Cosmic structure and dynamics of the local Universe. Mon. Not. R. Astron. Soc. 2012, 427, L35–L39.

- Wang, H.; Mo, H.J.; Yang, X.; Jing, Y.P.; Lin, W.P. ELUCID—Exploring the Local Universe with the Reconstructed Initial Density Field. I. Hamiltonian Markov Chain Monte Carlo Method with Particle Mesh Dynamics. Astrophys. J. 2014, 794, 94.

- Jasche, J.; Wandelt, B.D. Bayesian physical reconstruction of initial conditions from large-scale structure surveys. Mon. Not. R. Astron. Soc. 2013, 432, 894–913.

- Jasche, J.; Leclercq, F.; Wandelt, B.D. Past and present cosmic structure in the SDSS DR7 main sample. J. Cosmol. Astropart. Phys. 2015, 1, 036.

- Gottloeber, S.; Hoffman, Y.; Yepes, G. Constrained Local UniversE Simulations (CLUES). 2010; arXiv:1005.2687.

- Wandelt, B.D.; Larson, D.L.; Lakshminarayanan, A. Global, exact cosmic microwave background data analysis using Gibbs sampling. Phys. Rev. D 2004, 70, 083511.

- Despali, G.; Giocoli, C.; Angulo, R.E.; Tormen, G.; Sheth, R.K.; Baso, G.; Moscardini, L. The Universality of the Virial Halo Mass Function and Models for Non-Universality of Other Halo Definitions. 2015; arXiv:1507.05627.

- Jenkins, A.; Frenk, C.S.; White, S.D.M.; Colberg, J.M.; Cole, S.; Evrard, A.E.; Couchman, H.M.P.; Yoshida, N. The mass function of dark matter haloes. Mon. Not. R. Astron. Soc. 2001, 321, 372–384.

- Warren, M.S.; Abazajian, K.; Holz, D.E.; Teodoro, L. Precision determination of the mass function of dark matter halos. Astrophys. J. 2006, 646, 881–885.

- Reed, D.S.; Bower, R.; Frenk, C.S.; Jenkins, A.; Theuns, T. The halo mass function from the dark ages through the present day. Mon. Not. R. Astron. Soc. 2007, 374, 2–15.

- Tinker, J.; Kravtsov, A.V.; Klypin, A.; Abazajian, K.; Warren, M.; Yepes, G.; Gottlöber, S.; Holz, D.E. Toward a halo mass function for precision cosmology: The limits of universality. Astrophys. J. 2008, 688, 709–728.

- Crocce, M.; Fosalba, P.; Castander, F.J.; Gaztañaga, E. Simulating the Universe with MICE: The abundance of massive clusters. Mon. Not. R. Astron. Soc. 2010, 403, 1353–1367.

- Manera, M.; Sheth, R.K.; Scoccimarro, R. Large-scale bias and the inaccuracy of the peak-background split. Mon. Not. R. Astron. Soc. 2010, 402, 589–602.

- Bhattacharya, S.; Heitmann, K.; White, M.; Lukić, Z.; Wagner, C.; Habib, S. Mass function predictions beyond ΛCDM. Astrophys. J. 2011, 732, 122.

- Courtin, J.; Rasera, Y.; Alimi, J.M.; Corasaniti, P.S.; Boucher, V.; Füzfa, A. Imprints of dark energy on cosmic structure formation—II. Non-universality of the halo mass function. Mon. Not. R. Astron. Soc. 2011, 410, 1911–1931.

- Watson, W.A.; Iliev, I.T.; D’Aloisio, A.; Knebe, A.; Shapiro, P.R.; Yepes, G. The halo mass function through the cosmic ages. Mon. Not. R. Astron. Soc. 2013, 433, 1230–1245.

- Monaco, P.; Theuns, T.; Taffoni, G.; Governato, F.; Quinn, T.; Stadel, J. Predicting the number, spatial distribution, and merging history of dark matter halos. Astrophys. J. 2002, 564, 8–14.

- Monaco, P.; Theuns, T.; Taffoni, G. The pinocchio algorithm: Pinpointing orbit-crossing collapsed hierarchical objects in a linear density field. Mon. Not. R. Astron. Soc. 2002, 331, 587–608.

- Taffoni, G.; Monaco, P.; Theuns, T. PINOCCHIO and the hierarchical build-up of dark matter haloes. Mon. Not. R. Astron. Soc. 2002, 333, 623–632.

- Monaco, P.; Fontanot, F.; Taffoni, G. The MORGANA model for the rise of galaxies and active nuclei. Mon. Not. R. Astron. Soc. 2007, 375, 1189–1219.

- Monaco, P.; Sefusatti, E.; Borgani, S.; Crocce, M.; Fosalba, P.; Sheth, R.K.; Theuns, T. An accurate tool for the fast generation of dark matter halo catalogues. Mon. Not. R. Astron. Soc. 2013, 433, 2389–2402.

- Munari, E.; Monaco, P.; Sefusatti, E.; Castorina, E.; Mohammad, F.G.; Anselmi, S.; Borgani, S. Improving the prediction of dark matter halo clustering with higher orders of Lagrangian Perturbation Theory. Mon. Not. R. Astron. Soc. 2016. Accepted. Preprint: arXiv:1605.04788.

- Scoccimarro, R.; Sheth, R.K. PTHALOS: A fast method for generating mock galaxy distributions. Mon. Not. R. Astron. Soc. 2002, 329, 629–640.

- Manera, M.; Scoccimarro, R.; Percival, W.J.; Samushia, L.; McBride, C.K.; Ross, A.J.; Sheth, R.K.; White, M.; Reid, B.A.; Sánchez, A.G.; et al. The clustering of galaxies in the SDSS-III Baryon Oscillation Spectroscopic Survey: A large sample of mock galaxy catalogues. Mon. Not. R. Astron. Soc. 2013, 428, 1036–1054.

- Dawson, K.S.; Schlegel, D.J.; Ahn, C.P.; Anderson, S.F.; Aubourg, É.; Bailey, S.; Barkhouser, R.H.; Bautista, J.E.; Beifiori, A.; Berlind, A.A.; et al. The Baryon Oscillation Spectroscopic Survey of SDSS-III. Astron. J. 2013, 145, 10.

- Ross, A.J.; Percival, W.J.; Sánchez, A.G.; Samushia, L.; Ho, S.; Kazin, E.; Manera, M.; Reid, B.; White, M.; Tojeiro, R.; et al. The clustering of galaxies in the SDSS-III Baryon Oscillation Spectroscopic Survey: Analysis of potential systematics. Mon. Not. R. Astron. Soc. 2012, 424, 564–590.

- Manera, M.; Samushia, L.; Tojeiro, R.; Howlett, C.; Ross, A.J.; Percival, W.J.; Gil-Marín, H.; Brownstein, J.R.; Burden, A.; Montesano, F. The clustering of galaxies in the SDSS-III Baryon Oscillation Spectroscopic Survey: Mock galaxy catalogues for the low-redshift sample. Mon. Not. R. Astron. Soc. 2015, 447, 437–445.

- Koda, J.; Blake, C.; Beutler, F.; Kazin, E.; Marin, F. Fast and accurate mock catalogue generation for low-mass galaxies. Mon. Not. R. Astron. Soc. 2016, 459, 2118–2129.

- Izard, A.; Crocce, M.; Fosalba, P. ICE-COLA: Towards fast and accurate synthetic galaxy catalogues optimizing a quasi N-body method. Mon. Not. R. Astron. Soc. 2016, 459, 2327–2341.

- Feng, Y.; Chu, M.Y.; Seljak, U. FastPM: A New Scheme for Fast Simulations of Dark Matter and Halos. 2016; arXiv:1603.00476.

- Merz, H.; Pen, U.L.; Trac, H. Towards optimal parallel PM N-body codes: PMFAST. New Astron. 2005, 10, 393–407.

- White, M.; Pope, A.; Carlson, J.; Heitmann, K.; Habib, S.; Fasel, P.; Daniel, D.; Lukic, Z. Particle mesh simulations of the Lyα forest and the signature of Baryon Acoustic Oscillations in the intergalactic medium. Astrophys. J. 2010, 713, 383–393.

- White, M.; Tinker, J.L.; McBride, C.K. Mock galaxy catalogues using the quick particle mesh method. Mon. Not. R. Astron. Soc. 2014, 437, 2594–2606.

- White, M.; Reid, B.; Chuang, C.H.; Tinker, J.L.; McBride, C.K.; Prada, F.; Samushia, L. Tests of redshift-space distortions models in configuration space for the analysis of the BOSS final data release. Mon. Not. R. Astron. Soc. 2015, 447, 234–245.

- Beutler, F.; Saito, S.; Seo, H.J.; Brinkmann, J.; Dawson, K.S.; Eisenstein, D.J.; Font-Ribera, A.; Ho, S.; McBride, C.K.; Montesano, F.; et al. The clustering of galaxies in the SDSS-III Baryon Oscillation Spectroscopic Survey: Testing gravity with redshift space distortions using the power spectrum multipoles. Mon. Not. R. Astron. Soc. 2014, 443, 1065–1089.

- Tassev, S.; Zaldarriaga, M.; Eisenstein, D.J. Solving large scale structure in ten easy steps with COLA. J. Cosmol. Astropart. Phys. 2013, 6, 036.

- Howlett, C.; Manera, M.; Percival, W.J. L-PICOLA: A parallel code for fast dark matter simulation. Astron. Comput. 2015, 12, 109–126.

- Howlett, C.; Ross, A.J.; Samushia, L.; Percival, W.J.; Manera, M. The clustering of the SDSS main galaxy sample—II. Mock galaxy catalogues and a measurement of the growth of structure from redshift space distortions at z = 0.15. Mon. Not. R. Astron. Soc. 2015, 449, 848–866.

- Drinkwater, M.J.; Jurek, R.J.; Blake, C.; Woods, D.; Pimbblet, K.A.; Glazebrook, K.; Sharp, R.; Pracy, M.B.; Brough, S.; Colless, M.; et al. The WiggleZ Dark Energy Survey: Survey design and first data release. Mon. Not. R. Astron. Soc. 2010, 401, 1429–1452.

- Kazin, E.A.; Koda, J.; Blake, C.; Padmanabhan, N.; Brough, S.; Colless, M.; Contreras, C.; Couch, W.; Croom, S.; Croton, D.J.; et al. The WiggleZ Dark Energy Survey: Improved distance measurements to z = 1 with reconstruction of the baryonic acoustic feature. Mon. Not. R. Astron. Soc. 2014, 441, 3524–3542.

- Leclercq, F.; Jasche, J.; Wandelt, B. Bayesian analysis of the dynamic cosmic web in the SDSS galaxy survey. J. Cosmol. Astropart. Phys. 2015, 6, 015.

- Tassev, S.; Eisenstein, D.J.; Wandelt, B.D.; Zaldarriaga, M. sCOLA: The N-body COLA Method Extended to the Spatial Domain. 2015; arXiv:1502.07751.

- Kitaura, F.S.; Yepes, G.; Prada, F. Modelling Baryon Acoustic Oscillations with perturbation theory and stochastic halo biasing. Mon. Not. R. Astron. Soc. 2014, 439, L21–L25.

- Zhao, C.; Kitaura, F.S.; Chuang, C.H.; Prada, F.; Yepes, G.; Tao, C. Halo mass distribution reconstruction across the cosmic web. Mon. Not. R. Astron. Soc. 2015, 451, 4266–4276.

- Kitaura, F.S.; Gil-Marín, H.; Scóccola, C.G.; Chuang, C.H.; Müller, V.; Yepes, G.; Prada, F. Constraining the halo bispectrum in real and redshift space from perturbation theory and non-linear stochastic bias. Mon. Not. R. Astron. Soc. 2015, 450, 1836–1845.

- Kitaura, F.S.; Rodríguez-Torres, S.; Chuang, C.H.; Zhao, C.; Prada, F.; Gil-Marín, H.; Guo, H.; Yepes, G.; Klypin, A.; Scóccola, C.G.; et al. The clustering of galaxies in the SDSS-III Baryon Oscillation Spectroscopic Survey: Mock galaxy catalogues for the BOSS Final Data Release. Mon. Not. R. Astron. Soc. 2016, 456, 4156–4173.

- Chuang, C.H.; Kitaura, F.S.; Prada, F.; Zhao, C.; Yepes, G. EZmocks: Extending the Zel’dovich approximation to generate mock galaxy catalogues with accurate clustering statistics. Mon. Not. R. Astron. Soc. 2015, 446, 2621–2628.

- Avila, S.; Murray, S.G.; Knebe, A.; Power, C.; Robotham, A.S.G.; Garcia-Bellido, J. HALOGEN: A tool for fast generation of mock halo catalogues. Mon. Not. R. Astron. Soc. 2015, 450, 1856–1867.

- Klypin, A.; Yepes, G.; Gottlöber, S.; Prada, F.; Heß, S. MultiDark simulations: The story of dark matter halo concentrations and density profiles. Mon. Not. R. Astron. Soc. 2016, 457, 4340–4359.

- Chuang, C.H.; Zhao, C.; Prada, F.; Munari, E.; Avila, S.; Izard, A.; Kitaura, F.S.; Manera, M.; Monaco, P.; Murray, S.; et al. nIFTy cosmology: Galaxy/halo mock catalogue comparison project on clustering statistics. Mon. Not. R. Astron. Soc. 2015, 452, 686–700.

- Reid, B.; Ho, S.; Padmanabhan, N.; Percival, W.J.; Tinker, J.; Tojeiro, R.; White, M.; Eisenstein, D.J.; Maraston, C.; Ross, A.J.; et al. SDSS-III Baryon Oscillation Spectroscopic Survey Data Release 12: Galaxy target selection and large-scale structure catalogues. Mon. Not. R. Astron. Soc. 2016, 455, 1553–1573.

- Sefusatti, E.; Crocce, M.; Scoccimarro, R.; Couchman, H. Accurate Estimators of Correlation Functions in Fourier Space. 2015; arXiv:1512.07295.

- Kopp, M.; Uhlemann, C.; Achitouv, I. Choose to Smooth: Gaussian Streaming with the Truncated Zel’dovich Approximation. 2016; arXiv:1606.02301.

- Pace, F.; Manera, M.; Bacon, D.J.; Crittenden, R.; Percival, W.J. The importance of the cosmic web and halo substructure for power spectra. Mon. Not. R. Astron. Soc. 2015, 454, 708–723.

- Giocoli, C.; Meneghetti, M.; Bartelmann, M.; Moscardini, L.; Boldrin, M. MOKA: A new tool for strong lensing studies. Mon. Not. R. Astron. Soc. 2012, 421, 3343–3355.

- Berlind, A.A.; Weinberg, D.H. The halo occupation distribution: Toward an empirical determination of the relation between galaxies and mass. Astrophys. J. 2002, 575, 587–616.

- Yang, X.; Mo, H.J.; van den Bosch, F.C. Constraining galaxy formation and cosmology with the conditional luminosity function of galaxies. Mon. Not. R. Astron. Soc. 2003, 339, 1057–1080.

- Skibba, R.A.; Sheth, R.K. A halo model of galaxy colours and clustering in the Sloan Digital Sky Survey. Mon. Not. R. Astron. Soc. 2009, 392, 1080–1091.

- Zehavi, I.; Zheng, Z.; Weinberg, D.H.; Blanton, M.R.; Bahcall, N.A.; Berlind, A.A.; Brinkmann, J.; Frieman, J.A.; Gunn, J.E.; Lupton, R.H.; et al. Galaxy clustering in the completed SDSS redshift survey: The dependence on color and luminosity. Astrophys. J. 2011, 736, 59.

- Crocce, M.; Castander, F.J.; Gaztañaga, E.; Fosalba, P.; Carretero, J. The MICE Grand Challenge lightcone simulation—II. Halo and galaxy catalogues. Mon. Not. R. Astron. Soc. 2015, 453, 1513–1530.

- Vale, A.; Ostriker, J.P. Linking halo mass to galaxy luminosity. Mon. Not. R. Astron. Soc. 2004, 353, 189–200.

- Conroy, C.; Wechsler, R.H.; Kravtsov, A.V. Modeling luminosity-dependent galaxy clustering through cosmic time. Astrophys. J. 2006, 647, 201–214.

- Merson, A.I.; Baugh, C.M.; Helly, J.C.; Gonzalez-Perez, V.; Cole, S.; Bielby, R.; Norberg, P.; Frenk, C.S.; Benson, A.J.; Bower, R.G.; et al. Lightcone mock catalogues from semi-analytic models of galaxy formation—I. Construction and application to the BzK colour selection. Mon. Not. R. Astron. Soc. 2013, 429, 556–578.

| Method | Memory | No. of Cores | Elapsed Time | CPU Time | CPU Time for 1000 Realizations |
|---|---|---|---|---|---|
| EZmocks | 40 GB | 16 | 7.5 min | 2 h + calibration N-body | 822,000 h |
| PATCHY | 40 GB | 16 | 7.5 min | 2 h + calibration N-body | 822,000 h |
| PINOCCHIO | 14 TB | 2048 | 30 min | 1024 h | 1,024,000 h |
| COLA | 33 TB | 4096 | 2.5 h | 10,240 h | 10,240,000 h |
| N-body (HugeMDPL) | 6.5 TB | 2000 | 410 h | 820,000 h | 820,000,000 h |
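
The CPU-time columns of the table follow from simple arithmetic, which the short Python sketch below reproduces: CPU time is taken as the number of cores times the elapsed time, and the cost of 1000 realizations is 1000 times that figure plus any one-off calibration cost. The function names and the `RUNS` layout are illustrative only. The assumption that EZmocks and PATCHY each need a single calibration N-body run costing the same as HugeMDPL (820,000 h) is inferred from the tabulated total of 822,000 h and is not stated explicitly in the table.

```python
# Cost of one HugeMDPL-like N-body run in CPU hours: 2000 cores for 410 h.
N_BODY_CPU_HOURS = 2000 * 410

# (method, cores, elapsed hours per realization, one-off calibration CPU hours)
# ASSUMPTION: the calibration N-body run costs as much as HugeMDPL; this is
# inferred from the 822,000 h entries in the table.
RUNS = [
    ("EZmocks", 16, 7.5 / 60, N_BODY_CPU_HOURS),
    ("PATCHY", 16, 7.5 / 60, N_BODY_CPU_HOURS),
    ("PINOCCHIO", 2048, 0.5, 0),
    ("COLA", 4096, 2.5, 0),
    ("N-body (HugeMDPL)", 2000, 410.0, 0),
]


def cpu_hours(cores, elapsed_hours):
    """CPU time of a single realization: cores times wall-clock hours."""
    return cores * elapsed_hours


def total_cpu_hours(cores, elapsed_hours, calibration_hours, n_realizations=1000):
    """CPU time of n realizations plus the one-off calibration cost."""
    return n_realizations * cpu_hours(cores, elapsed_hours) + calibration_hours


def cost_table(runs=RUNS, n_realizations=1000):
    """Map each method to (CPU hours per realization, CPU hours for n realizations)."""
    return {
        name: (
            cpu_hours(cores, elapsed),
            total_cpu_hours(cores, elapsed, calibration, n_realizations),
        )
        for name, cores, elapsed, calibration in runs
    }


if __name__ == "__main__":
    for name, (single, total) in cost_table().items():
        print(f"{name:20s} {single:>12,.0f} h {total:>16,.0f} h")
```

Under these assumptions the calibration run dominates the budget of the bias-based methods: once it is paid for, each additional EZmocks or PATCHY realization costs about 2 CPU hours, against about 1024 h for PINOCCHIO and 10,240 h for COLA.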

© 2016 by the author; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Monaco, P. Approximate Methods for the Generation of Dark Matter Halo Catalogs in the Age of Precision Cosmology. Galaxies 2016, 4, 53. https://doi.org/10.3390/galaxies4040053
