First Application of Robot Teaching in an Existing Industry 4.0 Environment: Does It Really Work?

Abstract

1. Introduction

2. Related Work

2.1. Human-Robot Interaction in the Industrial Environment

2.2. User-Centered Development and Evaluation

3. Motivation and Objectives

4. Studies with the Off-The-Shelf System

4.1. Research Questions

- (1) Is there a difference between the two application contexts in terms of the usability of the teach-in (set-up) process?

- (2) Will participants’ attitudes towards robots change as a result of teaching the robotic system?

- (3) How do users perceive the workload of teaching the robot, depending on the application context?

- (1) How is the collaboration with the robot at the assembly line experienced with respect to user acceptance?

4.2. Phase 1: Project Kick-Off Meeting

4.3. Phase 2: Preparation of Use Cases and Study Material

- (1)

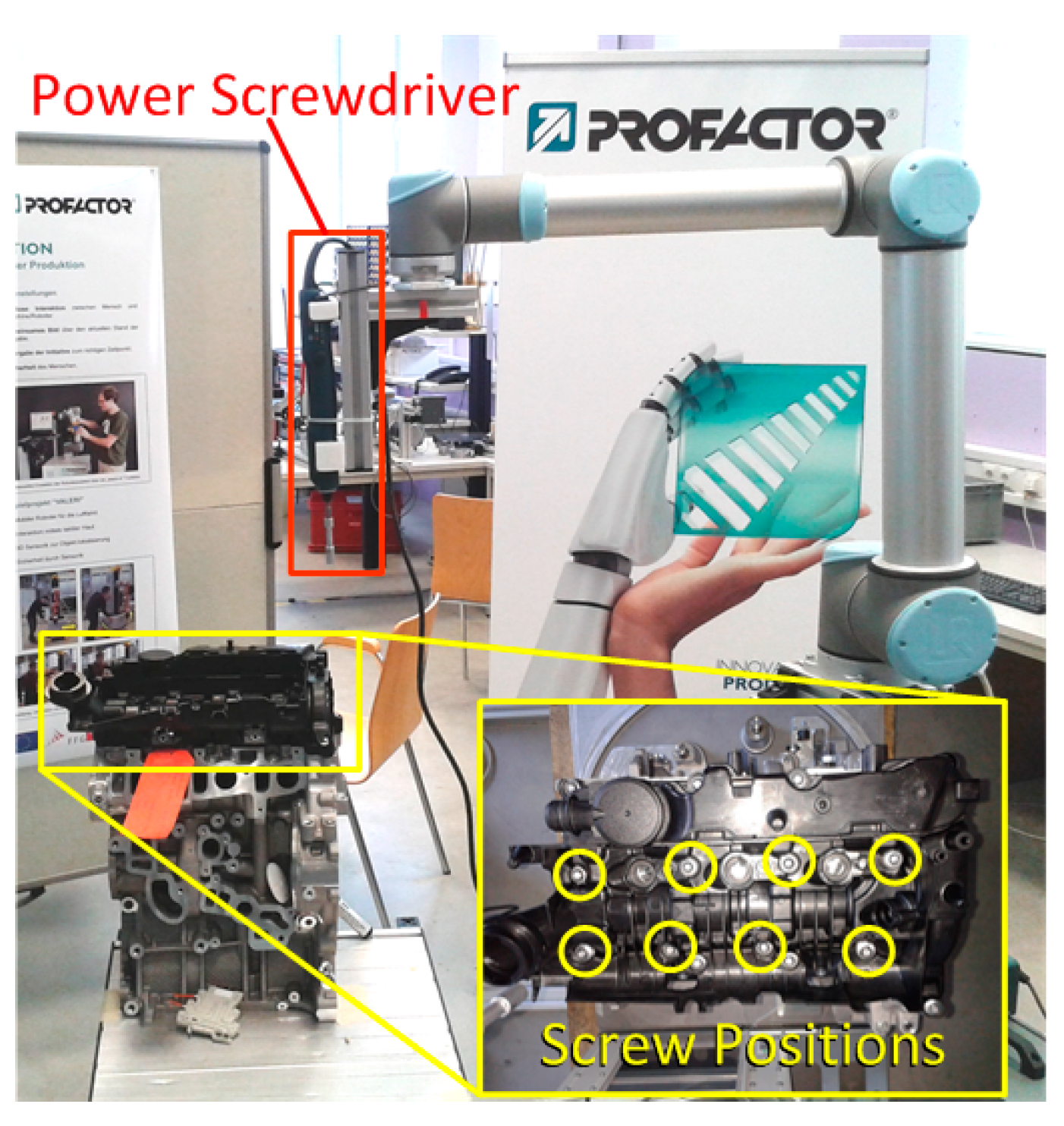

- Use cases: The industrial partner together with the technological partner developed the use cases in order to define how the study participants will interact with the robot within a defined working process. The three use cases were based on the usual working processes and described how the participants had to teach the robot’s trajectories and positions: manually or with the teach pendant. A few weeks before the user studies were conducted, the technological partners visited the industrial partners in order to adapt the robot prototype to the specific process requirements and conditions.

- (2)

- Study guidelines and questionnaires: The choice of the questionnaires and development of the study guidelines was based on the use cases and research questions. We used established and validated questionnaires (System Usability Scale (SUS) [51], Negative Attitude towards Robot Scale (NARS) [52] and NASA Task Load Index (NASA-TLX) [53]; see Section 4.6 for details) and developed one questionnaire on usability and acceptance questions ourselves. The same study guidelines and questionnaires were used in Use Cases A and B. For the field trial of three weeks (Use Case C), we used an additional interview guideline in order to explore the long-term impressions of the workers in a qualitative manner.

- (3)

- Recruitment: A week before the each study was conducted, the industrial partners recruited the participants. The participants were all employed at the industrial partners’ factories and were experts in the concerned working processes. The recruitment criteria in terms of age, sex, handedness and prior knowledge of robot control were set to reflect their respective distribution in the factories of our industrial partners. The detailed demographic characteristics of the recruited participants are listed in Table A1 (see the Appendix).

4.5. Phase 3: Performing User Studies

- (1) Pre-study questionnaires: Before any interaction with the robot took place, the participants filled in a demographic questionnaire and the NARS [52] questionnaire on attitudes towards robots.

- (2) Introduction of the robot: Each participant was introduced to the assistive robot, its control mechanisms and the goal of the trial. To relieve stress and increase compliance, the participants were assured that only the robot’s performance was under investigation and that the study had no negative implications for them.

- (3)

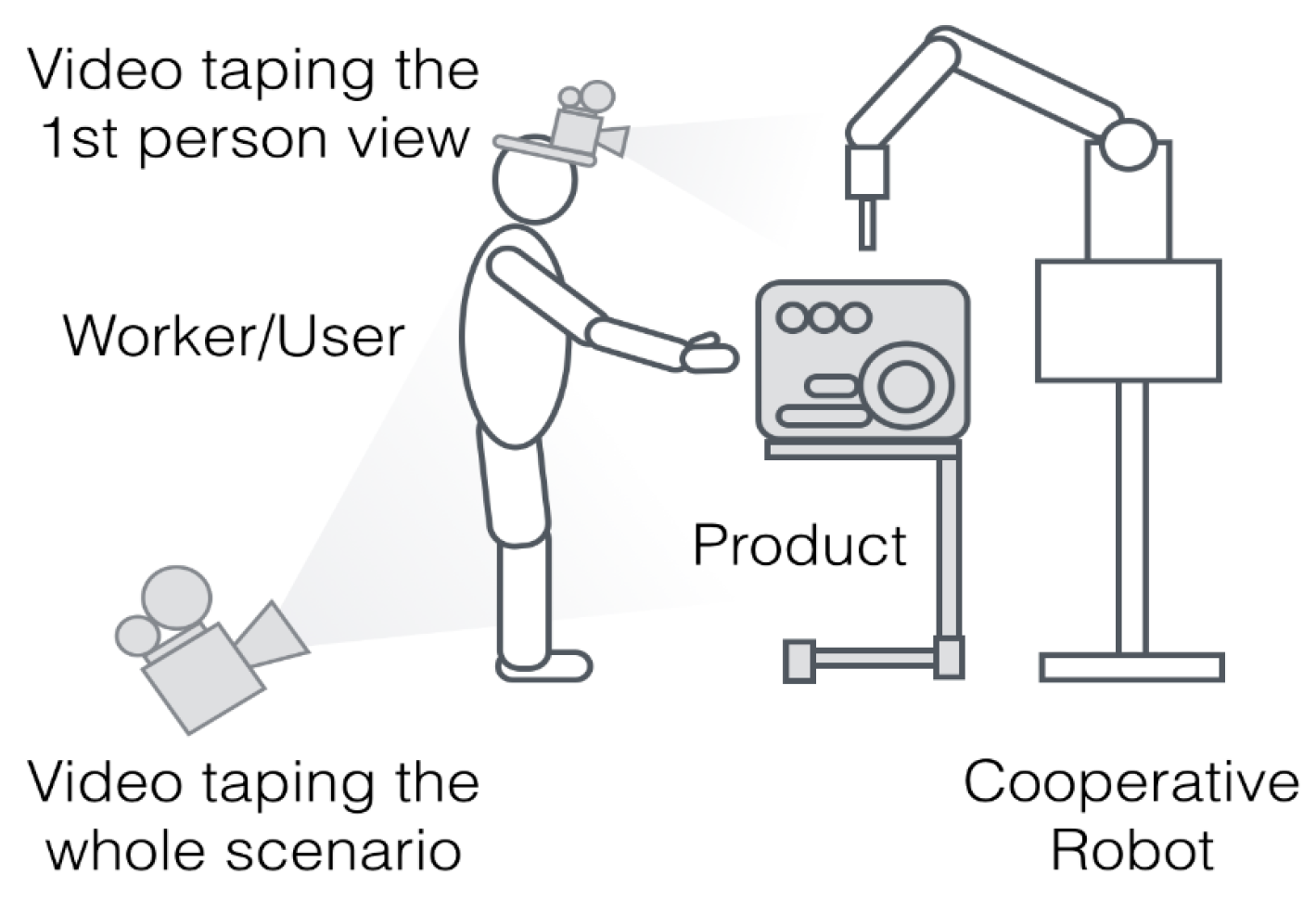

- Conducting the user study: Each participant was audio- and video-taped with two cameras. Thus, every interaction could be recorded from different distances and angles in order to reproduce the subjective perspective, as well as the context of interaction:

- (a)

- Videotaping of the first-person view: A head-mounted camera (eye-tracker from Pupil Labs [54]) recorded the participants’ interaction with the robotic arm, the work piece and the touch panel during the teaching procedure. The recording of this point-of-view camera provided additional information concerning the participants’ focus shifts for where he/she was approximately looking.

- (b)

- Videotaping the whole scenario: One camera was used to get an overview of the scenario for the data interpretation of the eye-tracking recordings and for documenting the think-aloud information.

- (4)

- (1) Introduction of the robot at the assembly line: First, the robot was introduced at the assembly line in the industrial partner’s factory. The participants were informed about the aim of the study and how to interact with the robotic system (either manually or by using the touch panel; see Figure 4) during the working process. The field test lasted three weeks and was intended to help the workers form well-founded opinions about their daily cooperation with the robot. Our technological partner was present only in case of a system malfunction.

- (2) Post-field test interview: After the field test, an interview was conducted following established interview guidelines [8] to ensure a phenomenologically oriented empirical perspective on usability and user experience. This approach focuses on: (1) general aspects of working with the robot; (2) experiences before the introduction of the robot; (3) the first confrontation with the new system (enrollment); (4) special experiences while working with the new system; and (5) special aspects of the HRI (e.g., safety). This open, narrative interview technique evokes personal experiences [55], which were recorded to reveal opinions, rumors, impressions, emotional issues, concerns and ideas for improvement.
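The article does not describe how the head-mounted recordings of item (3)(a) were coded. As a minimal sketch, assuming the recordings have already been annotated as a time-ordered list of (timestamp in seconds, area of interest) samples with hypothetical labels such as "robot", "workpiece" and "panel", the number of attention switches and the share of time spent on each area of interest could be computed as follows:

```python
from collections import Counter


def aoi_summary(samples):
    """Summarize coded gaze samples.

    samples: time-ordered list of (timestamp_s, aoi_label) tuples. The labels are
    hypothetical annotation codes, not an output format of the eye-tracker.
    Returns (number of switches between areas of interest, dwell share per area).
    """
    labels = [label for _, label in samples]
    # A switch is any change of label between two consecutive samples.
    switches = sum(1 for prev, cur in zip(labels, labels[1:]) if prev != cur)

    # Attribute each interval between samples to the area looked at when it starts.
    dwell = Counter()
    for (t_start, label), (t_end, _) in zip(samples, samples[1:]):
        if t_end < t_start:
            raise ValueError("samples must be sorted by timestamp")
        dwell[label] += t_end - t_start

    total = sum(dwell.values())
    shares = {label: d / total for label, d in dwell.items()} if total else {}
    return switches, shares


if __name__ == "__main__":
    coded = [(0.0, "panel"), (2.0, "robot"), (3.0, "panel"),
             (6.0, "workpiece"), (8.0, "panel")]
    print(aoi_summary(coded))
```

For the short example this yields four switches and a 62.5% dwell share on the panel. Applied to activity codes (e.g., "teaching" versus "menu navigation") instead of gaze targets, the same dwell-share computation would give a time split of the kind discussed in Section 6.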

4.6. Data Analysis

- SUS (System Usability Scale) [51]: a low-cost, standardized usability scale for quickly measuring the effectiveness, efficiency and satisfaction of a system. It comprises 10 statements, which participants rate on a five-point Likert scale. After the system has been evaluated, an overall usability score is calculated (scores on individual items are not meaningful on their own). The overall score ranges from 0 to 100 and is grouped as follows: “80–100: users like the system”; “60–79: users accept the system”; “0–59: users do not like the system”. Additionally, the SUS can be separated into a usability and a learnability dimension for a more differentiated view of the results [56].

- NARS (Negative Attitude towards Robot Scale) [52]: a psychological scale that measures people’s negative attitudes towards robots in order to reveal which factors prevent individuals from interacting with robots. The NARS questionnaire consists of 14 items grouped into three sub-scales: S1 = negative attitude toward situations of interaction with robots; S2 = negative attitude toward the social influence of robots; S3 = negative attitude toward emotions in interaction with robots.

- NASA-TLX questionnaire [53]: a subjective, multi-dimensional workload assessment tool for operators working with various human-machine systems. It derives an overall workload score as a weighted average of ratings on six sub-scales: mental demand, physical demand, temporal demand, own performance, effort and frustration. Each sub-scale is rated per task on a 100-point range in five-point steps.
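The weighted average mentioned above follows the original NASA-TLX procedure [53]: each participant makes 15 pairwise comparisons between the six sub-scales, a sub-scale’s weight is the number of times it was chosen (so the weights sum to 15), and the overall score is the weighted sum of the ratings divided by 15. The sketch below assumes ratings on the 0–100 range in five-point steps described above; the sub-scale keys and the example participant are hypothetical. The unweighted mean ("raw TLX") is included for comparison.

```python
from itertools import combinations

# Illustrative keys for the six NASA-TLX sub-scales.
SUBSCALES = ("mental", "physical", "temporal", "performance", "effort", "frustration")


def tlx_weights(pair_choices):
    """Count how often each sub-scale was chosen in the 15 pairwise comparisons.

    pair_choices maps frozenset({a, b}) to the sub-scale judged more relevant.
    """
    weights = dict.fromkeys(SUBSCALES, 0)
    for a, b in combinations(SUBSCALES, 2):
        chosen = pair_choices[frozenset((a, b))]
        if chosen not in (a, b):
            raise ValueError(f"{chosen!r} is not part of the pair ({a}, {b})")
        weights[chosen] += 1
    return weights  # the weights always sum to 15


def _validate(ratings):
    # Each rating lies on the 0-100 range in five-point steps.
    for name in SUBSCALES:
        r = ratings[name]
        if not 0 <= r <= 100 or r % 5:
            raise ValueError(f"invalid rating for {name}: {r}")


def weighted_tlx(ratings, weights):
    """Overall workload: sum of weight * rating over all sub-scales, divided by 15."""
    _validate(ratings)
    return sum(weights[s] * ratings[s] for s in SUBSCALES) / 15


def raw_tlx(ratings):
    """Unweighted mean of the six ratings ("raw TLX")."""
    _validate(ratings)
    return sum(ratings[s] for s in SUBSCALES) / len(SUBSCALES)


if __name__ == "__main__":
    # Hypothetical participant who always prefers the earlier sub-scale of a pair.
    choices = {frozenset(pair): pair[0] for pair in combinations(SUBSCALES, 2)}
    ratings = {"mental": 60, "physical": 40, "temporal": 20,
               "performance": 30, "effort": 50, "frustration": 10}
    w = tlx_weights(choices)
    print(w, weighted_tlx(ratings, w), raw_tlx(ratings))
```

For this hypothetical participant the weights are 5, 4, 3, 2, 1 and 0, giving a weighted score of 42.0 against a raw score of 35.0: the weighting shifts the overall score towards the sub-scales the participant considered most relevant.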

- Performance expectancy is defined as the degree to which an individual believes that using the system will help him or her to attain gains in job performance. Its estimated value is the mean of Item 4 and Item 9.

- Effort expectancy is defined as the degree of ease associated with the use of the system. Its estimated value is the mean of Item 5 and Item 10.

- Perceived competence describes the user’s belief that the system has the ability to recognize, understand and react adequately to given tasks with a sufficient set of applicable skills. Its estimated value is the mean of Item 3 and Item 8.

- System trust describes the user’s belief that the system provides the functionality needed to perform a required task. In addition, the system has to demonstrate that it can effectively provide help when needed (e.g., through a help menu) and operate reliably, i.e., consistently and without failing. Its estimated value is the mean of Item 1 and the inverted Item 6.

- Perceived safety describes the extent to which users perceive the robotic system as safe. Its estimated value is the mean of Item 2 and Item 7.

4.7. Final Discussion of the Study Report with the Industrial Partner

5. Results

- NARS: Negative attitudes towards the robot are expected to decrease after the human-robot interaction. This expectation is based on other studies [58], which showed such a decrease after short-term human-robot interactions.

- NASA-TLX: All scores are expected to be below 50%, which would indicate a low workload. No differences are expected between the application contexts.

- SUS: The touch panel for teaching the robot is expected to be rated lower in terms of usability (due to its off-the-shelf complexity) than the assistive robot itself (manual teaching).

- Self-developed items on perceived usability and acceptance: Use Case A is expected to be rated slightly better than Use Case B, as the screwing task could be automated more robustly with the off-the-shelf robot than the polishing task.

6. Interpretation and Recommendation

- (1) Touch panels: Panels are an intermediate device for controlling the robot. They force users to switch their visual attention continuously between the robot arm, the work piece and the touch panel, which imposes a high cognitive and attentional workload. Furthermore, only one third of the time was used for actual teaching; the rest was spent on other activities (e.g., menu navigation). Thus, applying usability guidelines to this real-time system is absolutely necessary (more intuitive, easier to learn, more shortcuts), and the physical handling of the panel must have high priority (smaller, lighter, more sensitive, without a cord). These insights are in line with Pan et al. [24], who emphasize the importance of teach pendants in the factory context, and Fukui et al. [60], who state that such input devices should be easy to hold and to operate.

- (2) Manual control: Although the robot arm was described as too bulky for practical use, participants overall tended to prefer manual control over control via an intermediate device. Manual control can be integrated in various ways, including pushing the arm directly by hand, a joystick or gesture recognition. However, to live up to its potential, this approach requires more sensors and more artificial intelligence. These insights are in line with research on teaching motor skills through physical HRI [32] and on industry-oriented applications [35]. Our technological partner drew similar conclusions regarding the need to improve manual HRI: direct interaction should be improved by additional sensors, including cameras for (1) recording the whole workplace and (2) detecting potential collisions between human and robot movements. The teaching process can be significantly improved by using, e.g., a 3D joystick system.

- (3) Impact on “work”: The field test revealed that the workers did not perceive the robot prototype as adequately adapting to their individual rhythm, speed and working steps. Owing to its lack of sensors and intelligence, the robot determined the operator’s working pace. We assume that this fundamental lack of flexibility is at least partly responsible for the other shortcomings mentioned, concerning usability, perceived safety, system trust, general helpfulness and the workers’ personal working rhythm. Furthermore, all workers expressed their fear of being replaced by such a robot in the near future. Although the robot was introduced as a tool and a cooperative agent, this fear did not diminish over three weeks of personal human-robot interaction. These empirically derived insights are in line with Bonekamp and Sure [61], whose review of the current literature on the implications of Industry 4.0 for human labor and work organization revealed a rather consistent view, particularly regarding job redundancies for low-skilled jobs. However, the factory management discussed the results with the works council, which has a veto over further technical and social developments.

7. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Appendix

| | Use Cases A and C (Screw Tightening) (n = 5) | Use Case B (Surface Polishing) (n = 5) |
|---|---|---|
| Age (years) | | |
| Mean (SD) | 45.2 (11.3) | 45.4 (5.7) |
| Gender | | |
| Male (n (%)) | 3 (60.0%) | 5 (100.0%) |
| Female (n (%)) | 2 (40.0%) | 0 (0.0%) |
| Handedness | | |
| Left (n (%)) | 0 (0.0%) | 1 (20.0%) |
| Right (n (%)) | 4 (80.0%) | 4 (80.0%) |
| Both (n (%)) | 1 (20.0%) | 0 (0.0%) |
| Color Blindness | | |
| Green-Red or Blue-Yellow (n (%)) | 0 (0.0%) | 0 (0.0%) |
| Spatial Imagery | | |
| Below-average (n (%)) | 0 (0.0%) | 0 (0.0%) |
| Average (n (%)) | 4 (80.0%) | 5 (100.0%) |
| Above-average (n (%)) | 1 (20.0%) | 0 (0.0%) |
| Excellent (n (%)) | 0 (0.0%) | 0 (0.0%) |
| Computer Skills | | |
| No experience (n (%)) | 1 (20.0%) | 1 (20.0%) |
| Beginner (n (%)) | 0 (0.0%) | 3 (60.0%) |
| Experienced (n (%)) | 3 (60.0%) | 1 (20.0%) |
| Expert (n (%)) | 1 (20.0%) | 0 (0.0%) |
| Computer Usage per Day | | |
| None (n (%)) | 1 (20.0%) | 1 (20.0%) |
| Less than 1 h (n (%)) | 0 (0.0%) | 2 (40.0%) |
| 1–3 h (n (%)) | 2 (40.0%) | 1 (20.0%) |
| 3–6 h (n (%)) | 0 (0.0%) | 1 (20.0%) |
| More than 6 h (n (%)) | 2 (40.0%) | 0 (0.0%) |
| Usually-used OS | | |
| Windows (n (%)) | 5 (100.0%) | 4 (80.0%) |
| Unix/Linux (n (%)) | 0 (0.0%) | 0 (0.0%) |
| Mac (n (%)) | 1 (20.0%) | 0 (0.0%) |
| Used Applications | | |
| Word Processors (n (%)) | 4 (80.0%) | 3 (60.0%) |
| Spreadsheet Applications (n (%)) | 2 (40.0%) | 1 (20.0%) |
| E-Mail Applications (n (%)) | 5 (100.0%) | 3 (60.0%) |
| Graphics Software (n (%)) | 1 (20.0%) | 0 (0.0%) |
| Music Applications (n (%)) | 1 (20.0%) | 0 (0.0%) |
| Desktop-Publishing Software (n (%)) | 0 (0.0%) | 1 (20.0%) |
| Industrial Machine Skills | | |
| No experience (n (%)) | 0 (0.0%) | 0 (0.0%) |
| Beginner (n (%)) | 2 (40.0%) | 4 (80.0%) |
| Experienced (n (%)) | 2 (40.0%) | 0 (0.0%) |
| Expert (n (%)) | 1 (20.0%) | 1 (20.0%) |
| Industrial Robot Skills | | |
| No experience (n (%)) | 1 (20.0%) | 3 (60.0%) |
| Beginner (n (%)) | 3 (60.0%) | 2 (40.0%) |
| Experienced (n (%)) | 1 (20.0%) | 0 (0.0%) |
| Expert (n (%)) | 0 (0.0%) | 0 (0.0%) |
| Industrial Robot Experience | | |
| None (n (%)) | 3 (60.0%) | 5 (100.0%) |
| Less than 3 months (n (%)) | 0 (0.0%) | 0 (0.0%) |
| 3–6 months (n (%)) | 0 (0.0%) | 0 (0.0%) |
| 6–12 months (n (%)) | 1 (20.0%) | 0 (0.0%) |
| 1–3 years (n (%)) | 0 (0.0%) | 0 (0.0%) |
| More than 3 years (n (%)) | 1 (20.0%) | 0 (0.0%) |

| Item | Strongly disagree | Disagree | Rather disagree | Rather agree | Agree | Strongly agree |
|---|---|---|---|---|---|---|
| 1. I can trust the robot. | O | O | O | O | O | O |
| 2. The robot seems harmless to me. | O | O | O | O | O | O |
| 3. I feel capable of handling the robot to perform tasks. | O | O | O | O | O | O |
| 4. The use of the robot increases my professional effectiveness. | O | O | O | O | O | O |
| 5. It is easy for me to make the robot do what I want to accomplish. | O | O | O | O | O | O |
| 6. The robot gives me the creeps. | O | O | O | O | O | O |
| 7. The robot seems very reliable. | O | O | O | O | O | O |
| 8. I can operate the robot well enough to carry out work. | O | O | O | O | O | O |
| 9. I can fulfill my tasks more efficiently with the help of the robot. | O | O | O | O | O | O |
| 10. It is easy for me to operate the robot. | O | O | O | O | O | O |

References

- Young, M. The Technical Writer’s Handbook; University Science Books: Mill Valley, CA, USA, 1989.

- Alben, L. Quality of experience: Defining the criteria for effective interaction design. Interactions 1996, 3, 11–15.

- Buchner, R.; Wurhofer, D.; Weiss, A.; Tscheligi, M. Robots in time: How user experience in human-robot interaction changes over time. In International Conference on Social Robotics; Herrmann, G., Pearson, M.J., Lenz, A., Bremner, P., Spiers, A., Leonards, U., Eds.; Springer: Berlin/Heidelberg, Germany, 2013; Volume 8239, pp. 138–147.

- Obrist, M.; Reitberger, W.; Wurhofer, D.; Förster, F.; Tscheligi, M. User experience research in the semiconductor factory: A contradiction? In Human-Computer Interaction – INTERACT 2011; Campos, P., Graham, N., Jorge, J., Nunes, N., Palanque, P., Winckler, M., Eds.; Springer: Berlin/Heidelberg, Germany, 2011; Volume 6949, pp. 144–151.

- Hermann, M.; Pentek, T.; Otto, B. Design Principles for Industrie 4.0 Scenarios. 2015. Available online: http://www.snom.mb.tu-dortmund.de/cms/de/forschung/Arbeitsberichte/Design-Principles-for-Industrie-4_0-Scenarios.pdf (accessed on 25 February 2016).

- Jasperneite, J.; Niggemann, O. Systemkomplexität in der Automation beherrschen. Intelligente Assistenzsysteme unterstützen den Menschen. Available online: http://www.ciit-owl.de/uploads/media/Jasperneite_Niggemann_edi_09_S.36-44.pdf (accessed on 19 July 2016).

- Kagermann, H.; Wahlster, W.; Helbig, J. Recommendations for Implementing the Strategic Initiative Industrie 4.0. Final report of the Industrie 4.0 Working Group. 2013. Available online: http://www.acatech.de/fileadmin/user_upload/Baumstruktur_nach_Website/Acatech/root/de/Material_fuer_Sonderseiten/Industrie_4.0/Final_report__Industrie_4.0_accessible.pdf (accessed on 13 July 2016).

- Meneweger, T.; Wurhofer, D.; Fuchsberger, V.; Tscheligi, M. Erlebniszentrierte Interviews zur Erfassung von User Experience mit Industrierobotern. In Design 2 Product. Beiträge zur empirischen Designforschung, Band 5; Bucher: Hohenems, Austria, 2016. (In German)

- Zäh, M.F.; Wiesbeck, M.; Engstler, F.; Friesdorf, F.; Schubö, A.; Stork, S.; Bannat, A.; Wallhoff, F. Kognitive Assistenzsysteme in der manuellen Montage. Werkstattstech. Online 2007, 97, 644–650.

- Rooker, M.; Hofmann, M.; Minichberger, J.; Ikeda, M.; Ebenhofer, G.; Fritz, G.; Pichler, A. Flexible and assistive quality checks with industrial robots. In Proceedings of the ISR/Robotik 2014, the 41st International Symposium on Robotics, Munich, Germany, 2–3 June 2014; pp. 1–6.

- LIAA Project. Available online: http://www.project-leanautomation.eu/ (accessed on 25 February 2016).

- Plasch, M.; Pichler, A.; Bauer, H.; Rooker, M.; Ebenhofer, G. A plug & produce approach to design robot assistants in a sustainable manufacturing environment. In Proceedings of the 22nd International Conference on Flexible Automation and Intelligent Manufacturing (FAIM 2012), Helsinki, Finland, 10–13 June 2012.

- Hvilshøj, M.; Bøgh, S. “Little Helper” – An autonomous industrial mobile manipulator concept. Int. J. Adv. Robot. Syst. 2011, 8, 80–90.

- Helms, E.; Schraft, R.D.; Hägele, M. rob@work: Robot assistant in industrial environments. In Proceedings of the 11th IEEE International Workshop on Robot and Human Interactive Communication, Berlin, Germany, 25–27 September 2002; pp. 399–404.

- Wögerer, C.; Bauer, H.; Rooker, M.; Ebenhofer, G.; Rovetta, A.; Robertson, N.; Pichler, A. LOCOBOT-low cost toolkit for building robot co-workers in assembly lines. In Intelligent Robotics and Applications; Springer: Berlin/Heidelberg, Germany, 2012; pp. 449–459.

- Hägele, M.; Neugebauer, J.; Schraft, R.D. From robots to robot assistants. In Proceedings of the 32nd ISR (International Symposium on Robotics), Seoul, Korea, 19–21 April 2001.

- Feldmann, K.; Schöppner, V. Handbuch Fügen, Handhaben und Montieren; Carl Hanser Verlag: München, Germany, 2013; p. 231.

- Peshkin, M.A.; Colgate, J.E.; Wannasuphoprasit, W.; Moore, C.A.; Gillespie, R.B.; Akella, P. Cobot architecture. IEEE Trans. Robot. Autom. 2001, 17, 377–390.

- Gambao, E.; Hernando, M.; Surdilovic, D. A new generation of collaborative robots for material handling. Gerontechnology 2012, 11, 368.

- MRK-Systeme. Available online: http://www.mrk-systeme.de/index.html (accessed on 25 February 2016).

- KUKA Laboratories. Available online: http://www.kuka-healthcare.com/de/lightweight_robotics/ (accessed on 25 February 2016).

- Bøgh, S.; Hvilshøj, M.; Kristiansen, M.; Madsen, O. Autonomous industrial mobile manipulation (AIMM): From research to industry. In Proceedings of the 42nd International Symposium on Robotics, Chicago, IL, USA, 21–24 March 2011.

- Griffiths, S.; Voss, L.; Röhrbein, F. Industry-academia collaborations in robotics: Comparing Asia, Europe and North-America. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation, Hong Kong, China, 31 May–7 June 2014.

- Pan, Z.; Polden, J.; Larkin, N.; van Duin, S.; Norrish, J. Recent progress on programming methods for industrial robots. Robot. Comput. Integr. Manuf. 2012, 28, 87–94.

- Biggs, G.; Macdonald, B. A survey of robot programming systems. In Proceedings of the Australasian Conference on Robotics and Automation, Brisbane, Australia, 1–3 December 2003; pp. 1–3.

- Ko, W.K.H.; Wu, Y.; Tee, K.P.; Buchli, J. Towards industrial robot learning from demonstration. In Proceedings of the 3rd International Conference on Human-Agent Interaction (HAI’15), New York, NY, USA, 21–24 October 2015; pp. 235–238.

- Pires, J.N.; Veiga, G.; Araújo, R. Programming-by-demonstration in the coworker scenario for SMEs. Ind. Robot Int. J. 2009, 36, 73–83.

- Argall, B.D.; Billard, A.G. A survey of tactile human–robot interactions. Robot. Auton. Syst. 2010, 58, 1159–1176.

- De Santis, A.; Siciliano, B.; De Luca, A.; Bicchi, A. An atlas of physical human–robot interaction. Mech. Mach. Theory 2008, 43, 253–270.

- ISO 10218-1:2011 (Robots). Available online: http://www.iso.org/iso/iso_catalogue/catalogue_tc/catalogue_detail.htm?csnumber=51330 (accessed on 25 February 2016).

- ISO 10218-2:2011 (Robot Systems and Integration). Available online: http://www.iso.org/iso/iso_catalogue/catalogue_tc/catalogue_detail.htm?csnumber=41571 (accessed on 25 February 2016).

- Ikemoto, S.; Amor, H.B.; Minato, T.; Jung, B.; Ishiguro, H. Physical human-robot interaction: Mutual learning and adaptation. IEEE Robot. Autom. Mag. 2012, 19, 24–35.

- Meyer, C. Aufnahme und Nachbearbeitung von Bahnen bei der Programmierung Durch Vormachen von Industrierobotern. Ph.D. Thesis, Universität Stuttgart, Stuttgart, Germany, 2011.

- Geomagic Touch. Available online: http://geomagic.com/en/products/phantom-omni/overview (accessed on 25 February 2016).

- Fritzsche, M.; Elkmann, N.; Schulenburg, E. Tactile sensing: A key technology for safe physical human robot interaction. In Proceedings of the 2011 6th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Lausanne, Switzerland, 8–11 March 2011; pp. 139–140.

- Intuitive and Mobile Robot Teach-In. Available online: http://www.keba.com/en/industrial-automation/ketop-operation-monitoring/mobile-operating-panels/ketop-t10-directmove/ (accessed on 25 February 2016).

- Baxter. Available online: http://www.rethinkrobotics.com/products/baxter (accessed on 25 February 2016).

- Stadler, S.; Weiss, A.; Mirnig, N.; Tscheligi, M. Anthropomorphism in the factory: A paradigm change? In Proceedings of the 8th ACM/IEEE International conference on Human-Robot interaction, Tokyo, Japan, 3–6 March 2013; pp. 231–232.

- Weiss, A.; Buchner, R.; Fischer, H.; Tscheligi, M. Exploring human-robot cooperation possibilities for semiconductor manufacturing. In Proceedings of the 2011 International Conference on Collaboration Technologies and Systems, Philadelphia, PA, USA, 23–27 May 2011.

- Buchner, R.; Mirnig, N.; Weiss, A.; Tscheligi, M. Evaluating in real life robotic environment – Bringing together research and practice. In Proceedings of the RO-MAN 2012, 21st IEEE International Symposium on Robot and Human Interactive Communication, Paris, France, 9–13 September 2012.

- Bartneck, C.; Kulić, D.; Croft, E.; Zoghbi, S. Measurement instruments for the anthropomorphism, animacy, likeability, perceived intelligence, and perceived safety of robots. Int. J. Soc. Robot. 2009, 1, 71–81.

- Weiss, A.; Igelsböck, J.; Wurhofer, D.; Tscheligi, M. Looking forward to a “Robotic Society”? Imaginations of future human-robot relationships. Special issue on the human robot personal relationship conference. Int. J. Soc. Robot. 2011, 3, 111–123.

- Lohse, M.; Hanheide, M.; Rohlfing, K.; Sagerer, G. Systematic interaction analysis (SINA) in HRI. In Proceedings of the 4th ACM/IEEE International conference on Human Robot Interaction, La Jolla, CA, USA, 11–19 March 2009; pp. 93–100.

- Strasser, E.; Weiss, A.; Tscheligi, M. Affect misattribution procedure: An implicit technique to measure user experience in HRI. In Proceedings of the 7th ACM/IEEE international conference on Human-robot interaction, New York, NY, USA, 5–8 March 2012.

- Stadler, S.; Weiss, A.; Tscheligi, M. I Trained this Robot: The Impact of Pre-Experience and Execution Behavior on Robot Teachers. In Proceedings of the 23rd IEEE International Symposium on Robot and Human Interactive Communication, Edinburgh, UK, 25–29 August 2014.

- Seo, S.H.; Gu, J.; Jeong, S.; Griffin, K.; Young, J.E.; Bunt, A.; Prentice, S. Women and men collaborating with robots on assembly lines: Designing a novel evaluation scenario for collocated human-robot teamwork. In Proceedings of the 3rd International Conference on Human-Agent Interaction (HAI’15), New York, NY, USA, 21–24 October 2015; pp. 3–9.

- Bernhaupt, R.; Weiss, A. How to convince stakeholders to take up usability evaluation results? In COST294-MAUSE Workshop Downstream Utility: The Good, the Bad and the Utterly Useless Usability Feedback; Institute of Research in Informatics of Toulouse: Toulouse, France, 2007; pp. 37–39.

- Weiss, A.; Bernhaupt, R.; Lankes, M.; Tscheligi, M. The USUS evaluation framework for Human-Robot Interaction. In Proceedings of the Symposium on New Frontiers in Human-Robot Interaction, Edinburgh, UK, 6–9 April 2009; pp. 158–165.

- Universal Robots. Available online: https://en.wikipedia.org/wiki/Universal_Robots (accessed on 13 July 2016).

- Bovenzi, M. Health effects of mechanical vibration. G. Ital. Med. Lav. Ergon. 2005, 27, 58–64.

- Brooke, J. SUS: A quick and dirty usability scale. Usability Eval. Ind. 1996, 189, 4–7.

- Nomura, T.; Suzuki, T.; Kanda, T. Measurement of negative attitudes toward robots. Interact. Stud. 2006, 7, 437–454.

- Hart, S.G.; Staveland, L.E. Development of NASA-TLX (Task Load Index): Results of empirical and theoretical research. Adv. Psychol. 1988, 52, 139–183.

- Pupil Labs. Available online: https://pupil-labs.com/ (accessed on 13 July 2016).

- Flick, U. Episodic interviewing. In Qualitative Researching with Text, Image and Sound; Bauer, M.W., Gaskell, G., Eds.; Sage: London, UK, 2000; pp. 75–92.

- Lewis, J.R.; Sauro, J. The factor structure of the system usability scale. In Proceedings of the International Conference HCII 2009, San Diego, CA, USA, 19–24 July 2009.

- Braun, V.; Clarke, V. Using thematic analysis in psychology. Qual. Res. Psychol. 2006, 3, 77–101.

- Weiss, A. Validation of an Evaluation Framework for Human-Robot Interaction. The Impact of Usability, Social Acceptance, User Experience, and Societal Impact on Collaboration with Humanoid Robots. Ph.D. Thesis, University of Salzburg, Salzburg, Austria, 2010.

- Weiss, A.; Huber, A. User experience of a smart factory robot: Assembly line workers demand adaptive robots. 2016; arXiv:1606.03846.

- Fukui, H.; Yonejima, S.; Yamano, M.; Dohi, M.; Yamada, M.; Nishiki, T. Development of teaching pendant optimized for robot application. In Proceedings of the 2009 IEEE Workshop on Advanced Robotics and its Social Impacts, Tokyo, Japan, 23–25 November 2009; pp. 72–77.

- Bonekamp, L.; Sure, M. Consequences of Industry 4.0 on human labour and work organisation. J. Bus. Media Psychol. 2015, 6, 33–40.

- Ebenhofer, G.; Ikeda, M.; Huber, A.; Weiss, A. User-centered assistive robotics for production – The AssistMe Project. In Proceedings of the OAGM-ARW2016: Joint Workshop on “Computer Vision and Robotics”, Wels, Austria, 11–13 May 2016.

- Why You Only Need to Test with 5 Users. Available online: https://www.nngroup.com/articles/why-you-only-need-to-test-with-5-users/ (accessed on 13 July 2016).

| | Use Case A | Use Case B | Use Case C |
|---|---|---|---|
| Description | Teaching of screw positions | Teaching of polishing positions | Cooperative screwing |
| Environment | Factory | Laboratory | Factory (assembly line) |
| Number of Participants | 5 | 5 | 5 |
| Duration | 1 day | 2 days | 3 weeks |
| Research Instruments | Observation, questionnaires | Observation, questionnaires | Interviews |

| Sub-Scale | NARS Sub-Scale Description | Use Case A (n = 5): Pre (SD) | Use Case A (n = 5): Post (SD) | Use Case B (n = 5): Pre (SD) | Use Case B (n = 5): Post (SD) |
|---|---|---|---|---|---|
| S1 | Negative attitude towards situations and interactions with robots. | 1.27 (0.52) | 0.75 (0.52) | 1.40 (0.51) | 1.23 (0.46) |
| S2 | Negative attitude towards social influence of robots. | 1.92 (0.54) | 1.40 (0.96) | 2.08 (0.46) | 2.08 (0.46) |
| S3 | Negative attitude towards emotions in interaction with robots. | 2.87 (0.42) | 1.50 (0.50) | 2.33 (0.99) | 2.33 (0.31) |
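The table above reports NARS sub-scale means before and after the interaction. A minimal sketch of how such values and their change could be computed, assuming the item-to-sub-scale assignment published by Nomura et al. [52] (S1: Items 4, 7, 8, 9, 10 and 12; S2: Items 1, 2, 11, 13 and 14; S3: Items 3, 5 and 6, which are positively worded and therefore reverse-coded). The response coding behind the reported values is not stated, so the scale bounds are parameters; the default of 1 to 5 is an assumption.

```python
from statistics import mean

# Item-to-sub-scale assignment as published by Nomura et al. [52]; verify it
# against the questionnaire version actually administered.
NARS_ITEMS = {
    "S1": (4, 7, 8, 9, 10, 12),
    "S2": (1, 2, 11, 13, 14),
    "S3": (3, 5, 6),
}
REVERSED_ITEMS = {3, 5, 6}  # positively worded items, reverse-coded


def nars_subscales(answers, scale_min=1, scale_max=5):
    """Mean score per sub-scale for one participant (higher = more negative)."""
    scores = {}
    for name, items in NARS_ITEMS.items():
        values = []
        for item in items:
            x = answers[item]
            if not scale_min <= x <= scale_max:
                raise ValueError(f"item {item} out of range: {x}")
            values.append(scale_min + scale_max - x if item in REVERSED_ITEMS else x)
        scores[name] = mean(values)
    return scores


def mean_change(pre, post, **scale):
    """Mean post-minus-pre change per sub-scale over paired participants.

    pre and post are lists of answer dicts in the same participant order;
    a negative value means attitudes became less negative.
    """
    if not pre or len(pre) != len(post):
        raise ValueError("pre and post must be non-empty and paired")
    diffs = {name: [] for name in NARS_ITEMS}
    for before, after in zip(pre, post):
        s_pre = nars_subscales(before, **scale)
        s_post = nars_subscales(after, **scale)
        for name in NARS_ITEMS:
            diffs[name].append(s_post[name] - s_pre[name])
    return {name: mean(values) for name, values in diffs.items()}
```

Agreeing less with every item thus lowers S1 and S2 but raises S3, because lower agreement with the positively worded S3 items indicates a more negative attitude towards emotions in interaction with robots.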

| NASA-TLX Item Description | Use Case A (n = 5) | Use Case B (n = 5) |
|---|---|---|
| Mental Demand: How mentally demanding was the task? | 51.25% | 48.00% |
| Physical Demand: How physically demanding was the task? | 37.50% | 20.00% |
| Temporal Demand: How hurried or rushed was the pace of the task? | 20.63% | 59.00% |
| Performance: How successful were you in accomplishing what you were asked to do? | 86.88% | 71.00% |
| Effort: How hard did you have to work to accomplish your level of performance? | 53.13% | 38.00% |
| Frustration: How insecure, discouraged, irritated, stressed and annoyed were you? | 9.38% | 27.00% |

| Sub-Scale | SUS Sub-Scale Description | Use Case A (n = 5): Panel | Use Case A (n = 5): Robot | Use Case B (n = 5): Panel | Use Case B (n = 5): Robot |
|---|---|---|---|---|---|
| S1 | Usability (item mean) | 2.69 | 3.15 | 2.00 | 2.38 |
| S2 | Learnability (item mean) | 3.38 | 3.63 | 1.70 | 2.20 |
| | Total score (0%–100%) | 70.73% | 81.15% | 48.50% | 58.50% |
| | Score group | Users accept the system | Users like the system | Users do not like the system | Users do not like the system |
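Standard SUS scoring [51] converts each response (1 to 5) into a contribution from 0 to 4: the response minus 1 for the positively worded odd items and 5 minus the response for the negatively worded even items; the sum of contributions is multiplied by 2.5 to give the 0–100 score. For the two dimensions, Lewis and Sauro [56] assign Items 4 and 10 to learnability and the remaining eight items to usability, which are commonly rescaled to 0–100 with the factors 12.5 and 3.125. The sketch below implements this together with the score groups of Section 4.6. Reading the "item mean" rows above as mean contributions on the 0–4 scale is our assumption; it is consistent with the reported totals, e.g., 25 × (8 × 3.15 + 2 × 3.63) / 10 = 81.15 for the robot in Use Case A.

```python
def sus_scores(responses):
    """Score one completed SUS form.

    responses: ten answers coded 1 (strongly disagree) to 5 (strongly agree),
    in item order 1..10.
    """
    if len(responses) != 10 or any(not 1 <= r <= 5 for r in responses):
        raise ValueError("expected ten responses between 1 and 5")
    # Index 0 is item 1: odd items contribute r - 1, even items 5 - r.
    contrib = [r - 1 if i % 2 == 0 else 5 - r for i, r in enumerate(responses)]
    learn = contrib[3] + contrib[9]  # items 4 and 10
    usab = sum(contrib) - learn  # remaining eight items
    return {
        "sus": sum(contrib) * 2.5,
        "usability": usab * 3.125,  # rescaled to 0-100
        "learnability": learn * 12.5,  # rescaled to 0-100
        "usability_item_mean": usab / 8,  # mean contribution, 0-4
        "learnability_item_mean": learn / 2,  # mean contribution, 0-4
    }


def sus_group(score):
    """Score groups of Section 4.6; fractional scores use >= cut-offs."""
    if score >= 80:
        return "users like the system"
    if score >= 60:
        return "users accept the system"
    return "users do not like the system"
```

Applied to the totals in the table, `sus_group` reproduces the reported score groups for both use cases.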

| Sub-Scale | Subjective Acceptance Sub-Scale Description | Use Case A (n = 5): Mean (SD) | Use Case B (n = 5): Mean (SD) |
|---|---|---|---|
| S1 | System Trust (mean of Item 1 and inverted 6) | 3.75 (0.71) | 2.90 (0.42) |
| S2 | Safety (mean of Items 2 and 7) | 4.13 (0.18) | 3.00 (0.00) |
| S3 | Perceived Competence of the System Handling (mean of Items 3 and 8) | 4.38 (0.18) | 3.30 (0.71) |
| S4 | Performance Expectancy (mean of Items 4 and 9) | 3.00 (0.00) | 2.40 (0.00) |
| S5 | Effort Expectancy (mean of Items 5 and 10) | 4.13 (0.18) | 3.30 (0.71) |
| | Total score (0%–100%) | 79.50% | 61.60% |

| Short-Time Feedback Category | Use Case A (n = 5) | Use Case B (n = 5) | Total (n = 10) |
|---|---|---|---|
| Manual robot guidance is very bulky, especially with one hand. | 5 | 5 | 10 |
| The menu architecture of the touch panel is overwhelmingly complex. | 5 | 5 | 10 |
| Some menu buttons are too small to touch accurately. | 4 | 4 | 8 |
| Tap/swipe/scroll on the touch panel sometimes does not work. | 4 | 3 | 7 |
| Manual robot guidance is preferred (hand-pushed or via joystick). | 3 | 2 | 5 |
| The connecting cable of the touch panel is very bulky. | 2 | 1 | 3 |
| The angle of the robot arm/tool should be controllable (also for manual guidance). | 1 | 2 | 3 |
| The high number of coordinate axes makes controlling cognitively demanding. | 2 | 0 | 2 |
| Robot/screwdriver should be prepared so that shorter people can better handle it. | 2 | 0 | 2 |
| The teaching process should be linear (straightforward) featuring more feedback. | 1 | 1 | 2 |
| The robot arm should have more sensors to detect edges and obstacles. | 0 | 2 | 2 |
| The whole teaching process takes too long. | 0 | 2 | 2 |
| For smaller persons, the visual switch between panel and robot arm is difficult. | 1 | 0 | 1 |
| The automatic processing of surfaces should be configurable. | 0 | 1 | 1 |

| Long-Term Feedback Category | Use Case C (n = 5) |
|---|---|
| The robot will replace my job in the near future. | 5 |
| The robot determines my work and speed. | 4 |
| The robot should be more adaptive. | 4 |
| The robot does not stop at physical contact. | 4 |
| To work with the robot was a big transition. | 3 |
| The robot is a helpful tool. | 2 |
| The robot should have more anthropomorphic features. | 2 |

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).


Weiss, A.; Huber, A.; Minichberger, J.; Ikeda, M. First Application of Robot Teaching in an Existing Industry 4.0 Environment: Does It Really Work? Societies 2016, 6, 20. https://doi.org/10.3390/soc6030020


Weiss, Astrid, Andreas Huber, Jürgen Minichberger, and Markus Ikeda. 2016. "First Application of Robot Teaching in an Existing Industry 4.0 Environment: Does It Really Work?" Societies 6, no. 3: 20. https://doi.org/10.3390/soc6030020