Challenges in Getting Building Performance Monitoring Tools for Everyday Use: User Experiences with A New Tool

Abstract

1. Introduction

2. Tools for Building Performance Monitoring

2.1. Categorization of the Tools

2.2. Challenges in Adopting the Tools

- Lack of quantified evidence on the benefits of fault detection and diagnostics;

- Faults in HVAC systems rarely cause considerable economical losses or danger to safety or health;

- Lack of adequate sensors installed on HVAC systems;

- The sensitivity and robustness of FDD methods need more investigation;

- The time and cost of installing a FDD tool can be significant;

- Intelligent algorithms are often neglected in practice;

- The benefits of FDD are not realized since sufficient resources may not be available for the use of the FDD tools.

3. Method

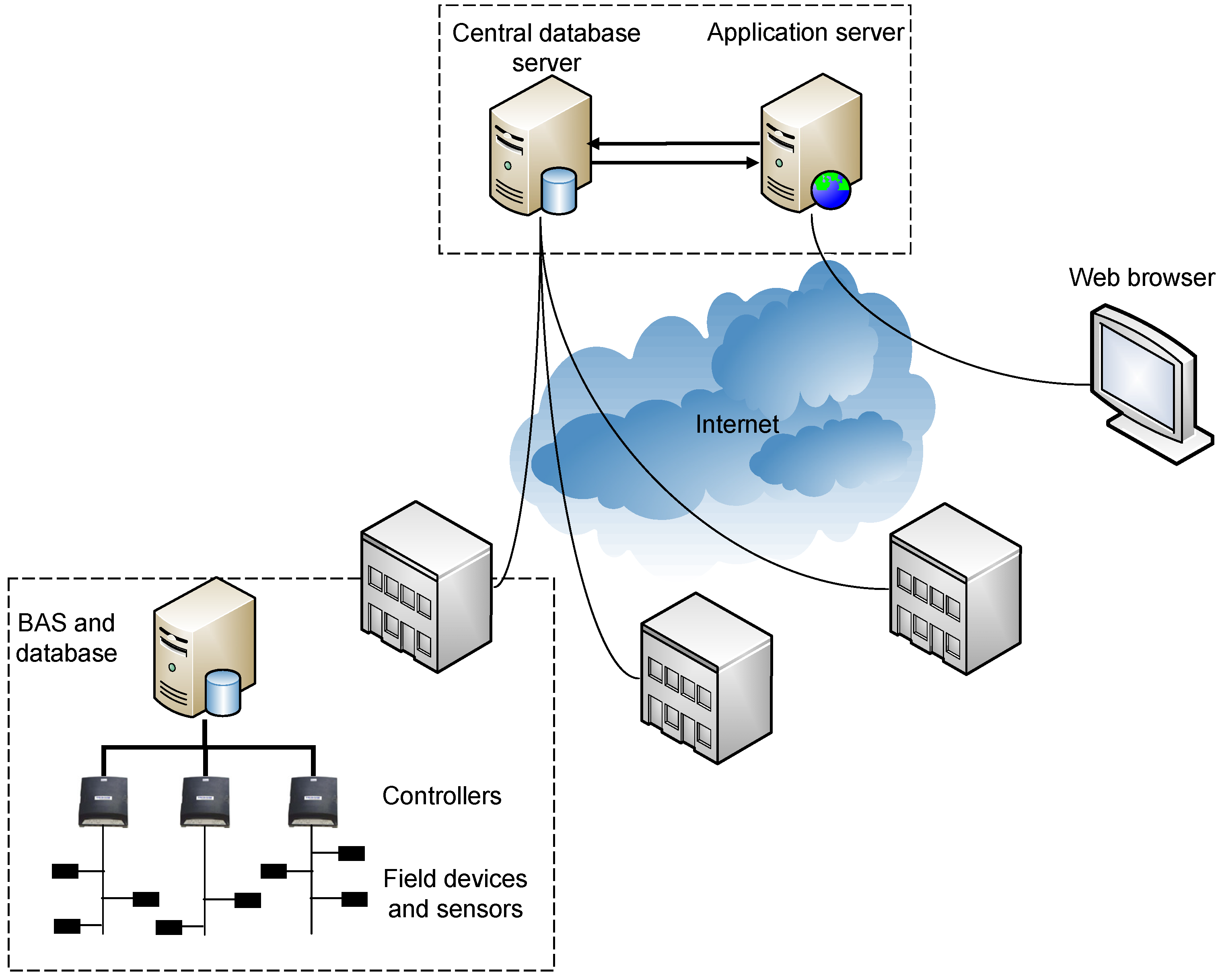

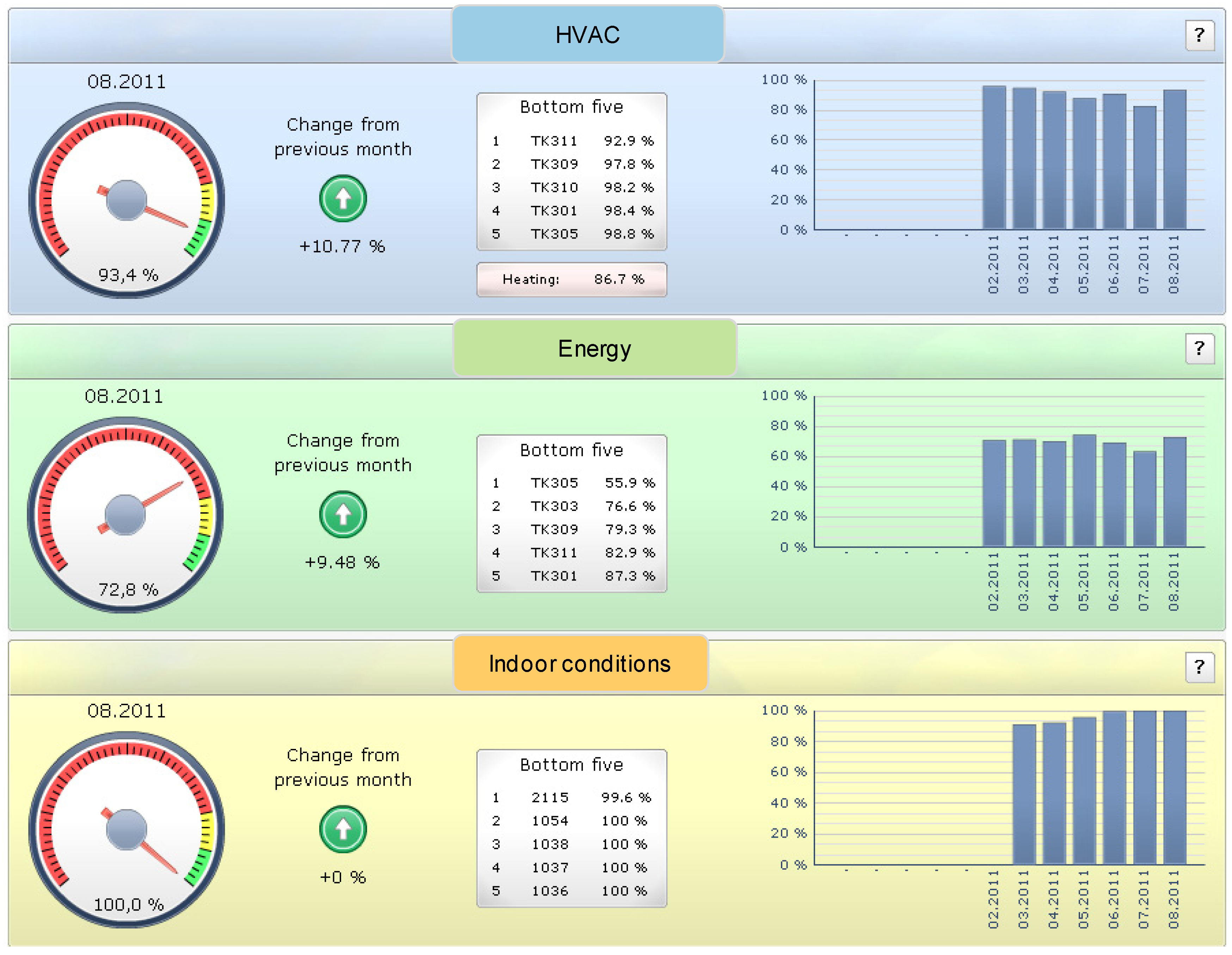

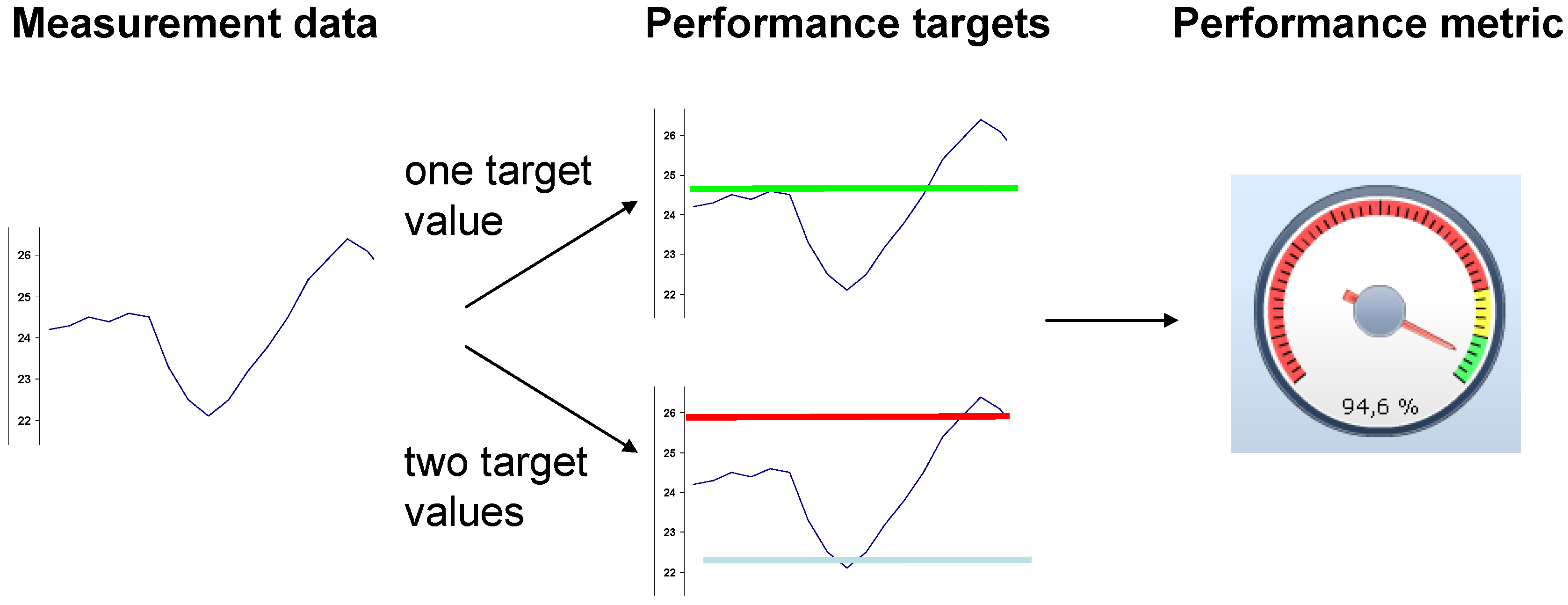

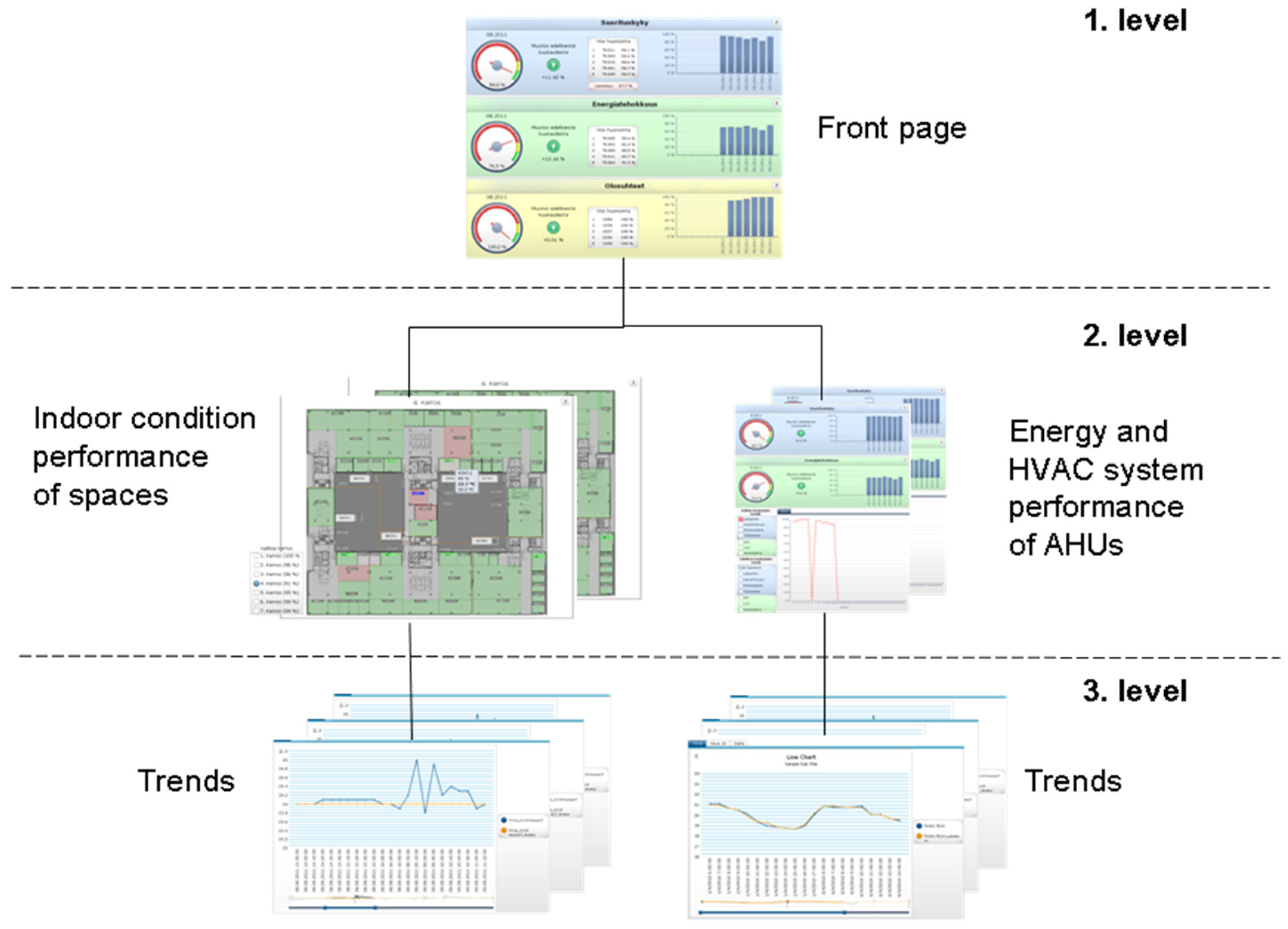

3.1. Performance Monitoring and Management System (PEMMS)

- Target values are generally seen as representing good performance, such as targets derived from building standards or guides;

- Target values represent good performance for the building or the equipment in question, such as targets presenting optimal operation or targets that can be achieved according to the equipment manufacturer.
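To illustrate how a measurement could be scored against target values like those above, here is a minimal sketch. It assumes a metric defined as the share of measurements that fall inside a target band. This definition, the function name `share_within_target`, the sample temperatures, and the 21 to 23 °C band are illustrative assumptions, not the calculation used by PEMMS.

```python
from typing import Sequence


def share_within_target(values: Sequence[float], low: float, high: float) -> float:
    """Return the percentage of measurements inside the band [low, high]."""
    if not values:
        raise ValueError("no measurements")
    inside = sum(1 for v in values if low <= v <= high)
    return 100.0 * inside / len(values)


# Hypothetical hourly room temperatures (deg C) and a hypothetical target band.
temps = [20.5, 21.0, 22.0, 22.5, 23.5]
score = share_within_target(temps, low=21.0, high=23.0)
print(f"{score:.0f}% of measurements within target")
```

A band taken from a building standard would correspond to the first kind of target value, and a band tuned to a specific building or device to the second.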

3.2. Qualitative Interview Study

| Interview question |
|---|
| Please tell us about your role and responsibilities in the building where the PEMMS is in use. |
| What information systems associated with building operation and maintenance do you use in your work? How often do you use these systems? |
| What deficiencies do you see in these systems? What kind of information would you need in your work? |
| Why did you acquire PEMMS? What kind of expectations did you have for the system? (only presented to persons who participate in the purchase decision making) |
| Have you participated in the development or the implementation of PEMMS? |
| In what ways has the implementation/use of PEMMS been supported or motivated? |
| What did you think about the training and the instructions? |
| Have you used the PEMMS? How often have you used it? Has the use been mandatory or voluntary? |
| For what purposes have you used the PEMMS? |
| What is your overall impression of the PEMMS and satisfaction with the system? |
| Has the system been useful in your work? |
| What do you think about the information quality or the user interface of the system? |
| How would you describe the difficulty or ease of use of the system? |
| What kind of changes have there been in working procedures/organizations/management practices? |
| What kind of benefits has the system provided? |
| What challenges do you see in the use of the system? |
| What would you improve in the system? |
| Do you intend to use the system in the future? |
| Do you intend to purchase similar systems in the future? (only presented to persons who participate in the purchase decision making) |

| No. | Role | Occupation | Duration of access | Building |
|---|---|---|---|---|
| 1 | Owner | HVAC specialist | 39 weeks | Office building 1 |
| 2 | Owner/user | Head of corporate real estate management | 6 weeks | Office building 2 |
| 3 | Owner/user | Facility manager | 6 weeks | Office building 2 |
| 4 | Service provider | Facility manager | 30 weeks | Commercial building |
| 5 | Service provider | Customer service manager | 29 weeks | Commercial building |
| 6 | Service provider | Customer service manager | 29 weeks | Commercial building |
| 7 | Service provider | Energy consultant | 8 weeks | Office building 2 |
| 8 | Service provider | Energy consultant | 7 weeks | Office building 2 |
| 9 | Service provider | Service man | 6 weeks | Office building 2 |
| 10 | Service provider | Service man | 4 weeks | Office building 2 |

4. Results

4.1. Implementation of the System

“In my opinion the training was sufficient at this point … you cannot learn so much at one time … it is easier if you familiarize yourself with the system, then you can ask more and understand better what it is about.”

“I thought that it (the training) was good … it is good that the training is short so that too much information is not given … usually you learn by doing … I believe that everyone can figure out themselves how the system works in detail.”

“The system is extremely clear. The only thing that is needed is to go through what the terms mean.”

“… some kind of manual, what the system enables and how you find it. You cannot remember everything after the training.”

4.2. Frequency and Purpose of Use

“I have only tested it (the PEMMS) for few times”

“I have just familiarized myself with it (the PEMMS).”

“I viewed the HVAC performance metrics, why is there good and poor air handling units. I took a glance at the spaces as well, how they are performing. It gave me good information. It was quite warm in there … the reason is not known … it has not yet been sorted out. It would require going to the site.”

“I suppose I will use it … I have a link to it on my computer. From there I can find it.”

4.3. Positive Experiences

“In my opinion this makes common sense. You can quickly see if everything is fine and if not then you can search what might be the problem behind it.”

“I think that this is clear this front page. There is no unnecessary information on the front page. You can see certain things at a glance”

“In my opinion this is very easy (to use). And then this is also very clear. This is not too complicated … even a stupid can understand this. You don’t need to be a technical expert to understand this.”

“The more there are devices in the building the better this (the PEMMS) services it … when there are large number of devices, it is difficult to see the overall performance ... some things can go unnoticed, there may not be alarms but still the process does not work optimally … this (the PEMMS) definitely assists … you can drill down to see what is wrong.”

“This is a monitoring application.”

“The history information is good. If something is creating problems all the time and if actions are taken to fix it, you can easily see what kind of effect it has on performance … The system provides advantage if you can predict things and you don’t need to hurry only after someone comes to complain. This would be good customer service … This is a tool of the future for maintenance men. It is about predicting things …”

4.4. Challenges and Proposals for Improvement

“The Computerized Maintenance Management Systems are often not updated … all the documents are not included … this is very common.”

“Alarm limits, you would get alarms to email stating that the performance has dropped under 50% or whatever the limit is.”

“The thing that would be needed and would be efficient is reporting once a month to email … it would be handy, you wouldn’t need to log in to the system.”

“If the system had an alarm feature … stating that something is wrong now … without logging in to the system”
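The email alarm that interviewees proposed could be approximated by a simple limit check. The sketch below assumes performance scores on a 0 to 100% scale keyed by unit name. The 50% default echoes the interviewee's example, while the function names, unit labels, and message format are hypothetical and are not features of PEMMS.

```python
from typing import Dict, List


def units_below_limit(scores: Dict[str, float], limit: float = 50.0) -> List[str]:
    """Return the names of units whose score is strictly below the limit."""
    return sorted(name for name, score in scores.items() if score < limit)


def format_alert(names: List[str], limit: float) -> str:
    """Build a one-line message body suitable for an email notification."""
    return f"Performance below {limit:.0f}%: {', '.join(names)}"


# Hypothetical scores for three air handling units.
scores = {"AHU-1": 82.0, "AHU-2": 47.5, "AHU-3": 50.0}
flagged = units_below_limit(scores)
if flagged:
    print(format_alert(flagged, 50.0))
```

Sending the message is deliberately left out. The point is only that the alarm condition reduces to comparing each score with a configurable limit.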

“I have just familiarized with the system and all these (calculation principles) have not entirely opened up to me.”

“It (the PEMMS) could be useful when you start to understand how the system functions and you begin to trust it.”

“The outdoor temperature determines how people perceive indoor conditions … there are those people that think 22 °C is cold … we cannot go and say that this temperature is good.”

“It is not able to interpret all, in all possible ways … for example does the night cooling or heating work as it is supposed to.”

“I would see that there is much to improve in the trending features … when I open this trend page, it does not tell me anything … some of the features were such that I did not even notice that something had happened.”

“Then the trending could be more user-friendly … the possibility to display more plots at a time … the x-axis could scale automatically.”

“Question marks could include information of the air handling units, such as the size of the unit.”

“Floor plan pictures could show which areas each air handling unit serves.”

“The system was sometimes slow to use. Could it be faster?”

“When you click a trend, could it be opened in another window?”

“Could the indoor air temperature be viewed from energy efficiency perspective in the floor plan reports?”

5. Discussion

5.1. Present Use of the System

5.2. Challenges and Proposals for Improvement

5.2.1. Information Accessibility

5.2.2. Trust in the System

5.2.3. Management Practices

- Management’s understanding and commitment;

- Company policies, directives and organization;

- Program responsibilities;

- Procedures and systems;

- Project selection and focus;

- Approved budget;

- Approved investment criteria;

- Training;

- Integrated information systems;

- Reports on savings achieved;

- Motivation;

- Marketing.

6. Conclusions

Author Contributions

Conflicts of Interest


© 2014 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).


Ihasalo, H.; Karjalainen, S. Challenges in Getting Building Performance Monitoring Tools for Everyday Use: User Experiences with A New Tool. Buildings 2014, 4, 222-243. https://doi.org/10.3390/buildings4020222
