1. Introduction

Structural empowerment (SE) is a widely used concept in the nursing literature, one that is often considered when funders and nurse administrators make important resource decisions, such as offering nursing employees educational or promotional opportunities. Structural empowerment is defined as one’s perceptions of access to information, resources, opportunities, support, and formal and informal chains of power [1]. In the nursing context, outcomes predicted by SE include psychological empowerment [1], burnout [2], job satisfaction [3], organizational commitment [4], turnover [5], and quality of nursing care [6].

When employees perceive that they have access to empowering structures, they are more likely to identify their workplace as a healthy work environment. Healthy work environments are known to be key determinants of positive nurse and patient outcomes. Aiken and colleagues studied the effects of the unhealthy work environments created by the extensive health care restructuring and downsizing that occurred internationally over a decade ago. These environments were shown to compromise nursing care, as evidenced by increased nurse burnout, intent to leave, adverse events, and patient morbidity and mortality [7,8,9,10]. Such findings prompted Dr. Heather Laschinger and nursing colleagues from the University of Western Ontario to attend to the notion of SE, leading to the development of the Conditions of Work Effectiveness Questionnaire I (CWEQ I; 58 items) and its shorter version, the CWEQ II. The CWEQ II has been studied and used frequently in nursing research since 2000 [11].

The CWEQ II is a 19-item measure with six subscales: opportunities (e.g., the extent to which one has the opportunity to gain new skills in his/her job), information (e.g., the extent to which one has access to information about the current state of the hospital), support (e.g., the extent to which one is provided with problem-solving advice), resources (e.g., the extent to which one has time to do job requirements), informal power (e.g., the extent to which one is sought out by work peers), and formal power (e.g., the extent to which one is rewarded for innovation in his/her role) [11]. Items are rated on a five-point Likert-type response scale with scores ranging from 0 (strongly disagree) to 4 (strongly agree). With the exception of the informal power subscale, which has four items, each subscale consists of three items. Mean subscale scores are summed to obtain a total SE score, with higher total scores indicating higher levels of SE.
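The scoring rule above (average the items within each subscale, then sum the six subscale means) can be sketched in Python as follows. This is a minimal illustration, not the official scoring procedure. The item-to-subscale assignment in `SUBSCALES` is a hypothetical key that matches only the subscale sizes described here: three items each, and four for informal power. `score_cweq2` is an illustrative helper name.

```python
from statistics import mean

# Hypothetical item-to-subscale key (1-based item numbers). It only matches the
# subscale sizes described in the text; check it against the official CWEQ II key.
SUBSCALES = {
    "opportunity": [1, 2, 3],
    "information": [4, 5, 6],
    "support": [7, 8, 9],
    "resources": [10, 11, 12],
    "formal_power": [13, 14, 15],
    "informal_power": [16, 17, 18, 19],
}
ALL_ITEMS = {item for items in SUBSCALES.values() for item in items}


def score_cweq2(responses):
    """Return (subscale means, total SE score) for one respondent.

    responses: dict mapping item number (1-19) to a rating from 0 to 4.
    """
    if set(responses) != ALL_ITEMS:
        raise ValueError("expected a rating for each of items 1-19")
    if any(not 0 <= rating <= 4 for rating in responses.values()):
        raise ValueError("ratings must lie between 0 and 4")
    subscale_means = {
        name: mean(responses[item] for item in items)
        for name, items in SUBSCALES.items()
    }
    # Total SE score: the sum of the six subscale means.
    return subscale_means, sum(subscale_means.values())


means, total = score_cweq2({item: 2 for item in range(1, 20)})
print(total)  # 12
```

On the 0 to 4 response scale, the total score therefore ranges from 0 to 24.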

There has been extensive evaluation of the CWEQ II. For example, a systematic review limited to psychometric studies of SE and psychological empowerment identified seven studies that evaluated the scale’s content, convergent, discriminant, predictive, concurrent, and factor structure validity and its reliability [12]. Other, more recent assessments of the instrument have focused on its cultural adaptation [13,14,15,16,17]. Although the psychometric properties of the CWEQ II have been examined extensively, these studies have relied exclusively on Classical Test Theory (CTT); the CWEQ II has not previously been assessed using more robust techniques such as Item Response Theory (IRT). Item Response Theory is a probabilistic model that uses item characteristics and one’s pattern of responses to estimate one’s overall level of the latent variable in question (e.g., perceptions of SE) [18]. In contrast, CTT methods do not take item characteristics into consideration. Item Response Theory also allows us to investigate the psychometric implications of using different response scales. The main purpose of this paper is to use IRT methods to examine the psychometric properties of the original five-point CWEQ II (five response categories), followed by an examination of a revised three-point CWEQ II (three response categories), using a large sample of staff nurses from British Columbia, Canada.

Item Response Theory addresses three shortcomings of CTT procedures. First, rather than assuming that all items in a scale have the same characteristics (e.g., the same level of difficulty), IRT methods account for potential differences between item characteristics when estimating respondents’ ability levels (i.e., their level of the construct in question). Second, whereas CTT is predominantly concerned with the psychometric characteristics of the overall scale or its subscales, IRT focuses on item-level analysis. Third, CTT methods provide a single estimate of the scale’s measurement precision (i.e., reliability) that applies to all items and all respondents. The disadvantage of a single reliability index is that measurement tends to be less reliable for individuals whose ability levels lie at the extreme ends of the spectrum [18,19]. In contrast, IRT methods assess the measurement precision of each item for individuals across a continuum of abilities.
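To make the contrast concrete, the sketch below computes Cronbach’s alpha, a common CTT reliability coefficient. The section does not name a specific CTT index, so alpha is chosen here for illustration. Whatever the data, the function returns one number that is implicitly applied to every respondent, regardless of where they fall on the SE continuum. The toy data are hypothetical.

```python
import numpy as np


def cronbach_alpha(scores):
    """Cronbach's alpha for an (n_respondents, k_items) array of item scores."""
    x = np.asarray(scores, dtype=float)
    k = x.shape[1]
    sum_item_var = x.var(axis=0, ddof=1).sum()  # sum of item variances
    total_var = x.sum(axis=1).var(ddof=1)       # variance of total scores
    return k / (k - 1) * (1 - sum_item_var / total_var)


# Hypothetical toy data: 5 respondents x 3 items rated 0-4.
data = [[0, 1, 0], [1, 1, 2], [2, 2, 2], [3, 4, 3], [4, 4, 4]]
print(round(cronbach_alpha(data), 2))  # 0.97, one value for every respondent
```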

Applying IRT analysis to the CWEQ II provides additional information about the scale that is unobtainable through CTT. This information can facilitate more accurate measurement of SE, better guide nurse leaders’ decision making with respect to the workplace environment, and provide direction toward leadership strategies that enhance SE. The specific research questions addressed by this study were as follows: (a) Does an IRT model fit SE data obtained from staff nurses? (b) Does evidence support reliable and valid interpretation of SE scores? and (c) How do validity and reliability change with different numbers of response options? We first provide an overview of IRT, in particular the polytomous IRT model applied to the study data, followed by a description of its underlying assumptions and the most common issues in IRT.

2. An Overview of IRT

Item Response Theory uses mathematical modeling based on the idea that the probability of selecting a ‘correct’ response to an item depends on one’s ‘ability level’ and on item characteristics such as ‘item difficulty’ [20]. For the CWEQ II, which uses a Likert-type response scale with no correct response, this means that the probability of selecting a particular response (e.g., strongly agree) depends on one’s overall level of SE (i.e., ability) and on item difficulty. Item difficulty pertains to the level of ability required to endorse the correct or, in this case, the higher response option. For example, for ‘easy’ items, even individuals with lower levels of SE would strongly agree. On the other hand, for ‘difficult’ items, only individuals with higher levels of SE would strongly agree.
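Consider a dichotomous item, for example ‘strongly agree’ versus any other response (a simplification assumed here for illustration). Its dependence on ability and difficulty is often written as the two-parameter logistic curve P(θ) = 1 / (1 + exp(-a(θ - b))). The sketch below uses hypothetical parameter values. It shows that a respondent of average SE (θ = 0) is likely to endorse an easy item and unlikely to endorse a difficult one.

```python
import math


def p_endorse(theta, a, b):
    """Two-parameter logistic probability of endorsing an item (e.g., 'strongly agree')."""
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))


theta = 0.0             # respondent with average SE
easy, hard = -1.5, 1.5  # hypothetical difficulty (b) values, slope a = 1
print(round(p_endorse(theta, 1.0, easy), 2), round(p_endorse(theta, 1.0, hard), 2))  # 0.82 0.18
```

When θ equals b, the probability of endorsement is exactly 0.5. This is one way to read ‘difficulty’ as a location on the SE continuum.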

The three main advantages of IRT are (a) invariant item characteristics, (b) item-level analysis, and (c) measurement precision. First, IRT procedures generate invariant item characteristics. For example, an item has the same level of difficulty regardless of the ability level of the respondent, which allows for a more valid comparison of the level of the construct across individuals or groups [20,21]. This overcomes CTT’s reliance on proportion-correct or mean scores to determine an individual’s ability level [20,21]. For example, in CTT, two individuals with different ability levels (levels of SE) may obtain the same mean score by strongly agreeing with the same number of items, even though those items differ in difficulty. Second, unlike CTT’s scale-level analysis, IRT procedures examine the psychometric characteristics of each item [18]. Third, IRT assesses the measurement precision of each item for individuals across a continuum of abilities [18]. In other words, the reliability of an item may differ from that of other items and may vary for individuals with different ability levels. For this reason, reliability estimates are considered more precise in IRT analysis than in CTT procedures [18]. Despite these advantages, IRT procedures also have drawbacks. First, IRT methods rest on relatively strict assumptions and sample size requirements. Second, IRT requires that each response option be endorsed by a certain proportion of respondents.

Polytomous IRT

Various IRT models are available for items with different types of response options. For example, a dichotomous IRT model can be applied to scales with correct/incorrect answers. Polytomous IRT, on the other hand, is typically used for items with a Likert-type response scale (e.g., strongly disagree to strongly agree) where there are no correct or incorrect responses. Item characteristics are known as item parameters, and individual ability levels are referred to as the latent trait (denoted θ). In polytomous models, the item parameters are estimated and illustrated using Item Response Curves (IRCs) [18]. They include the item’s location with respect to its ease or difficulty (denoted the b parameter) and the item’s ability to differentiate between individuals with high and low ability levels (denoted the a parameter). Unlike dichotomous IRT models, which have a single difficulty parameter (b), polytomous models have multiple difficulty parameters, one fewer than the number of response options. These are known as step difficulty parameters and reflect the ability levels required to endorse higher versus lower response options. In addition to item parameters, item and scale information curves and item fit are also typically examined in IRT procedures.
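One common way to write a polytomous model with a slope a_i and step difficulties b_{i1}, ..., b_{im_i} is the generalized partial credit model (GPCM). The 2PPC used in this study is usually described as a reparameterization of the GPCM, so the form below is offered as an assumed notation rather than PARDUX’s exact parameterization, and the scaling constant D is omitted. An item with m_i + 1 response options has m_i step parameters: m_i = 4 for the five-point CWEQ II and m_i = 2 for the three-point version. The empty sum for k = 0 is taken as 0.

```latex
P(X_i = k \mid \theta) =
  \frac{\exp\!\left(\sum_{v=1}^{k} a_i\,(\theta - b_{iv})\right)}
       {\sum_{c=0}^{m_i} \exp\!\left(\sum_{v=1}^{c} a_i\,(\theta - b_{iv})\right)},
  \qquad k = 0, 1, \ldots, m_i
```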

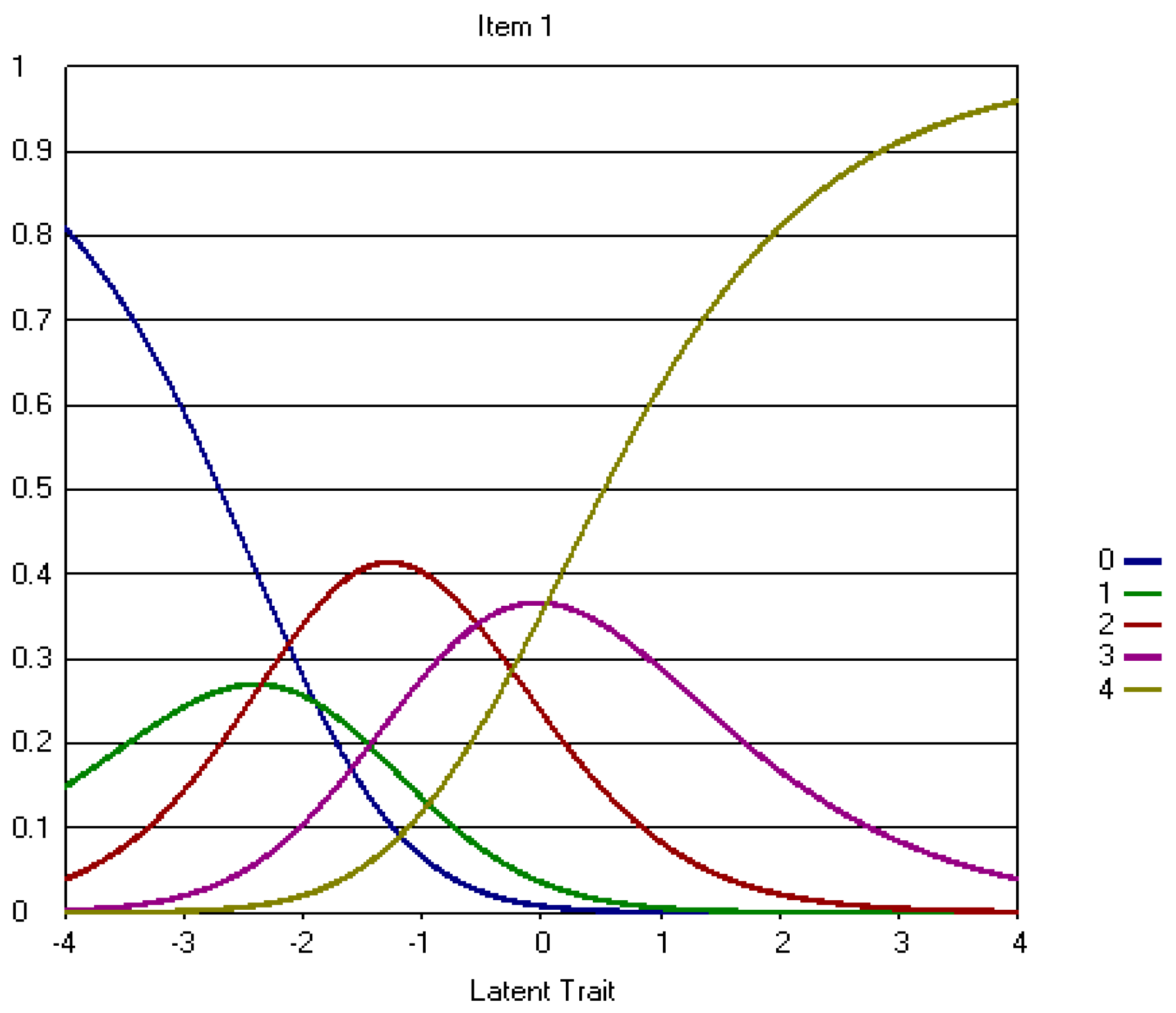

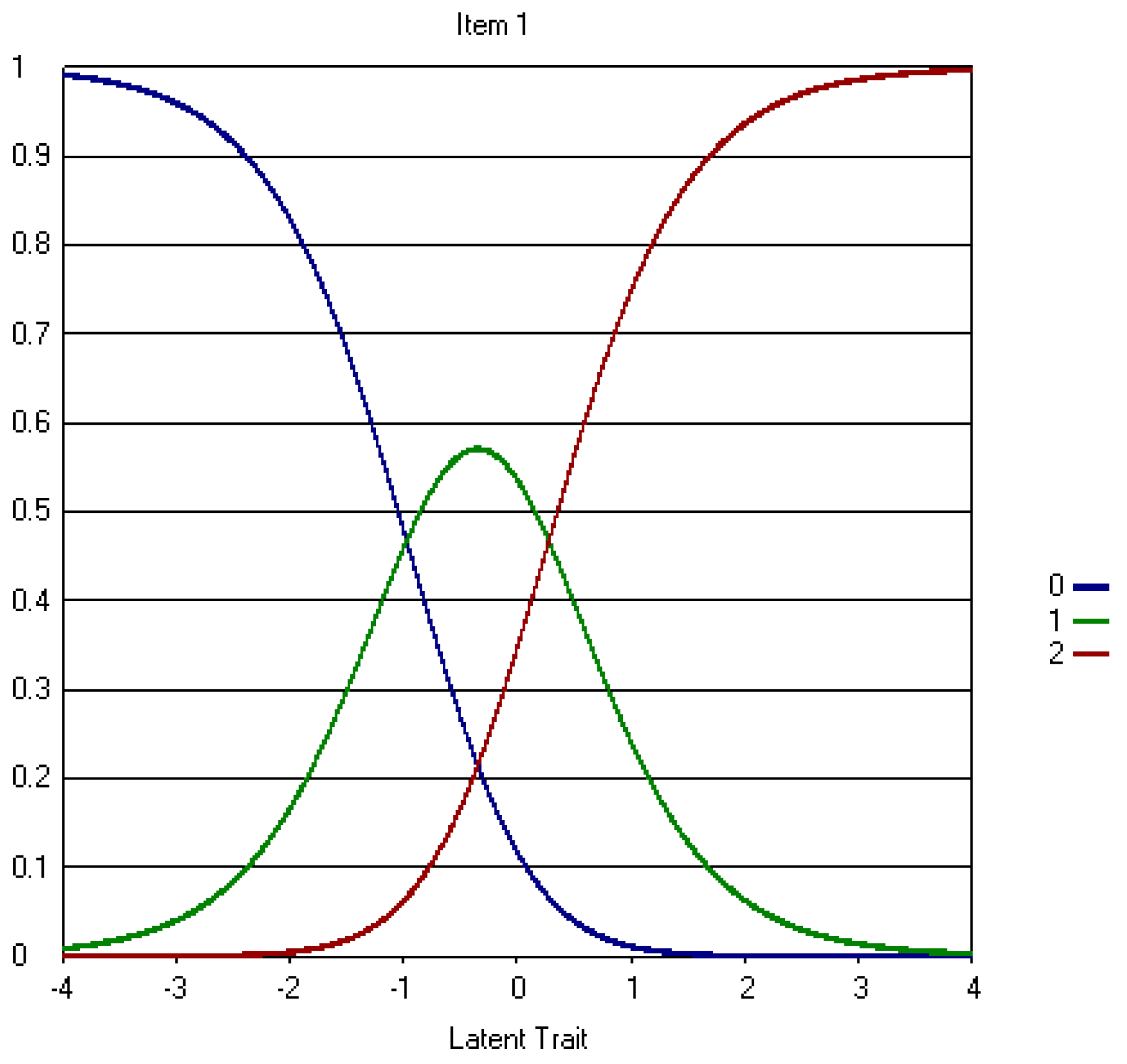

The probability of selecting each response option is obtained for individuals on a continuum of ability levels and illustrated on an IRC. In polytomous IRT, the b parameter is defined as the point on the ability continuum of an IRC at which two consecutive response option curves intersect [19] (see b1–b4 in Figure 1). Importantly, the step difficulty parameters should be ordered. Moving from a higher response option to the next higher one ought to be more difficult for respondents than moving from a lower response option to the next higher one. For example, in the CWEQ II, moving from agree (coded as 3) to strongly agree (coded as 4) is expected to be more difficult (i.e., to require a higher level of SE) than moving from neutral (coded as 2) to agree. This notion is also shown in Figure 1, where b1–b4 increase consecutively from lowest to highest. Also, if all of an item’s category response curves were located below or close to 0 on the continuum, the item would be considered fairly easy. In the case of the CWEQ II, this means that even staff nurses with low SE levels would be likely to select the highest response option.
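Assume the GPCM form. Then the ratio of adjacent category probabilities is P_k / P_{k-1} = exp(a(θ - b_k)), so consecutive curves cross exactly at θ = b_k. The Python sketch below checks this numerically for a hypothetical five-category item. It also flags disordinal (reversed) step difficulties. `gpcm_probs` and `steps_are_ordered` are illustrative helper names, not functions from PARDUX or IRT-lab.

```python
import numpy as np


def gpcm_probs(theta, a, b):
    """Category probabilities P(X = k | theta), k = 0..len(b), under a GPCM-type model.

    theta: scalar or 1-D array; a: slope; b: step difficulties.
    Returns an array of shape (len(theta), len(b) + 1).
    """
    theta = np.atleast_1d(np.asarray(theta, dtype=float))
    b = np.asarray(b, dtype=float)
    steps = a * (theta[:, None] - b[None, :])
    # Numerator exponents are cumulative sums of the steps; category 0 has exponent 0.
    z = np.hstack([np.zeros((theta.size, 1)), np.cumsum(steps, axis=1)])
    z -= z.max(axis=1, keepdims=True)  # guard against overflow in exp
    ez = np.exp(z)
    return ez / ez.sum(axis=1, keepdims=True)


def steps_are_ordered(b):
    """True when step difficulties strictly increase (no disordinal steps)."""
    return bool(np.all(np.diff(b) > 0))


a, b = 1.2, [-2.0, -0.5, 0.5, 2.0]  # hypothetical five-category item
for k, b_k in enumerate(b, start=1):
    p = gpcm_probs(b_k, a, b)[0]
    print(f"theta = b{k} = {b_k:+.1f}: P{k - 1} = {p[k - 1]:.3f}, P{k} = {p[k]:.3f}")

print(steps_are_ordered(b), steps_are_ordered([0.3, -0.4, 0.5, 2.0]))  # True False
```

Each printed line shows two equal probabilities, which is the intersection property described above. The second step vector mimics the kind of reversal reported for item 1.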

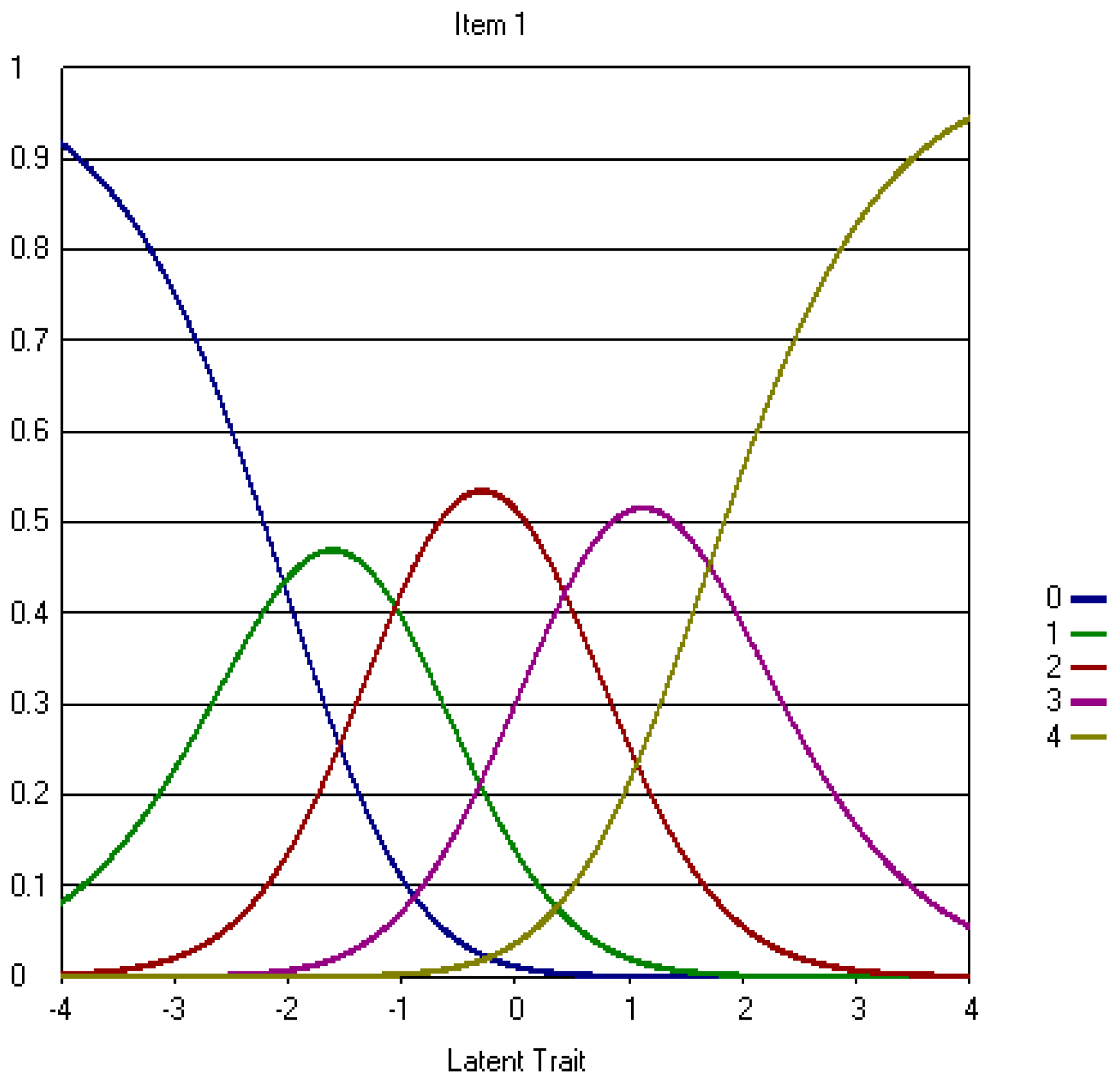

An item’s ability to differentiate between individuals with high and low ability levels (denoted the a parameter) is known as its ‘discriminant ability’. For polytomous items, a highly discriminating item is one whose response option curves are narrower and more sharply peaked, indicating that the response options differentiate fairly well between ability levels [19]. Well-discriminating items generally have a slope (a parameter) greater than 1.0 [18].
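The effect of the slope can be illustrated with the same assumed GPCM form. Take a hypothetical three-category item with steps at -1 and 1. Raising a from 0.5 to 2.0 makes the middle-category curve rise to a much higher peak, and only the steeper item clears the a > 1.0 guideline cited above. The parameter values are invented for illustration.

```python
import numpy as np


def gpcm_probs(theta, a, b):
    """Category probabilities under a GPCM-type model; shape (len(theta), len(b) + 1)."""
    theta = np.atleast_1d(np.asarray(theta, dtype=float))
    b = np.asarray(b, dtype=float)
    steps = a * (theta[:, None] - b[None, :])
    z = np.hstack([np.zeros((theta.size, 1)), np.cumsum(steps, axis=1)])
    z -= z.max(axis=1, keepdims=True)  # numerical stability
    ez = np.exp(z)
    return ez / ez.sum(axis=1, keepdims=True)


theta = np.linspace(-4.0, 4.0, 801)
b = [-1.0, 1.0]  # hypothetical three-category item (steps at -1 and 1)
for a in (0.5, 2.0):
    middle = gpcm_probs(theta, a, b)[:, 1]  # curve for the middle response option
    print(f"a = {a}: peak P(middle) = {middle.max():.2f}, a > 1.0: {a > 1.0}")
# prints peaks of about 0.45 (a = 0.5) and 0.79 (a = 2.0)
```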

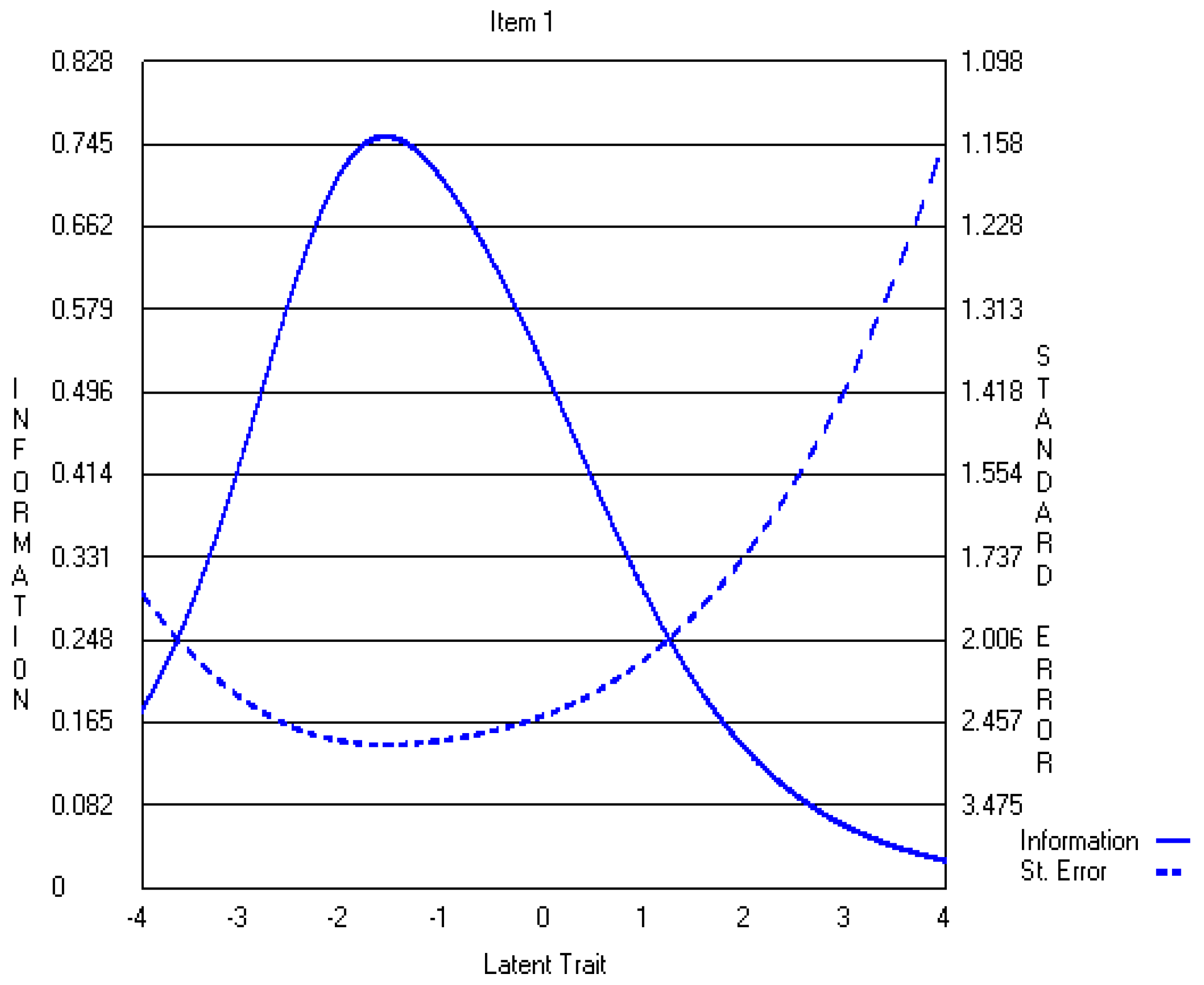

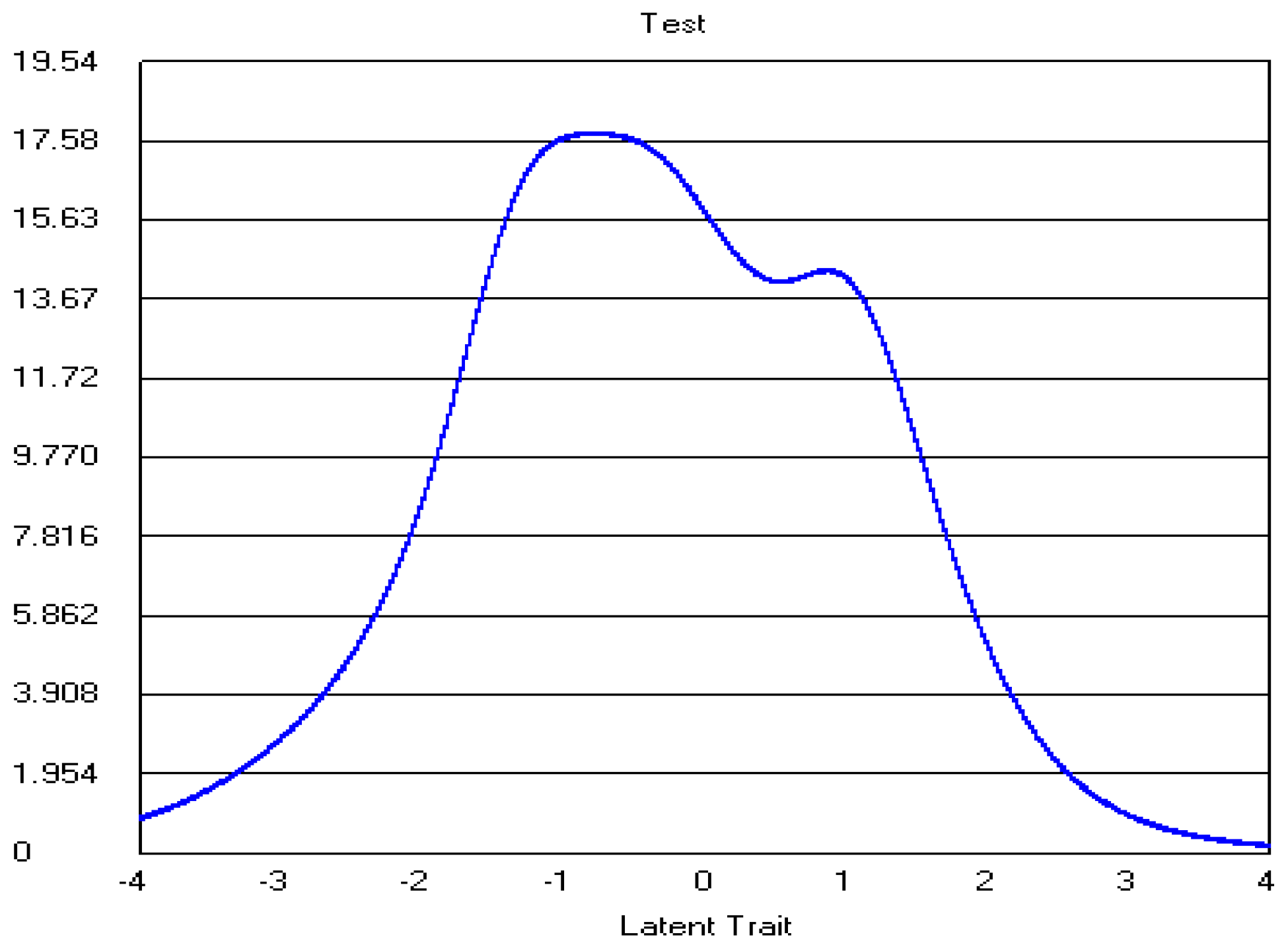

The information functions from each response option curve are combined to obtain an information function curve for the item. An item information curve demonstrates item reliability across a continuum of latent trait (SE) levels. Its peak marks the latent trait level at which the item measures most accurately, because the amount of information provided by an item is inversely related to its standard error of measurement [18]. More discriminating items are typically known to provide more information about the latent trait and are therefore more reliable [18]. Because there are no agreed-upon criteria for judging the adequacy of item information, Muraki recommended evaluating the graphical representation of item information curves as a measure of reliability [22]. Furthermore, item information curves are summed to obtain a test information curve, which demonstrates the overall reliability of the test for respondents on a continuum of ability levels [18].
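The GPCM-type model assumed in these sketches (with D = 1) gives item information a closed form, I(θ) = a² Var(X | θ). Test information is the sum over items, and the standard error of measurement is SE(θ) = 1 / sqrt(I(θ)). The sketch below applies these formulas to two hypothetical items. It is not the IRT-lab computation.

```python
import numpy as np


def gpcm_probs(theta, a, b):
    """Category probabilities under a GPCM-type model; shape (len(theta), len(b) + 1)."""
    theta = np.atleast_1d(np.asarray(theta, dtype=float))
    b = np.asarray(b, dtype=float)
    steps = a * (theta[:, None] - b[None, :])
    z = np.hstack([np.zeros((theta.size, 1)), np.cumsum(steps, axis=1)])
    z -= z.max(axis=1, keepdims=True)  # numerical stability
    ez = np.exp(z)
    return ez / ez.sum(axis=1, keepdims=True)


def item_information(theta, a, b):
    """Item information a^2 * Var(X | theta) for a GPCM-type item (D = 1)."""
    p = gpcm_probs(theta, a, b)
    k = np.arange(p.shape[1])     # category scores 0..m
    mean_k = p @ k                # expected item score at each theta
    var_k = p @ k**2 - mean_k**2  # conditional variance of the item score
    return a**2 * var_k


theta = np.linspace(-4.0, 4.0, 161)
items = [(0.6, [-2.0, -1.0, 0.0, 1.0]), (1.8, [-1.5, -1.0, -0.5, 0.5])]  # hypothetical (a, b)
test_info = sum(item_information(theta, a, b) for a, b in items)  # test information curve
sem = 1.0 / np.sqrt(test_info)  # standard error of measurement at each theta
print(f"most precise near theta = {theta[test_info.argmax()]:+.2f}; "
      f"SEM there = {sem.min():.2f}")
```

Plotting `test_info` against `theta` gives the kind of test information curve described above. Precision is highest where the curve peaks.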

Item fit depends on the consistency between respondents’ actual scores on an item and the item scores predicted by the model [20]. The Q1 chi-square statistic and its associated Z values are used as measures of item fit. For large sample sizes, Z values greater than 4.6 indicate poor fit [23].
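The section does not give the formulas, so the following is a sketch under stated assumptions. Q1 is taken to be a Pearson-type chi-square that compares observed and model-expected category proportions within groups of respondents with similar θ estimates. Z is the usual standardization of a chi-square value, Z = (Q1 - df) / sqrt(2df). The degrees of freedom depend on the number of groups, categories, and estimated parameters, so `df` is supplied by the caller. All numbers are hypothetical.

```python
import math


def q1_statistic(group_sizes, observed, expected):
    """Pearson-type Q1 summed over theta groups j and response categories k.

    observed[j][k] and expected[j][k] are the proportions of respondents in group j
    who chose category k, as observed and as predicted by the fitted model.
    """
    q1 = 0.0
    for n_j, obs_j, exp_j in zip(group_sizes, observed, expected):
        q1 += sum(n_j * (o - e) ** 2 / e for o, e in zip(obs_j, exp_j))
    return q1


def fit_z(q1, df):
    """Standardize a chi-square statistic: Z = (Q1 - df) / sqrt(2 * df)."""
    return (q1 - df) / math.sqrt(2 * df)


Z_CRITICAL = 4.6  # large-sample cut-off cited in the text

for q1, df in [(30.0, 10), (40.0, 10)]:  # hypothetical Q1 values and df
    z = fit_z(q1, df)
    print(f"Q1 = {q1:.1f}, df = {df}: Z = {z:.2f}, poor fit: {z > Z_CRITICAL}")
```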

3. Methods

This study was a secondary analysis of data from a larger quasi-experimental study that investigated the effects of a nursing leadership development program on nurse leader and nursing staff outcomes. The one-year program was offered three times a year between 2007 and 2010 to novice nurse leaders who were selected by their Chief Nursing Officers to participate. To determine the program’s effectiveness, data were gathered from the program participants and from staff nurses whose leaders had attended the program. Data were gathered at baseline, before the nurse leaders began the program, and one year later, after the leaders had completed it. Surveys were mailed to nurse leaders, who then distributed them to their staff. Only baseline data from staff nurses were used to examine the psychometric properties of the CWEQ II using IRT methods. Ethical approval for this research was obtained from the University of British Columbia Behavioural Ethics Review Board as well as the participating health authorities (H07-01559).

3.1. Sample and Sample Size Requirements

Baseline data from 1067 staff nurses were analyzed. Although there is no clearly defined sample-size requirement for IRT methods, the general rule of thumb is that sample size requirements increase with model complexity. For example, a sample size of 100 may be adequate for parameter estimation in the most basic IRT models, known as Rasch models [24]. However, more complex models, such as those required for polytomous scale analysis, require a sample size greater than 500 [25]. Yen and Fitzpatrick similarly reported that a minimum sample size of 500 would be sufficient for accurate parameter estimation of a 25-item scale with five response options using polytomous IRT [20]. Based on this evidence, a sample of 1067 nurses was deemed sufficient for this IRT study.

The majority of respondents were female (92%), with a mean age of 42 years (SD = 11). Sixty-three percent, 28%, and 9% of the staff nurses were working full-time, part-time, and on a casual basis, respectively. In relation to nursing education, about 55% of the sample had a nursing degree (BSN or MSN) and 45% had a nursing diploma. Half of the nurses had 16 or more years of nursing experience. With respect to health care setting, 74% of the nurses worked in acute care, followed by 23% in community care and 3% in long-term care. The most common clinical areas were medical and/or surgical units (26%) and emergency departments (13%).

3.2. Data Analysis

Descriptive statistics, exploratory factor analysis (EFA), and polytomous IRT techniques were used to examine IRT assumptions and answer the study research questions. Descriptive statistics and EFA were conducted using the Statistical Package for the Social Sciences for Windows 20.0 (SPSS Inc., Chicago, IL, USA). For polytomous IRT, the two-parameter partial credit model (2PPC) was selected to analyze the study data using PARDUX software [20]. Item parameters and person latent trait estimates for the 2PPC were obtained using marginal maximum likelihood algorithms in PARDUX. Software known as IRT-lab was then used to generate IRCs, item and test information function curves, and item fit statistics.
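The section does not describe how PARDUX scores individual respondents. The sketch below therefore swaps in a common alternative, expected a posteriori (EAP) estimation, to show how a fitted item model turns a response pattern into a θ estimate. It assumes that item parameters are already known, a standard normal prior, a GPCM-type response model, and a fixed grid of θ values. `eap_theta` is an illustrative name, and all parameter values are hypothetical.

```python
import numpy as np


def gpcm_probs(theta, a, b):
    """Category probabilities under a GPCM-type model; shape (len(theta), len(b) + 1)."""
    theta = np.atleast_1d(np.asarray(theta, dtype=float))
    b = np.asarray(b, dtype=float)
    steps = a * (theta[:, None] - b[None, :])
    z = np.hstack([np.zeros((theta.size, 1)), np.cumsum(steps, axis=1)])
    z -= z.max(axis=1, keepdims=True)  # numerical stability
    ez = np.exp(z)
    return ez / ez.sum(axis=1, keepdims=True)


def eap_theta(responses, items, n_points=81):
    """EAP estimate of theta for one respondent's response pattern.

    responses: chosen category (0..m) on each item; items: list of (a, b) pairs,
    treated as known. Uses an unnormalized N(0, 1) prior on a grid from -4 to 4.
    """
    grid = np.linspace(-4.0, 4.0, n_points)
    posterior = np.exp(-0.5 * grid**2)
    for x, (a, b) in zip(responses, items):
        posterior = posterior * gpcm_probs(grid, a, b)[:, x]  # multiply in each item's likelihood
    posterior /= posterior.sum()
    return float(grid @ posterior)  # posterior mean of theta


items = [(1.0, [-1.5, -0.5, 0.5, 1.5])] * 3  # three hypothetical five-category items
print(eap_theta([4, 4, 3], items) > eap_theta([1, 0, 1], items))  # True
```

Because every item enters through its own a and b, two respondents with the same raw sum can receive different θ estimates when their endorsed items differ in slope.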

3.3. IRT Assumptions

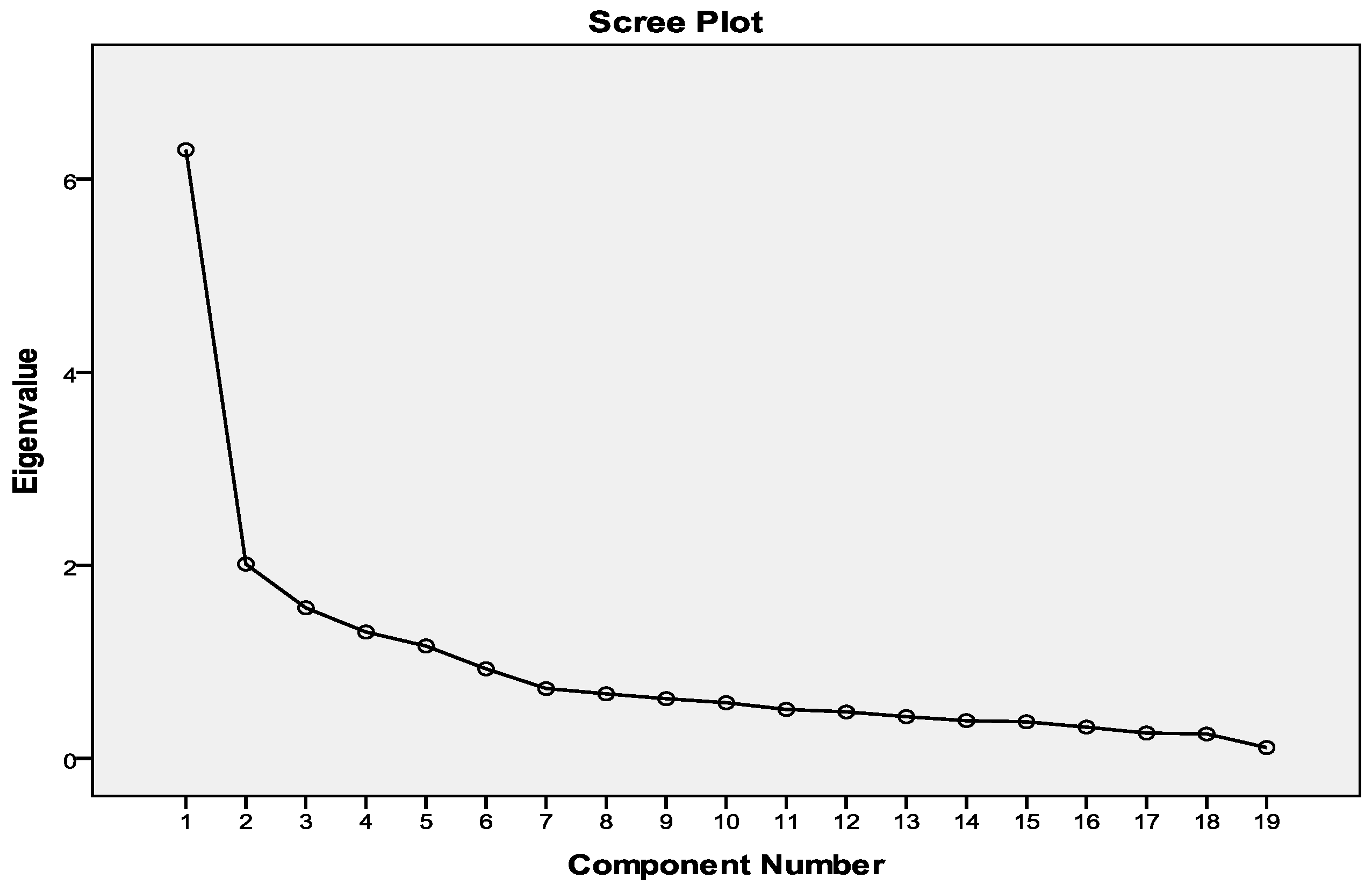

IRT analysis is founded upon three assumptions: unidimensionality, local independence, and a minimum frequency of response option selection. First, when the scale fits the applied IRT measurement model, its items measure only one underlying trait (i.e., are unidimensional) [26]. With respect to the CWEQ II, unidimensionality means that the variance in staff nurses’ item responses can be explained solely by their levels of SE. However, satisfying the strict assumption of unidimensionality is often impractical. This assumption may therefore be replaced by the notion of “essential unidimensionality”. Essentially unidimensional items may reflect several traits, but one factor clearly dominates [26]. Similar to Yen and Edwardson [26], Slocum-Gori and Zumbo suggested the ratio of the first to the second eigenvalue obtained through EFA as a criterion for meeting this assumption [27]. A first-to-second eigenvalue ratio greater than three was used as evidence of essential unidimensionality [26,27].
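The eigenvalue-ratio check can be sketched as below. The sketch assumes that the eigenvalues come from the Pearson inter-item correlation matrix, analogous to the initial eigenvalues reported in an EFA. It uses simulated data, not the study data, to show one case that passes the ratio > 3 criterion and one that fails it.

```python
import numpy as np


def eigenvalue_ratio(scores):
    """Ratio of the first to the second eigenvalue of the inter-item correlation matrix."""
    corr = np.corrcoef(np.asarray(scores, dtype=float), rowvar=False)
    eigenvalues = np.linalg.eigvalsh(corr)[::-1]  # eigvalsh returns ascending order
    return eigenvalues[0] / eigenvalues[1]


rng = np.random.default_rng(0)
factor = rng.normal(size=(2000, 1))
one_factor = 0.8 * factor + 0.6 * rng.normal(size=(2000, 6))  # simulated, not study data
noise_only = rng.normal(size=(2000, 6))
print(eigenvalue_ratio(one_factor) > 3, eigenvalue_ratio(noise_only) > 3)  # True False
```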

Local independence reflects the absence of correlation among items except through their relationship with the latent trait [26,28]. In other words, in the case of the CWEQ II, local independence holds if items are uncorrelated when SE is held constant. This also means that item residuals, or error terms, would be uncorrelated. Common causes of local dependence include respondent fatigue, external assistance or interference, and closely related item content [29]. Local independence is usually evaluated using the Q3 statistic: the correlation between a pair of item residuals after the latent trait (in this case, SE) is held constant [29,30]. According to Yen, Q3 values greater than .2 indicate that the assumption of local independence has not been met [29].
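The Q3 computation can be sketched as follows. The sketch assumes that the model-expected item score for each respondent, E[X_j | θ̂], has already been computed from the fitted model. Residuals are observed minus expected scores, Q3 is their pairwise correlation, and pairs above the .2 cut-off are flagged. The residuals in the usage example are simulated. Items 0 and 1 share extra variance to mimic closely related item content.

```python
import numpy as np


def q3_flags(observed, expected, cutoff=0.2):
    """Flag item pairs whose residual correlation (Yen's Q3) exceeds the cutoff.

    observed, expected: (n_respondents, n_items) arrays; expected[i, j] is the
    model-implied score on item j at respondent i's estimated theta.
    Returns a list of (item_a, item_b, q3) tuples.
    """
    resid = np.asarray(observed, dtype=float) - np.asarray(expected, dtype=float)
    q3 = np.corrcoef(resid, rowvar=False)
    n_items = q3.shape[0]
    return [(j, k, q3[j, k])
            for j in range(n_items)
            for k in range(j + 1, n_items)
            if q3[j, k] > cutoff]


rng = np.random.default_rng(1)
expected = np.zeros((1000, 4))  # stand-in for model-expected scores
shared = rng.normal(size=1000)  # extra variance shared by items 0 and 1
observed = expected + rng.normal(size=(1000, 4))
observed[:, 0] += shared
observed[:, 1] += shared
print([(j, k) for j, k, _ in q3_flags(observed, expected)])  # [(0, 1)]
```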

The third assumption relates to the frequency with which each response option is selected. Edelen and Reeve noted that parameter estimation is adversely affected if certain response options are infrequently selected (i.e., selected by 5% or fewer of respondents) [31]. In such situations, response options are collapsed to increase the proportion of responses in each option. Lecointe demonstrated that low-frequency categories can be combined with neighboring categories without seriously affecting item-level reliability [32]. Wakita and colleagues compared three versions of a questionnaire that comprised the same items but offered different numbers of response options (i.e., four, five, and seven) [33]. They noted that, with the seven-point Likert-type scale, participants tended to select more negative responses and avoided the response options at each end, compared with the four- and five-point scales [33]. Although reliability coefficients were independent of the number of response options, item values were negatively affected by a greater number of response options.
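The frequency screen and category collapsing can be sketched as follows. The 5% threshold follows the criterion cited above. The five-to-three recoding in `FIVE_TO_THREE` is a hypothetical mapping, because this section does not state which categories were combined to form the three-point CWEQ II.

```python
from collections import Counter


def category_proportions(responses, n_categories=5):
    """Proportion of respondents choosing each category 0..n_categories-1."""
    counts = Counter(responses)
    return [counts.get(k, 0) / len(responses) for k in range(n_categories)]


def sparse_categories(responses, n_categories=5, threshold=0.05):
    """Categories chosen by `threshold` (5%) or fewer of respondents."""
    props = category_proportions(responses, n_categories)
    return [k for k, p in enumerate(props) if p <= threshold]


# Hypothetical recoding of a 0-4 scale onto 0-2; not necessarily the study's mapping.
FIVE_TO_THREE = {0: 0, 1: 0, 2: 1, 3: 2, 4: 2}


def collapse(responses, mapping=FIVE_TO_THREE):
    """Recode each response through the collapsing map."""
    return [mapping[r] for r in responses]


item = [0] * 2 + [1] * 8 + [2] * 20 + [3] * 40 + [4] * 30  # invented responses, n = 100
print(sparse_categories(item))                               # [0]
print(category_proportions(collapse(item), n_categories=3))  # [0.1, 0.2, 0.7]
```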

5. Discussion

The purpose of this study was to examine the psychometric properties of the CWEQ II using IRT analysis. The three research questions were (a) Does an IRT model fit SE data obtained from staff nurses? (b) Does evidence support reliable and valid interpretation of SE scores? and (c) How do validity and reliability change with different numbers of response options?

With respect to the first research question, we found that the two versions of the CWEQ II fit the SE data equally well, as each version had only one poorly fitting item. With respect to the second research question, we found that validity and reliability were partially supported for both versions of the CWEQ II. Discriminant ability was poor for a majority of the five-point CWEQ II items, and item 1 demonstrated disordinal step difficulty parameters. Item reliability of the five-point version was supported for a relatively wide range of SE levels. With respect to the three-point CWEQ II, although the discriminant ability of items improved, it improved for a narrower range of SE levels. The disordinal step difficulty in item 1 of the five-point CWEQ II was resolved after response options were collapsed. This is expected, as reversals in step difficulty parameters have been found to originate from the infrequent selection of certain response options [34]. The three-point CWEQ II demonstrated increased reliability, but only for a relatively narrow range of SE scores. With respect to the third research question, a detailed comparison of the validity and reliability evidence for each version of the CWEQ II is presented in the following sections.

5.1. Data-Model Fit

The IRT results demonstrated an equally acceptable overall fit between the staff nurses’ data and the model for both the five-point and three-point versions of the CWEQ II. Item 9 was identified as poorly fitting the data in the original CWEQ II. However, it was retained in the analysis because its Z value was close to the acceptable fit criterion. This decision was further justified because items ought not to be deleted on the basis of item statistics alone, but also in light of item content [35]. We believe that the content of item 9, related to problem-solving advice, reflects an important and unique aspect of access to support in one’s work environment. The fit of item 9 improved after the CWEQ II response options were collapsed, but item 19 showed poor fit in the three-point CWEQ II, although with a value close to the acceptable criterion. Yen and Fitzpatrick reported that using the wrong IRT model is one potential reason for obtaining poorly fitting items [20]. This may be the case here. A partial credit model, which is most appropriate for unidimensional constructs, was used to fit potentially multidimensional data, consistent with the five-factor structure suggested by the EFA results in this study.

5.2. Validity and Reliability

A majority of the five-point CWEQ II items showed poor discriminant ability and low step difficulties. These items were predominantly from the opportunity, resources, and informal power subscales. Their poor discriminant ability means that these CWEQ II items were insufficiently able to differentiate between high and low levels of SE: small changes in SE levels do little to change the probability of respondents selecting a particular response option. In addition, most items were identified as relatively easy, because even individuals with lower levels of SE would strongly agree with them. However, the discriminant ability of most items increased and their difficulty levels decreased after response options were collapsed. This contrasts with Muraki’s finding that the discriminant ability of most items decreased as a result of collapsing response options [22]. Collapsing the response options also resolved the disordinal step difficulty noted in item 1 of the five-point CWEQ II and enhanced the item’s precision of measurement. This may suggest that a three-point response scale would have been more appropriate for this item. However, this was not done, in order to maintain consistency and face validity.

Item information function and standard error of measurement curves confirmed that a majority of items from both versions of the CWEQ II provided the most information, and consequently the most reliability, at lower (but not the lowest) levels of SE. The three-point CWEQ II generally produced narrower item information curves than the five-point scale. Nevertheless, the test information curve showed that, overall, the five-point CWEQ II is more reliable for a wider range of SE levels. This finding is consistent with previous literature that also found that measurement precision was lost once response options were collapsed [22].

In summary, we first acknowledge that validity is not a binary condition but rather a matter of degree [36]. Second, unlike traditional views that describe validity as a property of the measure itself, more contemporary views describe it as the extent to which empirical evidence supports the intended meaning of test scores for their proposed purpose [37,38]. In other words, measurement scholars acknowledge that “validity is about whether the inference one makes is appropriate, meaningful, and useful given the individual or sample with which one is dealing, and the context in which the test user and individual/sample are working” [38] (pp. 220–221). Based on this description, we expect the degree of validity of a measure to vary by context and/or respondents’ characteristics [37,38]. The above evidence suggests that both versions of the CWEQ II are less valid and reliable measures when used with nurses who have high levels of SE than when used with nurses who have lower (but not the lowest) levels of SE. Employees’ perceptions of SE reflect the conditions of their workplace environments. Therefore, the CWEQ II may not be the most appropriate measure of SE among nurses working in more ideal workplace environments such as “magnet hospitals”. Magnet hospitals derive their accreditation, in part, by providing staff with optimal access to empowering structures.

5.3. Implications

The CWEQ II requires further refinement before it can measure a wide range of SE levels with an adequate degree of validity and reliability. We acknowledge that this recommendation rests on an assumption: that all workplace environments provide some degree of access to empowering structures, and consequently that SE levels are not expected to fall at the far left of the continuum (e.g., θ < −3). This is important because item reliabilities were also poor at the extreme left of the SE continuum.

Researchers may not know the workplace environment of interest, which precludes estimating the overall level of nurses’ SE. In such situations, the five-point CWEQ II may be a more valid and reliable measure of SE than the three-point version. However, the three-point CWEQ II provides a more valid and reliable interpretation of SE scores when nurses present with a restricted range of SE levels. It is often impossible to estimate the range of ability (in this case, SE) in a study sample prior to data analysis. If that information is available, however, the three-point CWEQ II may be the better option because of its higher discriminant ability within a particular narrow range of SE levels. For the same reason, the three-point CWEQ II may be a better tool for pre-post designs that aim to capture changes in individuals’ SE levels over time, or for comparing SE levels between settings or groups of nurses. In short, given that perceptions of SE are shaped by environmental conditions, the appropriate use of each version of the scale depends on the work setting targeted. This finding is similar to Chang’s conclusion that “the issue of selecting four- versus six-point scales may not be generally resolvable, but may rather depend on the empirical setting” [39] (p. 205).