1. Introduction

Traffic noise is one of the main pollutants in urban and suburban areas and affects the quality of life of their citizens. As cities grow in size and population, the consequent increase in traffic makes this problem ever more present and bothersome. To address this issue, European authorities have driven several initiatives to study, prevent and reduce the effects of the population's exposure to traffic noise. Among them, the European Noise Directive (END) [1] focuses on the creation of noise level maps to inform citizens of their exposure to noise, as well as on drawing up appropriate action plans to reduce its negative impact. In general terms, these maps represent the equivalent noise level ($L_{eq}$) and are updated every five years [1]. This is a costly and time-consuming process undertaken by local and regional governments, and the resulting action plans can only be implemented and evaluated every five years.

Historically, these noise maps have been generated from costly expert measurements made with certified devices over short-term periods chosen to be as representative as possible. However, this classic approach has recently undergone a dramatic change of paradigm thanks to the emergence of the so-called Wireless Acoustic Sensor Networks (WASNs) [2]. WASNs have been developed under the paradigms of both the Smart City and the Internet-of-Things.

In the literature, we can find different WASNs designed and deployed for several outdoor applications, some of which focus on security and surveillance purposes through the identification and localization of specific sounds related to hazardous situations. For instance, in [3], an ad-hoc WASN was designed to detect and locate shots for sniper detection. More recently, in [4], a WASN based on the FIWARE platform was deployed to locate and identify a broader range of sound sources, such as people screaming or talking loudly, gunshots, horns, road accidents, etc., by including ambisonic microphones in the network. Finally, although no specific WASN is explicitly described, the proposal in [5] focuses on the detection of road traffic accidents through the acoustic identification of tire skidding and car crashes.

Moreover, WASNs have also been deployed for city noise management, which involves noise mapping, action plans development, policing and improving public awareness, among others [6]. For instance, the SENSEable project [7] proposed a WASN to collect information about the acoustic environment of the city of Pisa (Italy) using low-cost acoustic sensors to study the relationship between public health, mobility and pollution through the analysis of citizens’ behaviour. Projects which have adopted a quite similar approach include the IDEA project in Belgium [8], the RUMEUR network in France [9] with a specific focus on aircraft noise, the ‘Barcelona noise monitoring network’ that is integrated in the Sentilo city management platform in Spain [10], or the DYNAMAP project, which is aimed at developing a dynamic noise mapping system able to detect and represent in real time the acoustic impact of road infrastructures in the cities of Rome and Milan (Italy) [11].

Nevertheless, the WASN paradigm poses several challenges, ranging from those derived from the design and development of the wireless sensor network itself [12,13], e.g., energy harvesting and low-cost hardware development and maintenance, to specific challenges derived from the automation of the data collection and subsequent signal processing (see [2,14] and references therein). For instance, if the WASN is explicitly designed to measure road traffic noise levels, any acoustic event produced by any other noise source that could alter the measurement (e.g., an aircraft flying over, nearby railways, road works, bells, crickets, etc.) should be detected and removed from the map computation to provide a reliable picture of the road noise level.

The detection of sound events is typically based on the segmentation of the input acoustic data into audio chunks that represent a single occurrence of a predefined acoustic class, separating them from other overlapping events if necessary [15], a task that is also denoted in the literature as polyphonic sound event detection, e.g., see [16,17]. Acoustic event detection and classification is closely related to the so-called computational auditory scene analysis (CASA) paradigm [18], which is devoted to acoustic scene classification and detection of sound events within an acoustic scene or soundscape [15]. These approaches typically take advantage of being trained on specifically designed environmental databases containing the target finite set of acoustic classes (e.g., see [15,19,20]).

Therefore, it is necessary to design and develop representative audio databases containing this kind of non-desired sounds, hereafter denoted as anomalous noise events (ANE) [5,21], to allow the signal processing block to automatically detect and discard them when captured by the WASN. However, the highly local, occasional, diverse and unpredictable nature of ANEs [21,22], together with the naturally unbalanced distribution of acoustic events in real-life environments [20,23], makes it difficult to build acoustic databases that are representative enough. Such representativeness is of paramount importance to make the WASN robust to ANEs, and it should take into account the acoustic salience [24,25,26] of the anomalous noise event with respect to the background noise (i.e., a salient ANE should be detected and removed from the road traffic noise computation to avoid biasing the noise map generation). To tackle this problem, several works have built these databases by artificially mixing background noise with ANEs under different event-to-noise ratio distributions (hereafter, signal-to-noise ratio or SNR), mixing real background recordings with event excerpts from online digital repositories [21,27] or from isolated individual recordings of actual sound sources [19,22]. Although this approach can help to improve the training process [22], it can also yield results that do not represent what is actually found in real-life environments due to acoustic data misrepresentation [28].

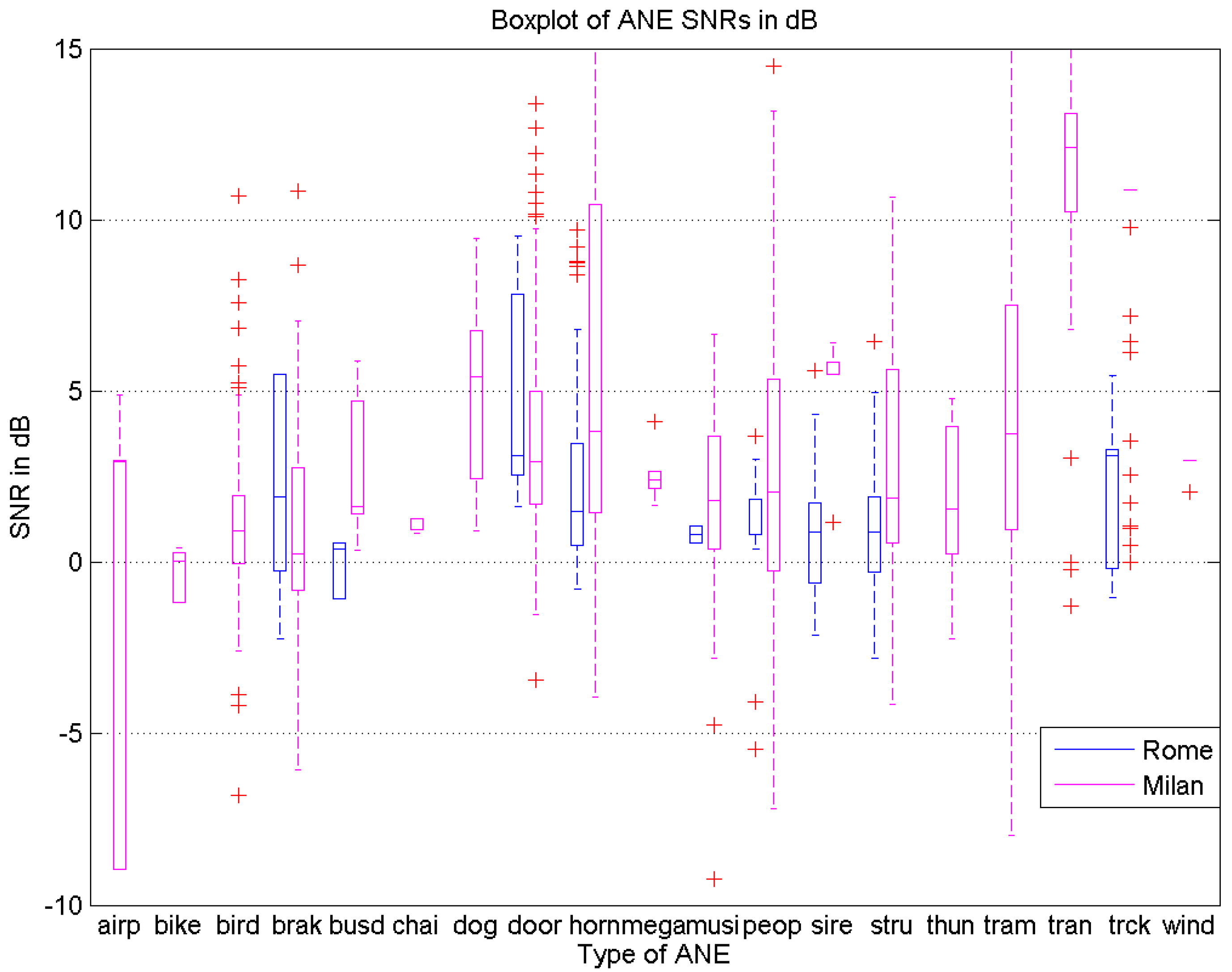

This article describes the design, recording campaign and analysis of a real-life environmental audio database of anomalous noise events gathered in the two pilot areas of the DYNAMAP project (http://www.life-dynamap.eu) [11]: an urban area within Milan’s district 9, and a suburban area along the Rome A90 highway. After manually labelling the collected samples of road traffic noise, background noise and anomalous noise events (subsequently divided into 19 subcategories), the description of the ANE is enriched through the automatic computation of their acoustic salience, defined as the contextual SNR of the event with respect to the traffic or background noise. The paper is completed with a comprehensive discussion of the obtained results, which sheds light on several key aspects that should be taken into consideration when developing ANE databases for machine hearing approaches in real-life environments, and on their implications for the modelling and automatic recognition of anomalous noise events, whose development is out of the scope of this work. To our knowledge, no previous study has explicitly considered the complexity of real-life soundscapes in order to provide a deployed WASN with the capability to discard ANEs from the traffic noise map computation.

This paper is structured as follows. The work related to the most relevant previous attempts to generate environmental audio databases is explained in Section 2. Section 3 describes the generation and labelling of the real-life environmental audio database in the urban and suburban scenarios of the two pilot areas of the DYNAMAP project, and provides a detailed description of the recording campaign, the annotation of ANE and the automatic process used to annotate the ANE contextual SNRs. Section 4 analyses the ANE of both urban and suburban audio data in terms of occurrences, durations and SNRs. Next, Section 5 discusses in detail the results obtained from the database analyses, and the paper finishes with the main conclusions and future work in Section 6.

3. Environmental Noise Database

In this section, the environmental audio database recorded and built in real-life conditions for the DYNAMAP project is detailed. Section 3.1 describes the on-site inspection of the urban and suburban pilot areas and the recording campaign conducted to collect samples of both road traffic noise and anomalous noise events. The subsequent generation of the audio database, including the labelling and the computation of the acoustic salience (expressed as SNR), is described in Section 3.2.

3.1. Urban and Suburban Pilot Areas On-Site Inspections and Recording Campaign

The main goal of the recording campaign was to collect widely diverse samples of road traffic noise and anomalous noise events in order to provide a general picture of the real-life scenarios in which the dynamic noise mapping system will operate. To that effect, several recordings were conducted between 18 and 21 May 2015 in specific locations of the two pilot areas of the DYNAMAP project, covering both urban (Milan) and suburban (Rome) scenarios. These locations were selected according to representative traffic conditions and acoustic characteristics of the pilot areas (see [36,37] for further details).

The WASN of the DYNAMAP project is based on low-cost acoustic sensors from Bluewave [38]. According to the project requirements, the recordings were conducted using two measuring devices simultaneously: the low-cost sensor connected to a ZOOM H4n digital recorder (see Figure 1b), and a Bruel & Kjaer 2250 sound level meter (see Figure 1a), the latter being used as a certified reference. However, only the audio data obtained from the low-cost sensor are used for the subsequent analyses. The recording setup was the following:

Situation of both measuring devices: 50 cm distance between them.

Sampling: 48 kHz sampling rate with 24 bits/sample.

Sensitivity verification using a 94 dB SPL, 1 kHz calibration tone.

Clapping: in order to align the audio recordings from both measuring devices, a 5 s sequence of clapping was performed between both sensors, at a separation that ensured a very good signal-to-noise ratio despite the environmental noise.

Gain adjustment: the input gain of each recorder was selected to leave enough headroom for the on-site audio dynamics (no saturation).

Installation: both recording systems were installed on a tripod and included a windscreen to protect the sensor from wind.

Orientation: the final orientation of the DYNAMAP low-cost sensors with respect to the traffic flow is still undefined. For this reason, recordings were made with three orientations: with the sensor pointing in the direction of the traffic (forward orientation), in the opposite direction (backward), or orthogonal to the vehicle flow. Moreover, three elevation angles of the sensor positions were also employed: 0°, 45° and −45°.
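The clap-based alignment in the setup above can be illustrated with a cross-correlation sketch. This is a minimal example and not the procedure actually used in the campaign: the function name `estimate_lag` is hypothetical, and it assumes both recordings share the same sampling rate and that only the excerpt containing the claps is passed in.

```python
import numpy as np


def estimate_lag(reference: np.ndarray, other: np.ndarray) -> int:
    """Return how many samples `other` is delayed with respect to `reference`.

    A negative value means that `other` leads `reference`.
    """
    # Zero-pad so that the FFT-based (circular) correlation does not wrap around.
    n = len(reference) + len(other) - 1
    spectrum = np.fft.rfft(other, n) * np.conj(np.fft.rfft(reference, n))
    corr = np.fft.irfft(spectrum, n)  # cross-correlation for every lag
    peak = int(np.argmax(corr))
    # Indices past the positive-lag range encode negative lags.
    return peak if peak < len(other) else peak - n
```

Once the lag is known, the later recording can be trimmed by that number of samples so that both signals start at the same instant.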

Between 18 and 19 May 2015, the recordings were conducted at six sites along the A90 highway in Rome to collect suburban audio samples. They constituted a representative subset of the 17 sites in this pilot area according to the following four classes [36]: single road, additional crossing or parallel roads, railway lines running parallel to or crossing the A90 motorway, and a complex scenario including multiple connections. In particular, the recording equipment was installed on six highway portals owned by the DYNAMAP partner ANAS S.p.A. (see Figure 2), a government-owned company under the control of the Ministry of Infrastructure and Transport in Italy. During these recordings, the weather was dry and sunny, with an average temperature of 19 °C.

From 20 to 21 May 2015, the recording campaign moved to the district 9 pilot area in Milan to collect urban road traffic noise samples in twelve locations at different times of day and night following [37] (see Figure 3 for examples of the recording setup). More precisely, the recordings were conducted at the following locations:

1. Near a hospital, including tramways and low traffic.
2. One-way road with very low traffic.
3. Highly dense but slow traffic, with tramways, stone road surface, traffic lights and traffic jams.
4. Railways, very low traffic.
5. Tram and railways, fast fluid traffic flow (multi-lane).
6. City center, shopping road, crossroad with traffic lights. Wet road surface.
7. Very low fluid traffic two-way road at night (multi-lane).
8. Two-way road with fluid traffic near university (multi-lane).
9. The same location as number 8 but with wet road surface.
10. Narrow two-way road with fluid traffic in a residential area.
11. Narrow two-way road with very-low-density traffic near a school.
12. Low traffic, narrow one-way street near the city council.

During the Milan recordings the weather was quite sunny, except on the second day when there were thunderstorms during one of the recordings, and it was possible to record the noise of the thunder as well as road traffic noise with wet road surface.

As a result of the four-day recording campaign across the suburban (Rome) and urban (Milan) scenarios, a total of 9 h and 8 min of audio was collected and prepared for the subsequent labelling and post-processing phase, which is described in Section 3.2. For more details about the recording campaign, the reader is referred to [39].

3.2. Real-Life Urban and Suburban Environmental Audio Database Generation

After the recording campaign, a post-processing phase was conducted in order to normalize and label all the recorded audio files and export them as analyzable audio clips. To that effect, we used the free Audacity software, generating a total of eighteen audio projects, one for each session of the recording campaign. Six projects corresponded to the suburban recordings and twelve to the urban recordings, and their amplitude was normalized by using the calibration tone. Finally, each audio event was manually detected and labelled (including start and end points) by an expert annotator to guarantee the reliability of the annotation. The expert annotator was asked to annotate only those ANEs perceptually distinguishable from the background road traffic noise or from other acoustic events simultaneously occurring in the acoustic mixture.
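The amplitude normalization with the calibration tone can be sketched as follows. This is only an illustration under stated assumptions: the text does not detail how the normalization was carried out in Audacity, the function names `calibration_gain` and `level_db_spl` are hypothetical, and the recorded tone is assumed to be a clean excerpt of the 94 dB SPL, 1 kHz signal.

```python
import numpy as np

P_REF = 20e-6  # reference sound pressure in pascals (0 dB SPL)


def calibration_gain(tone: np.ndarray, tone_level_db: float = 94.0) -> float:
    """Gain that scales raw samples to pascals, given a recorded calibration tone."""
    rms = np.sqrt(np.mean(np.asarray(tone, dtype=np.float64) ** 2))
    target_rms = P_REF * 10.0 ** (tone_level_db / 20.0)  # 94 dB SPL is about 1 Pa
    return target_rms / rms


def level_db_spl(pressure: np.ndarray) -> float:
    """Overall level of a calibrated signal (in pascals) in dB SPL."""
    rms = np.sqrt(np.mean(np.asarray(pressure, dtype=np.float64) ** 2))
    return 20.0 * np.log10(rms / P_REF)
```

Applying the same gain to every clip of a session keeps the levels of different recordings comparable, which matters for the SNR labelling that follows.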

Specifically, after an initial listening review, the expert was asked to distinguish between three major categories: road traffic noise (RTN, assigned to all audio regions containing road traffic), background noise (BCK, reserved for those recordings where it was difficult to identify the noise coming from vehicles since they contain the background noise of the city) and ANEs. Anomalous noise events were subsequently labelled into 19 subcategories, taking into account the diversity of the acoustic phenomena gathered during the real-life environmental recording campaign. These subcategories were defined to enrich the description and subsequent analyses of the collected acoustic occurrences for both acoustic environments (urban and suburban). The labels were inspired by the taxonomy defined in [34] (specifically devoted to urban acoustic environments), although it was not fully adopted since specific noises were identified beyond the ones proposed in that taxonomy (e.g., the noise derived from the portals’ structure vibration in the suburban recordings). Specifically, the following labels were agreed within the DYNAMAP consortium to annotate the ANEs collected in both urban and suburban scenarios:

airp: airplanes.

bike: noise of bikes.

bird: birdsong.

brak: noise of brakes or cars’ timing belts.

busd: opening bus or tramway, door noise, or noise of pressurized air.

chains: noise of chains (e.g., bicycle chains).

dog: barking of dogs.

door: noise of house or vehicle doors, or other object blows.

horn: noise of vehicle horns.

mega: announcements made over a public address system.

musi: music in car or in the street.

peop: people talking.

sire: sirens of ambulances, police, fire trucks, etc.

stru: noise of portals structure derived from its vibration, typically caused by the passing-by of very large trucks.

thun: thunderstorm.

tram: stop, start and pass-by of tramways.

tran: stop, start and pass-by of trains.

trck: noise when trucks or vehicles with heavy load passed over a bump.

wind: noise of wind, or movement of the leaves of trees.
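For downstream processing, the annotation scheme above can be held in a simple lookup table. The sketch below is illustrative only: the label codes are those listed above, the short descriptions are paraphrased, and the names `ANE_LABELS` and `major_category` are hypothetical rather than part of the DYNAMAP tooling.

```python
# The 19 ANE subcategory codes listed above, with shortened descriptions.
ANE_LABELS = {
    "airp": "airplanes",
    "bike": "bikes",
    "bird": "birdsong",
    "brak": "brakes or belts",
    "busd": "bus or tramway doors, pressurized air",
    "chains": "chains",
    "dog": "dogs barking",
    "door": "house or vehicle doors, object blows",
    "horn": "vehicle horns",
    "mega": "public address announcements",
    "musi": "music",
    "peop": "people talking",
    "sire": "sirens",
    "stru": "portal structure vibration",
    "thun": "thunderstorm",
    "tram": "tramways",
    "tran": "trains",
    "trck": "trucks passing over a bump",
    "wind": "wind or leaves",
}


def major_category(label: str) -> str:
    """Map an annotation label to one of the three major categories."""
    if label in ANE_LABELS:
        return "ANE"
    if label.upper() in ("RTN", "BCK"):
        return label.upper()
    raise ValueError(f"unknown label: {label!r}")
```

Collapsing the subcategories in this way mirrors the three-category view (RTN, BCK, ANE) used by the annotator before the finer labelling.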

Subsequently, the labelled audio clips were exported as independent ‘.wav’ audio files using a sampling rate of 48 kHz and 16 bits/sample. Each filename contained the following parts: type of sensor, type of event, order of appearance of this type of event in the same audio project, direction of measurement in relation to the traffic direction, elevation angle of the measurements, type of road and traffic density. The ANE audio chunks were then also tagged in terms of SNR in dB, that is, the level of the ANE relative to the surrounding BCK or RTN noise. After a preliminary attempt to perform this calculation manually, we opted to develop a semiautomatic SNR labelling approach, which is explained in Section 3.3. Table 1 shows the final inventory of audio files, indicating their durations for each of the two considered recording environments.

As Table 1 shows, the anomalous noise events recorded in the suburban scenario in Rome account for about 3.2% of the database, while this percentage increases to 12.2% in the urban environment in Milan. This result can be understood as an initial indication of the differences between both environments in terms of ANE distributions, which is discussed in more detail in Section 4. A total duration of 4 h and 44 min was obtained in the suburban scenario of Rome, while 4 h and 24 min of acoustic data were collected in the urban environment of Milan.

3.3. Automatic Contextual SNR Labelling of Anomalous Noise Events

One important issue regarding the design of machine hearing systems able to automatically detect specific sound events in real-life environments is the consideration of the sound events salience in relation to background noise. This information, which complements the audio event label, is key to determining which ANEs should be entirely removed from the subsequent computation of road traffic noise levels for the problem at hand. In this section, we describe the automatic process developed to measure the ANE salience computed following a signal-to-noise ratio scheme.

Specifically, the contextual SNR of each ANE is estimated by computing an A-weighted equivalent noise level (

) using the free Matlab “Continuous Sound and Vibration Analysis” toolbox developed by Edward L. Zechman (

https://es.mathworks.com/matlabcentral/fileexchange/21384-continuous-sound-and-vibration-analysis), considering a 30 ms integration time to be in concordance with the feature extraction process [

11]. The contextual SNR is computed from the difference between

, 30 ms (the median

level) within the ANE region and the

, 30 ms level of the surrounding background or road traffic noise region. For the latter, two

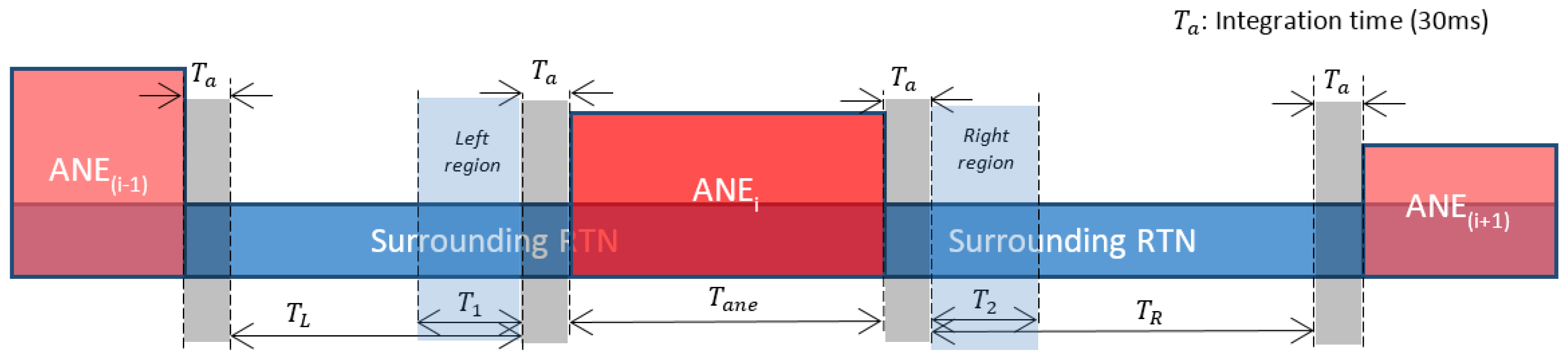

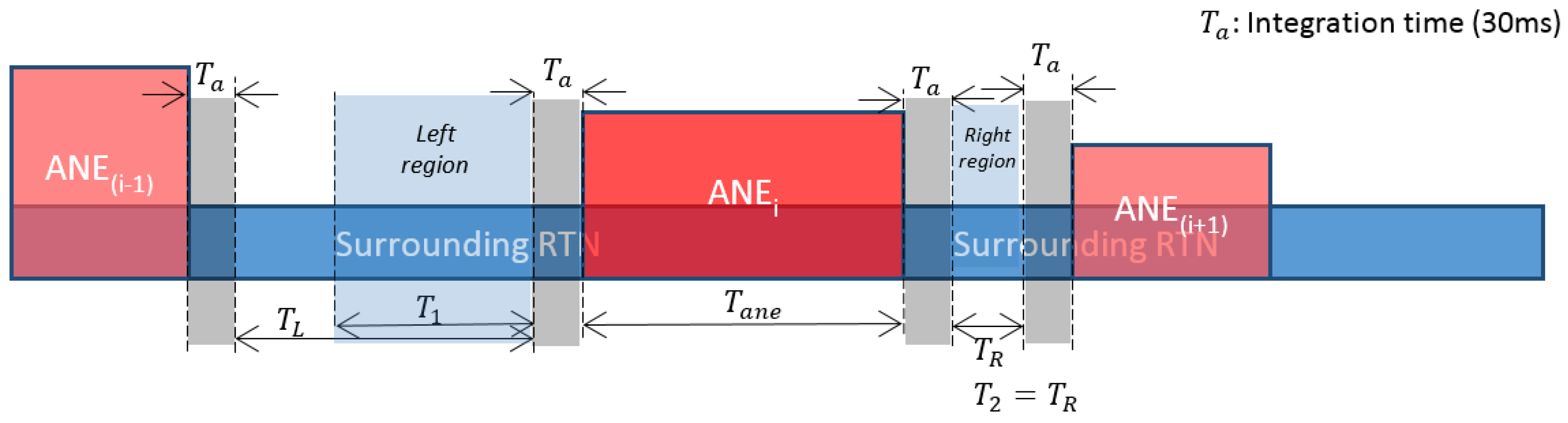

measurements are made: one before the start point of the anomalous event (referred to as left measurement), and another after the end point of the event (called right measurement). When possible, the sum of the lengths of the intervals where these two measurements are made should equal the duration of the anomalous noise event in order to have equivalent statistical data.
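The contextual SNR described above can be sketched in a few lines. This is a simplified illustration, not the Matlab toolbox used in this work: the input signal is assumed to be already A-weighted and calibrated, the median of the surrounding noise is taken over the left and right frames pooled together, one integration frame is skipped on each side of the event as a transient margin, and the function names are hypothetical.

```python
import numpy as np


def frame_levels_db(x: np.ndarray, fs: int, frame_s: float = 0.030) -> np.ndarray:
    """Equivalent level in dB of consecutive non-overlapping frames of length frame_s."""
    n = int(round(frame_s * fs))
    n_frames = len(x) // n
    frames = np.asarray(x[: n_frames * n], dtype=np.float64).reshape(n_frames, n)
    power = np.mean(frames ** 2, axis=1)
    return 10.0 * np.log10(np.maximum(power, 1e-20))  # floor avoids log(0)


def contextual_snr_db(x, fs, ane_start_s, ane_end_s, left_s, right_s, frame_s=0.030):
    """Median ANE level minus median level of the surrounding noise, in dB."""

    def segment(t0, t1):
        return x[max(0, int(round(t0 * fs))): max(0, int(round(t1 * fs)))]

    ane = frame_levels_db(segment(ane_start_s, ane_end_s), fs, frame_s)
    # Assumption: one frame of margin on each side keeps onset/offset transients out.
    left = frame_levels_db(
        segment(ane_start_s - frame_s - left_s, ane_start_s - frame_s), fs, frame_s)
    right = frame_levels_db(
        segment(ane_end_s + frame_s, ane_end_s + frame_s + right_s), fs, frame_s)
    noise = np.concatenate([left, right])
    return float(np.median(ane) - np.median(noise))
```

Because the result is a difference of levels, the absolute dB reference cancels out; only a consistent calibration across the ANE and noise regions is needed.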

For illustration purposes, let us define $T_L$ as the duration of the closest BCK or RTN region before the beginning of the ANE. Analogously, $T_R$ is defined as the duration of the closest BCK or RTN region after the end of the ANE. Let us also define $T_{ML}$ and $T_{MR}$ as the durations of the two BCK or RTN regions considered to compute the corresponding median $L_{Aeq}$ ($T_{ML}$ for the background or traffic noise before the ANE start and $T_{MR}$ for the background or traffic noise after its end). From the previous definitions, it can be derived that $T_{ML} \le T_L$ and $T_{MR} \le T_R$. The general aim of the proposed approach is to obtain two BCK or RTN computation regions that are representative enough, e.g., ensuring that $T_{ML} + T_{MR}$ equals the anomalous noise event total duration ($T_{ANE}$). When this condition cannot be met, only the available samples of background and/or road traffic noise are used.

Two case studies have been taken into account to implement the ANE contextual SNR computation:

An anomalous noise event is surrounded by road traffic or background noise: This represents the majority of cases in both urban and suburban real-life environmental databases. Within this case study, four possibilities exist:

- if $T_L \ge T_{ANE}/2$ and $T_R \ge T_{ANE}/2$, then $T_{ML} = T_{MR} = T_{ANE}/2$ (half of the equivalent ANE duration samples can be found on both sides of the event for background or road traffic noise);

- if $T_L < T_{ANE}/2$ and $T_R \ge T_{ANE}/2$, then $T_{ML} = T_L$ and $T_{MR} = \min(T_R, T_{ANE} - T_L)$ (fewer samples of background or road traffic noise are available before the anomalous event start point than after its end);

- if $T_L \ge T_{ANE}/2$ and $T_R < T_{ANE}/2$, then $T_{ML} = \min(T_L, T_{ANE} - T_R)$ and $T_{MR} = T_R$ (fewer samples of background or road traffic noise are available after the anomalous noise event end point than before its start);

- if $T_L < T_{ANE}/2$ and $T_R < T_{ANE}/2$, then $T_{ML} = T_L$ and $T_{MR} = T_R$ (there are fewer samples of background or road traffic noise than half of the anomalous noise event duration on both sides).

The automatic process searches for the closest time regions to the current anomalous noise event, trying to obtain as many samples of background or road traffic noise as the number of samples contained in the anomalous noise event (i.e., its total duration, $T_{ANE}$). Moreover, a time margin equal to the integration time used for the $L_{Aeq}$ computation is excluded from the ANE surrounding regions to avoid including transients caused by the start and end of the ANE, thus obtaining more accurate estimations (see the margins depicted in Figure 4, Figure 5 and Figure 6). Figure 4 shows a theoretical example of a balanced set of regions.

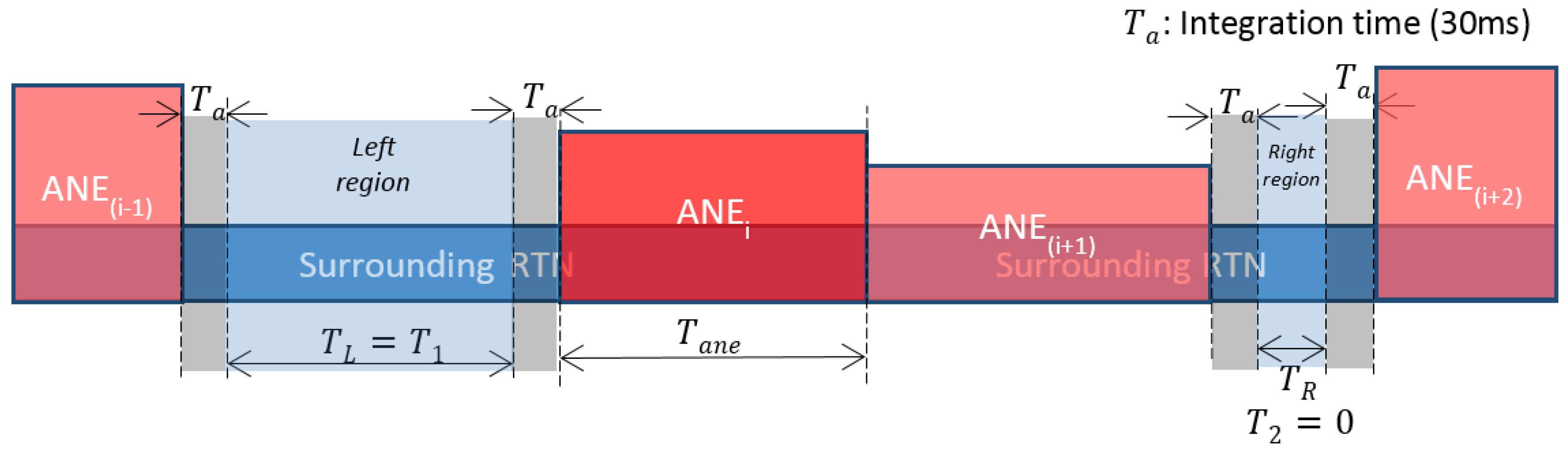

Contrary to the previous example, Figure 5 shows an example where the right region does not contain enough samples to obtain a balanced set of time regions of surrounding RTN. As a consequence, more samples from the left region are considered here for the SNR computation.
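The four possibilities of this first case study (balanced halves when both sides are long enough, otherwise compensating with the longer side, otherwise using whatever is available) can be sketched as a small allocation function. This is an interpretation of the rules as described in words above, not the authors' code: the function and argument names are hypothetical, and capping the compensated side at the available duration is an assumption.

```python
def allocate_regions(t_ane: float, t_left: float, t_right: float) -> tuple[float, float]:
    """Return the (left, right) durations of surrounding noise used for the median level.

    t_ane   : duration of the anomalous noise event
    t_left  : available BCK/RTN duration just before the event
    t_right : available BCK/RTN duration just after the event
    """
    half = t_ane / 2.0
    if t_left >= half and t_right >= half:
        return half, half  # balanced set of regions
    if t_left < half <= t_right:
        # Short left side: take all of it and compensate on the right (assumed cap).
        return t_left, min(t_right, t_ane - t_left)
    if t_right < half <= t_left:
        # Short right side: take all of it and compensate on the left (assumed cap).
        return min(t_left, t_ane - t_right), t_right
    return t_left, t_right  # both sides short: use what is available
```

For example, a 4 s event with 5 s of noise before it and only 0.5 s after it would use 3.5 s on the left and 0.5 s on the right, which matches the unbalanced situation described for Figure 5.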

Other anomalous noise events occur just before and/or after the analyzed anomalous noise event: In this less frequent scenario, the selection of the time regions where the BCK or RTN level is computed is somewhat more intricate. The calculation process looks for the closest time regions to the current ANE, following the general idea of measuring the contextual SNR with a proximity criterion, and tries to obtain as many samples of background or road traffic noise as the samples contained in the anomalous noise event duration ($T_{ANE}$). In this case, the closest BCK or RTN region to the analyzed ANE is considered first. If the duration $T$ of this region is greater than $T_{ANE}$ and all its samples are closer to the analyzed ANE than any sample from the opposite side, then only the interval of duration $T_{ANE}$ closest to the analyzed ANE is considered. Otherwise, when samples of BCK and/or RTN can be obtained from both sides of the analyzed ANE, and neither of these two time regions is strictly closer to the ANE than the other due to the presence of other ANEs, samples from both sides of the ANE are used to compute the BCK and/or RTN median $L_{Aeq}$.

Figure 6 shows an example of this kind of contextual SNR computation where all the samples come from the left region. This is because the RTN samples in the right region are further away than the farthest sample of the RTN left region (i.e., no samples are taken from the right region).
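The proximity criterion of this second case study can be approximated at frame level by picking the noise frames closest to the event, whichever side they are on. The sketch below is a simplification of the procedure described above rather than a faithful reimplementation: the name `closest_noise_frames` is hypothetical, frames are assumed to be pre-labelled as noise (BCK/RTN) or not, and any transient margin is assumed to have been marked as non-noise beforehand.

```python
import numpy as np


def closest_noise_frames(is_noise, ane_start: int, ane_end: int) -> np.ndarray:
    """Indices of the noise frames nearest to the event spanning [ane_start, ane_end).

    At most as many frames as the event itself contains are returned.
    """
    is_noise = np.asarray(is_noise, dtype=bool)
    n_ane = ane_end - ane_start
    idx = np.flatnonzero(is_noise)
    idx = idx[(idx < ane_start) | (idx >= ane_end)]  # keep frames outside the event
    # Distance in frames to the nearest event boundary.
    dist = np.where(idx < ane_start, ane_start - idx, idx - ane_end + 1)
    order = np.argsort(dist, kind="stable")
    return np.sort(idx[order[:n_ane]])
```

When another event occupies the frames right after the analyzed one, every frame on the far side is more distant than the frames on the near side, so the selection falls entirely on one side, as in the Figure 6 example.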

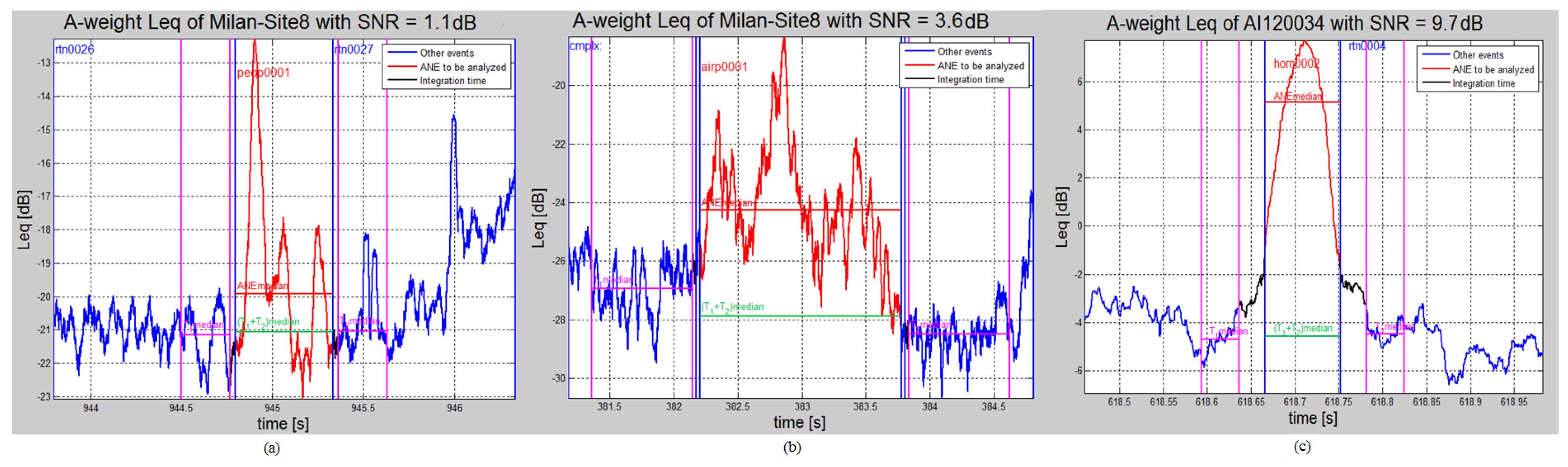

In

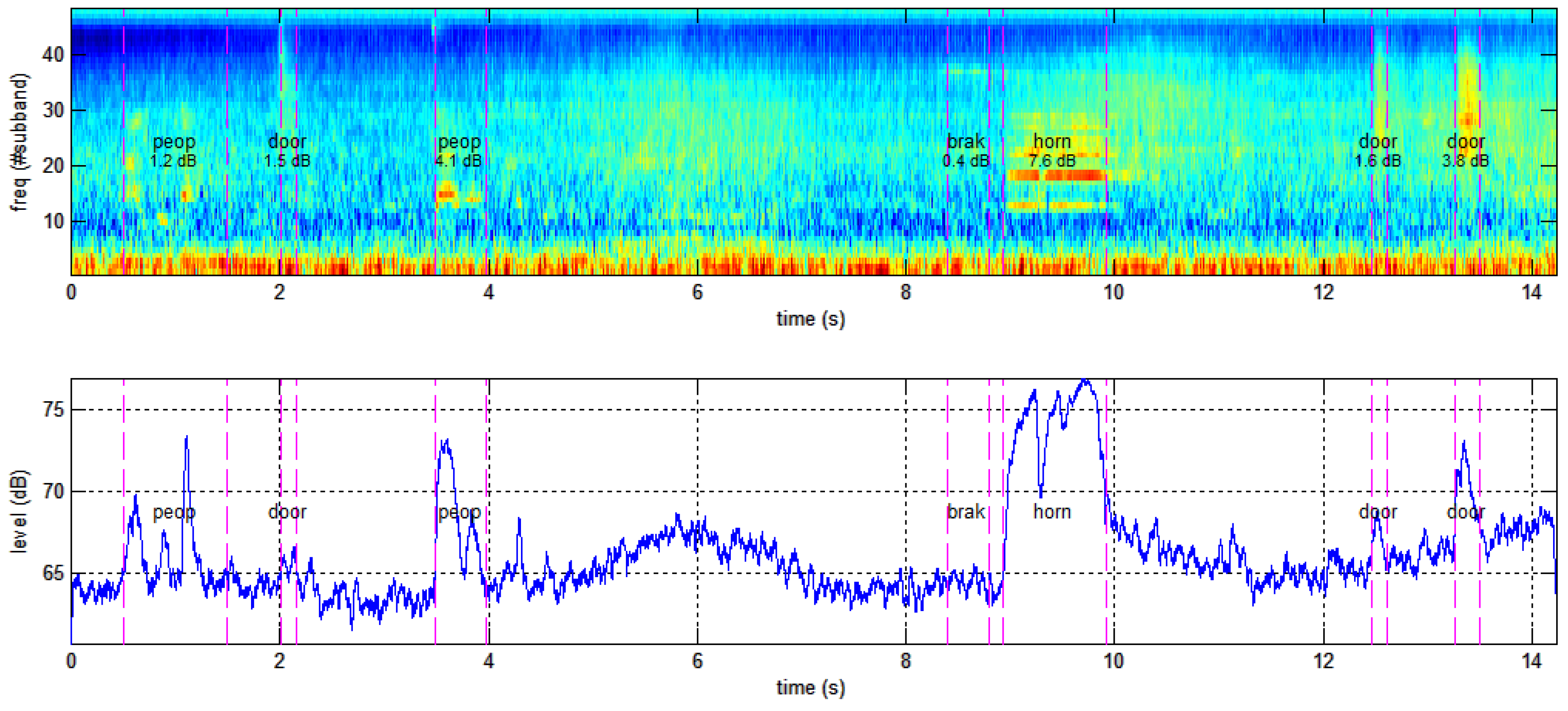

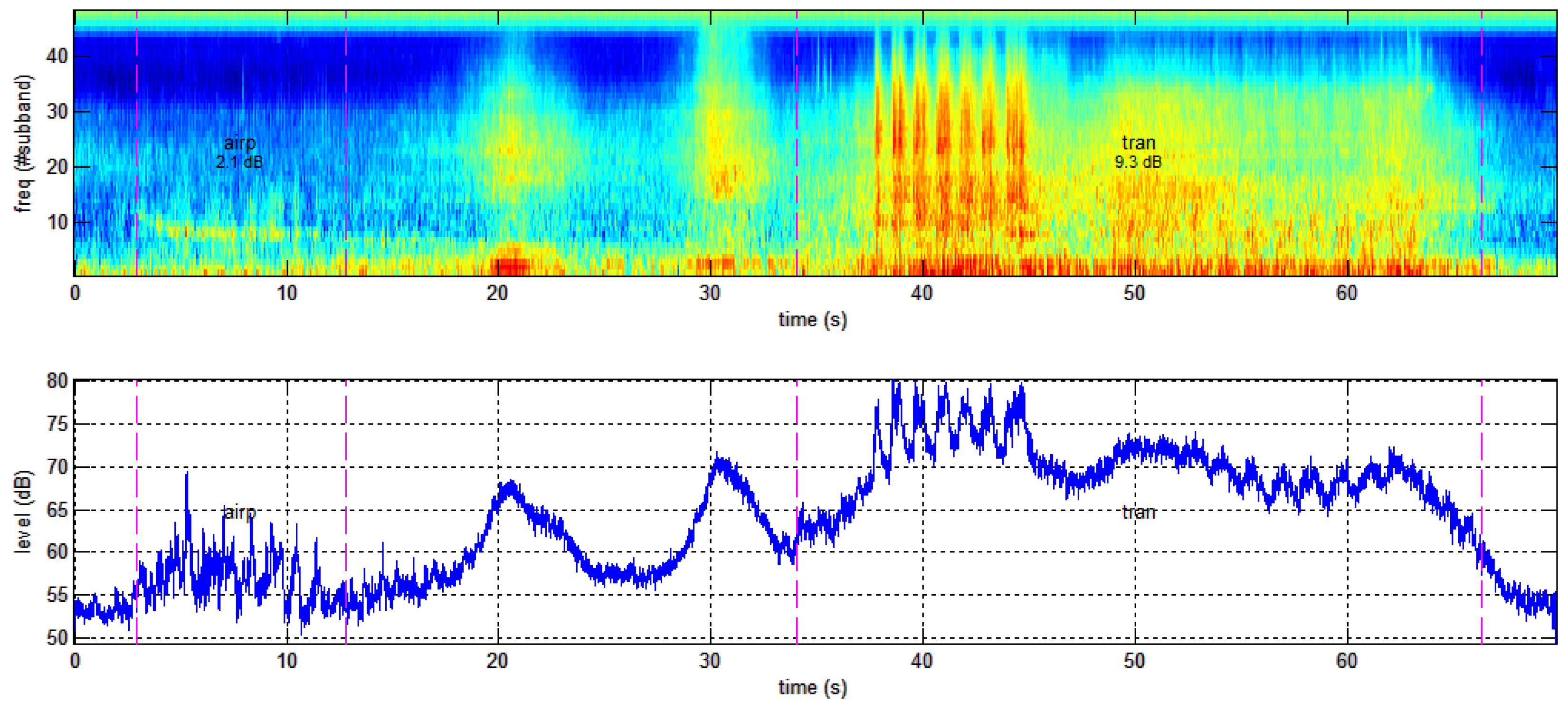

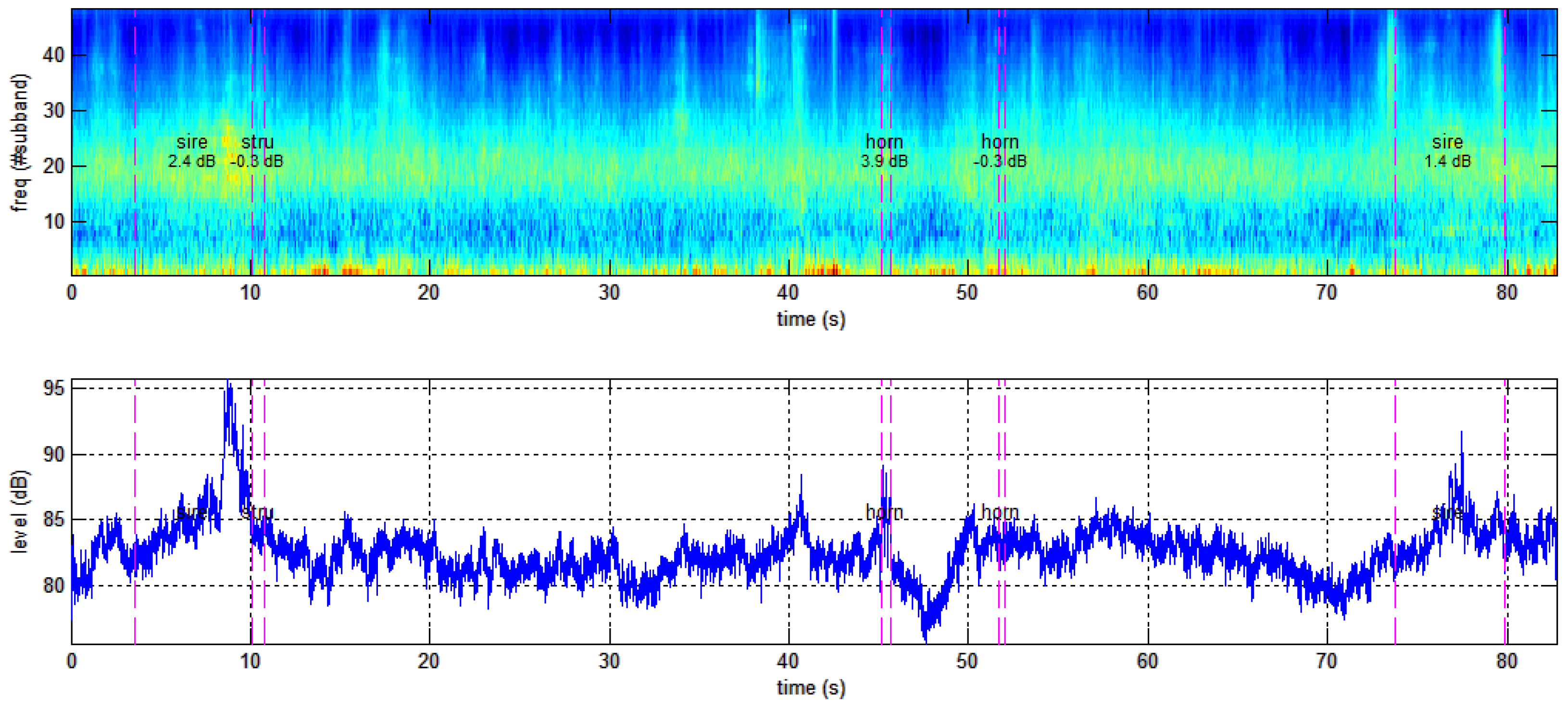

Figure 7, three examples of the most usual ANE contextual SNR computation cases (i.e., an anomalous noise event surrounded by road traffic or background noise) are shown. The A-weighted

curve is highlighted, representing the time region attributed to an anomalous noise event in red, and the background or road traffic noise in blue. A time period equal to the integration time for the

computation is depicted in black at both sides of the anomalous event, in order to prevent transients affecting the SNR computation. The median

for each time region are shown as magenta horizontal lines (for background and RTN at both sides) and horizontal red lines (for the ANE). Finally, the median

of the surrounding background or road traffic noise considering both ANE sides is depicted as a green horizontal line within the ANE time region.

5. Discussion

In this section, several relevant aspects are discussed after the generation, annotation and analysis of the recorded database in real-life urban and suburban scenarios, paying special attention to what has been already considered in previous works. Moreover, some general implications derived from the obtained results are also presented to help the development of ANE databases for automatic detection systems in real-life urban and suburban environments.

5.1. Sensor Locations during the Recording Campaign

During the recording campaign, the sensors were placed on the portals of the highway in the suburban area, which is the exact position where they will be installed for the WASN thanks to the collaboration of the DYNAMAP partner ANAS S.p.A. However, in the urban scenario they were located more than 2 m from the buildings, which can differ from the positions where the WASN low-cost sensors will be placed when deploying the pilot system of the DYNAMAP project in Milan (e.g., fixed to the building façades). Moreover, different elevation angles were also considered in the sensors’ orientation during the recording campaign. At this point, an interesting question arises: to what extent do the position and orientation of the sensors influence aspects such as the acoustic salience of sound events and the spectral content of sound or road traffic noise? More work needs to be done to answer this question, e.g., by performing contrast analyses with the measurements gathered with different sensor positions and orientations, beyond the preliminary comparisons already conducted.

5.2. Database Annotation

The labelling of the real-life audio database has undergone both manual and automatic processes. The manual labelling of the time boundaries of the audio scenes and events has guaranteed reliable subsequent analyses. However, since it is a burdensome and time-consuming task, it may hinder the generation of new databases from field recordings. To alleviate this task, we can consider approaches focused on the identification of salient audio events regardless of their origin (e.g., [24,25,26]) or semi-supervised techniques that combine confidence-based active learning and self-training [40], allowing the combination of automatic labelling (when high confidence scores are obtained) and human annotation (when low scores are obtained). However, whichever approach is followed, coping with non-stationary background noise is envisioned as a very challenging task.

On the other hand, the acoustic salience of the anomalous noise events with respect to background noise has been automatically quantified in terms of SNR with higher resolution than in [34], where a binary salience descriptor was considered to differentiate between foreground and background noises. The computation of the ANE contextual SNR with respect to RTN or BCK noise levels poses several challenges. The RTN $L_{Aeq}$ within the ANE time interval is estimated from the surrounding samples, which entails a simplification due to the non-stationary nature of road traffic noise. In addition, during the ANE time interval, the measured $L_{Aeq}$ is also influenced by background noise (this is especially relevant for those ANEs with low SNR). Moreover, real-life noise sources can exhibit relevant level variations over time. For instance, an ambulance passing by with its siren on generates a long sound with a high noise level when closest to the point of measurement, and lower levels during the approaching and receding phases. Ideally, instead of only accounting for the mean SNR value, it would be desirable to obtain SNR labels at different time instants, considering the sound level trajectory of each sound source. This approach could be understood as a time-varying soft-salience labelling. The ANE salience analysis has provided some relevant insights into what can be found in real-life urban and suburban environments. Any machine hearing system running in real-life conditions should be designed to tackle the diversity of ANEs in terms of salience, i.e., to be robust to ANE salience variability.
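The time-varying soft-salience idea can be illustrated with a minimal sketch in which every 30 ms frame of the event receives its own SNR value instead of a single label per event. This is only one possible reading of the proposal, which is not developed in this work: the function name `snr_trajectory_db` is hypothetical, and the noise reference is assumed to be the median level of the surrounding BCK or RTN frames.

```python
import numpy as np


def snr_trajectory_db(ane_levels_db, noise_levels_db) -> np.ndarray:
    """Per-frame SNR of an event relative to the median surrounding noise level."""
    noise_ref = float(np.median(noise_levels_db))
    return np.asarray(ane_levels_db, dtype=np.float64) - noise_ref


# Hypothetical siren pass-by: the level rises while approaching and falls when receding.
siren = [60.0, 70.0, 80.0, 70.0, 60.0]
surrounding = [58.0, 60.0, 62.0]
trajectory = snr_trajectory_db(siren, surrounding)
```

A single-value label then corresponds to a summary statistic of this trajectory (for instance, its median), whereas the full trajectory retains the approaching and receding phases.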

Finally, it is worth mentioning that both the definition and the categories of anomalous noise events can be a matter of discussion. In this work, they have been agreed with the DYNAMAP project consortium, considering ANE to be those audio events that do not reflect regular road traffic noise [1], i.e., noise coming from vehicles’ engines or derived from the normal contact of their tires with the road surface. Nevertheless, both the definition and the list of ANE might be subject to modification in future works.

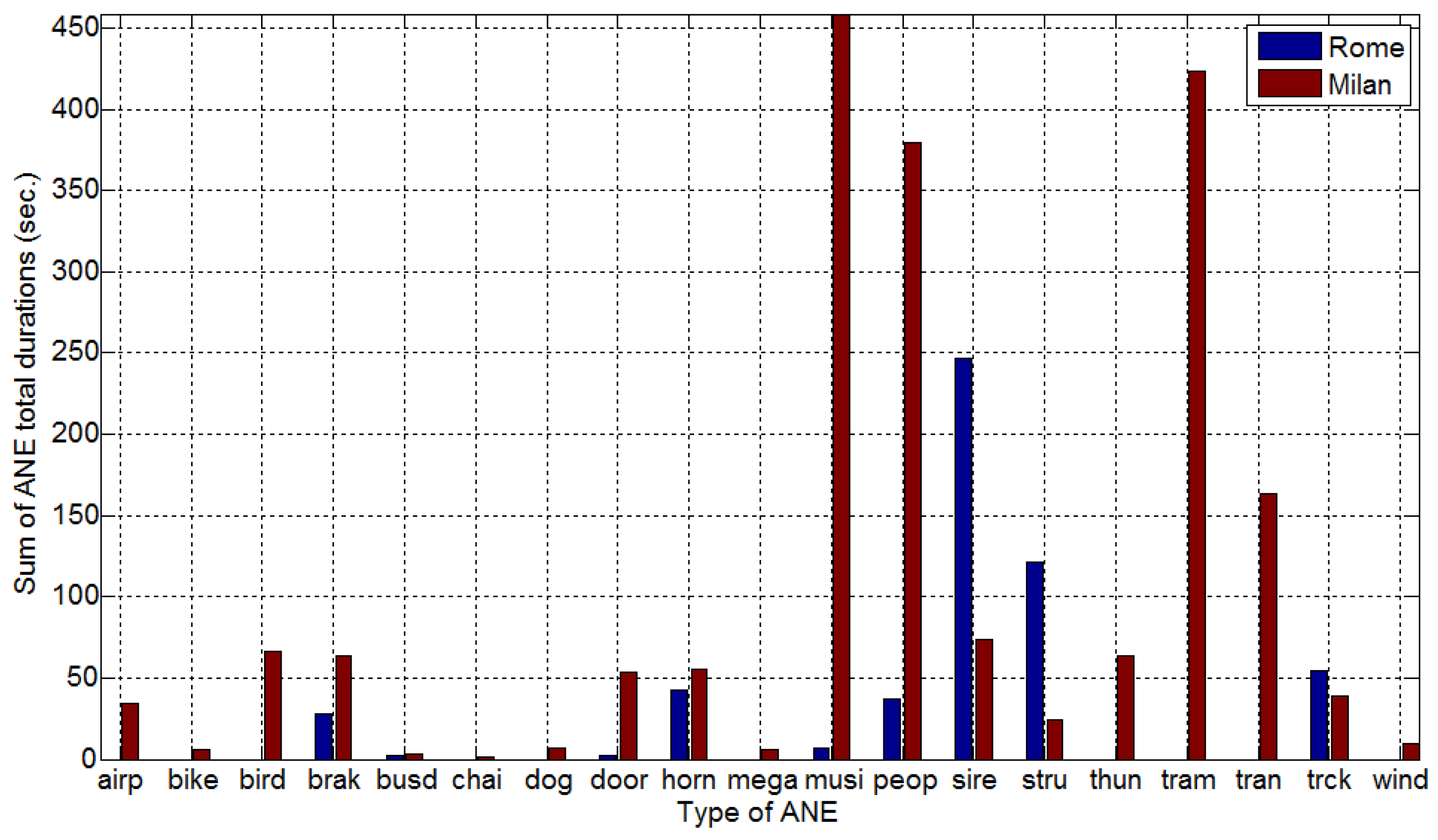

5.3. Implications of the Results

In general terms, the conducted statistical analyses confirm that the two chosen scenarios (urban and suburban) exhibit different patterns in terms of both background noise and anomalous noise events, e.g., showing significant differences in terms of types of ANE and SNRs between them, a result that could be expected a priori. However, beyond specific aspects related to the different characteristics of these soundscapes (e.g., the impulse-like vs. stationary nature of noise events), it is reasonable to remark that both soundscapes entail a large variability and diversity of ANE in terms of occurrences, durations and SNRs. Regarding background noise, the suburban recordings contain mainly continuous road traffic noise, while the urban recordings also include other kinds of road noise like those gathered from interrupted traffic (e.g., crossroad with traffic lights) or the background city noise observed in low-density traffic city roads. Concerning the ANEs, the urban scenario entails a larger diversity of anomalous noise events than the suburban scenario, while the latter presents ANEs with lower contextual SNRs.

Another interesting aspect derived from the analyses is that real-life recordings reflect a highly unbalanced nature between anomalous noise events (uncommon sounds) and road traffic or background city noise (common sounds). In the 9 h 8 min of labelled audio database, the ANE subset represents 3.2% of the total recorded audio in the suburban scenario, while in the urban soundscape it comprises 12.2%. This fact can be of paramount importance when it comes to developing sound event detectors or classifiers, as the training and testing processes can be highly influenced by the ratio between the number of examples of the classes to be identified [28,41]. Furthermore, the limited presence of ANEs in real-life recordings also makes it very difficult to model such diversity of sound events within individual classes (i.e., one class per event typology). This issue can be alleviated by integrating all types of ANE within the same class, as will be done in the DYNAMAP project to discard them from the road traffic noise computation.
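To give a sense of how strong this imbalance is for a two-class detector (ANE versus the rest), the sketch below computes inverse-frequency class weights from the reported shares. The weighting scheme is a common heuristic swapped in for illustration and is not claimed to be the approach adopted in DYNAMAP; the function name and the class name "other" are hypothetical.

```python
def inverse_frequency_weights(shares: dict[str, float]) -> dict[str, float]:
    """Weight each class by 1 / (number_of_classes * share_of_class)."""
    k = len(shares)
    return {name: 1.0 / (k * share) for name, share in shares.items()}


# Shares of labelled audio reported above; "other" pools RTN and BCK.
suburban = inverse_frequency_weights({"ANE": 0.032, "other": 0.968})
urban = inverse_frequency_weights({"ANE": 0.122, "other": 0.878})
```

Under this heuristic an ANE example in the suburban data would count roughly thirty times more than a non-ANE example, against roughly seven times in the urban data, which illustrates why the two soundscapes may call for different training strategies.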

Furthermore, we can also conclude that synthetic sound mixtures generated from either online databases or real isolated ANEs with predefined SNRs, as proposed in previous works, are still far from what can be observed in both real-life urban and suburban scenarios, which entail a dramatically larger variability. Specifically, ANE variability has been observed both in terms of the number of occurrences per ANE and the diversity of their durations, ranging from almost 0 s for impulse-like sounds such as dog barks or closing vehicle doors, to more than 30 s for music or sirens. Moreover, the mean SNR values in both real-life urban and suburban recordings also show a significantly larger range of values (from

to

dB) with respect to previous artificially generated audio databases (e.g.,

dB [

22] or

dB plus

dB [

21]), with relevant diversity of intermediate SNR values. In our opinion, this fact is of paramount importance since the acoustic salience of a sound event could strongly affect the performance of any automatic recognition system, as already observed in [28]. However, this hypothesis should be further studied in future work.
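For reference, the synthetic approach discussed above can be sketched as follows: an isolated event is rescaled so that its power ratio to a background segment matches a predefined SNR, and the two signals are then added. This is a minimal illustration under stated assumptions, not the procedure of the cited works: the function names are hypothetical, the signals are plain lists of samples of equal length, and the SNR is taken as the ratio of mean powers in dB, which may differ from the contextual SNR computation used for the labelled database.

```python
import math


def mean_power(samples):
    """Mean squared amplitude of a sequence of samples."""
    return sum(x * x for x in samples) / len(samples)


def snr_db(event, background):
    """SNR in dB, assumed here to be 10*log10(P_event / P_background)."""
    return 10.0 * math.log10(mean_power(event) / mean_power(background))


def mix_at_snr(event, background, target_snr_db):
    """Rescale `event` to reach `target_snr_db` against `background`, then add.

    Returns the mixture and the rescaled event.
    """
    if len(event) != len(background):
        raise ValueError("event and background must have the same length")
    p_event = mean_power(event)
    p_background = mean_power(background)
    if p_event == 0.0 or p_background == 0.0:
        raise ValueError("both signals must have non-zero power")
    # Gain such that gain^2 * P_event / P_background = 10^(SNR/10).
    gain = math.sqrt(p_background * 10.0 ** (target_snr_db / 10.0) / p_event)
    scaled_event = [gain * x for x in event]
    mixture = [e + b for e, b in zip(scaled_event, background)]
    return mixture, scaled_event


# Toy signals (hypothetical): a 1 kHz tone as the event and a 100 Hz tone
# standing in for background noise, both sampled at 16 kHz for 0.1 s.
sample_rate = 16000
n_samples = 1600
event = [math.sin(2 * math.pi * 1000 * n / sample_rate) for n in range(n_samples)]
background = [0.3 * math.sin(2 * math.pi * 100 * n / sample_rate) for n in range(n_samples)]

mixture, scaled_event = mix_at_snr(event, background, target_snr_db=6.0)
achieved_snr = snr_db(scaled_event, background)
```

Since every mixture generated this way takes one of a few predefined SNR values, such a database cannot reproduce the continuous spread of SNRs observed in the real-life recordings unless the target SNR is itself sampled from a realistic distribution.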

Finally, although the recording campaign was designed to collect a large diversity of anomalous noise events, it is worth mentioning that the obtained results should not be generalized to any urban or suburban scenario, as the recorded database comprises a large but limited set of labelled audio recordings of 9 h 8 min. At best, the general patterns obtained could be expected in very similar urban and suburban environments. Nevertheless, the main conclusions and considerations discussed in this paper can be taken into account in similar studies. For instance, from these results, it seems reasonable to avoid characterizing both acoustic environments jointly when it comes to developing the signal processing techniques run at the WASN acoustic sensors. On the contrary, characterizing each type of soundscape independently seems more appropriate. However, the latter approach makes the scalability of the system more difficult and costly. Therefore, further studies should be conducted in order to find a trade-off between a general machine hearing system and a specifically trained system for each WASN sensor location.

6. Conclusions

This work has presented the production and analysis of a real-life environmental audio database in two urban and suburban scenarios corresponding to the pilot areas of the DYNAMAP project: the Milan district 9 and the Rome A90 highway, respectively. The collected 9 h 8 min of audio data have been categorized as road traffic noise, background city noise, and anomalous noise events. Due to the relevance of the ANE for the generation of reliable traffic noise maps through WASN, that category has been subsequently annotated using 19 subcategories and automatically labelled in terms of contextual acoustic salience as signal-to-noise ratio.

The in-depth analysis of the distribution of the ANEs collected in the database has shown the high diversity of ANE in terms of occurrence, duration and salience, besides confirming their local and occasional nature. Although, as expected beforehand, specific differences have been found between the two soundscapes due to their specific acoustic nature, both environments show high ANE diversity, besides presenting a dramatically larger range and variation of SNR values than previous artificially generated ANE audio databases. The obtained results have also been comprehensively discussed to guide the development of automatic ANE classifiers using real-life environmental data in future work, by accounting for the implications of this kind of peculiar acoustic data.

Finally, we do not rule out extending the conducted analyses of the considered urban and suburban soundscapes in parallel with the deployment of the DYNAMAP WASN, in order to include a larger diversity of weather and traffic conditions, compare the obtained results with the final location of the low-cost sensors in the urban environment, etc., besides studying in more detail those audio clips tagged as ‘complex audio’. Moreover, we also plan to analyze the statistical differences between ANE and road traffic or background noise at the spectral level for the future development of anomalous noise event detection systems in both scenarios.