Tangible User Interface and Mu Rhythm Suppression: The Effect of User Interface on the Brain Activity in Its Operator and Observer

Abstract

1. Introduction

2. Materials and Methods

2.1. Experiment 1

2.1.1. Subjects

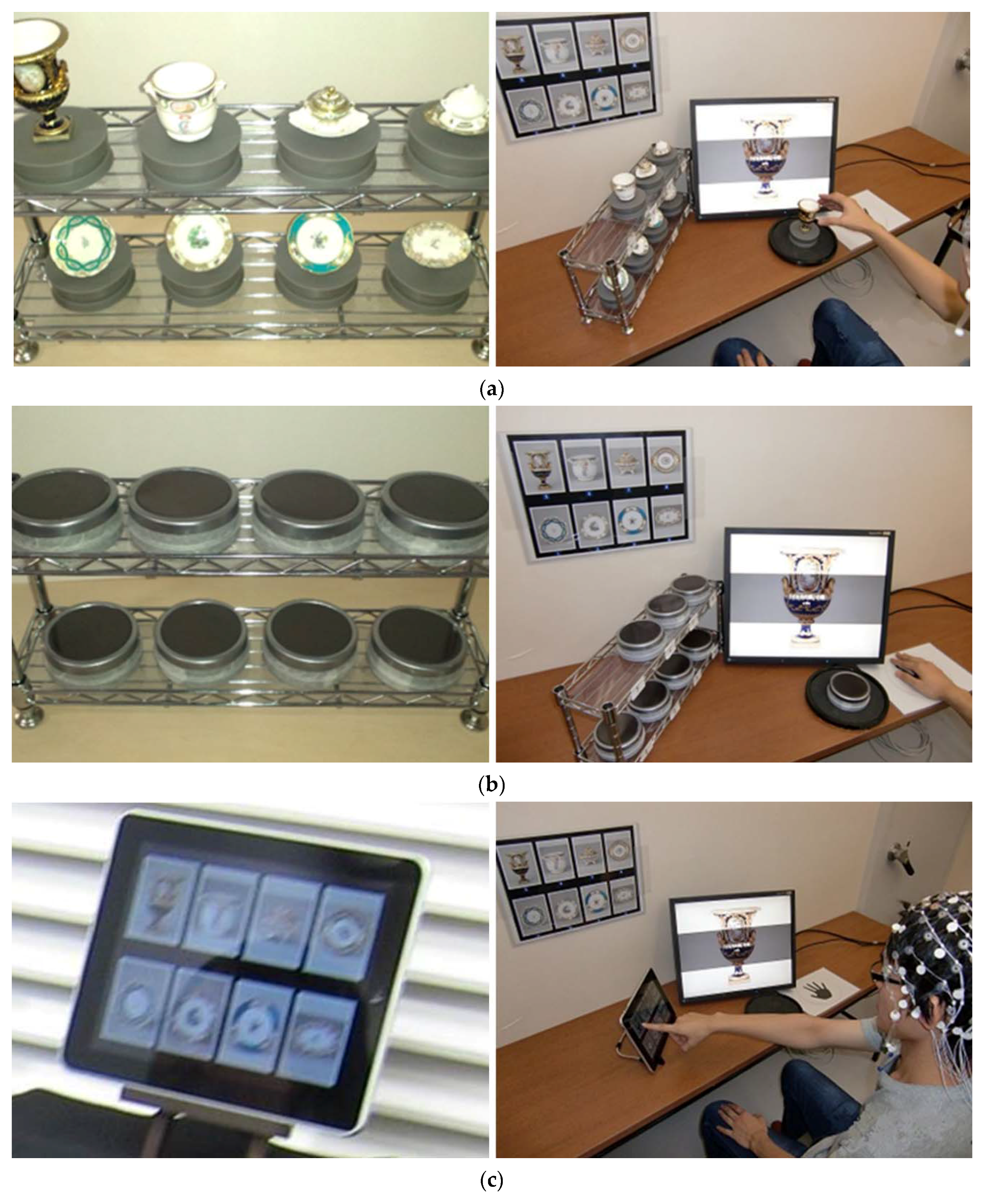

2.1.2. Experimental Conditions

2.1.3. Experimental Procedure

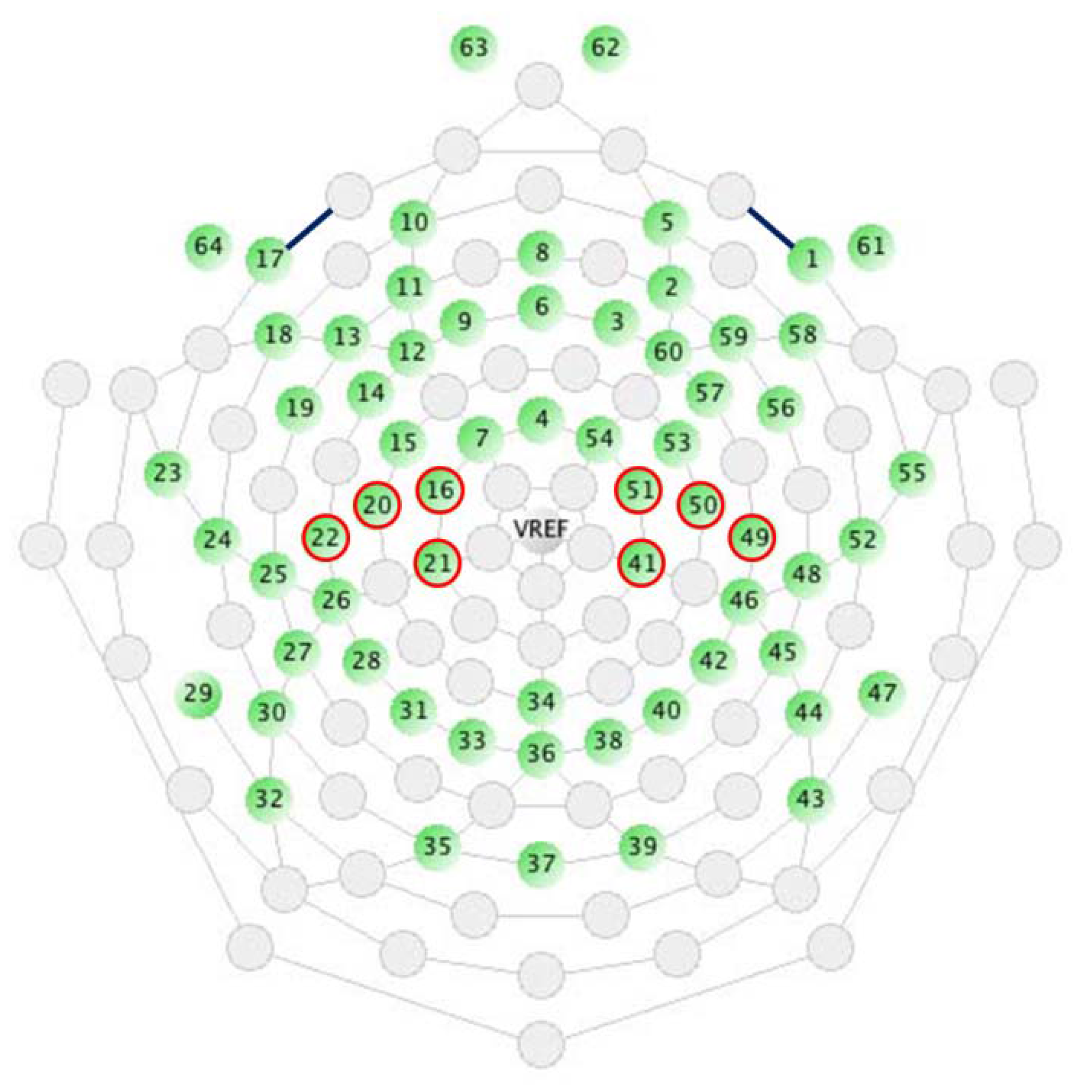

2.1.4. EEG Measurement and Analysis

2.2. Experiment 2

2.2.1. Subjects

2.2.2. Experimental Conditions

2.2.3. Experimental Procedure

2.2.4. EEG Measurement and Analysis

3. Results

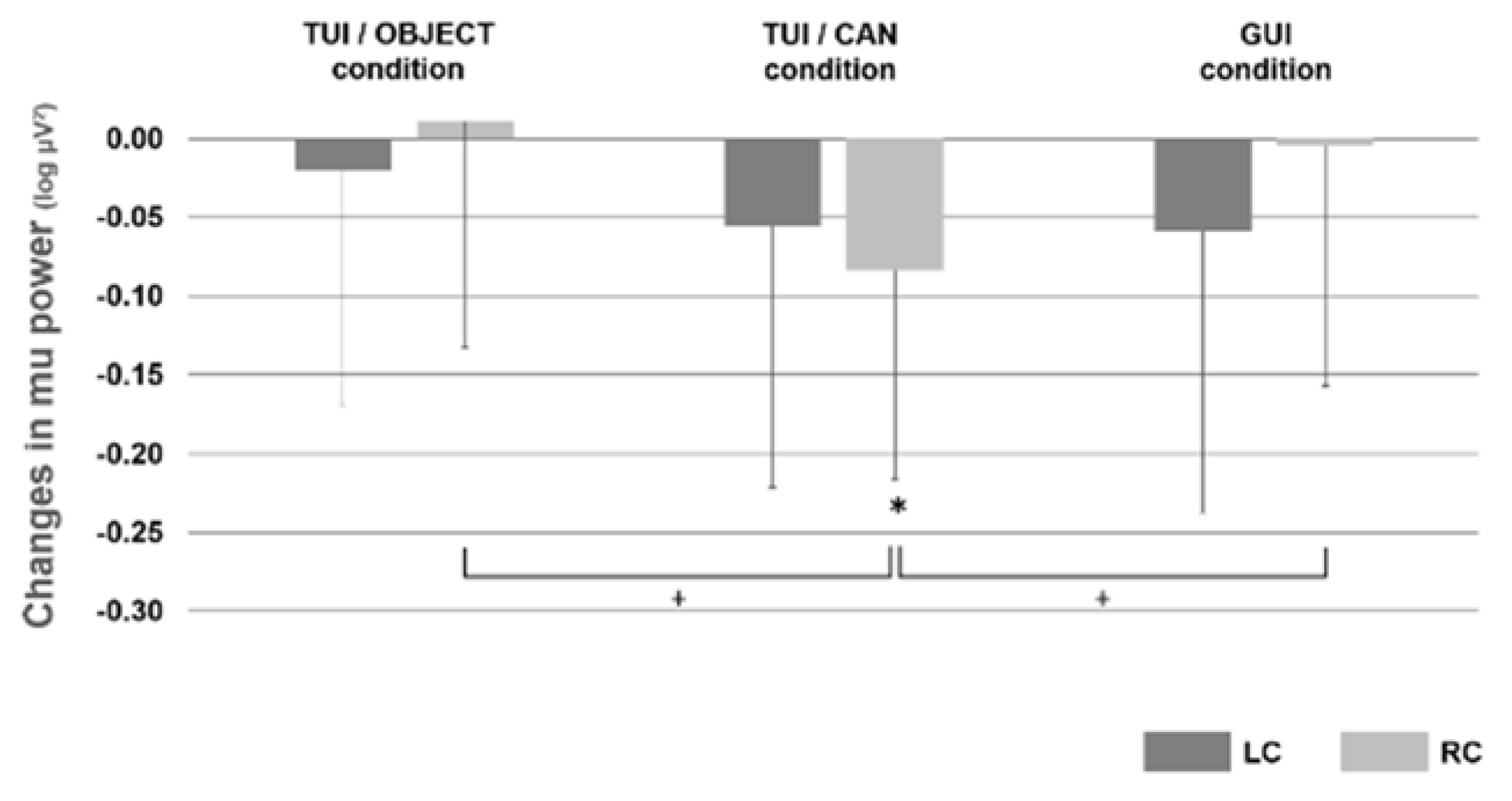

3.1. Experiment 1

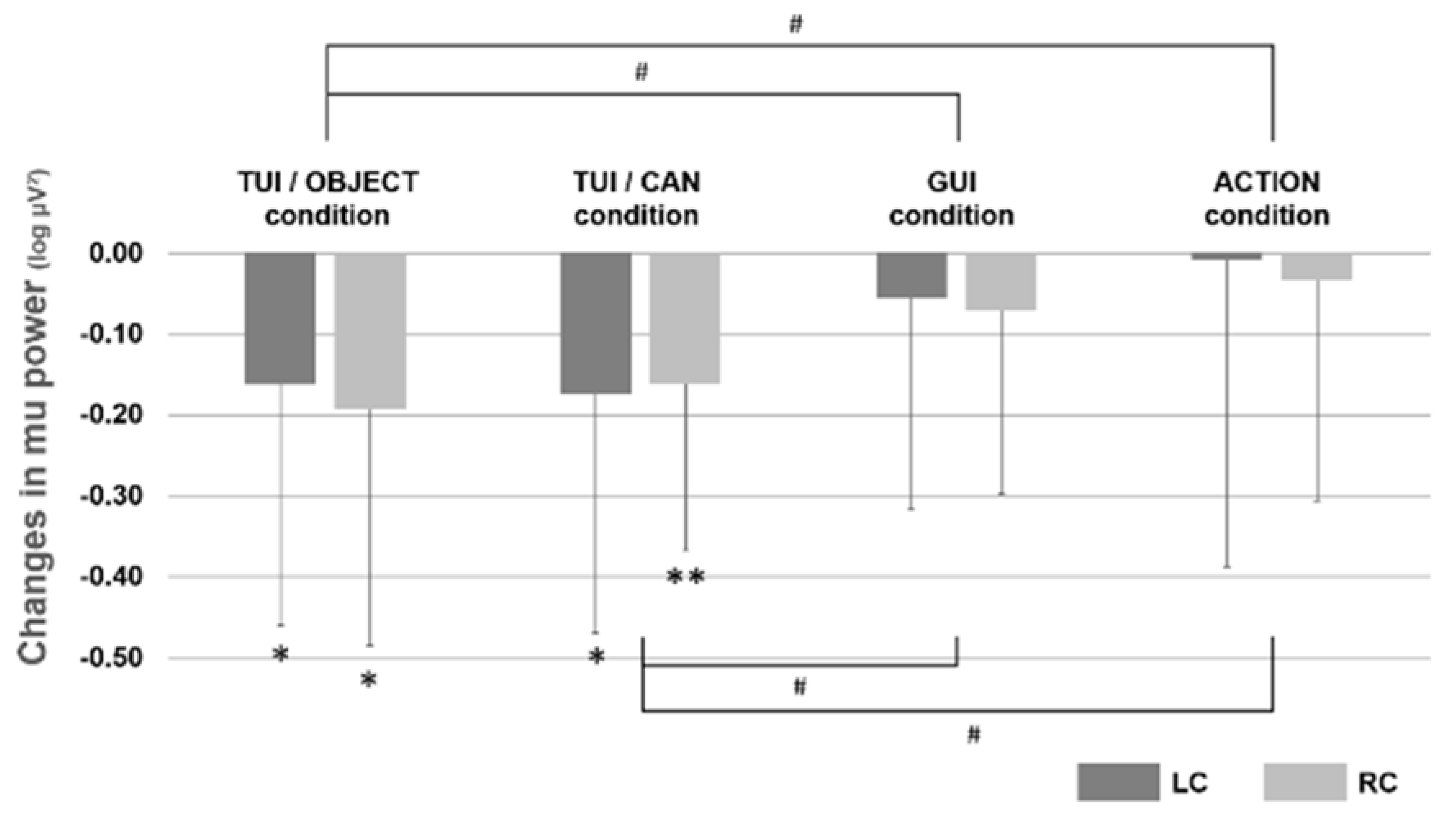

3.2. Experiment 2

4. Discussion

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Isoda, K.; Sueyoshi, K.; Miyamoto, R.; Nishimura, Y.; Ikeda, Y.; Hisanaga, I.; Orlic, S.; Kim, Y.-k.; Higuchi, S. Tangible User Interface and Mu Rhythm Suppression: The Effect of User Interface on the Brain Activity in Its Operator and Observer. Appl. Sci. 2017, 7, 347. https://doi.org/10.3390/app7040347

Isoda, Kazuo, Kana Sueyoshi, Ryo Miyamoto, Yuki Nishimura, Yuki Ikeda, Ichiro Hisanaga, Stéphanie Orlic, Yeon-kyu Kim, and Shigekazu Higuchi. 2017. "Tangible User Interface and Mu Rhythm Suppression: The Effect of User Interface on the Brain Activity in Its Operator and Observer." Applied Sciences 7, no. 4: 347. https://doi.org/10.3390/app7040347