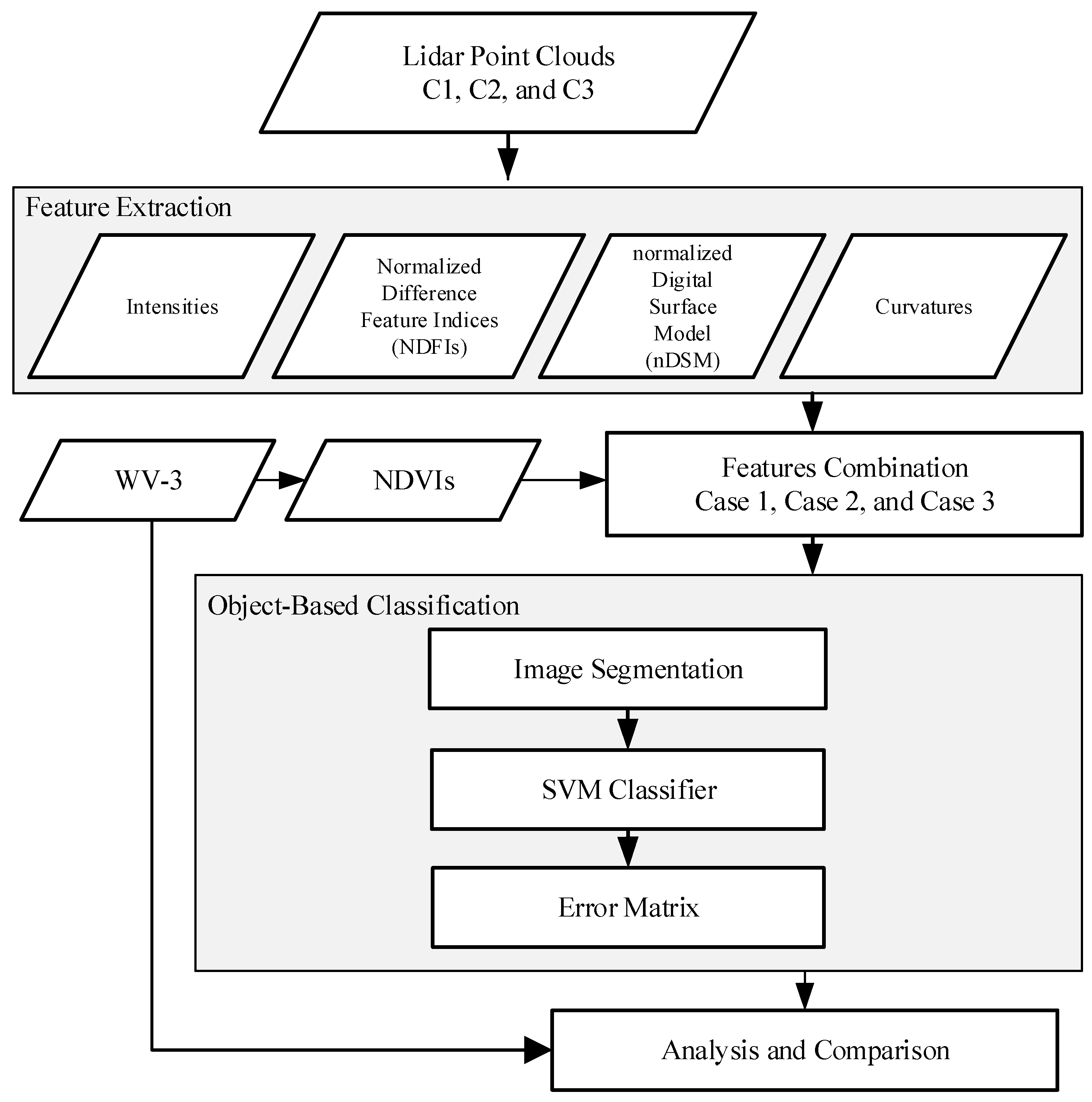

The experiments in this study examined three aspects of the validation procedure. The first compares single- and multi-wavelength LiDAR systems: each of the three channels (i.e., green, NIR, and MIR) was used individually to simulate a single-wavelength LiDAR, and the land cover classification accuracy of the single- and multi-wavelength results was compared. The second assesses the suitability of spectral and geometrical features for land cover classification by evaluating different features extracted from the multi-wavelength LiDAR system to understand their significance. The third compares the vegetation indices from passive multispectral images and active multi-wavelength LiDAR, in terms of both the vegetation classification results and the correlation between vegetation indices.

4.1. Comparison of Single-Wavelength and Multi-Wavelength LiDAR

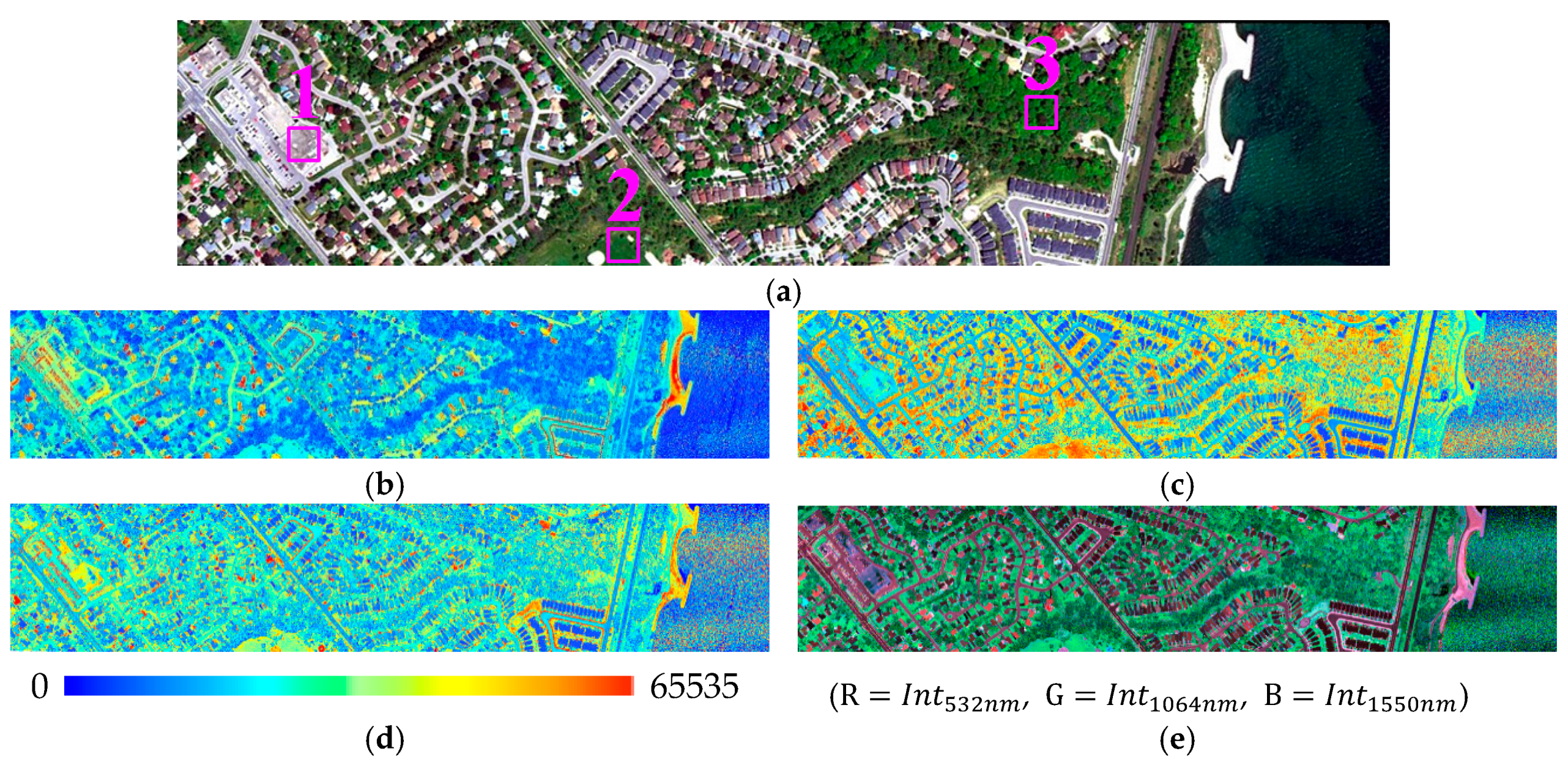

To compare the accuracy of land cover classification between single- and multi-wavelength LiDAR systems, we designed four feature combinations (Table 4) built from intensity, nDSM, and curvature. The first three combinations each used the single-wavelength LiDAR features from one channel. The last combination merged all features from the three channels, nine in total: three intensities, three nDSMs, and three curvatures. The segmentation of single-wavelength LiDAR considered only its own intensity, whereas the segmentation of multi-wavelength LiDAR considered the three intensities simultaneously. All classifications used the same training areas but different features.
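The four feature combinations can be illustrated with a minimal sketch. It assumes that each channel provides one intensity, nDSM, and curvature value per segmented object; the function name, dictionary layout, and random values are hypothetical placeholders and not the study's data or software.

```python
import numpy as np

CHANNELS = ("532", "1064", "1550")            # green, NIR, and MIR wavelengths in nm
FEATURES = ("intensity", "nDSM", "curvature")

def build_feature_matrix(features, channels):
    """Stack the selected channels' features column-wise: (n_objects, 3 * n_channels)."""
    columns = [features[ch][name] for ch in channels for name in FEATURES]
    return np.column_stack(columns)

# Hypothetical per-object feature values (random placeholders, not study data).
rng = np.random.default_rng(0)
n_objects = 5
features = {ch: {name: rng.random(n_objects) for name in FEATURES} for ch in CHANNELS}

cases = {
    "1-1": build_feature_matrix(features, ["532"]),    # single wavelength, green
    "1-2": build_feature_matrix(features, ["1064"]),   # single wavelength, NIR
    "1-3": build_feature_matrix(features, ["1550"]),   # single wavelength, MIR
    "1-4": build_feature_matrix(features, CHANNELS),   # all nine features
}
```

Each single-wavelength case yields three feature columns, while Case 1-4 yields nine.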

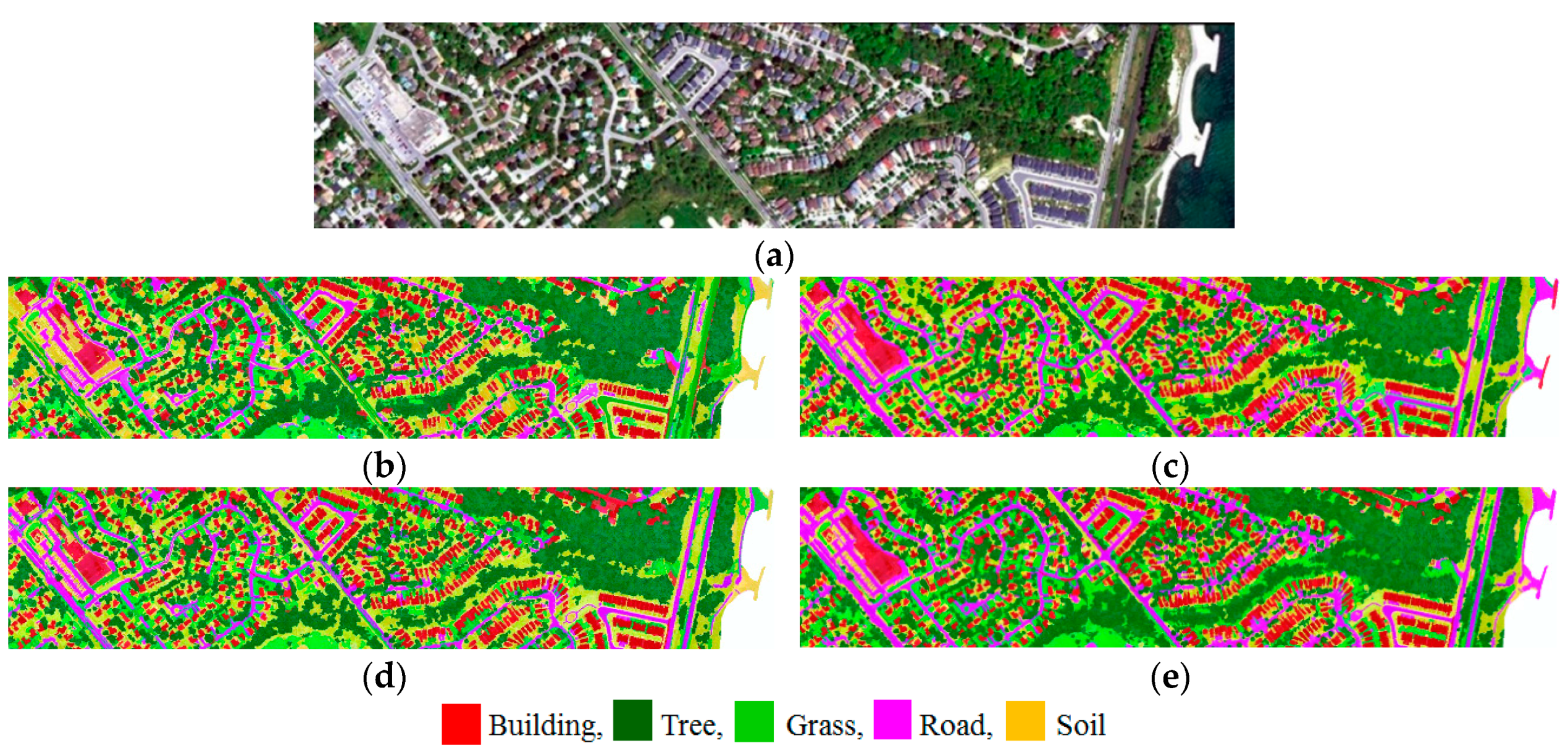

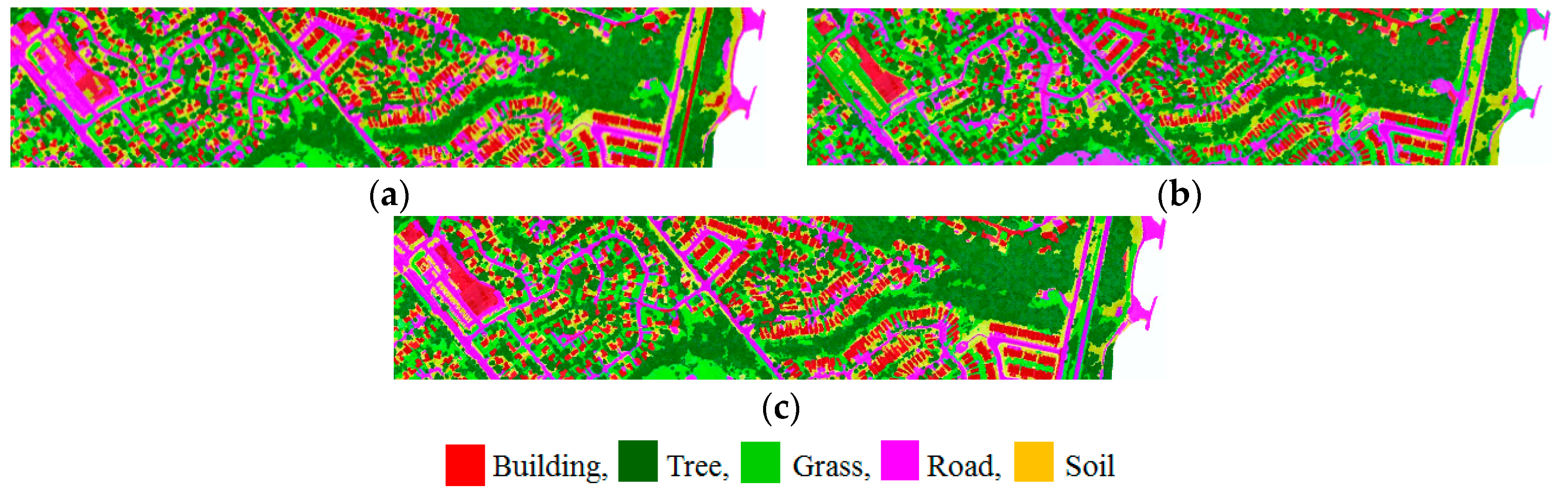

As previously described, the test area was classified into five land cover classes (buildings, trees, grass, roads, and bare soil), and the results from the four combinations were color-coded to indicate each land cover type (Figure 5).

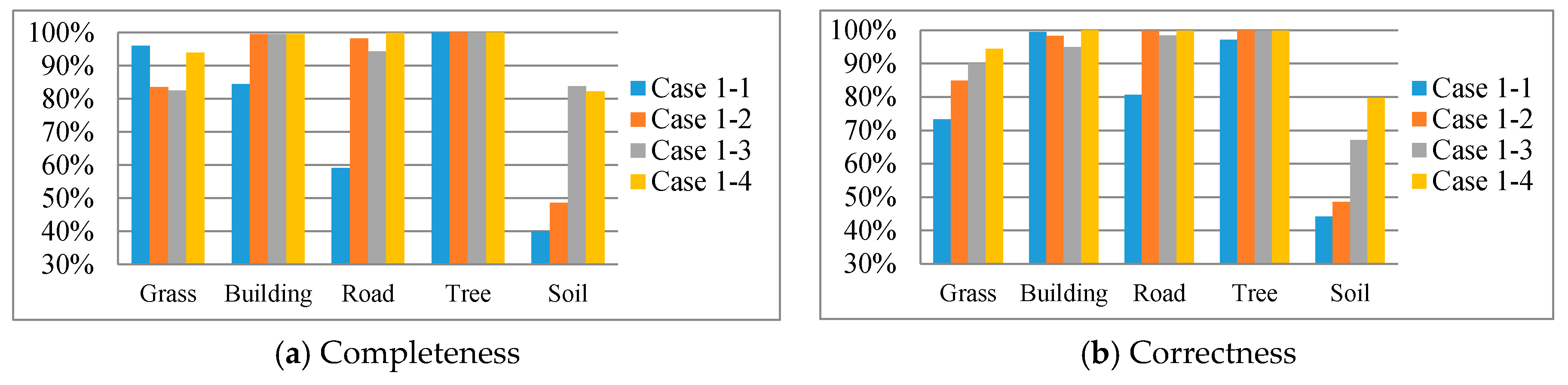

The quantitative results (i.e., completeness and correctness; Table 5 and Figure 6) show that, as expected, the accuracy of the green channel was lower than that of the two infrared channels, because the green channel is designed mainly for water surfaces. The completeness of roads for 532 nm (Case 1-1) was only 59%, lower than in the other cases, meaning that the infrared channels perform better than the green channel for asphalt roads. This is consistent with the wide use of infrared intensity to discriminate between asphalt and non-asphalt roads [36]. The completeness values of bare soil for 532 nm (Case 1-1) and 1064 nm (Case 1-2) were less than 52% because soil, road, and grass have similar height and curvature in the single-wavelength system. The completeness for 1550 nm (Case 1-3) was slightly better than that of the other two channels because this wavelength lies in the near-to-mid infrared. Wang et al. (2012) [8] also found that results from 1550 nm were better than those from 1064 nm. The integration of all features (Case 1-4) provided better discrimination among soil, road, and grass than the single-wavelength features.

Because trees and buildings are significantly higher than the other land covers, they showed limited improvement when the three wavelengths were integrated. Overall, the completeness improvement from single- to multi-wavelength LiDAR ranged from 1.7 to 42.3 percentage points. In the correctness analysis, the behavior of soil, road, and grass was similar to that in the completeness analysis. The multi-wavelength features (Case 1-4) improved the correctness of these three classes by 1.4 to 35.8 percentage points, with soil and grass showing the largest improvement. Where accuracy decreased, the drop was small: less than 2.5 percentage points in completeness for grass between Cases 1-1 and 1-4, and less than 0.2 percentage points in correctness for trees between Cases 1-2 and 1-4.
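For reference, per-class completeness and correctness can be derived from a confusion matrix as in the sketch below. It assumes the common definitions completeness = TP / (TP + FN) and correctness = TP / (TP + FP), with rows as reference classes and columns as predicted classes; the function name and the example matrix are hypothetical.

```python
import numpy as np

def completeness_correctness(confusion):
    """Per-class completeness and correctness from a square confusion matrix.

    Assumed layout: rows are reference classes, columns are predicted classes.
    """
    confusion = np.asarray(confusion, dtype=float)
    true_positive = np.diag(confusion)
    reference_total = confusion.sum(axis=1)   # TP + FN for each class
    predicted_total = confusion.sum(axis=0)   # TP + FP for each class
    completeness = np.divide(true_positive, reference_total,
                             out=np.zeros_like(true_positive),
                             where=reference_total > 0)
    correctness = np.divide(true_positive, predicted_total,
                            out=np.zeros_like(true_positive),
                            where=predicted_total > 0)
    return completeness, correctness

# Hypothetical two-class example (not study data).
completeness, correctness = completeness_correctness([[8, 2], [1, 9]])
```

The improvements quoted in percentage points are then simple differences between the percentages of two cases.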

The overall accuracies (OAs) of the individual channels were 82%, 90%, and 92% for the wavelengths 532, 1064, and 1550 nm, respectively. Using the features extracted from the multi-wavelength LiDAR system, the OA and kappa coefficient reached 96% and 95%, respectively. The OA of multi-wavelength LiDAR was thus 4–14 percentage points higher than that of single-wavelength LiDAR (Figure 7). Single-wavelength LiDAR extracts limited information, which caused misclassifications when classes had similar reflectivity, such as roads and grass in the green channel. The OAs obtained with the 1064 nm and 1550 nm features were similar (90% and 92%) (Figure 7), but misclassifications still appeared for grass and soil (Figure 6).
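The OA and kappa coefficient reported here are standard summaries of a confusion matrix. A minimal sketch, assuming Cohen's kappa and a matrix with reference classes in rows and predicted classes in columns (the function name and example values are hypothetical):

```python
import numpy as np

def overall_accuracy_and_kappa(confusion):
    """Overall accuracy and Cohen's kappa from a square confusion matrix."""
    confusion = np.asarray(confusion, dtype=float)
    total = confusion.sum()
    observed = np.trace(confusion) / total                       # overall accuracy
    # Agreement expected by chance, from the row and column marginals.
    expected = np.sum(confusion.sum(axis=1) * confusion.sum(axis=0)) / total**2
    kappa = (observed - expected) / (1.0 - expected)
    return observed, kappa

# Hypothetical two-class example (not study data).
oa, kappa = overall_accuracy_and_kappa([[8, 2], [1, 9]])
```

Kappa discounts the agreement expected by chance, which is why it is typically somewhat lower than the OA.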

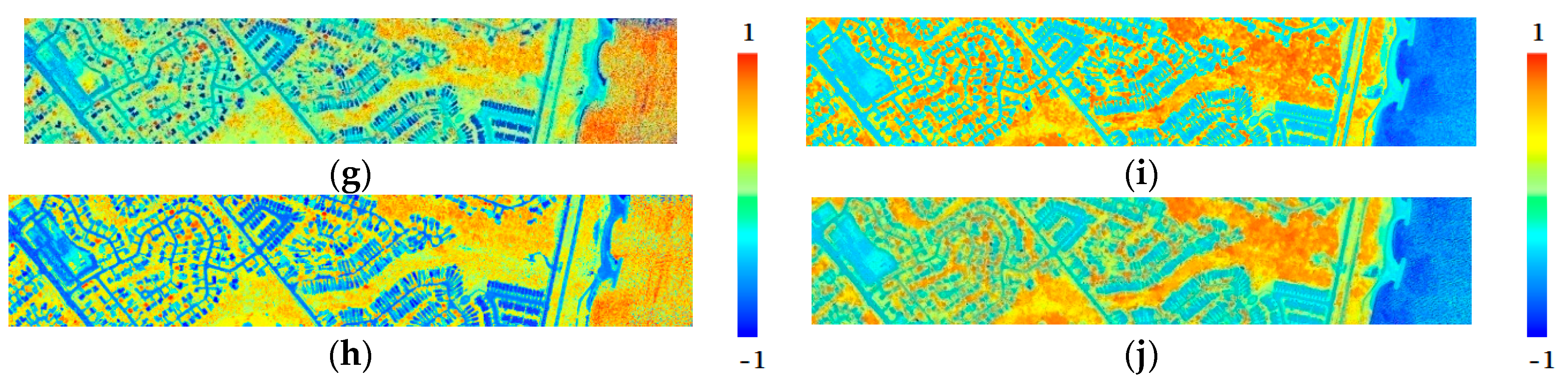

4.2. Comparison of Spectral and Geometrical Features from Multi-Wavelength LiDAR

Airborne LiDAR records both 3D coordinates and reflected signals, and the LiDAR points can be used to generate the spectral features (i.e., intensity and vegetation index) and geometrical features (i.e., height and roughness). One characteristic of multi-wavelength LiDAR is providing spectral features at different wavelengths. The spectral feature of multi-wavelength LiDAR is not only the intensity, but also the vegetation index from the infrared and green channels. To compare the capability and benefits of spectral and geometric features for different land cover classification, we designed three combinations (

Table 6): spectral features included intensity and NDFIs; geometrical features included nDSM and curvature; and the third merged the features of previous two combinations. For these three cases, the input features for classification were also the features for segmentation, and we used equal weight when combining all these input features in segmentation. We summarized the results of these three cases (

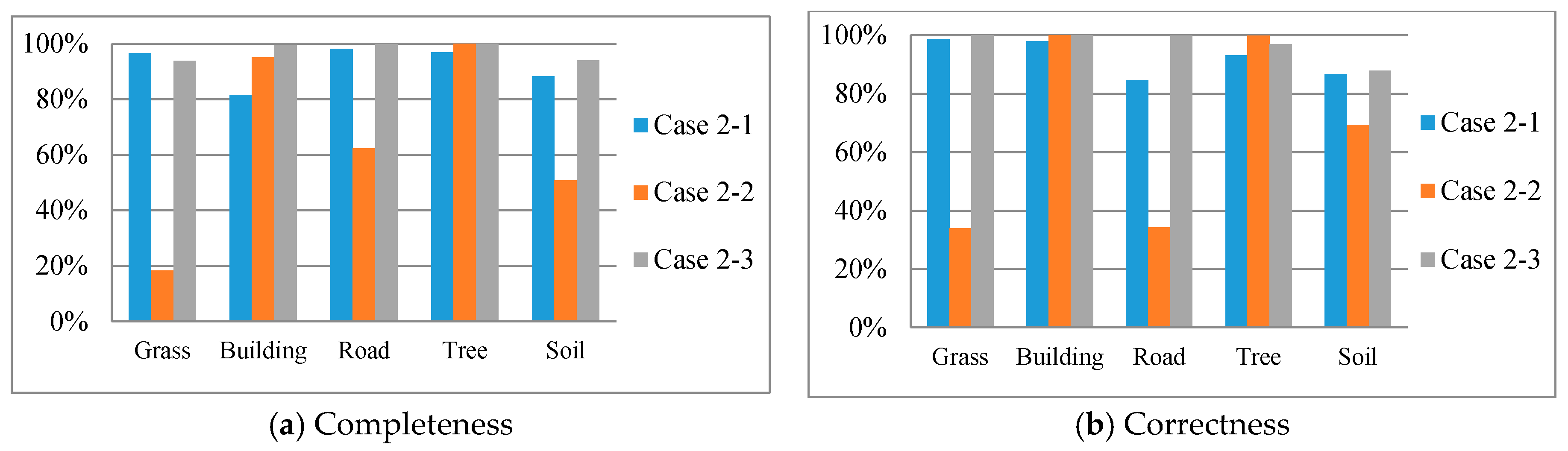

Figure 8) and the completeness and correctness for each land cover in different combinations (

Table 7,

Figure 9).
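The NDFIs among the spectral features combine an infrared intensity with the green intensity. The sketch below assumes the usual normalized-difference form, (IR − G) / (IR + G); the exact definition used in the study is given in its methods section and is not repeated here, so the function name and the sample values are illustrative assumptions only.

```python
import numpy as np

def normalized_difference(band_a, band_b):
    """Normalized difference (a - b) / (a + b); NaN where a + b is zero."""
    a = np.asarray(band_a, dtype=float)
    b = np.asarray(band_b, dtype=float)
    total = a + b
    result = np.full(total.shape, np.nan)
    np.divide(a - b, total, out=result, where=total != 0)
    return result

# Hypothetical intensities for three objects (not study data).
green = np.array([0.2, 0.2, 0.0])
nir = np.array([0.6, 0.2, 0.0])
mir = np.array([0.4, 0.1, 0.0])

ndfi_nir_g = normalized_difference(nir, green)   # assumed form of NDFI (NIR, green)
ndfi_mir_g = normalized_difference(mir, green)   # assumed form of NDFI (MIR, green)
```

Values lie in [-1, 1] for non-negative intensities, which makes indices from different channel pairs directly comparable.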

The completeness from spectral features (Case 2-1) was higher than 80% because road, soil, and grass have different spectral signatures [37]. In addition, the results from spectral features were significantly better than those from geometrical features (Case 2-2) for road, soil, and grass. The geometrical features showed higher accuracy for buildings and trees because these two objects have different roughness (i.e., curvature). Because the heights of the grass, road, and soil classes are similar, geometrical features cannot easily discriminate among them. The integration of spectral and geometrical features (Case 2-3) improved the accuracy of these three classes by 1.7 to 75.5 percentage points.

In correctness analysis, the integration of all features (Case 2-3) improved the accuracy from 1.1 to 65.6 percentage points. Most accuracy indices from spectral features were higher than those from geometrical features (

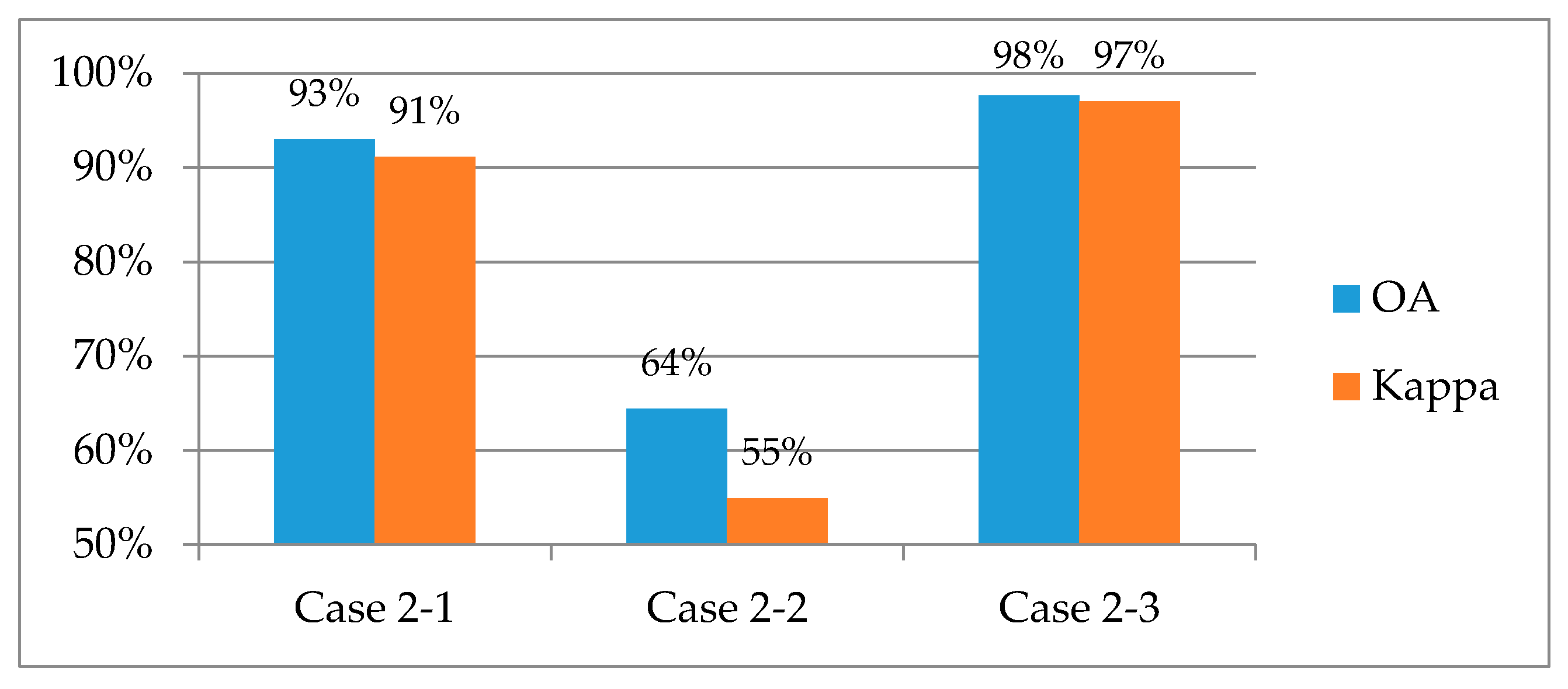

Table 7); in other words, the results indicate that the use of LiDAR spectral features contributes more than the geometrical features. The OA of spectral features reaches 93% but only 64% for geometrical features (

Figure 10) because of the higher accuracy for buildings and trees but lower accuracy for grasses, roads, and soils. The results demonstrate that spectral features from LiDAR could be useful features in land cover classification when multi-wavelength intensities are available.

4.3. Comparison of Vegetation Index from Active and Passive Sensor

Passive sensors (e.g., optical multispectral imagers) can only detect naturally available energy (e.g., solar energy). By contrast, active sensors (e.g., LiDAR) provide their own energy source for illumination and obtain data regardless of time of day or season; therefore, active LiDAR sensors are more flexible than optical sensors in different weather conditions. Vegetation detection is an important task in ground-point selection for digital terrain model (DTM) generation from LiDAR. Traditionally, vegetated areas are detected using the surface roughness of the irregular points; LiDAR intensity is seldom used to separate vegetation from non-vegetation. With the development of multi-wavelength LiDAR, the intensities at different wavelengths may support vegetation detection in a way similar to passive sensors. Our goal is to assess the capability of the vegetation index from LiDAR, and this section compares the performance of vegetation indices from active and passive sensors.

The NDVI has been widely used in vegetation detection, but it is calculated from passive multispectral images. Passive sensors have inherent limitations, such as daytime-only operation and sensitivity to haze; an active sensor like LiDAR can be operated at night and can penetrate haze, and might therefore be an option to overcome these restrictions. We designed two cases to analyze the similarity and consistency (Table 8) between vegetation indices from active and passive sensors. Because the reflectance of the multispectral WV-3 image represents the radiance of the object surface (e.g., tree top), we selected the intensity of the highest point in each 1.2 × 1.2 m cell (i.e., the pixel size of the WV-3 multispectral image) as the LiDAR intensity for the LiDAR-derived vegetation index, so that both the reflectance of the passive sensor and the return energy of the active sensor represent the energy of the object surface at different wavelengths. For the training and test data, the grass and tree classes were merged into a vegetation class, and the building, road, and soil classes were combined into a non-vegetation class; only the vegetation indices were used in segmentation and classification. A visual comparison of the two vegetation detection results (Figure 11) shows high consistency between these two vegetation indices.
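Selecting the intensity of the highest point in each 1.2 × 1.2 m cell can be sketched as follows. This is an illustrative implementation under stated assumptions, not the authors' code: the grid origin is taken at the minimum x and maximum y of the points, cells without points are set to NaN, and the function and variable names are hypothetical.

```python
import numpy as np

def highest_point_intensity(x, y, z, intensity, cell_size=1.2):
    """Rasterize points, keeping the intensity of the highest point per cell."""
    x, y, z, intensity = (np.asarray(a, dtype=float) for a in (x, y, z, intensity))
    col = np.floor((x - x.min()) / cell_size).astype(int)
    row = np.floor((y.max() - y) / cell_size).astype(int)    # row 0 at the top edge
    n_rows, n_cols = row.max() + 1, col.max() + 1
    grid = np.full((n_rows, n_cols), np.nan)

    cell_id = row * n_cols + col
    order = np.lexsort((z, cell_id))         # sort by cell, then by height within a cell
    sorted_cells = cell_id[order]
    # The last entry in each run of equal cell ids is that cell's highest point.
    is_last = np.r_[sorted_cells[1:] != sorted_cells[:-1], True]
    top = order[is_last]
    grid[row[top], col[top]] = intensity[top]
    return grid

# Hypothetical points: two fall in the first cell, one in the second.
grid = highest_point_intensity(
    x=[0.0, 0.5, 2.0], y=[0.0, 0.3, 0.1], z=[2.0, 5.0, 1.0], intensity=[10.0, 20.0, 30.0]
)
```

Running this once per channel gives co-registered intensity rasters at the WV-3 pixel size, from which a LiDAR-derived vegetation index can be computed cell by cell.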

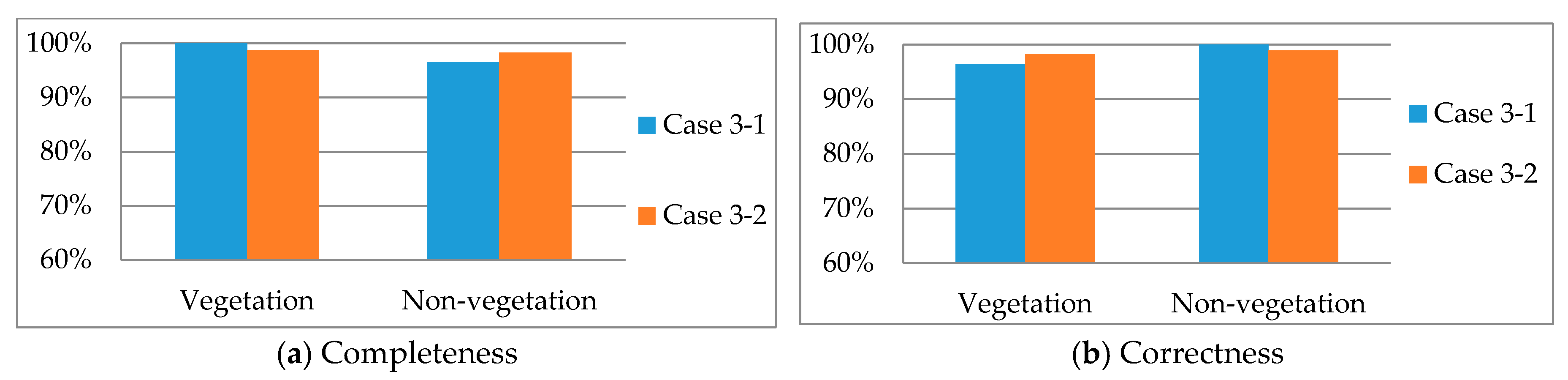

Completeness and correctness were compared between vegetation and non-vegetation in different combinations (

Table 9 and

Figure 12). Here we considered only two classes: vegetation and non-vegetation; therefore, the results were better than the results in

Section 4.1 and

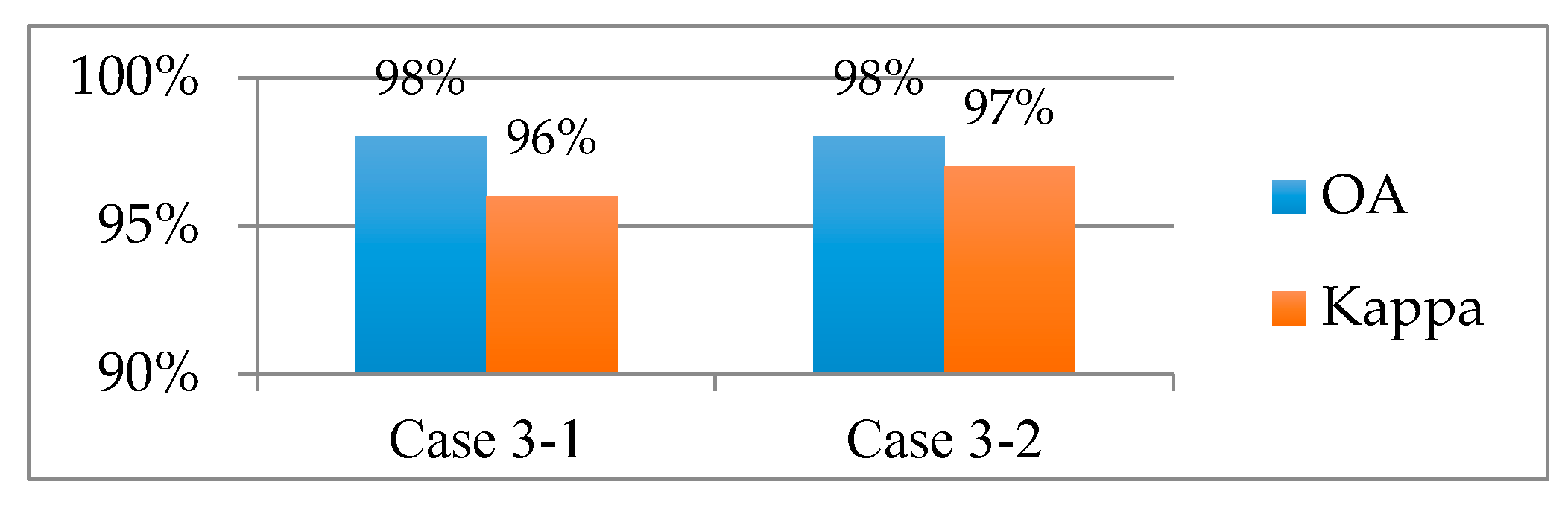

Section 4.2 (i.e., five classes). The completeness and correctness analysis for active LiDAR and passive imagery were higher than 96%. The correctness of vegetation detection from the passive sensor was 98% in test samples and slightly lower, 96%, for the active sensor. A small amount of vegetation and non-vegetation was misclassified, but the overall accuracy reached 98% in both cases. The overall accuracies and kappa coefficients were also similar in this test area (

Figure 13), with vegetation indices generated from multi-wavelength LiDAR were similar to those from passive multispectral imagery. The kappa of the passive sensor was slightly better (1%) than that for the active sensor. This experiment demonstrated that the vegetation index from active LiDAR sensor could be used to detect vegetation area effectively.

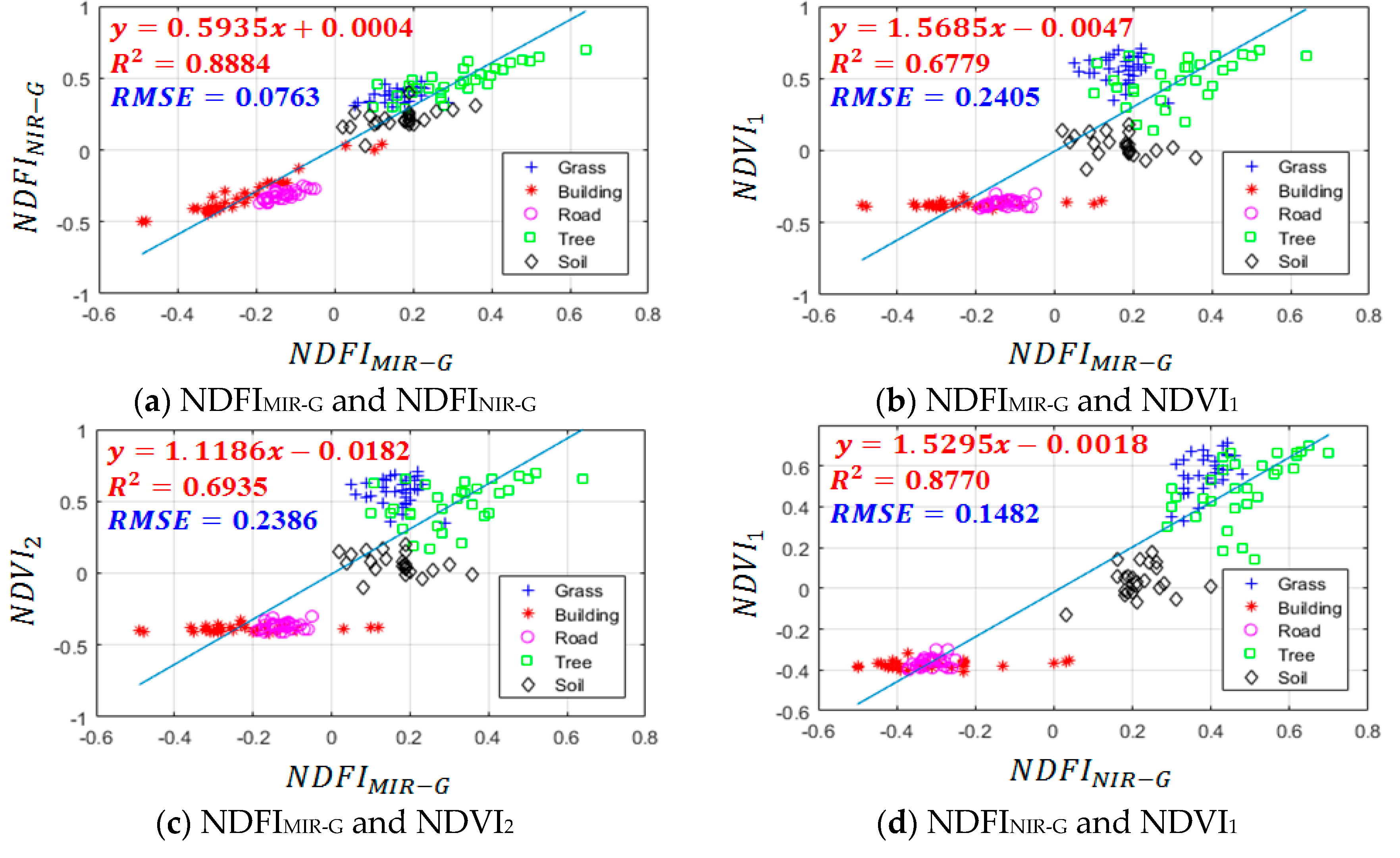

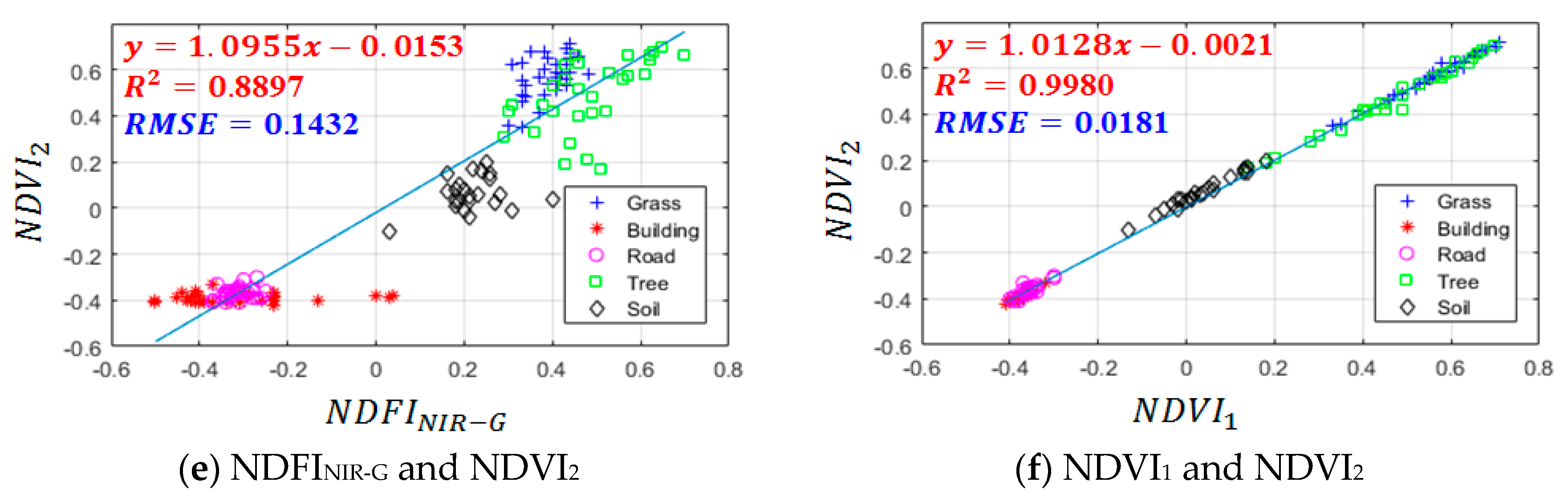

We also used the training data to calculate the coefficient of determination ($R^2$) among these vegetation indices (Figure 14). For each training dataset, four vegetation indices were extracted, two from the active sensor and two from the passive sensor. The indices were compared pairwise, giving six combinations for the similarity analysis. The $R^2$ between the two passive vegetation indices (i.e., $\mathrm{NDVI}_1$ and $\mathrm{NDVI}_2$) reached 0.99, while the $R^2$ between the two active vegetation indices (i.e., $\mathrm{NDFI}_{\text{MIR-G}}$ and $\mathrm{NDFI}_{\text{NIR-G}}$) reached 0.88. The $R^2$ values within the active and within the passive vegetation indices thus showed high consistency in these training data.
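The pairwise comparison can be sketched as below: every pair among the four indices is fitted with a straight line, and the coefficient of determination and the root-mean-square error of the fit are returned. This assumes an ordinary least-squares linear fit; the function names and the placeholder values are hypothetical and do not reproduce the study's data.

```python
from itertools import combinations

import numpy as np

def linear_fit_stats(x, y):
    """R^2 and RMSE of an ordinary least-squares line fitted to y as a function of x."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    slope, intercept = np.polyfit(x, y, 1)
    residuals = y - (slope * x + intercept)
    ss_res = np.sum(residuals**2)
    ss_tot = np.sum((y - y.mean()) ** 2)
    r_squared = 1.0 - ss_res / ss_tot
    rmse = np.sqrt(np.mean(residuals**2))
    return r_squared, rmse

def pairwise_stats(indices):
    """Fit statistics for every unordered pair of named index arrays."""
    return {
        (a, b): linear_fit_stats(indices[a], indices[b])
        for a, b in combinations(indices, 2)
    }

# Hypothetical index values per training sample (placeholders, not study data).
rng = np.random.default_rng(1)
base = rng.uniform(-0.2, 0.8, size=50)
indices = {
    "NDVI_1": base,
    "NDVI_2": base + rng.normal(0.0, 0.02, size=50),
    "NDFI_NIR-G": 0.8 * base + rng.normal(0.0, 0.08, size=50),
    "NDFI_MIR-G": 0.6 * base + rng.normal(0.0, 0.15, size=50),
}
stats = pairwise_stats(indices)   # four indices give six pairs
```

For a simple linear fit, $R^2$ is the same whichever index is treated as the independent variable, whereas the RMSE depends on which index is being predicted.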

In the analysis across sensors, the $R^2$ values for the combinations ($\mathrm{NDFI}_{\text{MIR-G}}$ and $\mathrm{NDVI}_1$) and ($\mathrm{NDFI}_{\text{MIR-G}}$ and $\mathrm{NDVI}_2$) were less than 0.7 because $\mathrm{NDFI}_{\text{MIR-G}}$ uses the MIR channel while $\mathrm{NDVI}_1$ and $\mathrm{NDVI}_2$ use NIR. The root-mean-square errors (RMSEs) of these two combinations (0.2405 and 0.2386, respectively) also indicated a larger fitting error than in the other cases. For $\mathrm{NDFI}_{\text{NIR-G}}$, $\mathrm{NDVI}_1$, and $\mathrm{NDVI}_2$, all calculated from NIR, the $R^2$ values of the combinations ($\mathrm{NDFI}_{\text{NIR-G}}$ and $\mathrm{NDVI}_1$) and ($\mathrm{NDFI}_{\text{NIR-G}}$ and $\mathrm{NDVI}_2$) were both higher than 0.87. Anderson et al. (2016) [38] also found that the vegetation indices from active sensors had high correlations. They reported that the vegetation indices measured from active and passive sensors were correlated ($R^2$ > 0.70) for the same plant species. Although the vegetation index recorded by the active sensor was consistently lower than that of the passive sensor, the differences were small and within a plausible range. In this study, the vegetation indices from active LiDAR were also lower than those from passive imagery, and the indices from the active and passive sensors were highly correlated ($R^2$ > 0.87). In addition, the overall accuracy in separating vegetation from non-vegetation was higher than 98%. Our analysis showed that vegetation indices from multi-wavelength LiDAR could be a helpful feature for vegetation detection.