Modified Adversarial Hierarchical Task Network Planning in Real-Time Strategy Games

Abstract

1. Introduction

- Players can act simultaneously with other players rather than taking turns, as they do in games such as chess. Moreover, decision times are very short, which allows rapid sequences of actions.

- Players can pursue concurrent actions with multiple controllable units. This is much more complex than in conventional board games, where only a single action is performed per turn.

- Player actions are durative: an action requires numerous time steps to be executed. This is also much more complex than in conventional board games, where actions must be completed within a single turn.

- The state space and branching factors are typically very large. For example, a typical 128 × 128 map in “StarCraft” generally includes about 400 player-controllable units. Considering only the location of each unit, the number of possible states is about $10^{1685}$, whereas the state space of chess is typically estimated to be around $10^{50}$. Even larger values are obtained when the other factors of an RTS game are included.

- The environment of an RTS game is dynamic. Unlike in conventional board games such as chess, where the fundamental natures of the board and game pieces never change, the environment of an RTS game may change drastically in response to player decisions, which can invalidate a generated plan.

- The HTN description used by AHTN can neither express complex relationships among tasks nor accommodate the impact of the environment on tasks. For example, consider a task involving the forced occupation of a fortified enemy emplacement, denoted as the capture-blockhouse task, which consists of two subtasks. The first subtask involves luring the enemy away from the emplacement (denoted as the luring-enemy subtask), and the second involves attacking the emplacement (denoted as the attacking subtask). The capture-blockhouse task fails if the attacking subtask fails. However, failure of the luring-enemy subtask does not necessarily cause the capture-blockhouse task to fail; instead, it tends to increase the cost of completing the parent task, because the attacking subtask can still be executed. This type of relation cannot be expressed by the HTN description employed in AHTN. Nor can that description express relations in which subtasks are triggered by the environment, or in which the outcome of a parent task depends on both the environment and the successful completion of its subtasks.

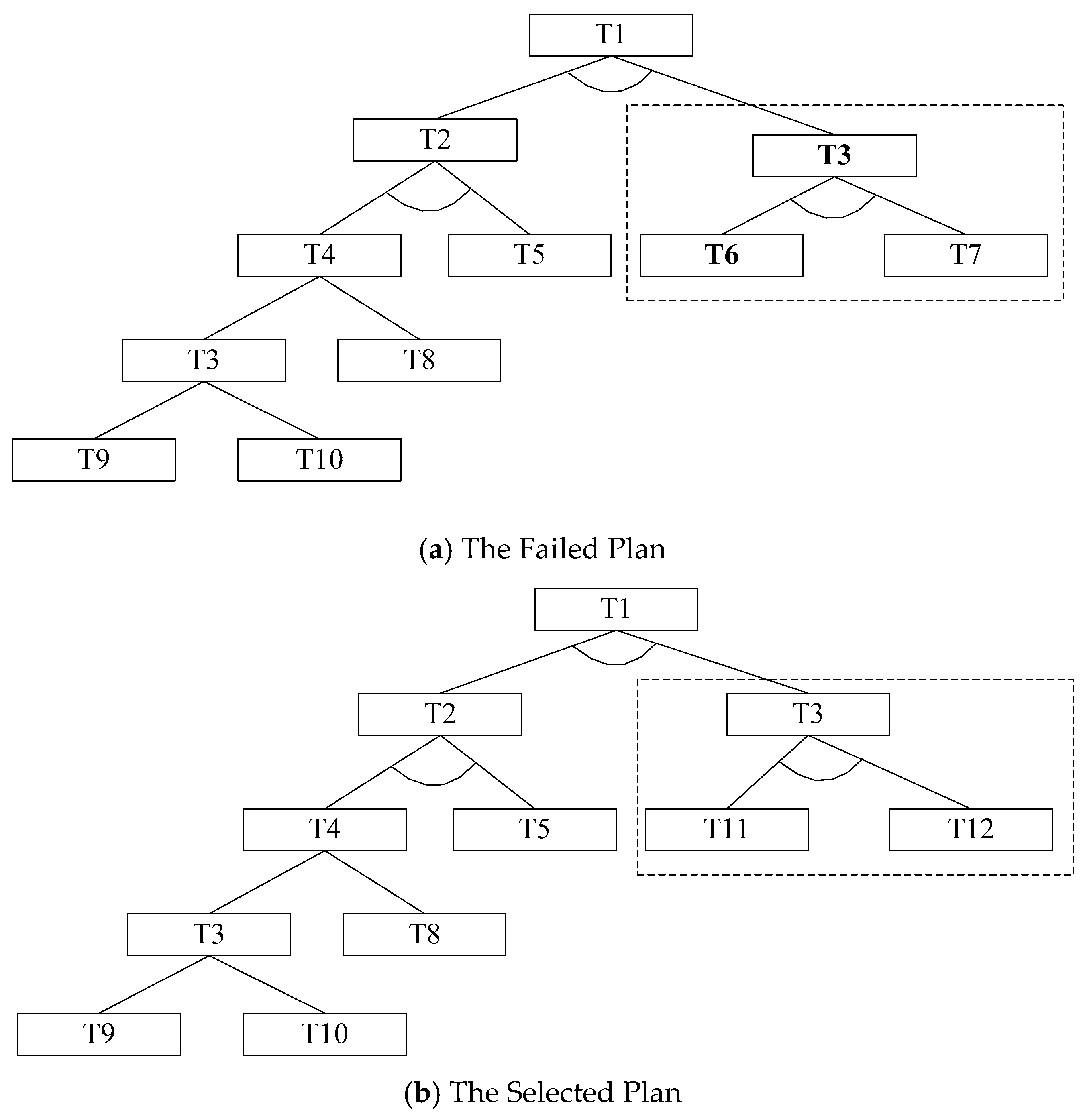

- AHTN planning cannot effectively address task failures that occur during plan execution. AHTN generates a new plan at every game frame in which there are idle units, that is, units that have not been assigned actions. The new plan cannot cancel actions assigned during previous planning: units that were assigned actions earlier keep executing them until those actions fail or complete. In AHTN, each failed task of the previous plan is simply removed at the time of failure without considering its impact on the tasks that are still executing, and these remaining tasks continue even though their execution may be meaningless. For example, if the failure of a task directly causes its plan to fail, the remaining executing tasks of that plan should not continue, because they can no longer prevent the failure of the plan. They should instead be terminated so that the released resources can be used to repair failed tasks or to formulate a new plan. Thus, when a task fails, the AI player should first attempt to repair it to maintain the validity of the original plan; if the task cannot be repaired, all related tasks in the plan should be terminated.
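The location-only state count quoted above for StarCraft can be reproduced with a back-of-the-envelope calculation. The sketch below assumes, as a simplification not stated in the text, that each of the 400 units may occupy any of the 128 × 128 = 16,384 tiles independently of the others; under that assumption the count is $16384^{400} \approx 10^{1685.8}$.

```python
import math

MAP_SIDE = 128    # 128 x 128 tile map from the StarCraft example
NUM_UNITS = 400   # approximate number of player-controllable units

# Assumption: every unit may stand on any tile independently of the others
# (overlaps and terrain ignored), so the state count is tiles ** NUM_UNITS.
tiles = MAP_SIDE * MAP_SIDE
log10_states = NUM_UNITS * math.log10(tiles)  # work in log10 to avoid a huge integer

print(f"tiles per unit: {tiles}")
print(f"location-only states: about 10^{log10_states:.1f}")
```

Working in logarithms keeps the arithmetic cheap; the exact integer $16384^{400}$ has 1,686 decimal digits.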

2. Related Work

3. Extended HTN Description

3.1. Requirements Analysis

3.1.1. Essential Task Attribute

- if the essential task attributes of both subtasks are true, the relationship between them is AND;

- if the essential task attributes of both subtasks are false, the relationship between them is OR;

- if the essential task attribute of only one subtask is true, the relationship is neither AND nor OR.
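The three cases above can be illustrated with a small sketch. The function names `relation` and `parent_fails` are hypothetical; `parent_fails` treats the parent as having a single phase, ignores exit conditions, and applies the phase-failure rules of Section 3.1.2 (an essential subtask fails, or there is no essential subtask and all subtasks fail). The capture-blockhouse example is encoded with luring-enemy as non-essential and attacking as essential.

```python
from enum import Enum


class Relation(Enum):
    AND = "AND"                     # both subtasks essential
    OR = "OR"                       # neither subtask essential
    NEITHER = "neither AND nor OR"  # exactly one subtask essential


def relation(essential_a: bool, essential_b: bool) -> Relation:
    """Classify two sibling subtasks by their essential task attributes."""
    if essential_a and essential_b:
        return Relation.AND
    if not essential_a and not essential_b:
        return Relation.OR
    return Relation.NEITHER


def parent_fails(subtasks: list[tuple[bool, bool]]) -> bool:
    """Each entry is (essential, failed). The parent fails if an essential
    subtask fails, or if there is no essential subtask and all subtasks fail."""
    if any(essential and failed for essential, failed in subtasks):
        return True
    no_essential = not any(essential for essential, _ in subtasks)
    return no_essential and all(failed for _, failed in subtasks)


# capture-blockhouse: (luring-enemy, attacking) = (non-essential, essential)
print(relation(False, True))                          # Relation.NEITHER
print(parent_fails([(False, True), (True, False)]))   # False: only luring failed
print(parent_fails([(False, False), (True, True)]))   # True: attacking failed
```

The non-essential failure leaves the parent alive, which matches the text's point that losing the luring-enemy subtask raises cost rather than causing failure.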

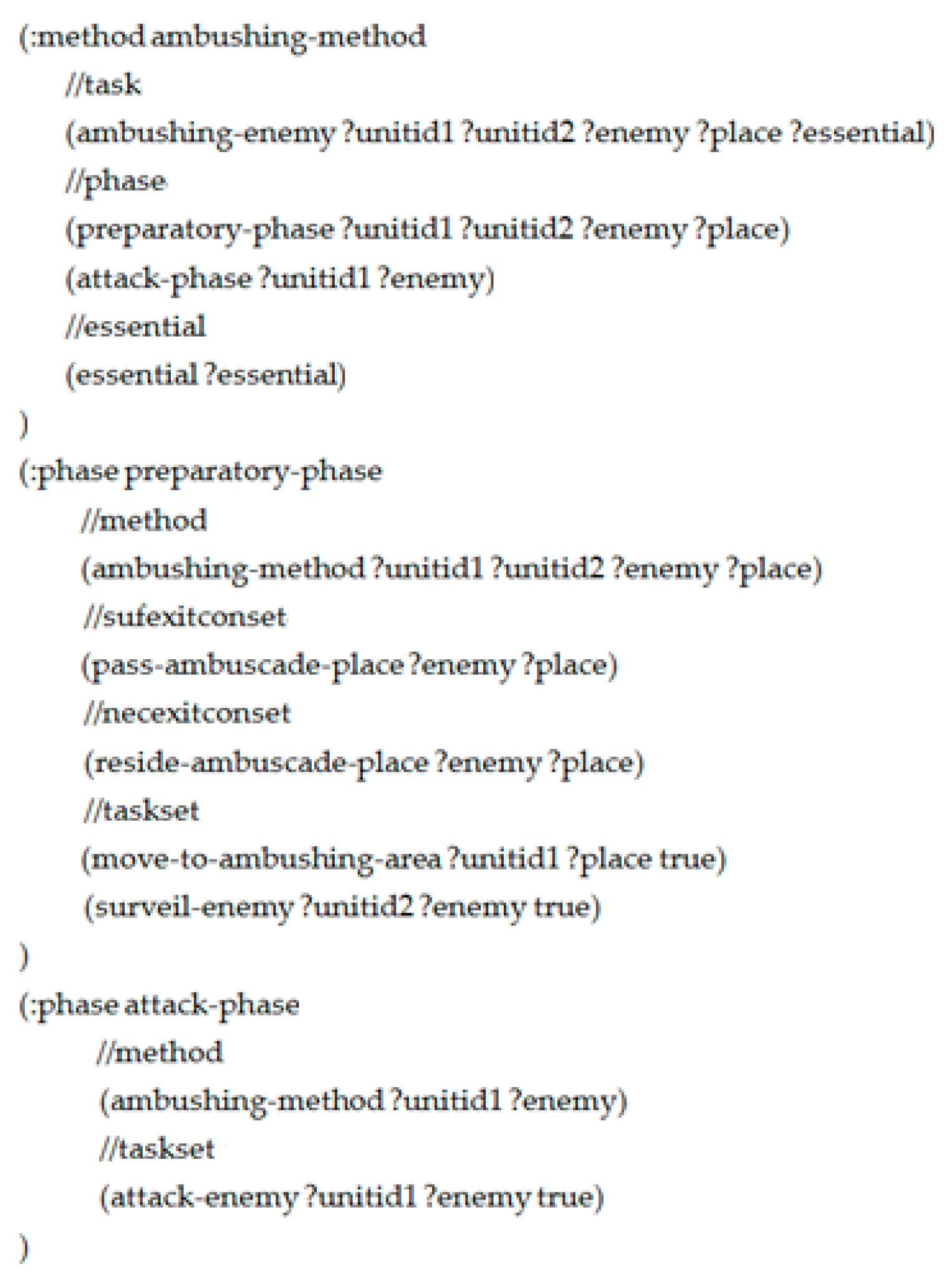

3.1.2. Phase and Exit Condition Attributes

- The environment can control when tasks start after their preceding tasks have been completed. For example, the task of launching a sudden concealed attack on an enemy, denoted as the ambushing-enemy task, consists of two subtasks: the first is movement to the staging area of the ambuscade, denoted as move-to-ambushing-area, and the second is the actual attack, denoted as attack-enemy. The attack-enemy subtask cannot be executed immediately upon completion of the move-to-ambushing-area subtask, because it cannot begin until the enemy is in the ambuscade area.

- The environment can also cause a task to fail. In the ambushing-enemy example, the move-to-ambushing-area subtask must be completed before the enemy has passed the ambuscade staging area; otherwise, the ambushing-enemy task fails.

- The name of the phase.

- The name of the method to which the phase belongs, together with a list of parameters for the phase.

- The set of sufficient exit conditions. Each element is a logical expression over literals, each of which consists of a name and a list of parameters.

- The set of necessary exit conditions. Each element is a logical expression over literals of the same form. Subsequent phases can be executed only when all necessary exit conditions are satisfied.

- The set of subtasks that must be completed to accomplish a compound task. The subtasks can be either compound tasks or primitive tasks, and each element consists of the subtask’s name and a list of parameters.

- Any essential task of the phase has failed.

- Any sufficient exit condition is satisfied, with one or more essential tasks still executing.

- Any sufficient exit condition is satisfied, and no subtasks have been completed.

- All subtasks have failed.
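A phase with sufficient and necessary exit conditions, and the four failure conditions listed above, can be sketched as follows. This is an illustration under stated assumptions, not the authors' implementation: the world state is assumed to be a dictionary of named boolean facts, exit conditions are modelled as predicates on that state, and the names `Phase`, `has_failed`, `can_start_next`, `enemy_in_area`, and `enemy_passed_area` are hypothetical. The example encodes the first phase of the ambushing-enemy task.

```python
from dataclasses import dataclass, field
from enum import Enum, auto
from typing import Callable, Dict, List

State = Dict[str, bool]              # hypothetical world state: named boolean facts
Condition = Callable[[State], bool]  # an exit condition evaluated against the state


class Status(Enum):
    PENDING = auto()
    EXECUTING = auto()
    COMPLETED = auto()
    FAILED = auto()


@dataclass
class Subtask:
    name: str
    essential: bool
    status: Status = Status.PENDING


@dataclass
class Phase:
    name: str
    subtasks: List[Subtask]
    sufficient: List[Condition] = field(default_factory=list)
    necessary: List[Condition] = field(default_factory=list)

    def can_start_next(self, state: State) -> bool:
        """Subsequent phases may run only when every necessary exit condition holds."""
        return all(cond(state) for cond in self.necessary)

    def has_failed(self, state: State) -> bool:
        """Check the four phase-failure conditions in the order listed."""
        sufficient_met = any(cond(state) for cond in self.sufficient)
        essential = [t for t in self.subtasks if t.essential]
        if any(t.status is Status.FAILED for t in essential):
            return True
        if sufficient_met and any(t.status is Status.EXECUTING for t in essential):
            return True
        if sufficient_met and not any(t.status is Status.COMPLETED for t in self.subtasks):
            return True
        return all(t.status is Status.FAILED for t in self.subtasks)


# ambushing-enemy, first phase: reach the staging area before the enemy passes it
move = Phase(
    name="move",
    subtasks=[Subtask("move-to-ambushing-area", essential=True, status=Status.EXECUTING)],
    sufficient=[lambda s: s["enemy_passed_area"]],
    necessary=[lambda s: s["enemy_in_area"]],
)
waiting = {"enemy_in_area": False, "enemy_passed_area": False}
too_late = {"enemy_in_area": False, "enemy_passed_area": True}
print(move.has_failed(waiting), move.can_start_next(waiting))  # False False
print(move.has_failed(too_late))                               # True
```

The necessary condition keeps attack-enemy from starting until the enemy arrives, while the sufficient condition turns "the enemy has already passed" into a phase failure as long as the essential movement subtask is still executing.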

3.2. Definition of the Extended HTN Description

- The set of current world states, which consists of all information that is relevant to the planning process.

- The set of task decomposition methods. Each method can be applied to decompose a task into a set of subtasks.

- The set of operators. Each operator describes the execution of a primitive task.

- The current task network, which is a tree whose nodes are tasks, methods, or phases.

- The state transition function, which, given a state and an operator, defines the state that results when the corresponding primitive task is executed by an agent. If no resulting state is defined, the operator is not applicable in that state.

- The name of the method.

- The name of the task to which the method is applied.

- The logical preconditions of the method, which must be satisfied when the method is applied.

- The list of phases. The phases are executed in the order in which they appear in the list.

- A Boolean value that is true if the task to which the method is applied is an essential task.

- The primitive task to which the operator can be applied.

- The precondition that must be satisfied before the task is executed.

- The delete effects.

- The add effects.

- A Boolean value that is true if the task to which the operator is applied is an essential task.
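The operator elements and the state transition function follow the familiar STRIPS-style pattern of preconditions, delete effects, and add effects. As a minimal sketch, assuming (since the text does not fix a representation) that a state is a set of ground literals and that a precondition is a conjunction of literals, applying an operator can be written as below. The literal strings and the `transition` name are hypothetical.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional

Literal = str  # hypothetical encoding of a ground literal, e.g. "at(u1,a)"


@dataclass(frozen=True)
class Operator:
    task: str                          # primitive task this operator executes
    precondition: FrozenSet[Literal]   # conjunction of literals that must hold
    delete_effects: FrozenSet[Literal]
    add_effects: FrozenSet[Literal]
    essential: bool


def transition(state: FrozenSet[Literal], op: Operator) -> Optional[FrozenSet[Literal]]:
    """Return the successor state, or None if op is not applicable in state."""
    if not op.precondition <= state:                  # every precondition literal must hold
        return None
    return (state - op.delete_effects) | op.add_effects  # delete first, then add


move = Operator(
    task="move(u1,a,b)",
    precondition=frozenset({"at(u1,a)"}),
    delete_effects=frozenset({"at(u1,a)"}),
    add_effects=frozenset({"at(u1,b)"}),
    essential=True,
)
s0 = frozenset({"at(u1,a)", "enemy(e1)"})
s1 = transition(s0, move)
print(sorted(s1))            # ['at(u1,b)', 'enemy(e1)']
print(transition(s1, move))  # None: u1 is no longer at a
```

Returning `None` plays the role of the undefined transition: the operator is simply not applicable in that state.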

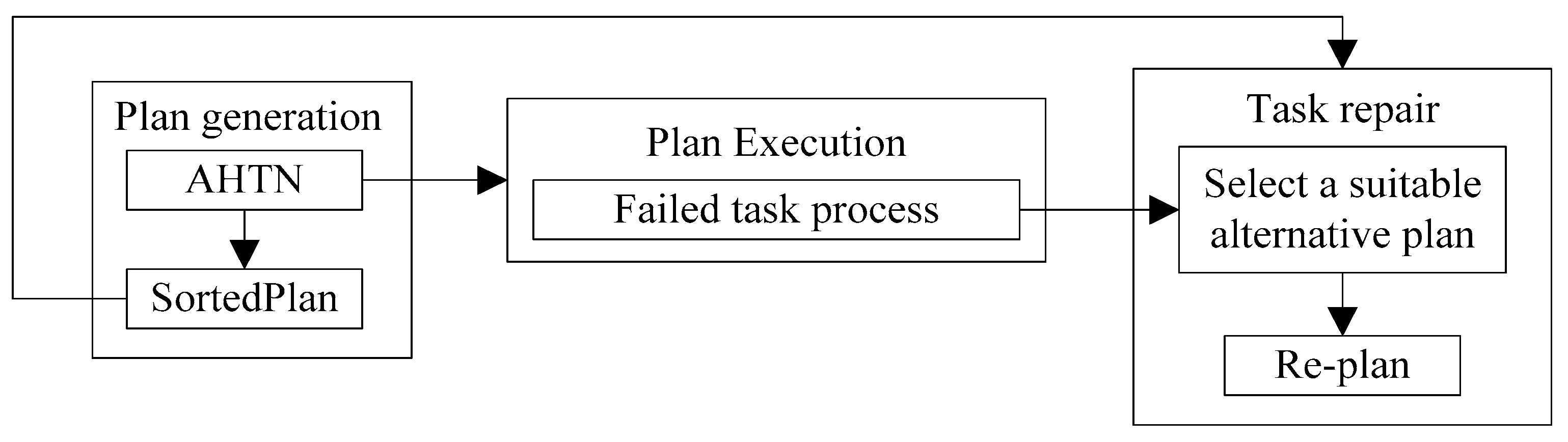

4. AHTN-R Planning Algorithm

4.1. AHTN-R Framework

| Algorithm 1 Returns |
|---|
| 1. If then |
| 2. Get the root of N |
| 3. Return |
| 4. End If |
| 5. Acquire the phase p to which t belongs |
| 6. Get all tasks of p |
| 7. If all tasks of p have been executed then |
| 8. Get the next phase of p |
| 9. If then |
| 10. Return |
| 11. End If |
| 12. Get all tasks of the next phase |
| 13. For all do |
| 14. If then |
| 15. Return |
| 16. End If |
| 17. End For |
| 18. Return |
| 19. End If |
| 20. For all that have not been executed do |
| 21. If is a primitive task then |
| 22. Return |
| 23. End If |
| 24. Get the first phase of the method applied to |
| 25. Get all tasks of the first phase |
| 26. For all do |
| 27. If then |
| 28. Return |
| 29. End If |
| 30. End For |
| 31. End For |
| 32. Return |
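Several conditions of Algorithm 1 were lost, so the following is not a reconstruction of it. It is a hypothetical sketch of the underlying idea of locating the next executable primitive tasks in a task network organized into phases, assuming that phases run strictly in list order, that tasks within one phase may run concurrently, and that exit conditions can be ignored. The names `Task`, `finished`, and `next_primitive_tasks`, and the split of capture-blockhouse into two phases, are illustrative assumptions.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Task:
    name: str
    # Phases of the method applied to this task; an empty list marks a primitive task.
    phases: List[List["Task"]] = field(default_factory=list)
    executed: bool = False

    @property
    def primitive(self) -> bool:
        return not self.phases


def finished(task: Task) -> bool:
    """A primitive task is finished once executed; a compound task once all its subtasks are."""
    if task.primitive:
        return task.executed
    return all(finished(t) for phase in task.phases for t in phase)


def next_primitive_tasks(task: Task) -> List[Task]:
    """Collect the primitive tasks that may be dispatched next under `task`."""
    if task.primitive:
        return [] if task.executed else [task]
    for phase in task.phases:                  # phases run strictly in list order
        if not all(finished(t) for t in phase):
            ready: List[Task] = []
            for t in phase:                    # tasks within one phase may run concurrently
                ready.extend(next_primitive_tasks(t))
            return ready
    return []                                  # every phase is finished


lure, attack = Task("luring-enemy"), Task("attacking")
capture = Task("capture-blockhouse", phases=[[lure], [attack]])
print([t.name for t in next_primitive_tasks(capture)])  # ['luring-enemy']
lure.executed = True
print([t.name for t in next_primitive_tasks(capture)])  # ['attacking']
```

A fuller version would also consult each phase's necessary exit conditions before moving on to the next phase.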

4.2. Failed Task Monitoring Strategy Using the Extended HTN Description

| Algorithm 2 Return TaskFailed(t) |
|---|
| 1. If then |
| 2. SubTaskFailed(t) |
| 3. Return |
| 4. End If |
| 5. If t is a compound task then |
| 6. Acquire all methods m of t |
| 7. For each method m |
| 8. Get the precondition set of m |
| 9. If all preconditions are satisfied then |
| 10. Add t into RepairTaskList |
| 11. Return |
| 12. End If |
| 13. End For |
| 14. End If |
| 15. SubTaskFailed(t) |

| Algorithm 3 SubTaskFailed(t) |
|---|
| 1. Acquire the phase p corresponding to t |
| 2. If then return |
| 3. End If |
| 4. If one of the sufficient conditions of p is met then |
| 5. If any essential tasks of p have failed then |
| 6. PhaseFailed(p); |
| 7. MethodFailed(p); |
| 8. Else If p has no essential task |
| 9. (all tasks of p were executed or did not start) |
| 10. PhaseFailed(p); |
| 11. MethodFailed(p); |
| 12. End If |
| 13. End If |
| 14. Else If any essential tasks of p fail or |
| 15. (p has no essential task and all tasks of p fail) |
| 16. PhaseFailed(p); |
| 17. MethodFailed(p); |
| 18. End If |
| 19. End If |

| Algorithm 4 PhaseFailed(p) |
|---|
| 1. Get all tasks of p |
| 2. For all tasks t of p |
| 3. If then |
| 4. continue; |
| 5. End If |
| 6. If t is a compound task then |
| 7. Get the method m applied to t |
| 8. Get all phases of m |
| 9. For all phases q |
| 10. PhaseFailed(q) |
| 11. End For |
| 12. Else |
| 13. Cancel(t) |
| 14. End If |
| 15. End For |

| Algorithm 5 MethodFailed(p) |
|---|
| 1. Get the method m to which p belongs |
| 2. For all phases q of m |
| 3. If then |
| 4. PhaseFailed(q) |
| 5. End If |
| 6. End For |
| 7. Get task t to which m is applied |
| 8. TaskFailed(t) |
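Some conditions of Algorithms 2 to 5 were lost, so the sketch below only illustrates, under simplifying assumptions, the bottom-up propagation they describe: a failed compound task that still has an applicable method is queued for repair; otherwise the failure is reported to its phase, and if that phase fails (an essential task failed, or there is no essential task and all tasks failed), every unfinished task of the parent's method is cancelled and the parent is treated as failed in turn. Sufficient exit conditions are ignored, "another applicable method" is modelled by a hypothetical counter `alternative_methods`, and cancellation is just a status change. None of these names come from the paper.

```python
from dataclasses import dataclass, field
from typing import List, Optional

ACTIVE, COMPLETED, FAILED, CANCELLED = "active", "completed", "failed", "cancelled"


@dataclass(eq=False)  # identity comparison: tasks are nodes in a tree, not values
class Node:
    name: str
    essential: bool = True
    phases: List[List["Node"]] = field(default_factory=list)  # phases of the applied method
    alternative_methods: int = 0  # hypothetical: other methods whose preconditions hold
    parent: Optional["Node"] = None
    status: str = ACTIVE

    def add_phase(self, *children: "Node") -> None:
        for child in children:
            child.parent = self
        self.phases.append(list(children))


def cancel(node: Node) -> None:
    """Terminate an unfinished task and, recursively, its unfinished subtasks."""
    if node.status != ACTIVE:
        return
    node.status = CANCELLED
    for phase in node.phases:
        for child in phase:
            cancel(child)


def task_failed(task: Node, repair_list: List[Node]) -> None:
    task.status = FAILED
    if task.phases and task.alternative_methods > 0:
        repair_list.append(task)  # compound task with another applicable method: repair it
        return
    subtask_failed(task, repair_list)


def subtask_failed(task: Node, repair_list: List[Node]) -> None:
    parent = task.parent
    if parent is None:
        return  # the root task has failed; there is nothing above it to notify
    phase = next(p for p in parent.phases if task in p)
    essential_failed = any(t.essential and t.status == FAILED for t in phase)
    no_essential = not any(t.essential for t in phase)
    all_failed = all(t.status == FAILED for t in phase)
    if essential_failed or (no_essential and all_failed):
        for p in parent.phases:  # terminate every unfinished task of the parent's method
            for t in p:
                cancel(t)
        task_failed(parent, repair_list)  # propagate one level up


def build():
    root = Node("win-game")
    capture = Node("capture-blockhouse", alternative_methods=1)
    lure, attack = Node("luring-enemy", essential=False), Node("attacking")
    capture.add_phase(lure, attack)  # assumed: both subtasks in one concurrent phase
    root.add_phase(capture)
    return root, capture, lure, attack


root, capture, lure, attack = build()
repairs: List[Node] = []
task_failed(lure, repairs)    # non-essential failure: attacking keeps running
print(attack.status, [n.name for n in repairs])  # active []

root, capture, lure, attack = build()
repairs = []
task_failed(attack, repairs)  # essential failure: luring is cancelled, parent queued
print(lure.status, [n.name for n in repairs])    # cancelled ['capture-blockhouse']
```

The propagation stops at the first ancestor that can be repaired, which is what keeps the set of terminated tasks local to the failure.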

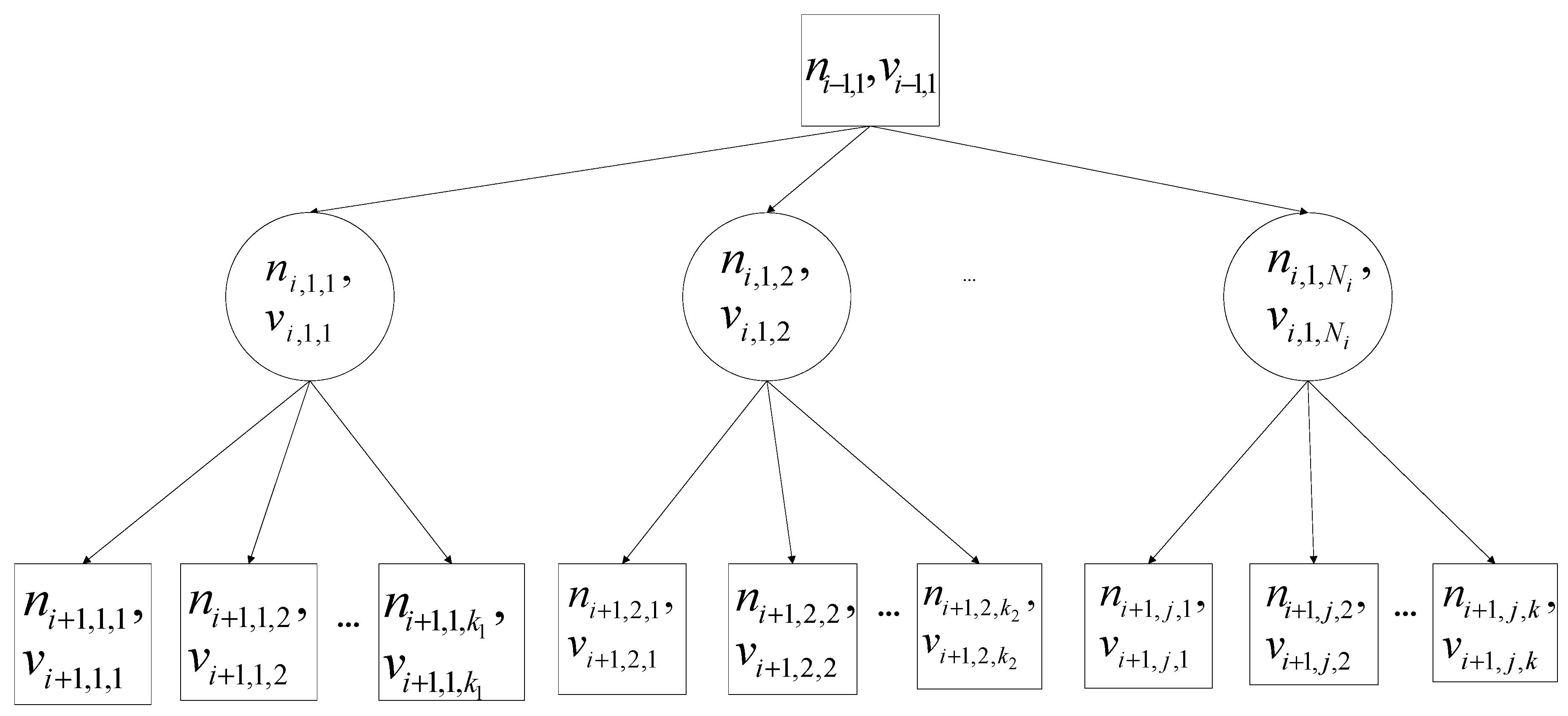

4.3. Task Repair Strategy Based on the Priorities of the Alternative Plans

1. If $n_{i,j}$ (i.e., the parent node) is a max node, its subnodes (child nodes) are ordered from left to right in decreasing order of their evaluations;

2. If $n_{i,j}$ is a min node, its subnodes are ordered from left to right in increasing order of their evaluations.

| Algorithm 6 SortedPlan(root) |
|---|
| 1. Get the list L of all subnodes of root |
| 2. If L is empty then |
| 3. Return |
| 4. End If |
| 5. If root is a max node then |
| 6. Sort L decreasingly according to subnode evaluations |
| 7. Else |
| 8. Sort L increasingly according to subnode evaluations |
| 9. End If |
| 10. For each n in L |
| 11. SortedPlan(n) |
| 12. End For |
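The two ordering rules and Algorithm 6 translate directly into a recursive in-place sort. The sketch below is an illustration, not the authors' code: `PlanNode`, `sorted_plan`, and `next_alternative` are hypothetical names, and `next_alternative` is only one simple assumption about how a repair could pick the highest-priority remaining sibling once the tree is sorted.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class PlanNode:
    label: str
    evaluation: float
    is_max: bool                                   # True for a max node, False for a min node
    children: List["PlanNode"] = field(default_factory=list)


def sorted_plan(root: PlanNode) -> None:
    """Reorder every node's children in place, following the two ordering rules."""
    if not root.children:
        return
    # Max node: decreasing evaluations; min node: increasing evaluations.
    root.children.sort(key=lambda n: n.evaluation, reverse=root.is_max)
    for child in root.children:
        sorted_plan(child)


def next_alternative(parent: PlanNode, failed_label: str) -> Optional[PlanNode]:
    """Hypothetical repair rule: the leftmost sibling that is not the failed one."""
    return next((n for n in parent.children if n.label != failed_label), None)


tree = PlanNode("root", 0.0, True, [
    PlanNode("a", 0.2, False, [PlanNode("a1", 0.3, True), PlanNode("a2", -0.1, True)]),
    PlanNode("b", 0.9, False),
    PlanNode("c", 0.5, False),
])
sorted_plan(tree)
print([n.label for n in tree.children])              # ['b', 'c', 'a']
print([n.label for n in tree.children[2].children])  # ['a2', 'a1']
print(next_alternative(tree, "b").label)             # c
```

Because the ordering is done once during planning, a repair only has to scan siblings from the left rather than re-running the search.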

5. Experiments

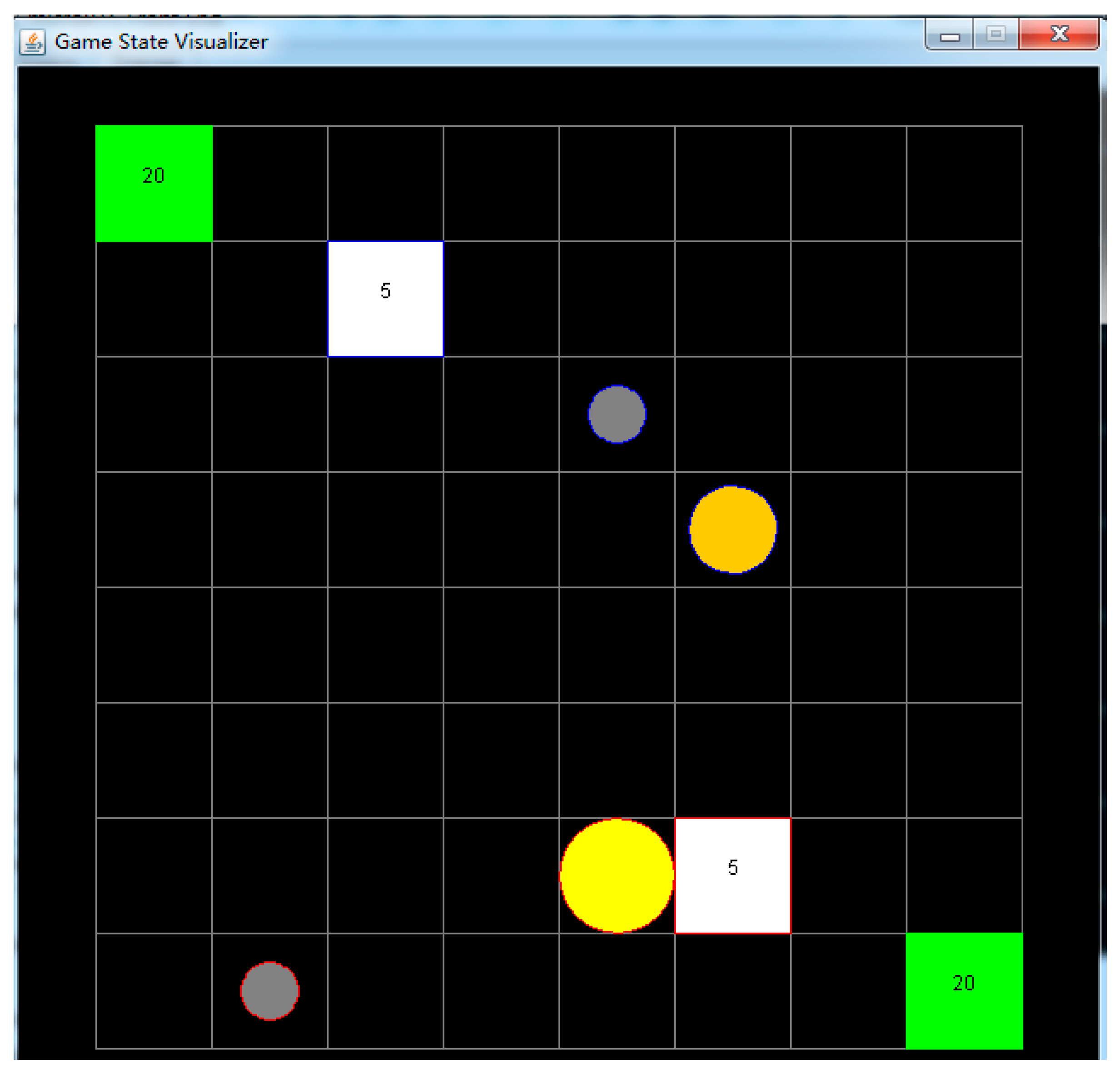

5.1. Experimental Environment and Settings

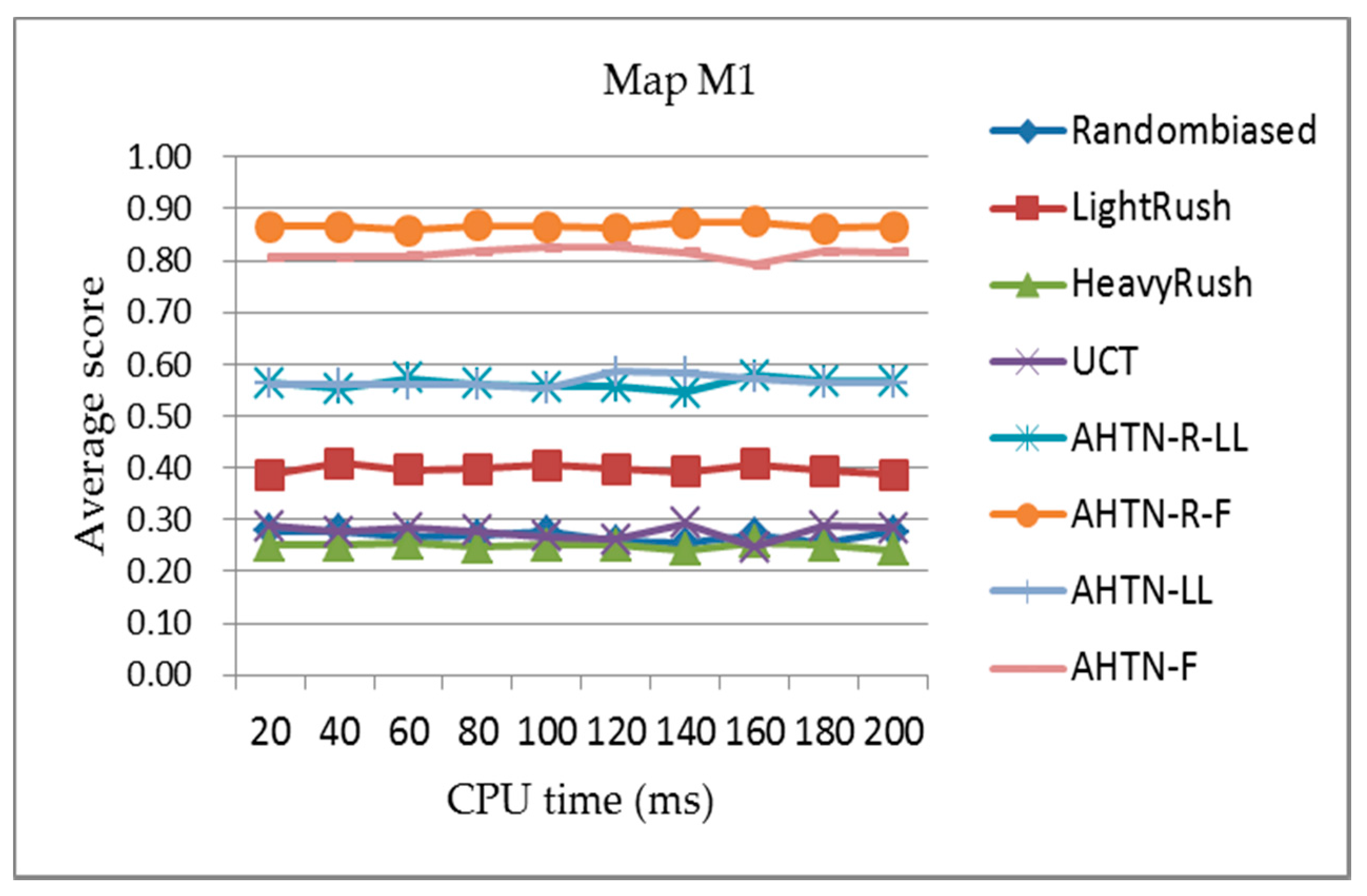

- Randombiased: An AI player that selects actions at random according to a biased probability distribution.

- LightRush: A hard-coded strategy. This AI player produces light attackers, and commands them to attack the enemy immediately.

- HeavyRush: A hard-coded strategy. This AI player produces heavy attackers, and commands them to attack the enemy immediately.

- UCT: We employ an implementation with the extension for accommodating simultaneous and durative actions [10].

- CPU time: The maximum amount of CPU time allowed for an AI player per game frame. In our experiments, CPU time settings ranging from 20 to 200 ms are used to test the performance of the algorithms.

- Playout policy: The Randombiased playout policy is employed for the AHTN-R, AHTN, and UCT algorithms in our experiments [10].

- Playout time: The maximum running time of a playout. The playout time is 100 cycles.

- Pathfinding algorithm: In our experiments, the AI player employs the A* pathfinding algorithm to obtain the path from a current location to a destination location.

- Maximum game time: The maximum game time is limited to 3000 cycles. This means that, if both players have living units at the 3000th cycle, the game is declared a tie.

- Maps: The three maps used in our experiments are M1 (8 × 8 tiles), M2 (12 × 12 tiles), and M3 (16 × 16 tiles).

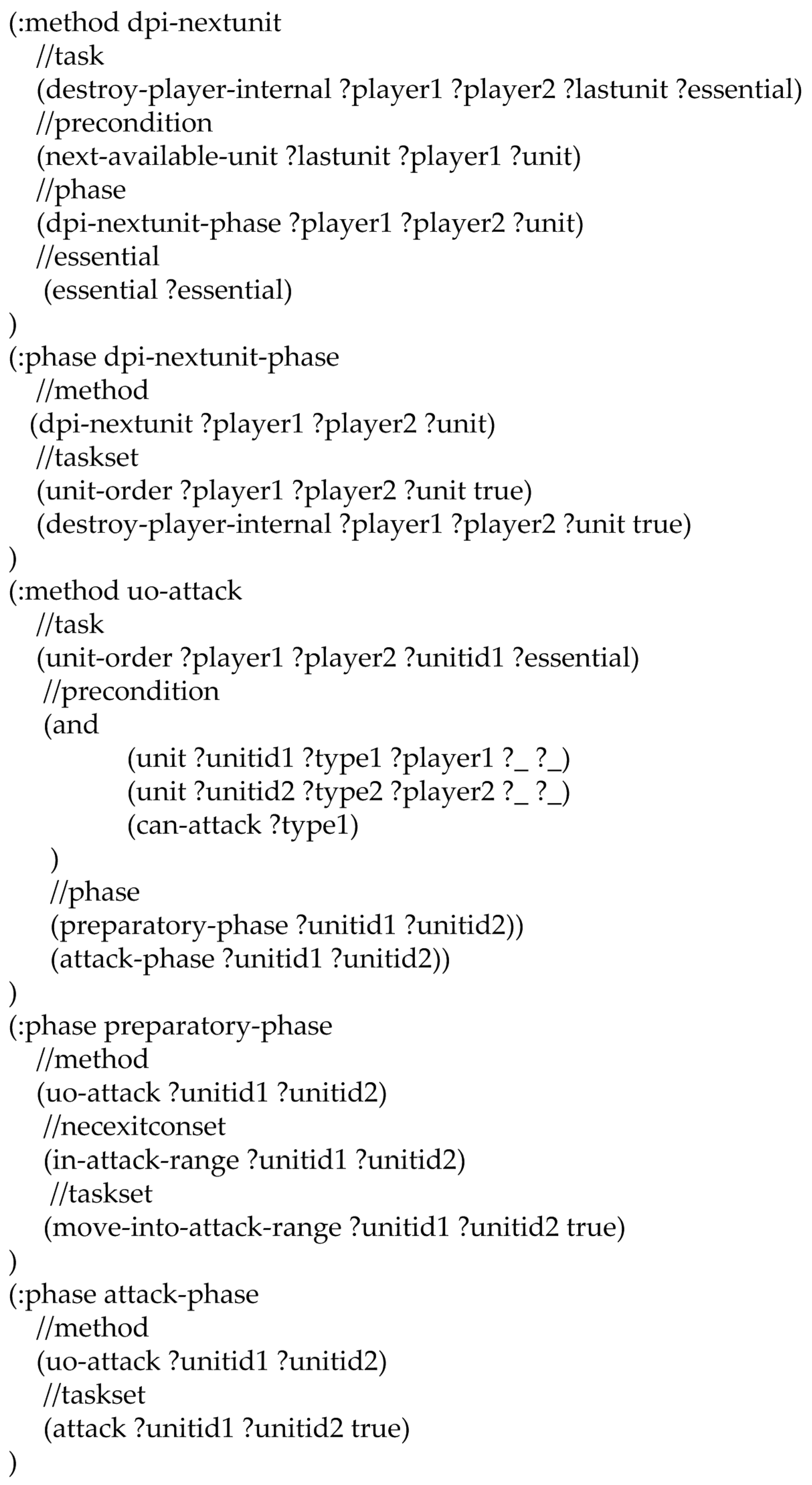

- Low Level: contains 12 operators (primitive tasks) and 9 methods for 3 types of tasks.

- Flexible: contains the same 12 operators as the Low Level domain, but provides 49 methods for 9 types of tasks. This allows methods to execute tasks in parallel.

5.2. Experimental Results and Analysis

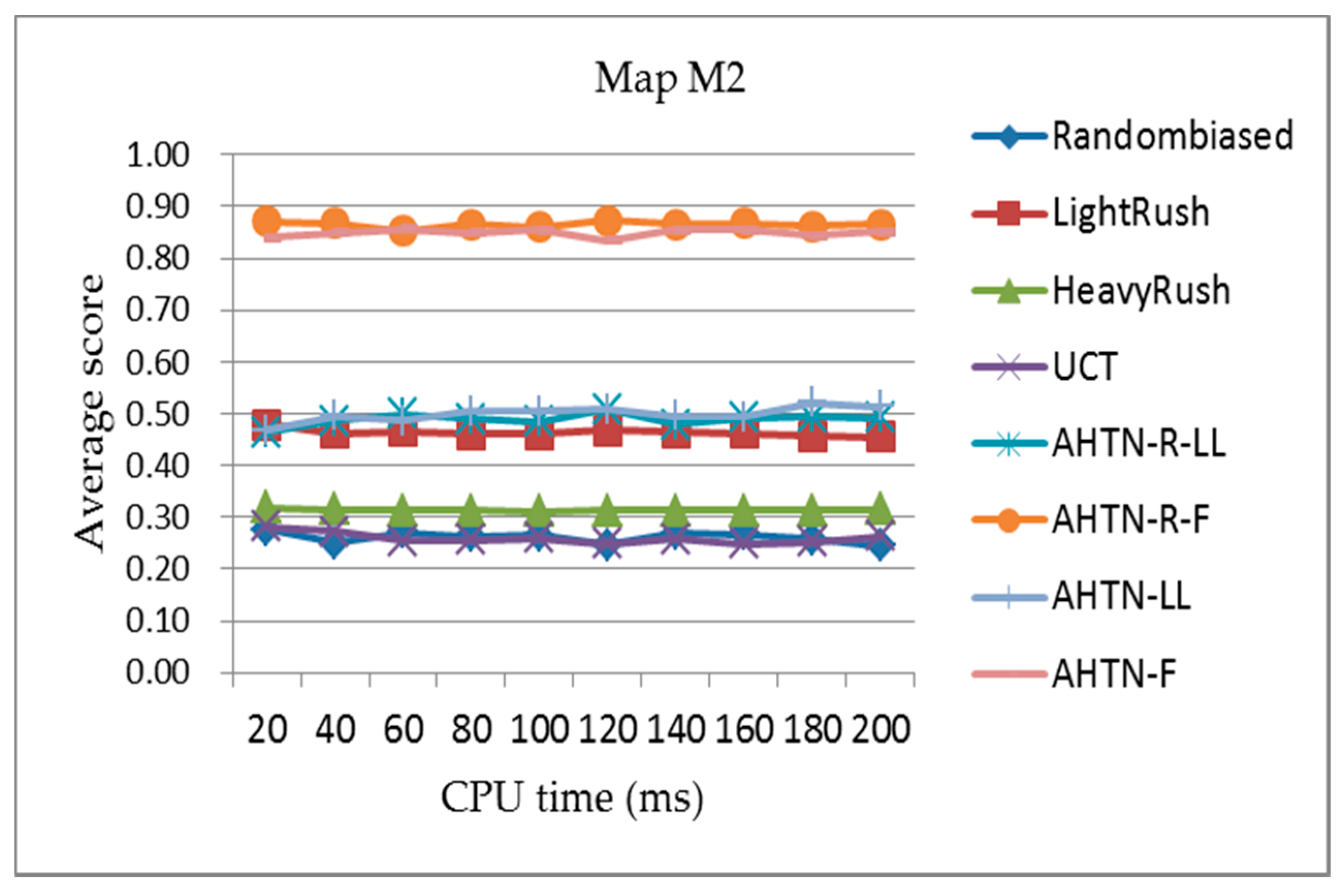

5.2.1. Average Score under Different CPU Time Settings for the Three Maps

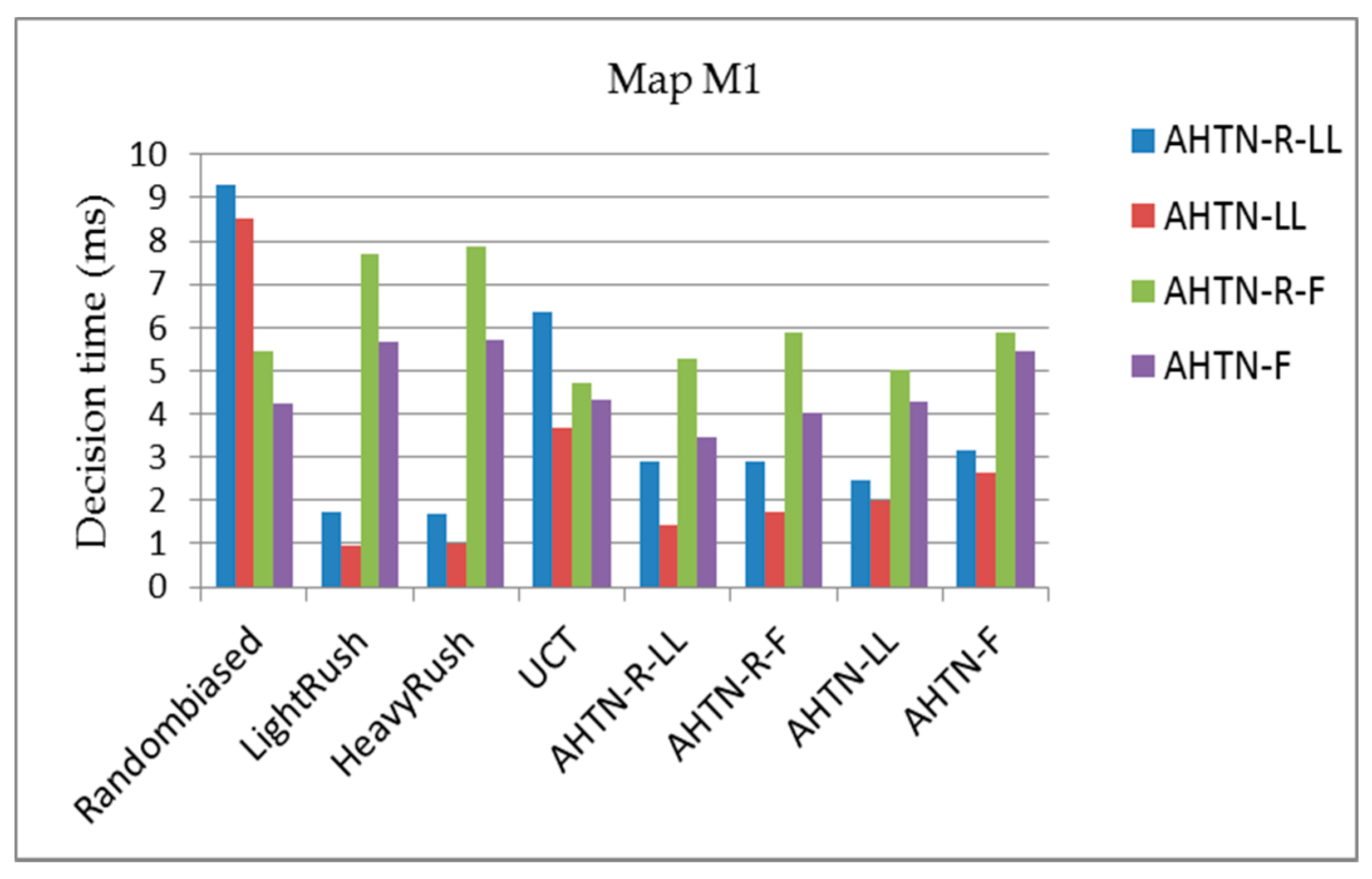

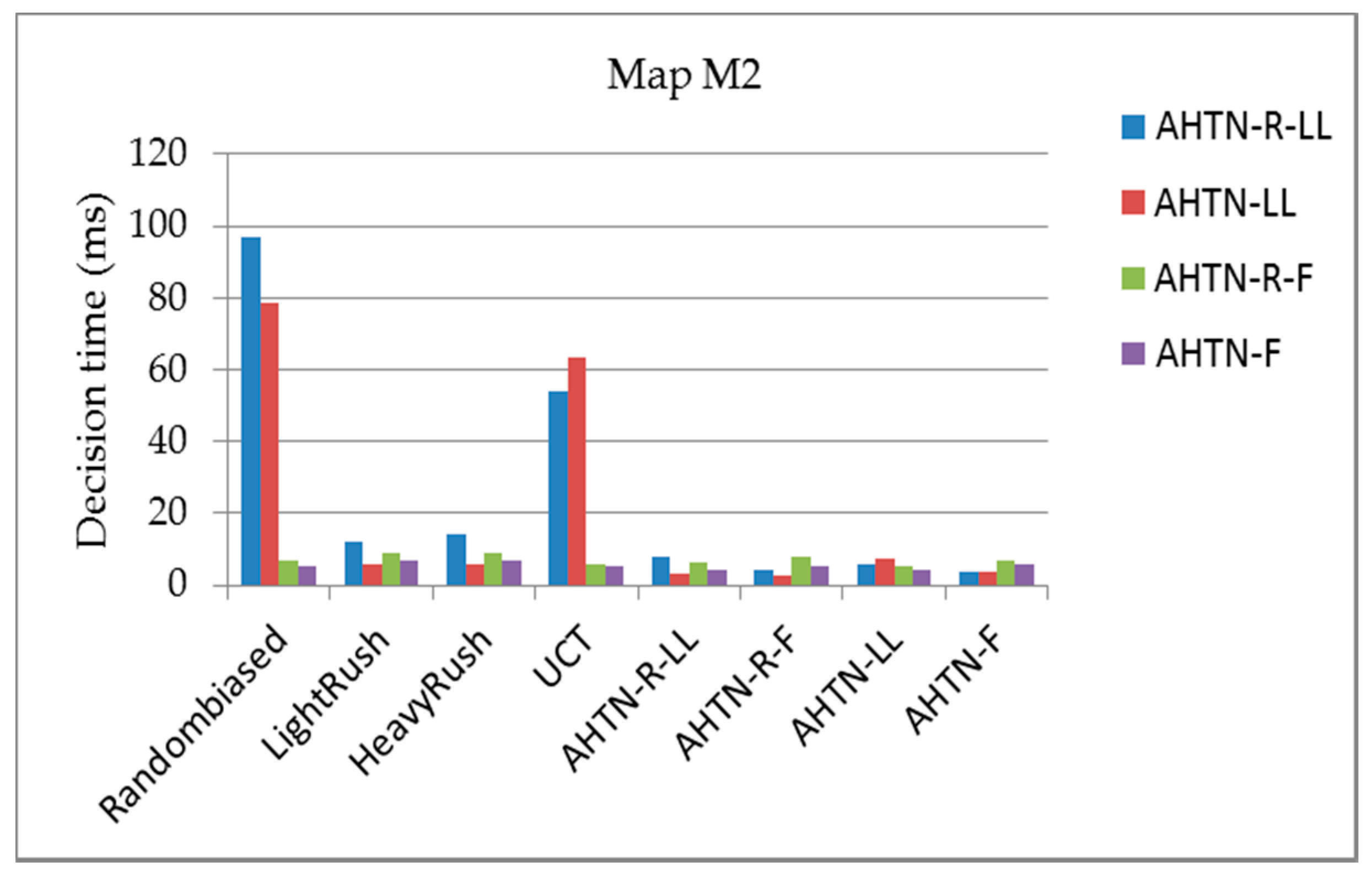

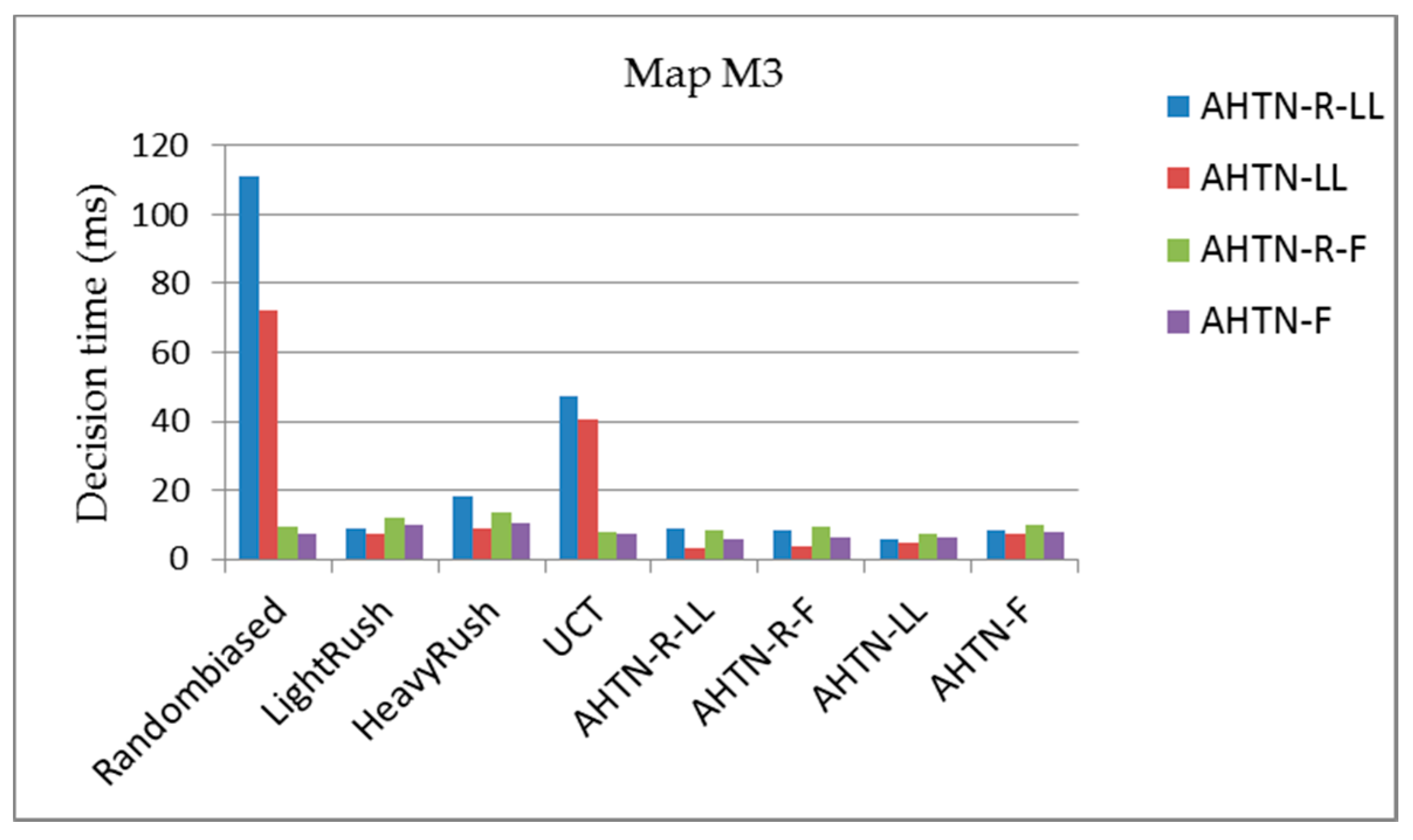

5.2.2. Average Decision Time for the Three Maps

5.2.3. Average Failed Task Repair Rate for the Three Maps

6. Conclusions and Future Work

- (1) An extended HTN description that introduces three additional elements, namely essential task, phase, and exit conditions, is used to express complex relationships among tasks and to accommodate the impact of the environment.

- (2) A monitoring strategy based on the extended HTN description is employed in AHTN-R to identify and terminate all tasks affected by a failed task while keeping the affected region as local as possible. AHTN-R searches for and terminates the affected tasks from the bottom up until it reaches a parent task that has an applicable alternative method. The strategy is designed to limit the number of tasks affected by the failed task.

- (3) A novel task repair strategy based on a prioritized list of alternative plans is used in AHTN-R to repair failed tasks. During planning, AHTN-R saves all generated plans in the game search tree and sorts them according to their primary features. When a plan fails, AHTN-R selects the alternative plan with the highest priority from its saved plans to repair the failed task.

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Buro, M. Real-time strategy games: A new AI research challenge. In Proceedings of the 18th International Joint Conference on Artificial Intelligence, Acapulco, Mexico, 9–15 August 2003; Morgan Kaufmann Publishers Inc.: San Francisco, CA, USA, 2003; pp. 1534–1535.

- Ontañón, S.; Synnaeve, G.; Uriarte, A.; Richoux, F.; Churchill, D.; Preuss, M. A survey of real-time strategy game AI research and competition in StarCraft. IEEE Trans. Comput. Intell. AI Games 2013, 5, 293–311.

- Knuth, D.E.; Moore, R.W. An analysis of alpha-beta pruning. Artif. Intell. 1975, 6, 293–326.

- Ontañón, S. Experiments with game tree search in real-time strategy games. arXiv, 2012; arXiv:1208.1940.

- Chung, M.; Buro, M.; Schaeffer, J. Monte Carlo planning in RTS games. In Proceedings of the 2005 IEEE Symposium on Computational Intelligence and Games (CIG05), Essex University, Colchester, Essex, UK, 4–6 April 2005; pp. 117–172.

- Balla, R.K.; Fern, A. UCT for tactical assault planning in real-time strategy games. In Proceedings of the 21st International Joint Conference on Artificial Intelligence, Pasadena, CA, USA, 11–17 July 2009; AAAI Press: Palo Alto, CA, USA, 2009; pp. 40–45.

- Churchill, D.; Saffidine, A.; Buro, M. Fast Heuristic Search for RTS Game Combat Scenarios. In Proceedings of the Artificial Intelligence and Interactive Digital Entertainment (AIIDE), Stanford, CA, USA, 8–12 October 2012.

- Ontañón, S. The combinatorial multi-armed bandit problem and its application to real-time strategy games. In Proceedings of the 9th Artificial Intelligence and Interactive Digital Entertainment Conference (AIIDE), Boston, MA, USA, 14–18 October 2013.

- Shleyfman, A.; Komenda, A.; Domshlak, C. On combinatorial actions and CMABs with linear side information. In Proceedings of the 21st European Conference on Artificial Intelligence, Prague, Czech Republic, 18–22 August 2014.

- Ontañón, S.; Buro, M. Adversarial hierarchical-task network planning for complex real-time games. In Proceedings of the 24th International Joint Conference on Artificial Intelligence, Buenos Aires, Argentina, 25–31 July 2015; AAAI Press: Palo Alto, CA, USA, 2015.

- Sacerdoti, E.D. The nonlinear nature of plans. In Proceedings of the 4th International Joint Conference on Artificial Intelligence, Tbilisi, Georgia, 3–8 September 1975; No. SRI-TN-101. Morgan Kaufmann Publishers Inc.: San Francisco, CA, USA, 1975.

- Nau, D.; Cao, Y.; Lotem, A.; Muñoz-Avila, H. SHOP: Simple hierarchical ordered planner. In Proceedings of the 16th International Joint Conference on Artificial Intelligence-Volume 2, Stockholm, Sweden, 31 July–6 August 1999; Morgan Kaufmann Publishers Inc.: San Francisco, CA, USA, 1999; pp. 968–973.

- Nau, D.S.; Ilghami, O.; Kuter, U.; Murdock, J.W.; Wu, D.; Yaman, F. SHOP2: An HTN planning system. J. Artif. Intell. Res. 2003, 20, 379–404.

- Kelly, J.-P.; Botea, A.; Koenig, S. Planning with hierarchical task networks in video games. In Proceedings of the ICAPS-07 Workshop on Planning in Games, Providence, RI, USA, 22–26 September 2007.

- Menif, A.; Jacopin, E.; Cazenave, T. SHPE: HTN Planning for Video Games. In Proceedings of the Third Workshop on Computer Games, Prague, Czech Republic, 18 August 2014.

- Soemers, D.J.N.J.; Winands, M.H.M. Hierarchical Task Network Plan Reuse for Video Games. In Proceedings of the 2016 IEEE Conference on Computational Intelligence and Games (CIG), Santorini, Greece, 20–23 September 2016.

- Humphreys, T. Exploring HTN planners through examples. In Game AI Pro: Collected Wisdom of Game AI Professionals; A K Peters, Ltd.: Natick, MA, USA, 2013; pp. 149–167.

- Muñoz-Avila, H.; Aha, D. On the role of explanation for hierarchical case-based planning in real-time strategy games. In Proceedings of the ECCBR-04 Workshop on Explanations in CBR, Madrid, Spain, 30 August–2 September 2004; Springer: Berlin, Germany, 2004.

- Spring RTS. Available online: http://springrts.com (accessed on 1 February 2016).

- Laagland, J. A HTN Planner for a Real-Time Strategy Game. 2014. Available online: http://hmi.ewi.utwente.nl/verslagen/capita-selecta/CS-Laagland-Jasper.pdf (accessed on 20 October 2015).

- Naveed, M.; Kitchin, D.E.; Crampton, A. A hierarchical task network planner for pathfinding in real-time strategy games. In Proceedings of the Third International Symposium on AI & Games, Leicester, UK, 29 March–1 April 2010.

- Sánchez-Garzón, I.; Fdez-Olivares, J.; Castillo, L. A Repair-Replanning Strategy for HTN-Based Therapy Planning Systems. 2011. Available online: https://decsai.ugr.es/~faro/LinkedDocuments/FinalSubmission_DC_AIME11.pdf (accessed on 5 October 2015).

- Gateau, T.; Lesire, C.; Barbier, M. HiDDeN: Cooperative plan execution and repair for heterogeneous robots in dynamic environments. In Proceedings of the 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems, Tokyo, Japan, 3–7 November 2013; IEEE: Piscataway, NJ, USA, 2013.

- Ayan, N.F.; Kuter, U.; Yaman, F.; Goldman, R.P. HOTRiDE: Hierarchical ordered task replanning in dynamic environments. In Proceedings of the 3rd Workshop on Planning and Plan Execution for Real-World Systems, Providence, RI, USA, 22–26 September 2007.

- Erol, K.; Hendler, J.A.; Nau, D.S. UMCP: A Sound and Complete Procedure for Hierarchical Task-network Planning. In Proceedings of the Second International Conference on Artificial Intelligence Planning Systems, Chicago, IL, USA, 13–15 June 1994.

- Lin, S. AHTN-R. Available online: https://github.com/mksl163/AHTN-R (accessed on 20 August 2017).

- Ontañón, S. microRTS. 2016. Available online: https://github.com/santiontanon/microrts (accessed on 22 February 2016).

- Churchill, D.; Buro, M. Portfolio greedy search and simulation for large scale combat in StarCraft. In Proceedings of the 2013 IEEE Conference on Computational Intelligence in Games (CIG), Niagara Falls, ON, Canada, 11–13 August 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 1–8.

- Zhuo, H.H.; Hu, D.H.; Hogg, C.; Yang, Q.; Muñoz-Avila, H. Learning HTN method preconditions and action models from partial observations. In Proceedings of the 21st International Joint Conference on Artificial Intelligence, Pasadena, CA, USA, 11–17 July 2009; Morgan Kaufmann Publishers Inc.: San Francisco, CA, USA, 2009; pp. 1804–1810.

- Kuter, U.; Nau, D. Forward-chaining planning in nondeterministic domains. In Proceedings of the 19th National Conference on Artificial Intelligence, San Jose, CA, USA, 25–29 July 2004; AAAI Press: Palo Alto, CA, USA, 2004.

- Kuter, U.; Nau, D. Using domain-configurable search control for probabilistic planning. In Proceedings of the National Conference on Artificial Intelligence, Pittsburgh, PA, USA, 9–13 July 2005; AAAI Press: Palo Alto, CA, USA, 2005; pp. 1169–1174.

- Bonet, B.; Geffner, H. Planning with incomplete information as heuristic search in belief space. In Proceedings of the 5th International Conference on Artificial Intelligence Planning Systems, Breckenridge, CO, USA, 14–17 April 2000; AAAI Press: Palo Alto, CA, USA, 2000; pp. 52–61.

- Bonet, B.; Geffner, H. Labeled RTDP: Improving the Convergence of Real-Time Dynamic Programming. In Proceedings of the Thirteenth International Conference on Automated Planning and Scheduling, Trento, Italy, 9–13 June 2003; AAAI Press: Palo Alto, CA, USA, 2003; Volume 3.

- Bertsekas, D.P. Dynamic Programming and Optimal Control; Athena Scientific: Belmont, MA, USA, 1995; Volume 1.

| AI Player | M1 | M2 | M3 |
|---|---|---|---|
| Randombiased | 0.1682 | 0.0003 | 0.0003 |
| LightRush | 0.0381 | 0.0078 | 0.0105 |
| HeavyRush | 0.0355 | 0.0066 | 0.0070 |
| UCT | 0.0498 | 0.0057 | 0.0060 |
| AHTN-R-LL | 0.0202 | 0.0128 | 0.0137 |
| AHTN-R-F | 0.034 | 0.0188 | 0.0103 |
| AHTN-LL | 0.0221 | 0.0103 | 0.0114 |
| AHTN-F | 0.0176 | 0.0174 | 0.0071 |

| AI Player | M1 | M2 | M3 |
|---|---|---|---|
| Randombiased | 0.0265 | 0.0290 | 0.0228 |
| LightRush | 0.0226 | 0.0259 | 0.0217 |
| HeavyRush | 0.0216 | 0.0275 | 0.0219 |
| UCT | 0.027 | 0.0274 | 0.0275 |
| AHTN-R-LL | 0.0312 | 0.0348 | 0.0391 |
| AHTN-R-F | 0.0302 | 0.0353 | 0.0272 |
| AHTN-LL | 0.0232 | 0.0279 | 0.0283 |
| AHTN-F | 0.0192 | 0.0263 | 0.0141 |

| AI Player | M1 | M2 | M3 |
|---|---|---|---|
| Randombiased | 0.262 | 0.278 | 0.227 |
| LightRush | - | 0.324 | 0.037 |
| HeavyRush | - | 0.929 | 0 |
| UCT | 0.618 | 0.591 | 0.553 |
| AHTN-R-LL | 0 | 0 | 0 |
| AHTN-R-F | 0.005 | 0 | 0 |
| AHTN-LL | 0.355 | 0.343 | 0.373 |
| AHTN-F | 0.236 | 0.233 | 0.213 |

| AI Player | M1 | M2 | M3 |
|---|---|---|---|
| Randombiased | 0.904 | 0.886 | 0.852 |
| LightRush | - | 0.753 | 0.449 |
| HeavyRush | 1 | 1 | 0.767 |
| UCT | 0.945 | 0.907 | 0.906 |
| AHTN-R-LL | 0.008 | 0.044 | 0 |
| AHTN-R-F | 0.016 | 0.01 | 0.018 |
| AHTN-LL | 0.401 | 0.618 | 0.697 |
| AHTN-F | 0.407 | 0.373 | 0.452 |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Sun, L.; Jiao, P.; Xu, K.; Yin, Q.; Zha, Y. Modified Adversarial Hierarchical Task Network Planning in Real-Time Strategy Games. Appl. Sci. 2017, 7, 872. https://doi.org/10.3390/app7090872
