Using a Combination of Spectral and Textural Data to Measure Water-Holding Capacity in Fresh Chicken Breast Fillets

Abstract

1. Introduction

2. Materials and Methods

2.1. Sample Preparation

2.2. Measurement of WHC and Sample Classification

2.3. Image Acquisition and Correction

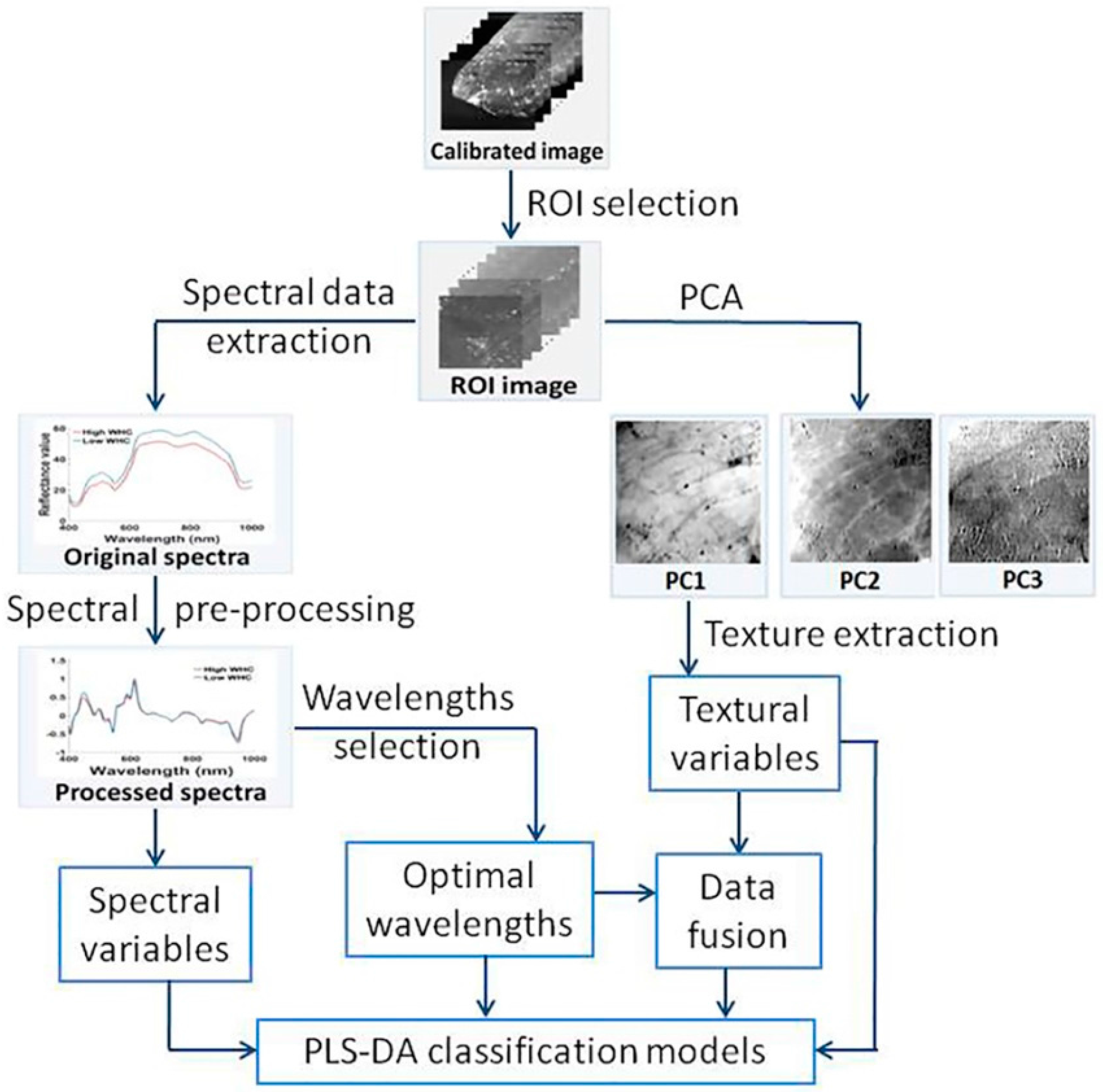

2.4. Spectral Data Extraction and Pre-Processing

2.5. Textural Feature Extraction

2.6. Combination of Spectral and Textural Data

2.7. Development of Classification Models

3. Results and Discussion

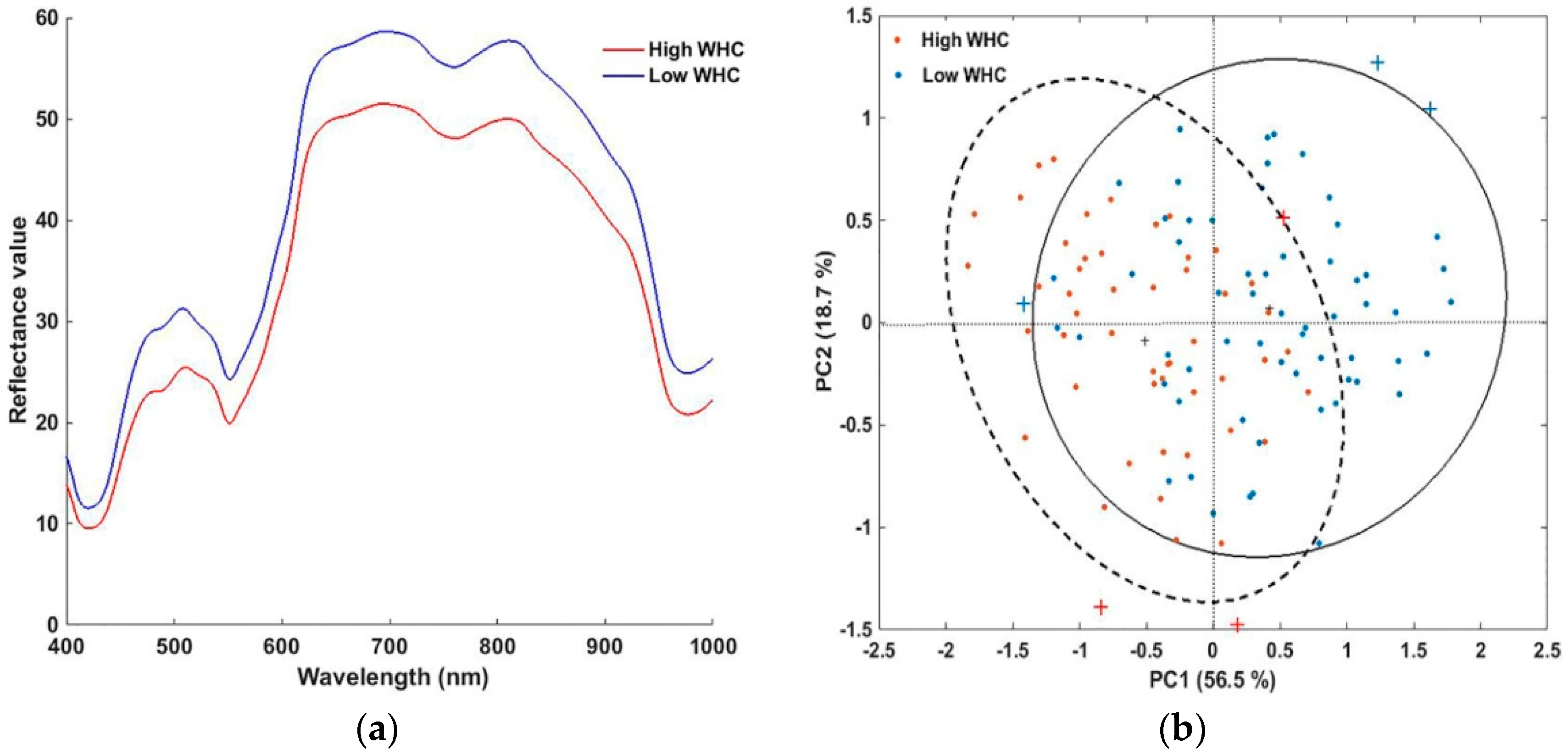

3.1. Spectral Features and Pre-Processing

3.2. Statistics of Measured Drip Loss Values

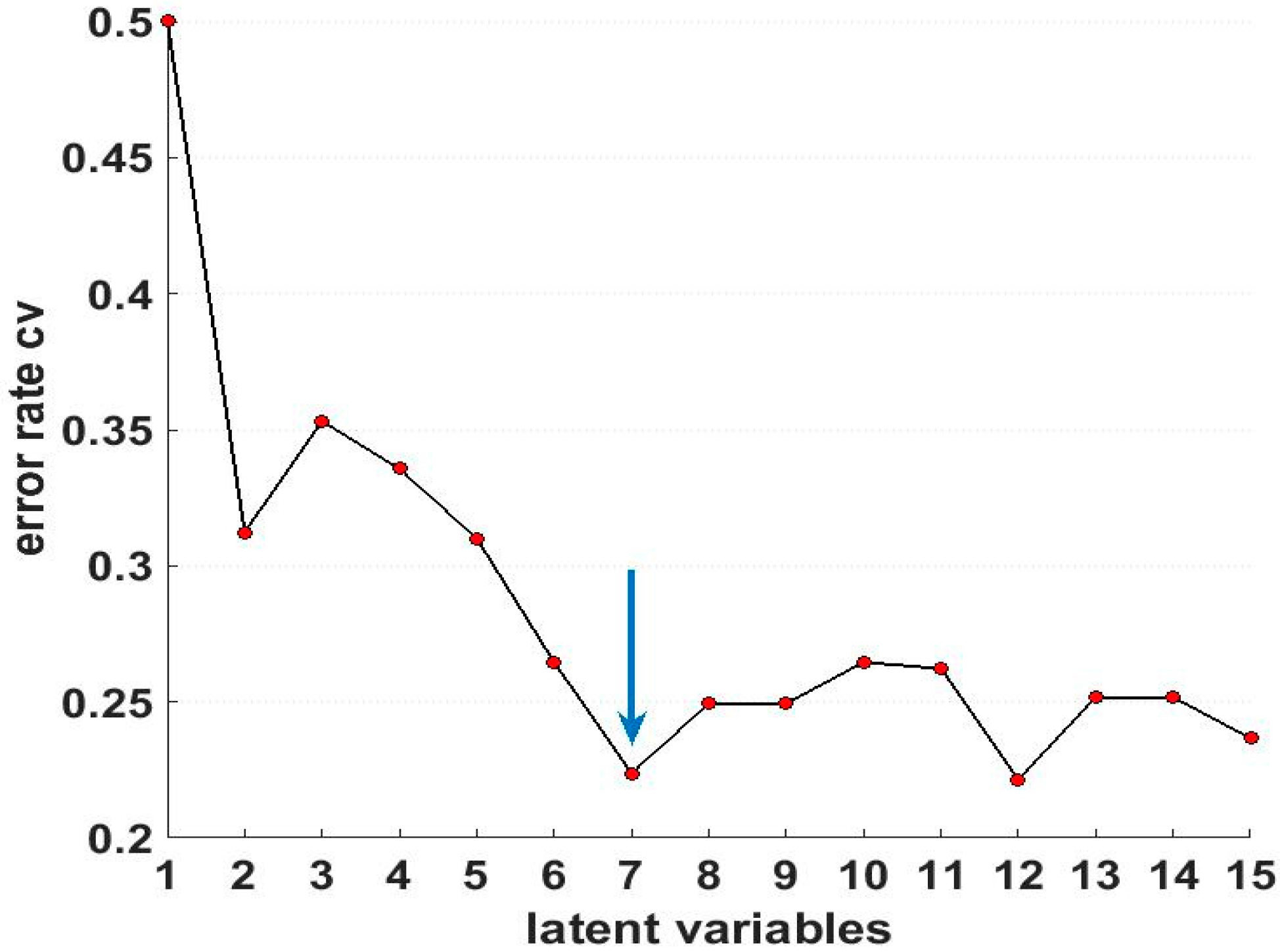

3.3. Modeling Based on Spectral Data

3.4. Modeling Based on Texture Data

3.5. Modeling Based on Data Fusion

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Parameters | Min | Max | Mean | SD |
|---|---|---|---|---|
| High WHC | 0.03 | 0.94 | 0.57 | 0.22 |
| Low WHC | 0.97 | 5.90 | 2.22 | 1.16 |

| Features | Variable Numbers | Latent Variables | CCR (%), Calibration | CCR (%), Cross Validation | CCR (%), Prediction |
|---|---|---|---|---|---|
| Full spectra | 472 | 7 | 85 | 78 | 78 |
| Optimal spectra | 7 | 6 | 76 | 72 | 73 |
| Texture data | 16 | 5 | 64 | 53 | 59 |
| Data fusion | 23 | 8 | 90 | 83 | 86 |
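The variable counts in the table above are consistent with a feature-level fusion in which the 7 optimal spectral variables and the 16 texture variables are placed side by side to give 23 variables per sample. The following is a minimal sketch of that idea only, assuming simple concatenation; the function name `fuse_features` and the random placeholder values are hypothetical and are not taken from the paper.

```python
import random

N_SPECTRAL = 7   # variables in the "Optimal spectra" row of the table
N_TEXTURE = 16   # variables in the "Texture data" row of the table


def fuse_features(spectral, texture):
    """Concatenate per-sample spectral and textural feature rows.

    Assumption: fusion is plain column-wise concatenation; any scaling
    applied before modeling is not shown here.
    """
    if len(spectral) != len(texture):
        raise ValueError("both blocks must describe the same samples")
    return [list(s) + list(t) for s, t in zip(spectral, texture)]


# Synthetic placeholder data (hypothetical values, not from the paper).
rng = random.Random(0)
n_samples = 5
spectral = [[rng.random() for _ in range(N_SPECTRAL)] for _ in range(n_samples)]
texture = [[rng.random() for _ in range(N_TEXTURE)] for _ in range(n_samples)]

fused = fuse_features(spectral, texture)
print(len(fused), len(fused[0]))  # prints: 5 23
```

Because the two blocks are typically on different numeric scales, a real pipeline would likely standardize each column before fitting a classifier; that step is omitted because the text here does not specify it.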

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Jia, B.; Wang, W.; Yoon, S.-C.; Zhuang, H.; Li, Y.-F. Using a Combination of Spectral and Textural Data to Measure Water-Holding Capacity in Fresh Chicken Breast Fillets. Appl. Sci. 2018, 8, 343. https://doi.org/10.3390/app8030343
