The Level of Automation in Emergency Quick Disconnect Decision Making

Abstract

1. Introduction

2. Literature Review on the EQD Process

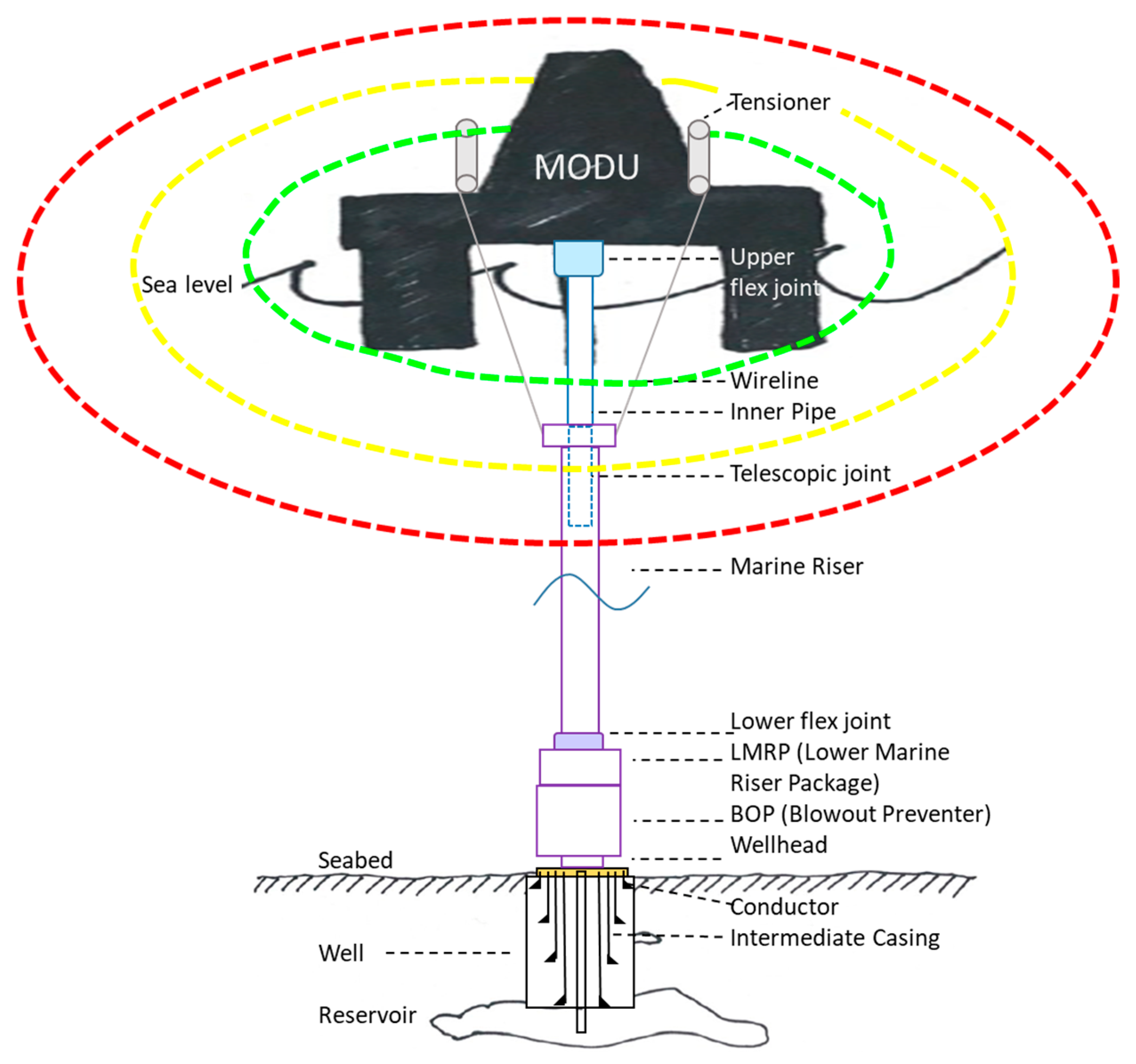

2.1. The Emergency Quick Disconnect Function

2.2. Parameters Related to “Loss of Position”

2.3. Auto-EQD

2.4. The Well Specific Operating Guidelines

2.5. Reported EQD Incidents

2.6. EQD Scenario Development with DP Operators

2.6.1. Stage 1: Information Acquisition: Detect Loss of Position, 10 s

2.6.2. Stage 2: Information Analysis: Diagnose Drive off Event, 5 s

2.6.3. Stage 3: Decision Selection: Decide on Mitigating Actions

2.6.4. Stage 4: Action Implementations: Initiate Emergency Disconnect Sequence

3. Literature Review on the Process of Decision-Making

3.1. The Rational Approach

- The decision maker needs to be capable of generating all possible scenarios and potential outcomes of the situation.

- The decision maker should be able to evaluate differences in the attractiveness of available alternatives.

- It should be possible to aggregate the partial, or local, evaluations into a global evaluation.

- The decision maker chooses the global alternative that has the most favorable evaluation.
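The four conditions above describe a multi-attribute evaluation. A minimal sketch, assuming a simple weighted sum as the aggregation rule; the alternatives, criteria, weights and scores are hypothetical illustrations and are not taken from the study:

```python
from typing import Dict


def global_evaluation(scores: Dict[str, float], weights: Dict[str, float]) -> float:
    """Aggregate partial (per-criterion) evaluations into one global score."""
    return sum(weights[criterion] * scores[criterion] for criterion in weights)


def choose(alternatives: Dict[str, Dict[str, float]], weights: Dict[str, float]) -> str:
    """Return the alternative with the most favorable global evaluation."""
    return max(alternatives, key=lambda name: global_evaluation(alternatives[name], weights))


# Hypothetical weights and partial evaluations (0 = worst, 1 = best).
weights = {"safety": 0.6, "cost": 0.3, "downtime": 0.1}
alternatives = {
    "continue_operations": {"safety": 0.2, "cost": 0.9, "downtime": 0.9},
    "initiate_eqd": {"safety": 0.9, "cost": 0.1, "downtime": 0.2},
}

best = choose(alternatives, weights)
```

The sketch only works when every alternative and every criterion can be listed in advance, which is the first condition of the rational approach.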

3.2. Criticism of Rationalistic Decision Making

- Parsimony: the decision maker uses only a small part of available information.

- Reliability: the information is considered to be sufficiently relevant to justify the decision made.

- Decidability: the information applied in the decision-making process may vary between people and decisions.

3.3. NDM—Recognition Primed Decision Making

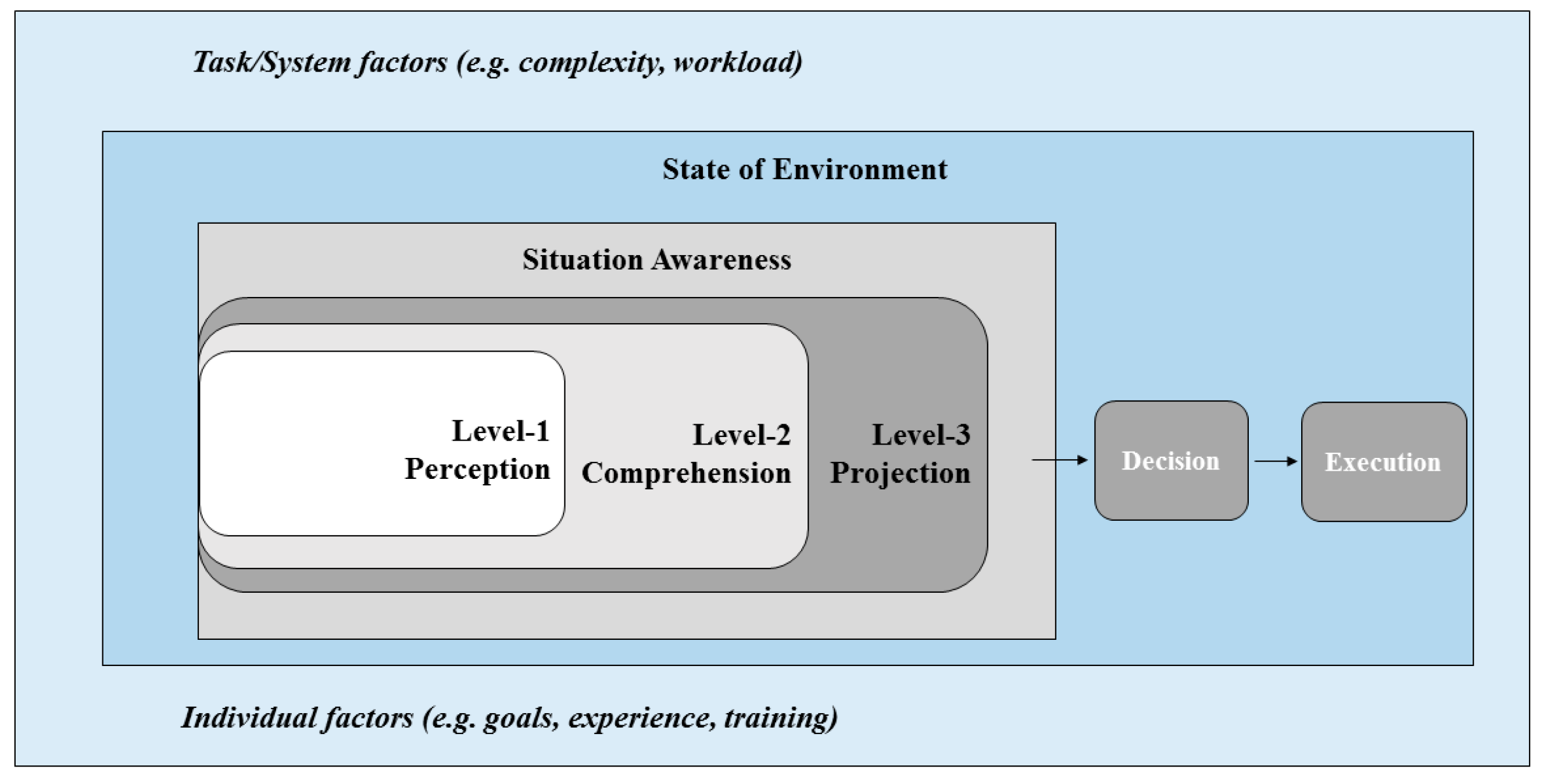

3.4. Situational Awareness

3.5. Computers as Decision Makers

3.6. Challenges with Computers as Decision Makers

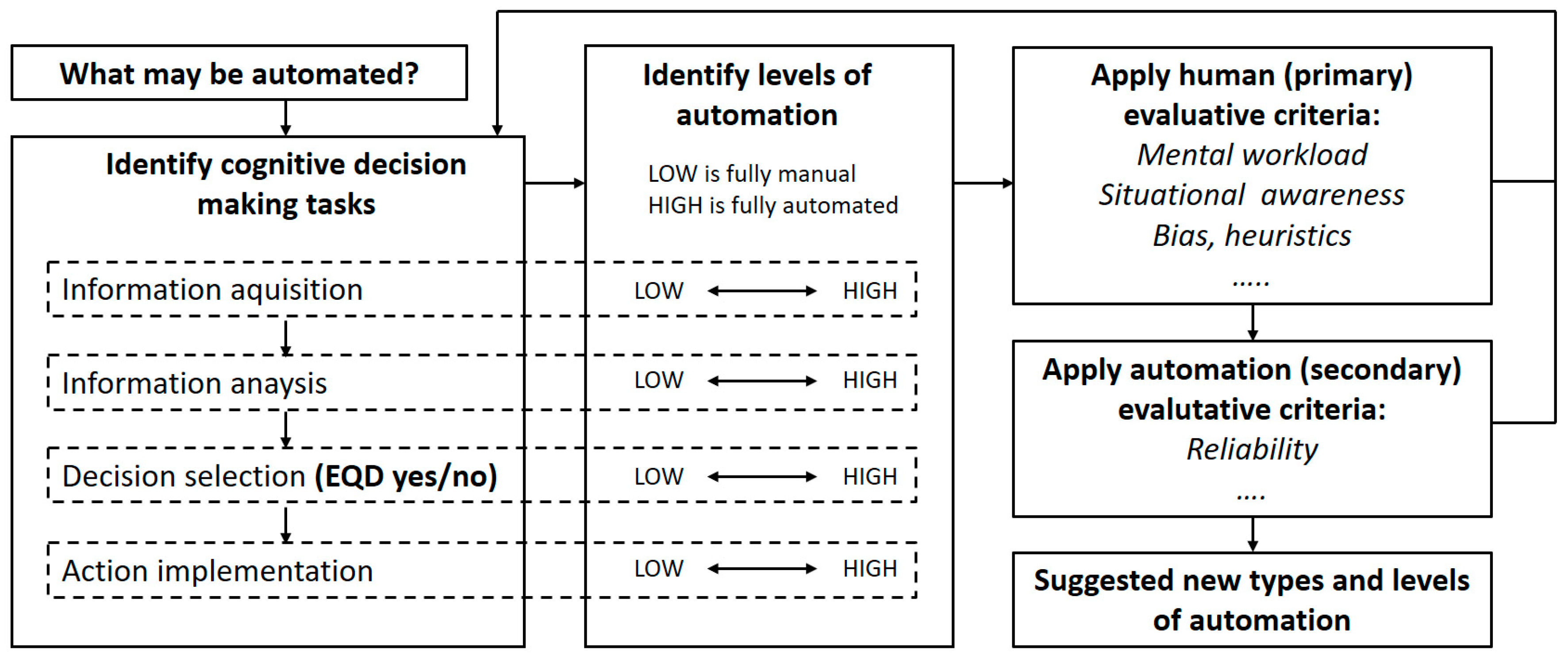

3.7. Allocation of Functions between Human and Machines

4. Research Questions and Method

4.1. Research Framework

4.2. Research Questions

- RQ1: Which information is available and relevant for EQD decision making?

- RQ2: How is the information acquired?

- RQ3: How is the information evaluated and analyzed?

- RQ4: Once decided, how is an EQD implemented?

- RQ5: To what degree is automation applied in the process, i.e., what is the level of automation?

- RQ6: Can increasing the level of automation improve EQD as a safety barrier?

4.3. Methods for Data Collection and Analysis

5. Results

5.1. Interviews of Operators

5.2. Analysis of the Current Level of Automation

5.2.1. Information Acquisition

5.2.2. Information Analysis

5.2.3. Decision Selection

5.2.4. Action Implementation

5.3. Current Level of Automation in EQD

6. Discussion

6.1. Information Acquisition

6.2. Analysis and Decision of Initiating EQD

6.3. Action Implementation

6.4. Limitations and Further Research

7. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Appendix A

| No. | EQD Information Parameter | Explanation | Variability: Static (ST), Dynamic (DY) | Time Perspective: Seconds (S), Minutes (M), Hours (H) | Parameter Source: Human Observation (HO), Monitoring Systems (MS), Expertise (EX) | Operational Rules: Threshold Values (TV), Yes/No (YN), No Rules (NR) |

|---|---|---|---|---|---|---|

| 1 | HV (high voltage) switchboards/blackout | Loss of power means that the MODU cannot hold its position | DY | S | HO, MS | YN |

| 2 | Position offset | Offset from intended position increases strain on riser system and well (deeper water provides more time) | DY | S, M | MS | TV |

| 3 | Heading offset | Offset due to current and winds, might affect how easy it is to operate the vessel | DY | S | MS | NR |

| 4 | Power consumption of each network (3-split configuration) | Helps operator to detect anomalies in critical systems | DY | S | MS | NR |

| 5 | Thruster force on each switchboard | Thruster force is the means for the vessel to move in the wanted direction | DY | S | MS | NR |

| 6 | Position reference available | Needed to keep the vessel in position | DY | S | MS | YN |

| 7 | DP control system operational | A malfunction in the DP system can compromise control of the rig position | DY | S | MS | YN |

| 8 | Wind sensors operational | Wind force may exceed the power of the thrusters, leading to loss of position | DY | M | MS | YN |

| 9 | Motion sensors (MRU) operational | Motion sensor is used for aiding and compensation of vessel motions | DY | S | MS | YN |

| 10 | Heading sensors (Gyro) operational | Provides the heading information from which the DP system computes steering commands | DY | S | MS | YN |

| 11 | DP-UPS operational | UPS offers power protection and energy backup to DP control system | DY | S | MS | YN |

| 12 | IAS System, DP Network operational | IAS is the Integrated Automation System, or the "brain" in the DP system | DY | S | MS | YN |

| 13 | Fire Alarm System status | Malfunction may lead to increased response time in case of fire | DY | S | MS | NR |

| 14 | Communication Systems | Malfunction makes it difficult to coordinate team effort and distribute information | DY | S | MS | YN |

| 15 | Riser limitation UFJ | The UFJ (Upper Flex Joint) is a part of the riser, and indicates the riser strength | DY | S | MS | Not known |

| 16 | Riser limitation LFJ | The LFJ (Lower Flex Joint) is a part of the riser, and indicates the riser strength | DY | S | MS | Not known |

| 17 | Riser angle | The angle between the vertical plane and the riser | DY | S | MS | Not known |

| 18 | Wind speed | Wind force may exceed the power of the thrusters, leading to loss of position | DY | M | MS | NR |

| 19 | Wave height | Increased forces from waves may compromise position, stability and deck operations | DY | M | HO | Not known |

| 20 | Heading Deviation from BOP Landing | Affects the behaviour of the rig at a certain moment in time, helping in assessing if operations may continue or EQD is needed | DY | M | MS | Not known |

| 21 | Presence of unshearable tools/equipment in BOP | May prevent a safe and effective EQD | DY | M | MS | YN |

| 22 | GPS raw data available | May indicate delays or imprecision in the DP system data | DY | S | MS | YN |

| 23 | Other sensor data/parameters (unspecified) | Other information from sensors and instruments, details not known | Not known | Not known | MS | Not known |

| 24 | Sound from thrusters | May help indicate malfunction | DY | S | HO | NR |

| 25 | Sound from bearings | May help indicate malfunction | DY | S | HO | NR |

| 26 | Sound of generators | May help indicate malfunction | DY | S | HO | NR |

| 27 | Position of crew/people | May influence communication and decisions | DY | S | HO, EX | NR |

| 28 | Presence of hydrocarbons on deck | Procedures can be different if there are hydrocarbons on deck | DY | S | MS | NR |

| 29 | Presence of tools/equipment in moonpool | May prevent a safe and effective EQD | DY | S | HO | NR |

| 30 | Areas to be cleared of people if EQD | Moving parts during EQD process may injure people in close proximity | DY | M | EX | NR |

| 31 | Time delay of position updates in DP system | May allow the rig to move beyond limits without proper alarms being activated | DY | S | EX | NR |

| 32 | Delay from activation of thrusters until effect on rig movement (inertia) | Indicates need to anticipate and take preventive action | DY | S | EX | NR |

| 33 | Different systems have different limits | Allows for subjective evaluation of parameters and overall situation | ST | Not relevant | EX | NR |

| 34 | Speed of the rig | Affects the behaviour of the rig at a certain moment in time, helping in assessing if operations may continue or EQD is needed | DY | S | MS | NR |

| 35 | Vessel displacement (weight) | Affects delays in rig movement when exposed to force (acceleration and retardations) | ST | Not relevant | EX | Not relevant |

| 36 | Vessel shape | Affects how rig responds to forces from wind, currents and thrusters | ST | Not relevant | EX | Not relevant |

| 37 | Vessel translation and rotation (3 dimensions) | The resulting behaviour of the rig based on the overall weather situation, helping in assessing if operations may continue or EQD is needed | DY | S | HO | Not relevant |

| 38 | Mechanical properties of riser and BOP (joints, tensioners, connectors, casings) | Provides limitations for vertical and horizontal movement and forces from the rig | ST | Not relevant | EX | Not relevant |

| 39 | Soil model | Provides limitations for vertical and horizontal movement and forces from the rig | ST | Not relevant | EX | Not relevant |

| 40 | Sea current | Resulting forces may exceed the power of the thrusters, leading to loss of position | DY | H | MS | NR |

| 41 | Weather forecast | Helps project to what degree the rig will be exposed to forces from wind, waves and currents | DY | H | Other | NR |

| 42 | High cost of EQD | Operators are aware of the potentially high cost of initiating an EQD, which may delay the decision during a critical incident, or make them push beyond predefined limits | DY | H | EX | NR |

| 43 | Emergencies or blackout on nearby vessels | May drift towards the rig and become a collision hazard | DY | S | HO | NR |

| 44 | Confirmed fire | Depends on location and severity, OIM to evaluate | DY | S | HO, MS | NR |

| 45 | Uncontrollable situation in the well | Normally try to get control using the well control procedures or diverter. EQD activated if the well threatens the safety of the MODU | DY | S | MS | NR |


| | Green | Blue | Yellow | Red |

|---|---|---|---|---|

| Label | Normal operations | Advisory status | Reduced status | Emergency status |

| Definition | All systems are fully operational. Operations are commencing within acceptable limits. | Operations are approaching performance or alarm limits. Operations may continue, but risk must be assessed. And/or there is a failure that does not affect redundancy. | Operational or performance limits are reached, and/or there are component or system failures that result in loss of redundancy. The vessel is not out of position. | Pre-defined operational or performance limits are exceeded, and/or there are critical component or system failures. Loss of control or position. |

| Response | Meet all conditions in the green column in order to commence operations. | Conduct a risk assessment to determine if operations should be adjusted or ceased. | Stop operations, initiate contingency procedures and prepare to disconnect. Operations may be resumed if redundancy is recovered and all operational risks have been assessed. | Cease operations. Take immediate action and initiate EQD sequence. Ensure the safety of people, environment, the vessel and equipment. |

| Example 1: DP position footprint | <5 m | >5 m | 10 m | 15 m |

| Example 2: Riser limitation UFJ | 0–1.5 deg. | 2 deg. | Situation-specific | Situation-specific |

| Example 3: Wind direction | Situation-specific | Situation-specific | Situation-specific | Situation-specific |
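The position-footprint row (Example 1) can be read as a threshold rule. A minimal sketch, assuming the listed values are lower bounds of each status band and that an offset of exactly 5 m still counts as green; both assumptions, the function name and the constant names are illustrative and not taken from a real WSOG:

```python
from enum import Enum


class Status(Enum):
    GREEN = "Normal operations"
    BLUE = "Advisory status"
    YELLOW = "Reduced status"
    RED = "Emergency status"


# Example 1 thresholds from the table, in metres. The treatment of the
# exact boundary values is an assumption of this sketch.
BLUE_LIMIT_M = 5.0
YELLOW_LIMIT_M = 10.0
RED_LIMIT_M = 15.0


def classify_offset(offset_m: float) -> Status:
    """Map a DP position offset (metres) to a status colour."""
    if offset_m < 0:
        raise ValueError("offset must be non-negative")
    if offset_m >= RED_LIMIT_M:
        return Status.RED
    if offset_m >= YELLOW_LIMIT_M:
        return Status.YELLOW
    if offset_m > BLUE_LIMIT_M:
        return Status.BLUE
    return Status.GREEN
```

For instance, `classify_offset(7.0)` returns `Status.BLUE`. Rows marked "Situation-specific" cannot be encoded this way without additional, well-specific limits.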


| Phenomenon | Description |

|---|---|

| Brittleness | Modern socio-technical systems may be so complex that it is almost impossible to define all relevant functions and alternatives, as well as the scope of system limits and relevant interfaces with other systems. |

| Opacity | Technology systems have limited capability to express and explain to the human operator what they are doing and what they are planning to do next. |

| Literalism | Automata stick to the rules and instructions given by their programmers or operators (the process), even if they may lead to obviously undesired outcomes (lack of goal orientation). |

| Clumsiness | The system has little understanding of the work situation of the operator, and thus does not aid when needed or call for attention when operator workload is very high. |

| Data overload | Producing large amounts of information, of which only a small part is useful for the operator. The situation may also be opposite: that the system does not produce information that obviously would be helpful from the perspective of the operator. |

| Role | Vessel | EQD System | Water Depths (m) | Years of Experience | No. of EQD Experienced |

|---|---|---|---|---|---|

| OIM | DP3 rig | Manual | 250–500 | 16 | 1 |

| DPO | DP3 rig | Automatic | 310–360 | 5 | 1 |

| DPO | DP3 ship | Manual | 150–200 | 8 | 0 |

| Expert Drilling Advisor | DP3 rig | Manual | 100–1700 | >20 | 0 |

| No. | Parameter | Input from Role |

|---|---|---|

| 1 | Red lamp and sound alarm | All |

| 2 | DP system failure | All |

| 3 | Rig moving towards positional limits | All |

| 4 | Weather conditions (current situation and weather forecast) | All |

| 5 | Black out on supply vessel nearby (lost communication with supply vessel, and vessel drifted towards the rig) | OIM |

| 6 | Confirmed fire (depends on where, OIM to evaluate) | OIM, expert |

| 7 | Failure on machinery or thrusters | OIM |

| 8 | Unshearable tubulars inside the BOP upon emergency | All |

| 9 | Sudden failure on heave compensating detected by the operator (sound) | OIM |

| 10 | Limits specified in the Well Specific Operational Guideline (WSOG), procedure placed on the DP console at all times | DPO drill rig |

| 11 | GPS raw data (used when the DP system failed to read the sudden change in position due to a large wave that pushed the rig out of position) | DPO drill rig |

| 12 | DP system reference failure (sensor failure, DPO calls for EQD without reaching the position limit due to not knowing the position of the rig) | DPO drill rig |

| 13 | Communication between driller/DPO/OIM upon emergency (you would like to know that the driller is aware of the situation, preferably the driller should activate the EQD) | DPO drill rig, Expert |

| 14 | DP drive off/drift off/black out | DPO drill ship |

| 15 | Detection of hydrocarbons on deck | OIM, DPO drill ship |

| 16 | Wind sensor from DP system | Expert |

| 17 | Uncontrollable situation in the well | Expert |

| Role | Findings |

|---|---|

| OIM | “I think it is positive that we do not have auto-EQD, so we can move slightly outside the limit of 15 m.”…“need to keep a cool head to let it (the rig) drift outside the red circle”. |

| | “If installed, I will trust the auto-EQD system”…“If required by the oil company, we will install it”. |

| | “A drift off goes very fast, auto-EQD provides safety”. |

| | “Have not heard frustrations from those (rigs) using auto-EQD”. |

| | “If operating in deep waters you have plenty of time (to decide), but on shallow water depths there are almost no time”. |

| DPO drill rig | “This is the system (auto-EQD) that I know of today, and I am satisfied with it”. |

| | “Have heard several times that the DP loses references, and the DPO pushes the button (starts EQD) before breaching predefined limits”. |

| | “Auto-EQD is there to improve safety”. |

| | “The operator does not need to defend the decision of doing the EQD—if limits are exceeded, the system initiates”. |

| | “If you see that the rig is moving outside the limits, and you keep a clear mind, it is possible to disable the auto-EQD. This is not according to procedure. Has been discussed, but cannot be done without changing the procedure”. |

| DPO drill ship | “If you have a drive off instead of a drift off…or a black out, then an auto-EQD would be good”. |

| | “In some situations, it is good to be able to chase the position (slightly exceed the limits)”. |

| | “Could be good to combine auto-EQD with type of situation, drive off, drift off, black out, hydrocarbons on deck…”. |

| | “What is good with the auto-EQD, is that the company has already made the decision (to disconnect)”. |

| Expert | “A reliable auto-EQD will increase the safety”. |

| | “A more automatic system will reduce the probability of failing to disconnect due to the angle”. |

| | “My concern is that if you include more software and more automatic systems, failure can arise and there are no one to interpret it, and suddenly you disconnect without intention”. |

| | “I would have worked on a rig with auto-EQD, but I would also have asked some questions…how do you do maintenance, when was it checked the last time, DP trial…”. |

| | “The challenge with the manual system is that you have to trust humans all the way, and the communication, the communication needs to be interpreted correctly”. |

| Variability | Time Perspective | Parameter Source | Operational Rules |

|---|---|---|---|

| Static (ST): 5 | Seconds (S): 30 | Human observation (HO): 10 | Threshold values (TV): 1 |

| Dynamic (DY): 39 | Minutes (M): 7 | Monitoring systems (MS): 27 | Yes/No rule (YN): 12 |

| Not known: 1 | Hours (H): 3 | Expertise (EX): 10 | No clear Rules (NR): 22 |

| | Not known: 1 | Other: 1 | Not known: 6 |

| | Not relevant: 5 | | Not relevant: 5 |
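Counts of this kind can be obtained by tallying the category codes in Appendix A. A minimal sketch over four rows copied from the appendix (the full table has 45 rows); it assumes that a cell holding several codes, such as "HO, MS", counts once for each code, and the `tally` helper is a hypothetical name:

```python
from collections import Counter

# (No., variability, time perspective, parameter source, operational rule)
rows = [
    (1, "DY", "S", "HO, MS", "YN"),
    (2, "DY", "S, M", "MS", "TV"),
    (3, "DY", "S", "MS", "NR"),
    (33, "ST", "Not relevant", "EX", "NR"),
]


def tally(column: int) -> Counter:
    """Count category codes in one column, splitting multi-code cells."""
    counts = Counter()
    for row in rows:
        for code in row[column].split(","):
            counts[code.strip()] += 1
    return counts


time_counts = tally(2)
source_counts = tally(3)
```

With these four rows, `source_counts["MS"]` is 3 and `time_counts["S"]` is 3; extending `rows` to the whole appendix would give the totals for every column.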

| Information Acquisition | Information Analysis | Decision Selection | Action Implementation |

|---|---|---|---|

| 7 (from HMI) | 7 (with auto-EQD) | 10 (with auto-EQD) | 10 (fully automated sequence) |

| 1 (manual observation) | 4 (without auto-EQD) | 4 (without auto-EQD) | |

| 1 (long term) | | | |
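The effect of auto-EQD on the two middle stages can be expressed as a difference in level of automation. A minimal sketch that encodes only the values listed in the table for Information Analysis and Decision Selection; the dictionary layout and the `automation_gap` helper are illustrative assumptions:

```python
# Level of automation per stage, as listed in the table.
loa = {
    "information_analysis": {"with_auto_eqd": 7, "without_auto_eqd": 4},
    "decision_selection": {"with_auto_eqd": 10, "without_auto_eqd": 4},
}


def automation_gap(stage: str) -> int:
    """Difference in level of automation between auto-EQD and manual EQD."""
    levels = loa[stage]
    return levels["with_auto_eqd"] - levels["without_auto_eqd"]


gaps = {stage: automation_gap(stage) for stage in loa}
```

Here `gaps` shows that the largest difference between the two configurations lies in Decision Selection.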

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Imset, M.; Falk, K.; Kjørstad, M.; Nazir, S. The Level of Automation in Emergency Quick Disconnect Decision Making. J. Mar. Sci. Eng. 2018, 6, 17. https://doi.org/10.3390/jmse6010017
