1. Introduction

The aim of this paper is to study the new entropy type information measures introduced by Kitsos and Tavoularis [

1] and the multivariate hyper normal distribution defined by them. These information measures are defined, adopting a parameter

, as the

-moment of the score function (see

Section 2), where

is an integer, while in principle

. One of the merits of this generalized normal distribution is that it belongs to the Kotz-type distribution family [

2],

i.e., it is an elliptically contoured distribution (see

Section 3). Therefore it has all the characteristics and applications discussed in Baringhaus and Henze [

3], Liang

et al. [

4] and Nadarajah [

5]. The parameter information measures related to the entropy, are often crucial to the optimal design theory applications, see [

6]. Moreover, it is proved that the defined generalized normal distribution provides equality to a new generalized information inequality (Kitsos and Tavoularis [

1,

7]) regarding the generalized information measure as well as the generalized Shannon entropy power (see

Section 3).

In principle, the information measures are divided into three main categories: parametric (a typical example is Fisher’s information [

8]), non parametric (with Shannon’s information measure being the most well known) and entropy type [

9].

The new generalized entropy type measure of information

, defined by Kitsos and Tavoularis [

1], is a function of density, as:

From (1), we obtain that

equals:

For

, the measure of information

is the Fisher’s information measure:

i.e.,

. That is,

is a generalized Fisher’s entropy type information measure, and as the entropy, it is a function of density.

Proposition 1.1. When is a location parameter, then .

Proof. Considering the parameter

as a location parameter and transforming the family of densities to

, the differentiation with respect to

is equivalent to the differentiation with respect to

. Therefore we can prove that

. Indeed, from (1) we have:

and the proposition has been proved.

Recall that the score function is defined as:

with

and

under some regularity conditions, see Schervish [

10] for details. It can be easily shown that when

, then

. Therefore

behaves as the

-moment of the score function of

. The generalized power is still the power of the white Gaussian noise with the same entropy, see [

11], considering the entropy power of a random variable.

Recall that the Shannon entropy

is defined as

, see [

9]. The entropy power

is defined through

as:

The definition of the entropy power of a random variable

was introduced by Shannon in 1948 [

11] as the independent and identically distributed components of a

p-dimensional white Gaussian random variable with entropy

.

The generalized entropy power

is of the form;

with the normalizing factor being the appropriate generalization of

,

i.e.,

is still the power of the white Gaussian noise with the same entropy. Trivially, with

, the definition in (6) is reduced to the entropy power,

i.e.,

. In turn, the quantity:

appears very often when we define various normalizing factors, under this line of thought.

Theorem 1.1. Generalizing the Information Inequality (Kitsos-Tavoularis [

1]). For the variance of

,

and the generalizing Fisher’s entropy type information measure

, it holds:

with

as in (7).

Corollary 1.1. When

then

, and the Gramer-Rao inequality ([

9], Th. 11.10.1) holds.

Proof. Indeed, as .

For the above introduced generalized entropy measures of information we need a distribution to play the "role of normal", as in the Fisher's information measure and Shannon entropy. In Kitsos and Tavoularis [

12] extend the normal distribution in the light of the introduced generalized information measures and the optimal function satisfying the extension of the LSI. We form the following general definition for an extension of the multivariate normal distribution, the

-order generalized normal, as follows:

Definition 1.1. The

-dimensional random variable

follows the

-order generalized Normal, with mean

and covariance matrix

, when the density function is of the form:

with

, where

is the inner product of

) and

is the transpose of

. We shall write

. The normality factor

is defined as:

Notice that for

is the well known multivariate distribution.

Recall that the symmetric Kotz type multivariate distribution [

2] has density:

where

and the normalizing constant

is given by:

see also [

1] and [

12].

Therefore, it can be shown that the distribution follows from the symmetric Kotz type multivariate distribution for , and , i.e., . Also note that for the normal distribution it holds , while the normalizing factor is .

2. The Kullback-Leibler Information for –Order Generalized Normal Distribution

Recall that the Kullback-Leibler (K-L) Information for two

-variate density functions

is defined as [

13]:

The following Lemma provides a generalization of the Kullback-Leibler information measure for the introduced generalized Normal distribution.

Lemma 2.1. The K-L information

of the generalized normals

and

is equal to:

where

.

Proof. We have consecutively:

and thus

For

and

, we finally obtain:

where

.

Notice that the quadratic forms can be written in the form of respectively, and thus the lemma has been proved.

Recall now the well known multivariate K-L information measure between two multivariate normal distributions with

is:

which, for the univariate case, is:

In what follows, an investigation of the K-L information measure is presented and discussed, concerning the introduced generalized normal. New results are provided that generalize the notion of K-L information. In fact, the following Theorem 2.1 generalizes for the -order generalized Normal, assuming that . Various “sequences” of the K-L information measures are discussed in Corollary 2.1, while the generalized Fisher’s information of the generalized Normal is provided in Theorem 2.2.

Theorem 2.1. For

the K-L information

is equal to:

Proof. We write the result from the previous Lemma 2.1 in the form of:

where:

We can calculate the above integrals by writing them as:

and then we substitute

. Thus, we get respectively:

and:

Using the known integrals:

and

can be calculated as:

respectively. Thus, (11) can be written as:

and by substitution of

from (15) and

from definition 1.1, we get:

Assuming

, from (12),

is equal to:

and using the known integral (14), we have:

Thus, (16) finally takes the form:

However, and , and so (10) has been proved.

For

and for

from (16) we obtain:

where, from (12):

For

we have the only case in which

, and therefore, the integral of

in (12) can be simplified. Specifically, by setting

,

and

, from (18) we have consecutively:

Due to the known integrals (13) and (14), which for

are reduced respectively to:

we obtain:

i.e.,

and hence:

Finally, using the above relationship for

, (17) implies:

and the theorem has been proved.

Corollary 2.1. For the Kullback-Leibler information

it holds:

- (i)

Provided that

,

has a strict ascending order as dimension

rises,

i.e.,

- (ii)

Provided that

and

, and for a given

, we have:

- (iii)

For in , we obtain

- (iv)

Given and , the K-L information has a strict descending order as rises, i.e.,

- (v)

is a lower bound of all for

Proof. From Theorem 2.1

is a linear expression of

,

i.e.,

with a non-negative slope:

This is because, from applying the known logarithmic inequality

(where equality holds only for

) to

, we obtain:

and hence

, where the equality holds respectively only for

,

i.e., only for

. Thus, as the dimension

rises:

also rises.

In the general

-variate case,

is a linear expression of

,

i.e.,

, provided that

, with a non-negative slope:

Indeed,

as:

and since

, we get:

which implies that

. The equality holds respectively only for

,

i.e., only for

. Thus, as the dimension

rises,

also rises,

i.e.,

, and so

, provided that

(see

Figure 1).

Now, for given

and

, if we choose

, we have

or

. Thus,

i.e.,

. Consequently,

and so

(see

Figure 2).

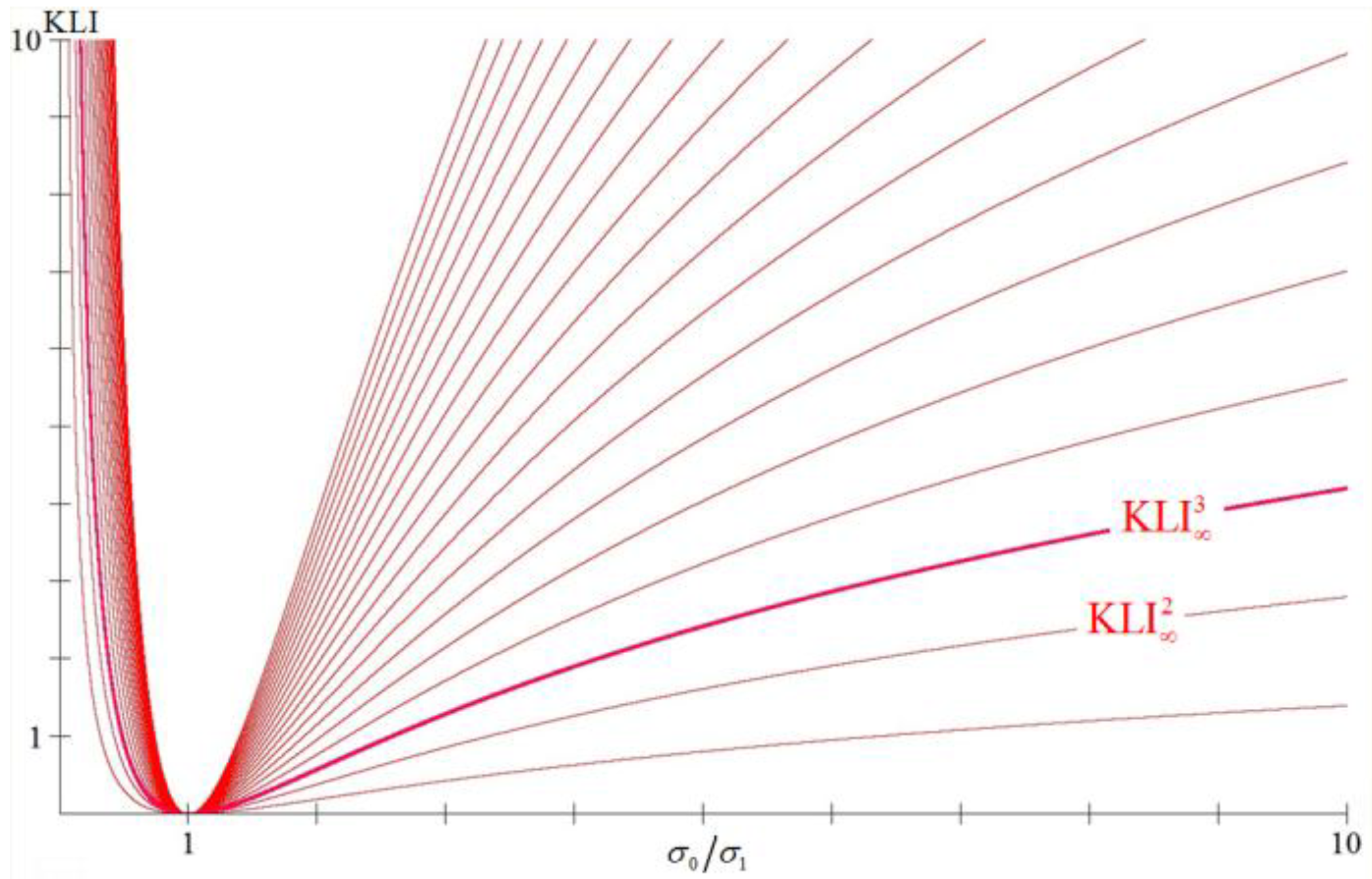

Figure 1.

The graphs of the , for dimensions , as functions of the quotient (provided that ), where we can see that .

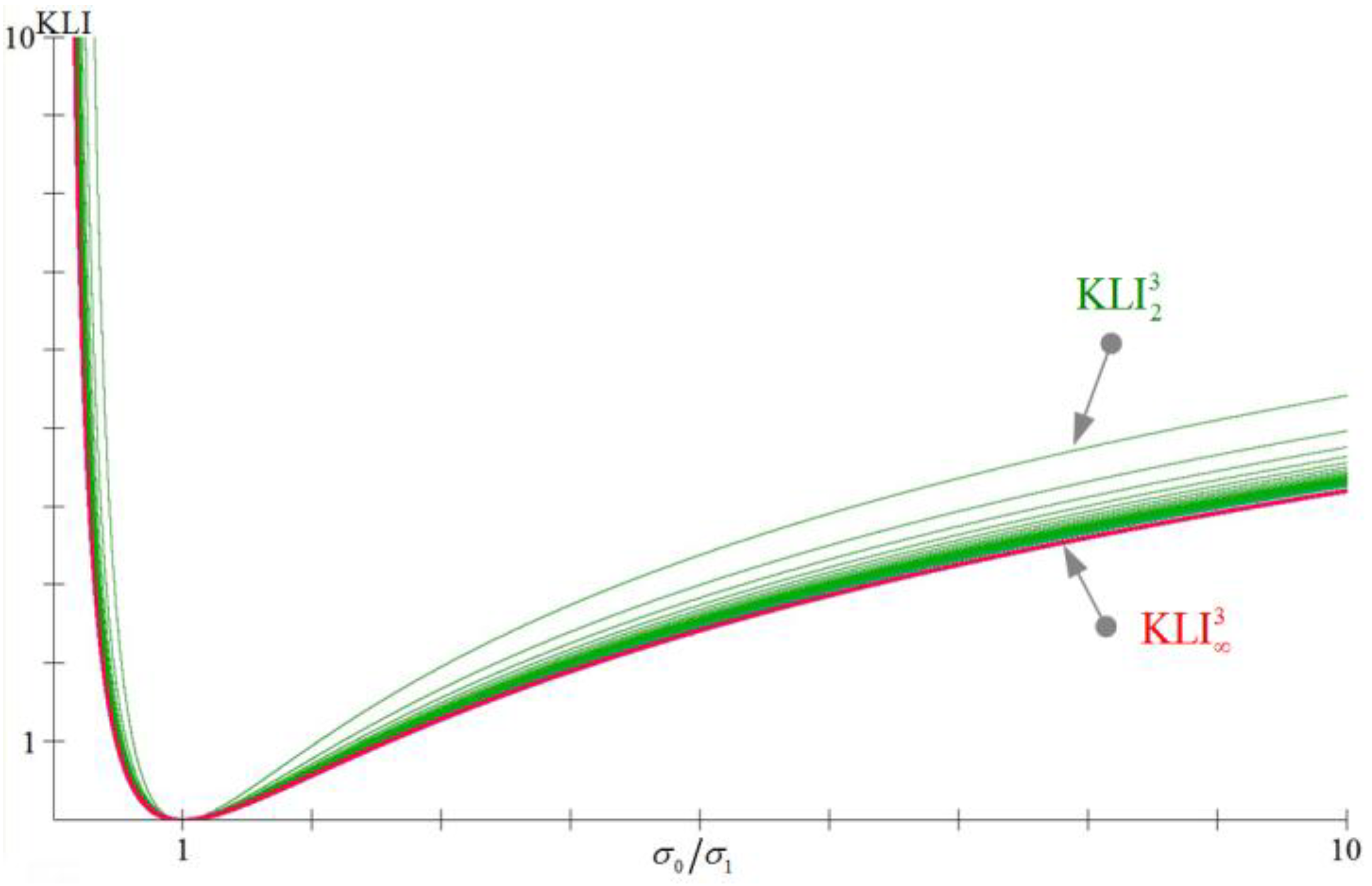

Figure 2.

The graphs of the trivariate , for , and , as functions of the quotient (provided that ), where we can see that and .

The Shannon entropy of a random variable

, which follows the generalized normal

is:

This is due to the entropy of the symmetric Kotz type distribution (9) and it has been calculated (see [

7]) for

,

,

.

Theorem 2.2. The generalized Fisher’s information of the generalized Normal

is:

Therefore, from (2),

equals eventually:

Switching to hyperspherical coordinates and taking into account the value of

we have the result (see [

7] for details).

Corollary 2.2. Due to Theorem 2.2, it holds that and .

Proof. Indeed, from (20),

and the fact that

(which has been extensively studied under the Logarithmic Sobolev Inequalities, see [

1] for details and [

14]), Corollary 2.2 holds.

Notice that, also from (20):

and therefore,

.

3. Discussion and Further Analysis

We examine the behavior of the multivariate

-order generalized Normal distribution. Using Mathematica, we proceed to the following helpful calculations to analyze further the above theoretical results, see also [

12].

Figure 3 represents the univariate

-order generalized Normal distribution for various values of

:

(normal distribution),

,

,

, while

Figure 4, represents the bivariate 10-order generalized Normal

with mean 0 and covariance matrix

.

Figure 3.

The univariate -order generalized Normals for .

Figure 4.

The bivariate 10-order generalized normal .

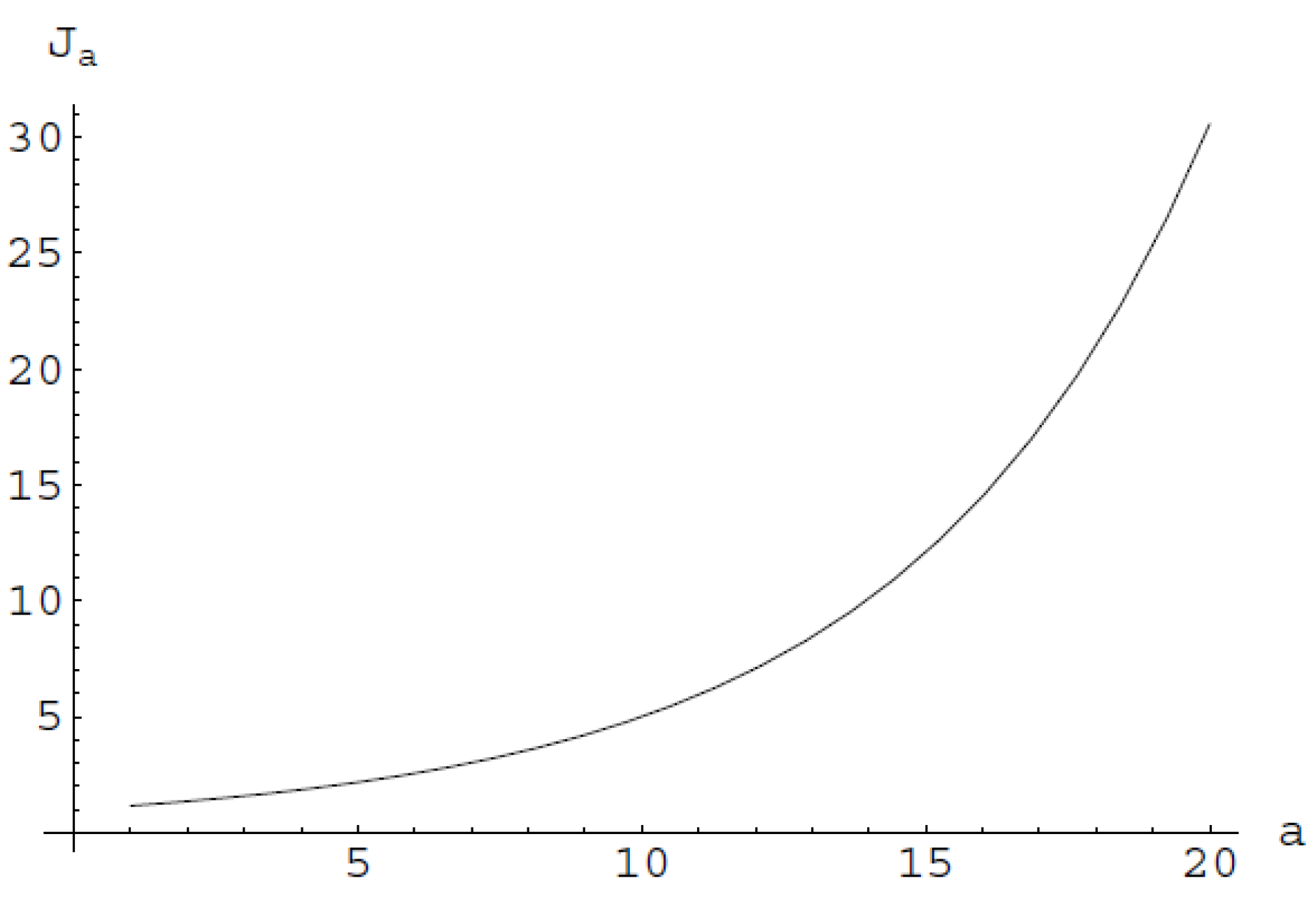

Figure 5 provides the behavior of the generalized information measure for the bivariate generalized normal distribution,

i.e.,

, where

.

Figure 5.

For the introduced generalized information measure of the generalized Normal distribution it holds that:

For

and

, it is the typical entropy power for the normal distribution.

The greater the number of variables involved at the multivariate -order generalized normal, the larger the generalized information , i.e., for . In other words, the generalized information measure of the multivariate generalized Normal distribution is an increasing function of the number of the involved variables.

Let us consider and to be constants. If we let vary, then the generalized information of the multivariate -order generalized Normal is a decreasing function of , i.e., for we have except for .

Proposition 3.1. The lower bound of is the Fisher’s entropy type information.

Proof. Letting vary and excluding the case , the generalized information of the multivariate generalized normal distribution is an increasing function of , i.e., for . That is the Fisher’s information is smaller than the generalized information measure for all , provided that .

When , the more variables involved at the multivariate generalized Normal distribution the larger the generalized entropy power , i.e., for . The dual occurs when . That is, when the number of involved variables defines an increasing generalized entropy power, while for the generalized entropy power is a decreasing function of the number of involved variables. Let us consider and to be constants and let vary. When the generalized entropy power of the multivariate generalized Normal distribution is increasing in .

When , the generalized entropy power of the multivariate generalized Normal distribution is decreasing in . If we let vary and let then, for the generalized entropy power of the multivariate generalized Normal distribution, we have for certain and , i.e., the generalized entropy power of the multivariate generalized Normal distribution is an increasing function of .

Now, letting vary, is a decreasing function of , i.e., provided that . The well known entropy power provides an upper bound for the generalized entropy power, i.e., , for given and .