Quaternionic Multilayer Perceptron with Local Analyticity

Abstract

1. Introduction

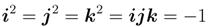

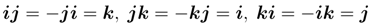

2. Quaternionic Algebra

2.1. Definition of Quaternion

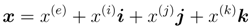

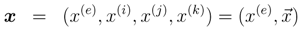

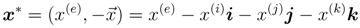

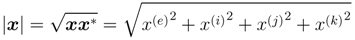

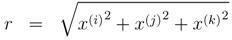

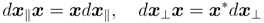

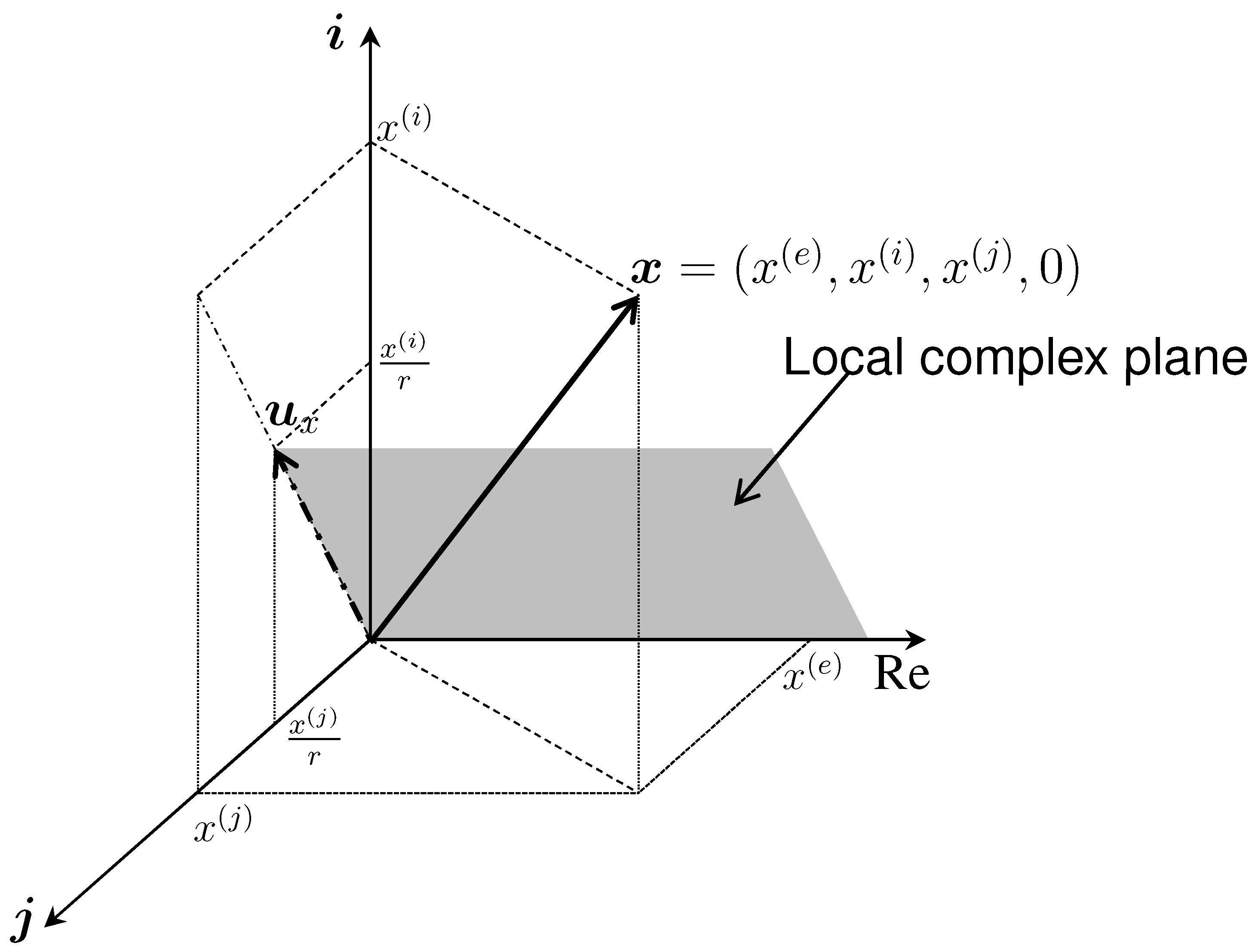

where $\vec{x} = \{x^{(i)}, x^{(j)}, x^{(k)}\}$. In this representation, $x^{(e)}$ is the scalar part of $x$, and $\vec{x}$ forms the vector part. The quaternion conjugate is defined as

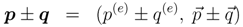
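As a rough illustration of the notation above, the following Python sketch stores a quaternion as a scalar part plus three vector components and implements conjugation under the standard convention that the scalar part is kept and the vector part is negated. The class name `Quaternion` and its field names are hypothetical choices made for this example, not identifiers from the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Quaternion:
    """Quaternion stored as (e, i, j, k); names are illustrative only."""
    e: float  # scalar part x^(e)
    i: float  # vector component x^(i)
    j: float  # vector component x^(j)
    k: float  # vector component x^(k)

    @property
    def vector(self):
        """Return the vector part as a 3-tuple."""
        return (self.i, self.j, self.k)

    def conjugate(self):
        """Keep the scalar part and negate the vector part."""
        return Quaternion(self.e, -self.i, -self.j, -self.k)


x = Quaternion(1.0, 2.0, -3.0, 0.5)
x_conj = x.conjugate()
print(x.vector)
print(x_conj)
```

Conjugating twice returns the original quaternion, which is a quick sanity check for any implementation of this operation.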

Let $p = (p^{(e)}, \vec{p}) = (p^{(e)}, p^{(i)}, p^{(j)}, p^{(k)})$ and $q = (q^{(e)}, \vec{q}) = (q^{(e)}, q^{(i)}, q^{(j)}, q^{(k)})$. The addition and subtraction of quaternions are defined in a similar manner as for complex-valued numbers or vectors, i.e.,

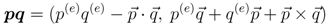

·

·  and

and  ×

×  denote the dot and cross products, respectively, between three-dimensional vectors

denote the dot and cross products, respectively, between three-dimensional vectors  and

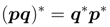

and  . The conjugate of the product is given as

. The conjugate of the product is given as

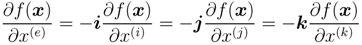
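The product and its conjugate can be checked numerically. The sketch below assumes the standard scalar/vector form of the Hamilton product, $pq = (p^{(e)}q^{(e)} - \vec{p}\cdot\vec{q},\; p^{(e)}\vec{q} + q^{(e)}\vec{p} + \vec{p}\times\vec{q})$, and the standard identity $(pq)^* = q^* p^*$. Quaternions are plain 4-tuples `(e, i, j, k)`, and the helper names `dot`, `cross`, `qmul` and `qconj` are hypothetical, chosen only for this example.

```python
def dot(a, b):
    """Dot product of two 3-vectors."""
    return sum(s * t for s, t in zip(a, b))


def cross(a, b):
    """Cross product of two 3-vectors."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def qmul(p, q):
    """Hamilton product written with scalar and vector parts."""
    pe, pv = p[0], p[1:]
    qe, qv = q[0], q[1:]
    scalar = pe * qe - dot(pv, qv)
    c = cross(pv, qv)
    vector = tuple(pe * qv[n] + qe * pv[n] + c[n] for n in range(3))
    return (scalar,) + vector


def qconj(p):
    """Quaternion conjugate: negate the vector part."""
    return (p[0], -p[1], -p[2], -p[3])


p = (1.0, 2.0, 3.0, 4.0)
q = (0.5, -1.0, 2.0, 0.0)

lhs = qconj(qmul(p, q))          # (pq)*
rhs = qmul(qconj(q), qconj(p))   # q* p*
print(lhs, rhs)
print(qmul(p, q), qmul(q, p))    # the two orders differ in general
```

The cross-product term changes sign when the operands are swapped, which is why the product is non-commutative and why the conjugate of a product reverses the order of its factors.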

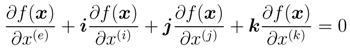

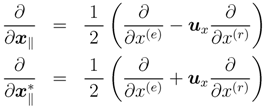

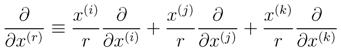

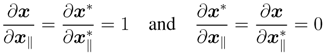

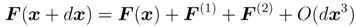

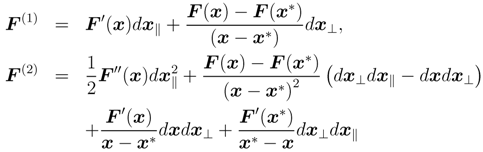

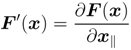

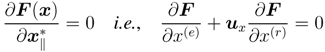

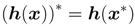

2.2. Quaternionic Analyticity

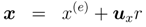

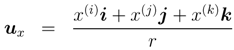

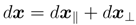

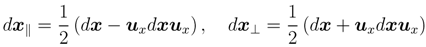

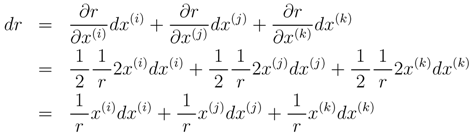

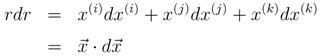

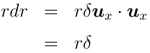

$= 0$, because $u_x$ is a quaternion without a real part. Thus, $u_x$ and $d\vec{x}$ are parallel to each other. Then, $d\vec{x} = u_x \delta$ can be obtained, where $\delta$ is a real-valued constant. From Equation (14), it follows that

With $\vec{x} = u_x r$ and $d\vec{x} = u_x \delta$, we obtain

$d\vec{x} = u_x\,dr$ is obtained. $dx$ is represented as $dx = dx^{(e)} + u_x\,dr$.
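The role of $u_x$ in this argument can be illustrated numerically. The sketch below assumes that $r$ is the Euclidean norm of the vector part and that $u_x$ is the vector part divided by $r$, so that $u_x$ is a pure quaternion of unit length. These definitions are inferred from the relation between the vector part, $u_x$ and $r$ used in the text, and the function names `qmul` and `split_polar` are hypothetical.

```python
import math


def qmul(p, q):
    """Hamilton product of two quaternions given as (e, i, j, k) tuples."""
    pe, pi, pj, pk = p
    qe, qi, qj, qk = q
    return (pe * qe - pi * qi - pj * qj - pk * qk,
            pe * qi + pi * qe + pj * qk - pk * qj,
            pe * qj - pi * qk + pj * qe + pk * qi,
            pe * qk + pi * qj - pj * qi + pk * qe)


def split_polar(x):
    """Split x into (scalar part, unit pure quaternion u_x, radius r)."""
    e, i, j, k = x
    r = math.sqrt(i * i + j * j + k * k)
    if r == 0.0:
        raise ValueError("u_x is undefined when the vector part is zero")
    return e, (0.0, i / r, j / r, k / r), r


x = (1.0, 2.0, -2.0, 1.0)
e, u, r = split_polar(x)

u_squared = qmul(u, u)                                 # approximately (-1, 0, 0, 0)
rebuilt = (e, u[1] * r, u[2] * r, u[3] * r)            # x = x^(e) + u_x r

# A displacement parallel to u_x (u_x times a real delta) commutes with u_x.
delta = 0.25
d_vec = (0.0, u[1] * delta, u[2] * delta, u[3] * delta)
commutator = tuple(a - b for a, b in zip(qmul(u, d_vec), qmul(d_vec, u)))
print(r, u_squared, rebuilt, commutator)
```

Because a parallel displacement commutes with $u_x$, the quaternion behaves locally like a complex number with $u_x$ playing the role of the imaginary unit.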

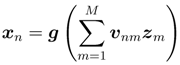

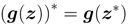

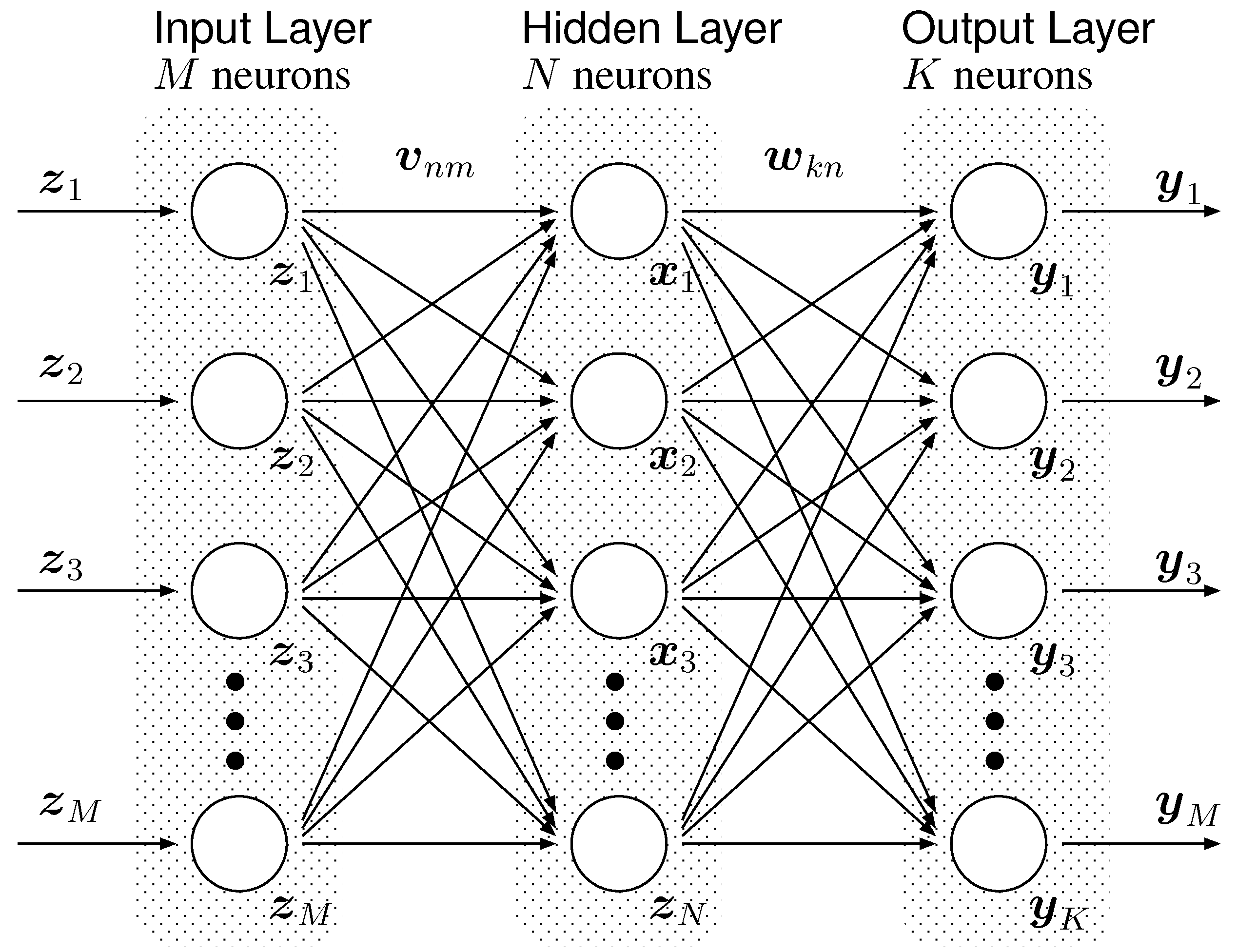

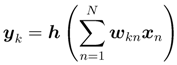

3. Quaternionic Multilayer Perceptron

3.1. Network Model

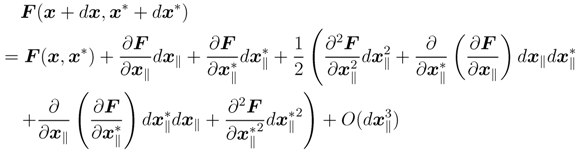

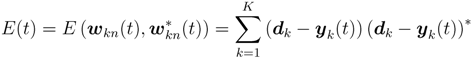

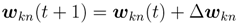

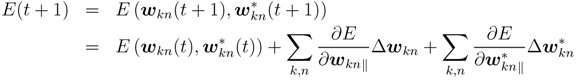

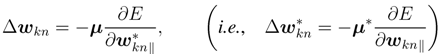

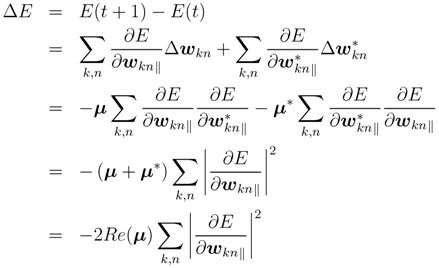

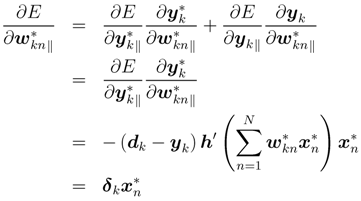

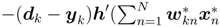

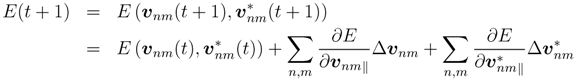

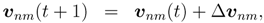

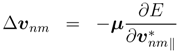

3.2. Learning Algorithm

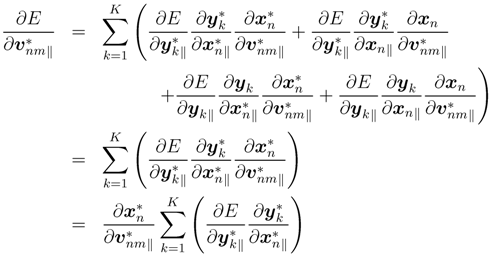

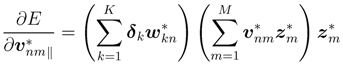

, and , we finally obtain

3.3. Universal Approximation Capability

4. Conclusions and Discussion

Acknowledgments

References

- Hirose, A. Complex-Valued Neural Networks: Theories and Applications; World Scientific Publishing: Singapore, 2003.

- Hirose, A. Complex-Valued Neural Networks; Springer-Verlag: Berlin, Germany, 2006.

- Nitta, T. Complex-Valued Neural Networks: Utilizing High-Dimensional Parameters; Information Science Reference: New York, NY, USA, 2009.

- Hamilton, W.R. Lectures on Quaternions; Hodges and Smith: Dublin, Ireland, 1853.

- Hankins, T.L. Sir William Rowan Hamilton; Johns Hopkins University Press: Baltimore, MD, USA, 1980.

- Mukundan, R. Quaternions: From classical mechanics to computer graphics, and beyond. In Proceedings of the 7th Asian Technology Conference in Mathematics, Melaka, Malaysia, 17–21 December 2002; pp. 97–105.

- Kuipers, J.B. Quaternions and Rotation Sequences: A Primer with Applications to Orbits, Aerospace and Virtual Reality; Princeton University Press: Princeton, NJ, USA, 1998.

- Hoggar, S.G. Mathematics for Computer Graphics; Cambridge University Press: Cambridge, UK, 1992.

- Nitta, T. An extension of the back-propagation algorithm to quaternions. In Proceedings of International Conference on Neural Information Processing (ICONIP’96), Hong Kong, China, 24–27 September 1996; Volume 1, pp. 247–250.

- Arena, P.; Fortuna, L.; Muscato, G.; Xibilia, M. Multilayer perceptrons to approximate quaternion valued functions. Neural Netw. 1997, 10, 335–342.

- Buchholz, S.; Sommer, G. Quaternionic spinor MLP. In Proceedings of the 8th European Symposium on Artificial Neural Networks (ESANN 2000), Bruges, Belgium, 26–28 April 2000; pp. 377–382.

- Matsui, N.; Isokawa, T.; Kusamichi, H.; Peper, F.; Nishimura, H. Quaternion neural network with geometrical operators. J. Intell. Fuzzy Syst. 2004, 15, 149–164.

- Mandic, D.P.; Jahanchahi, C.; Took, C.C. A quaternion gradient operator and its applications. IEEE Signal Proc. Lett. 2011, 18, 47–50.

- Ujang, B.C.; Took, C.C.; Mandic, D.P. Quaternion-valued nonlinear adaptive filtering. IEEE Trans. Neural Netw. 2011, 22, 1193–1206.

- Kusamichi, H.; Isokawa, T.; Matsui, N.; Ogawa, Y.; Maeda, K. A new scheme for color night vision by quaternion neural network. In Proceedings of the 2nd International Conference on Autonomous Robots and Agents (ICARA2004), Palmerston North, New Zealand, 13–15 December 2004; pp. 101–106.

- Isokawa, T.; Matsui, N.; Nishimura, H. Quaternionic neural networks: Fundamental properties and applications. In Complex-Valued Neural Networks: Utilizing High-Dimensional Parameters; Nitta, T., Ed.; Information Science Reference: New York, NY, USA, 2009; pp. 411–439, Chapter XVI.

- Nitta, T. A solution to the 4-bit parity problem with a single quaternary neuron. Neural Inf. Process. Lett. Rev. 2004, 5, 33–39.

- Yoshida, M.; Kuroe, Y.; Mori, T. Models of Hopfield-type quaternion neural networks and their energy functions. Int. J. Neural Syst. 2005, 15, 129–135.

- Isokawa, T.; Nishimura, H.; Kamiura, N.; Matsui, N. Fundamental properties of quaternionic Hopfield neural network. In Proceedings of 2006 International Joint Conference on Neural Networks, Vancouver, BC, Canada, 30 October 2006; pp. 610–615.

- Isokawa, T.; Nishimura, H.; Kamiura, N.; Matsui, N. Associative memory in quaternionic Hopfield neural network. Int. J. Neural Syst. 2008, 18, 135–145.

- Isokawa, T.; Nishimura, H.; Kamiura, N.; Matsui, N. Dynamics of discrete-time quaternionic Hopfield neural networks. In Proceedings of 17th International Conference on Artificial Neural Networks, Porto, Portugal, 9–13 September 2007; pp. 848–857.

- Isokawa, T.; Nishimura, H.; Matsui, N. On the fundamental properties of fully quaternionic Hopfield network. In Proceedings of IEEE World Congress on Computational Intelligence (WCCI2012), Brisbane, Australia, 10–15 June 2012; pp. 1246–1249.

- Isokawa, T.; Nishimura, H.; Saitoh, A.; Kamiura, N.; Matsui, N. On the scheme of quaternionic multistate Hopfield neural network. In Proceedings of Joint 4th International Conference on Soft Computing and Intelligent Systems and 9th International Symposium on Advanced Intelligent Systems (SCIS&ISIS 2008), Nagoya, Japan, 17–21 September 2008; pp. 809–813.

- Isokawa, T.; Nishimura, H.; Matsui, N. Commutative quaternion and multistate Hopfield neural networks. In Proceedings of IEEE World Congress on Computational Intelligence (WCCI2010), Barcelona, Spain, 18–23 July 2010; pp. 1281–1286.

- Isokawa, T.; Nishimura, H.; Matsui, N. An iterative learning scheme for multistate complex-valued and quaternionic Hopfield neural networks. In Proceedings of International Joint Conference on Neural Networks (IJCNN2009), Atlanta, GA, USA, 14–19 June 2009; pp. 1365–1371.

- Leo, S.D.; Rotelli, P.P. Local hypercomplex analyticity. 1997. Available online: http://arxiv.org/abs/funct-an/9703002 (accessed on 20 November 2012).

- Leo, S.D.; Rotelli, P.P. Quaternionic analyticity. Appl. Math. Lett. 2003, 16, 1077–1081.

- Schwartz, C. Calculus with a quaternionic variable. J. Math. Phys. 2009, 50, 013523:1–013523:11.

- Kim, T.; Adalı, T. Approximation by fully complex multilayer perceptrons. Neural Comput. 2003, 15, 1641–1666.

- Wirtinger, W. Zur formalen Theorie der Funktionen von mehr komplexen Veränderlichen. Math. Ann. 1927, 97, 357–375.

- Cybenko, G. Approximation by superpositions of a sigmoidal function. Math. Control Signals Syst. 1989, 2, 303–314.

- Hornik, K. Approximation capabilities of multilayer feedforward networks. Neural Netw. 1991, 4, 251–257.

- Segre, C. The real representations of complex elements and extension to bicomplex systems. Math. Ann. 1892, 40, 322–335.

- Catoni, F.; Cannata, R.; Zampetti, P. An introduction to commutative quaternions. Adv. Appl. Clifford Algebras 2006, 16, 1–28.

- Davenport, C.M. A commutative hypercomplex algebra with associated function theory. In Clifford Algebra With Numeric and Symbolic Computation; Ablamowicz, R., Ed.; Birkhauser: Boston, MA, USA, 1996; pp. 213–227.

- Pei, S.C.; Chang, J.H.; Ding, J.J. Commutative reduced biquaternions and their Fourier transform for signal and image processing applications. IEEE Trans. Signal Proc. 2004, 52, 2012–2031.

- Hirose, A. Continuous complex-valued back-propagation learning. Electron. Lett. 1992, 28, 1854–1855.

- Georgiou, G.M.; Koutsougeras, C. Complex domain backpropagation. IEEE Trans. Circuits Syst. II 1992, 39, 330–334.

© 2012 by the authors; licensee MDPI, Basel, Switzerland. This article is an open-access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).


Isokawa, T.; Nishimura, H.; Matsui, N. Quaternionic Multilayer Perceptron with Local Analyticity. Information 2012, 3, 756-770. https://doi.org/10.3390/info3040756
