Multi-Label Classification from Multiple Noisy Sources Using Topic Models †

Abstract

1. Introduction

Contributions

- We introduce a novel topic model for multi-label classification; our model has the distinctive feature of exploiting any additional information provided by the absence of classes. In addition, the use of topics enables our model to capture correlations between the classes. We refer to our topic model as ML-PA-LDA (Multi-Label Presence-Absence LDA).

- We enhance our model to account for the scenario where several heterogeneous annotators with unknown qualities provide the labels for the training set. We refer to this enhanced model as ML-PA-LDA-MNS (ML-PA-LDA with Multiple Noisy Sources). A feature of ML-PA-LDA-MNS is that it does not require an annotator to label all classes for a document: partial labeling, down to the granularity of individual labels within a document, is sufficient.

- We test the performance of ML-PA-LDA on several real-world datasets and establish its superior performance over state-of-the-art methods.

- Furthermore, we study the performance of ML-PA-LDA-MNS with simulated annotators providing the labels for these datasets. In spite of the noisy labels, ML-PA-LDA-MNS demonstrates excellent performance, and the learnt annotator qualities closely approximate the true qualities of the annotators.

2. Related Work

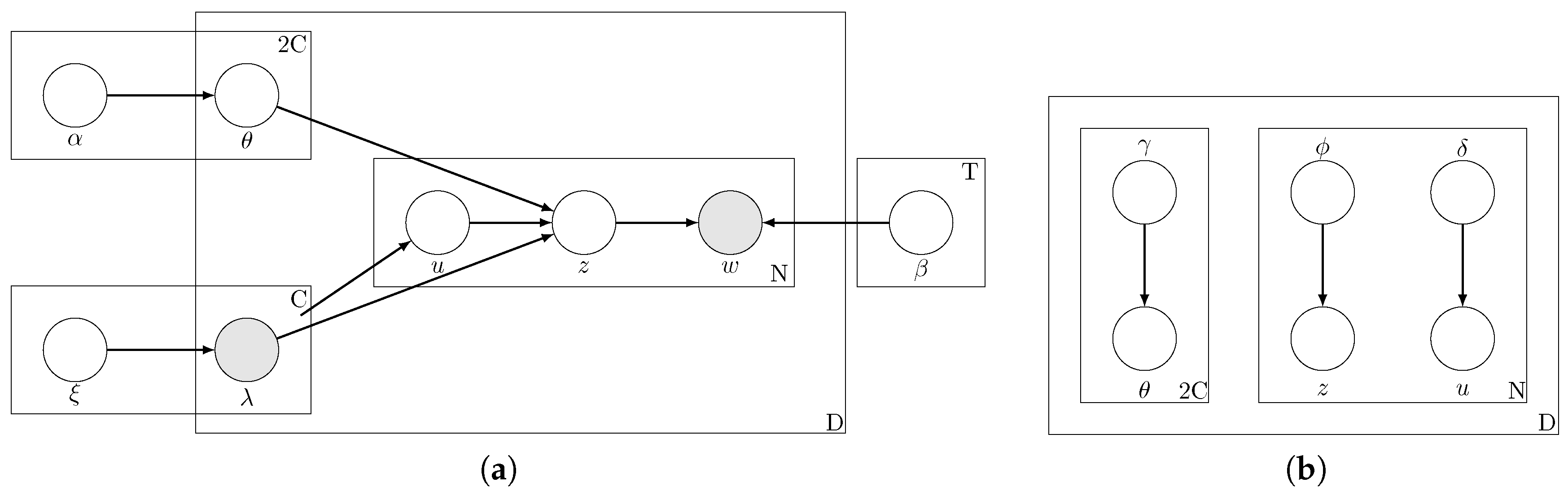

3. Proposed Approach for Multi-Label Classification from a Single Source: ML-PA-LDA

Topic Model for the Documents

- Draw class membership for every class .
- Draw for , for , where are the parameters of a Dirichlet distribution with T parameters. provides the parameters of a multinomial distribution for generating topics.
- For every word w in the document:
  - (a) Sample from one of the C classes.
  - (b) Generate a topic , where are the parameters of a multinomial distribution in T dimensions.
  - (c) Generate the word , where are the parameters of a multinomial distribution in V dimensions.
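The generative steps above can be simulated in a few lines. This is a hedged sketch, not the authors' implementation: because the extracted text has lost the original symbols, the names `xi` (per-class presence probabilities), `alpha` (Dirichlet parameters over the T topics), `theta` (topic distributions) and `beta` (topic-word distributions over a vocabulary of size V) are hypothetical. The sketch further assumes that every class owns one topic distribution for presence and one for absence, that step (a) picks a class uniformly at random, and that step (b) uses the distribution matching that class's drawn membership.

```python
import random


def sample_dirichlet(alpha, rng):
    """Draw one probability vector from Dirichlet(alpha) by normalizing Gamma(alpha_i, 1) draws."""
    draws = [rng.gammavariate(a, 1.0) for a in alpha]
    total = sum(draws)
    return [d / total for d in draws]


def generate_document(n_words, xi, alpha, beta, rng):
    """Simulate one document under the ASSUMED ML-PA-LDA generative process.

    xi[j]   : hypothetical probability that class j is present in the document
    alpha   : Dirichlet parameters over the T topics
    beta[t] : hypothetical word distribution (length V) of topic t
    returns : (membership, words) with membership[j] in {0, 1} and words as word ids
    """
    n_classes, n_topics, vocab_size = len(xi), len(alpha), len(beta[0])

    # Step 1: draw the presence (1) or absence (0) of every class.
    membership = [1 if rng.random() < xi[j] else 0 for j in range(n_classes)]

    # Step 2 (assumption): one topic distribution per class and per membership value.
    theta = [[sample_dirichlet(alpha, rng) for _ in (0, 1)] for _ in range(n_classes)]

    words = []
    for _ in range(n_words):
        # (a) pick a class for this word; a uniform choice is an assumption.
        j = rng.randrange(n_classes)
        # (b) draw a topic from that class's distribution for its presence/absence state.
        t = rng.choices(range(n_topics), weights=theta[j][membership[j]])[0]
        # (c) draw the word from the topic's distribution over the vocabulary.
        words.append(rng.choices(range(vocab_size), weights=beta[t])[0])
    return membership, words


if __name__ == "__main__":
    rng = random.Random(0)
    xi = [0.7, 0.2, 0.5]                  # 3 classes
    alpha = [0.5] * 4                     # 4 topics
    beta = [sample_dirichlet([1.0] * 10, rng) for _ in range(4)]  # 10-word vocabulary
    membership, words = generate_document(20, xi, alpha, beta, rng)
    print("class membership:", membership)
    print("word ids:", words)
```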

4. Variational EM for Learning the Parameters of ML-PA-LDA

4.1. E-Step Updates for ML-PA-LDA

4.2. M-Step Updates for ML-PA-LDA

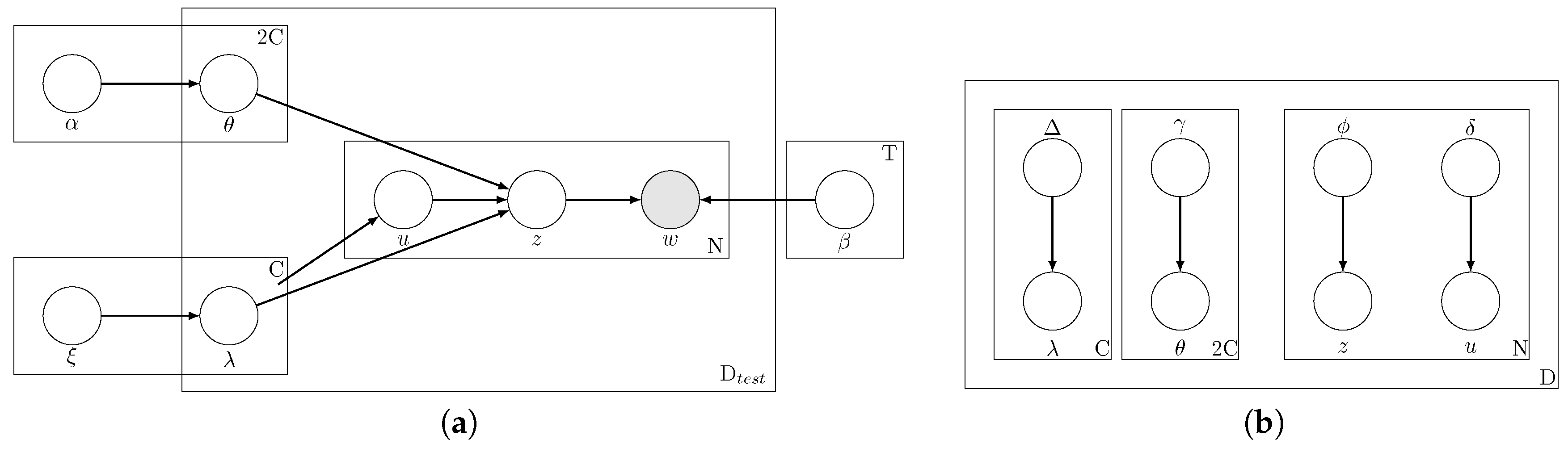

5. Inference in ML-PA-LDA

| Algorithm 1: Inferring class labels for a new document d during the test phase of Multi-Label Presence-Absence Latent Dirichlet Allocation (ML-PA-LDA). |
|---|
| Require: Document d, Model parameters |
| Initialize |
| repeat |
| Update using Equation (18) |
| Update using Equation (20) |
| Update using Equation (21) |
| Update using Equations (22) and (23) |
| until convergence |
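Equations (18)–(23) are not reproduced in this section, so the following is not Algorithm 1 itself. As a hedged illustration of the same initialize, update, repeat-until-convergence pattern, it sketches the per-document variational E-step of plain LDA (Blei, Ng and Jordan, 2003), the topic-model core that ML-PA-LDA builds on; Algorithm 1 additionally updates variables tied to the classes, which are omitted here. The names `alpha`, `beta`, `gamma` and `phi` follow standard LDA notation and are assumptions with respect to this document. The digamma function is approximated with the usual recurrence plus asymptotic series so that only the standard library is needed.

```python
import math


def digamma(x):
    """Digamma for x > 0: shift x above 6 via psi(x) = psi(x + 1) - 1/x, then apply the asymptotic series."""
    result = 0.0
    while x < 6.0:
        result -= 1.0 / x
        x += 1.0
    f = 1.0 / (x * x)
    series = f * (1.0 / 12 - f * (1.0 / 120 - f * (1.0 / 252 - f * (1.0 / 240 - f / 132))))
    return result + math.log(x) - 0.5 / x - series


def lda_e_step(words, alpha, beta, max_iter=100, tol=1e-6):
    """Mean-field E-step of PLAIN LDA for one document (not ML-PA-LDA's Equations (18)-(23)).

    words   : list of word ids in the document
    alpha   : Dirichlet parameters, one per topic
    beta    : beta[k][v] = probability of word v under topic k
    returns : (gamma, phi) = variational Dirichlet parameters and per-word topic responsibilities
    """
    n_topics, n_words = len(alpha), len(words)
    # Standard initialization: uniform responsibilities and gamma = alpha + N / T.
    phi = [[1.0 / n_topics] * n_topics for _ in range(n_words)]
    gamma = [a + n_words / n_topics for a in alpha]

    for _ in range(max_iter):
        old_gamma = gamma[:]
        # phi_{nk} is proportional to beta_{k, w_n} * exp(digamma(gamma_k)).
        weights = [math.exp(digamma(g)) for g in gamma]
        for n, w in enumerate(words):
            unnorm = [beta[k][w] * weights[k] for k in range(n_topics)]
            total = sum(unnorm)
            phi[n] = [u / total for u in unnorm]
        # gamma_k = alpha_k + sum_n phi_{nk}.
        gamma = [alpha[k] + sum(row[k] for row in phi) for k in range(n_topics)]
        # Convergence check: stop when gamma barely changes between sweeps.
        if max(abs(g - o) for g, o in zip(gamma, old_gamma)) < tol:
            break
    return gamma, phi
```

For example, `lda_e_step([0, 0, 0, 1], [0.5, 0.5], [[0.9, 0.1], [0.1, 0.9]])` returns a `gamma` whose first entry dominates, because three of the four words are far more likely under topic 0.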

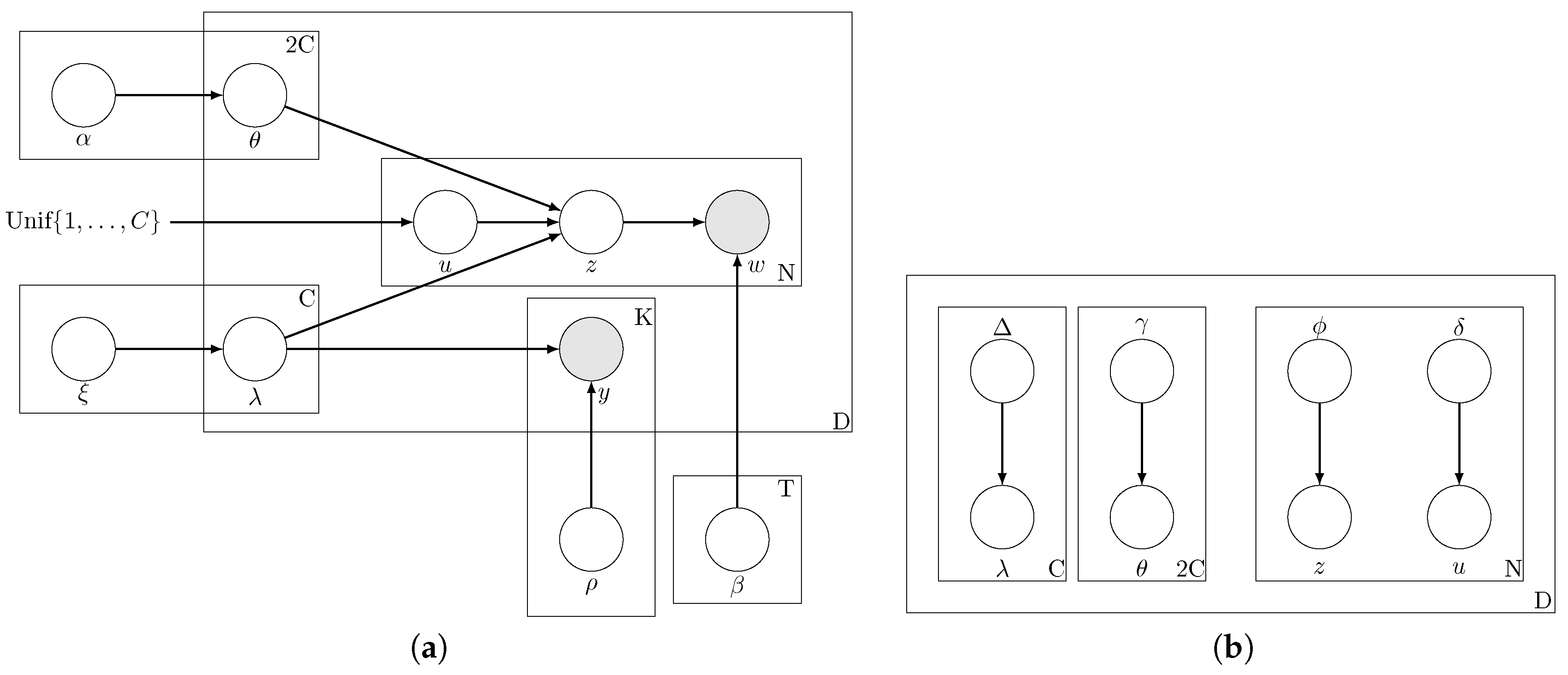

6. Proposed Approach for Multi-Label Classification from Multiple Sources: ML-PA-LDA-MNS

Single Coin Model for the Annotators
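A single-coin annotator model, as commonly used in the crowdsourcing literature, gives each annotator a one quality parameter: the annotator reports the true binary label of a class with that probability and flips it otherwise, whether the true label is 0 or 1. The sketch below is a hedged illustration under that assumption only. It simulates annotators who label a random subset of (document, class) pairs, mirroring the partial labeling that ML-PA-LDA-MNS allows, and then estimates each annotator's quality with a stand-alone EM over the labels alone. This is a deliberately simpler, swapped-in technique: ML-PA-LDA-MNS learns annotator qualities jointly with the topic model. All function names, the shared label prior and the `coverage` parameter are hypothetical. The RMSE between estimated and true qualities is in the spirit of the annotator RMSE reported in the results, although the exact definition used there is not stated in this section.

```python
import math
import random


def simulate_labels(true_labels, qualities, coverage, rng):
    """Single-coin annotators with partial labeling (hypothetical simulation).

    true_labels : 0/1 ground truth, one entry per (document, class) pair
    qualities   : qualities[a] = probability that annotator a reports the true label
    coverage    : probability that an annotator labels a given pair at all
    returns     : dict {(item, annotator): observed 0/1 label}
    """
    observed = {}
    for i, z in enumerate(true_labels):
        for a, q in enumerate(qualities):
            if rng.random() < coverage:
                observed[(i, a)] = z if rng.random() < q else 1 - z
    return observed


def estimate_qualities(observed, n_items, n_annotators, n_iter=50, eps=1e-6):
    """Stand-alone single-coin EM over the labels alone (no topic model)."""
    votes = [[] for _ in range(n_items)]
    for (i, a), y in observed.items():
        votes[i].append((a, y))
    # mu[i] = P(true label of item i is 1); start from the fraction of positive votes.
    mu = [sum(y for _, y in v) / len(v) if v else 0.5 for v in votes]
    quality = [0.5] * n_annotators
    for _ in range(n_iter):
        # M-step: expected fraction of each annotator's labels that match the truth.
        agree, count = [0.0] * n_annotators, [0] * n_annotators
        for i, v in enumerate(votes):
            for a, y in v:
                agree[a] += mu[i] if y == 1 else 1.0 - mu[i]
                count[a] += 1
        quality = [min(max(agree[a] / count[a], eps), 1 - eps) if count[a] else 0.5
                   for a in range(n_annotators)]
        prior = min(max(sum(mu) / n_items, eps), 1 - eps)
        # E-step: posterior of each true label given the observed reports.
        for i, v in enumerate(votes):
            log1, log0 = math.log(prior), math.log(1 - prior)
            for a, y in v:
                log1 += math.log(quality[a] if y == 1 else 1 - quality[a])
                log0 += math.log(quality[a] if y == 0 else 1 - quality[a])
            d = log1 - log0  # log-odds that the true label is 1 (numerically stable logistic below)
            mu[i] = 1 / (1 + math.exp(-d)) if d >= 0 else math.exp(d) / (1 + math.exp(d))
    return quality


def rmse(estimated, true):
    return math.sqrt(sum((e - t) ** 2 for e, t in zip(estimated, true)) / len(true))


if __name__ == "__main__":
    rng = random.Random(42)
    truth = [1 if rng.random() < 0.3 else 0 for _ in range(2000)]
    true_q = [0.95, 0.85, 0.75, 0.65, 0.9]
    observed = simulate_labels(truth, true_q, coverage=0.6, rng=rng)
    est = estimate_qualities(observed, len(truth), len(true_q))
    print("estimated qualities:", [round(q, 3) for q in est])
    print("RMSE vs. true qualities:", round(rmse(est, true_q), 4))
```

Initializing from the fraction of positive votes matters: a single-coin likelihood is symmetric under flipping every label and every quality, and starting from the vote fractions steers EM toward the solution in which annotators are mostly correct.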

7. Variational EM for ML-PA-LDA-MNS

7.1. E-Step Updates for ML-PA-LDA-MNS

7.2. M-Step Updates for ML-PA-LDA-MNS

| Algorithm 2: Learning the parameters during the training phase of ML-PA-LDA-MNS. | |
|---|---|
| repeat | |
| for d = 1 to D do | |
| Initialize | ▹ E-step |
| repeat | |
| Update sequentially using Equations (32), (34)–(37). | |
| until convergence | |
| end for | ▹ M-step |
| Update using Equation (38) | |
| Update using Equation (39) | |
| Update using Equation (40) | |
| Perform Newton-Raphson (NR) updates for using Equation (41) until convergence. | |
| until convergence | |
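Equations (38)–(41) are not reproduced in this section. To give a concrete picture of what an M-step does with the per-document E-step output, the sketch below shows the standard topic-word update of plain LDA (Blei, Ng and Jordan, 2003): expected word counts are accumulated from the responsibilities `phi` of every document and normalized per topic. Whether any of Equations (38)–(40) has exactly this form is an assumption. The `eta` argument is a hypothetical additive pseudo-count, a simple stand-in for smoothing that is not necessarily the scheme described in Section 8.

```python
def update_topic_word(docs, phis, n_topics, vocab_size, eta=0.0):
    """Plain-LDA M-step for the topic-word matrix (an illustration, not Equations (38)-(40)).

    docs  : list of documents, each a list of word ids
    phis  : phis[d][n][k] = responsibility of topic k for the n-th word of document d
    eta   : hypothetical additive pseudo-count; 0.0 gives the plain maximum-likelihood update
    returns beta with beta[k][v] proportional to eta + sum_d sum_n phis[d][n][k] * [w_dn == v]
    """
    counts = [[eta] * vocab_size for _ in range(n_topics)]
    for words, phi in zip(docs, phis):
        for w, resp in zip(words, phi):
            for k in range(n_topics):
                counts[k][w] += resp[k]
    beta = []
    for row in counts:
        total = sum(row)
        # A topic with no expected counts (possible only when eta == 0) falls back to uniform.
        beta.append([c / total for c in row] if total > 0 else [1.0 / vocab_size] * vocab_size)
    return beta


if __name__ == "__main__":
    docs = [[0, 1, 1], [2, 2]]
    phis = [[[1.0, 0.0], [0.5, 0.5], [0.5, 0.5]], [[0.0, 1.0], [0.0, 1.0]]]
    for k, row in enumerate(update_topic_word(docs, phis, n_topics=2, vocab_size=3)):
        print(f"topic {k}:", [round(p, 3) for p in row])
```

In the example, topic 0 receives expected counts of 1.0 for word 0 and 1.0 for word 1, so its row becomes [0.5, 0.5, 0.0]; setting `eta=1.0` would give the unseen word 2 a nonzero probability instead.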

Inference

8. Smoothing

9. Experiments

9.1. Dataset Descriptions

9.1.1. Text Datasets

9.1.2. Non-Text Datasets

9.2. Results: ML-PA-LDA (with a Single Source)

Statistical Significance Tests:

9.3. Results: ML-PA-LDA-MNS (with Multiple Noisy Sources)

Adversarial Annotators

10. Conclusions

Author Contributions

Conflicts of Interest

References

- Rao, Y.; Xie, H.; Li, J.; Jin, F.; Wang, F.L.; Li, Q. Social Emotion Classification of Short Text via Topic-Level Maximum Entropy Model. Inf. Manag. 2016, 53, 978–986.

- Xie, H.; Li, X.; Wang, T.; Lau, R.Y.; Wong, T.L.; Chen, L.; Wang, F.L.; Li, Q. Incorporating Sentiment into Tag-based User Profiles and Resource Profiles for Personalized Search in Folksonomy. Inf. Process. Manag. 2016, 52, 61–72.

- Li, X.; Xie, H.; Chen, L.; Wang, J.; Deng, X. News impact on stock price return via sentiment analysis. Knowl. Based Syst. 2014, 69, 14–23.

- Li, X.; Xie, H.; Song, Y.; Zhu, S.; Li, Q.; Wang, F.L. Does Summarization Help Stock Prediction? A News Impact Analysis. IEEE Intell. Syst. 2015, 30, 26–34.

- Blei, D.M.; Ng, A.Y.; Jordan, M.I. Latent Dirichlet Allocation. J. Mach. Learn. Res. 2003, 3, 993–1022.

- Heinrich, G. A Generic Approach to Topic Models. In Proceedings of the European Conference on Machine Learning and Knowledge Discovery in Databases: Part I (ECML PKDD ’09), Shanghai, China, 18–21 May 2009; pp. 517–532.

- Krestel, R.; Fankhauser, P. Tag recommendation using probabilistic topic models. In Proceedings of the International Workshop at the European Conference on Machine Learning and Principles and Practice of Knowledge Discovery in Databases, Bled, Slovenia, 7 September 2009; pp. 131–141.

- Li, F.F.; Perona, P. A Bayesian hierarchical model for learning natural scene categories. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Diego, CA, USA, 20–26 June 2005; Volume 2, pp. 524–531.

- Pritchard, J.K.; Stephens, M.; Donnelly, P. Inference of population structure using multilocus genotype data. Genetics 2000, 155, 945–959.

- Marlin, B. Collaborative Filtering: A Machine Learning Perspective. Ph.D. Thesis, University of Toronto, Toronto, ON, Canada, 2004.

- Erosheva, E.A. Grade of Membership and Latent Structure Models with Application to Disability Survey Data. Ph.D. Thesis, Office of Population Research, Princeton University, Princeton, NJ, USA, 2002.

- Girolami, M.; Kabán, A. Simplicial Mixtures of Markov Chains: Distributed Modelling of Dynamic User Profiles. In Proceedings of the 16th International Conference on Neural Information Processing Systems (NIPS’03), Washington, DC, USA, 21–24 August 2003; pp. 9–16.

- McAuliffe, J.D.; Blei, D.M. Supervised Topic Models. In Proceedings of the Twenty-First Annual Conference on Neural Information Processing Systems (NIPS’07), Vancouver, BC, Canada, 3–6 December 2007; pp. 121–128.

- Wang, H.; Huang, M.; Zhu, X. A Generative Probabilistic Model for Multi-label Classification. In Proceedings of the 2008 Eighth IEEE International Conference on Data Mining (ICDM’08), Washington, DC, USA, 15–19 December 2008; pp. 628–637.

- Rubin, T.N.; Chambers, A.; Smyth, P.; Steyvers, M. Statistical Topic Models for Multi-label Document Classification. Mach. Learn. 2012, 88, 157–208.

- Cherman, E.A.; Monard, M.C.; Metz, J. Multi-label Problem Transformation Methods: A Case Study. CLEI Electron. J. 2011, 14, 4.

- Tsoumakas, G.; Katakis, I.; Vlahavas, I. Random k-Labelsets for Multilabel Classification. IEEE Trans. Knowl. Data Eng. 2011, 23, 1079–1089.

- Elisseeff, A.; Weston, J. A Kernel Method for Multi-labelled Classification. In Proceedings of the 14th International Conference on Neural Information Processing Systems: Natural and Synthetic (NIPS’01), Vancouver, BC, Canada, 3–8 December 2001; pp. 681–687.

- Zhang, M.L.; Zhou, Z.H. A Review on Multi-Label Learning Algorithms. IEEE Trans. Knowl. Data Eng. 2014, 26, 1819–1837.

- McCallum, A.K. Multi-label text classification with a mixture model trained by EM. In Proceedings of the AAAI 99 Workshop on Text Learning, Orlando, FL, USA, 18–22 July 1999.

- Ueda, N.; Saito, K. Parametric mixture models for multi-labeled text. In Proceedings of the Neural Information Processing Systems 15 (NIPS’02), Vancouver, BC, Canada, 9–14 December 2002; pp. 721–728.

- Soleimani, H.; Miller, D.J. Semi-supervised Multi-Label Topic Models for Document Classification and Sentence Labeling. In Proceedings of the 25th ACM International on Conference on Information and Knowledge Management (CIKM’16), Indianapolis, IN, USA, 24–28 October 2016; pp. 105–114.

- Raykar, V.C.; Yu, S.; Zhao, L.H.; Valadez, G.H.; Florin, C.; Bogoni, L.; Moy, L. Learning From Crowds. J. Mach. Learn. Res. 2010, 11, 1297–1322.

- Bragg, J.; Mausam; Weld, D.S. Crowdsourcing Multi-Label Classification for Taxonomy Creation. In Proceedings of the First AAAI Conference on Human Computation and Crowdsourcing (HCOMP), Palm Springs, CA, USA, 7–9 November 2013.

- Deng, J.; Russakovsky, O.; Krause, J.; Bernstein, M.S.; Berg, A.; Fei-Fei, L. Scalable Multi-label Annotation. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI’14), Toronto, ON, Canada, 26 April–1 May 2014; pp. 3099–3102.

- Duan, L.; Oyama, S.; Sato, H.; Kurihara, M. Leveraging Crowdsourcing to Make Models in Multi-label Domains Interoperable. Available online: http://hokkaido.ipsj.or.jp/info2014/papers/20/Duan_INFO.pdf (accessed on 4 May 2017).

- Rodrigues, F.; Ribeiro, B.; Lourenço, M.; Pereira, F. Learning Supervised Topic Models from Crowds. In Proceedings of the Third AAAI Conference on Human Computation and Crowdsourcing (HCOMP), San Diego, CA, USA, 8–11 November 2015.

- Ramage, D.; Manning, C.D.; Dumais, S. Partially Labeled Topic Models for Interpretable Text Mining. In Proceedings of the 17th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD’11), San Diego, CA, USA, 21–24 August 2011; pp. 457–465.

- Bishop, C. Pattern Recognition and Machine Learning; Springer: New York, NY, USA, 2006.

- ML-PA-LDA-MNS Overview. Available online: https://bitbucket.org/divs1202/ml-pa-lda-mns (accessed on 4 May 2017).

- Lichman, M. UCI Machine Learning Repository; University of California, Irvine: Irvine, CA, USA, 2013.

- Porter, M.F. An algorithm for suffix stripping. Program 1980, 14, 130–137.

- Katakis, I.; Tsoumakas, G.; Vlahavas, I. Multilabel text classification for automated tag suggestion. ECML PKDD Discov. Chall. 2008, 75–83.

- Tsoumakas, G.; Spyromitros-Xioufis, E.; Vilcek, J.; Vlahavas, I. Mulan: A Java Library for Multi-Label Learning. J. Mach. Learn. Res. 2011, 12, 2411–2414.

- Tsoumakas, G.; Katakis, I.; Vlahavas, I. Effective and Efficient Multilabel Classification in Domains with Large Number of Labels. In Proceedings of the ECML/PKDD 2008 Workshop on Mining Multidimensional Data (MMD’08), Atlanta, GA, USA, 24–26 April 2008; pp. 30–44.

- Boutell, M.R.; Luo, J.; Shen, X.; Brown, C.M. Learning multi-label scene classification. Pattern Recognit. 2004, 37, 1757–1771.

- Gibaja, E.; Ventura, S. A Tutorial on Multilabel Learning. ACM Comput. Surv. 2015, 47.

- Read, J.; Martino, L.; Luengo, D. Efficient Monte Carlo optimization for multi-label classifier chains. In Proceedings of the 2013 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Vancouver, BC, Canada, 26–31 May 2013; pp. 3457–3461.

- Read, J.; Pfahringer, B.; Holmes, G.; Frank, E. Classifier Chains for Multi-label Classification. Mach. Learn. 2011, 85, 333–359.

- Zaragoza, J.H.; Sucar, L.E.; Morales, E.F.; Bielza, C.; Larrañaga, P. Bayesian Chain Classifiers for Multidimensional Classification. In Proceedings of the Twenty-Second International Joint Conference on Artificial Intelligence (IJCAI’11), Barcelona, Spain, 16–22 July 2011; Volume 3, pp. 2192–2197.

- MEKA: A Multi-label Extension to WEKA. Available online: http://meka.sourceforge.net (accessed on 4 May 2017).

- Blumer, A.; Ehrenfeucht, A.; Haussler, D.; Warmuth, M.K. Occam’s Razor. Inf. Process. Lett. 1987, 24, 377–380.

- Cochran, W.G. The Efficiencies of the Binomial Series Tests of Significance of a Mean and of a Correlation Coefficient. J. R. Stat. Soc. 1937, 100, 69–73.

- Rohatgi, V.K.; Saleh, A.M.E. An Introduction to Probability and Statistics; John Wiley & Sons: New York, NY, USA, 2015.

| Dataset | RAKel (J48) | MCC | BRq | BCC | SLDA | ML-PA-LDA | ML-PA-LDA-MNS |
|---|---|---|---|---|---|---|---|
| Reuters | 0.881 | 0.876 | 0.863 | 0.867 | 0.897 | 0.969 | 0.942 |
| Bibtex | 0.293 | 0.290 | 0.309 | 0.299 | 0.984 | 0.984 | 0.981 |
| Enron | 0.402 | 0.389 | 0.430 | 0.411 | 0.937 | 0.939 | 0.938 |
| Delicious | 0.316 | 0.307 | 0.338 | 0.345 | 0.799 | 0.804 | 0.803 |
| Scene | 0.577 | 0.580 | 0.550 | 0.594 | 0.823 | 0.823 | 0.818 |
| Yeast | 0.415 | 0.432 | 0.462 | 0.413 | 0.767 | 0.767 | 0.767 |

| % of Training Set Used | ML-PA-LDA Avg Accuracy | ML-PA-LDA Avg Micro-F1 | ML-PA-LDA-MNS Avg Accuracy | ML-PA-LDA-MNS Avg Micro-F1 | Annotator RMSE |
|---|---|---|---|---|---|
| 10 | 0.949 | 0.762 | 0.927 | 0.616 | 0.023 |
| 30 | 0.953 | 0.784 | 0.930 | 0.619 | 0.014 |
| 50 | 0.955 | 0.787 | 0.936 | 0.629 | 0.011 |
| 70 | 0.961 | 0.828 | 0.937 | 0.650 | 0.010 |
| 100 | 0.969 | 0.829 | 0.942 | 0.669 | 0.009 |
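The tables report average accuracy and micro-F1. As a hedged sketch of how such scores are commonly computed for multi-label predictions stored as binary document-by-class matrices: micro-F1 pools true positives, false positives and false negatives over all (document, class) pairs before computing F1 = 2TP / (2TP + FP + FN), while the accuracy below is the fraction of correct binary label decisions (one minus the Hamming loss). That accuracy definition is an assumption; multi-label accuracy is also sometimes defined as the Jaccard overlap between predicted and true label sets, and this section does not state which variant the tables use. The function names are hypothetical.

```python
def hamming_accuracy(y_true, y_pred):
    """Fraction of (document, class) decisions that are correct (assumed accuracy definition)."""
    total = correct = 0
    for t_row, p_row in zip(y_true, y_pred):
        for t, p in zip(t_row, p_row):
            correct += int(t == p)
            total += 1
    return correct / total


def micro_f1(y_true, y_pred):
    """Micro-averaged F1: pool TP, FP and FN over all (document, class) pairs."""
    tp = fp = fn = 0
    for t_row, p_row in zip(y_true, y_pred):
        for t, p in zip(t_row, p_row):
            tp += int(t == 1 and p == 1)
            fp += int(t == 0 and p == 1)
            fn += int(t == 1 and p == 0)
    denom = 2 * tp + fp + fn
    # Convention chosen here: 0.0 when there are no positive labels or predictions at all.
    return 2 * tp / denom if denom else 0.0


if __name__ == "__main__":
    y_true = [[1, 0, 0, 0], [0, 1, 0, 0]]
    y_pred = [[1, 0, 0, 0], [0, 0, 1, 0]]
    print("accuracy:", hamming_accuracy(y_true, y_pred))  # 6 of 8 decisions correct
    print("micro-F1:", micro_f1(y_true, y_pred))          # TP=1, FP=1, FN=1
```

In the example, accuracy (0.75) exceeds micro-F1 (0.5) because correctly predicted absent labels count toward accuracy but not toward micro-F1.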

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Padmanabhan, D.; Bhat, S.; Shevade, S.; Narahari, Y. Multi-Label Classification from Multiple Noisy Sources Using Topic Models. Information 2017, 8, 52. https://doi.org/10.3390/info8020052
