A Practical Point Cloud Based Road Curb Detection Method for Autonomous Vehicle

Abstract

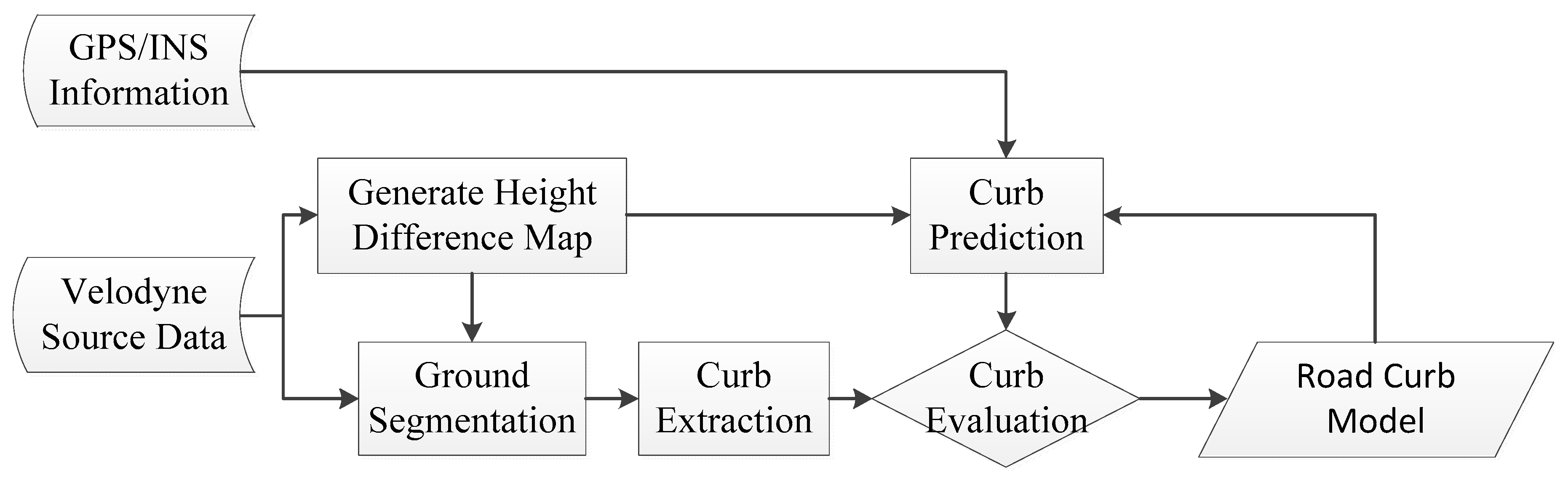

1. Introduction

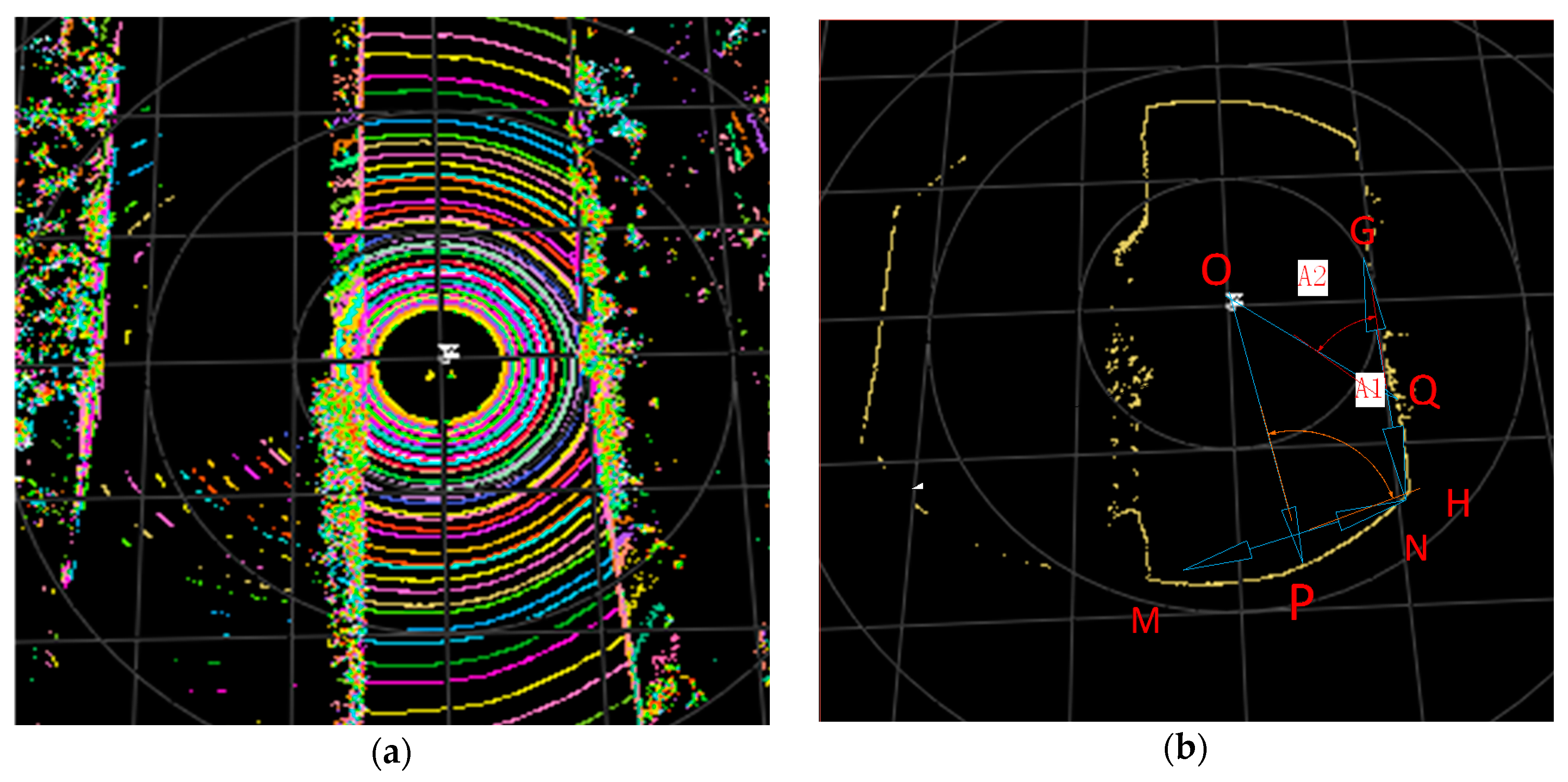

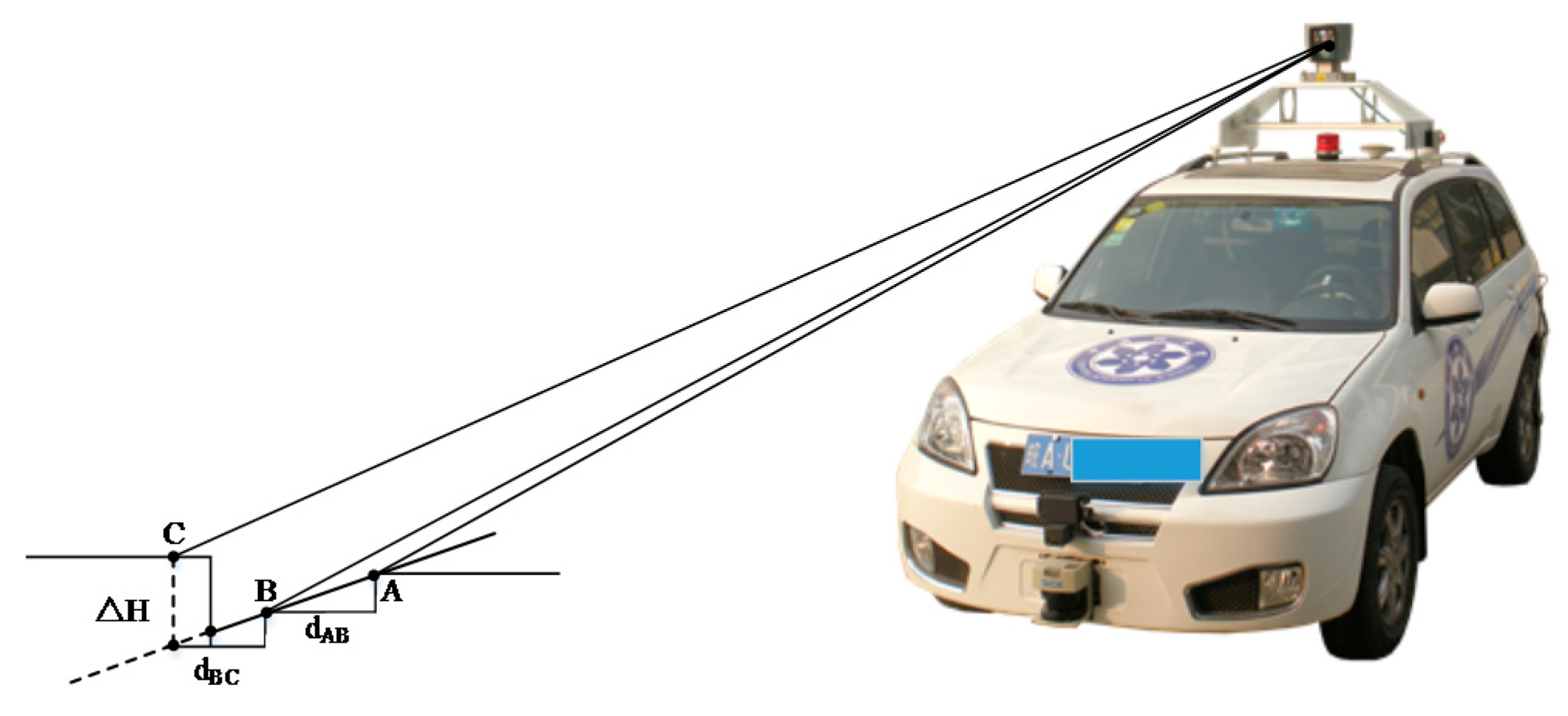

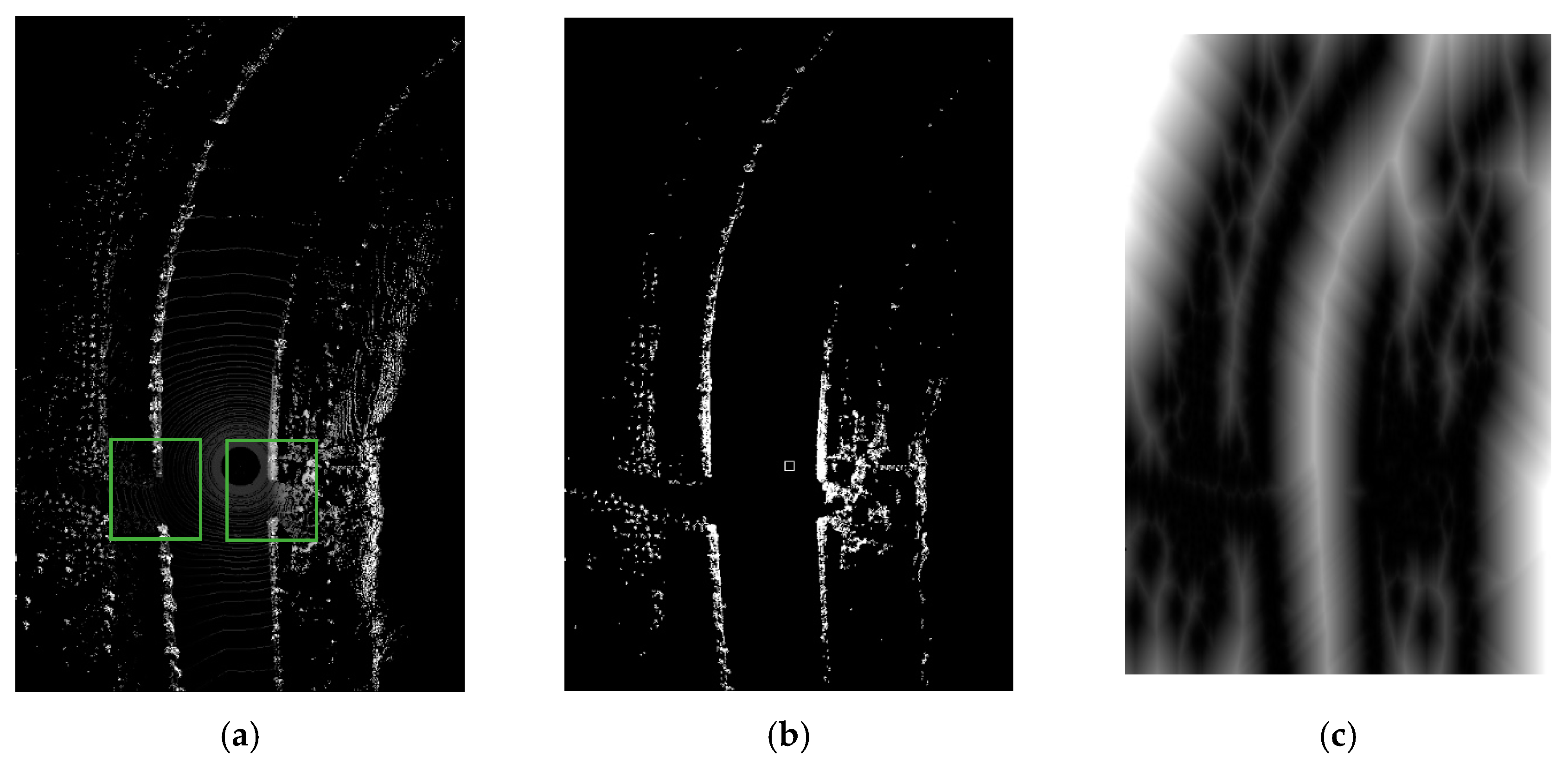

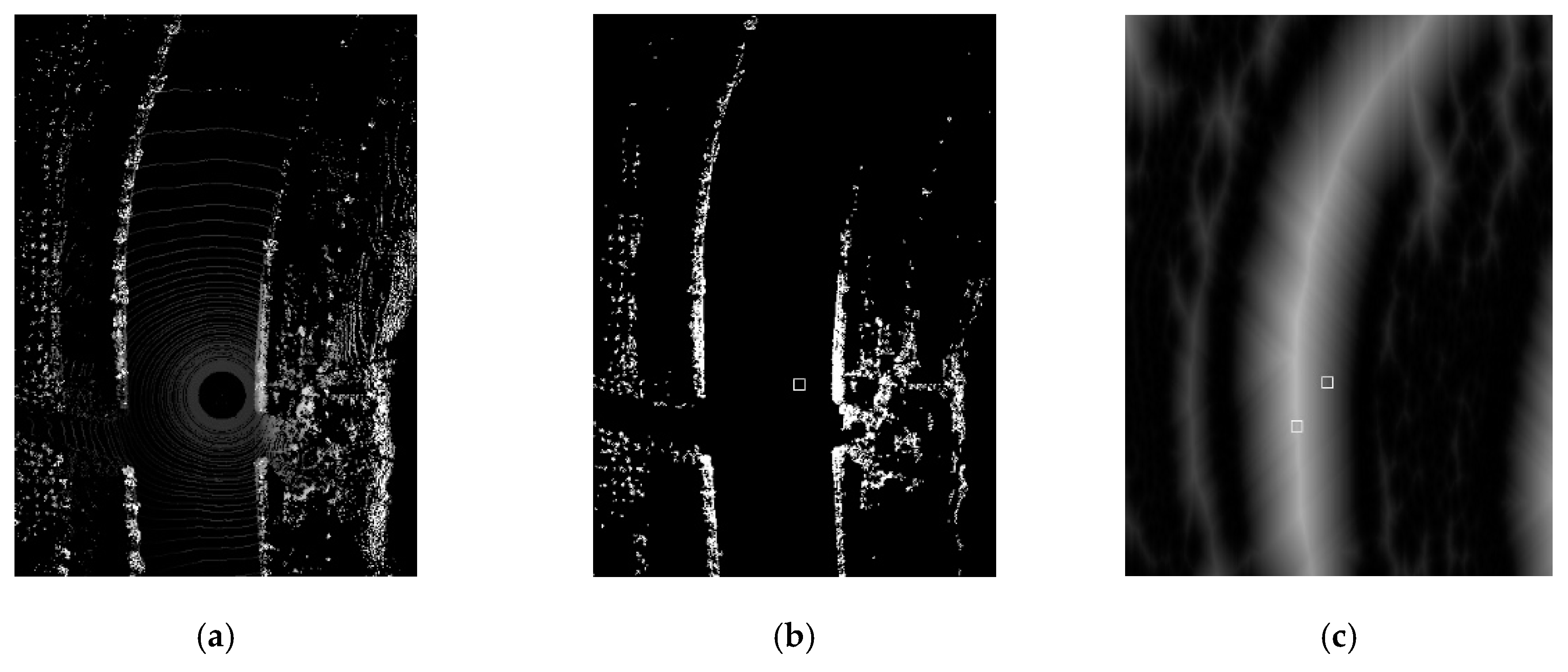

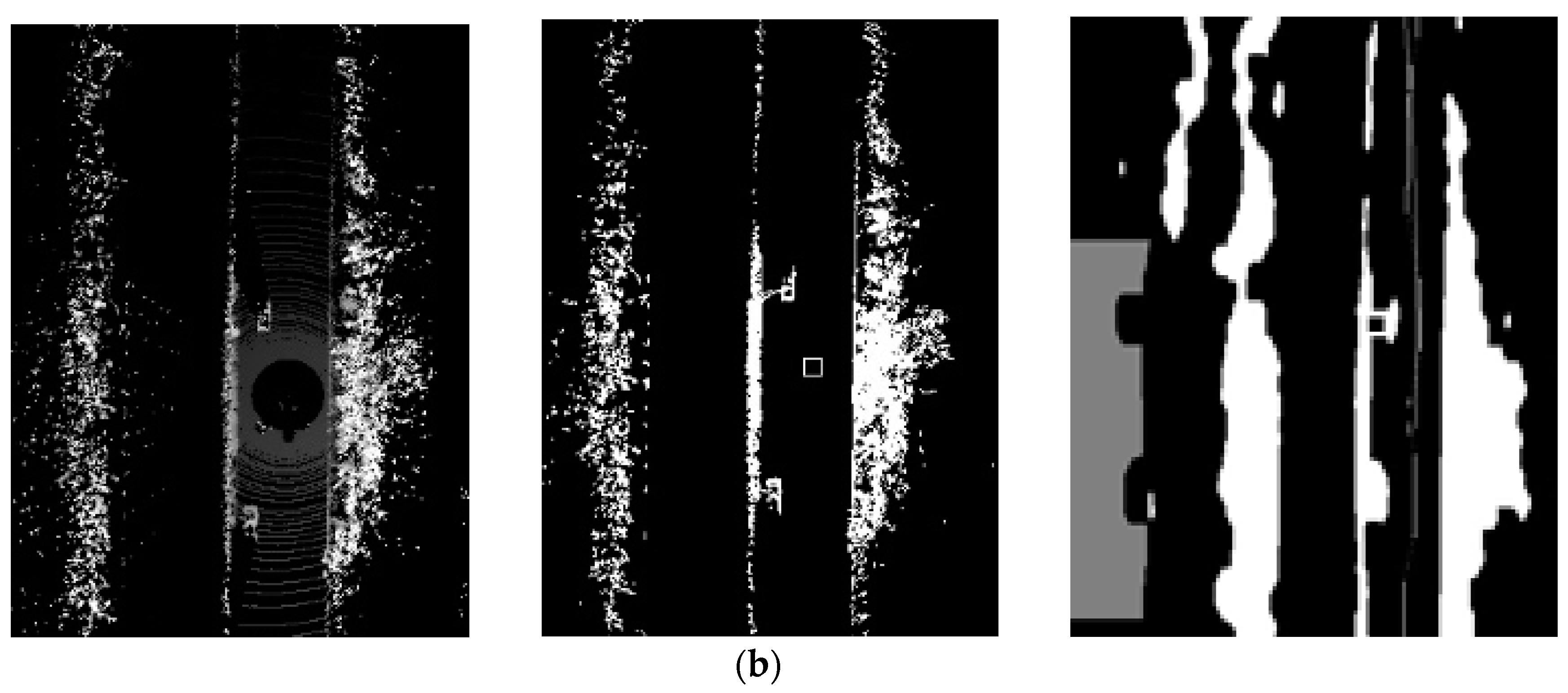

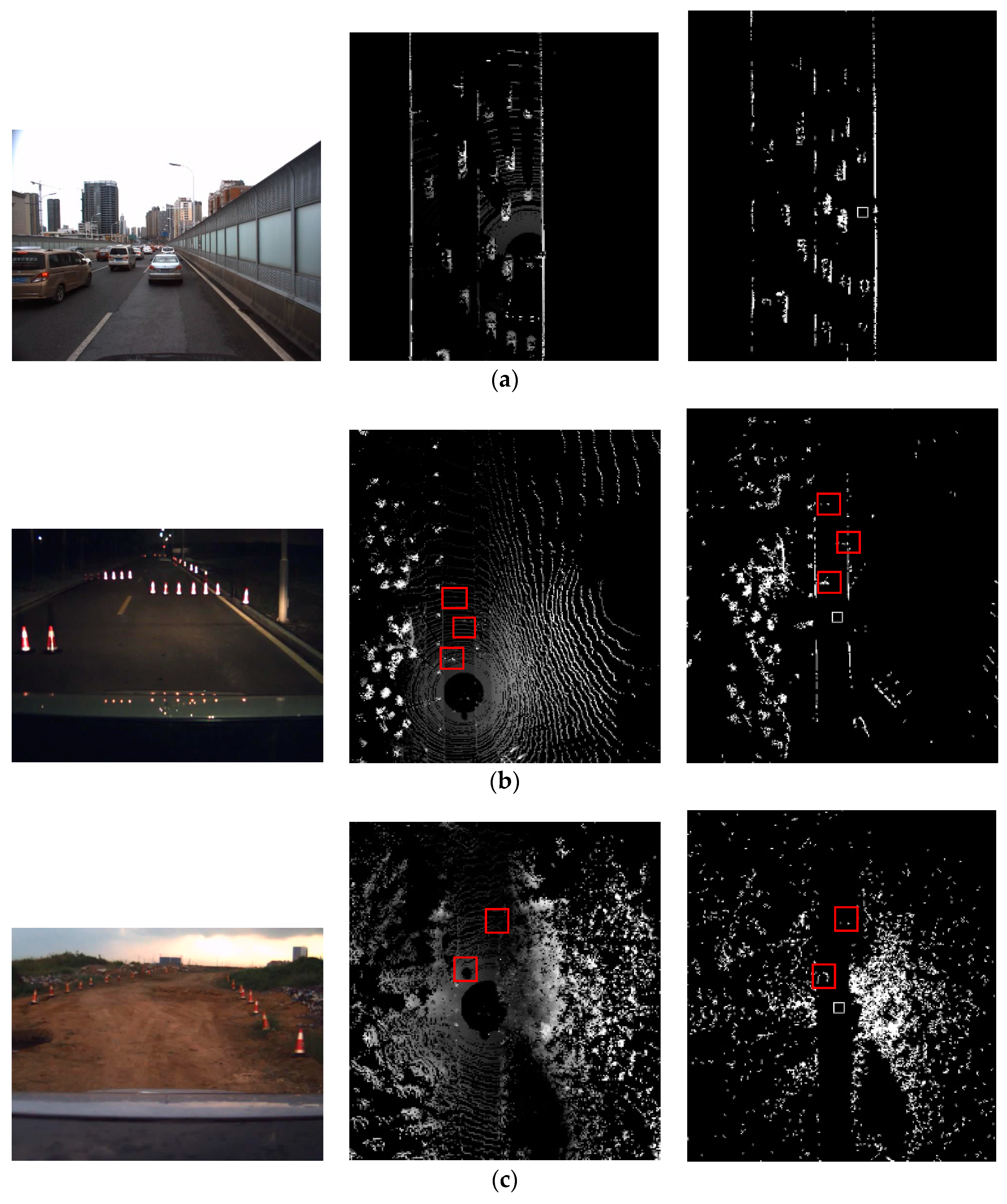

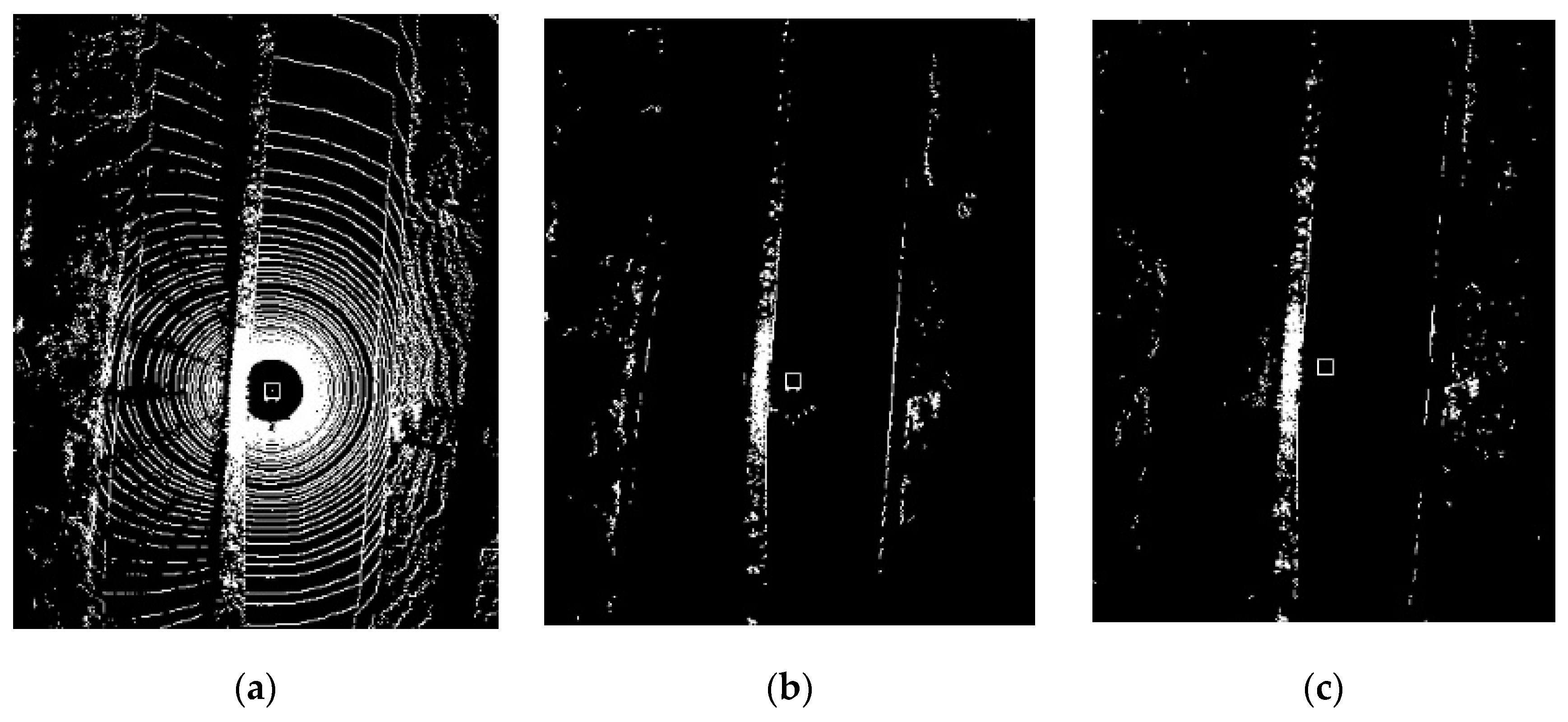

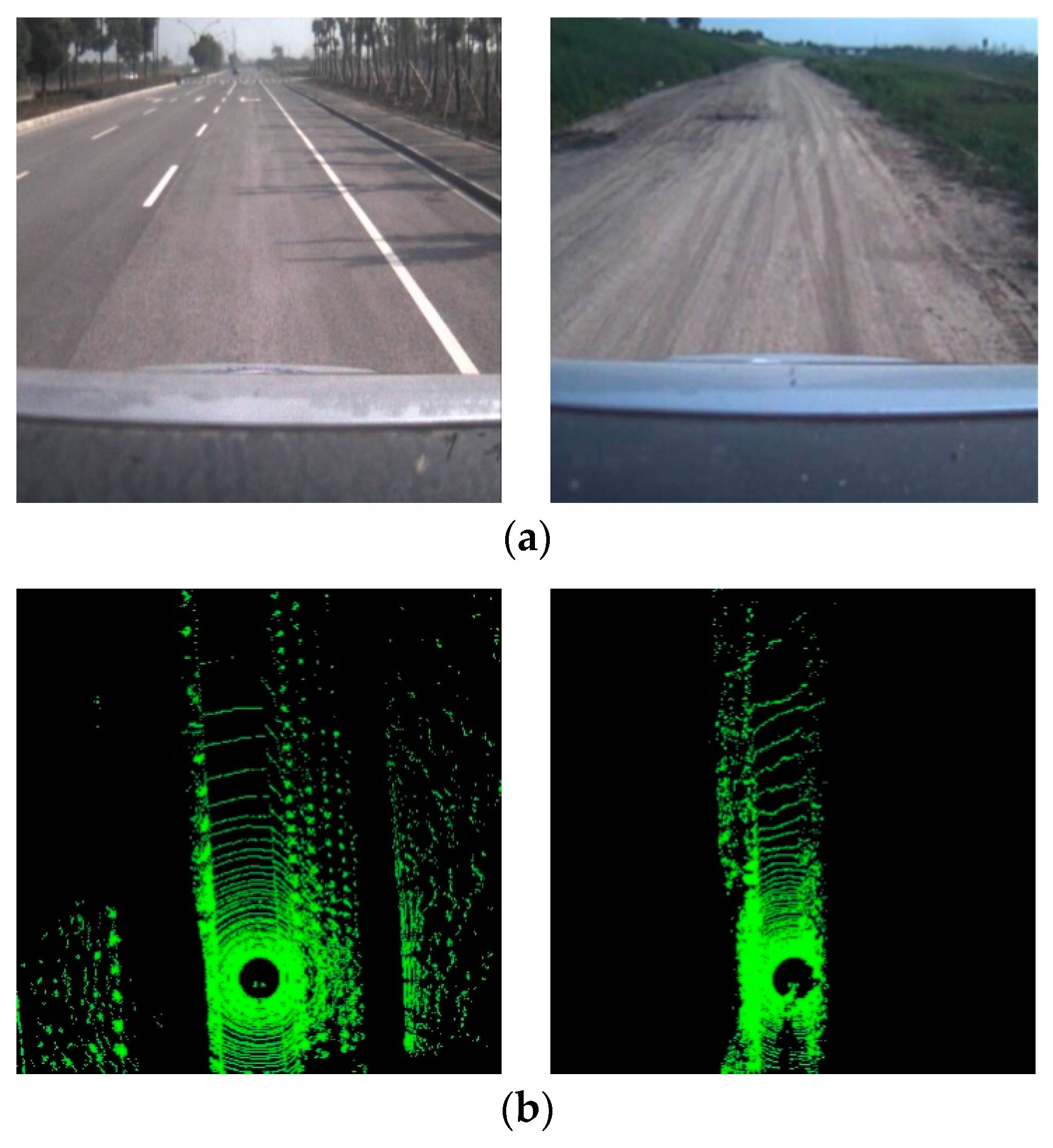

2. Ground Segmentation

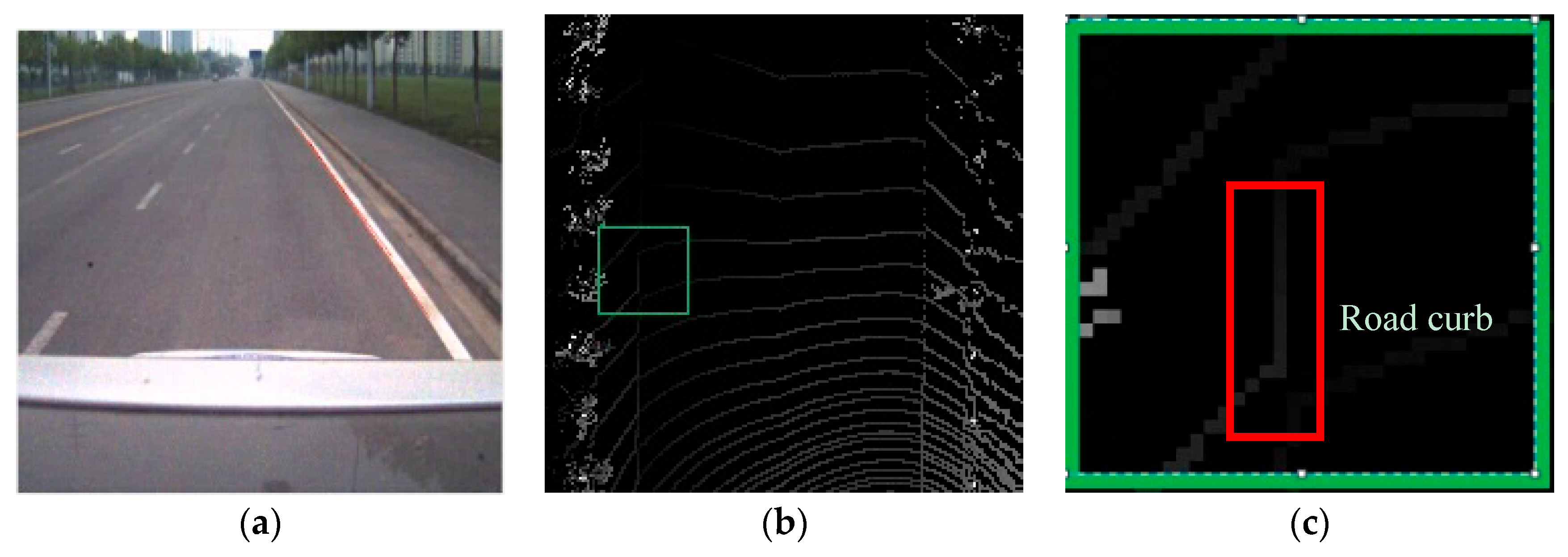

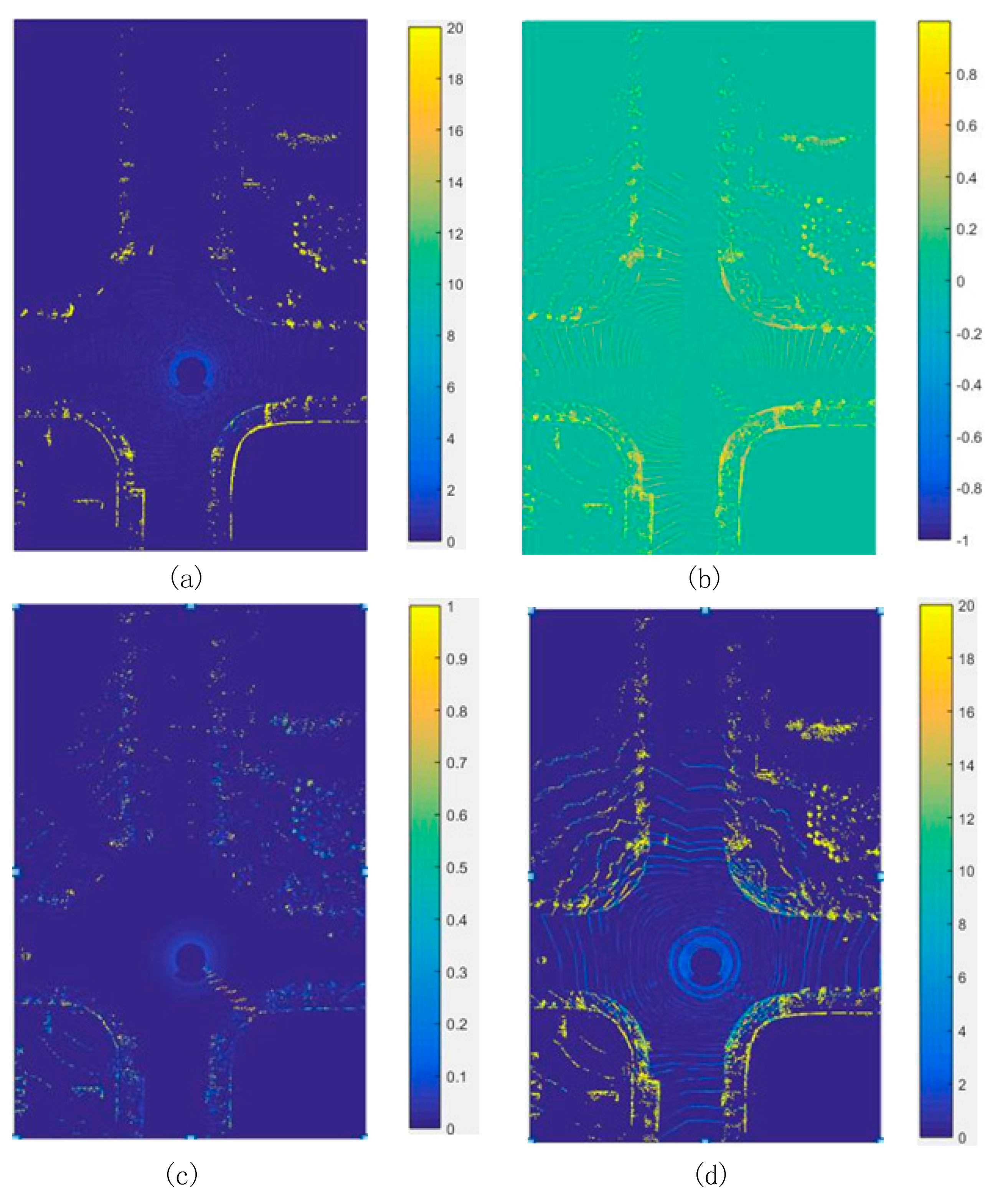

2.1. Maximum Height Difference in One Grid (MaxHD)

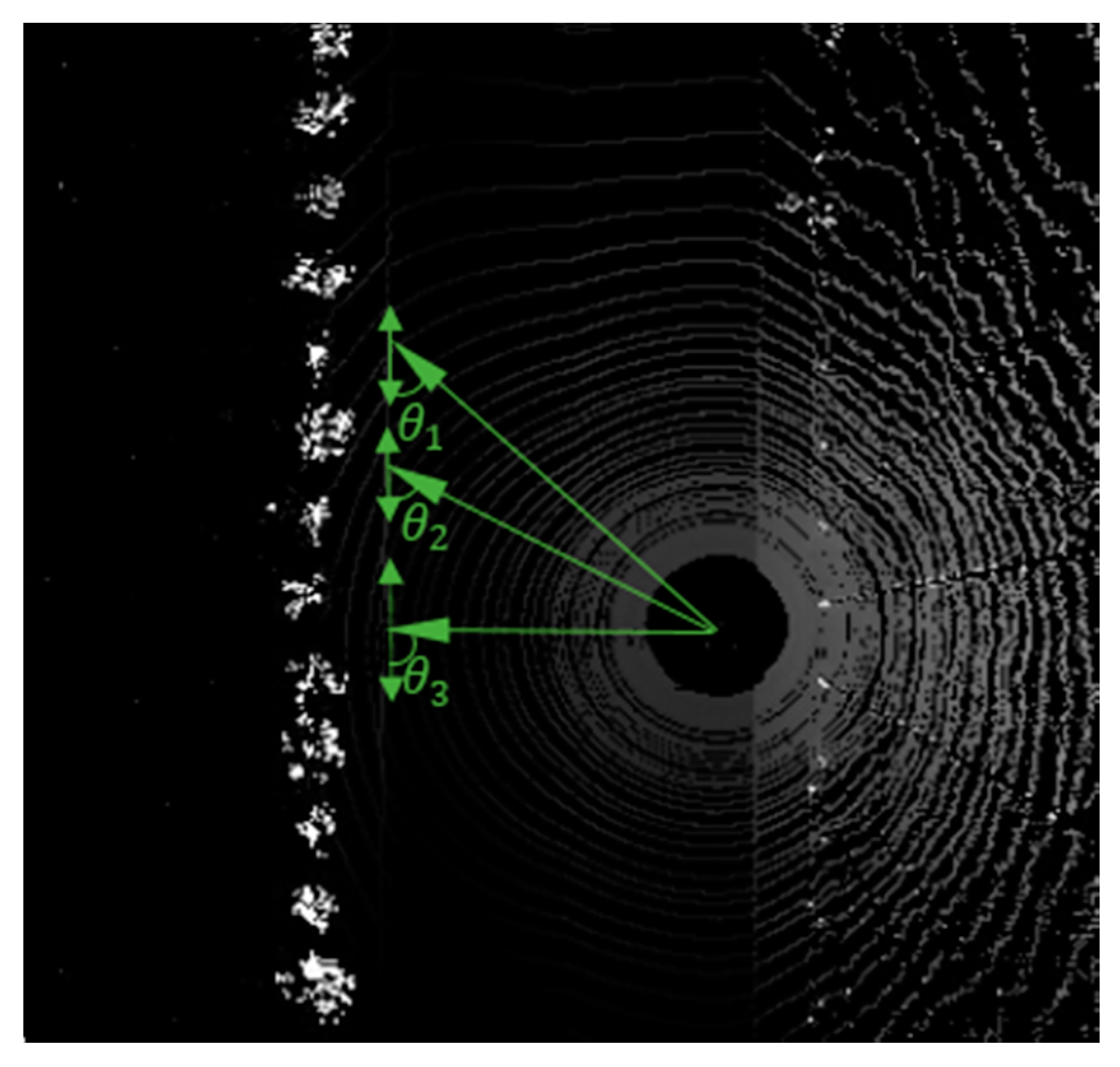
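The heading describes MaxHD as the maximum height difference among the points that fall into one grid cell, and the feature table gives its threshold as more than 3 cm. The following minimal Python sketch shows that computation. The grid resolution `cell_size` and the function name are hypothetical. Treating flagged cells as non-ground is an assumption, and the table's beam-range scope is not applied here.

```python
import math


def max_height_difference_cells(points, cell_size=0.2, threshold=0.03):
    """Return grid cells whose height spread (max z - min z) exceeds `threshold`.

    points: iterable of (x, y, z) in metres.
    cell_size: hypothetical grid resolution in metres (not given in this section).
    threshold: 0.03 m, i.e. the "> 3 cm" MaxHD threshold from the feature table.
    """
    z_range = {}  # (ix, iy) -> [min z, max z]
    for x, y, z in points:
        key = (math.floor(x / cell_size), math.floor(y / cell_size))
        if key in z_range:
            lo_hi = z_range[key]
            lo_hi[0] = min(lo_hi[0], z)
            lo_hi[1] = max(lo_hi[1], z)
        else:
            z_range[key] = [z, z]
    # Cells with a spread above the threshold are treated as non-ground candidates.
    return {key for key, (lo, hi) in z_range.items() if hi - lo > threshold}
```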

2.2. Tangential Angle Feature (TangenAngle)

2.3. Change in Distance between Neighboring Points in One Spin (RadiusRatio)
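The heading indicates that RadiusRatio compares the distances of neighbouring points within one spin of a laser ring. The feature table accepts ratios in [0.9, 0.95] or [1.05, 1.1]. The sketch below makes three assumptions: "distance" means horizontal distance from the sensor, a point is flagged when its ratio to the previous point falls in either band, and the function name is illustrative.

```python
import math

# Acceptance bands for the ratio, from the RadiusRatio row of the feature table.
BANDS = ((0.9, 0.95), (1.05, 1.1))


def radius_ratio_flags(ring):
    """Flag points whose horizontal range changes abruptly relative to the previous point.

    ring: list of (x, y, z) points from one laser ring, ordered by azimuth (one spin).
    Returns a list of booleans, one per point; the first point is never flagged.
    """
    r = [math.hypot(x, y) for x, y, _ in ring]
    flags = [False] * len(ring)
    for k in range(1, len(ring)):
        if r[k - 1] == 0:
            continue  # no valid return for the previous point
        ratio = r[k] / r[k - 1]
        flags[k] = any(lo <= ratio <= hi for lo, hi in BANDS)
    return flags
```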

2.4. Height Difference Based on the Prediction of the Radial Gradient (HDInRadius)
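The heading suggests predicting a point's height from the radial gradient of the points inside it along the same direction, then measuring how far the measured height deviates. The feature table uses a 5 cm threshold. The following is a sketch that assumes the prediction is a linear extrapolation from two inner beams at the same azimuth. That interpretation and the function name are assumptions.

```python
import math


def hd_in_radius(inner, middle, outer, threshold=0.05):
    """Compare the outer point's height with a linear radial-gradient prediction.

    inner, middle, outer: (x, y, z) points in metres on successive beams,
    assumed to share one azimuth and to be ordered by increasing range.
    threshold: 0.05 m, the "> 5 cm" HDInRadius threshold from the feature table.
    Returns (residual, exceeds_threshold).
    """
    r0 = math.hypot(inner[0], inner[1])
    r1 = math.hypot(middle[0], middle[1])
    r2 = math.hypot(outer[0], outer[1])
    if r1 == r0:
        return 0.0, False  # degenerate geometry: no gradient can be estimated
    gradient = (middle[2] - inner[2]) / (r1 - r0)   # dz/dr between the inner beams
    z_predicted = middle[2] + gradient * (r2 - r1)  # extrapolate to the outer beam
    residual = outer[2] - z_predicted
    return residual, abs(residual) > threshold
```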

2.5. Selection of the Threshold and Scope for Each Feature

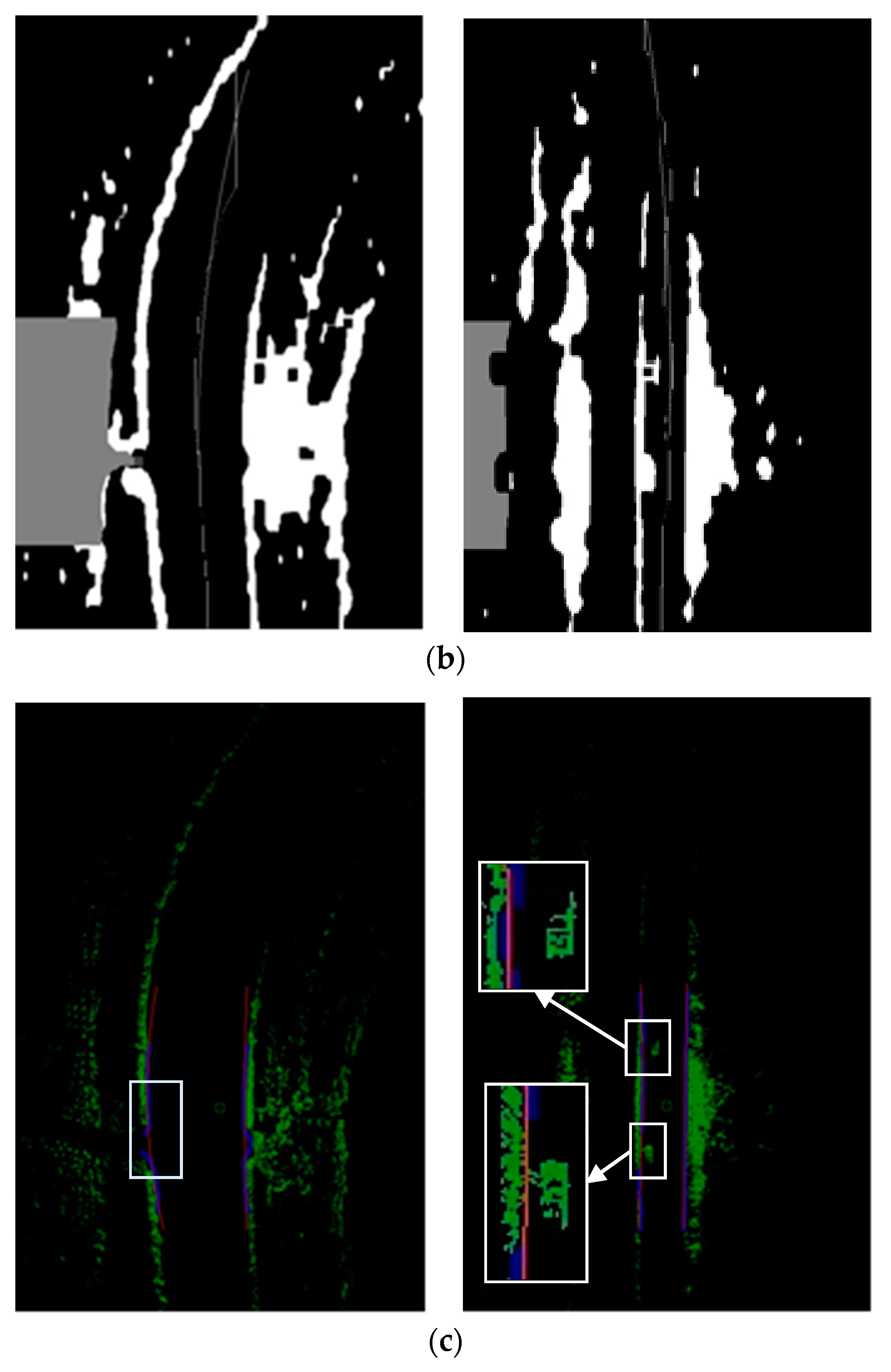

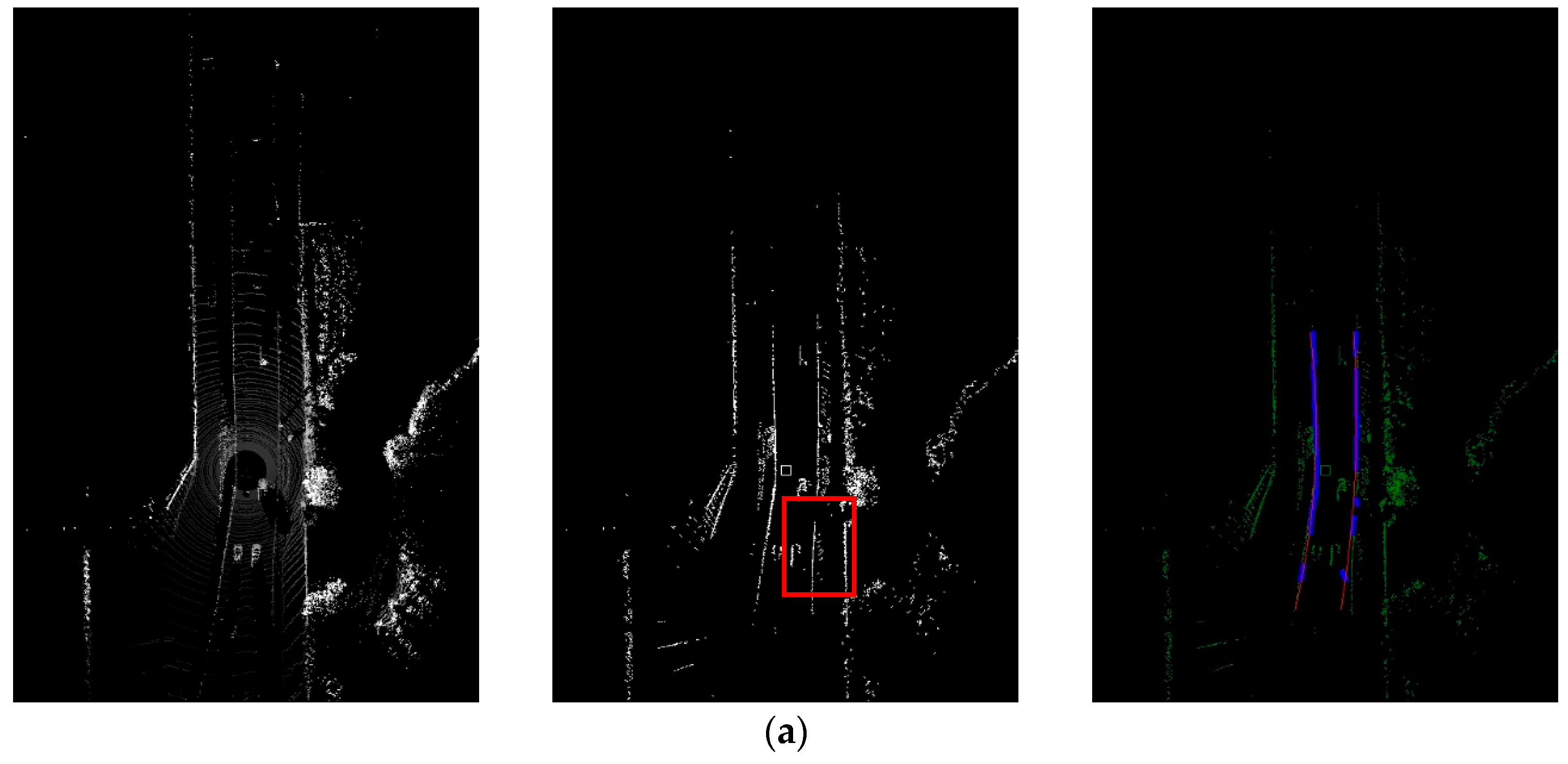

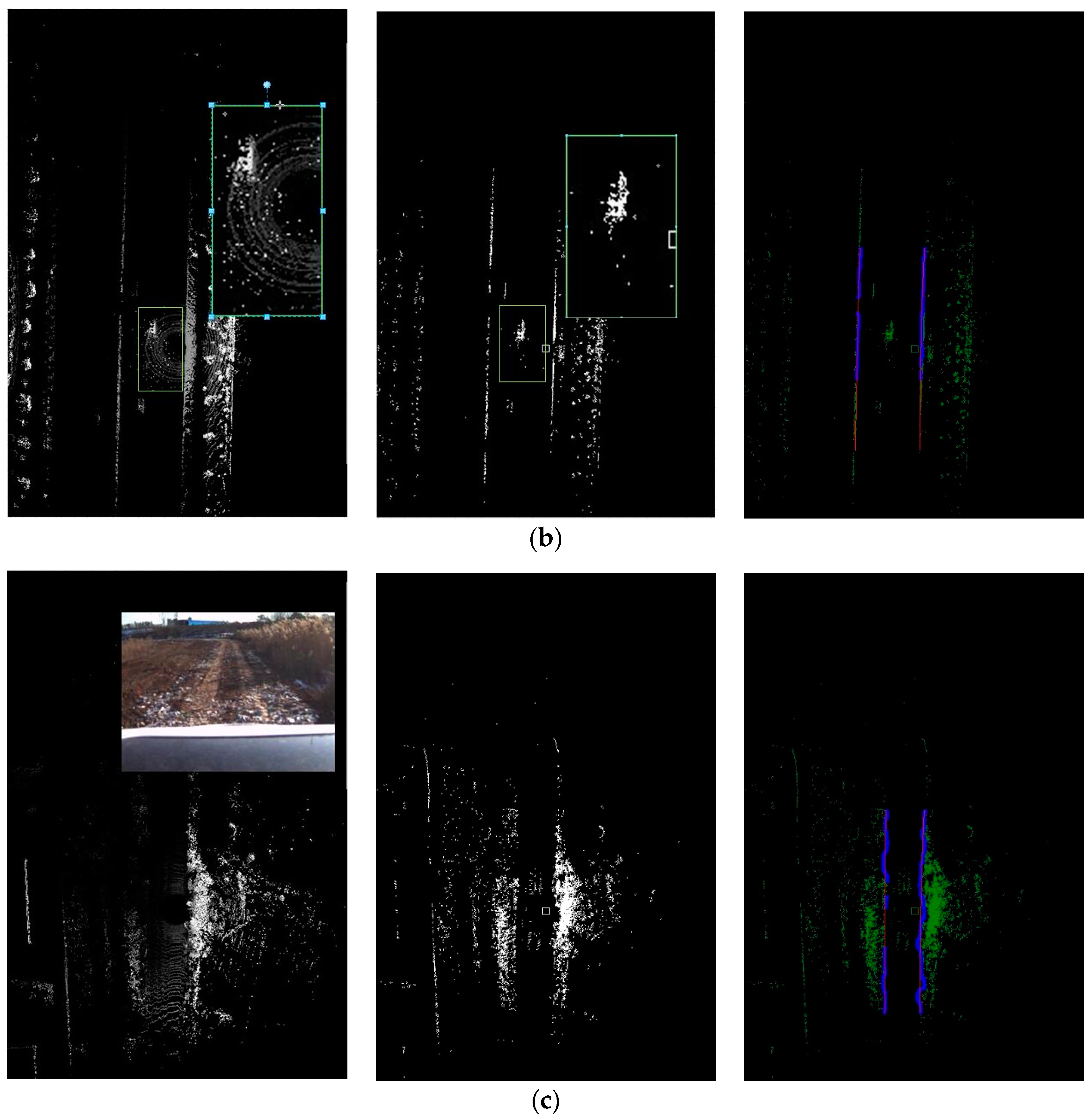
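The thresholds and scopes listed in the feature table (Beam Range, Rotation Range, Threshold) can be stored in a small lookup structure so that each feature votes only inside its own scope. In this sketch, "All" becomes `None`. The beam value is compared in the unit of the table's Beam Range column, and the rotation value is assumed to be an index within one spin. The rule layout and names are illustrative, not the authors' code.

```python
# Each rule: (beam_range, rotation_ranges, accept). None stands for "All".
FEATURE_RULES = {
    "MaxHD": ((0, 45), None, lambda v: v > 0.03),  # metres: > 3 cm
    "TangenAngle": (None, [(0, 300), (600, 1200), (1200, 1800)], lambda v: v > 0.6),
    "RadiusRatio": ((0, 50), None, lambda v: 0.9 <= v <= 0.95 or 1.05 <= v <= 1.1),
    "HDInRadius": (None, None, lambda v: v > 0.05),  # metres: > 5 cm
}


def feature_fires(name, beam, rotation, value):
    """Return True if feature `name` is in scope for this point and its value passes."""
    beam_range, rotation_ranges, accept = FEATURE_RULES[name]
    if beam_range is not None and not (beam_range[0] <= beam <= beam_range[1]):
        return False  # outside the feature's beam scope
    if rotation_ranges is not None and not any(
        lo <= rotation <= hi for lo, hi in rotation_ranges
    ):
        return False  # outside every listed rotation interval
    return accept(value)
```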

3. Road Curb Detection

3.1. Road Shape Evaluation

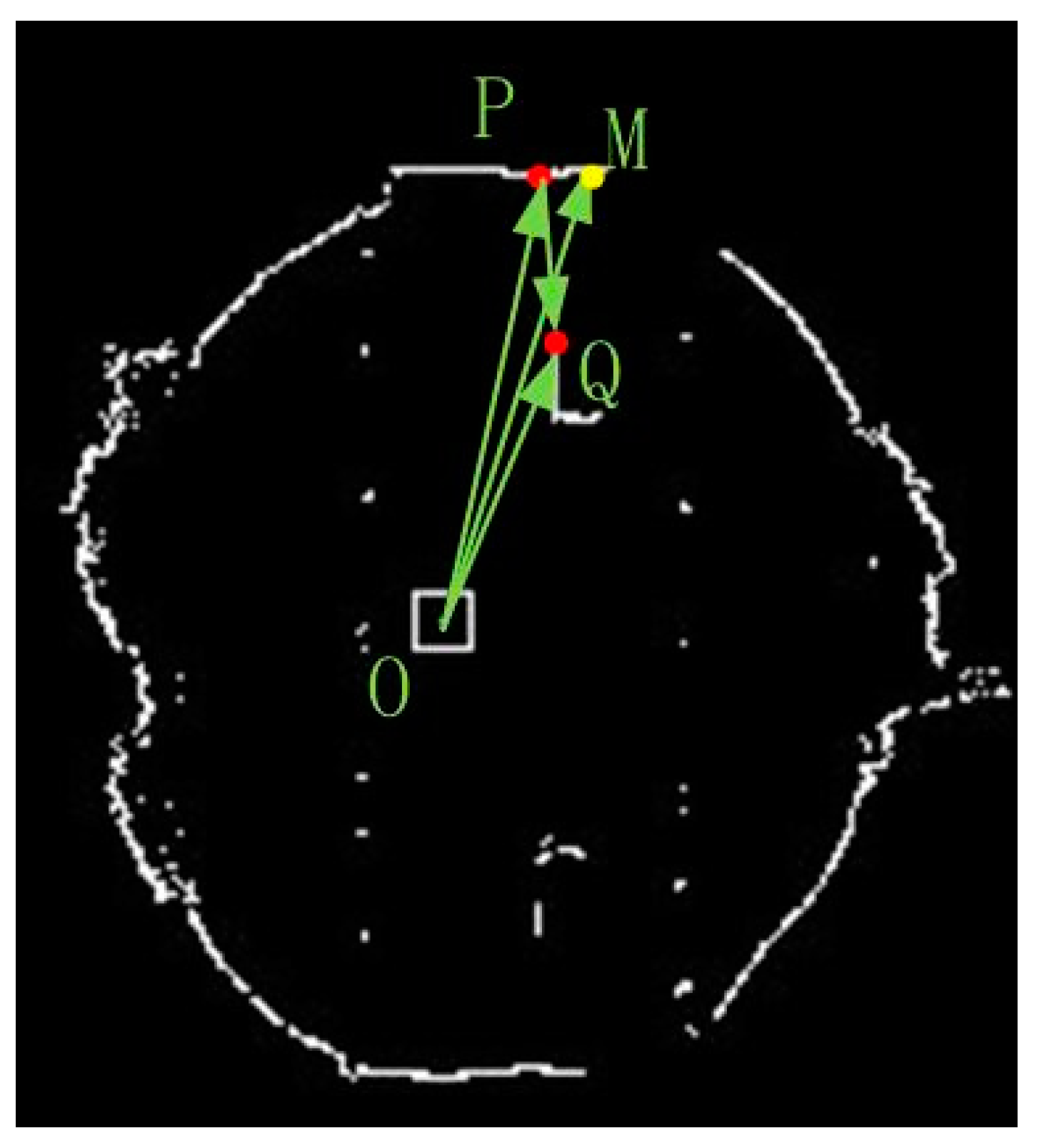

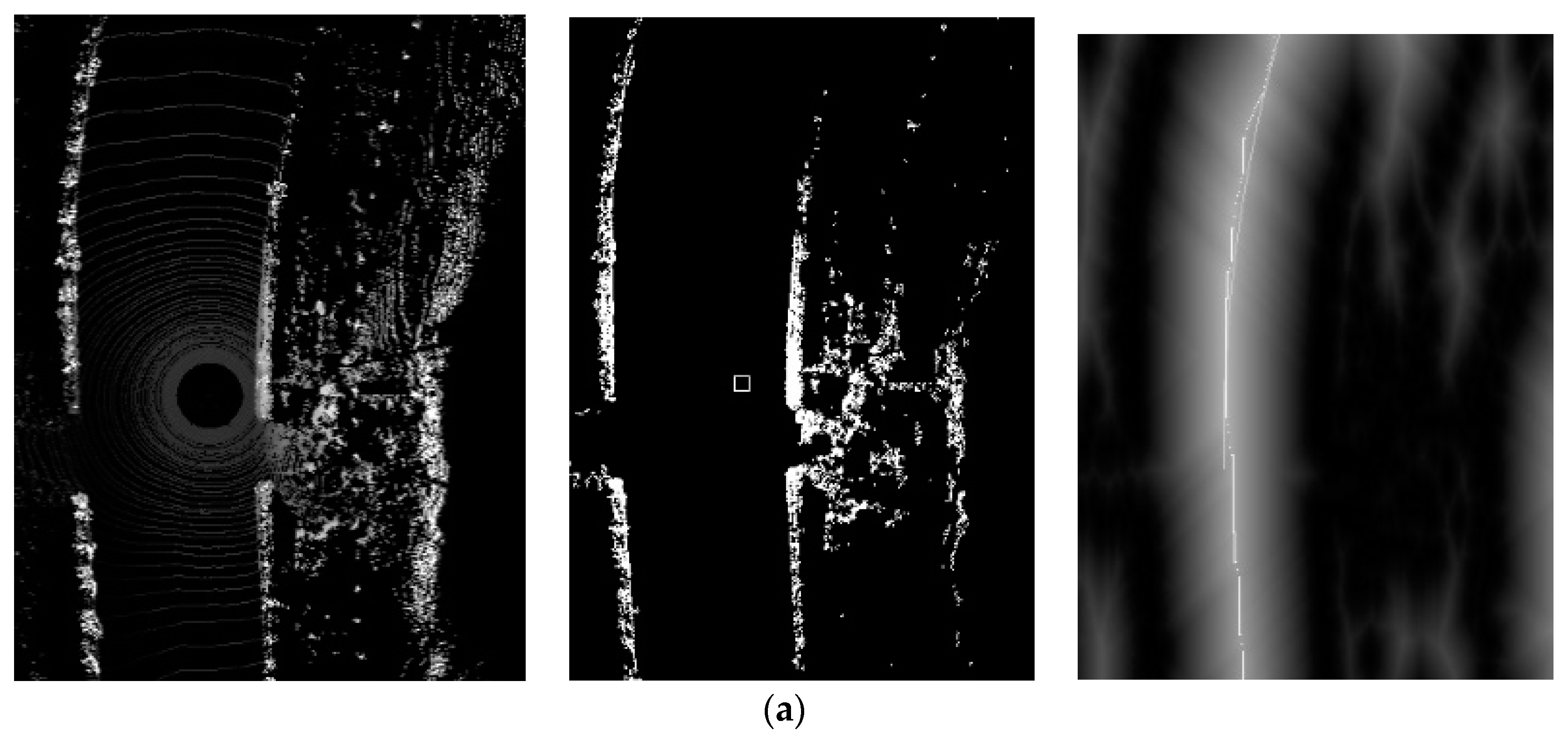

3.1.1. Centerline Extraction

| Algorithm 1. Distance Map Generation Process |
|---|
| Distance Transform Algorithm |
| Input: ObstacleMap |
| Input: MAP_WIDTH |
| Input: MAP_HEIGHT |
| Output: DistanceMap |
| Function Begin |
| DistanceMap ← PixelValueBinaryzation(ObstacleMap) |
| for j ← 1 to MAP_WIDTH − 2 |
| for i ← 1 to MAP_HEIGHT − 2 |
| DistanceMap[i + 1][j] = min(DistanceMap[i][j] + 1, DistanceMap[i + 1][j]) |
| DistanceMap[i + 1][j + 1] = min(DistanceMap[i][j] + 1, DistanceMap[i + 1][j + 1]) |
| DistanceMap[i][j + 1] = min(DistanceMap[i][j] + 1, DistanceMap[i][j + 1]) |
| DistanceMap[i − 1][j + 1] = min(DistanceMap[i][j] + 1, DistanceMap[i − 1][j + 1]) |
| end for |
| end for |
| for j ← MAP_WIDTH − 2 to 1 |
| for i ← MAP_HEIGHT − 2 to 1 |
| DistanceMap[i − 1][j] = min(DistanceMap[i][j] + 1, DistanceMap[i − 1][j]) |
| DistanceMap[i − 1][j − 1] = min(DistanceMap[i][j] + 1, DistanceMap[i − 1][j − 1]) |
| DistanceMap[i][j − 1] = min(DistanceMap[i][j] + 1, DistanceMap[i][j − 1]) |
| DistanceMap[i + 1][j − 1] = min(DistanceMap[i][j] + 1, DistanceMap[i + 1][j − 1]) |
| end for |
| end for |
| Function End |
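For experimentation, the two-pass distance transform of Algorithm 1 can be written as a runnable Python function. This sketch uses the equivalent "pull" form of the two-pass 8-neighbour (chessboard) distance transform and scans the whole map, including the border rows and columns. It assumes that binarization maps obstacle cells to 0 and free cells to infinity. The function name and the input encoding are assumptions.

```python
import math


def distance_map(obstacle_map):
    """Two-pass chessboard (8-neighbour, unit step) distance transform.

    obstacle_map: list of rows; truthy cells are obstacles (assumed encoding).
    Returns a grid of 8-connected step counts to the nearest obstacle
    (math.inf everywhere if the map contains no obstacle).
    """
    h = len(obstacle_map)
    w = len(obstacle_map[0]) if h else 0
    # Binarization: obstacles start at 0, free space at infinity.
    d = [[0 if obstacle_map[i][j] else math.inf for j in range(w)] for i in range(h)]

    # Forward pass (top-left to bottom-right): pull from already-visited neighbours.
    for i in range(h):
        for j in range(w):
            for di, dj in ((-1, -1), (-1, 0), (-1, 1), (0, -1)):
                ni, nj = i + di, j + dj
                if 0 <= ni < h and 0 <= nj < w:
                    d[i][j] = min(d[i][j], d[ni][nj] + 1)

    # Backward pass (bottom-right to top-left): the mirrored neighbourhood.
    for i in range(h - 1, -1, -1):
        for j in range(w - 1, -1, -1):
            for di, dj in ((1, 1), (1, 0), (1, -1), (0, 1)):
                ni, nj = i + di, j + dj
                if 0 <= ni < h and 0 <= nj < w:
                    d[i][j] = min(d[i][j], d[ni][nj] + 1)
    return d
```

With unit steps in all eight directions, two passes give the exact chessboard distance, so cells far from every obstacle (such as the middle of the drivable area) receive the largest values.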

3.1.2. Road Width Estimation

| Algorithm 2. Process for the Analysis of Width Distribution |
|---|
| RoadWidthAnalysis |
| RoadWidthCount ← {0} |
| maxWidthCount ← 0 |
| For y ← STARTY to ENDY |
| LeftWidth ← 0 |
| While ThreSegImg[y][x − LeftWidth] == 0 |
| LeftWidth++ |
| End While |
| RightWidth ← 0 |
| While ThreSegImg[y][x + RightWidth] == 0 |
| RightWidth++ |
| End While |
| RoadWidth[y] ← LeftWidth + RightWidth |
| Save the point (x, y) to WidthPoints[RoadWidth[y]/10] |
| RoadWidthCount[RoadWidth[y]/10]++ |
| If RoadWidthCount[RoadWidth[y]/10] > maxWidthCount |
| maxWidthCount ← RoadWidthCount[RoadWidth[y]/10] |
| MaxRoadWidthPortion ← RoadWidth[y]/10 |
| End If |
| End For |
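The width-histogram loop of Algorithm 2 can be sketched in Python as follows. The sketch assumes that `x` in the pseudocode is the centerline column for row `y`, supplied here through a hypothetical `centerline` dictionary. It adds bounds checks to the two scans and starts the right-hand scan one pixel to the right of `x`, so the centre pixel is counted once rather than twice (the pseudocode starts both scans at `x`).

```python
from collections import defaultdict

BIN_SIZE = 10  # width bin size, matching RoadWidth[y] / 10 in Algorithm 2


def analyse_road_width(seg_img, centerline):
    """Build the road-width histogram along the centerline.

    seg_img: 2-D list where 0 marks free road (the role of ThreSegImg).
    centerline: hypothetical dict {y: x} giving the centerline column per row.
    Returns (dominant_bin, counts, width_points).
    """
    counts = defaultdict(int)          # RoadWidthCount
    width_points = defaultdict(list)   # WidthPoints
    max_count, dominant_bin = 0, None  # maxWidthCount, MaxRoadWidthPortion
    for y, x in sorted(centerline.items()):
        row = seg_img[y]
        left = 0  # free pixels from x leftwards, x included
        while x - left >= 0 and row[x - left] == 0:
            left += 1
        right = 0  # free pixels strictly to the right of x
        while x + 1 + right < len(row) and row[x + 1 + right] == 0:
            right += 1
        b = (left + right) // BIN_SIZE
        width_points[b].append((x, y))
        counts[b] += 1
        if counts[b] > max_count:
            max_count, dominant_bin = counts[b], b
    return dominant_bin, dict(counts), dict(width_points)
```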

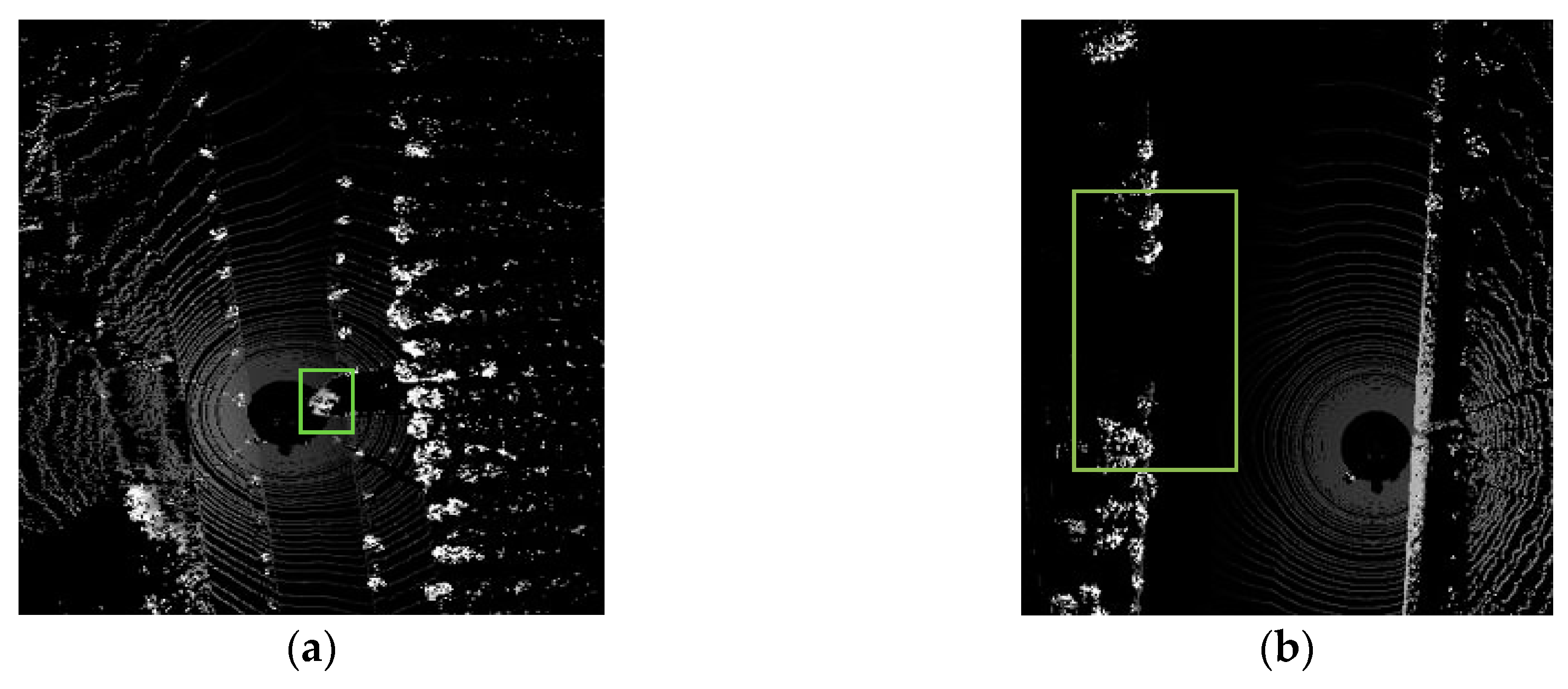

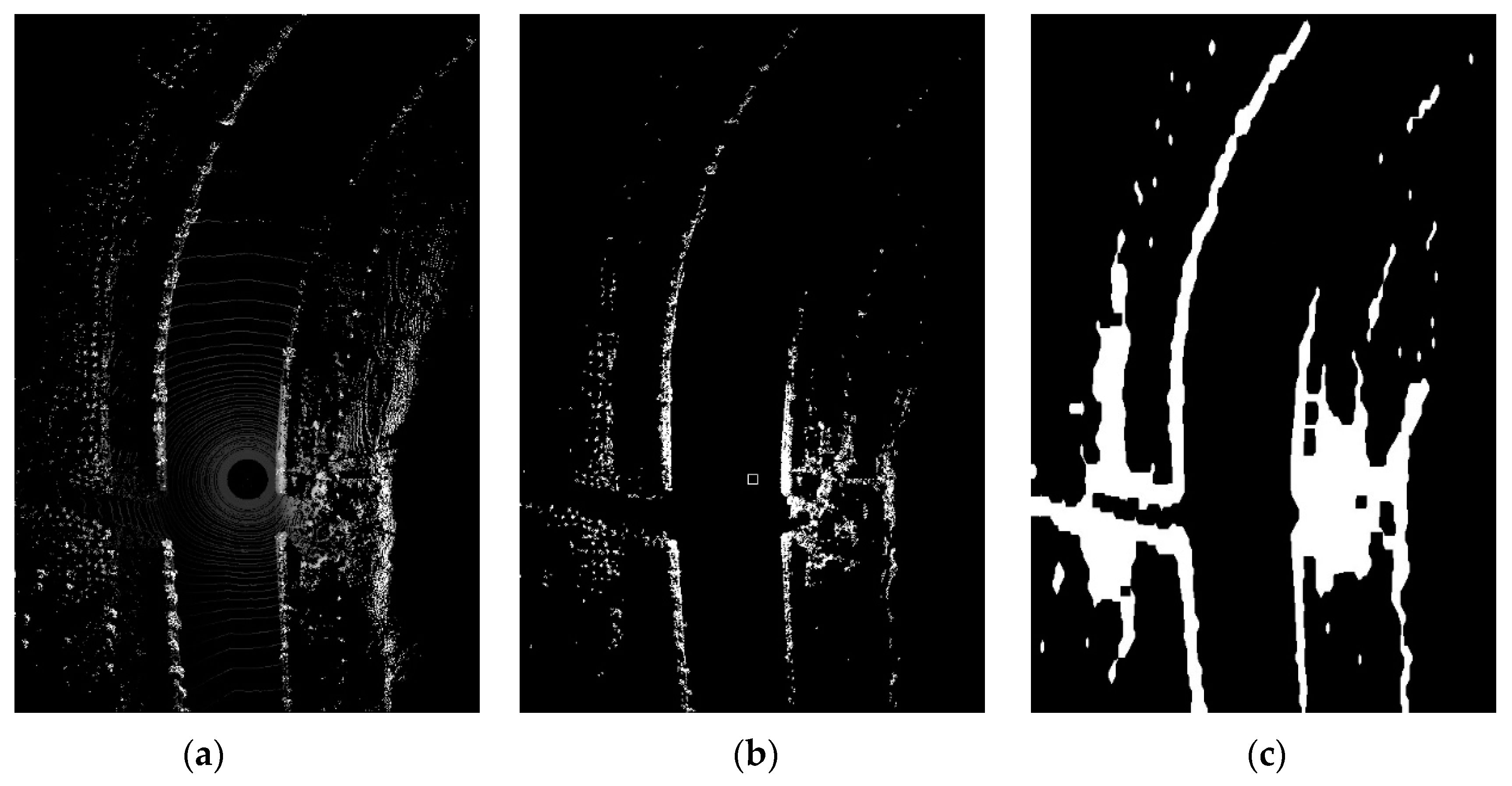

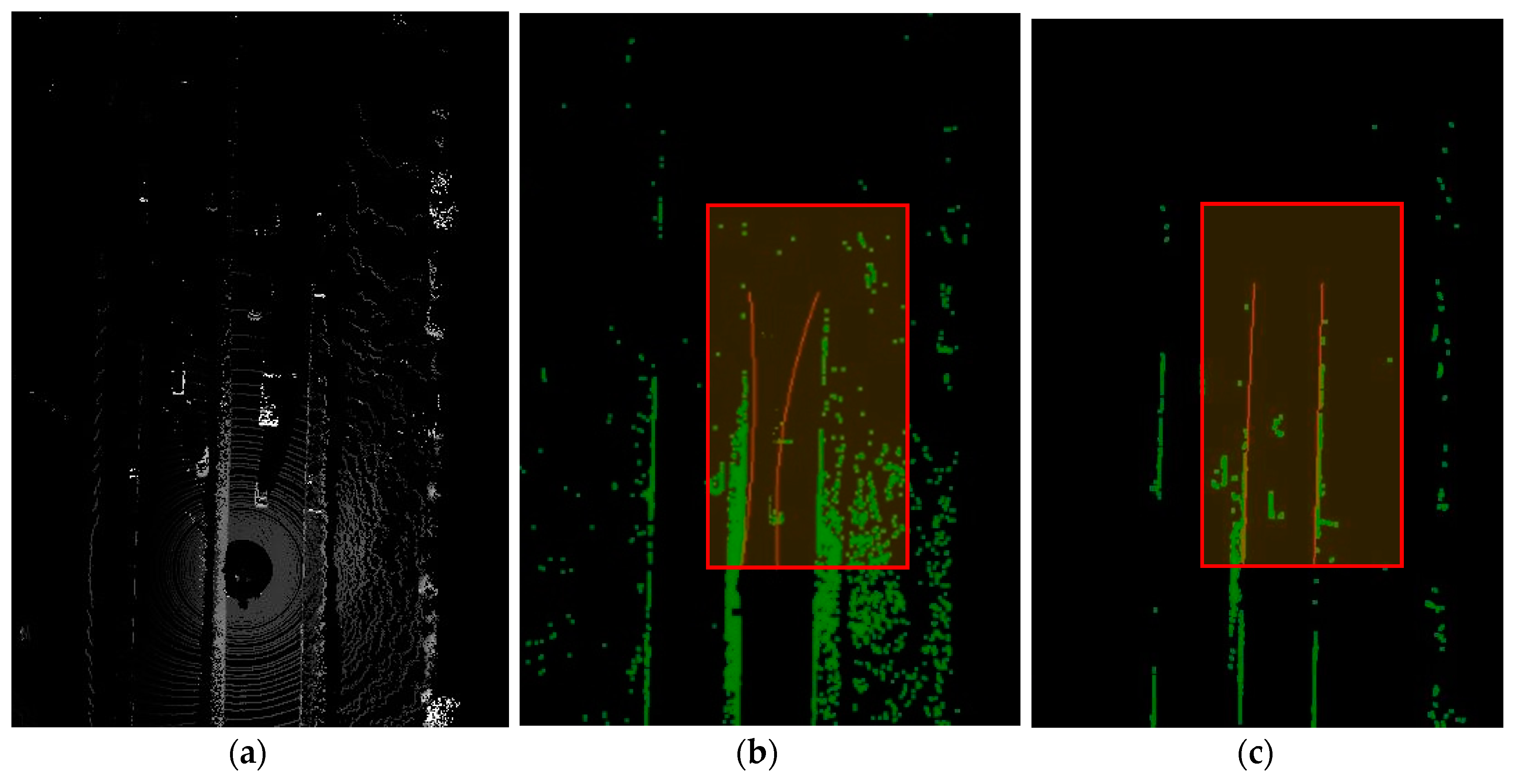

3.2. Curb Detection and Update

3.2.1. Shape of the Road Curb
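The index table lists a road-curb curvature threshold of 0.001. One way to evaluate it is to fit the curb points with a quadratic $x = a y^2 + b y + c$ in image coordinates and take the largest curvature $\kappa = |2a| / (1 + (2ay + b)^2)^{3/2}$ over the sampled rows. The following sketch does this under stated assumptions: the quadratic model, the pixel units, and reading "below 0.001" as "straight curb" are assumptions rather than details taken from this section.

```python
def _det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def fit_quadratic(ys, xs):
    """Least-squares fit of x = a*y^2 + b*y + c via the normal equations (Cramer's rule)."""
    s = [sum(y ** k for y in ys) for k in range(5)]                  # s[k] = sum of y^k
    t = [sum(x * y ** k for x, y in zip(xs, ys)) for k in range(3)]  # t[k] = sum of x*y^k
    m = [[s[4], s[3], s[2]],
         [s[3], s[2], s[1]],
         [s[2], s[1], s[0]]]
    rhs = [t[2], t[1], t[0]]
    det = _det3(m)
    if det == 0:
        raise ValueError("need at least three distinct y values")
    coeffs = []
    for col in range(3):
        mc = [row[:] for row in m]
        for r in range(3):
            mc[r][col] = rhs[r]
        coeffs.append(_det3(mc) / det)
    return tuple(coeffs)  # (a, b, c)


def max_curvature(points):
    """Largest curvature of the fitted quadratic over the sampled rows."""
    ys = [float(y) for _, y in points]
    xs = [float(x) for x, _ in points]
    y_mean = sum(ys) / len(ys)
    yc = [y - y_mean for y in ys]  # centring keeps the normal equations well conditioned
    a, b, _ = fit_quadratic(yc, xs)
    return max(abs(2 * a) / (1 + (2 * a * y + b) ** 2) ** 1.5 for y in yc)


CURVATURE_THRESHOLD = 0.001  # "Road curb curvature < 0.001" from the index table


def is_straight_curb(points):
    """points: list of (x, y) curb points in image coordinates (assumed)."""
    return max_curvature(points) < CURVATURE_THRESHOLD
```

Curvature does not depend on where the origin sits, so centring the rows changes only the conditioning of the fit, not the result.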

3.2.2. Fit Degree between the Road Curb and the Scene

- The number of detected curb points.

- The maximum difference in y value between the detected points.

- The variance of the width distribution.
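The three indicators above, with the thresholds from the index table (more than 150 points, a y span greater than 200, width variance below 10), combine into a simple acceptance test. This sketch treats all three as hard conditions and uses the population variance. Both choices, and the pixel units, are assumptions.

```python
from statistics import pvariance

MIN_POINTS = 150          # "Point number ... > 150"
MIN_Y_SPAN = 200          # "Maximum difference of the y value ... > 200"
MAX_WIDTH_VARIANCE = 10   # "Variance of the width distribution < 10"


def curb_fits_scene(curb_points, road_widths):
    """Return True if a detected curb satisfies all three fit-degree indicators.

    curb_points: list of (x, y) curb points (pixel coordinates assumed).
    road_widths: non-empty list of road widths measured along the road.
    """
    if len(curb_points) <= MIN_POINTS:
        return False  # too few supporting points
    ys = [y for _, y in curb_points]
    if max(ys) - min(ys) <= MIN_Y_SPAN:
        return False  # curb covers too short a stretch of the road
    return pvariance(road_widths) < MAX_WIDTH_VARIANCE
```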

3.2.3. Variance between the Curve and History Information

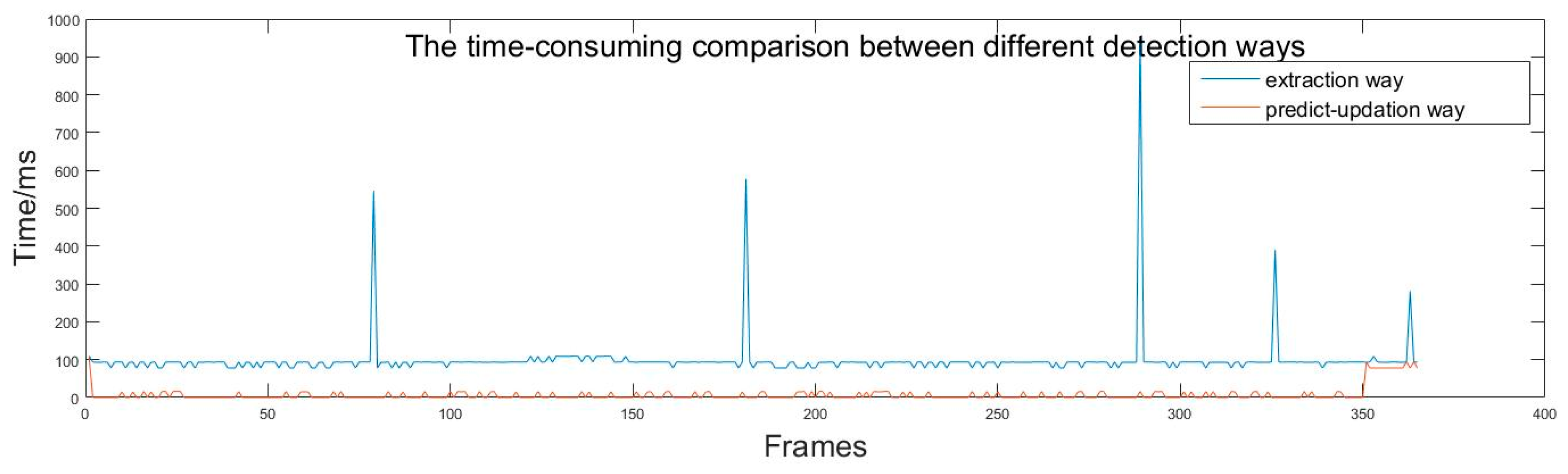
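The index table limits the change in road width between two consecutive frames to less than 10. The following minimal tracker sketch keeps the previous curb whenever a new detection violates that bound. The `max_misses` re-initialisation rule is a hypothetical addition that lets a genuine change in road width be accepted eventually, instead of locking onto stale history.

```python
class CurbTracker:
    """Keep the last accepted curb and reject detections whose road width jumps."""

    def __init__(self, max_width_jump=10, max_misses=3):
        self.max_width_jump = max_width_jump  # index table: width change < 10
        self.max_misses = max_misses          # hypothetical re-initialisation rule
        self.width = None
        self.curb = None
        self.misses = 0

    def update(self, width, curb):
        """Return True if the new detection replaces the history, else False."""
        consistent = self.width is None or abs(width - self.width) < self.max_width_jump
        if consistent or self.misses >= self.max_misses:
            self.width, self.curb, self.misses = width, curb, 0
            return True
        self.misses += 1  # keep the historical curb for this frame
        return False
```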

4. Experimental Results and Discussion

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

1. Dollar, P.; Wojek, C.; Schiele, B.; Perona, P. Pedestrian Detection: An Evaluation of the State of the Art. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 743–761.

2. Shu, Y.; Tan, Z. Vision based lane detection in autonomous vehicle. Intell. Control Autom. 2004, 6, 5258–5260.

3. Bertozzi, M.; Broggi, A.; Cellario, M. Artificial vision in road vehicles. Proc. IEEE 2002, 90, 1258–1271.

4. Wen, X.; Shao, L.; Xue, Y. A rapid learning algorithm for vehicle classification. Inf. Sci. 2015, 295, 395–406.

5. Sivaraman, S.; Trivedi, M.M. A General Active-Learning Framework for On-Road Vehicle Recognition and Tracking. IEEE Trans. Intell. Transp. Syst. 2010, 11, 267–276.

6. Arrospide, J.; Salgado, L.; Marinas, J. HOG-Like Gradient-Based Descriptor for Visual Vehicle Detection. IEEE Intell. Veh. Symp. 2012.

7. Sun, Z.H.; Bebis, G.; Miller, R. On-road vehicle detection: A review. IEEE Trans. Pattern Anal. Mach. Intell. 2006, 28, 694–711.

8. Huang, J.; Liang, H.; Wang, Z.; Mei, T.; Song, Y. Robust lane marking detection under different road conditions. In Proceedings of the 2013 IEEE International Conference on Robotics and Biomimetics, Shenzhen, China, 12–14 December 2013; pp. 1753–1758.

9. Bengler, K.; Dietmayer, K.; Farber, B.; Maurer, M.; Stiller, C.; Winner, H. Three decades of driver assistance systems: Review and future perspectives. IEEE Intell. Transp. Syst. Mag. 2014, 6, 6–22.

10. Buehler, M.; Iagnemma, K.; Singh, S. The 2005 DARPA Grand Challenge; Springer: Berlin, Germany, 2007.

11. Buehler, M.; Iagnemma, K.; Singh, S. The DARPA Urban Challenge; Springer: Berlin, Germany, 2009.

12. Baidu Leads China’s Self-Driving Charge in Silicon Valley. Available online: http://www.reuters.com/article/us-autos-baidu-idUSKBN19L1KK (accessed on 22 July 2017).

13. Ford Promises Fleets of Driverless Cars within Five Years. Available online: https://www.nytimes.com/2016/08/17/business/ford-promises-fleets-of-driverless-cars-within-five-years.html (accessed on 22 July 2017).

14. What Self-Driving Cars See. Available online: https://www.nytimes.com/2017/05/25/automobiles/wheels/lidar-self-driving-cars.html (accessed on 22 July 2017).

15. Uber Engineer Barred from Work on Key Self-Driving Technology, Judge Says. Available online: https://www.nytimes.com/2017/05/15/technology/uber-self-driving-lawsuit-waymo.html (accessed on 22 July 2017).

16. Brehar, R.; Vancea, C.; Nedevschi, S. Pedestrian Detection in Infrared Images Using Aggregated Channel Features. In Proceedings of the 2014 IEEE International Conference on Intelligent Computer Communication and Processing, Cluj Napoca, Romania, 4–6 September 2014; pp. 127–132.

17. Von Hundelshausen, F.; Himmelsbach, M.; Hecker, F.; Mueller, A.; Wuensche, H.-J. Driving with Tentacles: Integral Structures for Sensing and Motion. J. Field Robot. 2008, 25, 640–673.

18. Urmson, C.; Anhalt, J.; Bagnell, D.; Baker, C.; Bittner, R.; Clark, M.N.; Dolan, J.; Duggins, D.; Galatali, T.; Geyer, C.; et al. Autonomous Driving in Urban Environments: Boss and the Urban Challenge. J. Field Robot. 2008, 25, 425–466.

19. Kammel, S.; Ziegler, J.; Pitzer, B.; Werling, M.; Gindele, T.; Jagzent, D.; Schroder, J.; Thuy, M.; Goebl, M.; von Hundelshausen, F.; et al. Team AnnieWAY’s Autonomous System for the 2007 DARPA Urban Challenge. J. Field Robot. 2008, 25, 615–639.

20. Montemerlo, M.; Becker, J.; Bhat, S.; Dahlkamp, H.; Dolgov, D.; Ettinger, S.; Haehnel, D.; Hilden, T.; Hoffmann, G.; Huhnke, B.; et al. Junior: The Stanford Entry in the Urban Challenge. J. Field Robot. 2008, 25, 569–597.

21. Thrun, S.; Montemerlo, M.; Dahlkamp, H.; Stavens, D.; Aron, A.; Diebel, J.; Fong, P.; Gale, J.; Halpenny, M.; Hoffmann, G.; et al. Stanley: The Robot that Won the DARPA Grand Challenge. J. Field Robot. 2006, 23, 661–692.

22. Moosmann, F.; Pink, O.; Stiller, C. Segmentation of 3D Lidar Data in Non-Flat Urban Environments Using a Local Convexity Criterion. In Proceedings of the 2009 IEEE Intelligent Vehicles Symposium, Xi’an, China, 3–5 June 2009; pp. 215–220.

23. Cheng, J.; Xiang, Z.; Cao, T.; Liu, J. Robust vehicle detection using 3D Lidar under complex urban environment. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation, Hong Kong, China, 31 May–7 June 2014; pp. 691–696.

24. Liu, J.; Liang, H.; Wang, Z.; Chen, X. A Framework for Applying Point Clouds Grabbed by Multi-Beam LIDAR in Perceiving the Driving Environment. Sensors 2015, 15, 21931–21956.

25. Chen, T.; Dai, B.; Liu, D.; Song, J.; Liu, Z. Velodyne-based curb detection up to 50 meters away. In Proceedings of the 2015 IEEE Intelligent Vehicles Symposium, Seoul, South Korea, 28 June–1 July 2015; pp. 241–248.

26. Zhao, G.; Yuan, J. Curb detection and tracking using 3D-LIDAR scanner. In Proceedings of the 19th IEEE International Conference on Image Processing, Orlando, FL, USA, 30 September–3 October 2012; pp. 437–440.

27. Hata, A.Y.; Osorio, F.S.; Wolf, D.F. Robust Curb Detection and Vehicle Localization in Urban Environments. In Proceedings of the 2014 IEEE Intelligent Vehicles Symposium, Dearborn, MI, USA, 8–11 June 2014; pp. 1264–1269.

28. Zhang, Y.; Wang, J.; Wang, X.; Li, C.; Wang, L. A real-time curb detection and tracking method for UGVs by using a 3D-LIDAR sensor. In Proceedings of the IEEE Conference on Control Applications, Sydney, Australia, 21–23 September 2015; pp. 1020–1025.

| Feature | Beam Range (m) | Rotation Range | Threshold |
|---|---|---|---|
| MaxHD | [0, 45] | All | > 3 cm |
| TangenAngle | All | [0, 300] ∪ [600, 1200] ∪ [1200, 1800] | > 0.6 |
| RadiusRatio | [0, 50] | All | [0.9, 0.95] ∪ [1.05, 1.1] |
| HDInRadius | All | All | > 5 cm |

| Index | Threshold |
|---|---|
| Road curb curvature | < 0.001 |
| Number of points constituting the road curb | > 150 |
| Maximum difference of the y value between the detected points | > 200 |
| Variance of the width distribution | < 10 |
| Difference of the road width between two consecutive frames | < 10 |

| Method | Scene |
|---|---|
| Proposed method | Straight road |
| Proposed method | Winding road |
| Method of [24] | Straight road |
| Method of [24] | Winding road |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Huang, R.; Chen, J.; Liu, J.; Liu, L.; Yu, B.; Wu, Y. A Practical Point Cloud Based Road Curb Detection Method for Autonomous Vehicle. Information 2017, 8, 93. https://doi.org/10.3390/info8030093
