Source Code Documentation Generation Using Program Execution

Abstract

1. Introduction

```java
public class URL {
    …
    /**
     * Gets the authority part of this URL.
     * @return the authority part of this URL
     */
    public String getAuthority() {...}
}
```

```java
public class URL {
    ...
    /**
     * Gets the authority part of this URL.
     * @return the authority part of this URL
     * @examples When called on http://example.com/path?query,
     * the method returned "example.com".<br>
     * When called on http://user:[email protected]:80/path,
     * the method returned "user:[email protected]:80".
     */
    public String getAuthority() {...}
}
```
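Because generated examples contain concrete inputs and outputs, they can be re-checked mechanically. The following sketch assumes the class in the listing is `java.net.URL` (the listing itself does not say so); it re-runs the first documented call against the JDK and adds one hypothetical input with a port. `UrlExamples` and `authorityOf` are illustrative names only.

```java
import java.net.MalformedURLException;
import java.net.URI;

public class UrlExamples {
    /** Returns the authority part of the given URL string, as computed by java.net.URL. */
    public static String authorityOf(String spec) {
        try {
            return URI.create(spec).toURL().getAuthority();
        } catch (MalformedURLException e) {
            throw new IllegalArgumentException("not a valid URL: " + spec, e);
        }
    }

    public static void main(String[] args) {
        // the first example from the generated @examples text
        System.out.println(authorityOf("http://example.com/path?query")); // prints example.com
        // a hypothetical extra input: the authority also contains the port
        System.out.println(authorityOf("http://example.com:8080/path")); // prints example.com:8080
    }
}
```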

2. Representation-Based Documentation Approach

2.1. Tracing

```
function around(method)
    // save string representations of arguments and object state
    arguments ← []
    for arg in method.args
        arguments.add(to_string(arg))
    end for
    before ← to_string(method.this)

    // run the original method, save return value or thrown exception
    result ← method(method.args)
    if result is return value
        returned ← to_string(result)
    else if result is thrown exception
        exception ← to_string(result)
    end if

    // save object representation again and write record to trace file
    after ← to_string(method.this)
    write_record(method, arguments, before, returned, exception, after)

    // proceed as usual (not affecting the program's semantics)
    return/throw result
end function
```

- method: the method identifier (file, line number),

- arguments: an array of string representations of all argument values,

- before: a string representation of the target object state before method execution,

- return: a string representation of the return value, if the method is non-void and did not throw an exception,

- exception: a thrown exception converted to a string (if it was thrown),

- after: a string representation of the target object state after method execution.
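The around function and the record fields above can be approximated in plain Java with a dynamic proxy. This is a minimal sketch, not the paper's instrumentation: `java.lang.reflect.Proxy` only intercepts calls made through an interface, records are kept in memory instead of a trace file, and `TracingProxy`, `TraceRecord`, `Account` and `SimpleAccount` are hypothetical names. `String.valueOf` stands in for `to_string`, so each class's `toString()` determines what gets recorded.

```java
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Proxy;
import java.util.ArrayList;
import java.util.List;

public class TracingProxy {
    /** One trace record with the fields listed above (hypothetical type). */
    public record TraceRecord(String method, List<String> arguments, String before,
                              String returned, String exception, String after) {}

    /** Collected records; a real tracer would write them to a trace file. */
    public static final List<TraceRecord> TRACE = new ArrayList<>();

    /** Wraps target so that every call made through iface is recorded. */
    public static <T> T trace(T target, Class<T> iface) {
        InvocationHandler handler = (proxy, method, args) -> {
            // save string representations of arguments and object state
            List<String> arguments = new ArrayList<>();
            if (args != null) {
                for (Object arg : args) {
                    arguments.add(String.valueOf(arg));
                }
            }
            String before = String.valueOf(target);

            // run the original method, save return value or thrown exception
            Object result = null;
            Throwable thrown = null;
            try {
                result = method.invoke(target, args);
            } catch (InvocationTargetException e) {
                thrown = e.getCause();
            }
            String returned = (thrown == null && method.getReturnType() != void.class)
                    ? String.valueOf(result) : null;
            String exception = (thrown == null) ? null : thrown.toString();

            // save object representation again and store the record
            String after = String.valueOf(target);
            TRACE.add(new TraceRecord(method.getName(), arguments, before, returned, exception, after));

            // proceed as usual: rethrow or return the original outcome
            if (thrown != null) {
                throw thrown;
            }
            return result;
        };
        return iface.cast(Proxy.newProxyInstance(iface.getClassLoader(), new Class<?>[] {iface}, handler));
    }

    /** Hypothetical interface and implementation used to try the tracer. */
    public interface Account {
        int deposit(int amount);
    }

    public static class SimpleAccount implements Account {
        private int balance;

        @Override
        public int deposit(int amount) {
            if (amount <= 0) {
                throw new IllegalArgumentException("amount must be positive");
            }
            balance += amount;
            return balance;
        }

        @Override
        public String toString() {
            return "balance=" + balance;
        }
    }
}
```

Calling `deposit(5)` on a fresh traced account yields a record with arguments `[5]`, before `balance=0`, return `5` and after `balance=5`. Classes without an interface would need bytecode or aspect-based instrumentation, which this sketch does not attempt.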

2.2. Selection of Examples

2.3. Documentation Generation

3. Qualitative Evaluation

- Apache Commons Lang (https://commons.apache.org/lang/),

- Google Guava (https://github.com/google/guava),

- and Apache FOP (Formatting Objects Processor; https://xmlgraphics.apache.org/fop/).

3.1. Utility Methods

When ch = 'A', the method returned "\u0041".

CharUtils.unicodeEscaped('A') = "\u0041"

- to save time spent writing examples,

- to ensure the documentation is correct and up-to-date.

3.2. Data Structures

When called on {foo={1=a, 3=c}, bar={1=b}}, the method returned 3.

```java
import com.google.common.collect.HashBasedTable;
import com.google.common.collect.Table;

Table<String, Integer, Character> table = HashBasedTable.create();
table.put("foo", 1, 'a');
table.put("foo", 3, 'c');
table.put("bar", 1, 'b');
System.out.println(table.size()); // prints 3
```

3.3. Changing Target Object State

When called on [sans-serif] with prop = Symbol, the object changed to [sans-serif, Symbol].

The method was called on org.apache.fop.layoutmgr.LayoutManagerMapping@260a3a5e.

3.4. Changing Argument State

The method was called with array = [1, 2, 3].
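If a method modifies its argument, as the section title suggests, a sentence built only from the arguments' state before the call cannot show it. A sketch of one possible extension, which also converts the argument to a string after the call; `reverse` is a hypothetical stand-in for the documented method, and the "the argument changed to" wording is an assumption, not one of the paper's sentence templates:

```java
import java.util.Arrays;

public class ArgumentStateSketch {
    /** Hypothetical method under documentation: reverses its argument in place. */
    static void reverse(int[] array) {
        for (int i = 0, j = array.length - 1; i < j; i++, j--) {
            int tmp = array[i];
            array[i] = array[j];
            array[j] = tmp;
        }
    }

    /** Calls reverse and describes the argument's state before and after the call. */
    public static String describeReverse(int[] array) {
        String before = Arrays.toString(array);
        reverse(array);
        String after = Arrays.toString(array);
        return before.equals(after)
                ? "The method was called with array = " + before + "."
                // hypothetical sentence form, not part of the template table
                : "When called with array = " + before + ", the argument changed to " + after + ".";
    }
}
```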

3.5. Operations Affecting the External World

When dvalue = 20.0, unit = "pt" and res = 1.0, the method returned 20000.

3.6. Methods Doing Too Much

The method was called on ... RegionAfter@206be60b [@id= null] with elementName = "region-after", locator = ... LocatorProxy@292158f8, attlist = ... AttributesProxy@4674d90 and pList = ... StaticPropertyList@6354dd57.

3.7. Example Selection

When called on (5..+∞), the method returned OPEN. When called on [4..4], the method returned CLOSED. When called on [4..4), the method returned CLOSED. When called on [5..7], the method returned CLOSED. When called on [5..8), the method returned CLOSED.

When values = [0], the method returned [0..0]. When values = [5, -3], the method returned [-3..5]. When values = [0, null], the method threw java.lang.NullPointerException. When values = [1, 2, 2, 2, 5, -3, 0, -1], the method returned [-3..5]. When values = [], the method threw java.util.NoSuchElementException.
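In the first list, four of the five examples share the outcome CLOSED. One possible remedy, sketched here as a hypothetical heuristic and not as the paper's own selection procedure, is to prefer examples whose outcome (return value or exception) has not been seen yet and only then fill the remaining slots in trace order. `ExampleSelection` and its `Example` record are illustrative names.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

public class ExampleSelection {
    /** A recorded call: the target it was called on and its outcome (hypothetical type). */
    public record Example(String calledOn, String outcome) {}

    /** Greedy: one example per distinct outcome first, then the rest in trace order. */
    public static List<Example> select(List<Example> trace, int max) {
        List<Example> chosen = new ArrayList<>();
        Set<String> seenOutcomes = new HashSet<>();
        for (Example e : trace) {
            if (chosen.size() >= max) {
                break;
            }
            if (seenOutcomes.add(e.outcome())) {
                chosen.add(e);
            }
        }
        for (Example e : trace) {
            if (chosen.size() >= max) {
                break;
            }
            if (!chosen.contains(e)) {
                chosen.add(e);
            }
        }
        return chosen;
    }
}
```

With a limit of two and a trace in which the OPEN example comes last, this heuristic still picks one CLOSED and one OPEN example instead of two CLOSED ones.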

4. Quantitative Evaluation

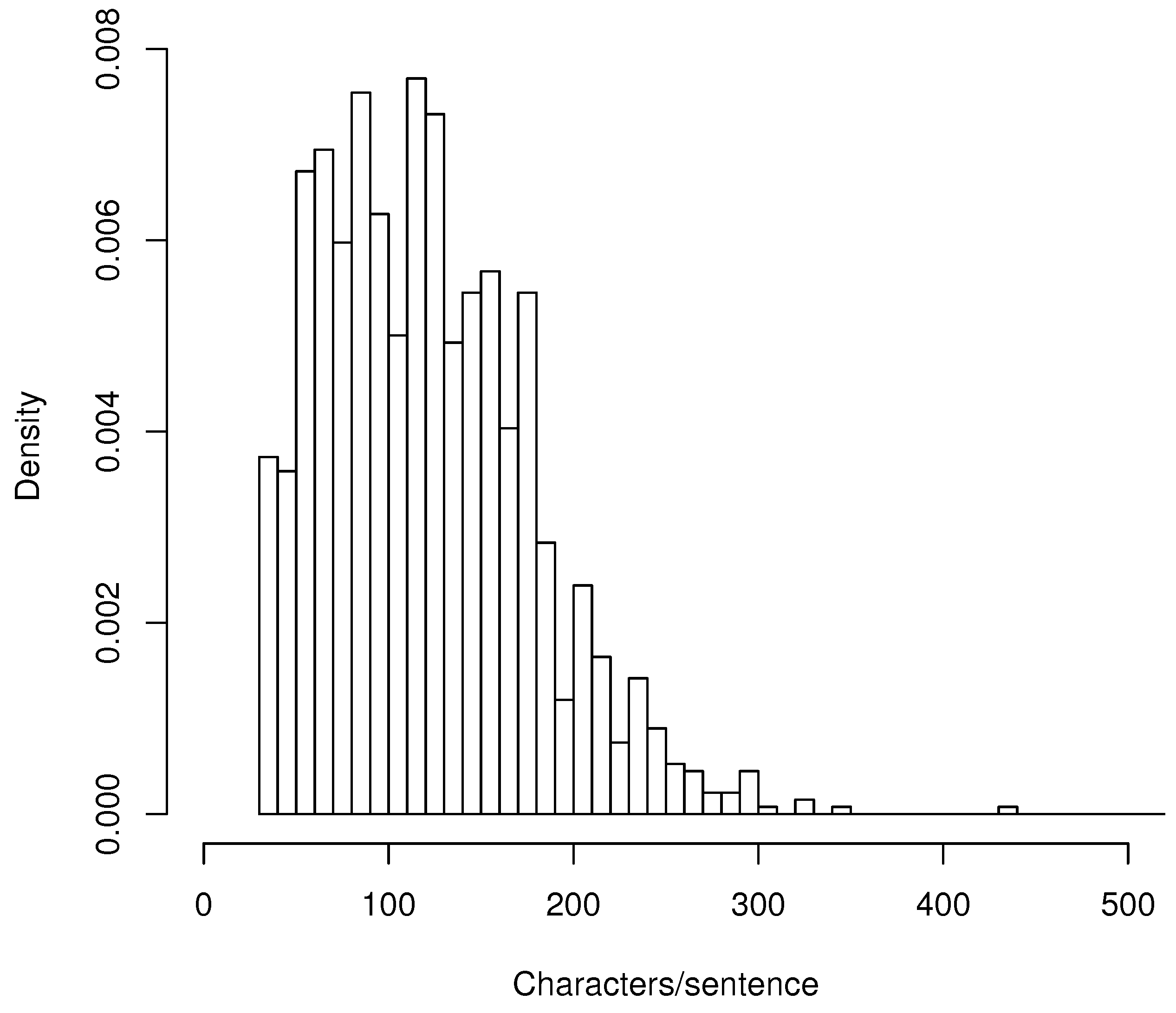

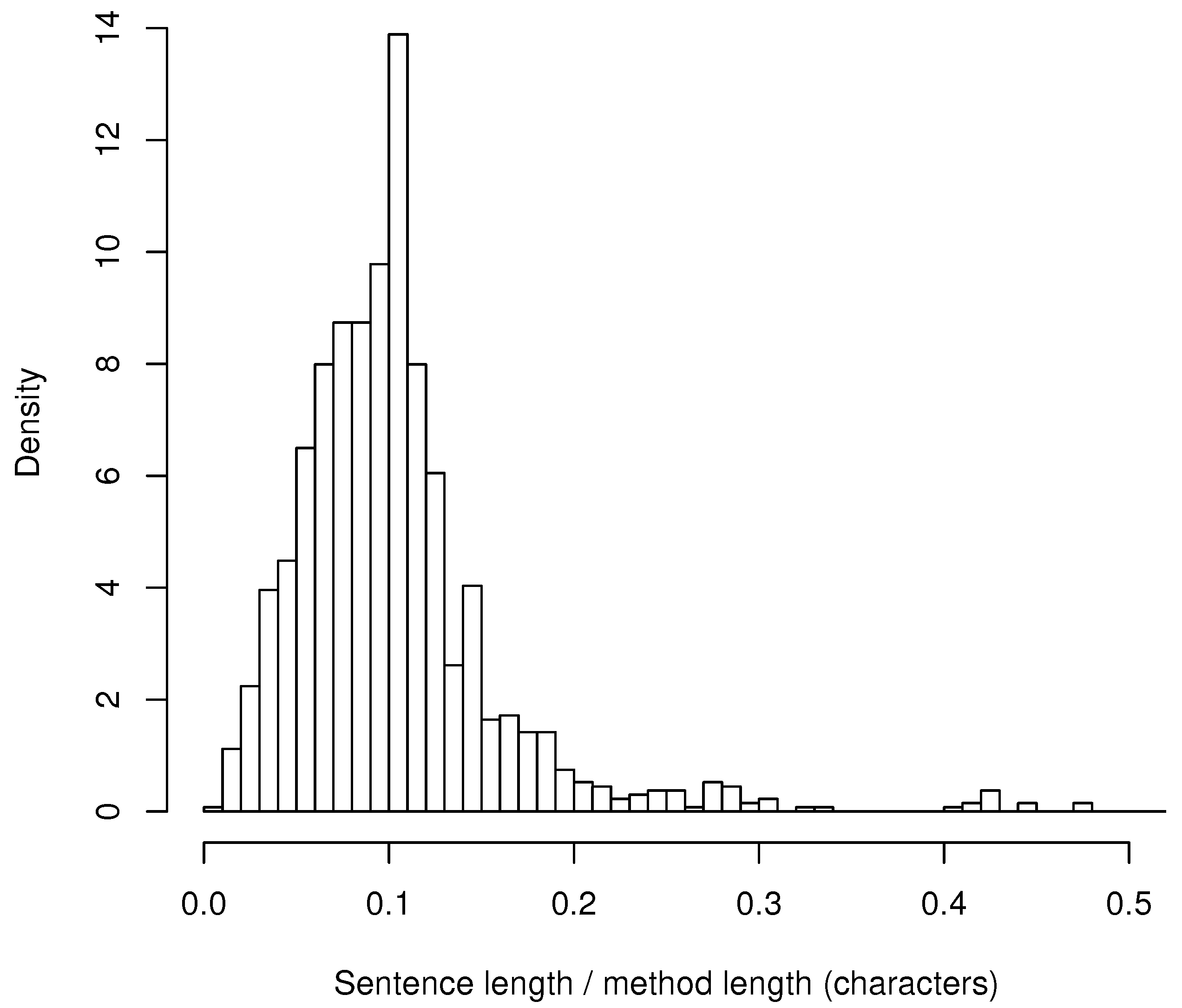

4.1. Documentation Length

4.1.1. Method

- the length of each sentence in characters,

- the “proportional length”: the length of each sentence divided by the length of the corresponding method’s source code (in characters).
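Both metrics are plain character counts and a ratio. A minimal sketch with hypothetical inputs, assuming characters are counted with `String.length()` (UTF-16 code units), which the text does not specify:

```java
public class SentenceLength {
    /** Length of a generated sentence in characters. */
    public static int length(String sentence) {
        return sentence.length();
    }

    /** Sentence length divided by the length of the method's source code. */
    public static double proportionalLength(String sentence, String methodSource) {
        if (methodSource.isEmpty()) {
            throw new IllegalArgumentException("empty method source");
        }
        return (double) sentence.length() / methodSource.length();
    }

    public static void main(String[] args) {
        String sentence = "The method returned 3."; // 22 characters
        String source = "int size() { return n; }"; // 24 characters, hypothetical method
        System.out.println(proportionalLength(sentence, source)); // 22 / 24, about 0.9167
    }
}
```

A proportional length above 1 would mean the sentence is longer than the code it documents.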

4.1.2. Results

4.1.3. Threats to Validity

4.1.4. Conclusions

4.2. Overridden String Representation in Documentation

4.2.1. Method

4.2.2. Results

5. Variable-Based Documentation Approach

5.1. Example

```java
// Ticket.java
public class Ticket {
    private String type;

    public void setType(String type) {
        this.type = type;
    }
}

// Person.java
public class Person {
    private int age;
    private Ticket ticket = new Ticket();

    // void: the method only updates state and returns nothing
    public void setAge(int age) {
        this.age = age;
        ticket.setType((age < 18) ? "child" : "full");
    }
}
```

When age was 20, this.age was set to 20 and this.ticket.type was set to "full".

5.2. Approach

5.2.1. Tracing

- the reads of the method’s primitive parameters,

- all reads and writes of primitive variables in all objects/classes,

- the reads of non-primitive objects, but only if they are a part of object comparison (==, !=),

- and writes (assignments) of non-primitive objects (x = object;).

- For a method’s parameter, the name is the parameter’s name as it is written in the source code.

- If the variable is a member of this instance, the name is this.memberName.

- If the variable is a member of another object, references are gradually recorded to obtain a connection between the current method/object and the other object. In this case, the name is denoted as a chain of references: either this.member_1.….member_n (if the reference chain begins with a member variable of this instance) or local_variable.member_1.….member_n (if the reference chain begins with a local variable or a parameter).

- If the variable is static, its name is ClassName.variableName.

- Array elements are denoted by square brackets: array[index].

5.2.2. Duplicate Removal

- the first read of each variable, unless the variable was written before it was read for the first time, in which case we ignore all reads of this variable,

- and the last write of each variable.

write x 1, read x 1, read y 2, write y 3, write y 4

The sample trace is reduced to this one:

write x 1, read y 2, write y 4
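The two rules translate into a two-pass filter: the first pass finds the last write of each variable, the second keeps a read only if nothing touched the variable before it. A minimal sketch; the `Access` record and `Kind` enum are a hypothetical trace representation, not the paper's trace format.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Locale;
import java.util.Map;
import java.util.Set;

public class DuplicateRemoval {
    public enum Kind { READ, WRITE }

    /** One variable access from the trace (hypothetical representation). */
    public record Access(Kind kind, String variable, String value) {
        @Override
        public String toString() {
            return kind.name().toLowerCase(Locale.ROOT) + " " + variable + " " + value;
        }
    }

    public static List<Access> reduce(List<Access> trace) {
        // pass 1: index of the last write of each variable
        Map<String, Integer> lastWrite = new HashMap<>();
        for (int i = 0; i < trace.size(); i++) {
            if (trace.get(i).kind() == Kind.WRITE) {
                lastWrite.put(trace.get(i).variable(), i);
            }
        }
        // pass 2: filter, preserving trace order
        Set<String> accessed = new HashSet<>(); // variables read or written so far
        List<Access> reduced = new ArrayList<>();
        for (int i = 0; i < trace.size(); i++) {
            Access a = trace.get(i);
            boolean keep = (a.kind() == Kind.READ)
                    // a read survives only if the variable was neither read nor written before
                    ? !accessed.contains(a.variable())
                    // a write survives only if it is the variable's last write
                    : lastWrite.get(a.variable()).intValue() == i;
            accessed.add(a.variable());
            if (keep) {
                reduced.add(a);
            }
        }
        return reduced;
    }
}
```

Applied to the sample trace, `reduce` returns `[write x 1, read y 2, write y 4]`.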

5.2.3. Selection of Examples

5.2.4. Documentation Generation

When {read_1_name} was {read_1_value}, ...

and {read_n_name} was {read_n_value},

{write_1_name} was set to {write_1_value}, ...

and {write_m_name} was set to {write_m_value}.
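Filling this template is string joining with a final "and". A minimal sketch, assuming the reduced trace has already been split into ordered reads and writes with values converted to strings (string values quoted by the caller) and that there is at least one read and one write; the template does not say what happens otherwise. `VariableSentence` is a hypothetical name.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

public class VariableSentence {
    /** Joins parts as "a", "a and b" or "a, b and c". */
    static String joinWithAnd(List<String> parts) {
        if (parts.size() == 1) {
            return parts.get(0);
        }
        return String.join(", ", parts.subList(0, parts.size() - 1))
                + " and " + parts.get(parts.size() - 1);
    }

    /** Fills the template; pass LinkedHashMaps so that trace order is kept. */
    public static String sentence(Map<String, String> reads, Map<String, String> writes) {
        if (reads.isEmpty() || writes.isEmpty()) {
            throw new IllegalArgumentException("this sketch expects at least one read and one write");
        }
        List<String> readParts = new ArrayList<>();
        reads.forEach((name, value) -> readParts.add(name + " was " + value));
        List<String> writeParts = new ArrayList<>();
        writes.forEach((name, value) -> writeParts.add(name + " was set to " + value));
        return "When " + joinWithAnd(readParts) + ", " + joinWithAnd(writeParts) + ".";
    }
}
```

For the Person example (read age = 20; writes this.age = 20 and this.ticket.type = "full") this reproduces the sentence from Section 5.1.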

5.3. Discussion

6. Related Work

6.1. Static Analysis and Repository Mining

6.2. Dynamic Analysis

7. Conclusions and Future Work

Acknowledgments

Author Contributions

Conflicts of Interest


| Method Kind | Parameters | Return Type | Exception | State Changed | Sentence Template |
|---|---|---|---|---|---|
| static | 0 | void | no | no | - |
| static | 0 | non-void | no | no | The method returned {return}. |
| static | 0 | any | yes | no | The method threw {exception}. |
| static | ≥1 | void | no | no | The method was called with {arguments}. |
| static | ≥1 | non-void | no | no | When {arguments}, the method returned {return}. |
| static | ≥1 | any | yes | no | When {arguments}, the method threw {exception}. |
| instance | 0 | void | no | no | The method was called on {before}. |
| instance | 0 | void | no | yes | When called on {before}, the object changed to {after}. |
| instance | 0 | non-void | no | no | When called on {before}, the method returned {return}. |
| instance | 0 | non-void | no | yes | When called on {before}, the object changed to {after} and the method returned {return}. |
| instance | 0 | any | yes | no | When called on {before}, the method threw {exception}. |
| instance | 0 | any | yes | yes | When called on {before}, the object changed to {after} and the method threw {exception}. |
| instance | ≥1 | void | no | no | The method was called on {before} with {arguments}. |
| instance | ≥1 | void | no | yes | When called on {before} with {arguments}, the object changed to {after}. |
| instance | ≥1 | non-void | no | no | When called on {before} with {arguments}, the method returned {return}. |
| instance | ≥1 | non-void | no | yes | When called on {before} with {arguments}, the object changed to {after} and the method returned {return}. |
| instance | ≥1 | any | yes | no | When called on {before} with {arguments}, the method threw {exception}. |
| instance | ≥1 | any | yes | yes | When called on {before} with {arguments}, the object changed to {after} and the method threw {exception}. |
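The template table is a decision procedure over four properties of a trace record. A minimal sketch of it; `SentenceTemplates` and its signature are hypothetical, arguments are rendered as `name = value` joined with commas and a final "and" (as in the examples of Section 3), `returned` is null for void methods or when an exception was thrown, and static methods never change target state, matching the table's "no" for all static rows.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

public class SentenceTemplates {
    /** Joins parts as "a", "a and b" or "a, b and c". */
    static String joinWithAnd(List<String> parts) {
        if (parts.size() == 1) {
            return parts.get(0);
        }
        return String.join(", ", parts.subList(0, parts.size() - 1))
                + " and " + parts.get(parts.size() - 1);
    }

    /** Picks the table row for one call and fills it in; returns null for the "-" row. */
    public static String sentence(boolean isStatic, Map<String, String> args, String before,
                                  String returned, String exception, String after) {
        List<String> rendered = new ArrayList<>();
        args.forEach((name, value) -> rendered.add(name + " = " + value)); // use a LinkedHashMap to keep order
        String arguments = rendered.isEmpty() ? null : joinWithAnd(rendered);

        // outcome clause: a thrown exception excludes a return value
        String outcome = (exception != null) ? "the method threw " + exception
                : (returned != null) ? "the method returned " + returned : null;

        if (isStatic) {
            if (outcome == null) {
                return (arguments == null) ? null : "The method was called with " + arguments + ".";
            }
            return (arguments == null)
                    ? Character.toUpperCase(outcome.charAt(0)) + outcome.substring(1) + "."
                    : "When " + arguments + ", " + outcome + ".";
        }

        boolean stateChanged = !before.equals(after);
        String target = (arguments == null) ? before : before + " with " + arguments;
        if (outcome == null && !stateChanged) {
            return "The method was called on " + target + ".";
        }
        List<String> effects = new ArrayList<>();
        if (stateChanged) {
            effects.add("the object changed to " + after);
        }
        if (outcome != null) {
            effects.add(outcome);
        }
        return "When called on " + target + ", " + String.join(" and ", effects) + ".";
    }
}
```

Given the recorded values from Sections 3.3 and 3.5, this sketch reproduces the sentences quoted there.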

| Project | Overridden string representation | Default string representation |
|---|---|---|
| Apache Commons Lang | 27 | 3 |
| Google Guava | 22 | 5 |
| Apache FOP | 21 | 2 |
| Total | 70 (87.5%) | 10 (12.5%) |
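Whether a recorded value uses an overridden or the default string representation can be decided by reflection. Per the `Object.toString()` contract, the default form is the class name, `@`, and the hexadecimal hash code, as in the FOP example `LayoutManagerMapping@260a3a5e` in Section 3.3. A minimal sketch; `ToStringCheck` is a hypothetical name, and this is not necessarily how the counts in the table were obtained.

```java
public class ToStringCheck {
    /** True if some class other than Object declares the toString() this object uses. */
    public static boolean overridesToString(Object o) {
        try {
            return o.getClass().getMethod("toString").getDeclaringClass() != Object.class;
        } catch (NoSuchMethodException e) {
            // cannot happen: every class inherits a public toString() from Object
            throw new IllegalStateException(e);
        }
    }

    /** The representation Object.toString() produces: class name, '@', hex hash code. */
    public static String defaultRepresentation(Object o) {
        return o.getClass().getName() + "@" + Integer.toHexString(o.hashCode());
    }
}
```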

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Sulír, M.; Porubän, J. Source Code Documentation Generation Using Program Execution. Information 2017, 8, 148. https://doi.org/10.3390/info8040148
