Histopathological Breast-Image Classification Using Local and Frequency Domains by Convolutional Neural Network

Abstract

:1. Introduction

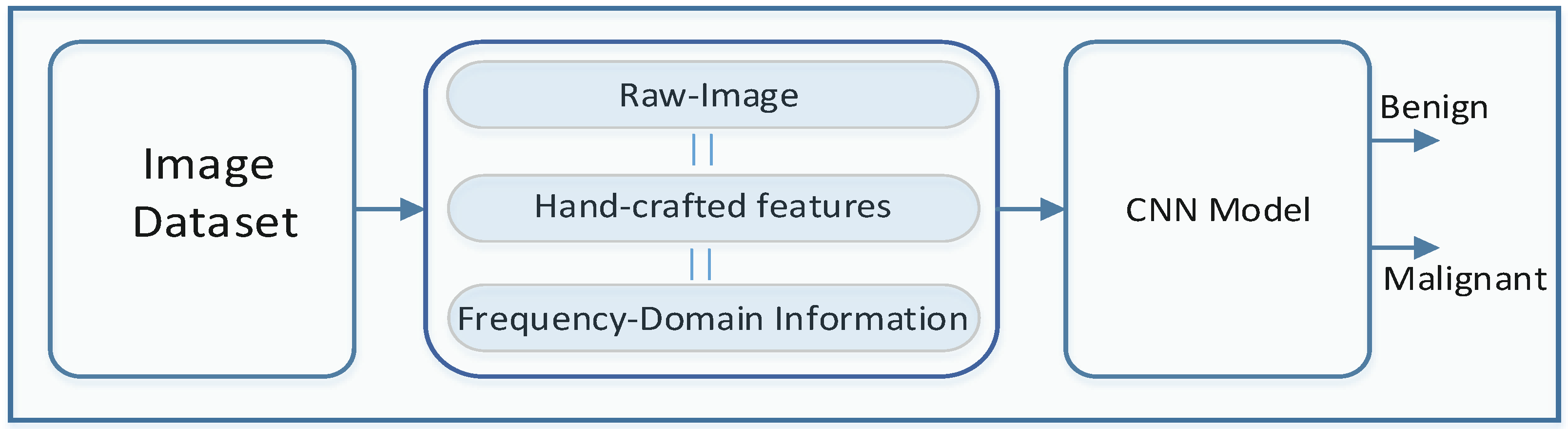

2. Overall Architecture

- Case1: In this case, the image has been directly fed to the CNN model, which is named CNN-I. To reduce the complexity, we have reshaped each of the original images of the dataset to a new image matrix of size , where C represents the number of channels. As we have utilized RGB images, the value of .

- Case2: Case2 utilizes local descriptive features that have been collected through the Contourlet Transform (CT), Histogram information and Local Binary Pattern (LBP). Case2 is further divided into two sub cases:

- Case2a: Selected statistical information has been collected from the CT coefficient data and this statistical data has been further concatenated with the Histogram information. This case has been named CNN-CH. The feature matrix for each of the images is represented as .

- Case2b: Selected statistical information has been collected from the CT coefficient data and this statistical data has been further concatenated with the LBP. This case has been named CNN-CL. The feature matrix for each of the images is represented as .

- Case3: Case3 utilizes frequency-domain information for the image classification, collected using the Discrete Fourier Transform (DFT) and the Discrete Cosine Transform (DCT). This case has been further subdivided into two sub-cases:

- Case3a: DFT coefficients have been utilized as an input for the classifier model, named CNN-DF. The feature matrix for each of the images is represented as .

- Case3b: DCT coefficients have been utilized as an input for the classifier model, named CNN-DC. The feature matrix for each of the images is represented as .

3. Feature Extraction and Data Preparation

3.1. Data Preparation for Case2

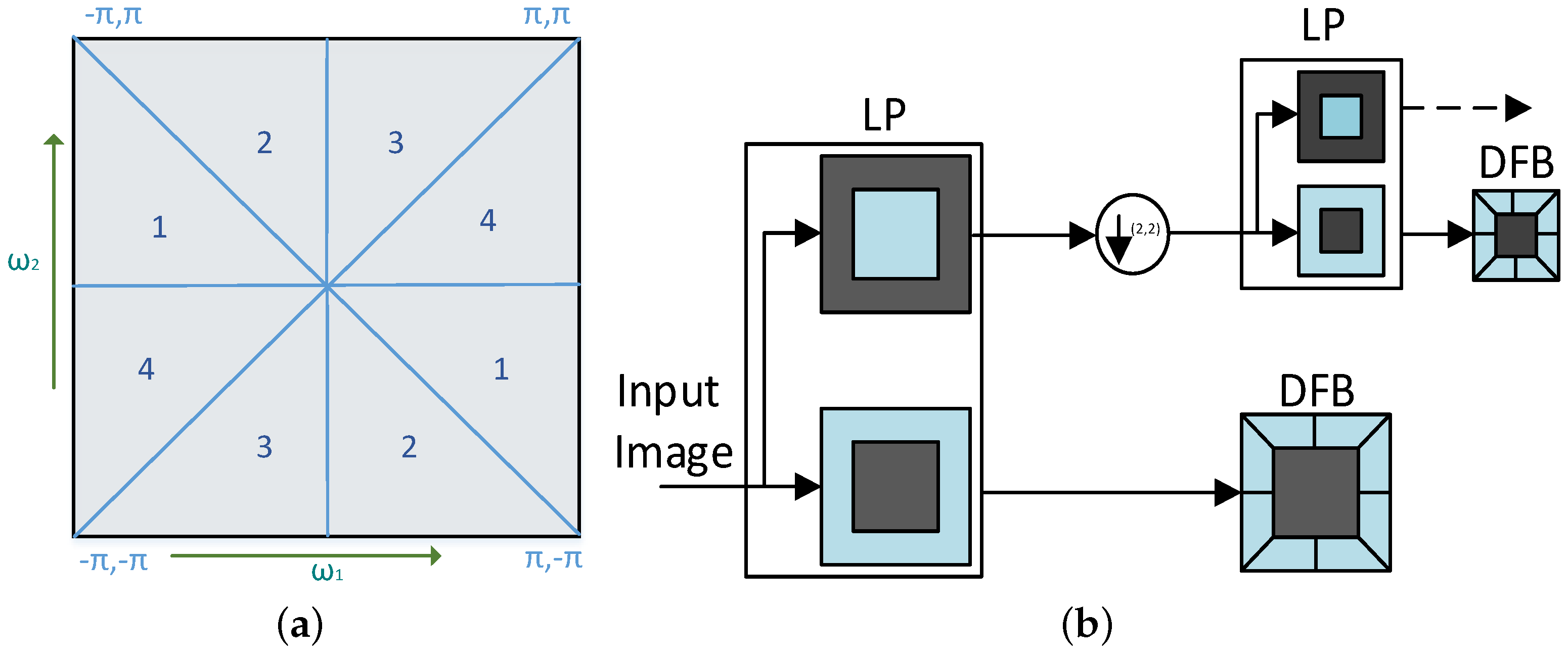

- Laplacian Pyramid (LP): The image pyramid is an image-representation technique where the represented image contains only relatively important information. This technique also produces a series of replications of the original images, but those replicated images have less resolution. A few pyramid methods are available such as Gaussian, Laplacian and WT. Burt and Adelson introduced the Laplacian Pyramid (LP) method. In the case of CT, the LP filter decomposes the input signal into a coarse image and a detailed image (bandpass image) [35]. Each bandpass is further processed and the bandpass directional sub-band signals calculated.

- Directional Filter Bank: A DFB sub-divides the input image into sub-bands. Each of the sub-bands has a wedge-shaped frequency response. Figure 4a shows the wedge-shaped frequency response for a 4-band response.

- Maximum Value (MA),

- Minimum Value (MI),

- Kurtosis (KU),

- Standard Deviation (ST).

3.1.1. Histogram Information

3.1.2. Local Binary Pattern

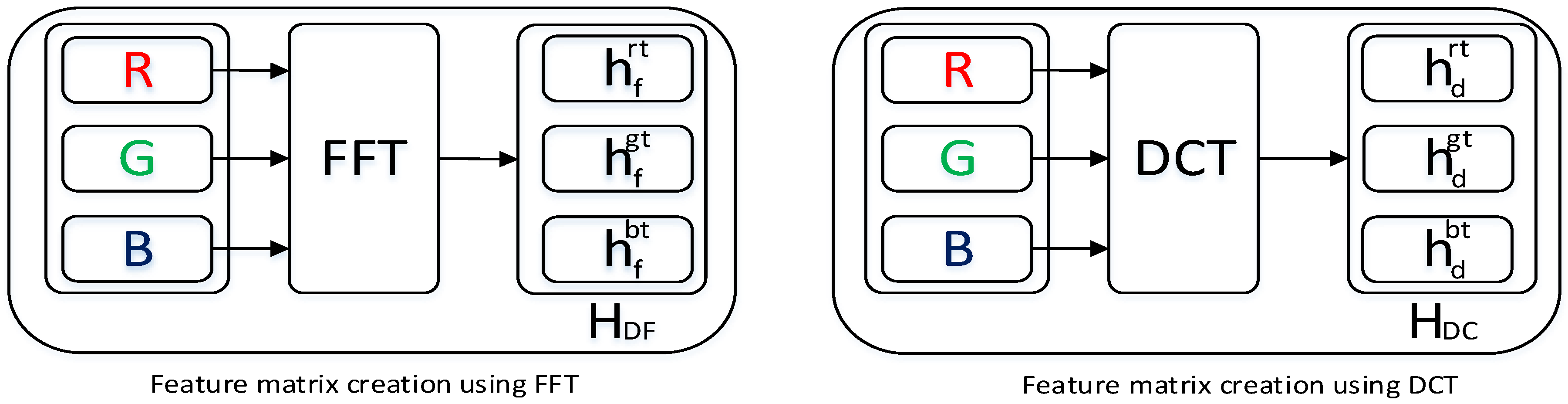

3.2. Data Preparation for Case3

3.2.1. DFT for Feature Selection

3.2.2. DCT for Feature Selection

- Convolutional Neural Network CT Histogram (CNN-CH)

- Convolutional Neural Network CT LBP (CNN-CL)

- Convolutional Neural Network Discrete Fourier Transform (CNN-DF)

- Convolutional Neural Network Discrete Cosine Transform (CNN-DC)

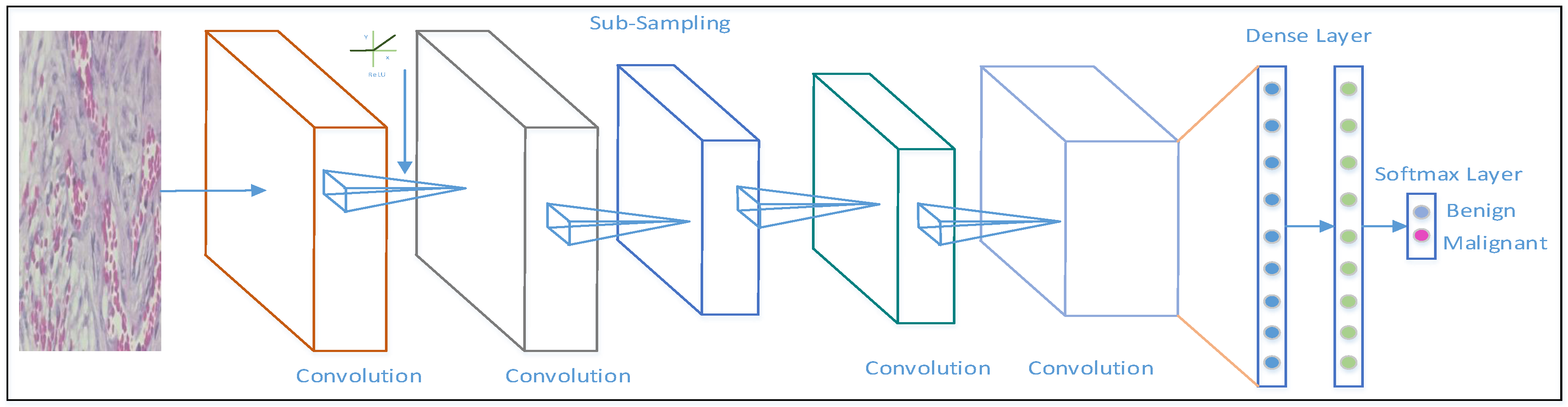

4. Convolutional Neural Network

- Convolutional Layer: The Convolutional layer is considered as the main working ingredient in a CNN model and plays a vital determining part of this model. A kernel (filter), which is basically an matrix successively goes through all the pixels and extracts the information from them.

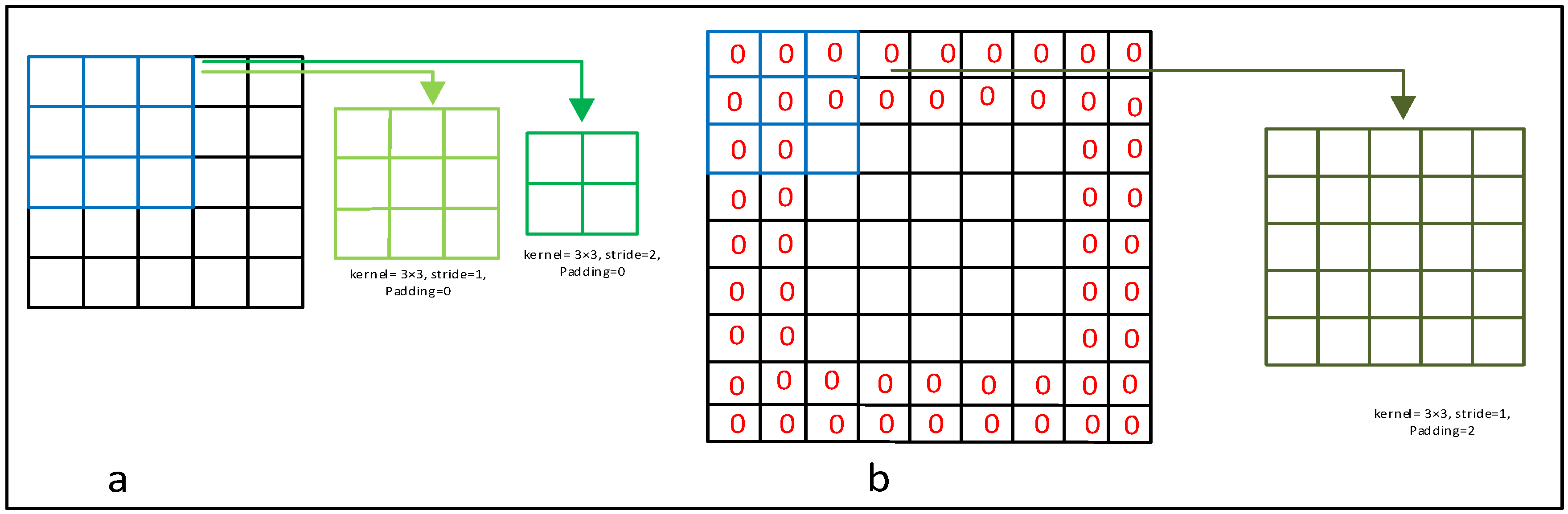

- Stride and Padding: The number of pixels a kernel will move in a step is determined by the stride size; conventionally, the size of the stride keeps to 1. Figure 6a shows an input data matrix of size , which is scanned with a kernel. The light-green image shows the output with stride size 1, and the green image represents the output with stride size 2. When we use a kernel, and stride size 1, then the convolved output is a matrix; however, when we use stride size 2, the convolved output is . Interestingly, if we use a kernel on the above input matrix with stride 1, the output will be a matrix. Thus, the size of the output image has changed with both the size of the stride and the size of the kernel. To overcome this issue, we can utilize extra rows and columns at the end of the matrices that contain 0 s. This adding of rows and columns that contain only zero values is known as zero padding.For example, Figure 6b shows how two extra rows have been added at the top as well as the bottom of the original matrix. Similarly, two extra columns have been added at the beginning as well as the end of the original matrix. Now, the olive-green image of Figure 6b shows a convolved image where we have utilized a kernel of size , stride size 1 and padding size zero. The convolved image is also a matrix, which is the same as the original data size. Thus, by adding the proper amount of zero padding, we can reduce the loss of information that lies at the border.

- Nonlinear Performance: Each layer of the NN produces linear output, and by definition adding two linear functions will also produce another linear output. Due to the linear nature of the output, adding more NN layers will show the same behavior as a single NN layer. To overcome this issue, a rectifier function, such as Rectified Linear Unit (ReLU), Leaky ReLU, TanH, Sigmoid, etc., has been introduced to make the output nonlinear.

- Pooling Operation: A CNN model produces a large amount of feature information. To reduce the feature dimensionality, a down-sampling method named a pooling operation has been performed. A few pooling operation methods are well known such as

- Max Pooling,

- Average Pooling.

For our analysis, we have utilized the Max Pooling operation that selects the maximum values within a particular patch. - Drop-Out: Due to the over training of the model, it shows very poor performance on the test dataset, which is known as over-fitting. These over-fitting issues have been controlled by removing some of the neurons from the network, which is known as Drop-Out.

- Decision Layer: For the classification decision, at the end of a CNN model, a decision layer is introduced. Normally, a Softmax layer or a SVM layer is introduced for this purpose. This layer contains a normalized exponential function and calculates the loss function for the data classification.

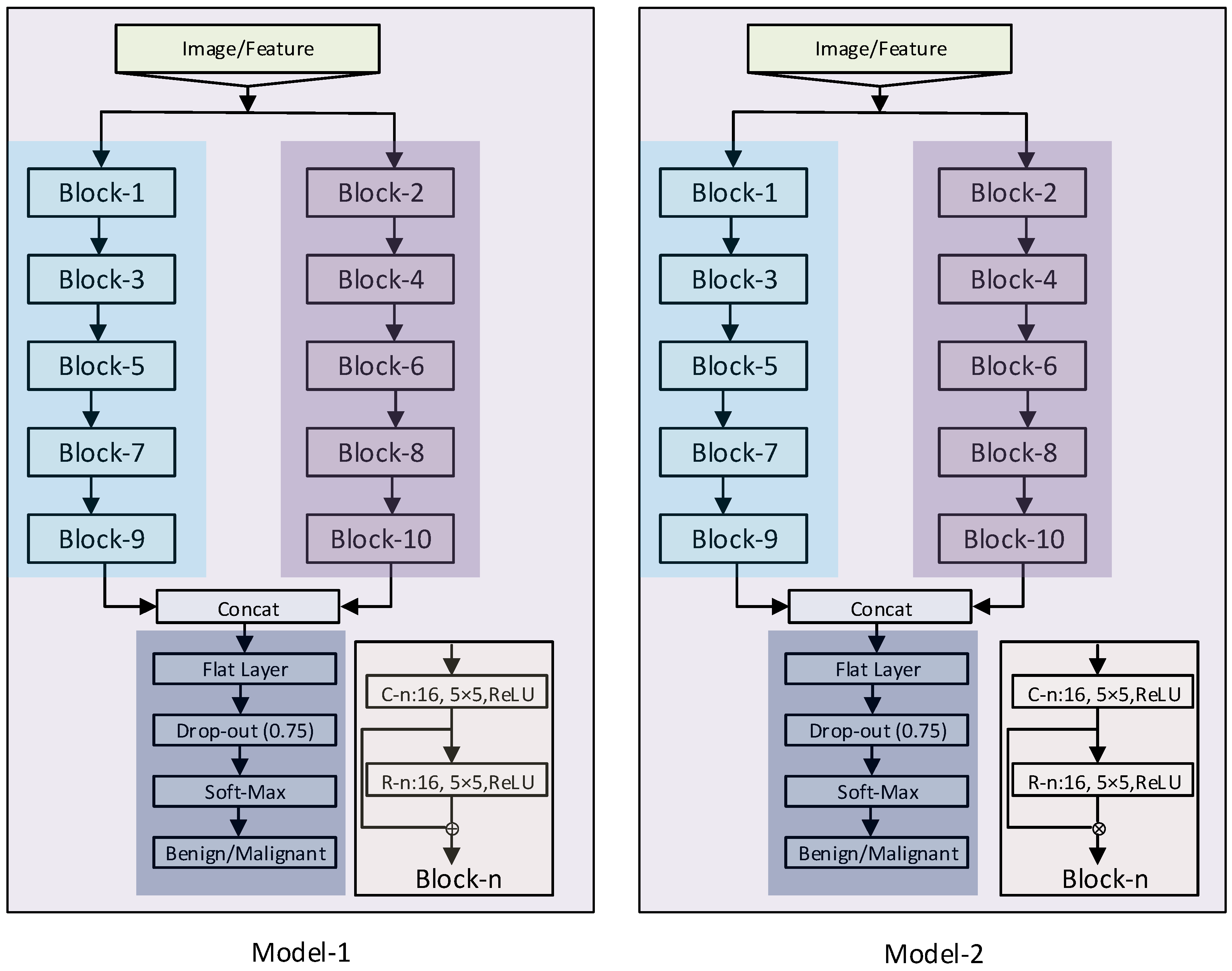

CNN Model for Image Classification

- Model-1: Model-1 utilizes a residual block, represented as Block-n. Each Block-n contains two convolutional blocks named C-n and R-n. The C-n layer convolves the input data with a kernel along with a ReLU rectifier and produces 16 feature maps. The output of the C-n layer passes through the R-n convolutional layer, which also utilizes a kernel along with a ReLU rectifier. The R-n layer also produces 16 feature maps. The output of the R-n layer is merged with the output of the layer and produces a residual output. The output of Block-n can be represented aswhere represents the weight matrix and represents the bias vector.The input matrix passes through Block-1 and Block-2 as shown in Figure 8 (left image). The Output of Block-1 is fed to Block-3, the output of Block-3 is fed as an input of Block-5, the output of Block-5 is fed as an input of Block-7, and the output of Block-7 is fed as an input of Block-9. Similarly, the output of Block-2 is fed to Block-4, the output of Block-4 is fed as an input of Block-6, the output of Block-6 is fed as an input of Block-8, and the output of Block-8 is fed as an input of the Block-10. Now, the output of Block-9 and Block-10 are concatenated in the Concat layer. After the Concat layer, a Flat Layer, a Drop-Out Layer and a Softmax layer have been placed one after another. The output of the Softmax layer has been used to classify the images into Benign and Malignant classes.

- Model-2: Model-2 utilizes almost the same architecture as Model-1. The only difference is that, in each Block-n, the output of layer C-n is multiplied (rather than added) with the output of layer R-n. The output of Block-n can be represented as

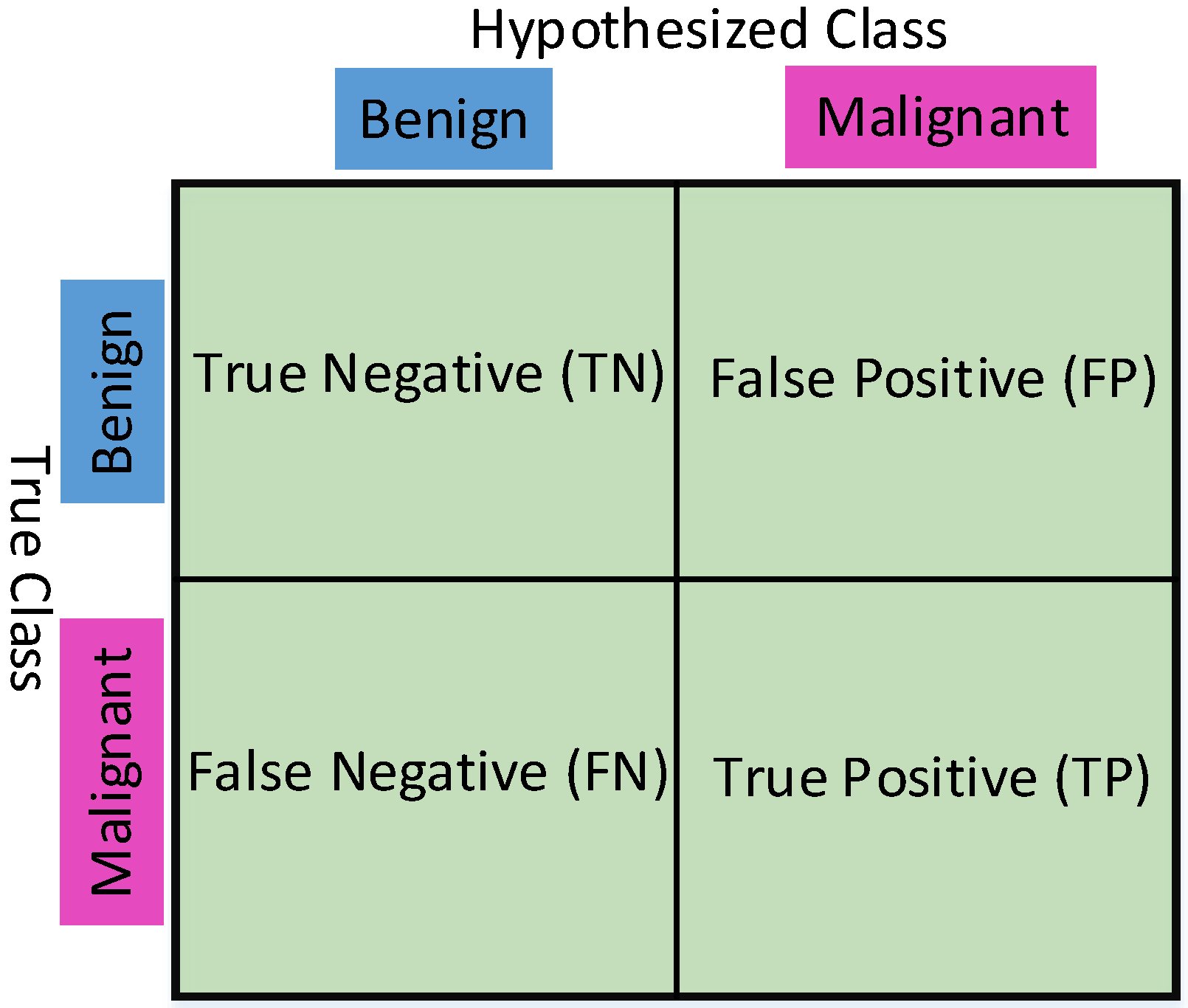

5. Performance Measuring Parameter and Utilized Platform

Platforms Used

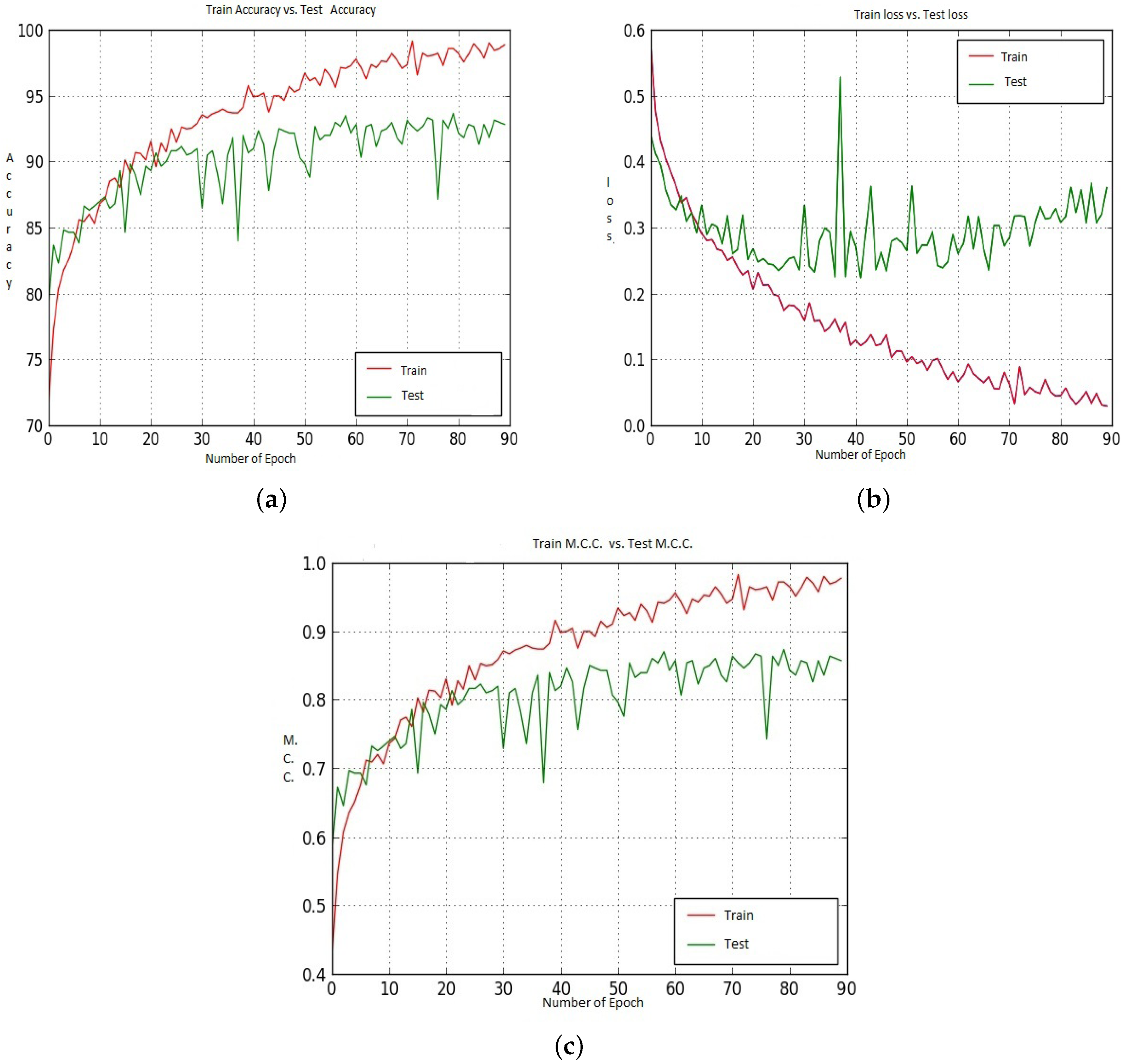

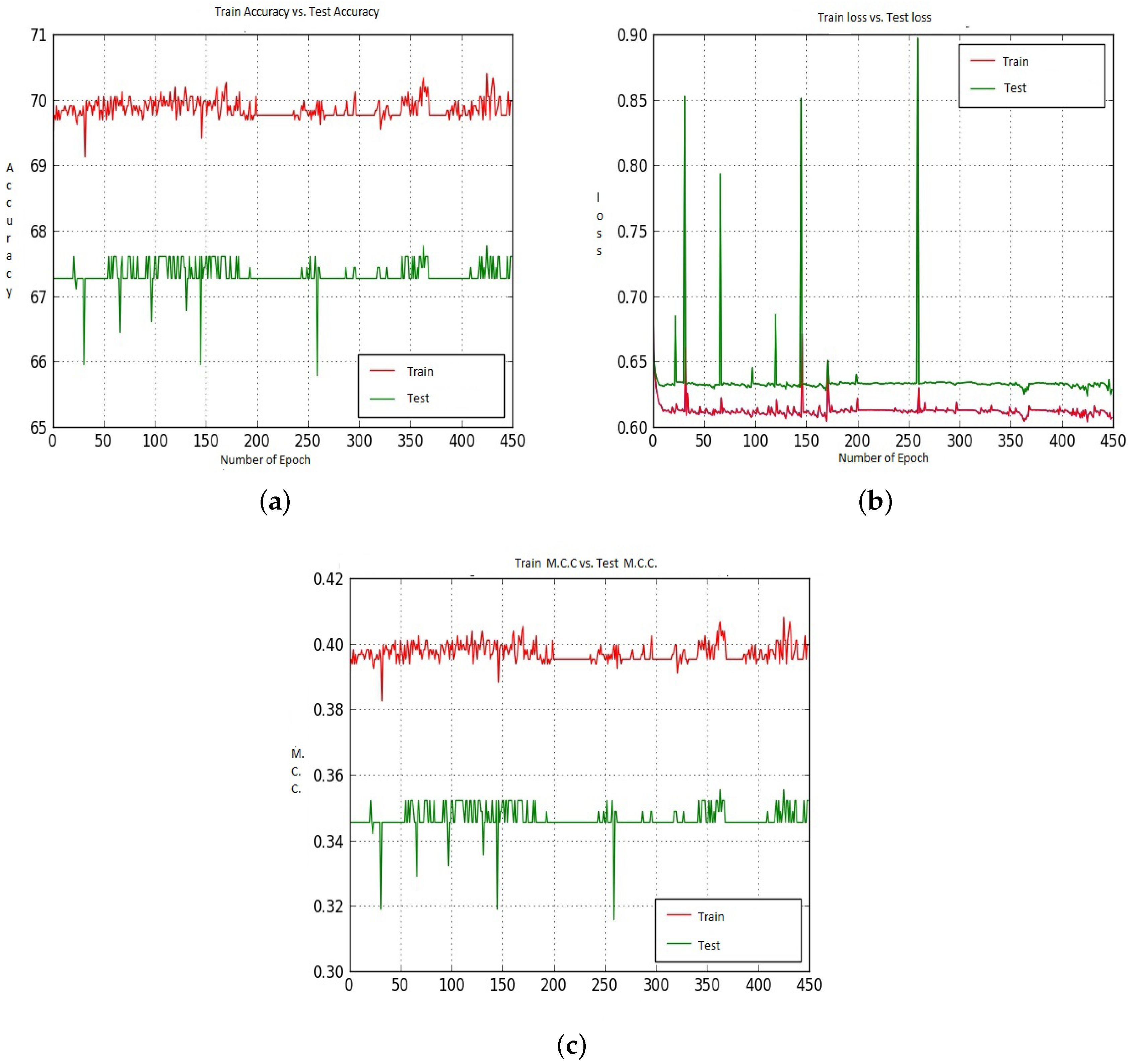

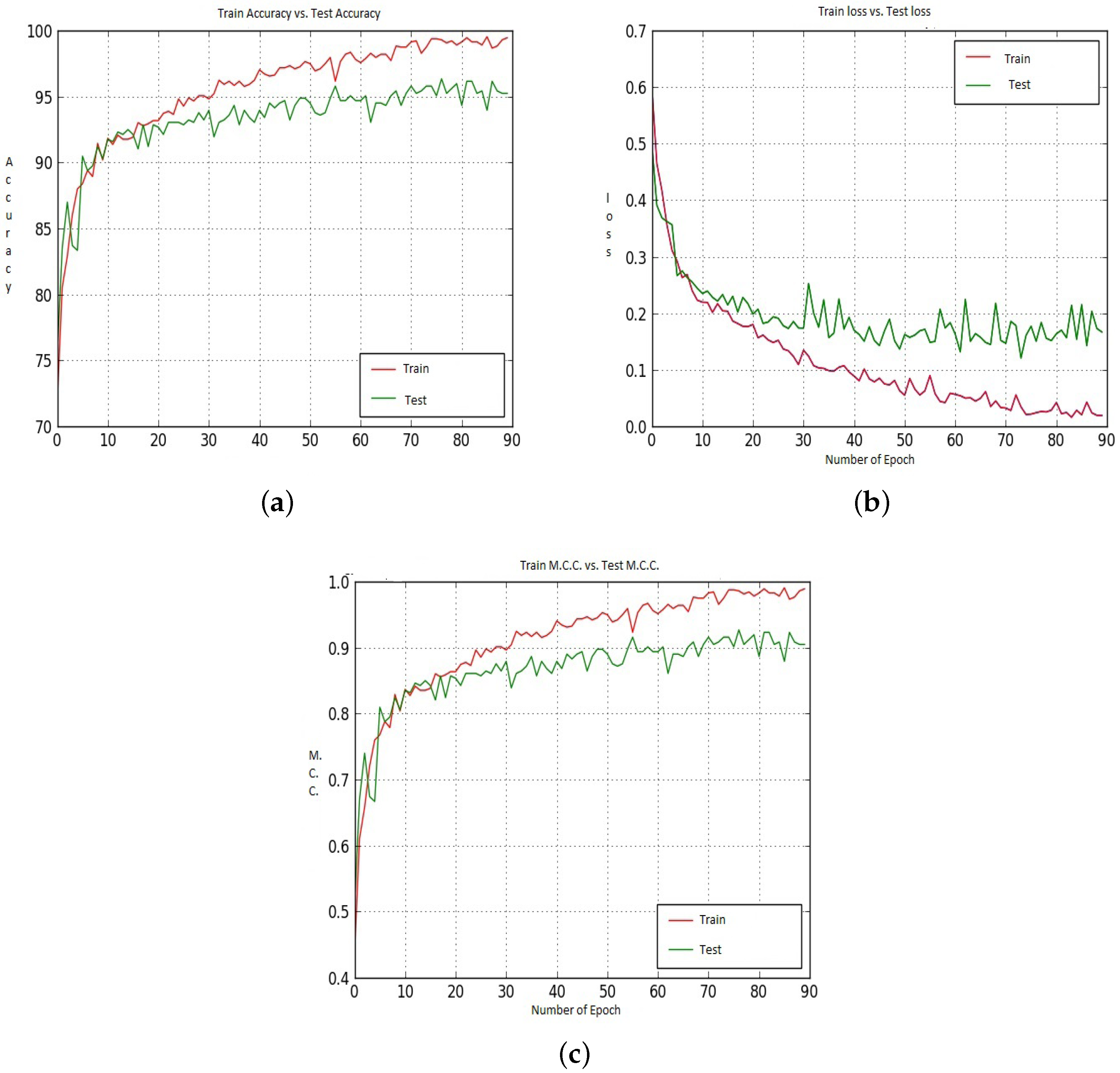

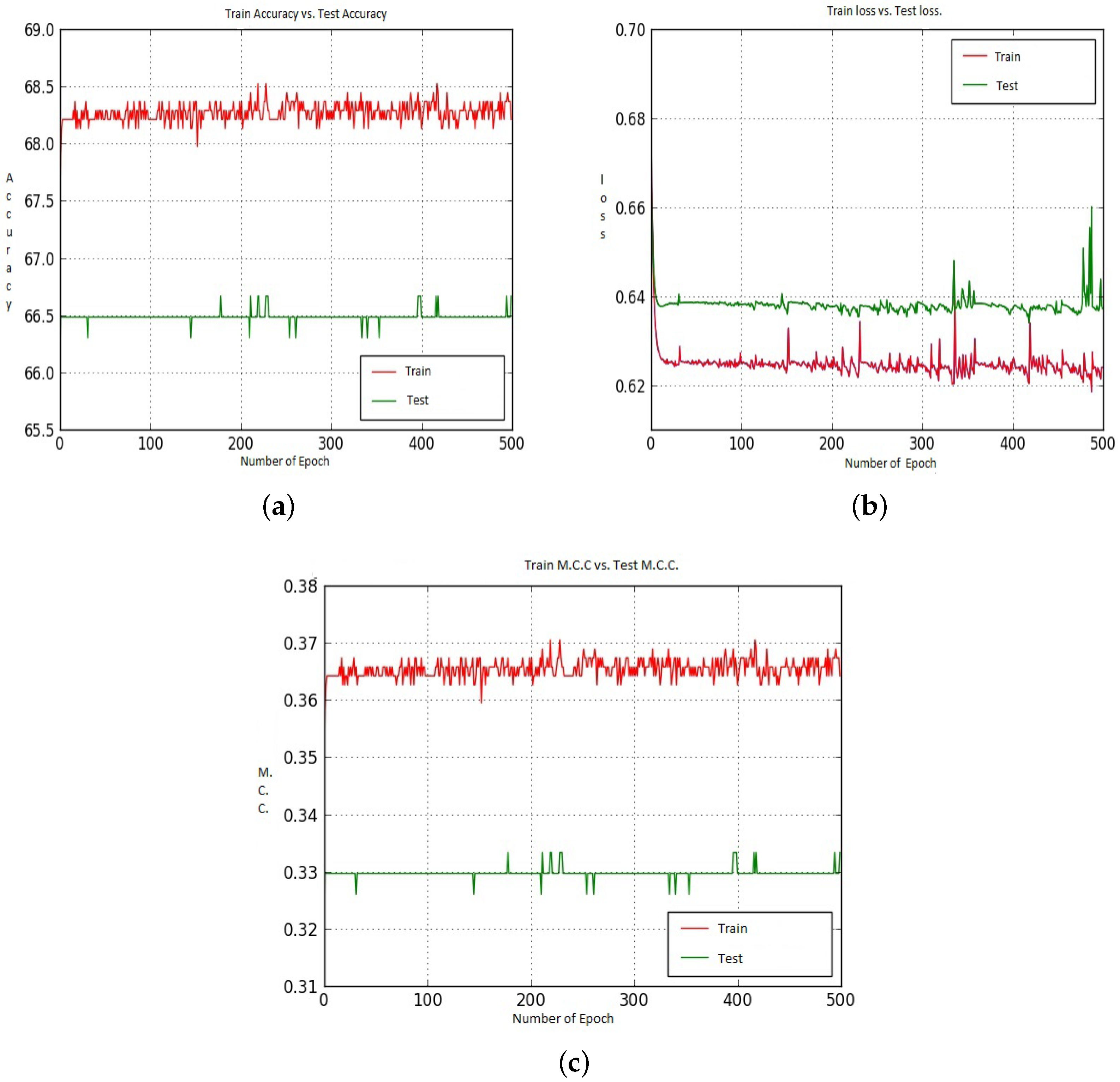

6. Results and Discussion

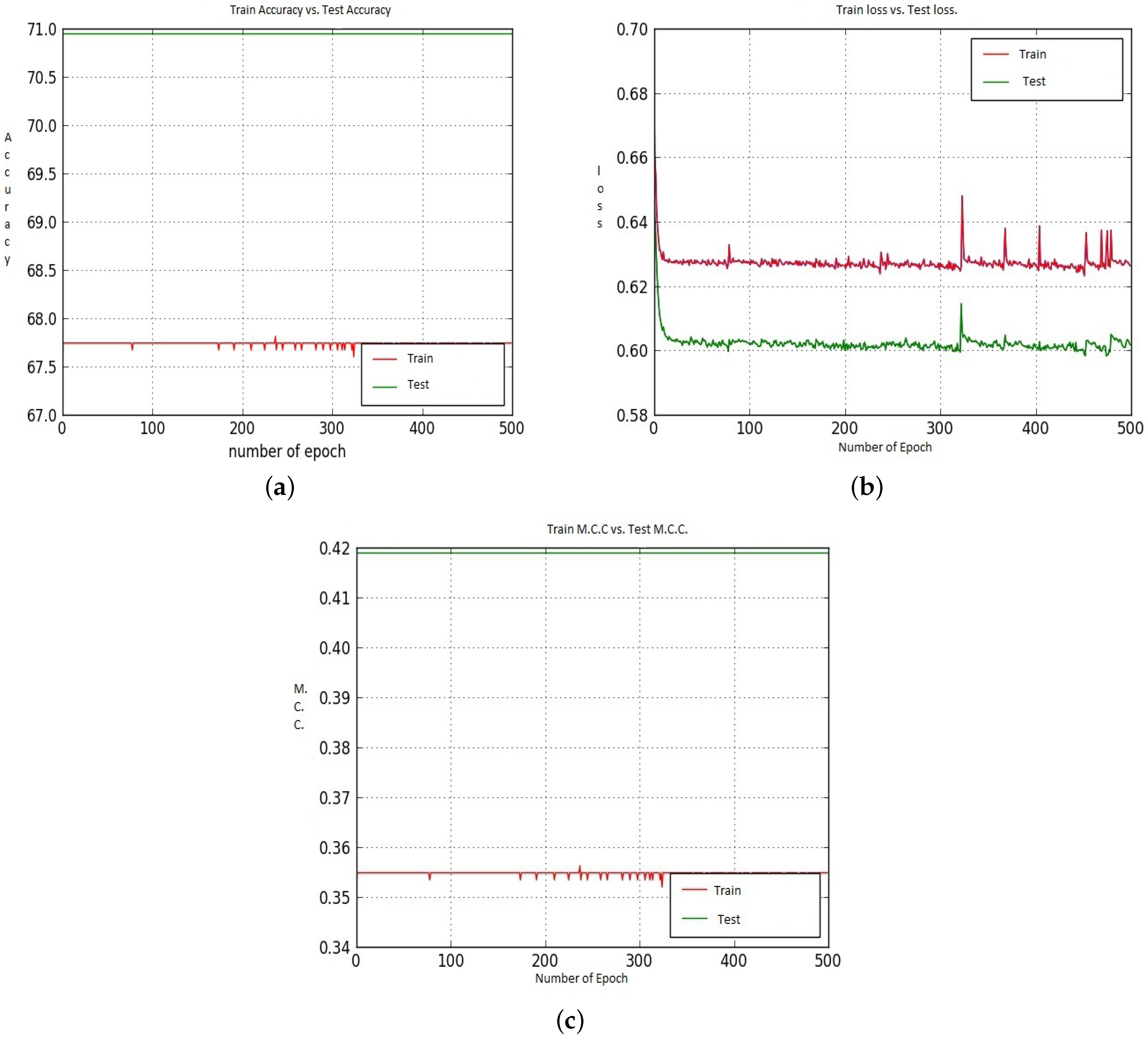

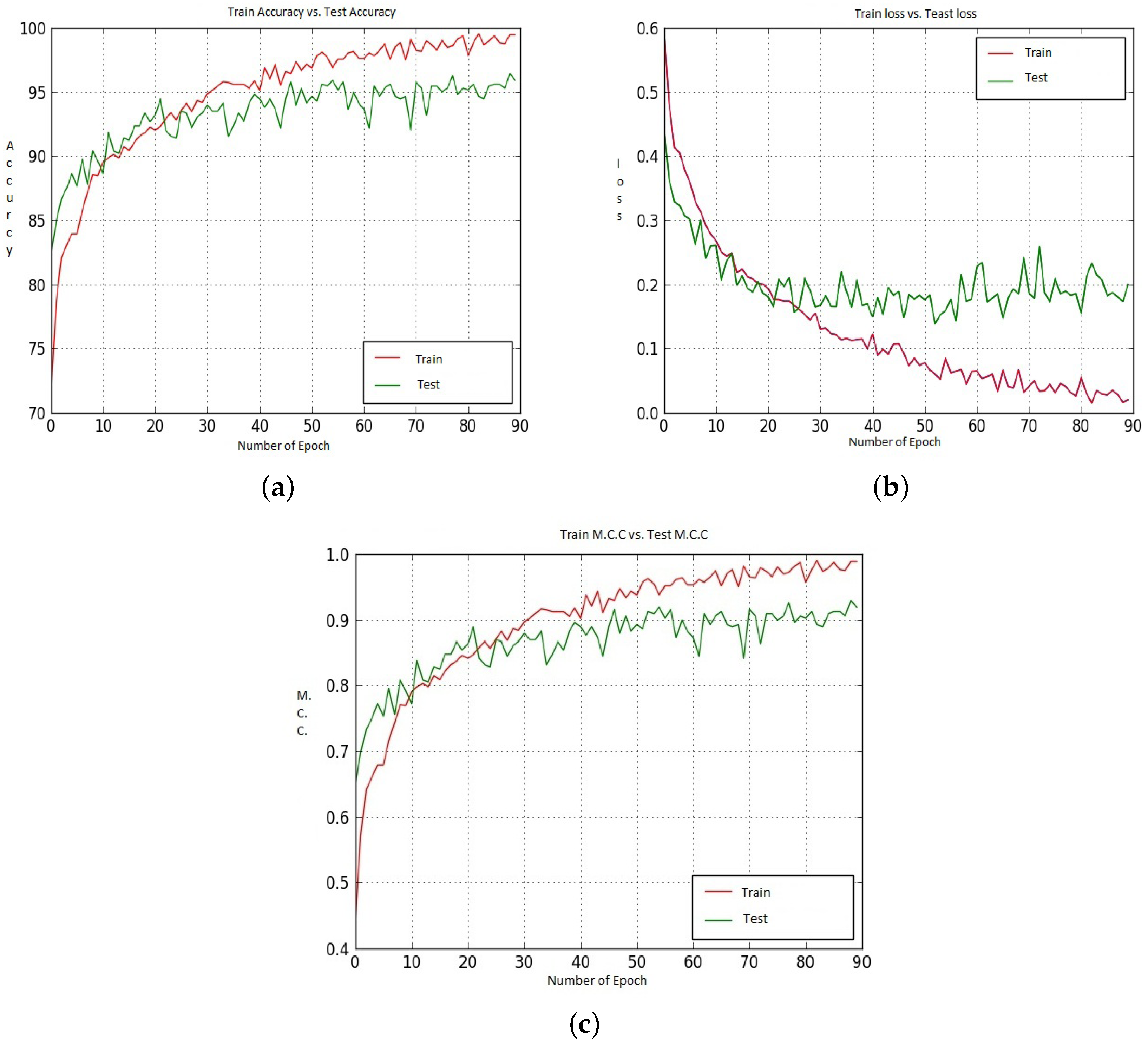

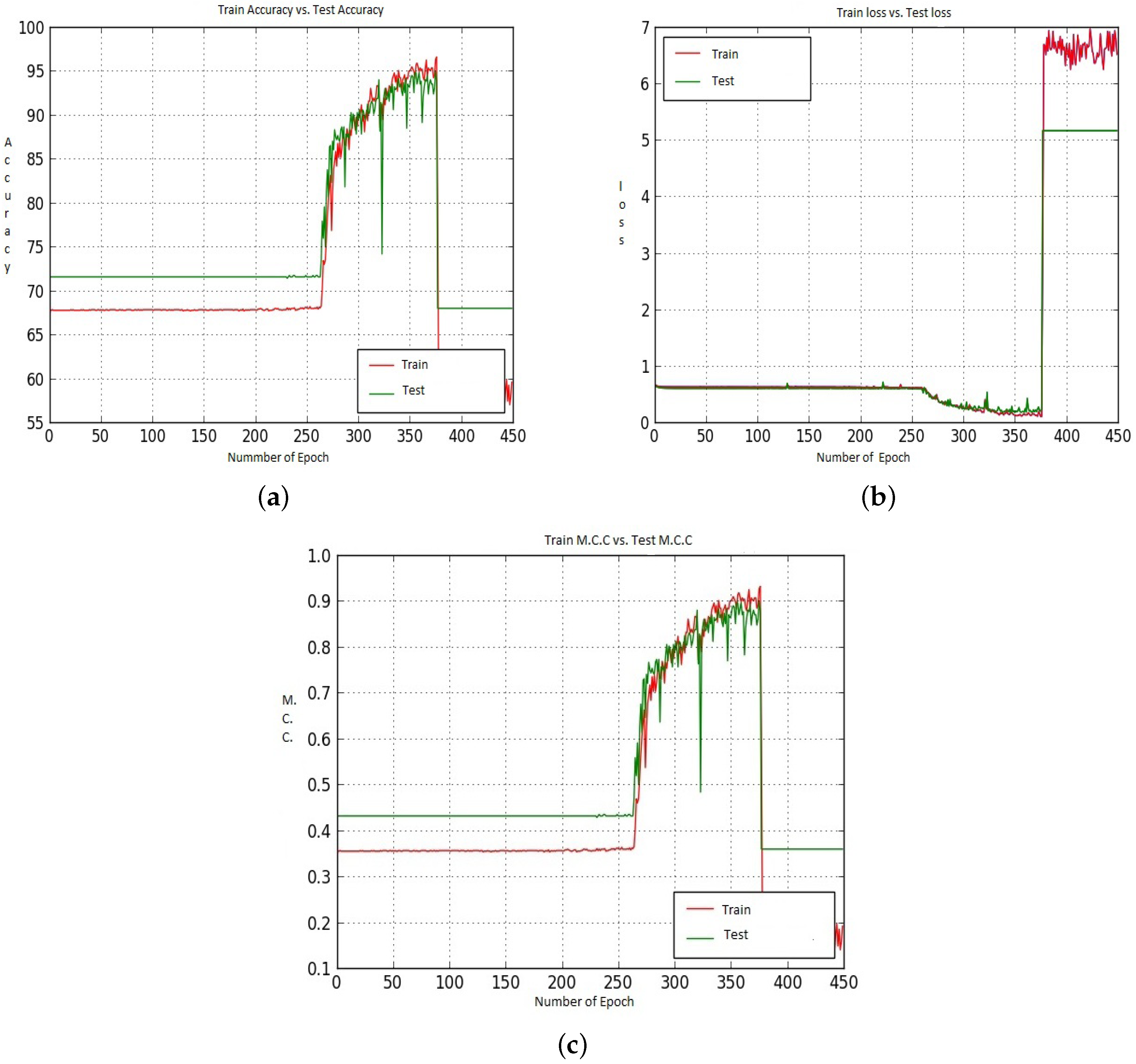

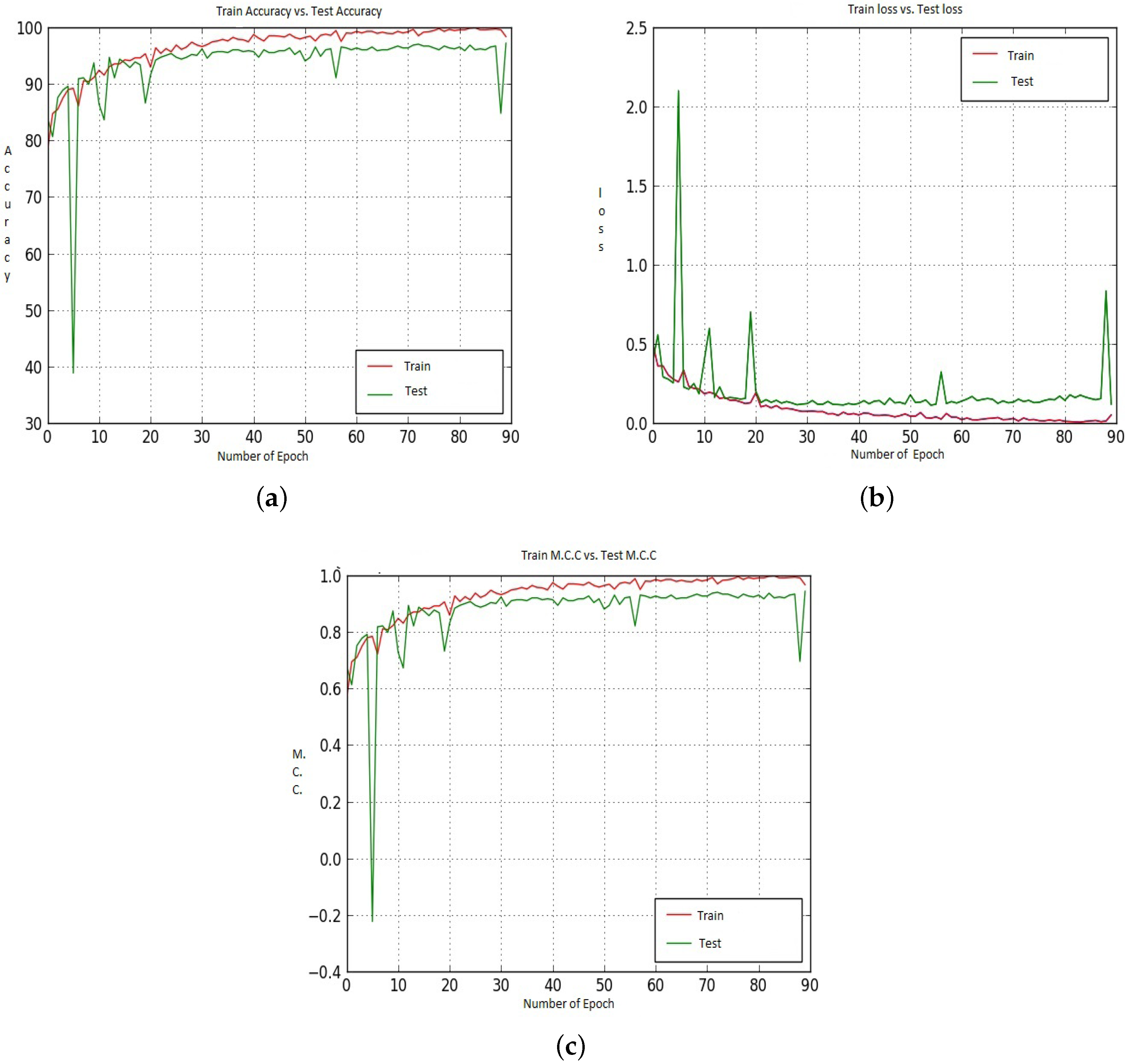

6.1. Performance of 40× Dataset

6.2. Performance of 100× Dataset

6.3. Performance of 200× Dataset

6.4. Performance of 400× Dataset

6.5. Required Time and Parameters

6.6. Comparison with Findings

7. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Bazzani, A.; Bevilacqua, A.; Bollini, D.; Brancaccio, R.; Campanini, R.; Lanconelli, N.; Riccardi, A.; Romani, D. An SVM classifier to separate false signals from microcalcifications in digital mammograms. Phys. Med. Biol. 2002, 46, 1651–1663. [Google Scholar]

- El-Naqa, I.; Yang, Y.; Wernick, M.; Galatsanos, N.; Nishikawa, R. A support vector machine approach for detection of microcalcifications. IEEE Trans. Med. Imaging 2002, 21, 1552–1563. [Google Scholar] [CrossRef] [PubMed]

- Mhala, N.C.; Bhandari, S.H. Improved approach towards classification of histopathology images using bag-of-features. In Proceedings of the 2016 International Conference on Signal and Information Processing (IConSIP), Vishnupuri, India, 6–8 October 2016. [Google Scholar]

- Dheeba, J.; Selvi, S.T. Classification of malignant and benign microcalcification using svm classifier. In Proceedings of the 2011 International Conference on Emerging Trends in Electrical and Computer Technology, Nagercoil, India, 23–24 March 2011; pp. 686–690. [Google Scholar]

- Taheri, M.; Hamer, G.; Son, S.H.; Shin, S.Y. Enhanced breast cancer classification with automatic thresholding using svm and harris corner detection. In Proceedings of the International Conference on Research in Adaptive and Convergent Systems (RACS‘ 16), Odense, Denmark, 11–14 October 2016; ACM: New York, NY, USA, 2016; pp. 56–60. [Google Scholar]

- Shirazi, F.; Rashedi, E. Detection of cancer tumors in mammography images using support vector machine and mixed gravitational search algorithm. In Proceedings of the 2016 1st Conference on Swarm Intelligence and Evolutionary Computation (CSIEC), Bam, Iran, 9–11 March 2016; pp. 98–101. [Google Scholar]

- Levman, J.; Leung, T.; Causer, P.; Plewes, D.; Martel, A.L. Classification of dynamic contrast-enhanced magnetic resonance breast lesions by support vector machines. IEEE Trans. Med. Imaging 2008, 27, 688–696. [Google Scholar] [CrossRef] [PubMed]

- Angayarkanni, S.P.; Kamal, N.B. Mri mammogram image classification using id3 algorithm. In Proceedings of the IET Conference on Image Processing (IPR 2012), London, UK, 3–4 July 2012; pp. 1–5. [Google Scholar]

- Gatuha, G.; Jiang, T. Evaluating diagnostic performance of machine learning algorithms on breast cancer. In Revised Selected Papers, Part II, Proceedings of the 5th International Conference on Intelligence Science and Big Data Engineering. Big Data and Machine Learning Techniques (IScIDE), Suzhou, China, 14–16 June 2015; Springer-Verlag Inc.: New York, NY, USA, 2015; Volume 9243, pp. 258–266. [Google Scholar]

- Zhang, Y.; Zhang, B.; Lu, W. Breast cancer classification from histological images with multiple features and random subspace classifier ensemble. AIP Conf. Proc. 2011, 1371, 19–28. [Google Scholar]

- Diz, J.; Marreiros, G.; Freitas, A. Using Data Mining Techniques to Support Breast Cancer Diagnosis; Springer International Publishing: New York, NY, USA, 2015; pp. 689–700. [Google Scholar]

- Kendall, E.J.; Flynn, M.T. Automated Breast Image Classification Using Features from Its Discrete Cosine Transform. PLoS ONE 2014, 9, e91015. [Google Scholar] [CrossRef] [PubMed]

- Burling-Claridge, F.; Iqbal, M.; Zhang, M. Evolutionary algorithms for classification of mammographie densities using local binary patterns and statistical features. In Proceedings of the 2016 IEEE Congress on Evolutionary Computation (CEC), Vancouver, BC, Canada, 24–29 July 2016; pp. 3847–3854. [Google Scholar]

- Rajakeerthana, K.T.; Velayutham, C.; Thangavel, K. Mammogram Image Classification Using Rough Neural Network. In Computational Intelligence, Cyber Security and Computational Models; Springer India: New Delhi, India, 2014; pp. 133–138. [Google Scholar]

- Lessa, V.; Marengoni, M. Applying Artificial Neural Network for the Classification of Breast Cancer Using Infrared Thermographic Images. In Computer Vision and Graphics, Proceedings of the International Conference on Computer Vision and Graphics (ICCVG 2016), Warsaw, Poland, 19–21 September 2016; Springer International Publishing: Cham, Switzerland, 2016; pp. 429–438. [Google Scholar]

- Peng, W.; Mayorga, R.; Hussein, E. An automated confirmatory system for analysis of mammograms. Comput. Methods Programs Biomed. 2016, 125, 134–144. [Google Scholar] [CrossRef] [PubMed]

- Silva, S.; Costa, M.; Pereira, W.; Filho, C. Breast tumor classification in ultrasound images using neural networks with improved generalization methods. In Proceedings of the 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milan, Italy, 25–29 August 2015; pp. 6321–6325. [Google Scholar]

- Lopez-Melendez, E.; Lara-Rodriguez, L.D.; Lopez-Olazagasti, E.; Sanchez-Rinza, B.; Tepichin-Rodriguez, E. Bicad: Breast image computer aided diagnosis for standard birads 1 and 2 in calcifications. In Proceedings of the 22nd International Conference on Electrical Communications and Computers (CONIELECOMP 2012), Cholula, Puebla, Mexico, 27–29 February 2012; pp. 190–195. [Google Scholar]

- Fukushima, K. Neocognitron: A self-organizing neural network model for a mechanism of pattern recognition unaffected by shift in position. Biol. Cybern. 1980, 36, 193–202. [Google Scholar] [CrossRef] [PubMed]

- Wu, C.Y.; Lo, S.C.B.; Freedman, M.T.; Hasegawa, A.; Zuurbier, R.A.; Mun, S.K. Classification of microcalcifications in radiographs of pathological specimen for the diagnosis of breast cancer. In Medical Imaging 1994: Image Processing; International Society for Optics and Photonics: Bellingham, WA, USA, 1994. [Google Scholar]

- Arevalo, J.; González, F.A.; Ramos-Pollán, R.; Oliveira, J.L.; Lopez, M.A.G. Representation learning for mammography mass lesion classification with convolutional neural networks. Comput. Methods Programs Biomed. 2016, 127, 248–257. [Google Scholar] [CrossRef] [PubMed]

- Zejmo, M.; Kowal, M.; Korbicz, J.; Monczak, R. Classification of breast cancer cytological specimen using convolutional neural network. J. Phys. Conf. Ser. 2017, 783, 012060. [Google Scholar] [CrossRef]

- Qiu, Y.; Wang, Y.; Yan, S.; Tan, M.; Cheng, S.; Liu, H.; Zheng, B. An initial investigation on developing a new method to predict short-term breast cancer risk based on deep learning technology. In Proceedings of the SPIE Medical Imaging, San Diego, CA, USA, 27 February–3 March 2016. [Google Scholar]

- Jiang, F.; Liu, H.; Yu, S.; Xie, Y. Breast mass lesion classification in mammograms by transfer learning. In Proceedings of the 5th International Conference on Bioinformatics and Computational Biology (ICBCB‘17), Hong Kong, China, 6–8 January 2017; ACM: New York, NY, USA, 2017; pp. 59–62. [Google Scholar]

- Suzuki, S.; Zhang, X.; Homma, N.; Ichiji, K.; Sugita, N.; Kawasumi, Y.; Ishibashi, T.; Yoshizawa, M. Mass detection using deep convolutional neural network for mammographic computer-aided diagnosis. In Proceedings of the 2016 55th Annual Conference of the Society of Instrument and Control Engineers of Japan (SICE), Tsukuba, Japan, 20–23 September 2016; pp. 1382–1386. [Google Scholar]

- Rezaeilouyeh, H.; Mollahosseini, A.; Mahoor, M.H. Microscopic medical image classification framework via deep learning and shearlet transform. J. Med. Imaging 2016, 3, 044501. [Google Scholar] [CrossRef] [PubMed]

- Sharma, K.; Preet, B. Classification of mammogram images by using cnn classifier. In Proceedings of the 2016 International Conference on Advances in Computing, Communications and Informatics (ICACCI), Jaipur, India, 21–24 September 2016; pp. 2743–2749. [Google Scholar]

- Jiao, Z.; Gao, X.; Wang, Y.; Li, J. A deep feature based framework for breast masses classification. Neurocomputing 2016, 197, 221–231. [Google Scholar] [CrossRef]

- Kooi, T.; Litjens, G.; van Ginneken, B.; Gubern-Merida, A.; Sanchez, C.I.; Mann, R.; den Heeten, A.; Karssemeijer, N. Large scale deep learning for computer aided detection of mammographic lesions. Med. Image Anal. 2017, 35, 303–312. [Google Scholar] [CrossRef] [PubMed]

- Anand, S.; Rathna, R.A.V. Detection of architectural distortion in mammogram images using contourlet transform. In Proceedings of the 2013 IEEE International Conference on Emerging Trends in Computing, Communication and Nanotechnology (ICECCN), Tirunelveli, India, 25–26 March 2013; pp. 177–180. [Google Scholar]

- Moayedi, F.; Azimifar, Z.; Boostani, R.; Katebi, S. Contourlet-Based Mammography Mass Classification; Springer: Berlin/Heidelberg, Germany, 2007; pp. 923–934. [Google Scholar]

- Jasmine, J.; Baskaran, S.; Govardhan, A. Nonsubsampled contourlet transform based classification of microcalcification in digital mammograms. Proc. Eng. 2012, 38, 622–631. [Google Scholar] [CrossRef]

- Pak, F.; Kanan, H.R.; Alikhassi, A. Breast cancer detection and classification in digital mammography based on Non-Subsampled Contourlet Transform (NSCT) and Super Resolution. Comput. Methods Programs Biomed. 2015, 122, 89–107. [Google Scholar] [CrossRef] [PubMed]

- Do, M.N.; Vetterli, M. The contourlet transform: An efficient directional multiresolution image representation. IEEE Trans. Image Process. 2005, 14, 2091–2106. [Google Scholar] [CrossRef] [PubMed]

- Burt, P.; Adelson, E. The laplacian pyramid as a compact image code. IEEE Trans. Commun. 1983, 31, 532–540. [Google Scholar] [CrossRef]

- Ojala, T.; Pietikainen, M.; Maenpaa, T. Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 971–987. [Google Scholar] [CrossRef]

- Strang, G. The discrete cosine transform. SIAM Rev. 1999, 41, 135–147. [Google Scholar] [CrossRef]

- Marom, N.D.; Rokach, L.; Shmilovici, A. Using the confusion matrix for improving ensemble classifiers. In Proceedings of the 2010 IEEE 26-th Convention of Electrical and Electronics Engineers in Israel, Eliat, Israel, 17–20 November 2010; pp. 555–559. [Google Scholar]

- Spanhol, F.A.; Oliveira, L.S.; Petitjean, C.; Heutte, L. A dataset for breast cancer histopathological image classification. IEEE Trans. Biomed. Eng. 2016, 63, 1455–1462. [Google Scholar] [CrossRef] [PubMed]

- Brook, E.; El-yaniv, R.; Isler, E.; Kimmel, R.; Member, S.; Meir, R.; Peleg, D. Breast cancer diagnosis from biopsy images using generic features and svms. IEEE Trans. Biomed. Eng. 2006. [Google Scholar]

- Zhang, B. Breast cancer diagnosis from biopsy images by serial fusion of random subspace ensembles. In Proceedings of the 2011 4th International Conference on Biomedical Engineering and Informatics (BMEI), Shanghai, China, 15–17 October 2011; pp. 180–186. [Google Scholar]

- Cireşan, D.C.; Giusti, A.; Gambardella, L.M.; Schmidhuber, J. Mitosis Detection in Breast Cancer Histology Images with Deep Neural Networks; Springer: Berlin/Heidelberg, Germany, 2013; pp. 411–418. [Google Scholar]

- Wang, H.; Roa, A.C.; Basavanhally, A.N.; Gilmore, H.; Shih, N.; Feldman, M.; Tomaszewski, J.; Gonzalez, F.; Madabhushi, A. Mitosis detection in breast cancer pathology images by combining handcrafted and convolutional neural network features. J. Med. Imaging 2014, 1, 034003. [Google Scholar] [CrossRef] [PubMed]

- Spanhol, F.A.; Oliveira, L.S.; Petitjean, C.; Heutte, L. Breast cancer histopathological image classification using convolutional neural networks. In Proceedings of the 2016 International Joint Conference on Neural Networks (IJCNN), Vancouver, BC, Canada, 24–29 July 2016; pp. 2560–2567. [Google Scholar]

- Han, Z.; Wei, B.; Zheng, Y.; Yin, Y.; Li, K.; Li, S. Breast cancer multi-classification from histopathological images with structured deep learning model. Sci. Rep. 2017, 7, 4172. [Google Scholar] [CrossRef] [PubMed]

- Dimitropoulos, K.; Barmpoutis, P.; Zioga, C.; Kamas, A.; Patsiaoura, K.; Grammalidis, N. Grading of invasive breast carcinoma through grassmannian vlad encoding. PLoS ONE 2017, 12, e0185110. [Google Scholar] [CrossRef] [PubMed]

- Wang, P.; Hu, X.; Li, Y.; Liu, Q.; Zhu, X. Automatic cell nuclei segmentation and classification of breast cancer histopathology images. Signal Process. 2016, 122, 1–13. [Google Scholar] [CrossRef]

| Case Name | CNN-CH | CNN-CL | CNN-DF | CNN-DC |

|---|---|---|---|---|

| Total Features (Hand Crafted) | 961 | 961 | 2883 | 2883 |

| Metric Name | Mathematical Expression | Highest Value | Lowest Value |

|---|---|---|---|

| Recall | +1 | 0 | |

| Precision | +1 | 0 | |

| Specificity | +1 | 0 | |

| F-measure | +1 | 0 | |

| M.C.C. | +1 | −1 |

| Accuracy % | TNR/Specificity % | FPR (%) | FNR (%) | TPR/Recall (%) | Precision (%) | F-Measure (%) | ||

|---|---|---|---|---|---|---|---|---|

| Model-1 | CNN-CH | 94.40 | 86.00 | 14.00 | 04.00 | 96.00 | 94.00 | 95.00 |

| CNN-CL | 93.32 | 85.05 | 15.95 | 03.20 | 96.70 | 94.00 | 95.00 | |

| CNN-I | 87.47 | 79.31 | 20.68 | 10.00 | 91.00 | 91.00 | 91.00 | |

| CNN-DF | 88.31 | 78.16 | 21.80 | 07.52 | 92.47 | 91.00 | 92.00 | |

| CNN-DC | 86.47 | 74.37 | 25.86 | 08.47 | 91.52 | 90.00 | 91.00 | |

| Model-2 | CNN-CH | 70.00 | 0.00 | 100.00 | 0.00 | 100.00 | 66.00 | 80.00 |

| CNN-CL | 80.30 | 45.77 | 54.07 | 5.6 | 94.35 | 81.00 | 79.00 | |

| CNN-I | 88.31 | 69.45 | 30.45 | 4.00 | 96.00 | 88.50 | 84.78 | |

| CNN-DF | 85.47 | 73.56 | 26.43 | 9.40 | 90.35 | 89.30 | 83.45 | |

| CNN-DC | 86.50 | 52.87 | 47.12 | 3.2 | 96.72 | 83.00 | 90.00 |

| Accuracy % | TNR/Specificity % | FPR (%) | FNR (%) | TPR/Recall (%) | Precision (%) | F-Measure (%) | ||

|---|---|---|---|---|---|---|---|---|

| Model-1 | CNN-CH | 95.93 | 94.85 | 05.15 | 03.64 | 96.36 | 98.00 | 97.00 |

| CNN-CL | 92.00 | 89.10 | 10.90 | 06.70 | 93.30 | 96.00 | 94.00 | |

| CNN-I | 87.15 | 67.42 | 32.50 | 05.00 | 95.00 | 88.00 | 95.00 | |

| CNN-DF | 89.26 | 81.14 | 18.85 | 07.50 | 92.5 | 93.00 | 93.00 | |

| CNN-DC | 87.15 | 78.28 | 21.71 | 09.31 | 90.68 | 91.00 | 91.00 | |

| Model-2 | CNN-CH | 67.96 | 43.00 | 57.00 | 22.00 | 78.00 | 78.00 | 78.00 |

| CNN-CL | 78.53 | 31.42 | 68.52 | 2.73 | 97.27 | 78.00 | 75.00 | |

| CNN-I | 86.12 | 81.87 | 18.18 | 11.26 | 88.78 | 93.00 | 87.00 | |

| CNN-DF | 85.47 | 85.71 | 14.20 | 17.95 | 82.05 | 94.00 | 87.00 | |

| CNN-DC | 86.11 | 65.71 | 34.28 | 6.13 | 93.86 | 87.00 | 90.00 |

| Accuracy % | TNR/Specificity % | FPR (%) | FNR (%) | TPR/Recall (%) | Precision (%) | F-Measure (%) | ||

|---|---|---|---|---|---|---|---|---|

| Model-1 | CNN-CH | 97.19 | 94.94 | 5.06 | 1.70 | 98.20 | 98.00 | 98.00 |

| CNN-CL | 94.00 | 92.42 | 07.57 | 05.65 | 09.41 | 96.00 | 95.00 | |

| CNN-I | 86.44 | 79.31 | 24.74 | 08.10 | 91.89 | 88.00 | 86.00 | |

| CNN-DF | 87.10 | 88.38 | 11.60 | 13.51 | 86.48 | 94.00 | 90.00 | |

| CNN-DC | 85.61 | 71.71 | 28.28 | 08.00 | 92.00 | 87.00 | 90.00 | |

| CNN-CH | 67.60 | 1.00 | 98.98 | 0.00 | 100.00 | 67.00 | 81.00 | |

| Model-2 | CNN-CL | 67.27 | 0.00 | 100.00 | 0.00 | 100.00 | 67.00 | 80.00 |

| CNN-I | 86.00 | 81.87 | 18.18 | 11.26 | 88.78 | 93.00 | 87.00 | |

| CNN-DF | 85.28 | 72.22 | 27.77 | 8.30 | 96.60 | 87.00 | 89.00 | |

| CNN-DC | 67.00 | 0 | 100.00 | 0 | 100.00 | 67.00 | 80.00 |

| Accuracy % | TNR/Specificity % | FPR (%) | FNR (%) | TPR/Recall (%) | Precision (%) | F-Measure (%) | ||

|---|---|---|---|---|---|---|---|---|

| Model-1 | CNN-CH | 96.00 | 90.16 | 9.84 | 2.2 | 97.79 | 95.00 | 96.00 |

| CNN-CL | 80.00 | 80.87 | 19.10 | 6.60 | 9.39 | 91.00 | 92.00 | |

| CNN-I | 84.43 | 70.49 | 29.50 | 8.50 | 91.46 | 86.00 | 89.00 | |

| CNN-DF | 93.00 | 87.43 | 12.56 | 7.70 | 92.30 | 94.00 | 93.00 | |

| CNN-DC | 92.00 | 85.70 | 14.20 | 7.10 | 92.20 | 93.00 | 93.00 | |

| Model-2 | CNN-CH | 67.80 | 0.005 | 99.95 | 0.00 | 1.00 | 0.67 | 0.80 |

| CNN-CL | 66.48 | 00.00 | 100.00 | 0.00 | 100/00 | 44.00 | 53.00 | |

| CNN-I | 86.34 | 75.40 | 24.59 | 10.46 | 89.50 | 88.00 | 89.00 | |

| CNN-DF | 87.17 | 74.86 | 25.13 | 6.61 | 93.30 | 88.00 | 91.00 | |

| CNN-DC | 86.26 | 73.22 | 26.77 | 7.11 | 92.83 | 87.00 | 90.00 |

| Model | Case | Parameters | Time (s) | Model | Case | Parameters | Time (s) |

|---|---|---|---|---|---|---|---|

| Model-1 | CNN-CH | 120,466 | 45 | Model-2 | CNN-CH | 119,666 | 45 |

| CNN-CL | 119,666 | 45 | CNN-CL | 120,466 | 45 | ||

| CNN-I | 119,666 | 38 | CNN-I | 120,466 | 38 | ||

| CNN-DF | 119,666 | 40 | CNN-DF | 120,466 | 40 | ||

| CNN-DC | 119,666 | 40 | CNN-DC | 120,466 | 40 |

| Authors | Dataset Details | Features | Classification Tool | Number of Classes | Accuracy % | Sensitivity % | Recall % | Precision % | ROC % |

|---|---|---|---|---|---|---|---|---|---|

| Brook et al. [40] | Total Sample: 361 | 1. Local Features 2. 1050 Features. | SVM | 1. 3 Classes a. normal tissue b. carcinoma in situ c. invasive ductal | 96.40 | — | — | — | — |

| Zhang [41] | Total Sample: 361 | 1. Local Feature 2. Texural property 3. Curvelet transform | Ensemble | 1. 3 Classes a. normal tissue b. carcinoma as situ c. invasive ductal | 97.00 | — | — | — | — |

| Ciresan et al. [42] | ICPR12 | 1. Globaal Features | DNN | 2 Classes | — | — | 70.00 | 88.00 | — |

| Wang et al. [43] | ICPR12 | 1. Global Feature 2. Textural Features | — | — | — | — | — | — | 73.45 |

| Wang et al. [47] | Total 68 images | 1. Local Features | SVM | 2 Classes | 95.50 | 99.32 | 94.14 | — | — |

| Spanhol et al. [44] | BreakHis a. 40× b. 100× c. 200× d. 400× | Global Features | CNN | 2 Classes | a. 90.40 b. 87.40 c. 85.00 d. 83.00 | — | — | — | — |

| Han et al. [45] | BreakHis a. 40× b. 100× c. 200× d. 400× | Global Features | VLAD | 2 Classes | a. 95.80 ± 3.1 b. 96.90 ± 1.9 c. 96.70 ± 2.0 d. 94.90 ± 2.8 | — | — | — | — |

| Dimitropoulos et al. [46] | BreakHis a. 40× b. 100× c. 200× d. 400× | Global Features | VLAD | 2 Classes | a. 91.80 b. 92.10 c. 91.40 d. 90.20 | — | — | — | — |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Nahid, A.-A.; Kong, Y. Histopathological Breast-Image Classification Using Local and Frequency Domains by Convolutional Neural Network. Information 2018, 9, 19. https://doi.org/10.3390/info9010019

Nahid A-A, Kong Y. Histopathological Breast-Image Classification Using Local and Frequency Domains by Convolutional Neural Network. Information. 2018; 9(1):19. https://doi.org/10.3390/info9010019

Chicago/Turabian StyleNahid, Abdullah-Al, and Yinan Kong. 2018. "Histopathological Breast-Image Classification Using Local and Frequency Domains by Convolutional Neural Network" Information 9, no. 1: 19. https://doi.org/10.3390/info9010019