A Hybrid Information Mining Approach for Knowledge Discovery in Cardiovascular Disease (CVD)

Abstract

1. Introduction

1.1. Big Data and Business Intelligence

1.2. Knowledge Discovery in Healthcare

2. Literature Review

3. Research Method

3.1. Domain Context: CVD Risk Dataset

3.2. Data Source

4. Analysis Methods

4.1. Descriptive Method: Clustering

4.2. Local Method: Association Rules

Apriori Algorithm

- Step 1: Count item occurrences to calculate large 1-itemsets;

- Step 2: Iterate Steps 3–6 until no new large itemsets are found;

- Step 3: Candidate itemsets of length K + 1 are generated from the large itemsets of length K;

- Step 4: Candidate itemsets containing a K-length subset that is not large are not considered;

- Step 5: Count the support of each candidate itemset by scanning the dataset;

- Step 6: Remove candidate itemsets that are small, i.e., whose support is below the minimum support threshold.
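The six steps above can be sketched in Python as follows. This is a minimal, unoptimized illustration, not the authors' Weka configuration: it assumes each transaction is a set of items (for example `attribute=value` pairs) and that the minimum support is an absolute count. The function name `apriori` and the toy data are hypothetical.

```python
from itertools import combinations


def apriori(transactions, min_support):
    """Return {frozenset(itemset): support_count} for every large itemset.

    min_support is an absolute count of transactions (an assumption of this sketch).
    """
    transactions = [frozenset(t) for t in transactions]

    # Step 1: count single items to obtain the large 1-itemsets
    counts = {}
    for t in transactions:
        for item in t:
            key = frozenset([item])
            counts[key] = counts.get(key, 0) + 1
    current = {s: c for s, c in counts.items() if c >= min_support}
    large = dict(current)
    k = 1

    # Step 2: keep iterating while the previous pass produced large itemsets
    while current:
        # Step 3: join pairs of large k-itemsets into (k+1)-length candidates
        prev = list(current)
        candidates = set()
        for i in range(len(prev)):
            for j in range(i + 1, len(prev)):
                union = prev[i] | prev[j]
                if len(union) != k + 1:
                    continue
                # Step 4: prune candidates that have a k-subset which is not large
                if all(frozenset(sub) in current for sub in combinations(union, k)):
                    candidates.add(union)

        # Step 5: count the support of each candidate with one scan of the data
        cand_counts = {c: 0 for c in candidates}
        for t in transactions:
            for c in candidates:
                if c <= t:
                    cand_counts[c] += 1

        # Step 6: discard the small candidates (support below the threshold)
        current = {c: n for c, n in cand_counts.items() if n >= min_support}
        large.update(current)
        k += 1
    return large


# Hypothetical toy data: every pair of items occurs 3 times, the triple only twice
data = [{"x", "y", "z"}, {"x", "y"}, {"x", "z"}, {"y", "z"}, {"x", "y", "z"}]
result = apriori(data, min_support=3)
# 6 large itemsets: three singletons (count 4) and three pairs (count 3)
```

The pairwise join in Step 3 is a simple variant of the classic prefix-based join; the subset check in Step 4 keeps the result identical while avoiding a scan for candidates that cannot be large.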

4.3. Predictive Method: Neural Networks

- The first layer is called an input layer and is in direct contact with the input data;

- The intermediate layer is called a hidden layer and has no direct contact with the outside, as it receives data from the input layer and sends it to the output layer;

- The last layer is the output layer, which receives data from the neurons of the hidden layer and returns the network's output.
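To make the three-layer structure concrete, the sketch below computes a forward pass through one hidden layer in plain Python. It is illustrative only: the sigmoid activation, the layer sizes, and all weights are assumptions, since the network configuration is not given in this section, and the function names are hypothetical.

```python
import math


def sigmoid(z):
    """Logistic activation (assumed here; other activations are possible)."""
    return 1.0 / (1.0 + math.exp(-z))


def dense(inputs, weights, biases):
    """Fully connected layer: each neuron applies the activation to a
    weighted sum of all its inputs plus a bias."""
    return [
        sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def forward(x, w_hidden, b_hidden, w_out, b_out):
    # Input layer: x is passed in unchanged, one value per attribute
    hidden = dense(x, w_hidden, b_hidden)  # hidden layer: no direct contact with the outside
    return dense(hidden, w_out, b_out)     # output layer: sees only hidden activations


# Hypothetical example: 3 inputs, 2 hidden neurons, 1 output neuron
x = [1.0, 0.0, 1.0]
out = forward(x, [[1, 1, 1], [-1, -1, -1]], [0, 0], [[1, 1]], [-1])
```

Training (for example by backpropagation of the output error) would adjust `w_hidden`, `b_hidden`, `w_out`, and `b_out`; only the forward direction is shown here.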

5. Data Analysis and Results

5.1. Data Preprocessing

- Data Selection. Selection of the data and attributes that are most advantageous for mining.

- Data Cleaning. Removal of all data considered noisy. In addition, not all attributes had been collected for some patients; we integrated the missing data where possible and discarded the records of patients whose data could not be integrated.

- Data Discretization. Transformation of attributes into discrete variables that take a small set of values, by partitioning the values of continuous attributes into a small list of intervals. For some attributes, discretization was done manually on the basis of the healthcare literature; in other cases, we used the “Discretize” filter of the Weka software (v 3.8.1).

- Data Integration. When data come from several sources, they usually must first be combined into a single dataset before mining. In this case, we have multiple sources: (1) tables that stored patients’ monitoring data of specific attributes of CVD risk; (2) tables related to hospital admissions, which also stored other information such as the death of the patients; (3) tables related to specialist health services; (4) tables related to medicines used; and (5) tables related to diagnosis of diseases pertinent to CVD risk. By integrating all these data, we created a general database.

- Data Filtering. Every data mining problem requires restricting the search domain according to the purpose of the task. Thus, each resulting temporary table contains only the patient attributes and data relevant to the applied filter.
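The discretization, integration, and filtering steps can be illustrated with a small Python sketch. Several parts are assumptions: the manual cut points reuse the CVD risk class boundaries (5, 10, 15, 20, 30%) from the risk class table; equal-width binning is shown as one common unsupervised strategy and is not claimed to reproduce Weka's settings; integration is modeled as an inner join on a patient id, mirroring the discarding of patients whose data could not be integrated. The attribute `risk` and all function names are hypothetical.

```python
import bisect

# Manual cut points (in %), taken from the CVD risk classes in the risk class table
CVD_CUTS = [5, 10, 15, 20, 30]
CVD_LABELS = ["CVD1", "CVD2", "CVD3", "CVD4", "CVD5", "CVD6"]


def discretize_manual(value, cuts=CVD_CUTS, labels=CVD_LABELS):
    """Map a continuous value to the label of its interval.
    A value equal to a cut point goes to the upper interval (5 -> CVD2)."""
    return labels[bisect.bisect_right(cuts, value)]


def equal_width_bins(values, n_bins):
    """Unsupervised equal-width binning: return a bin index for each value."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / n_bins
    if width == 0:
        return [0] * len(values)
    # The maximum would fall in bin n_bins, so clamp it into the last bin
    return [min(int((v - lo) / width), n_bins - 1) for v in values]


def integrate(*sources):
    """Merge per-patient dicts from several sources (patient id -> attributes).
    Only patients present in every source are kept (inner join, an assumption)."""
    common = set(sources[0])
    for src in sources[1:]:
        common &= set(src)
    merged = {}
    for pid in sorted(common):
        record = {}
        for src in sources:
            record.update(src[pid])
        merged[pid] = record
    return merged


def filter_patients(records, predicate, attributes):
    """Keep patients satisfying the predicate, projected onto the chosen attributes."""
    return {
        pid: {a: rec[a] for a in attributes}
        for pid, rec in records.items()
        if predicate(rec)
    }


# Hypothetical usage with two toy sources
monitoring = {1: {"risk": 3.2}, 2: {"risk": 12.0}, 3: {"risk": 35.0}}
admissions = {1: {"Decesso": "no"}, 2: {"Decesso": "si"}}
merged = integrate(monitoring, admissions)  # patient 3 is discarded
for rec in merged.values():
    rec["RCV"] = discretize_manual(rec["risk"])
alive = filter_patients(merged, lambda r: r["Decesso"] == "no", ["RCV"])
```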

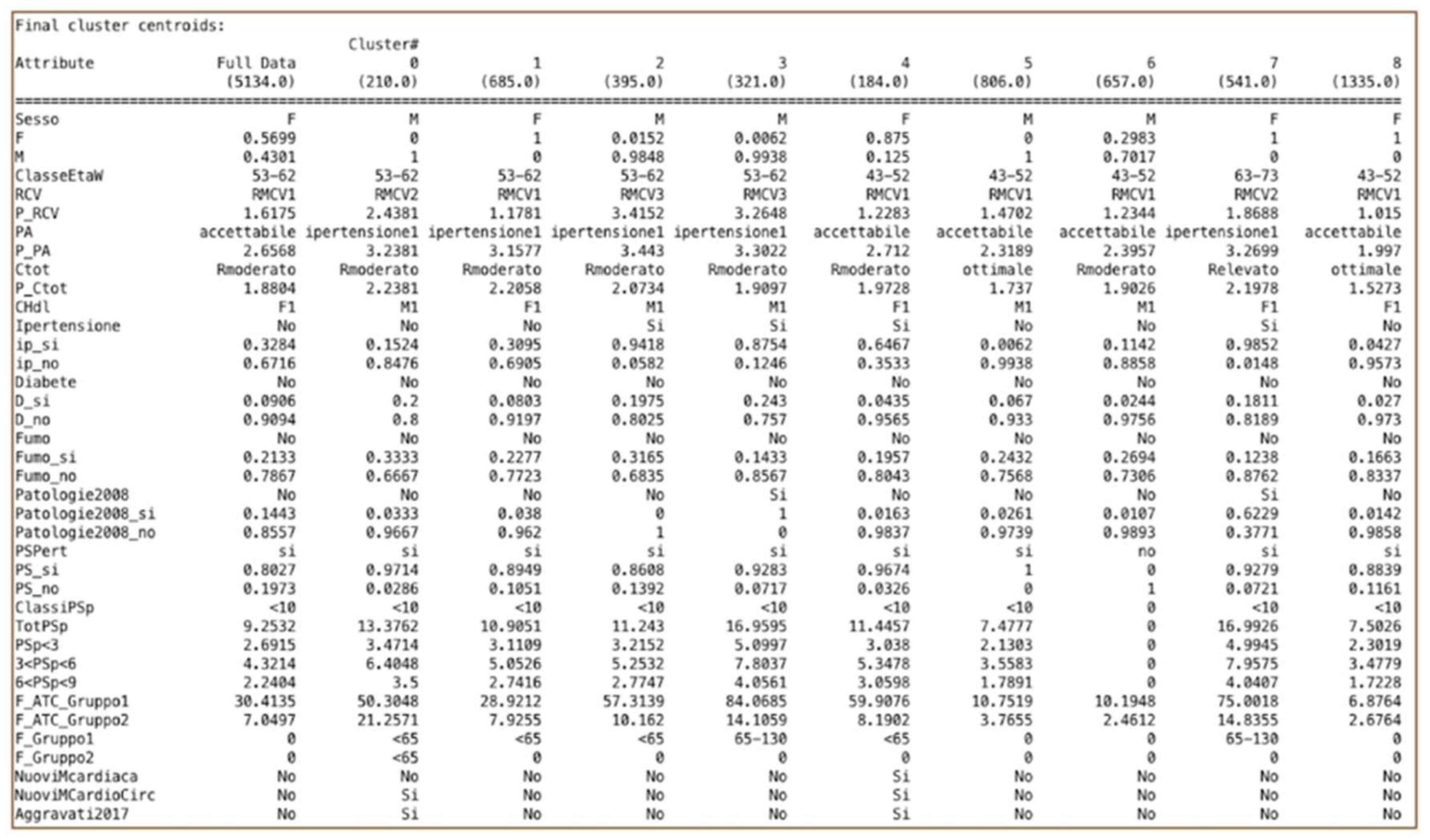

5.2. Clustering Results

5.3. Association Rules Results

- Diabete2008=No AltrePP2008=No F_Gruppo2=0 ClassiRp=0 3517 ==> Aggravati2017=No 3260 conf:(0.93)

- AltrePP2008=No F_Gruppo2=0 ClassiRp=0 3700 ==> Aggravati2017=No 3429 conf:(0.93)

- Diabete2008=No F_Gruppo2=0 ClassiRp=0 3526 ==> Aggravati2017=No 3267 conf:(0.93)

- F_Gruppo2=0 ClassiRp=0 3710 ==> Aggravati2017=No 3437 conf:(0.93)

- Diabete2008=No AltrePP2008=No F_Gruppo2=0 ClassiRp=0 Decesso=no 3420 ==> Aggravati2017=No 3167 conf:(0.93)

- Diabete2008=No F_Gruppo2=0 ClassiRp=0 Decesso=no 3428 ==> Aggravati2017=No 3173 conf:(0.93)

- AltrePP2008=No F_Gruppo2=0 ClassiRp=0 Decesso=no 3583 ==> Aggravati2017=No 3316 conf:(0.93)

- F_Gruppo2=0 ClassiRp=0 Decesso=no 3591 ==> Aggravati2017=No 3322 conf:(0.93)

- Diabete2008=No AltrePP2008=No F_Gruppo2=0 Decesso=no 3606 ==> Aggravati2017=No 3305 conf:(0.92)

- Diabete2008=No AltrePP2008=No F_Gruppo2=0 3723 ==> Aggravati2017=No 3412 conf:(0.92)

- AltrePP2008=No 581 ==> Aggravati2017=Si 581 conf:(1)

- ClassiRp=0 440 ==> Aggravati2017=Si 440 conf:(1)

- Fumo2008=No 439 ==> Aggravati2017=Si 439 conf:(1)

- AltrePP2008=No ClassiRp=0 436 ==> Aggravati2017=Si 436 conf:(1)

- Fumo2008=No AltrePP2008=No 435 ==> Aggravati2017=Si 435 conf:(1)

- Sesso=M 332 ==> Aggravati2017=Si 332 conf:(1)

- F_Gruppo2=0 332 ==> Aggravati2017=Si 332 conf:(1)

- Fumo2008=No ClassiRp=0 332 ==> Aggravati2017=Si 332 conf:(1)

- Fumo2008=No AltrePP2008=No ClassiRp=0 330 ==> Aggravati2017=Si 330 conf:(1)

- Sesso=M AltrePP2008=No 329 ==> Aggravati2017=Si 329 conf:(1)

- Ipertensione=Si 32 ==> Aggravati2017=Si 32 conf:(1)

- Ipertensione=Si Patologie2008=No 32 ==> Aggravati2017=Si 32 conf:(1)

- Ipertensione=Si F_Gruppo2=<65 31 ==> Aggravati2017=Si 31 conf:(1)

- Ipertensione=Si Patologie2008=No F_Gruppo2=<65 31 ==> Aggravati2017=Si 31 conf:(1)

- RCV=RMCV1 Diabete=No 29 ==> Aggravati2017=Si 29 conf:(1)

- RCV=RMCV1 Diabete=No PSPert=si 29 ==> Aggravati2017=Si 29 conf:(1)

- Ipertensione=Si PSPert=si 28 ==> Aggravati2017=Si 28 conf:(1)

- RCV=RMCV1 CHdl=M1 Diabete=No 28 ==> Aggravati2017=Si 28 conf:(1)

- RCV=RMCV1 Ipertensione=No Diabete=No 28 ==> Aggravati2017=Si 28 conf:(1)

- RCV=RMCV1 Diabete=No Patologie2008=No 28 ==> Aggravati2017=Si 28 conf:(1)

- F_Gruppo2=0 130 ==> Aggravati2017=Si 124 conf:(0.95)

- PSPert=si F_Gruppo2=0 124 ==> Aggravati2017=Si 118 conf:(0.95)

- RCV=RMCV1 F_Gruppo2=0 110 ==> Aggravati2017=Si 104 conf:(0.95)

- RicovPert=no F_Gruppo2=0 110 ==> Aggravati2017=Si 104 conf:(0.95)

- PSPert=si 178 ==> Aggravati2017=Si 168 conf:(0.94)

- Fumo=No 148 ==> Aggravati2017=Si 139 conf:(0.94)

- Fumo=No PSPert=si 142 ==> Aggravati2017=Si 133 conf:(0.94)

- RicovPert=no 155 ==> Aggravati2017=Si 145 conf:(0.94)

- RCV=RMCV1 151 ==> Aggravati2017=Si 141 conf:(0.93)

- CHdl=F1 120 ==> Aggravati2017=Si 112 conf:(0.93)
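Each rule above lists the number of records matching the antecedent, the number also matching the consequent, and the confidence, i.e., conf(X ==> Y) = count(X and Y) / count(X). For example, 3437 / 3710 ≈ 0.926, reported as 0.93. The sketch below parses rules in the exact text format shown here (which appears to match Weka's Apriori output without lift or leverage columns) and recomputes the confidence; the regular expression and function names are hypothetical.

```python
import re

# <antecedent items> <count> ==> <consequent items> <count> conf:(<value>)
RULE = re.compile(
    r"^(?P<lhs>.+?)\s+(?P<n_lhs>\d+)\s+==>\s+"
    r"(?P<rhs>.+?)\s+(?P<n_rule>\d+)\s+conf:\((?P<conf>[\d.]+)\)$"
)


def parse_rule(line):
    """Split a rule line into antecedent items, consequent items,
    antecedent count, rule count, and reported confidence."""
    m = RULE.match(line.strip())
    if m is None:
        raise ValueError(f"not a rule line: {line!r}")
    return (
        m.group("lhs").split(),
        m.group("rhs").split(),
        int(m.group("n_lhs")),
        int(m.group("n_rule")),
        float(m.group("conf")),
    )


def confidence(n_lhs, n_rule):
    """conf(X ==> Y) = count(X and Y) / count(X)."""
    return n_rule / n_lhs


rules = [
    "F_Gruppo2=0 ClassiRp=0 3710 ==> Aggravati2017=No 3437 conf:(0.93)",
    "Diabete2008=No AltrePP2008=No F_Gruppo2=0 3723 ==> Aggravati2017=No 3412 conf:(0.92)",
    "PSPert=si 178 ==> Aggravati2017=Si 168 conf:(0.94)",
]
for line in rules:
    lhs, rhs, n_lhs, n_rule, reported = parse_rule(line)
    # The listing reports confidence to two decimals, so compare after rounding
    print(lhs, "=>", rhs, round(confidence(n_lhs, n_rule), 2), reported)
```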

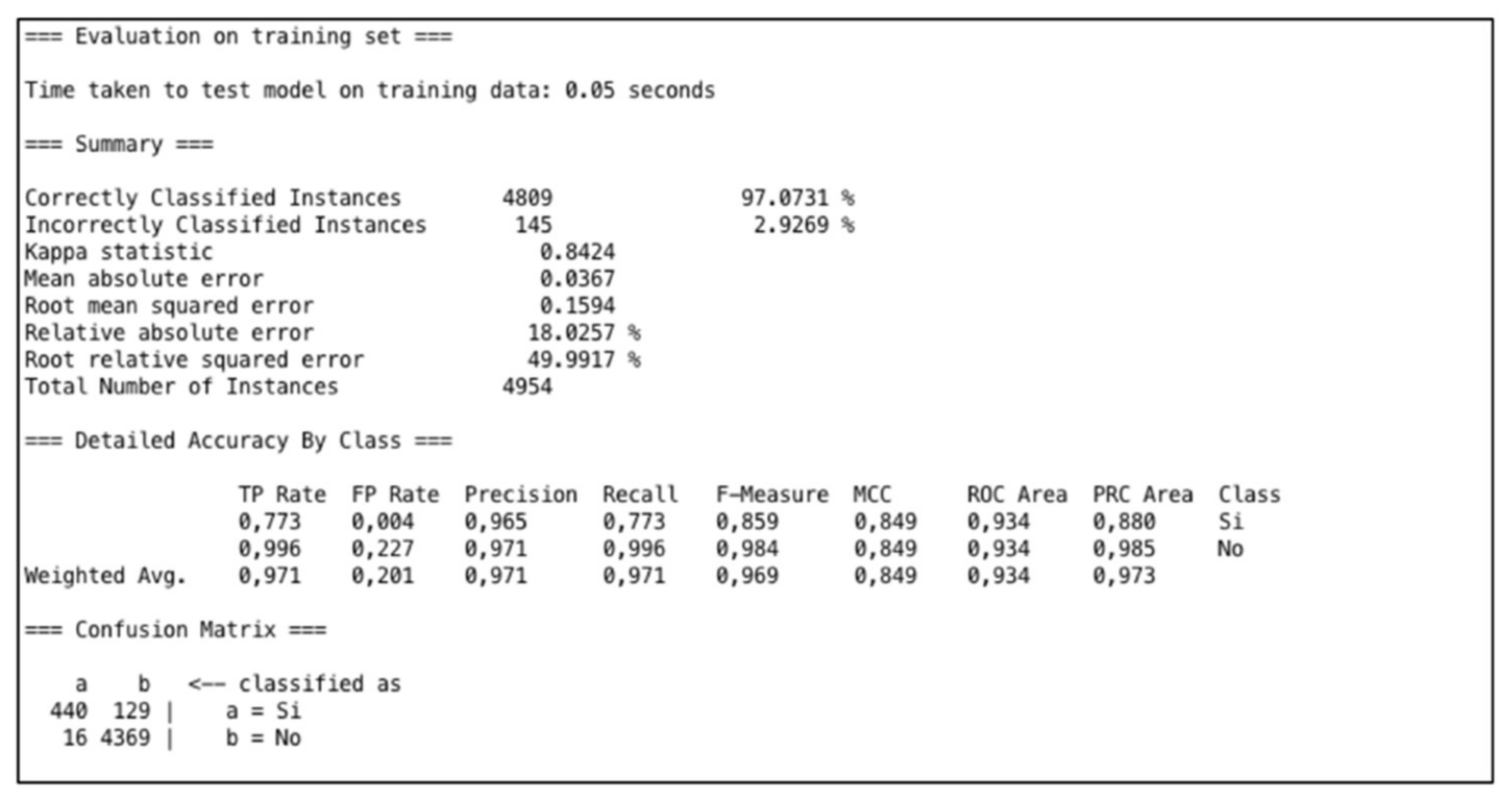

5.4. Artificial Neural Network Results

6. Discussion

7. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| CVD Risk Class | Risk Score | No. of Patients |
|---|---|---|
| CVD1 | <5% | 3414 |
| CVD2 | 5–10% | 931 |
| CVD3 | 10–15% | 391 |
| CVD4 | 15–20% | 195 |
| CVD5 | 20–30% | 143 |
| CVD6 | >30% | 60 |

| Disease | Yes | No |
|---|---|---|
| diabetes | 465 | 4669 |
| hypertension | 1686 | 3448 |
| cardiac disease | 682 | 4452 |
| cardio-circulatory disease | 104 | 5030 |
| other CV disease | 26 | 5108 |

| Disease | New Diagnoses | Total |
|---|---|---|
| diabetes | 135 | 600 |
| hypertension | 54 | 1740 |
| cardiac disease | 338 | 1020 |
| cardio-circulatory disease | 413 | 517 |
| other CV disease | 36 | 62 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Pasanisi, S.; Paiano, R. A Hybrid Information Mining Approach for Knowledge Discovery in Cardiovascular Disease (CVD). Information 2018, 9, 90. https://doi.org/10.3390/info9040090
