Hierarchical Guidance Strategy and Exemplar-Based Image Inpainting

Abstract

1. Introduction

2. Related Work

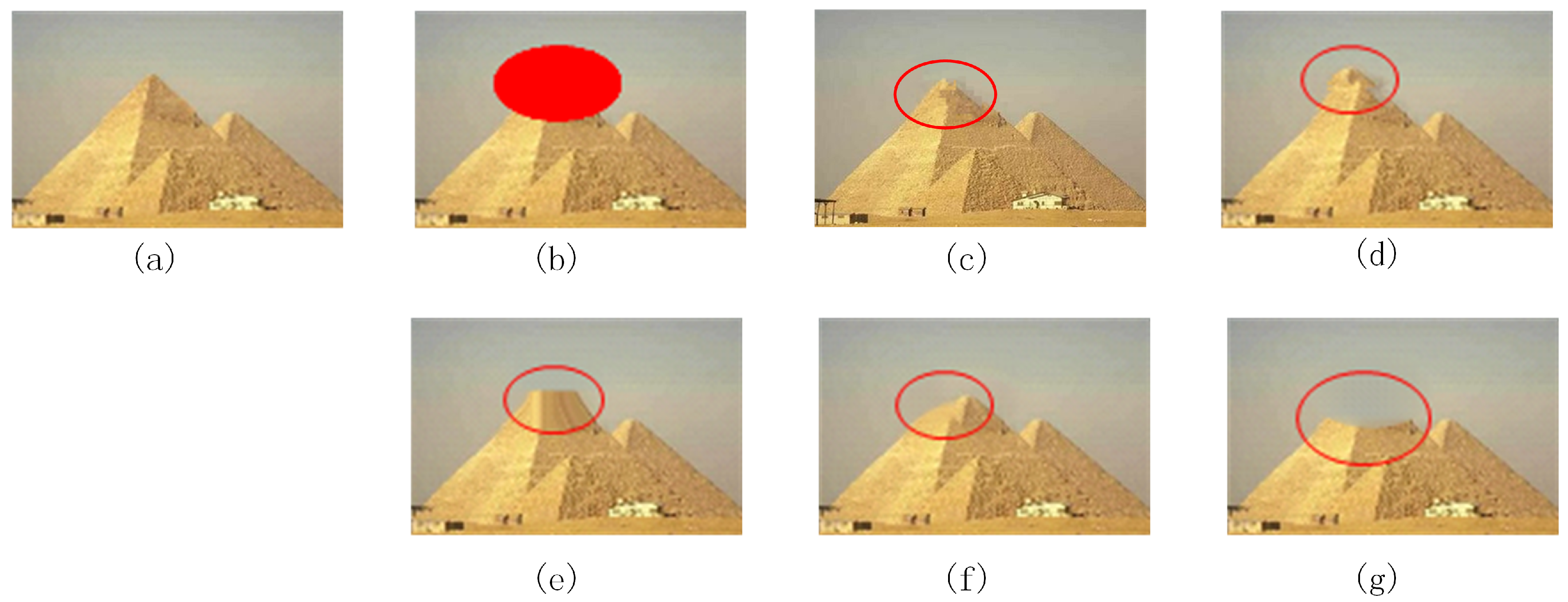

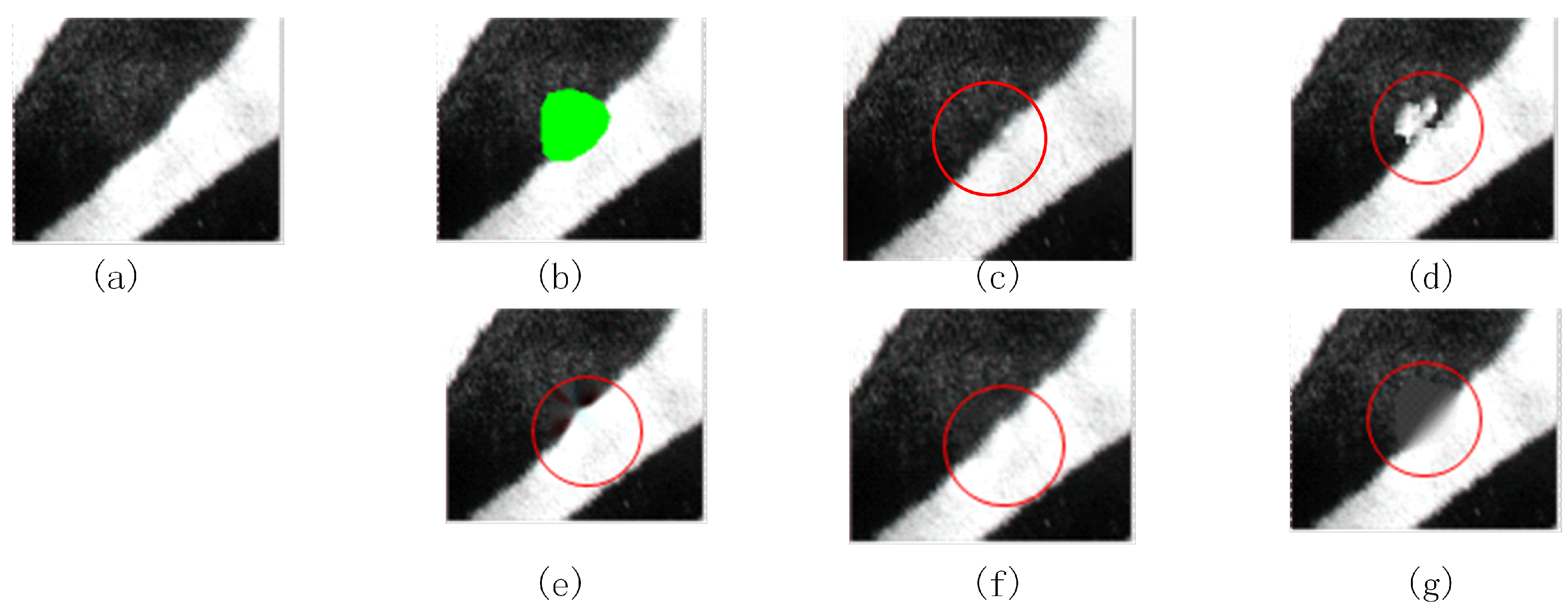

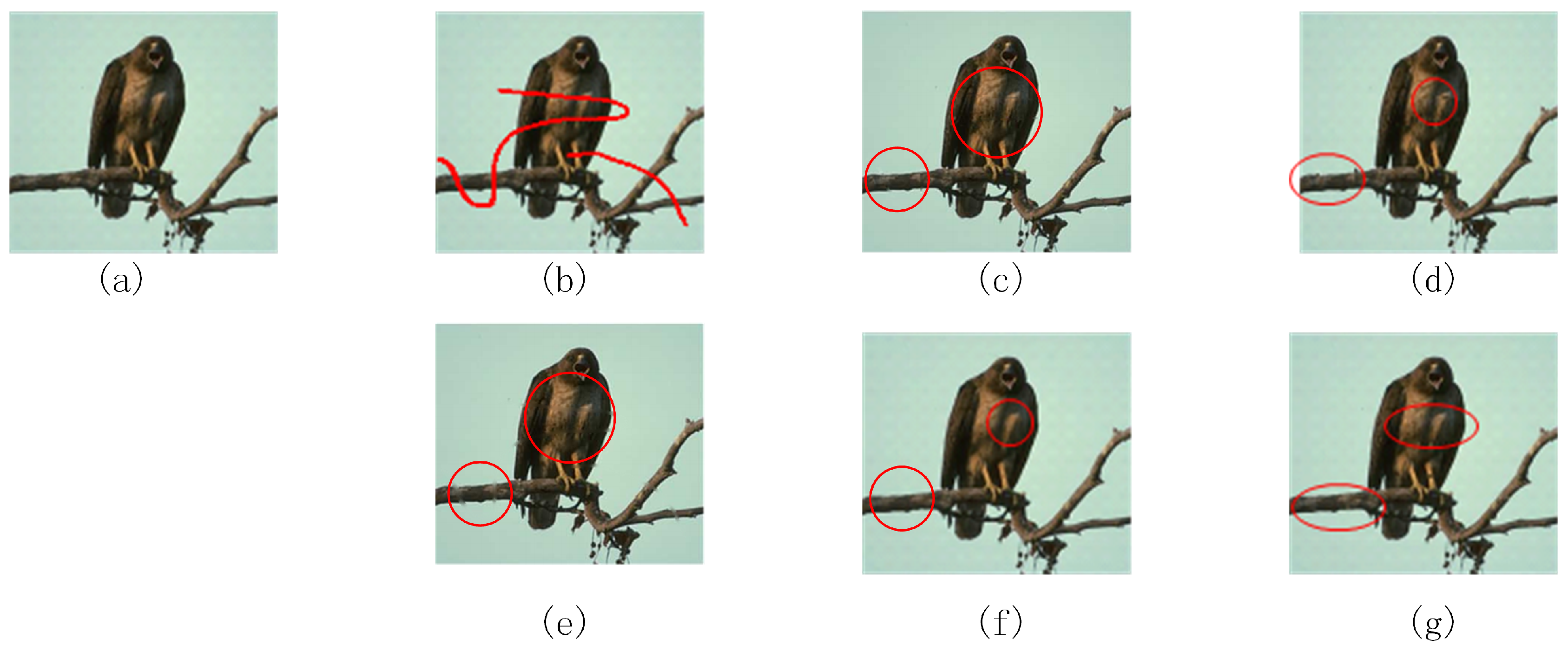

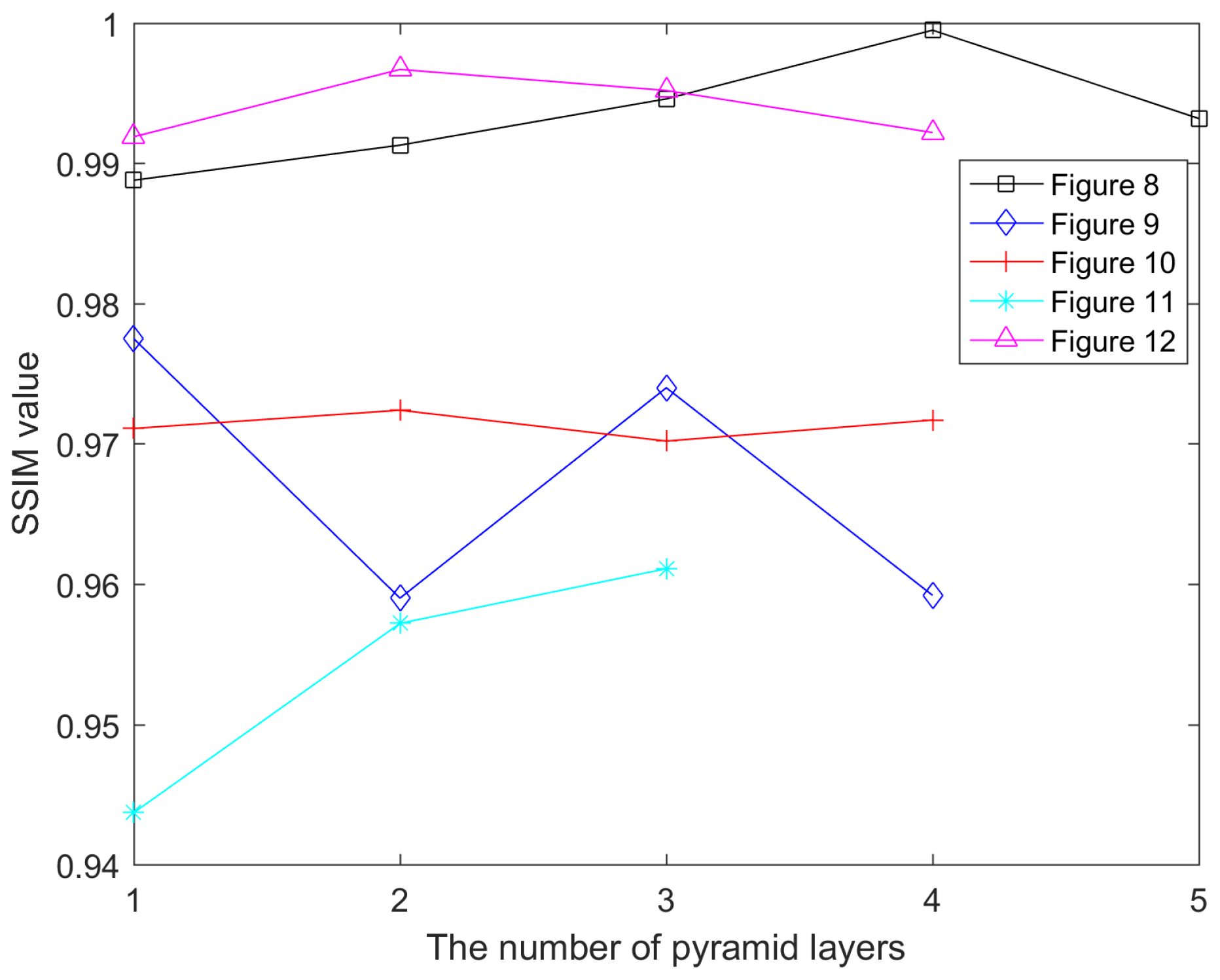

2.1. Exemplar-Based Technique

2.2. Pyramid Decomposition

3. Hierarchical Guidance Strategy Inpainting

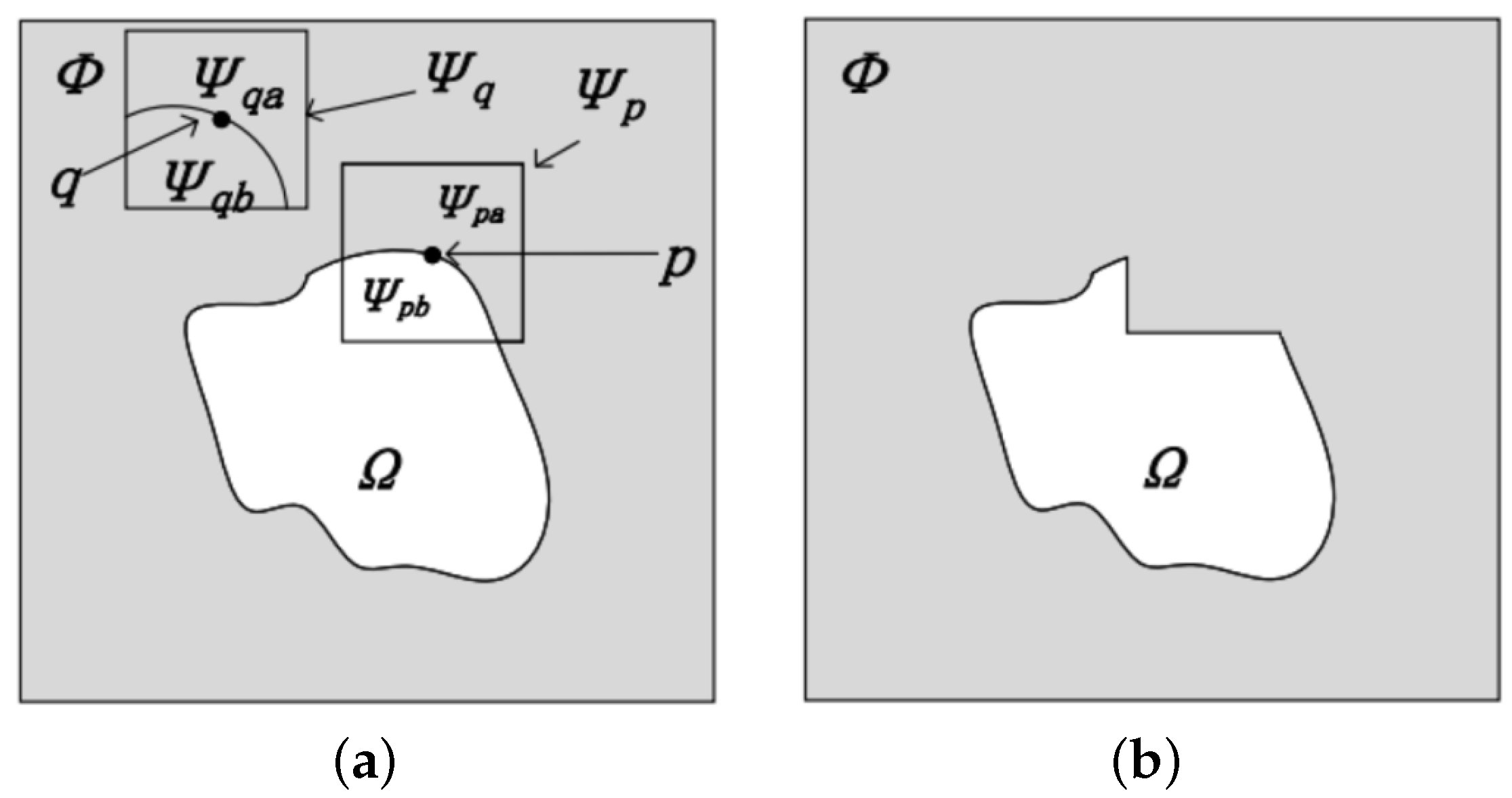

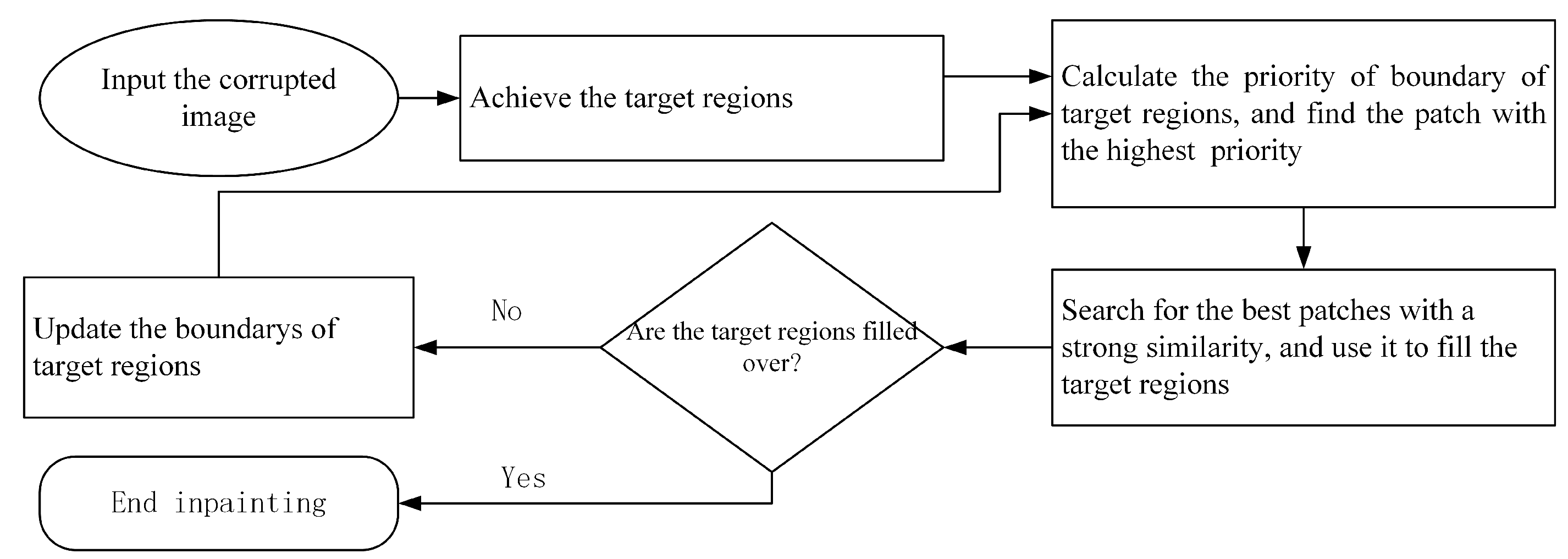

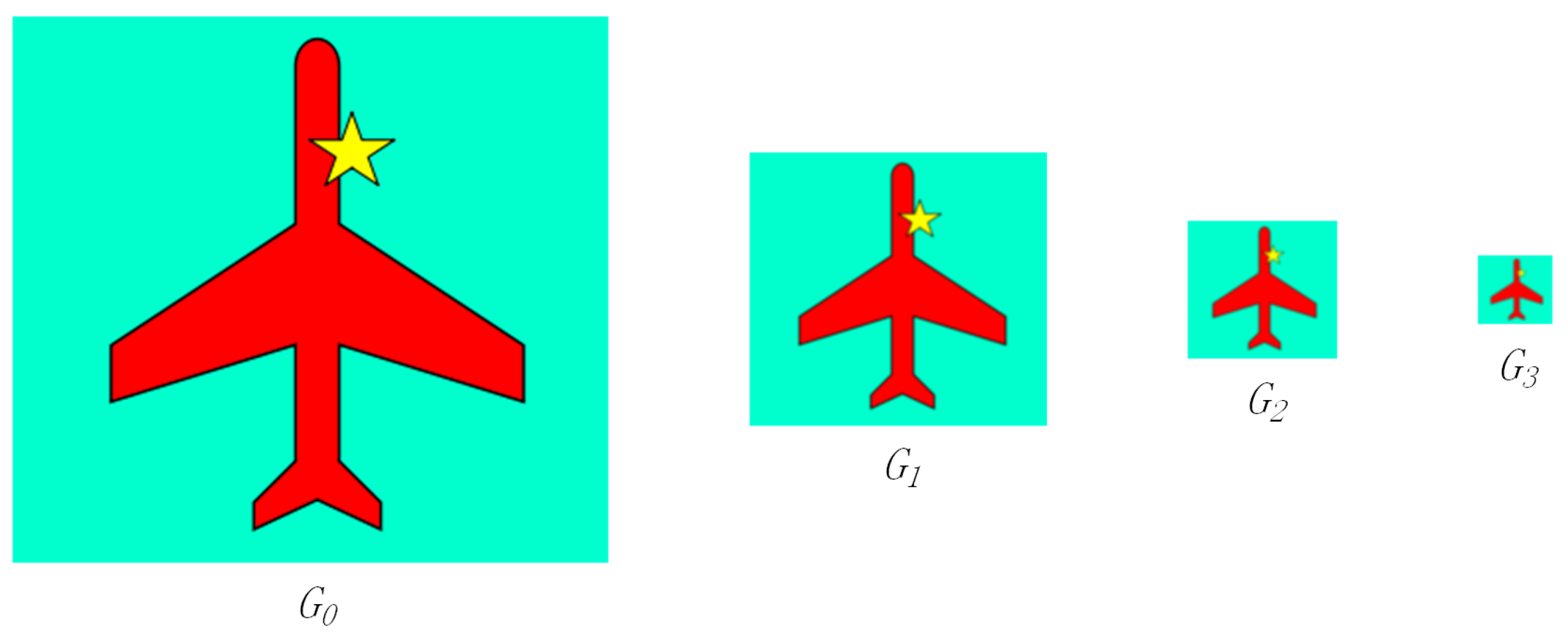

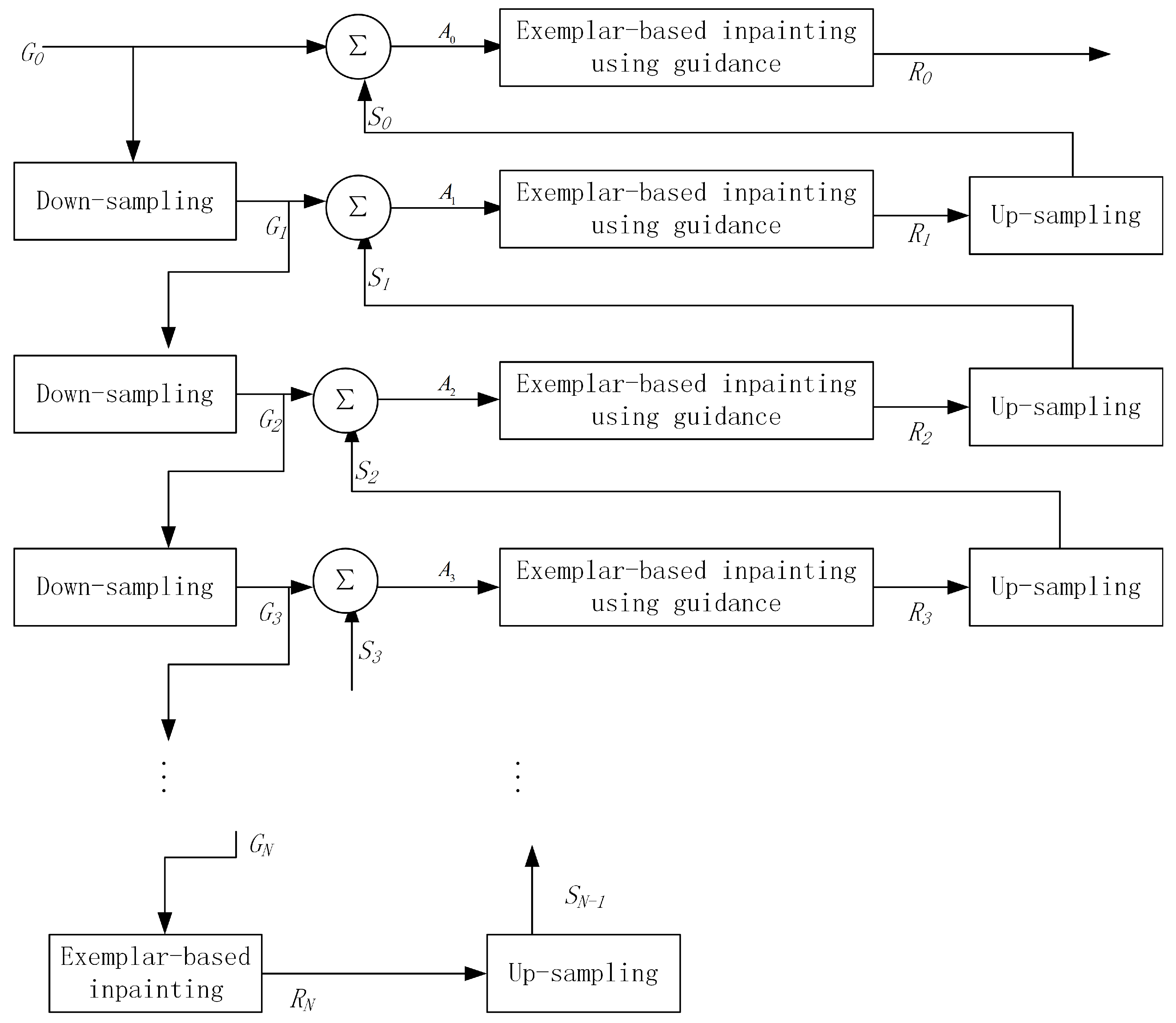

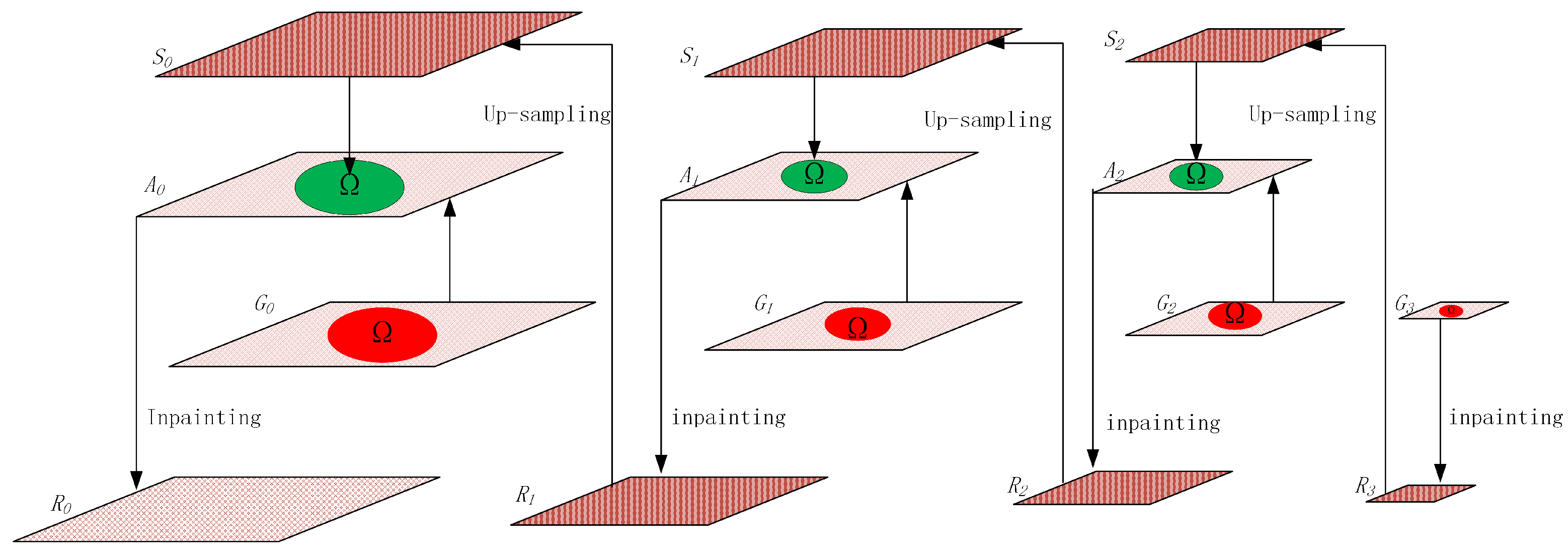

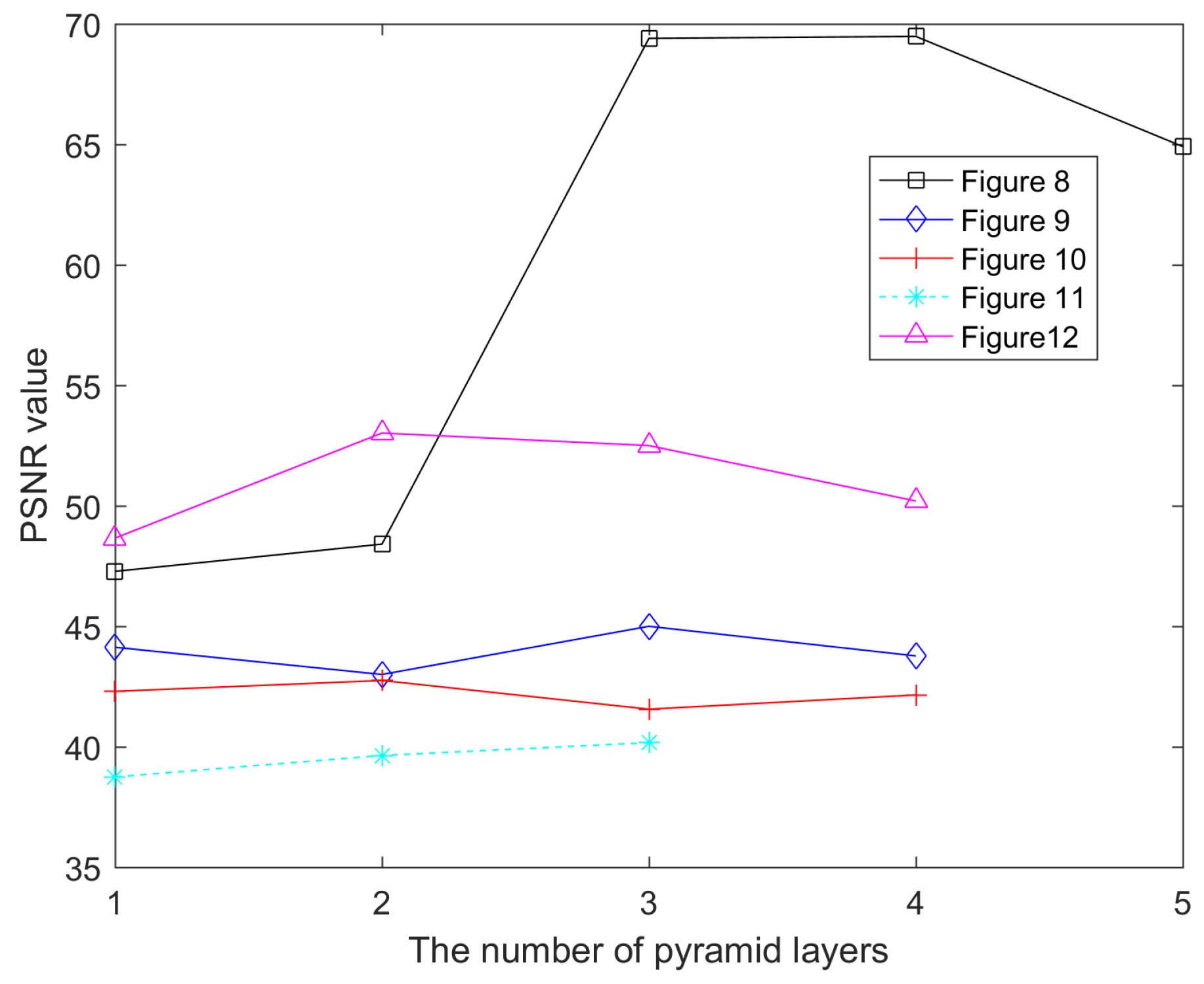

3.1. The Process of the Hierarchical Guidance Strategy

3.2. Middle Layer Inpainting of Hierarchical Guidance

| Algorithm 1 | Hierarchical guidance strategy and exemplar-based image inpainting |
| --- | --- |
| Input: | The corrupted image I; the mask identifying the target regions |
| Output: | The restored image |
| 1: | Build the image pyramid with N layers |
| 2: | flag ← True |
| 3: | for each layer of the pyramid, starting from the top layer, do |
| 4: | if flag is True then |
| 5: | Inpaint the top layer of the pyramid with the exemplar-based method to acquire its restored image |
| 6: | flag ← False |
| 7: | end if |
| 8: | Obtain the up-sampled image by up-sampling the restored image |
| 9: | Merge the up-sampled image and the corresponding pyramid layer into one image |
| 10: | Generate the restored image of this layer by restoring the merged image with the exemplar-based technique and hierarchical guidance |
| 11: | end for |
| 12: | Obtain the final inpainting result |
| 13: | Output the Poisson blending result of the inpainted image |
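The control flow of Algorithm 1 can be illustrated with a minimal Python sketch. It is not the authors' implementation: the function names (`downsample`, `upsample`, `merge`, `mean_fill`, `keep_merged`, `hierarchical_inpaint`), the loop indices, the 2×2 averaging pyramid, and the nearest-neighbour up-sampling are all assumptions made for illustration. The exemplar-based inpainting step is replaced by a trivial mean-fill placeholder, the guided restoration step is a pass-through hook, and the final Poisson blending step is omitted. Image dimensions are assumed to be divisible by 2^(N-1).

```python
from typing import Callable, List, Tuple

Image = List[List[float]]  # grayscale image as rows of floats
Mask = List[List[bool]]    # True marks a missing (target) pixel


def downsample(img: Image, mask: Mask) -> Tuple[Image, Mask]:
    """Halve the resolution by averaging the known pixels of each 2x2 block."""
    h, w = len(img) // 2, len(img[0]) // 2
    out_img = [[0.0] * w for _ in range(h)]
    out_mask = [[False] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            known = [img[2 * y + dy][2 * x + dx]
                     for dy in (0, 1) for dx in (0, 1)
                     if not mask[2 * y + dy][2 * x + dx]]
            if known:
                out_img[y][x] = sum(known) / len(known)
            else:
                out_mask[y][x] = True  # the whole block is missing
    return out_img, out_mask


def upsample(img: Image, h: int, w: int) -> Image:
    """Nearest-neighbour up-sampling to an h x w grid."""
    ch, cw = len(img), len(img[0])
    return [[img[min(y // 2, ch - 1)][min(x // 2, cw - 1)] for x in range(w)]
            for y in range(h)]


def merge(layer: Image, mask: Mask, guide: Image) -> Image:
    """Keep the known pixels of the layer; take missing ones from the guide."""
    return [[g if m else v for v, m, g in zip(row, mrow, grow)]
            for row, mrow, grow in zip(layer, mask, guide)]


def mean_fill(img: Image, mask: Mask) -> Image:
    """Placeholder for exemplar-based inpainting: fill with the known mean."""
    known = [v for row, mrow in zip(img, mask)
             for v, m in zip(row, mrow) if not m]
    mean = sum(known) / len(known) if known else 0.0
    return [[mean if m else v for v, m in zip(row, mrow)]
            for row, mrow in zip(img, mask)]


def keep_merged(merged: Image, mask: Mask) -> Image:
    """Placeholder for guided restoration: return the merged image as is."""
    return merged


def hierarchical_inpaint(
    image: Image,
    mask: Mask,
    n_layers: int,
    inpaint: Callable[[Image, Mask], Image] = mean_fill,
    refine: Callable[[Image, Mask], Image] = keep_merged,
) -> Image:
    # Build the pyramid; index 0 is the original resolution.
    pyramid = [(image, mask)]
    for _ in range(n_layers - 1):
        pyramid.append(downsample(*pyramid[-1]))

    if n_layers == 1:
        return inpaint(image, mask)

    flag = True
    restored: Image = []
    # Walk from the top (coarsest) layer down to the original resolution.
    for i in range(n_layers - 1, 0, -1):
        if flag:
            restored = inpaint(*pyramid[i])  # top layer, no guidance yet
            flag = False
        layer, layer_mask = pyramid[i - 1]
        guide = upsample(restored, len(layer), len(layer[0]))
        merged = merge(layer, layer_mask, guide)
        restored = refine(merged, layer_mask)
    return restored
```

Passing a real exemplar-based routine as `inpaint` and a guidance-aware one as `refine` would bring the sketch closer to the method the algorithm describes; the defaults only demonstrate how coarse results propagate to finer layers.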

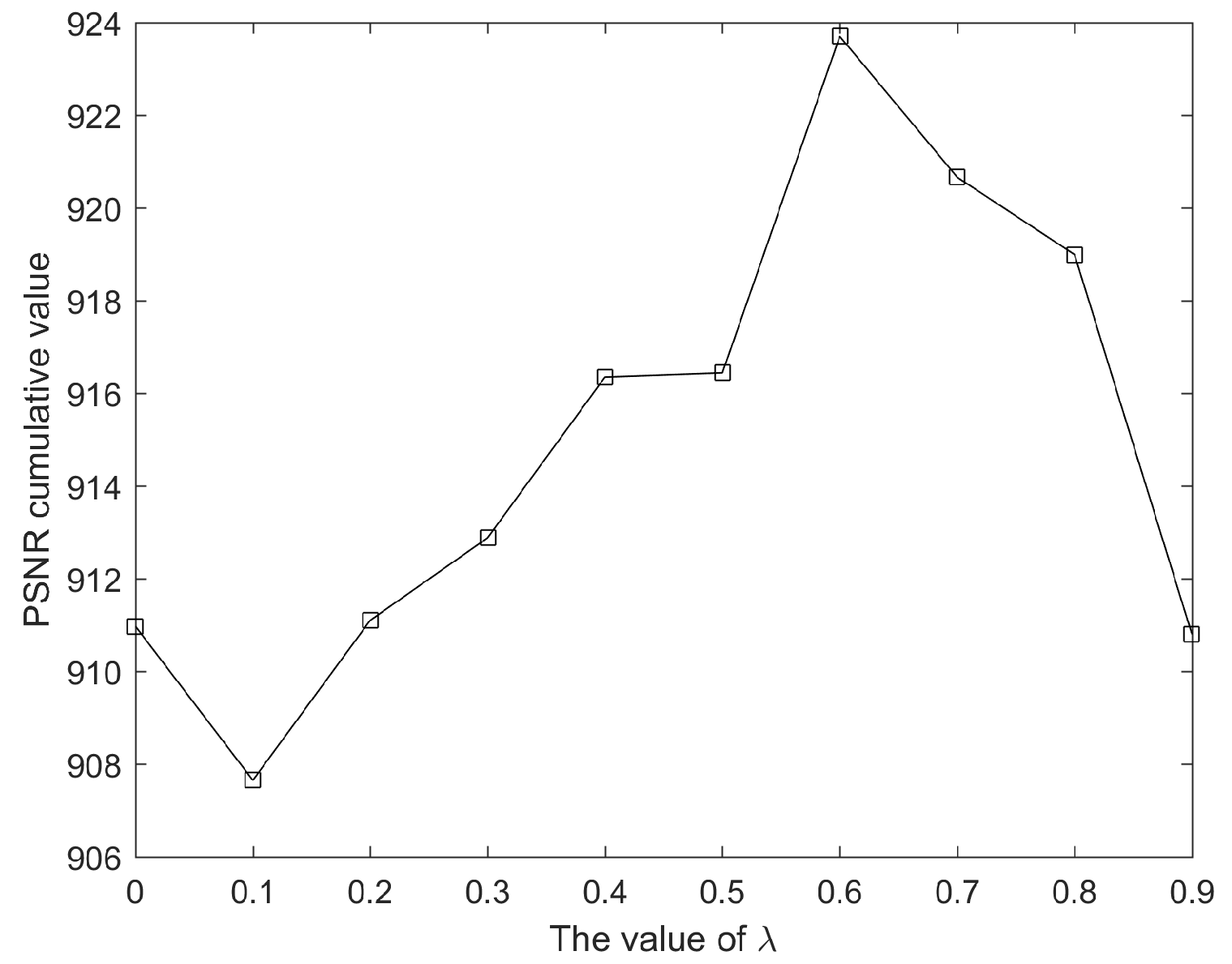

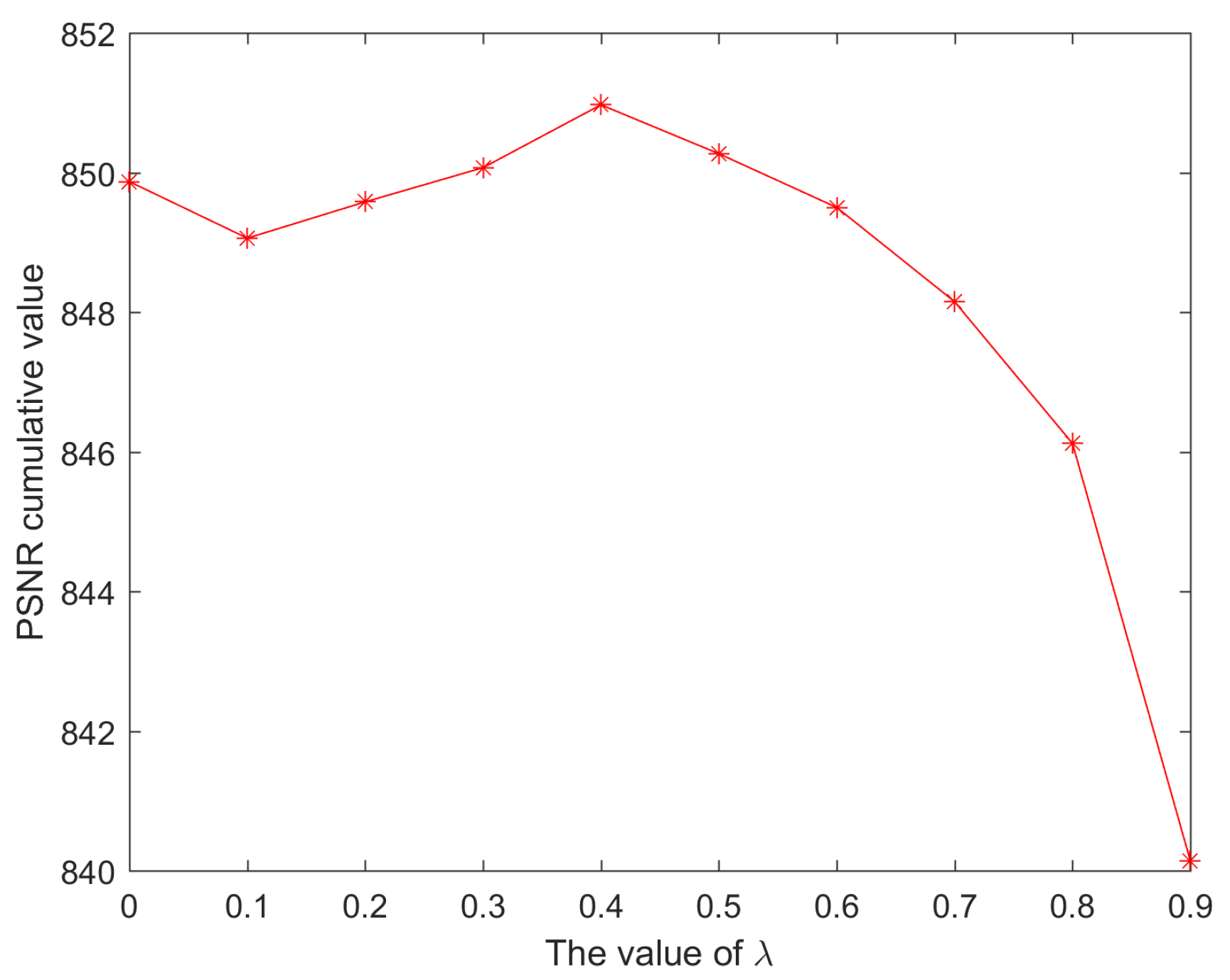

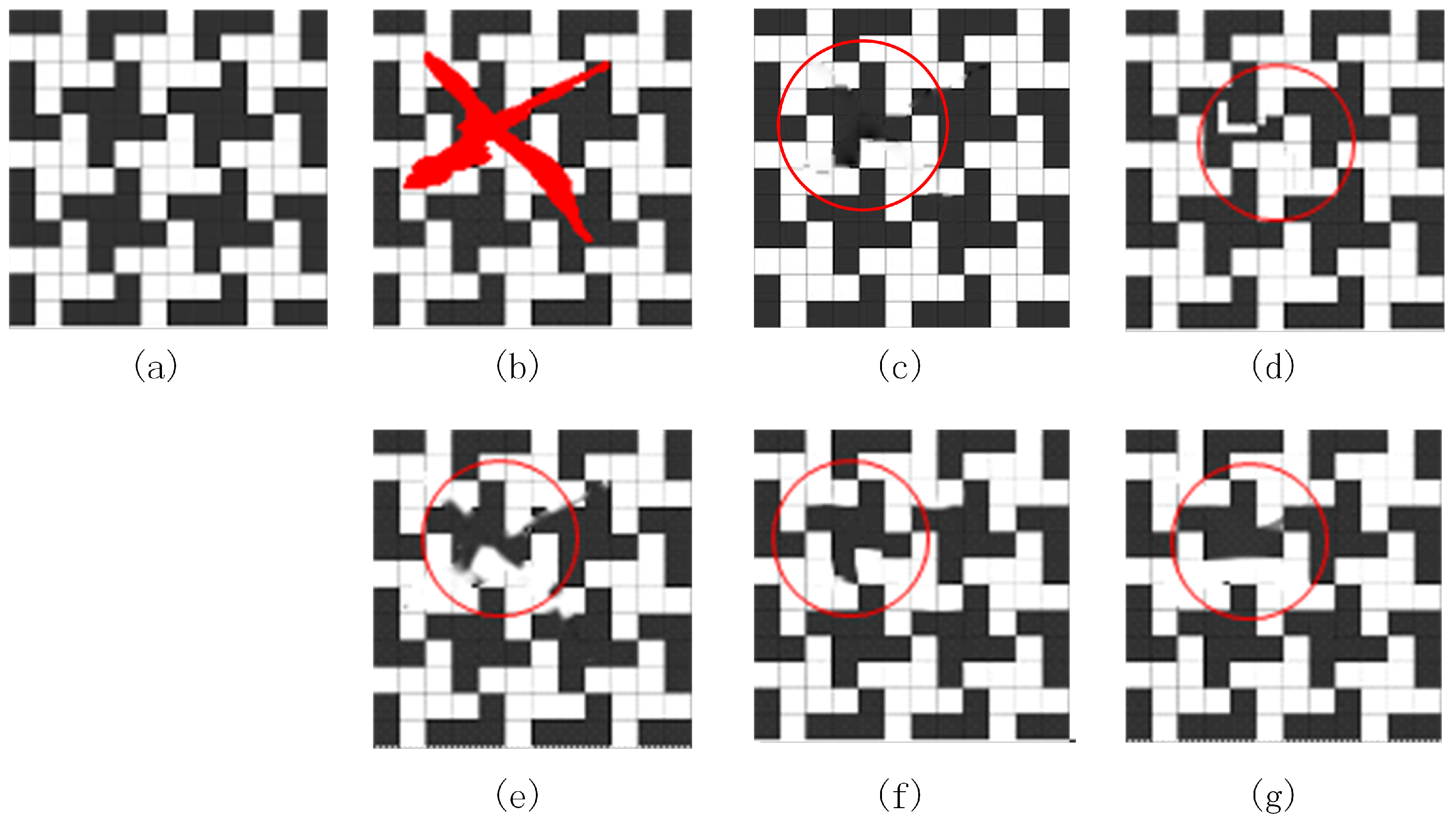

4. Experimental Analysis

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Liu, H.; Lu, G.; Bi, X.; Wang, W. Hierarchical Guidance Strategy and Exemplar-Based Image Inpainting. Information 2018, 9, 96. https://doi.org/10.3390/info9040096
