The Singularity Isn’t Simple! (However We Look at It) A Random Walk between Science Fiction and Science Fact

Abstract

1. Introduction: Problems with ‘Futurology’

1.1. Futurology ‘Success’

- Positives: predictions that have (to a greater or lesser extent) come to pass within any suggested time frame [the futurologist predicted it and it happened];

- False positives: predictions that have failed to transpire or have only done so in limited form or well beyond a suggested time frame [the futurologist predicted it but it didn’t happen];

- Negatives: events or developments either completely unforeseen within the time frame or implied considerably out of context [the futurologist didn’t see it coming].

- Positives: (as of 2018) voice interface computers, tricorders (now in the form of mobile phones), Bluetooth headsets, in-vision/hands-free displays, portable memory units (now disks, USB sticks, etc.), GPS, tractor beams and cloaking devices (in limited form), tablet computers, automatic doors, large-screen/touch displays, universal translators, teleconferencing, transhumanist bodily enhancement (bionic eyes for the blind, etc.), biometric health and identity data, diagnostic hospital beds [4];

- False positives: (to date) replicators (expected to move slowly to positive in future?), warp drives and matter-antimatter power, transporters, holodecks (slowly moving to positive?), the moneyless society, human colonization of other planets [5];

- Justification: pseudo-scientific-technological in part but with social, ethical, political, environmental and demographic elements?
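The three categories defined above can be sketched as a small classification routine. This is only an illustrative sketch: the names `Outcome`, `Development` and `classify` are hypothetical, and it assumes that each development can be reduced to two yes/no judgements (was it predicted, and did it happen within the time frame), which the categories themselves only loosely support.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Outcome(Enum):
    POSITIVE = "positive"              # predicted and it happened
    FALSE_POSITIVE = "false positive"  # predicted but it did not happen
    NEGATIVE = "negative"              # happened but was not predicted


@dataclass(frozen=True)
class Development:
    name: str
    predicted: bool  # foreseen by the futurologist? (assumed to be a yes/no call)
    realised: bool   # substantially come to pass within the time frame?


def classify(item: Development) -> Optional[Outcome]:
    """Map a development onto the three categories; None if there is nothing to score."""
    if item.predicted:
        return Outcome.POSITIVE if item.realised else Outcome.FALSE_POSITIVE
    if item.realised:
        return Outcome.NEGATIVE
    return None  # neither predicted nor realised


examples = [
    Development("tablet computers", predicted=True, realised=True),
    Development("warp drives", predicted=True, realised=False),
    Development("(hypothetical) unforeseen development", predicted=False, realised=True),
]
labels = [classify(e) for e in examples]
```

The two Boolean flags deliberately ignore the partial cases noted in the definitions (‘to a greater or lesser extent’, ‘in limited form’), which is one reason any such scoring can only be approximate.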

1.2. Futurology ‘Failure’

- Advances in various technologies do not happen independently or in isolation: Prophesying the progress made by artificial intelligence in ten years’ time may be challenging; similarly, the various states of the art in robotics, personal communications, the Internet of Things and big data analytics are difficult to predict with much confidence. However, combine all these and the problem becomes far worse. Envisioning an ‘always-on’, fully-connected, fully-automated world driven by integrated data and machine intelligence superior to ours is next to impossible;

- A dominant technological development can have a particularly unforeseen influence on many others: Moorcock’s technological paradise may look fairly silly with no Internet but the ‘big thing that we’re not yet seeing’ is an ever-present hazard to futurologists. As we approach (the possibility of) the singularity, what else (currently concealed) may arise to undermine the best-intentioned of forecasts?

- Technological development has wider influences and repercussions than merely the technology: Technology does not emerge or exist in a vacuum: its development is often driven by social or economic need and it then has to take its place in the human world. We produce it but it then changes us. Wider questions of ethics, morality, politics and law may be restricting or accelerating influences but they most certainly cannot be dismissed as irrelevant.

2. Problems with Axioms, Definitions and Models

“The technological singularity (also, simply, the singularity) [23] is the hypothesis that the invention of artificial superintelligence will abruptly trigger runaway technological growth, resulting in unfathomable changes to human civilization [24]. According to this hypothesis, an upgradable intelligent agent (such as a computer running software-based artificial general intelligence) would enter a “runaway reaction” of self-improvement cycles, with each new and more intelligent generation appearing more and more rapidly, causing an intelligence explosion and resulting in a powerful superintelligence that would, qualitatively, far surpass all human intelligence. Stanislaw Ulam reports a discussion with John von Neumann “centered on the accelerating progress of technology and changes in the mode of human life, which gives the appearance of approaching some essential singularity in the history of the race beyond which human affairs, as we know them, could not continue” [25]. Subsequent authors have echoed this viewpoint [22,26]. I. J. Good’s “intelligence explosion” model predicts that a future superintelligence will trigger a singularity [27]. Emeritus professor of computer science at San Diego State University and science fiction author Vernor Vinge said in his 1993 essay The Coming Technological Singularity that this would signal the end of the human era, as the new superintelligence would continue to upgrade itself and would advance technologically at an incomprehensible rate [27].

At the 2012 Singularity Summit, Stuart Armstrong did a study of artificial general intelligence (AGI) predictions by experts and found a wide range of predicted dates, with a median value of 2040 [28].

Many notable personalities, including Stephen Hawking and Elon Musk, consider the uncontrolled rise of artificial intelligence as a matter of alarm and concern for humanity’s future [29,30]. The consequences of the singularity and its potential benefit or harm to the human race have been hotly debated by various intellectual circles.”

2.1. What Defines the ‘Singularity’?

- Process-based: (forward-looking) We have a model of what technological developments are required. When each emerges and combines with the others, the TS should happen: ‘The TS will occur when …’; [This can also be considered as a ‘white box’ definition: we have an idea of how the TS might come about];

- Results-based: (backward-looking) We have a certain outcome or expectation of the TS. The TS has arrived when technology passes some test: ‘The TS will have occurred when …’; [Also a ‘black box’ definition: we have a notion of functionality but not (necessarily) operation];

- Heuristic: (‘rule-of-thumb’, related models) Certain developments in technology, some themselves defined better than others, will continue along (or alongside) the road towards the TS and may contribute, directly or indirectly, to it: ‘The TS will occur as …’; [Perhaps a ‘grey box’ definition?].

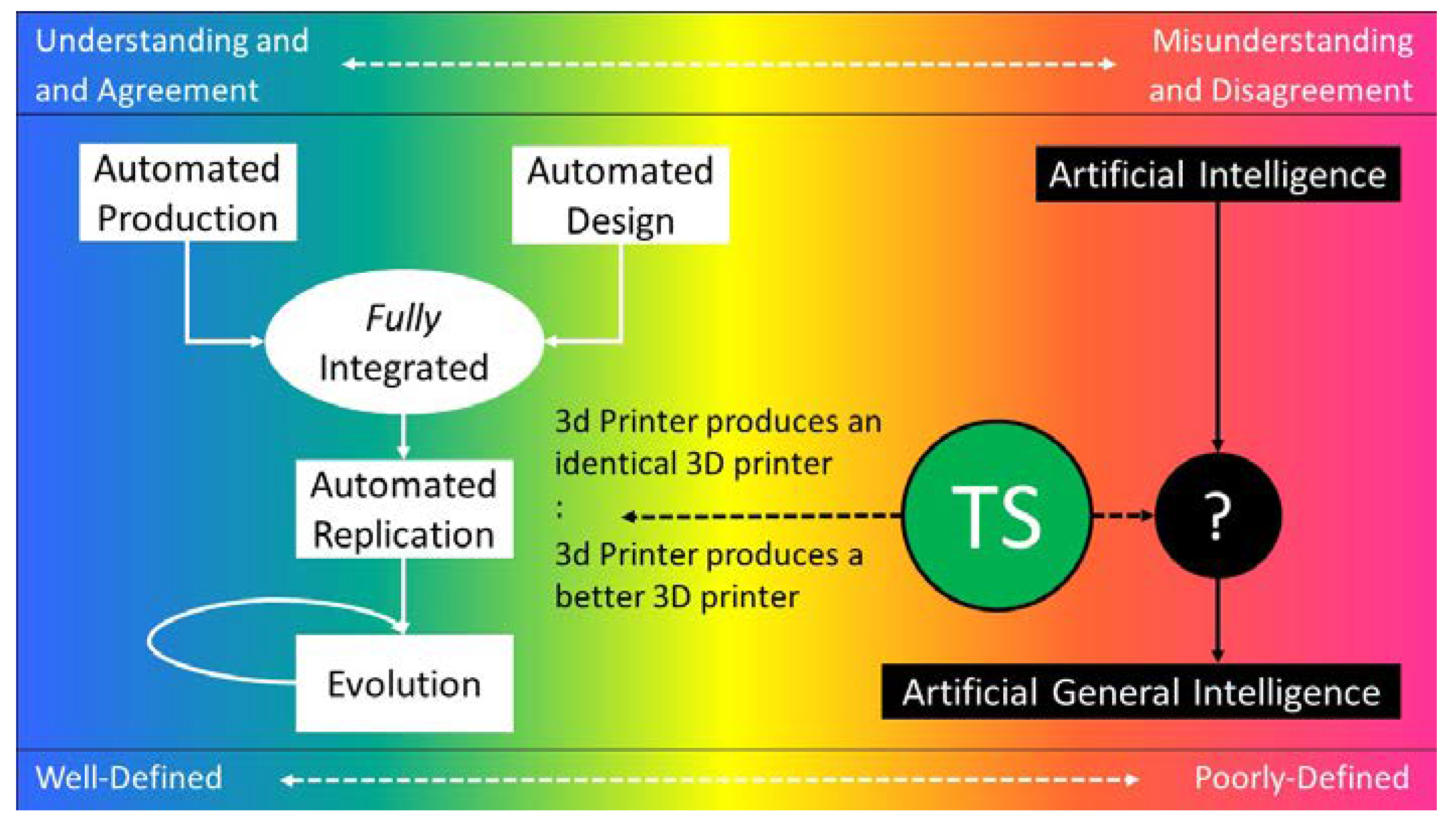

2.2. How Might the ‘Singularity’ Happen?

- 1. [Design] Currently, the use of design software and algorithms is piecemeal in most industries. Although some of the high-intensity computational work is naturally undertaken by automated procedures, there is almost always human intervention and mediation between these sub-processes. It varies considerably from application to application but nowhere yet is design automation close to comprehensive in terms of a complete ‘product’.

- 2. [Production] A somewhat more mundane objection: even an entirely self-managed production line has to be fed with materials (or components, perhaps, if production is multi-stage), and raw materials have to start the process. If this part of the TS framework is to be fully automated and sustainable then either the supply of materials has to be similarly seamless or the production hardware must somehow be able to source its own. Neither option is in sight presently.

- 3. [Replication] Fully integrating automated design with production is decidedly non-trivial. Although there are numerous examples of production lines employing various types of feedback, to correct and improve performance, this is far short of the concept of taking a final product design produced by software and immediately implementing it in hardware. Often, machinery has to be reset to implement each new design or human intervention is necessary in some other form. Whilst 1 and 2 require considerable technological advance, this may ask for something more akin to a human ‘leap of faith’.

- 4. [Evolution] Finally, if 1, 2 and 3 were to be checked off at some point in the future (not theoretically impossible), the transition from static replication to a chain of improvements across subsequent iterations requires a further ingredient: the guiding environment, or purpose, required for evolution across generations. Where would this come from? Suppose it requires some extra program code or an adjustment to the production hardware to produce the necessary improvement at each generation: how would (even could) such a decision be taken and what would be the motivation (of the hardware and software) for taking it? Couched in terms of an optimization problem [38], what would the objective function be and where would the responsibility lie to improve it?
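The optimization framing in the final point can be made concrete with a toy sketch. It assumes, purely for illustration, that a ‘design’ is a single number and that each ‘generation’ may only compare the current design with two nearby variants; the names `improve` and `target_fit`, and the target value 3.0, are hypothetical. The point it illustrates is that the improvement loop is trivial to write, whereas the objective function has to be supplied from outside it.

```python
from typing import Callable


def improve(design: float,
            objective: Callable[[float], float],
            step: float = 0.5,
            generations: int = 20) -> float:
    """Simple hill climbing: keep whichever nearby design scores best."""
    for _ in range(generations):
        # The current design is listed first, so ties leave it unchanged.
        candidates = [design, design - step, design + step]
        best = max(candidates, key=objective)
        if best == design:
            step /= 2  # no better neighbour found: search more finely
        design = best
    return design


def target_fit(x: float) -> float:
    """An externally imposed purpose: designs closer to 3.0 score higher."""
    return -(x - 3.0) ** 2


with_purpose = improve(0.0, target_fit)          # climbs towards 3.0
without_purpose = improve(0.0, lambda x: 0.0)    # every design scores the same
```

With `target_fit` supplied, successive generations move steadily towards the target; with a flat objective, no candidate is ever preferred and the design never changes. Nothing inside `improve` says what ‘better’ means, which is exactly the ingredient the question above asks about.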

3. Further Problems with ‘Thinking’, ‘Intelligence’, ‘Consciousness’, General Metaphysics and ‘The Singularity’

3.1. ‘Thinking’ Machines

3.2. ‘Intelligent’ Machines

3.3. ‘Adaptable’ Machines

3.4. ‘Conscious’ Machines

3.5. Models of ‘Consciousness’

- 1. Consciousness is simply the result of neural complexity. Build something with a big enough ‘brain’ and it will acquire consciousness. There is possibly some sort of critical neural mass and/or degree of complexity/connectivity for this to happen.

- 2. Similar to 1 but the ‘brain’ needs energy. It needs power (food, fuel, electricity, etc.) to make it work (Grout’s ‘model’ [44]).

- 3. Similar to 2 but with some symbiosis. A physical substrate is needed to carry signals of a particular type. The relationship between the substrate and signals (hardware and software) takes a particular critical form (maybe the two are indistinguishable) and we have yet to fully grasp this.

- 4. Similar to 3 but there is a biological requirement. Consciousness is the preserve of carbon life forms, perhaps. How and/or why we do not yet understand, and it is conceivable that we may not be able to.

- 6. Extending 5. Consciousness is completely separate from the body and could exist independently. Dependent on more fundamental beliefs, we might call it a ‘soul’.

- 7. Taking 6 to the limit? Consciousness, the soul, comes from God.

- In a simple sense, Models 1–7 could be considered as ranging from the ‘ultra-scientific’ to the ‘ultra-spiritual’.

- Increasingly sophisticated machinery will progressively (in turn) achieve some of these. Models 1 and 2 may be trivial, 3 a distinct possibility, and 4 is difficult to assert. After that, it would appear to get more challenging. In a sense, we might disprove each model by producing a machine that satisfied the requirements at each stage, yet failed to ‘wake up’.

3.6. A Word of Caution … for Everyone

“The Theological Objection: Thinking is a function of man’s immortal soul. God has given an immortal soul to every man and woman, but not to any other animal or to machines. Hence no animal or machine can think.”

“I am unable to accept any part of this, but will attempt to reply in theological terms. I should find the argument more convincing if animals were classed with men, for there is a greater difference, to my mind, between the typical animate and the inanimate than there is between man and the other animals. … It appears to me that the argument quoted above implies a serious restriction of the omnipotence of the Almighty. It is admitted that there are certain things that He cannot do such as making one equal to two, but should we not believe that He has freedom to confer a soul on an elephant if He sees fit? We might expect that He would only exercise this power in conjunction with a mutation which provided the elephant with an appropriately improved brain to minister to the needs of this sort. An argument of exactly similar form may be made for the case of machines. It may seem different because it is more difficult to ‘swallow’. But this really only means that we think it would be less likely that He would consider the circumstances suitable for conferring a soul. … In attempting to construct such machines we should not be irreverently usurping His power of creating souls, any more than we are in the procreation of children: rather we are, in either case, instruments of His will providing mansions for the souls that He creates.”

4. The Wider View: Ethics, Economics, Politics, the Fermi Paradox and Some Conclusions

4.1. Will We Get to See the TS?

4.2. Can We Really Understand the TS?

“Sudden, precipitous change is an option for engineers, but in wild nature the summit of Mount Improbable can be reached only if a gradual ramp upwards from a given starting point can be found. The wheel may be one of those cases where the engineering solution can be seen in plain view, yet be unattainable in evolution because it lies the other side of a deep valley, cutting unbridgeably across the massif of Mount Improbable.”

4.3. Therefore, Can the TS Really Happen?

“I’ve come up with a set of rules that describe our reactions to technologies:

- 1.

- 2.

- 3.

Conflicts of Interest

References

- Can Futurology ‘Success’ Be ‘Measured’? Available online: https://vicgrout.net/2017/10/11/can-futurology-success-be-measured/ (accessed on 12 October 2017).

- Goodman, D.A. Star Trek Federation; Titan Books: London, UK, 2013; ISBN 978-1781169155.

- Siegel, E. Treknology: The Science of Star Trek from Tricorders to Warp Drive; Voyageur Press: Minneapolis, MN, USA, 2017; ISBN 978-0760352632.

- Here Are All the Technologies Star Trek Accurately Predicted. Available online: https://qz.com/766831/star-trek-real-life-technology/ (accessed on 20 September 2017).

- Things Star Trek Predicted Wrong. Available online: https://scifi.stackexchange.com/questions/100545/things-star-trek-predicted-wrong (accessed on 20 September 2017).

- Mattern, F.; Floerkemeier, C. From the Internet of Computers to the Internet of Things. Inf. Spektrum 2010, 33, 107–121.

- How Come Star Trek: The Next Generation did not Predict the Internet? Is It Because It Will Become Obsolete in the 24th Century? Available online: https://www.quora.com/How-come-Star-Trek-The-Next-Generation-did-not-predict-the-internet-Is-it-because-it-will-become-obsolete-in-the-24th-century (accessed on 20 September 2017).

- From Hoverboards to Self-Tying Shoes: Predictions that Back to the Future II Got Right. Available online: http://www.telegraph.co.uk/technology/news/11699199/From-hoverboards-to-self-tying-shoes-6-predictions-that-Back-to-the-Future-II-got-right.html (accessed on 20 September 2017).

- The Top 5 Technologies That ‘Star Wars’ Failed to Predict, According to Engineers. Available online: https://www.inc.com/chris-matyszczyk/the-top-5-technologies-that-star-wars-failed-to-predict-according-to-engineers.html (accessed on 21 September 2017).

- How Red Dwarf Predicted Wearable Tech. Available online: https://www.wareable.com/features/rob-grant-interview-how-red-dwarf-predicted-wearable-tech (accessed on 21 September 2017).

- Star Trek: The Start of the IoT? Available online: https://www.ibm.com/blogs/internet-of-things/star-trek/ (accessed on 21 September 2017).

- Moorcock, M. Dancers at the End of Time; Gollancz: London, UK, 2003; ISBN 978-0575074767.

- When Hari Kunzru Met Michael Moorcock. Available online: https://www.theguardian.com/books/2011/feb/04/michael-moorcock-hari-kunzru (accessed on 19 September 2017).

- Forster, E.M. The Machine Stops; Penguin Classics: London, UK, 2011; ISBN 978-0141195988.

- Leinster, M. A Logic Named Joe; Baen Books: Wake Forest, NC, USA, 2005; ISBN 978-0743499101.

- Asimov, I. The Naked Sun; Panther/Granada: London, UK, 1973; ISBN 978-0586010167.

- Twain, M. From the ‘London Times’ of 1904; Century: London, UK, 1898.

- Adams, D. The Hitch Hiker’s Guide to the Galaxy: A Trilogy in Five Parts; Heinemann: London, UK, 1995; ISBN 978-0434003488.

- Rifkin, G.; Harrar, G. The Ultimate Entrepreneur: The Story of Ken Olsen and Digital Equipment Corporation; Prima: Roseville, CA, USA, 1990; ISBN 978-1559580229.

- Did Digital Founder Ken Olsen Say There Was ‘No Reason for Any Individual to Have a Computer in His Home’? Available online: http://www.snopes.com/quotes/kenolsen.asp (accessed on 22 September 2017).

- The Problem with ‘Futurology’. Available online: https://vicgrout.net/2013/09/20/the-problem-with-futurology/ (accessed on 26 October 2017).

- Technological Singularity. Available online: https://en.wikipedia.org/wiki/Technological_singularity (accessed on 16 January 2018).

- Cadwalladr, C. Are the robots about to rise? Google’s new director of engineering thinks so …. The Guardian (UK), 22 February 2014.

- Eden, A.H.; Moor, J.H. Singularity Hypothesis: A Scientific and Philosophical Assessment; Springer: New York, NY, USA, 2013; ISBN 978-3642325601.

- Ulam, S. Tribute to John von Neumann. Bull. Am. Math. Soc. 1958, 64, 5.

- Chalmers, D. The Singularity: A philosophical analysis. J. Conscious. Stud. 2010, 17, 7–65.

- Vinge, V. The Coming Technological Singularity: How to survive the post-human era. In Vision-21 Symposium: Interdisciplinary Science and Engineering in the Era of Cyberspace; NASA Lewis Research Center and Ohio Aerospace Institute: Washington, DC, USA, 1993.

- Armstrong, S. How We’re Predicting AI. In Singularity Conference; Springer: San Francisco, CA, USA, 2012.

- Sparkes, M. Top scientists call for caution over artificial intelligence. The Times (UK), 24 April 2015.

- Hawking: AI could end human race. BBC News, 2 December 2014.

- Kurzweil, R. The Singularity is Near: When Humans Transcend Biology; Duckworth: London, UK, 2006; ISBN 978-0715635612.

- Everitt, T.; Goertzel, B.; Potapov, A. Artificial General Intelligence; Springer: New York, NY, USA, 2017; ISBN 978-3319637020.

- Storr, A.; McWaters, J.F. Off-Line Programming of Industrial Robots: IFIP Working Conference Proceedings; Elsevier Science: Amsterdam, The Netherlands, 1987; ISBN 978-0444701374.

- Aouad, G. Computer Aided Design for Architecture, Engineering and Construction; Routledge: Abingdon, UK, 2011; ISBN 978-0415495073.

- Liu, B.; Grout, V.; Nikolaeva, A. Efficient Global Optimization of Actuator Based on a Surrogate Model. IEEE Trans. Ind. Electron. 2018, 65, 5712–5721.

- Liu, B.; Irvine, A.; Akinsolu, M.; Arabi, O.; Grout, V.; Ali, N. GUI Design Exploration Software for Microwave Antennas. J. Comput. Des. Eng. 2017, 4, 274–281.

- Wu, M.; Karkar, A.; Liu, B.; Yakovlev, A.; Gielen, G.; Grout, V. Network on Chip Optimization Based on Surrogate Model Assisted Evolutionary Algorithms. In Proceedings of the IEEE Congress on Evolutionary Computation (CEC), Beijing, China, 6–8 July 2014; pp. 3266–3271.

- Sioshansi, R.; Conejo, A.J. Optimization in Engineering: Models and Algorithms; Springer: New York, NY, USA, 2017; ISBN 978-3319567679.

- Rhodius, A. The Argonautica; Reprinted; CreateSpace: Seattle, WA, USA, 2014; ISBN 978-1502885616.

- Shelley, M. Frankenstein: Or, the Modern Prometheus; Reprinted; Wordsworth: Ware, UK, 1992; ISBN 978-1853260230.

- Sawyer, R.J. WWW: Wake; The WWW Trilogy Part 1; Gollancz: London, UK, 2010; ISBN 978-0575094086.

- Sawyer, R.J. WWW: Watch; The WWW Trilogy Part 2; Gollancz: London, UK, 2011; ISBN 978-0575095052.

- Sawyer, R.J. WWW: Wonder; The WWW Trilogy Part 3; Gollancz: London, UK, 2012; ISBN 978-0575095090.

- Grout, V. Conscious; Clear Futures Publishing: Wrexham, UK, 2017; ISBN 978-1520590127.

- Turing, A.M. Computing Machinery and Intelligence. Mind 1950, 59, 433–460.

- How Singular Is the Singularity? Available online: https://vicgrout.net/2015/02/01/how-singular-is-the-singularity/ (accessed on 16 February 2018).

- Dick, P.K. Do Androids Dream of Electric Sheep; Reprinted; Gollancz: London, UK, 2007; ISBN 978-0575079939.

- Poelti, M. A.I. Insurrection: The General’s War; CreateSpace: Seattle, WA, USA, 2018; ISBN 978-1981490585.

- Computer AI Passes Turing Test in ‘World First’. Available online: http://www.bbc.co.uk/news/technology-27762088 (accessed on 19 February 2018).

- Adaptability: The True Mark of Genius. Available online: https://www.huffingtonpost.com/tomas-laurinavicius/adaptability-the-true-mar_b_11543680.html (accessed on 16 February 2018).

- Our Computers Are Learning How to Code Themselves. Available online: https://futurism.com/4-our-computers-are-learning-how-to-code-themselves/ (accessed on 19 February 2018).

- Why Your Brain Isn’t a Computer. Available online: https://www.forbes.com/sites/alexknapp/2012/05/04/why-your-brain-isnt-a-computer/#3e238fdc13e1 (accessed on 19 February 2018).

- Schneider, S.; Velmans, M. The Blackwell Companion to Consciousness, 2nd ed.; Wiley-Blackwell: Hoboken, NJ, USA, 2017; ISBN 978-0470674079.

- August 29th: Skynet Becomes Self-Aware. Available online: http://www.neatorama.com/neatogeek/2013/08/29/August-29th-Skynet-Becomes-Self-aware/ (accessed on 23 March 2018).

- Time-to-Live (TTL). Available online: http://searchnetworking.techtarget.com/definition/time-to-live (accessed on 23 March 2018).

- Seager, W. The Routledge Handbook of Panpsychism; Routledge Handbooks in Philosophy; Routledge: London, UK, 2018; ISBN 978-1138817135.

- “The Theological Objection”. Available online: https://vicgrout.net/2016/03/06/the-theological-objection/ (accessed on 27 March 2018).

- Gödel, K. Über formal unentscheidbare Sätze der Principia Mathematica und verwandter Systeme, I. Mon. Math. Phys. 1931, 38, 173–198. (In German)

- Turing, A.M. On computable numbers, with an application to the Entscheidungsproblem. Proc. Lond. Math. Soc. 1937, 42, 230–265.

- Heisenberg, W. Über den anschaulichen Inhalt der quantentheoretischen Kinematik und Mechanik. Z. Phys. 1927, 43, 172–198. (In German)

- Popoff, A. The Fermi Paradox; CreateSpace: Seattle, WA, USA, 2015; ISBN 978-1514392768.

- Webb, S. If the Universe is Teeming with Aliens… Where is Everybody? Fifty Solutions to the Fermi Paradox and the Problem of Extraterrestrial Life; Springer: New York, NY, USA, 2010; ISBN 978-1441930293.

- Braga, A.; Logan, R.K. The Emperor of Strong AI Has No Clothes: Limits to Artificial Intelligence. Information 2017, 8, 156.

- Do Intelligent Civilizations Across the Galaxies Self Destruct? For Better and Worse, We’re the Test Case. Available online: http://www.manyworlds.space/index.php/2017/02/01/do-intelligent-civilizations-across-the-galaxies-self-destruct-for-better-and-worse-were-the-test-case/ (accessed on 28 March 2018).

- Technocapitalism. Available online: https://vicgrout.net/2016/09/15/technocapitalism/ (accessed on 16 February 2018).

- The Algorithm of Evolution. Available online: https://vicgrout.net/2014/02/03/the-algorithm-of-evolution/ (accessed on 2 April 2018).

- Why don’t Animals Have Wheels? Available online: http://sith.ipb.ac.rs/arhiva/BIBLIOteka/270ScienceBooks/Richard%20Dawkins%20Collection/Dawkins%20Articles/Why%20don%3Ft%20animals%20have%20wheels.pdf (accessed on 2 April 2018).

- Logan, R.K. Can Computers Become Conscious, an Essential Condition for the Singularity? Information 2017, 8, 161.

- Russell, B. History of Western Philosophy; reprinted; Routledge Classics: London, UK, 2004; ISBN 978-1447226260.

- Adams, D. The Salmon of Doubt: Hitchhiking the Galaxy One Last Time; reprinted; Pan: London, UK, 2012; ISBN 978-0415325059.

© 2018 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Grout, V. The Singularity Isn’t Simple! (However We Look at It) A Random Walk between Science Fiction and Science Fact. Information 2018, 9, 99. https://doi.org/10.3390/info9040099