Working Memory Training for Schoolchildren Improves Working Memory, with No Transfer Effects on Intelligence

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

2.2. Materials: Transfer Tasks

2.2.1. Raven’s Progressive Matrices (RPM)

2.2.2. Wechsler Intelligence Scale for Children—Revised (WISC-R)

2.2.3. OSPAN

2.3. Materials: Training Tasks

2.4. Procedure

2.4.1. Initial Psychometric Testing

2.4.2. Training

2.4.3. Second Psychometric Testing

2.4.4. Delayed Testing

2.5. Incentive System

3. Results

3.1. Practice Effects

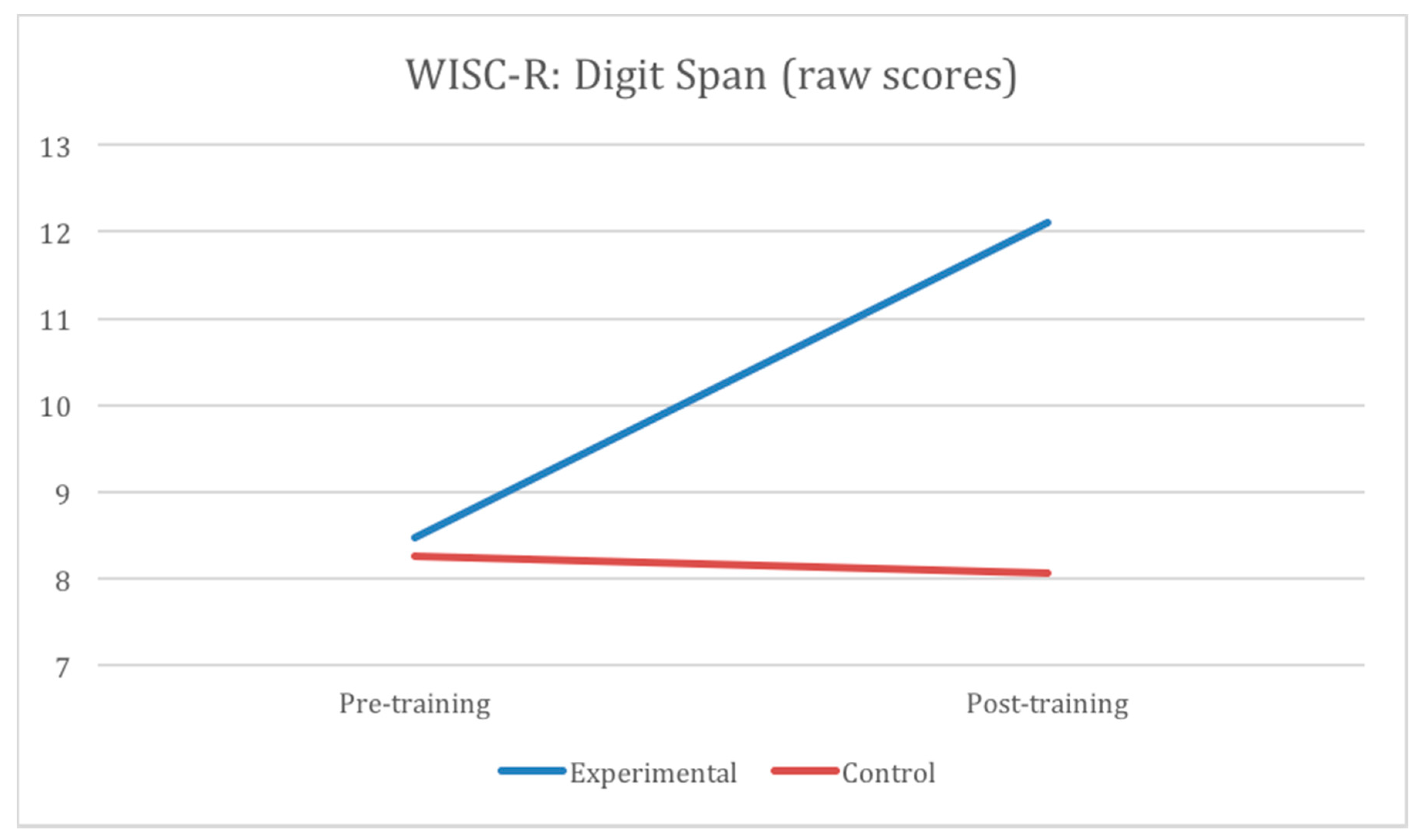

3.2. Near-Transfer Effects: Working Memory

3.3. Far-Transfer Effects: Intelligence

3.3.1. Raven’s Progressive Matrices

3.3.2. Wechsler Intelligence Scale for Children—Revised (WISC-R)

3.4. Delayed Testing (after 15 Months)

4. Discussion

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Ethical Statement

Appendix A. Description of the Training Tasks

Appendix A.1. Sausage Dog (Picture Completion)

Appendix A.2. The Big Tidy-Up (Object Arrangement)

Appendix A.3. Gotcha! (Catching the Thief)

Appendix A.4. Zoo (Feeding the Animals)

Appendix A.5. Control Training Tasks

References

- Moody, D.E. Can intelligence be increased by training on a task of working memory? Intelligence 2009, 37, 327–328.

- Melby-Lervåg, M.; Hulme, C. Is working memory training effective? A meta-analytic review. Dev. Psychol. 2013, 49, 270–291.

- Shipstead, Z.; Redick, T.S.; Engle, R.W. Is working memory training effective? Psychol. Bull. 2012, 138, 628–654.

- Danielsson, H.; Zottarel, V.; Palmqvist, L.; Lanfranchi, S. The effectiveness of working memory training with individuals with intellectual disabilities: A meta-analytic review. Front. Psychol. 2015.

- Jensen, A.R. The g Factor: The Science of Mental Ability; Praeger: Westport, CT, USA, 1998.

- Sternberg, R.J. Increasing intelligence is possible after all. Proc. Natl. Acad. Sci. USA 2008, 105, 6791–6792.

- Gottfredson, L.S. Mainstream science on intelligence: An editorial with 52 signatories, history, and bibliography. Intelligence 1997, 24, 13–23.

- Baddeley, A. Working Memory; Clarendon Press: Oxford, UK, 1986.

- Baddeley, A. Is working memory still working? Eur. Psychol. 2002, 7, 85–97.

- Miyake, A.; Shah, P. Models of Working Memory: Mechanisms of Active Maintenance and Executive Control; Cambridge University Press: Cambridge, UK, 1999.

- Alloway, T.P.; Gathercole, S.E.; Kirkwood, H.; Elliott, J. The cognitive and behavioral characteristics of children with low working memory. Child Dev. 2009, 80, 606–621.

- Bull, R.; Scerif, G. Executive functioning as a predictor of children’s mathematics ability: Inhibition, switching, and working memory. Dev. Neuropsychol. 2001, 19, 273–293.

- Gathercole, S.E.; Pickering, S.J.; Knight, C.; Stegmann, Z. Working memory skills and educational attainment: Evidence from national curriculum assessments at 7 and 14 years of age. Appl. Cogn. Psychol. 2004, 18, 1–16.

- Gersten, R.; Jordan, N.C.; Flojo, J.R. Early identification and interventions for students with mathematics difficulties. J. Learn. Disabil. 2005, 38, 293–304.

- Siegel, L.S.; Ryan, E.B. The development of working memory in normally achieving and subtypes of learning disabled children. Child Dev. 1989, 60, 973–980.

- St Clair-Thompson, H.L.; Gathercole, S.E. Executive functions and achievements in school: Shifting, updating, inhibition, and working memory. Q. J. Exp. Psychol. 2006, 59, 745–759.

- Shipstead, Z.; Redick, T.S.; Engle, R.W. Does working memory training generalize? Psychol. Belg. 2010, 50, 3–4.

- Chein, J.M.; Morrison, A.B. Expanding the mind’s workspace: Training and transfer effects with a complex working memory span task. Psychon. Bull. Rev. 2010, 17, 193–199.

- Dahlin, E.; Neely, A.S.; Larsson, A.; Bäckman, L.; Nyberg, L. Transfer of learning after updating training mediated by the striatum. Science 2008, 320, 1510–1512.

- Jaeggi, S.M.; Buschkuehl, M.; Perrig, W.J.; Meier, B. The concurrent validity of the N-back task as a working memory measure. Memory 2010, 18, 394–412.

- Klingberg, T.; Forssberg, H.; Westerberg, H. Training of working memory in children with ADHD. J. Clin. Exp. Neuropsychol. 2002, 24, 781–791.

- Li, S.C.; Schmiedek, F.; Huxhold, O.; Röcke, C.; Smith, J.; Lindenberger, U. Working memory plasticity in old age: Practice gain, transfer, and maintenance. Psychol. Aging 2008, 23, 731–742.

- McNab, F.; Varrone, A.; Farde, L.; Jucaite, A.; Bystritsky, P.; Forssberg, H.; Klingberg, T. Changes in cortical dopamine D1 receptor binding associated with cognitive training. Science 2009, 323, 800–802.

- Owen, A.M.; Hampshire, A.; Grahn, J.A.; Stenton, R.; Dajani, S.; Burns, A.S.; Ballard, C.G. Putting brain training to the test. Nature 2010, 465, 775–778.

- Thompson, T.W.; Waskom, M.L.; Garel, K.L.A.; Cardenas-Iniguez, C.; Reynolds, G.O.; Winter, R.; Gabrieli, J.D. Failure of working memory training to enhance cognition or intelligence. PLoS ONE 2013, 8.

- Bergman Nutley, S.; Söderqvist, S.; Bryde, S.; Thorell, L.B.; Humphreys, K.; Klingberg, T. Gains in fluid intelligence after training non-verbal reasoning in 4-year-old children: A controlled, randomized study. Dev. Sci. 2011, 14, 591–601.

- Jaeggi, S.M.; Buschkuehl, M.; Jonides, J.; Shah, P. Short- and long-term benefits of cognitive training. Proc. Natl. Acad. Sci. USA 2011, 108, 10081–10086.

- Shavelson, R.J.; Yuan, K.; Alonzo, A. On the impact of computer training on working memory and fluid intelligence. In Fostering Change in Institutions, Environments, and People: A Festschrift in Honor of Gavriel Salomon; Berliner, D.C., Kupermintz, H., Eds.; Routledge: New York, NY, USA, 2008; pp. 35–48.

- Thorell, L.B.; Lindqvist, S.; Bergman Nutley, S.; Bohlin, G.; Klingberg, T. Training and transfer effects of executive functions in preschool children. Dev. Sci. 2009, 12, 106–113.

- Zhao, X.; Wang, Y.; Liu, D.; Zhou, R. Effect of updating training on fluid intelligence in children. Chin. Sci. Bull. 2011, 56, 2202–2205.

- Witt, M. School based working memory training: Preliminary finding of improvement in children’s mathematical performance. Adv. Cogn. Psychol. 2011, 7, 7–15.

- Colom, R.; Quiroga, M.Á.; Shih, P.C.; Martínez, K.; Burgaleta, M.; Martínez-Molina, A.; Ramírez, I. Improvement in working memory is not related to increased intelligence scores. Intelligence 2010, 38, 497–505.

- Harrison, T.L.; Shipstead, Z.; Hicks, K.L.; Hambrick, D.Z.; Redick, T.S.; Engle, R.W. Working memory training may increase working memory capacity but not fluid intelligence. Psychol. Sci. 2013, 24, 2409–2419.

- Salminen, T.; Frensch, P.; Strobach, T.; Schubert, T. Age-specific differences of dual n-back training. Aging Neuropsychol. Cogn. 2016, 23, 18–39.

- Seidler, R.D.; Bernard, J.A.; Buschkuehl, M.; Jaeggi, S.; Jonides, J.; Humfleet, J. Cognitive Training as an Intervention to Improve Driving Ability in the Older Adult; Technical Report No. M-CASTL 2010-01; University of Michigan: Ann Arbor, MI, USA, 2010.

- Karbach, J.; Kray, J. How useful is executive control training? Age differences in near and far transfer of task-switching training. Dev. Sci. 2009, 12, 978–990.

- Karbach, J.; Mang, S.; Kray, J. Transfer of task-switching training in older age: The role of verbal processes. Psychol. Aging 2010, 25, 677–683.

- Salminen, T.; Strobach, T.; Schubert, T. On the impacts of working memory training on executive functioning. Front. Hum. Neurosci. 2012, 6, 166.

- Brehmer, Y.; Rieckmann, A.; Bellander, M.; Westerberg, H.; Fischer, H.; Bäckman, L. Neural correlates of training-related working-memory gains in old age. Neuroimage 2011, 58, 1110–1120.

- Dahlin, E.; Nyberg, L.; Bäckman, L.; Neely, A.S. Plasticity of executive functioning in young and older adults: Immediate training gains, transfer, and long-term maintenance. Psychol. Aging 2008, 23, 720–730.

- Redick, T.S.; Shipstead, Z.; Harrison, T.L.; Hicks, K.L.; Fried, D.E.; Hambrick, D.Z.; Engle, R.W. No evidence of intelligence improvement after working memory training: A randomized, placebo-controlled study. J. Exp. Psychol. Gen. 2013, 142, 359–379.

- Richmond, L.L.; Morrison, A.B.; Chein, J.M.; Olson, I.R. Working memory training and transfer in older adults. Psychol. Aging 2011, 26, 813–822.

- Jaeggi, S.M.; Buschkuehl, M.; Jonides, J.; Perrig, W.J. Improving fluid intelligence with training on working memory. Proc. Natl. Acad. Sci. USA 2008, 105, 6829–6833.

- Karbach, J.; Strobach, T.; Schubert, T. Adaptive working-memory training benefits reading, but not mathematics in middle childhood. Child Neuropsychol. 2015, 21, 285–301.

- Rudebeck, S.R.; Bor, D.; Ormond, A.; O’Reilly, J.X.; Lee, A.C. A potential spatial working memory training task to improve both episodic memory and fluid intelligence. PLoS ONE 2012, 7.

- Stephenson, C.L.; Halpern, D.F. Improved matrix reasoning is limited to training on tasks with a visuospatial component. Intelligence 2013, 41, 341–357.

- Dahlin, K.I. Effects of working memory training on reading in children with special needs. Read. Writ. 2011, 24, 479–491.

- Holmes, J.; Gathercole, S.E.; Dunning, D.L. Adaptive training leads to sustained enhancement of poor working memory in children. Dev. Sci. 2009, 12, F9–F15.

- Holmes, J.; Gathercole, S.E.; Place, M.; Dunning, D.L.; Hilton, K.A.; Elliott, J.G. Working memory deficits can be overcome: Impacts of training and medication on working memory in children with ADHD. Appl. Cogn. Psychol. 2010, 24, 827–836.

- Klingberg, T.; Fernell, E.; Olesen, P.; Johnson, M.; Gustafsson, P.; Dahlström, K.; Gillberg, C.G.; Forssberg, H.; Westerberg, H. Computerized Training of Working Memory in Children With ADHD: A Randomized, Controlled Trial. J. Am. Acad. Child Adolesc. Psychiatry 2005, 44, 177–186.

- Beck, S.J.; Hanson, C.A.; Puffenberger, S.S.; Benninger, K.L.; Benninger, W.B. A controlled trial of working memory training for children and adolescents with ADHD. J. Clin. Child Adolesc. Psychol. 2010, 39, 825–836.

- St Clair-Thompson, H.L. Executive functions and working memory behaviours in children with a poor working memory. Learn. Individ. Differ. 2011, 21, 409–414.

- St Clair-Thompson, H.L.; Holmes, J. Improving short-term and working memory: Methods of memory training. In New Research on Short-Term Memory; Nova Science Publishers: New York, NY, USA, 2008; pp. 125–154.

- Wang, Z.; Zhou, R.; Shah, P. Spaced cognitive training promotes training transfer. Front. Hum. Neurosci. 2014, 8, 217.

- Friedman, N.P.; Miyake, A.; Corley, R.P.; Young, S.E.; DeFries, J.C.; Hewitt, J.K. Not all executive functions are related to intelligence. Psychol. Sci. 2006, 17, 172–179.

- Miyake, A.; Friedman, N.P.; Emerson, M.J.; Witzki, A.H.; Howerter, A.; Wager, T.D. The unity and diversity of executive functions and their contributions to complex frontal lobe tasks: A latent variable analysis. Cogn. Psychol. 2000, 41, 49–100.

- Lehto, J.E.; Juujärvi, P.; Kooistra, L.; Pulkkinen, L. Dimensions of executive functioning: Evidence from children. Br. J. Dev. Psychol. 2003, 21, 59–80.

- Morrison, A.B.; Chein, J.M. Does working memory training work? The promise and challenges of enhancing cognition by training working memory. Psychon. Bull. Rev. 2011, 18, 46–60.

- Cattell, R.B. Abilities: Their Structure, Growth, and Action; Houghton Mifflin: Boston, MA, USA, 1971.

- Jaworowska, A.; Szustrowa, T. Test Matryc Ravena w Wersji Standard: Formy: Klasyczna, Równoległa, Plus [Raven’s Matrices Test in the Standard Version, Forms Regular, Parallel, and Plus]; Pracownia Testów Psychologicznych PTP: Warszawa, Poland, 2010.

- Raven, J.; Raven, J.C.; Court, J.H. Section 3: The Standard Progressive Matrices. In Manual for Raven’s Progressive Matrices and Vocabulary Scales; Harcourt Assessment: San Antonio, TX, USA, 2000.

- Matczak, A.; Piotrowska, A.; Ciarkowska, W.; Wechsler, D. Skala Inteligencji D. Wechslera dla Dzieci-Wersja Zmodyfikowana (WISC-R): Podręcznik [The Wechsler Intelligence Scale for Children, Revised Edition (WISC-R): A Manual]; Pracownia Testów Psychologicznych PTP: Warszawa, Poland, 2008.

- Wechsler, D. The Wechsler Intelligence Scale for Children, 4th ed.; Pearson Assessment: London, UK, 2004.

- Kane, M.J.; Engle, R.W. Working-memory capacity and the control of attention: The contributions of goal neglect, response competition, and task set to Stroop interference. J. Exp. Psychol. Gen. 2003, 132, 47–70.

- Turner, M.L.; Engle, R.W. Is working memory capacity task dependent? J. Mem. Lang. 1989, 28, 127–154.

- Yntema, D.B. Keeping track of several things at once. Hum. Factors 1963, 5, 7–17.

- Kirchner, W.K. Age differences in short-term retention of rapidly changing information. J. Exp. Psychol. 1958, 55, 352–358.

- McElree, B. Working memory and focal attention. J. Exp. Psychol. Learn. Mem. Cogn. 2001, 27, 817–835.

- Matuszczak, M.; Krejtz, I.; Orylska, A.; Bielecki, M. Trening pamięci operacyjnej u dzieci z zespołem hiperkinetycznym (ADHD) [Working memory training in children with ADHD]. Czasopismo Psychologiczne 2009, 15, 87–103.

- Conway, A.R.A.; Engle, R.W. Working memory and retrieval: A source-dependent inhibition model. J. Exp. Psychol. Gen. 1994, 123, 354–373.

- Dunning, D.L.; Holmes, J. Does working memory training promote the use of strategies on untrained working memory tasks? Mem. Cogn. 2014, 42, 854–862.

- Alloway, T.P.; Alloway, R.G. The Efficacy of Working Memory Training in Improving Crystallized Intelligence. Nat. Preced. 2009. Available online: http://precedings.nature.com/documents/3697/version/1 (accessed on 13 December 2017).

- Lawlor-Savage, L.; Goghari, V.M. Dual n-back working memory training in healthy adults: A randomized comparison to processing speed training. PLoS ONE 2016, 11, e0151817.

- Melby-Lervåg, M.; Redick, T.S.; Hulme, C. Working memory training does not improve performance on measures of intelligence or other measures of “far transfer”: Evidence from a meta-analytic review. Perspect. Psychol. Sci. 2016, 11, 512–534.

- Schwarb, H.; Nail, J.; Schumacher, E.H. Working memory training improves visual short-term memory capacity. Psychol. Res. 2016, 80, 128–148.

- Soveri, A.; Antfolk, J.; Karlsson, L.; Salo, B.; Laine, M. Working memory training revisited: A multi-level meta-analysis of n-back training studies. Psychon. Bull. Rev. 2017.

- Kaufman, A.S. Intelligent Testing with the WISC-III; John Wiley & Sons: New York, NY, USA, 1994.

- Krasowicz-Kupis, G.; Wiejak, K. Skala Inteligencji Wechslera dla Dzieci (WISC-R) w Praktyce Psychologicznej [The Wechsler Intelligence Scale for Children (WISC-R) in Psychological Practice]; Wydawnictwo Naukowe PWN: Warszawa, Poland, 2006.

- Schmiedek, F.; Lövdén, M.; Lindenberger, U. Hundred days of cognitive training enhance broad cognitive abilities in adulthood: Findings from the COGITO study. Front. Aging Neurosci. 2010, 2, 27.

- Buschkuehl, M.; Jaeggi, S.M.; Hutchison, S.; Perrig-Chiello, P.; Däpp, C.; Müller, M.; Perrig, W.J. Impact of working memory training on memory performance in old-old adults. Psychol. Aging 2008, 23, 743–753.

- Ackerman, P.L.; Beier, M.E.; Boyle, M.O. Working memory and intelligence: The same or different constructs? Psychol. Bull. 2005, 131, 30–60.

- Geary, D.C.; Hoard, M.K.; Byrd-Craven, J.; DeSoto, M.C. Strategy choices in simple and complex addition: Contributions of working memory and counting knowledge for children with mathematical disability. J. Exp. Child Psychol. 2004, 88, 121–151.

- St Clair-Thompson, H.; Stevens, R.; Hunt, A.; Bolder, E. Improving children’s working memory and classroom performance. Educ. Psychol. 2010, 30, 203–219.

- Henry, L.A.; Messer, D.J.; Nash, G. Testing for near and far transfer effects with a short, face-to-face adaptive working memory training intervention in typical children. Infant Child Dev. 2014, 23, 84–103.

- Loosli, S.V.; Buschkuehl, M.; Perrig, W.J.; Jaeggi, S.M. Working memory training improves reading processes in typically developing children. Child Neuropsychol. 2012, 18, 62–78.

- Nevo, E.; Breznitz, Z. The development of working memory from kindergarten to first grade in children with different decoding skills. J. Exp. Child Psychol. 2013, 114, 217–228.

- Daneman, M.; Merikle, P.M. Working memory and language comprehension: A meta-analysis. Psychon. Bull. Rev. 1996, 3, 422–433.

- Daneman, M.; Green, I. Individual differences in comprehending and producing words in context. J. Mem. Lang. 1986, 25, 1–18.

- Green, C.S.; Strobach, T.; Schubert, T. On methodological standards in training and transfer experiments. Psychol. Res. 2014, 78, 756–772.

| Training Task | Before Training: EXP | Before Training: CTRL | After Training: EXP | After Training: CTRL |
|---|---|---|---|---|
| Sausage Dog | 73.16 (10.68) | 70.66 (18.25) | 91.34 (4.77) | 74.11 (10.78) |
| Big Tidy-up | 78.17 (11.10) | 71.59 (12.71) | 80.76 (4.84) | 75.53 (12.34) |
| Gotcha! | 0.31 (5.44) | −2.22 (7.52) | 10.40 (1.90) | −1.48 (7.11) |
| Zoo | −1.84 (4.50) | −2.56 (7.31) | 3.69 (2.33) | −2.56 (5.27) |
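The training-task means can be read as a simple pre/post by group contrast: how much more did the experimental group (EXP) improve on each task than the control group (CTRL)? The Python sketch below computes that difference in mean gains, (post_EXP − pre_EXP) − (post_CTRL − pre_CTRL), from the values outside the parentheses only. It is a descriptive illustration, assuming EXP and CTRL denote the experimental and control groups; it is not the statistical analysis reported in the paper, and the names `TRAINING_TASKS` and `gain_contrast` are hypothetical.

```python
# Descriptive pre/post gains for the four training tasks (hypothetical helper,
# not the authors' analysis). Means are copied from the training-task table;
# the values in parentheses are deliberately ignored.

TRAINING_TASKS = {
    # task: (pre_EXP, pre_CTRL, post_EXP, post_CTRL)
    "Sausage Dog": (73.16, 70.66, 91.34, 74.11),
    "Big Tidy-up": (78.17, 71.59, 80.76, 75.53),
    "Gotcha!": (0.31, -2.22, 10.40, -1.48),
    "Zoo": (-1.84, -2.56, 3.69, -2.56),
}


def gain_contrast(pre_exp, pre_ctrl, post_exp, post_ctrl):
    """Return (EXP gain, CTRL gain, EXP gain minus CTRL gain)."""
    gain_exp = post_exp - pre_exp
    gain_ctrl = post_ctrl - pre_ctrl
    return gain_exp, gain_ctrl, gain_exp - gain_ctrl


if __name__ == "__main__":
    for task, means in TRAINING_TASKS.items():
        g_exp, g_ctrl, diff = gain_contrast(*means)
        print(f"{task:12s} EXP {g_exp:+7.2f}  CTRL {g_ctrl:+7.2f}  diff {diff:+7.2f}")
```

Run as a script, it reports differences in favour of EXP of about 14.73 points on Sausage Dog, 9.35 on Gotcha! and 5.53 on Zoo, and a slightly larger gain for CTRL on Big Tidy-up (about −1.35). Whether such differences are reliable depends on the variability shown in parentheses and on the group sizes, both of which this sketch ignores.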

| OSPAN Condition | Before Training: EXP | Before Training: CTRL | After Training: EXP | After Training: CTRL |
|---|---|---|---|---|
| OSPAN full | 6.27 (4.24) | 5.67 (3.5) | 9.56 (4.56) | 4.30 (2.83) |
| Sequences: | | | | |
| 2-element | 2.95 (0.25) | 3.04 (0.31) | 3.88 (0.1) | 2.44 (0.26) |
| 3-element | 2.27 (0.25) | 1.85 (0.31) | 2.93 (0.22) | 1.51 (0.27) |
| 4-element | 0.88 (0.19) | 0.59 (0.24) | 2.10 (0.22) | 0.26 (0.27) |
| 5-element | 0.17 (0.11) | 0.19 (0.13) | 0.66 (0.14) | 0.07 (0.18) |

| WISC-R Measure | Pre-Test: EXP | Pre-Test: CTRL | Post-Test: EXP | Post-Test: CTRL |
|---|---|---|---|---|
| WISC-R full scale | 202.10 (25.04) | 191.22 (29.76) | 241.90 (25.30) | 207.37 (32.09) |
| Verbal Scale | 67.10 (12.32) | 67.25 (12.97) | 81.74 (13.84) | 67.37 (14.55) |
| Information | 11.67 (2.69) | 10.44 (3.17) | 13.05 (3.18) | 11.30 (3.90) |
| Similarities | 12.10 (4.25) | 12.41 (2.76) | 14.95 (3.70) | 12.81 (2.98) |
| Arithmetic | 10.88 (1.63) | 10.67 (2.30) | 12.12 (1.93) | 10.44 (2.26) |
| Vocabulary | 23.98 (5.99) | 25.48 (6.17) | 29.52 (7.66) | 24.74 (6.14) |
| Digit Span | 8.48 (1.76) | 8.26 (1.83) | 12.10 (2.63) | 8.07 (2.06) |
| Nonverbal Scale | 135.00 (17.34) | 123.96 (20.64) | 160.17 (17.14) | 140.00 (21.83) |
| Picture Completion | 17.21 (2.31) | 16.96 (2.26) | 18.43 (2.03) | 17.59 (2.81) |
| Picture Arrangement | 28.88 (5.70) | 26.19 (4.94) | 33.79 (4.95) | 29.67 (6.97) |
| Block Design | 21.31 (5.13) | 18.59 (4.03) | 24.98 (5.02) | 20.37 (3.85) |
| Object Assembly | 29.40 (6.39) | 26.11 (7.17) | 35.76 (5.57) | 31.26 (6.57) |
| Coding | 38.19 (7.46) | 36.11 (8.50) | 47.21 (9.06) | 41.11 (8.98) |

| Dependent Variable | Group | Pre-Test | Post-Test | Delayed Post-Test |
|---|---|---|---|---|
| Gotcha! | EXP | 0.44 (6.07) | 10.67 (1.75) | 9.97 (2.14) |
| | CTRL | 0.57 (7.54) | 0.14 (7.24) | 2.86 (8.32) |
| Zoo | EXP | −2.06 (1.16) | 3.77 (0.87) | 4.24 (1.18) |
| | CTRL | −0.93 (1.28) | −1.29 (0.96) | −0.64 (1.29) |
| Raven | EXP | 34.44 (9.14) | 35.28 (8.97) | 40.11 (6.71) |
| | CTRL | 30.21 (7.34) | 31.07 (7.56) | 34.00 (5.75) |
| Similarities | EXP | 12.50 (0.93) | 14.83 (0.79) | 18.17 (0.98) |
| | CTRL | 13.00 (1.06) | 13.29 (0.89) | 16.64 (1.11) |
| Vocabulary | EXP | 24.61 (6.62) | 27.83 (8.38) | 33.00 (9.34) |
| | CTRL | 26.21 (6.04) | 26.50 (6.32) | 34.57 (6.32) |
| Digit Span | EXP | 8.78 (2.07) | 12.61 (3.09) | 10.72 (2.54) |
| | CTRL | 8.43 (1.83) | 8.43 (2.17) | 10.14 (2.93) |
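For the delayed-testing data, one descriptive question is what share of each group's immediate gain (post-test minus pre-test) is still present about 15 months later. The Python sketch below computes (delayed − pre) / (post − pre) for every measure and group, using only the means outside the parentheses. It is an illustrative calculation, not the authors' analysis; `DELAYED` and `retained_share` are hypothetical names, and the ratio is treated as undefined when there was no positive immediate gain, since dividing by a zero or negative gain is not interpretable.

```python
from typing import Optional

# Means copied from the delayed-testing table: (pre, post, delayed).
# Values in parentheses are ignored in this hypothetical sketch.
DELAYED = {
    "Gotcha!": {"EXP": (0.44, 10.67, 9.97), "CTRL": (0.57, 0.14, 2.86)},
    "Zoo": {"EXP": (-2.06, 3.77, 4.24), "CTRL": (-0.93, -1.29, -0.64)},
    "Raven": {"EXP": (34.44, 35.28, 40.11), "CTRL": (30.21, 31.07, 34.00)},
    "Similarities": {"EXP": (12.50, 14.83, 18.17), "CTRL": (13.00, 13.29, 16.64)},
    "Vocabulary": {"EXP": (24.61, 27.83, 33.00), "CTRL": (26.21, 26.50, 34.57)},
    "Digit Span": {"EXP": (8.78, 12.61, 10.72), "CTRL": (8.43, 8.43, 10.14)},
}


def retained_share(pre: float, post: float, delayed: float) -> Optional[float]:
    """Share of the immediate gain still present at the delayed test:
    (delayed - pre) / (post - pre). Returns None when the immediate gain
    is zero or negative, because the ratio is then not meaningful."""
    immediate = post - pre
    if immediate <= 0:
        return None
    return (delayed - pre) / immediate


if __name__ == "__main__":
    for measure, groups in DELAYED.items():
        for group, scores in groups.items():
            share = retained_share(*scores)
            label = "n/a" if share is None else f"{share:.2f}"
            print(f"{measure:12s} {group:4s} {label}")
```

With these means the retained share is about 0.93 for Gotcha! and about 0.51 for Digit Span in the EXP group. Ratios far above 1 (about 6.75 for Raven in EXP) mean that most of the change happened between the post-test and the delayed test, which, as the CTRL rows also suggest, may reflect development over the 15-month interval rather than training.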

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Żelechowska, D.; Sarzyńska, J.; Nęcka, E. Working Memory Training for Schoolchildren Improves Working Memory, with No Transfer Effects on Intelligence. J. Intell. 2017, 5, 36. https://doi.org/10.3390/jintelligence5040036
