A 3D Printing Model Watermarking Algorithm Based on 3D Slicing and Feature Points

Abstract

1. Introduction

2. Related Works

2.1. 3D Model Watermarking

2.2. 3D Printing-Based Watermarking

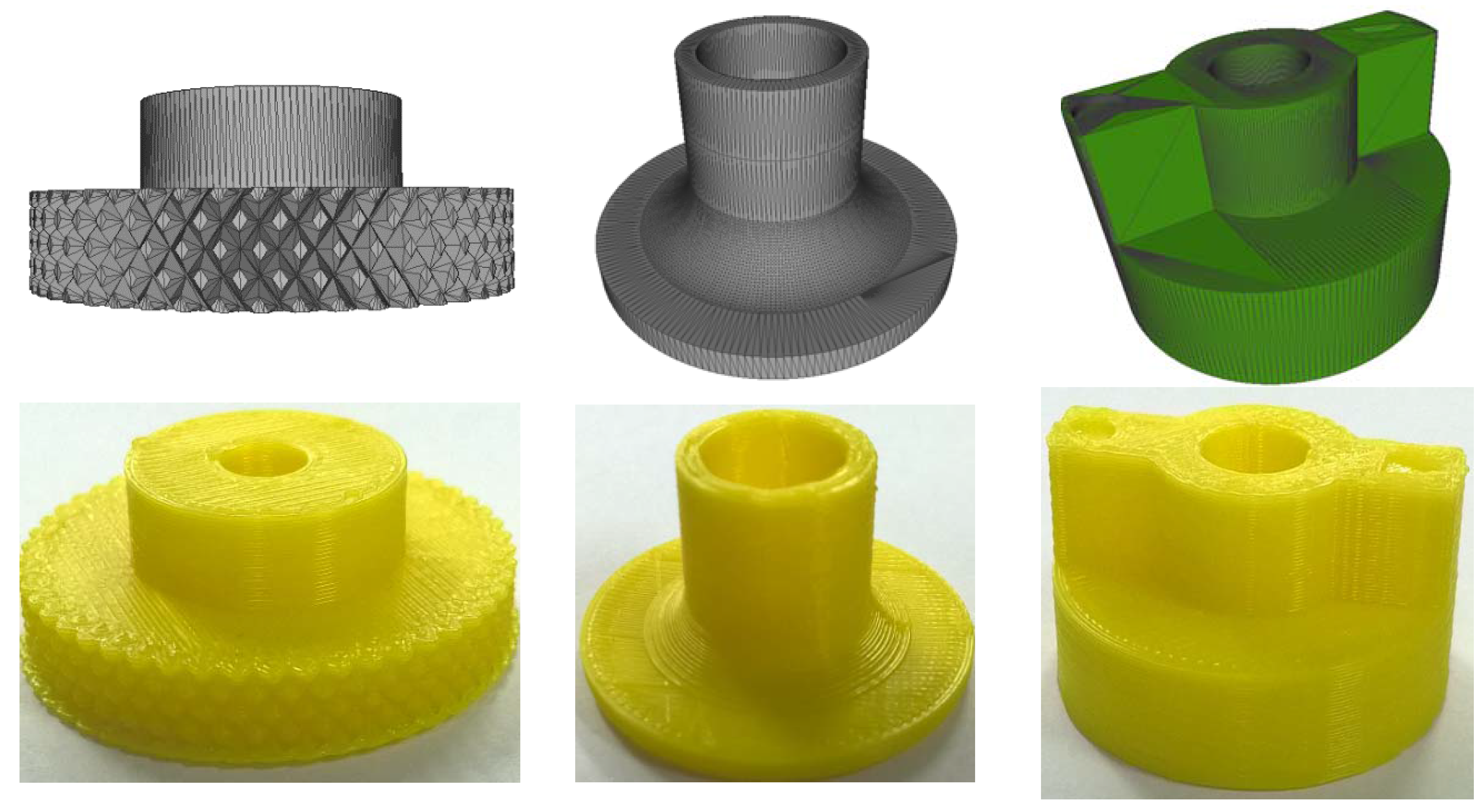

3. The Proposed Algorithm

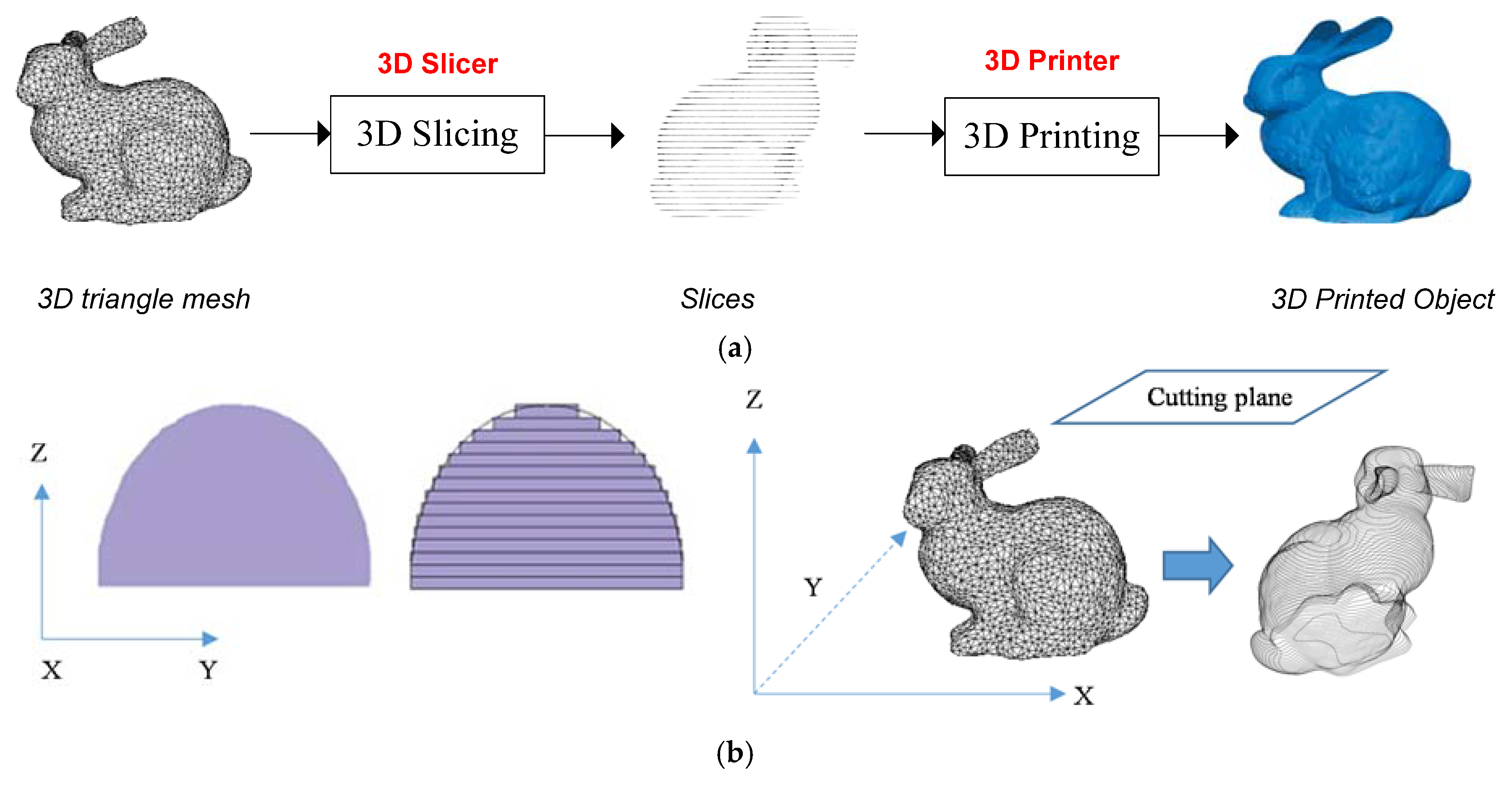

3.1. Overview

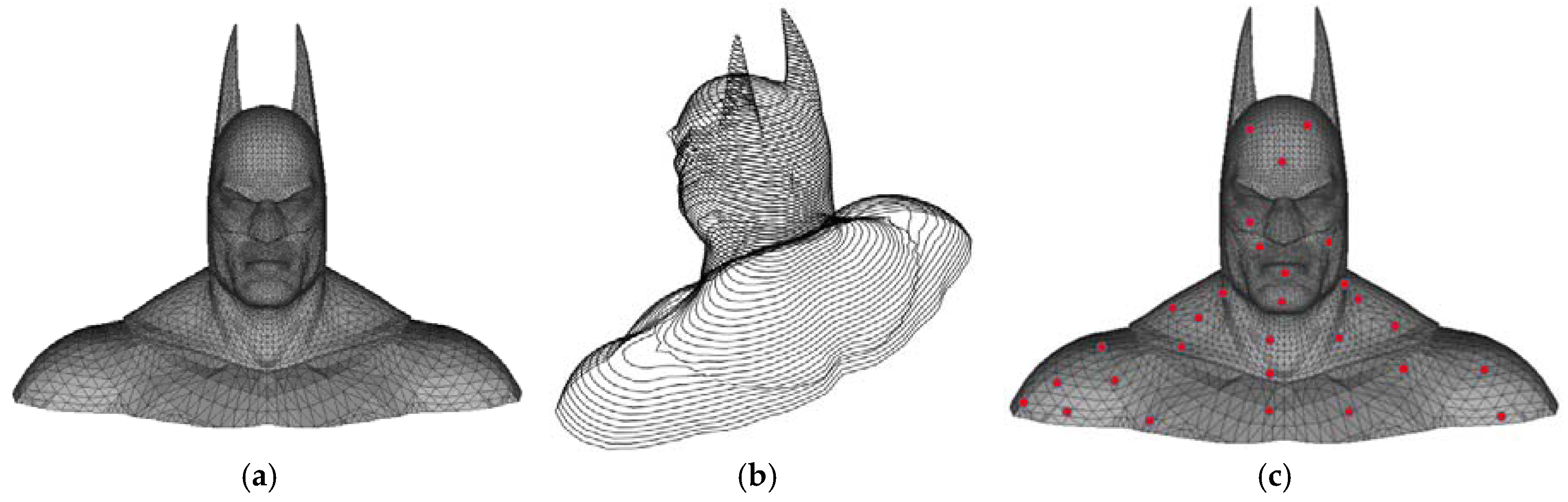

3.2. Feature Points Computation

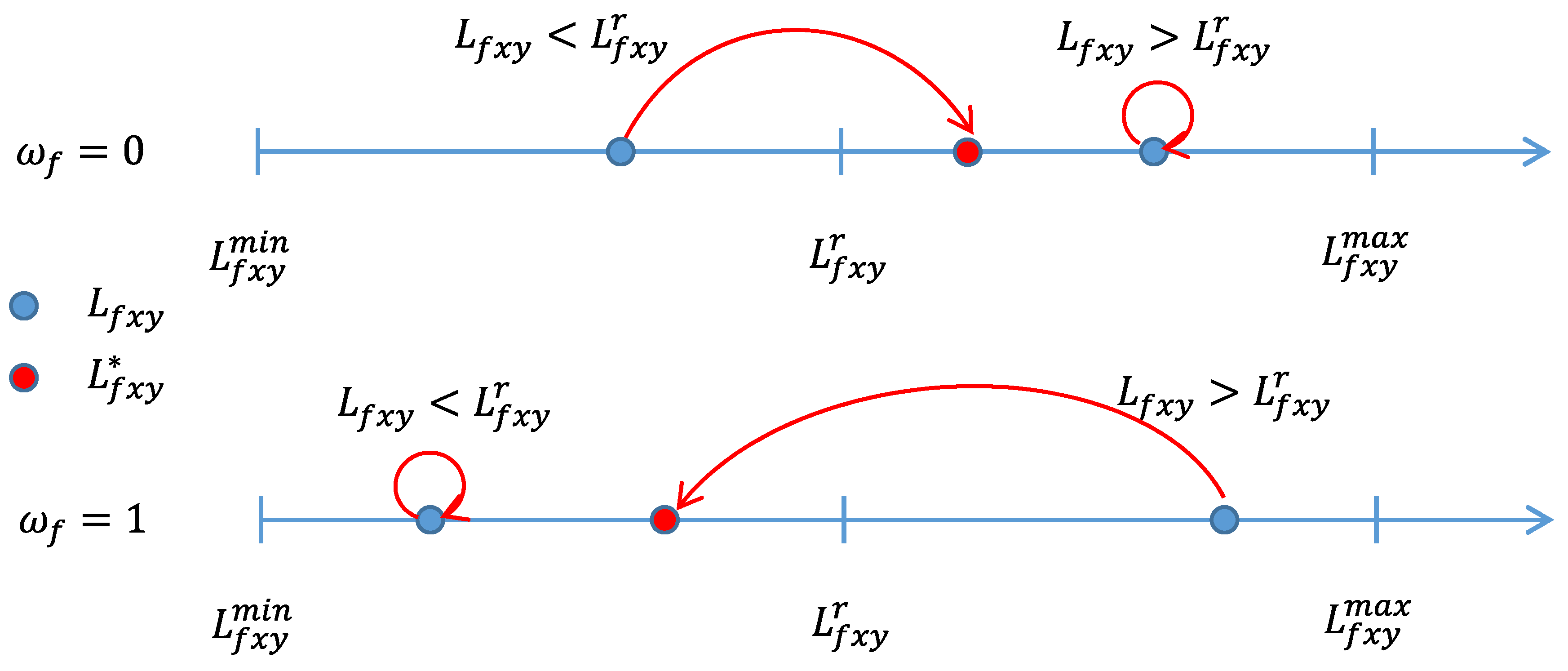

3.3. Watermark Embedding

3.4. Watermark Extracting

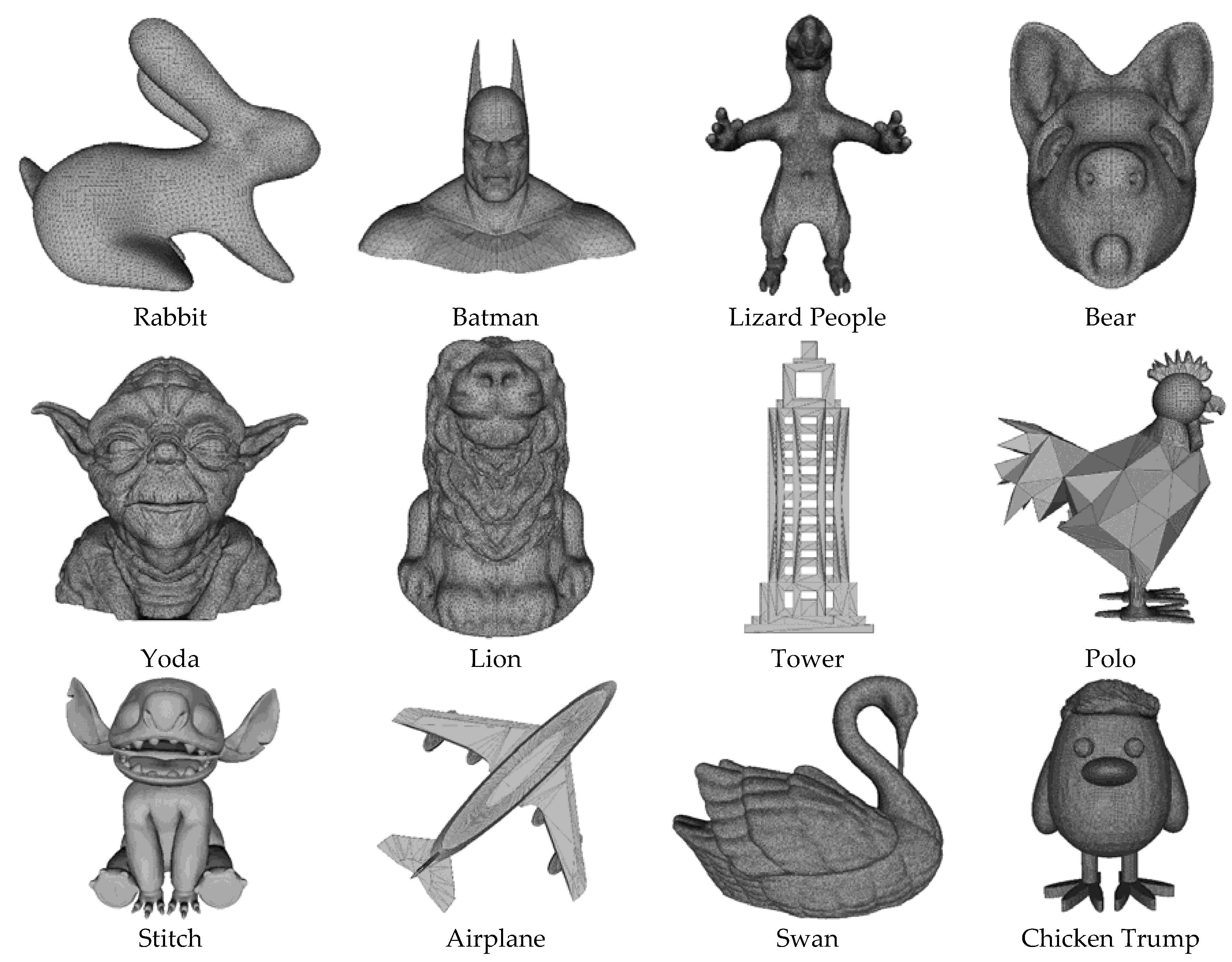

4. Experimental Results and Evaluation

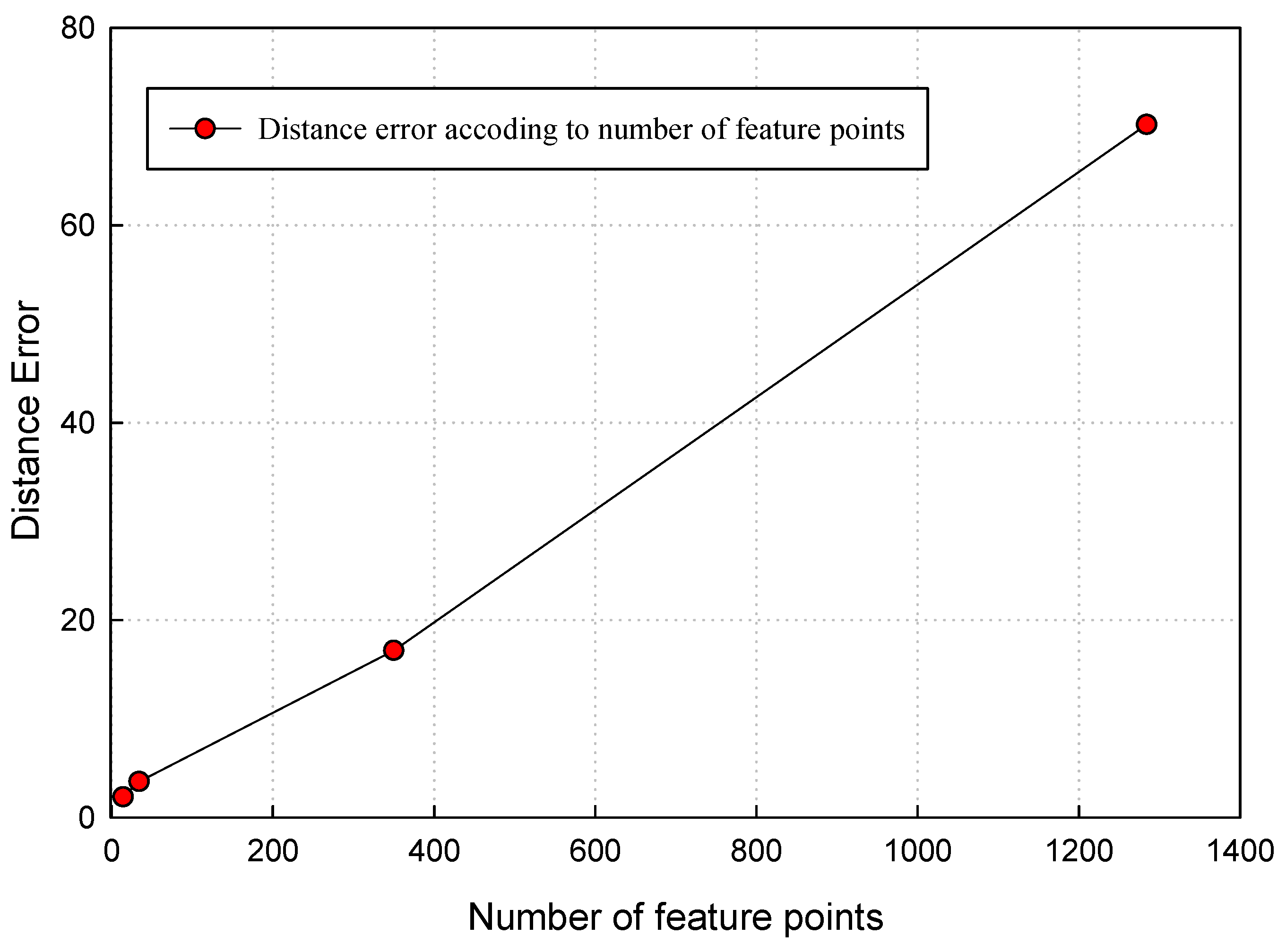

4.1. Invisibility Evaluation

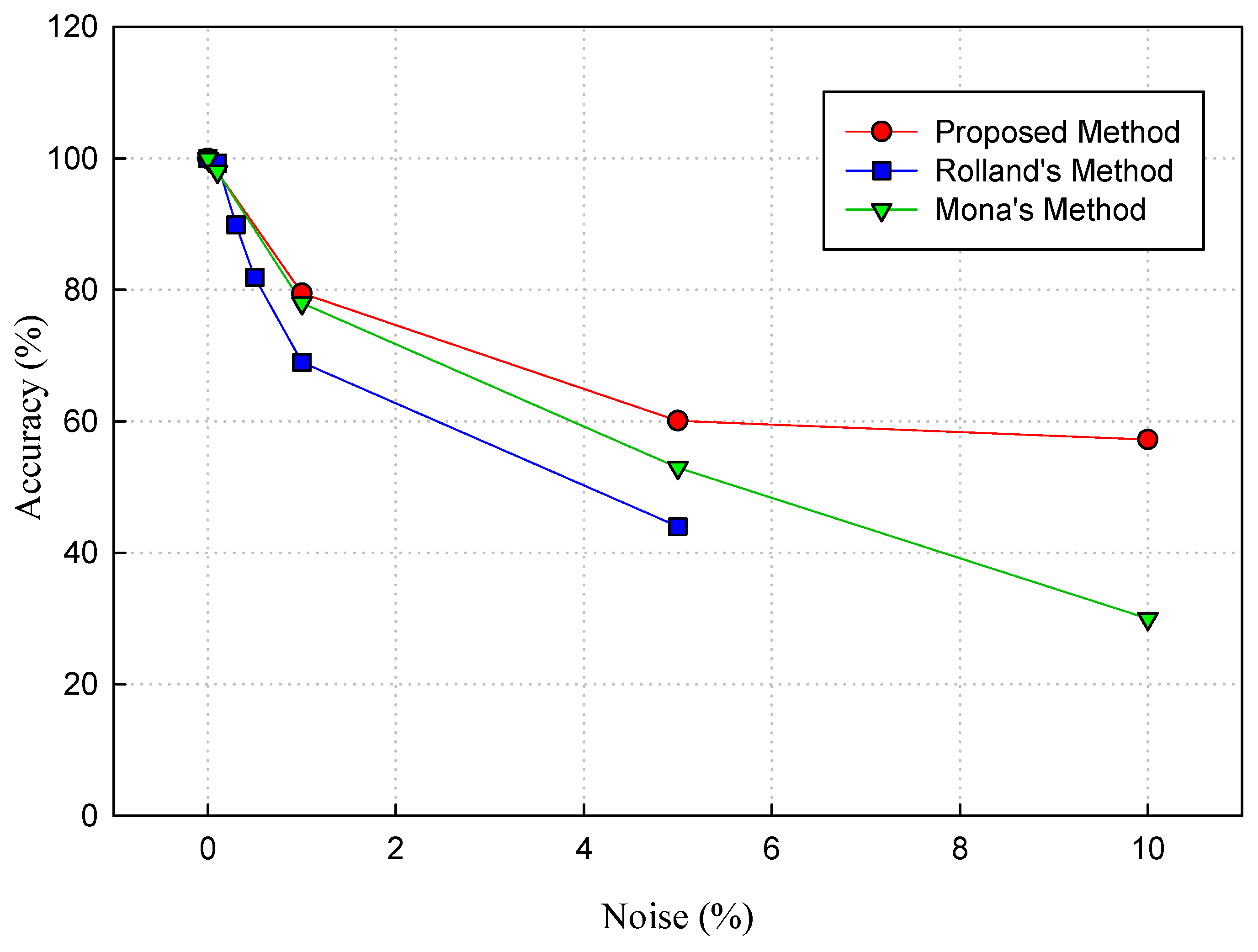

4.2. Robustness Evaluation

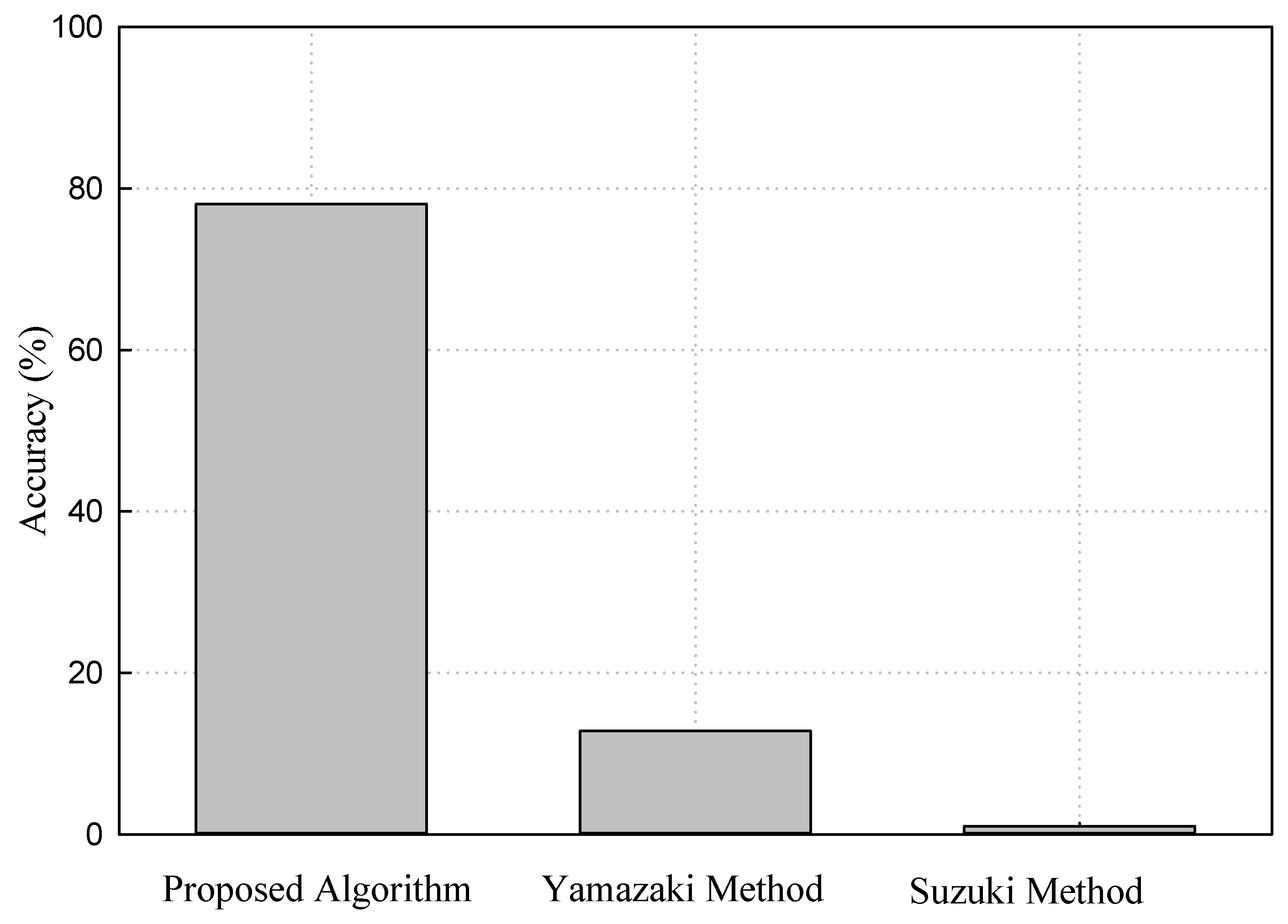

4.3. Performance Evaluation

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Name | # Vertices | # Feature Points | Distance Error |
|---|---|---|---|
| Rabbit | 10,530 | 11 | 2.6611 × 10⁻⁶ |
| Batman | 6785 | 12 | 0.1079 × 10⁻⁶ |
| Lizard People | 34,625 | 19 | 3.4201 × 10⁻⁶ |
| Bear | 20,248 | 34 | 0.1099 × 10⁻⁶ |
| Yoda | 24,910 | 34 | 7.0214 × 10⁻⁶ |
| Lion | 39,583 | 35 | 3.7276 × 10⁻⁶ |
| Tower | 1311 | 48 | 1.6932 × 10⁻⁴ |
| Polo | 8526 | 263 | 1.5386 × 10⁻⁴ |
| Stitch | 384,034 | 737 | 0.1020 × 10⁻⁴ |
| Airplane | 2796 | 1072 | 0.7655 × 10⁻⁴ |
| Chicken Trump | 49,424 | 1194 | 1.2391 × 10⁻⁴ |
| Swan | 70,682 | 1587 | 0.1013 × 10⁻⁴ |

| Name | Accuracy (%), No Noise | Accuracy (%), Noise 1% | Accuracy (%), Noise 5% | Accuracy (%), Noise 10% |
|---|---|---|---|---|
| Rabbit | 100 | 100 | 100 | 54.54 |
| Batman | 100 | 83.33 | 58.33 | 58.33 |
| Lizard People | 100 | 94.74 | 52.63 | 57.89 |
| Bear | 100 | 94.12 | 32.35 | 52.94 |
| Yoda | 100 | 100 | 76.47 | 76.47 |
| Lion | 100 | 45.71 | 45.71 | 51.43 |
| Tower | 100 | 50.00 | 58.33 | 58.33 |
| Polo | 100 | 80.61 | 62.74 | 51.33 |
| Stitch | 100 | 75.71 | 51.29 | 52.78 |
| Airplane | 100 | 99.22 | 83.12 | 68.67 |
| Chicken Trump | 100 | 58.46 | 51.67 | 53.01 |
| Swan | 100 | 54.66 | 48.51 | 51.21 |
| Average | 100 | 78.05 | 60.10 | 57.24 |
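As a quick consistency check on the Average row of the accuracy table, the sketch below recomputes each noise column as a plain, unweighted arithmetic mean over the twelve test models. The unweighted mean is an assumption (the text does not say how the Average row was obtained), and the names `ACCURACY` and `column_means` are illustrative only. Under that assumption the recomputed values agree with the reported 100, 78.05, 60.10 and 57.24.

```python
# Per-model extraction accuracy (%) copied from the accuracy table, in column
# order: no noise, noise 1%, noise 5%, noise 10%.
ACCURACY = {
    "Rabbit": (100, 100, 100, 54.54),
    "Batman": (100, 83.33, 58.33, 58.33),
    "Lizard People": (100, 94.74, 52.63, 57.89),
    "Bear": (100, 94.12, 32.35, 52.94),
    "Yoda": (100, 100, 76.47, 76.47),
    "Lion": (100, 45.71, 45.71, 51.43),
    "Tower": (100, 50.00, 58.33, 58.33),
    "Polo": (100, 80.61, 62.74, 51.33),
    "Stitch": (100, 75.71, 51.29, 52.78),
    "Airplane": (100, 99.22, 83.12, 68.67),
    "Chicken Trump": (100, 58.46, 51.67, 53.01),
    "Swan": (100, 54.66, 48.51, 51.21),
}


def column_means(rows):
    """Return the unweighted mean of each column, rounded to two decimals."""
    columns = list(zip(*rows))  # transpose rows into per-noise-level columns
    return [round(sum(col) / len(col), 2) for col in columns]


means = column_means(ACCURACY.values())
print(means)  # [100.0, 78.05, 60.1, 57.24]
```

Because every model contributes equally regardless of its number of feature points, a model such as Swan (1587 feature points) weighs no more than Rabbit (11); a weighted average would give different figures.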

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Pham, G.N.; Lee, S.-H.; Kwon, O.-H.; Kwon, K.-R. A 3D Printing Model Watermarking Algorithm Based on 3D Slicing and Feature Points. Electronics 2018, 7, 23. https://doi.org/10.3390/electronics7020023
