A New Combined Vision Technique for Micro Aerial Vehicle Pose Estimation

Abstract

1. Introduction

2. Previous Research in On-Ground Visual Navigation for MAV Pose Estimation

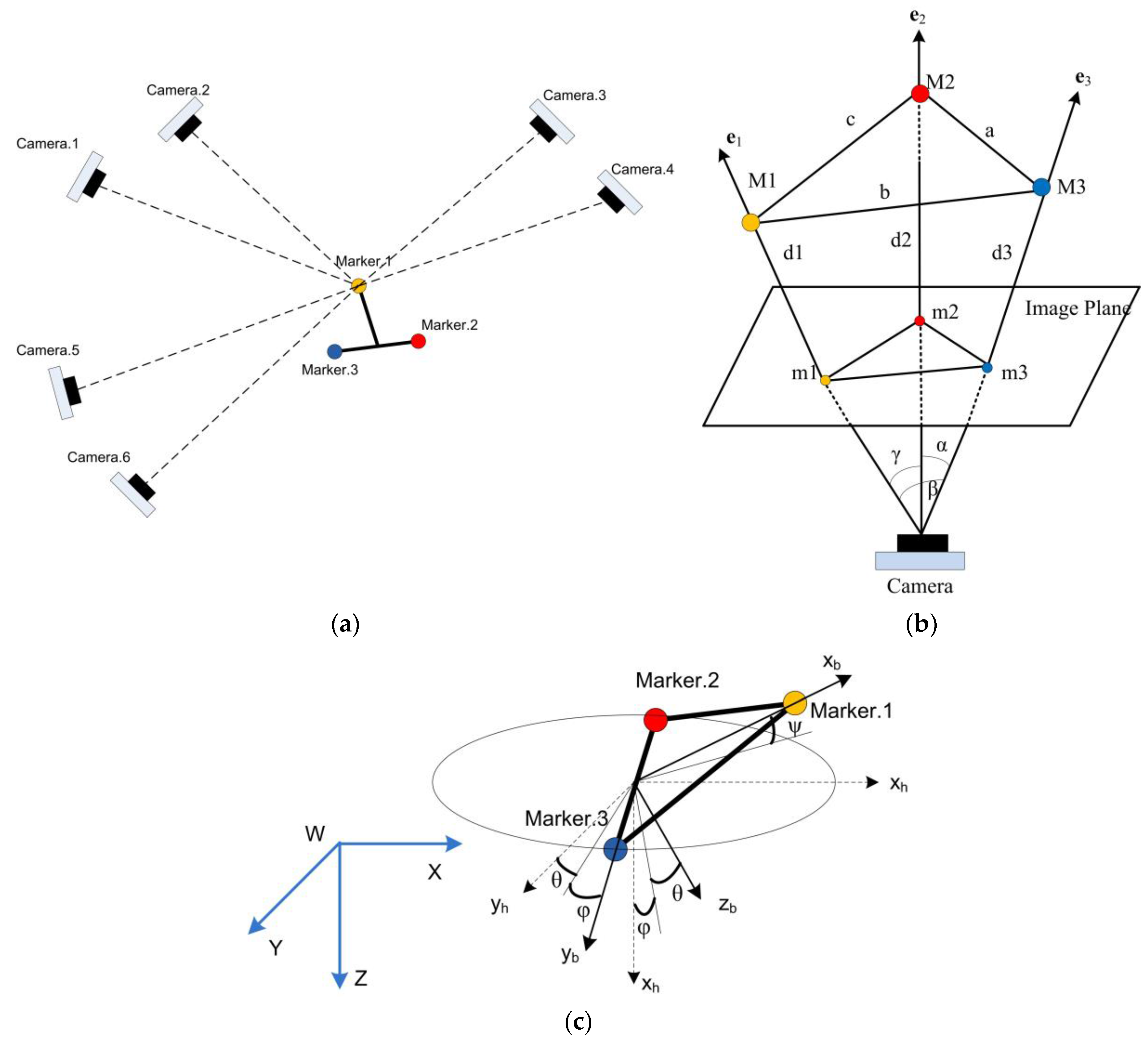

3. Description of the Visual Measurement System

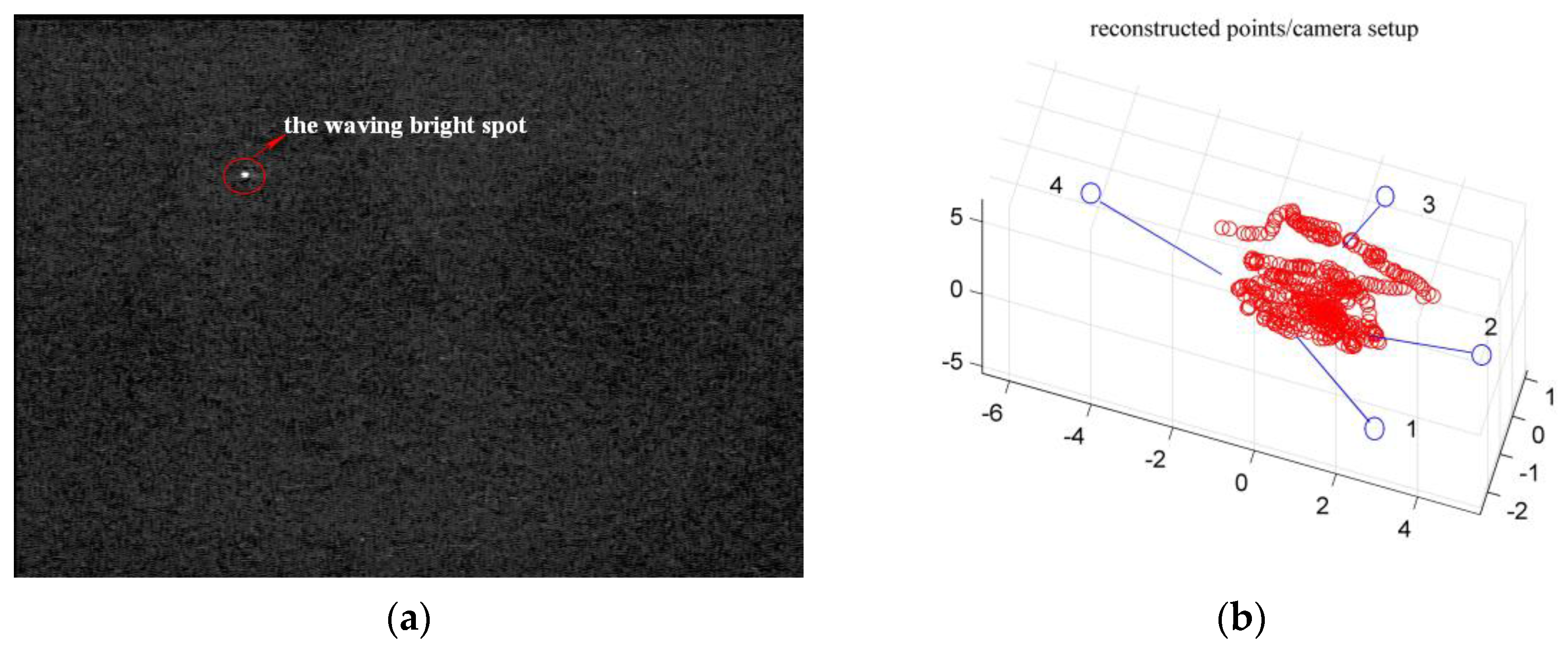

3.1. Multi-Camera System Calibration

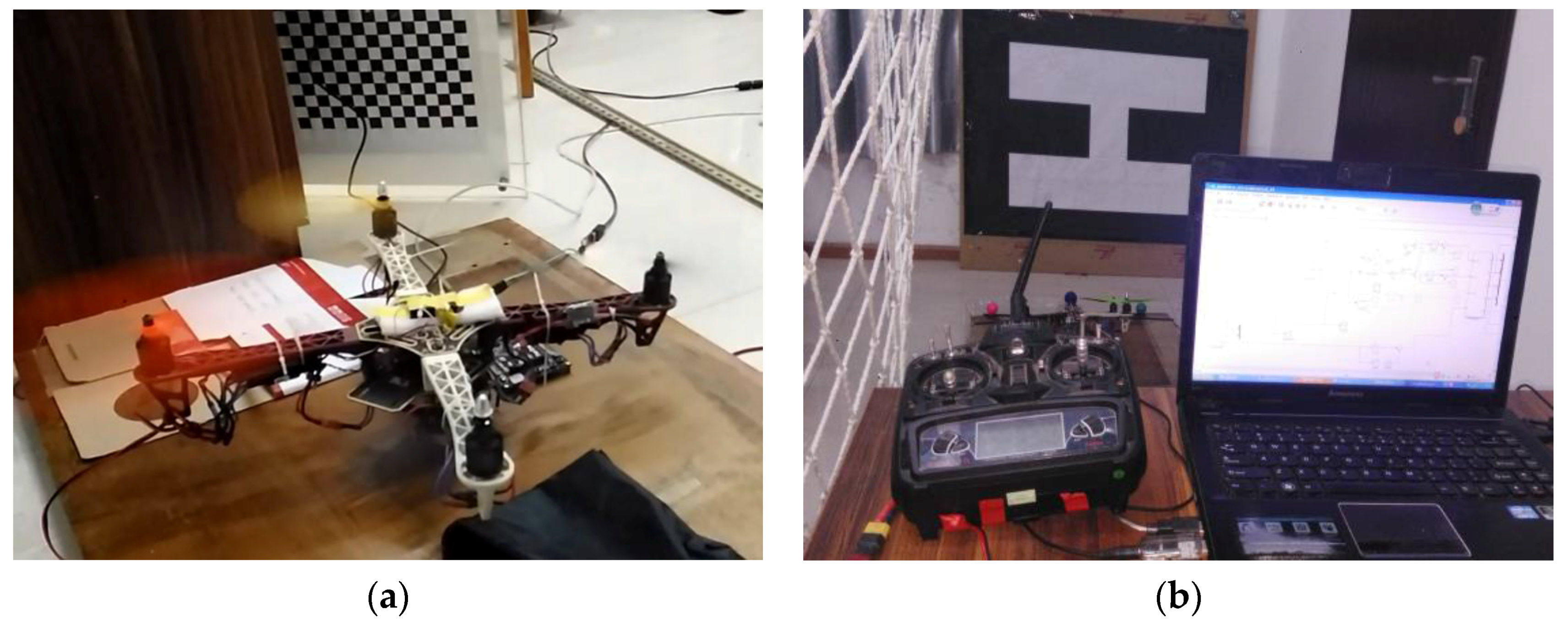

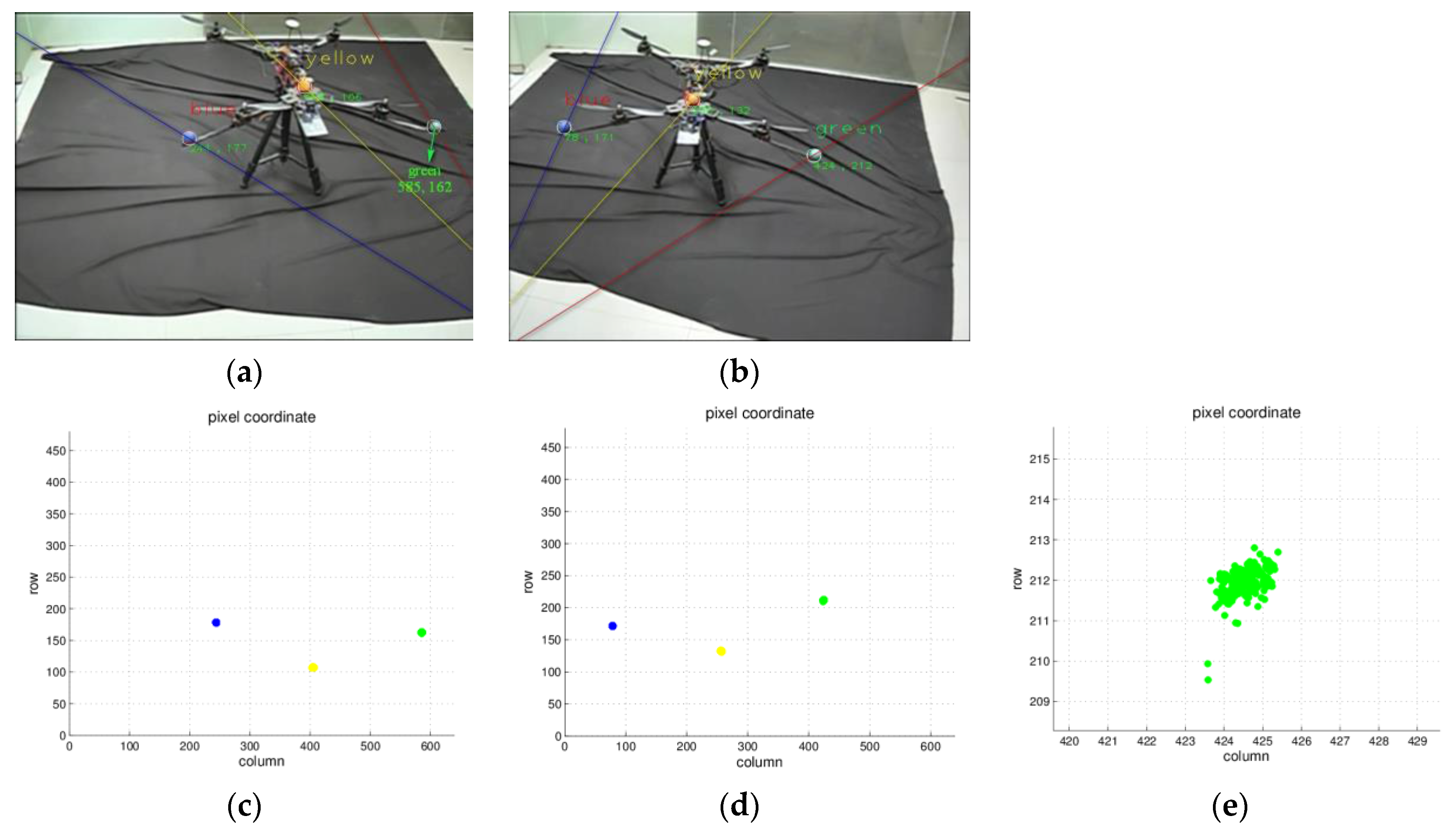

3.2. MAV Design and Detection

4. Pose Estimation

4.1. Marker Location and MAV Pose Computation

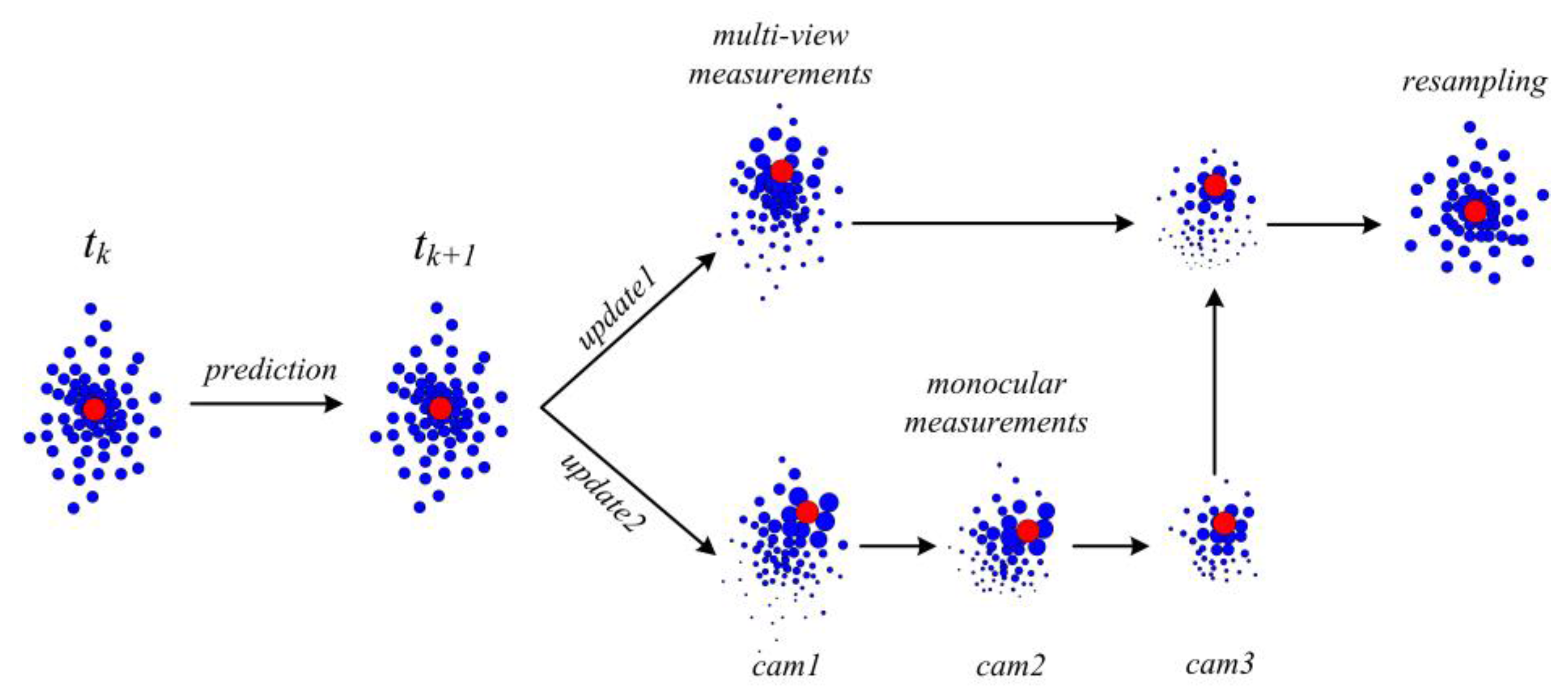

4.2. Pose Estimation

5. Experiments and Analyses

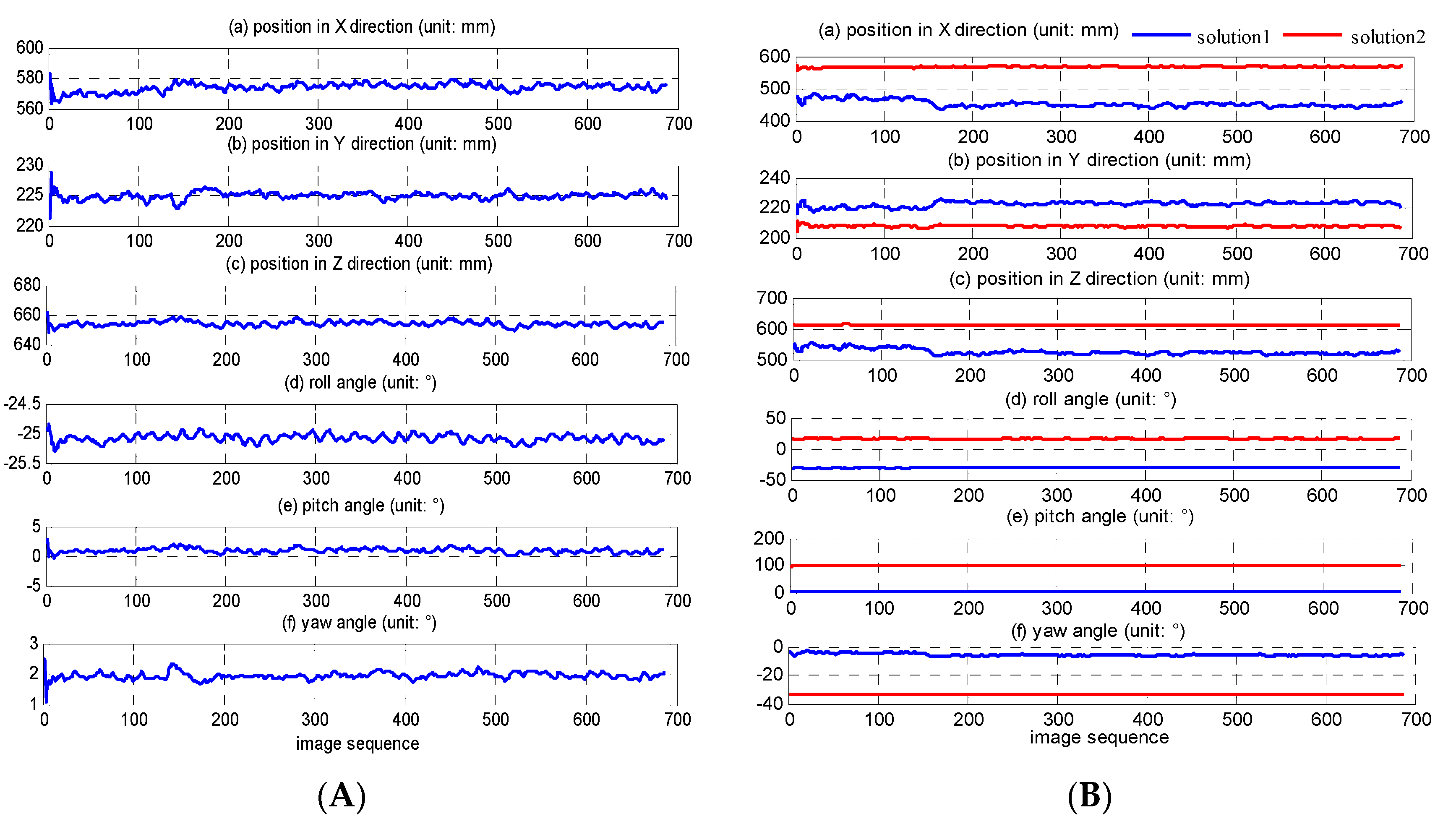

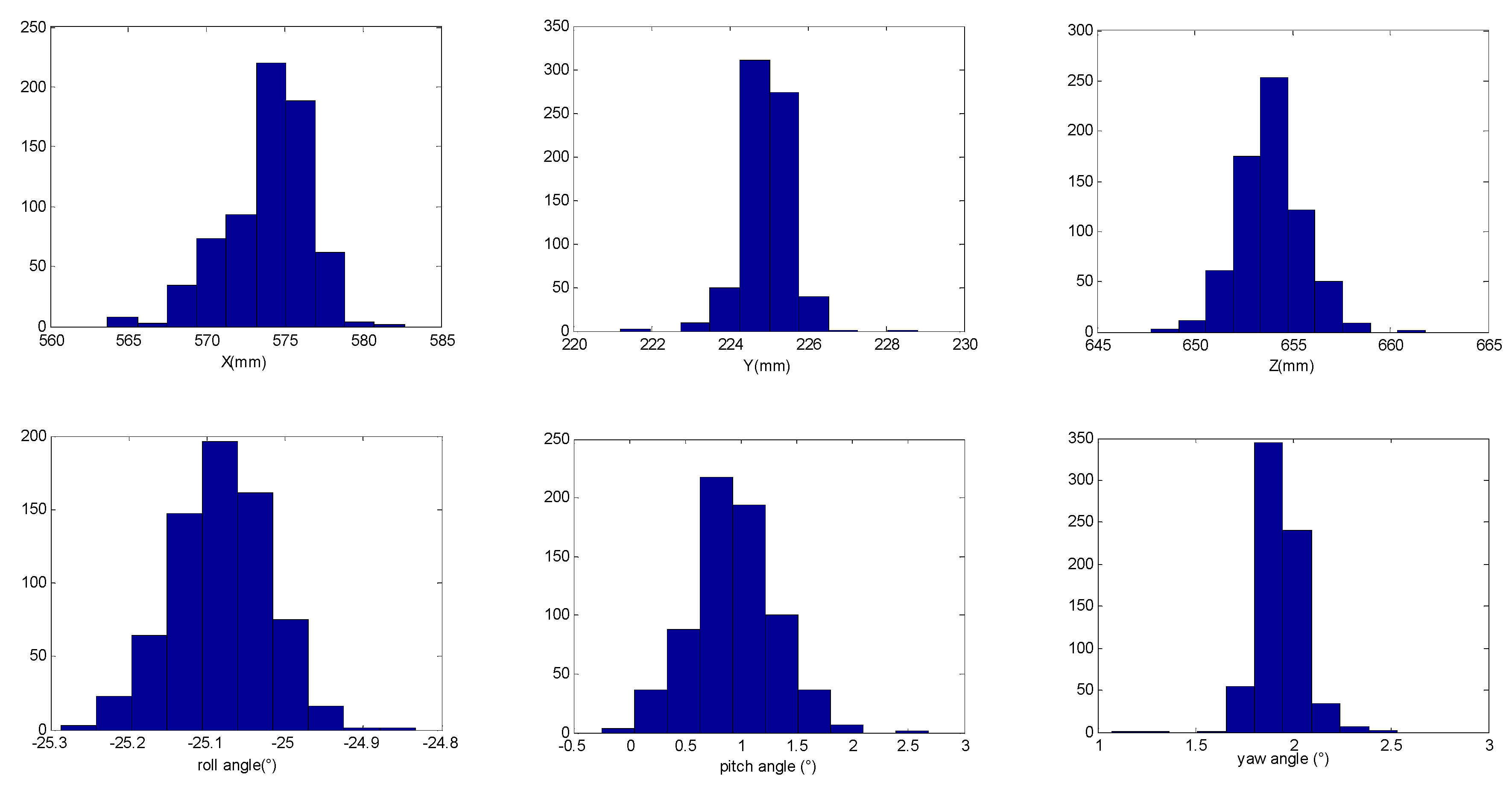

5.1. Pose Computation Results

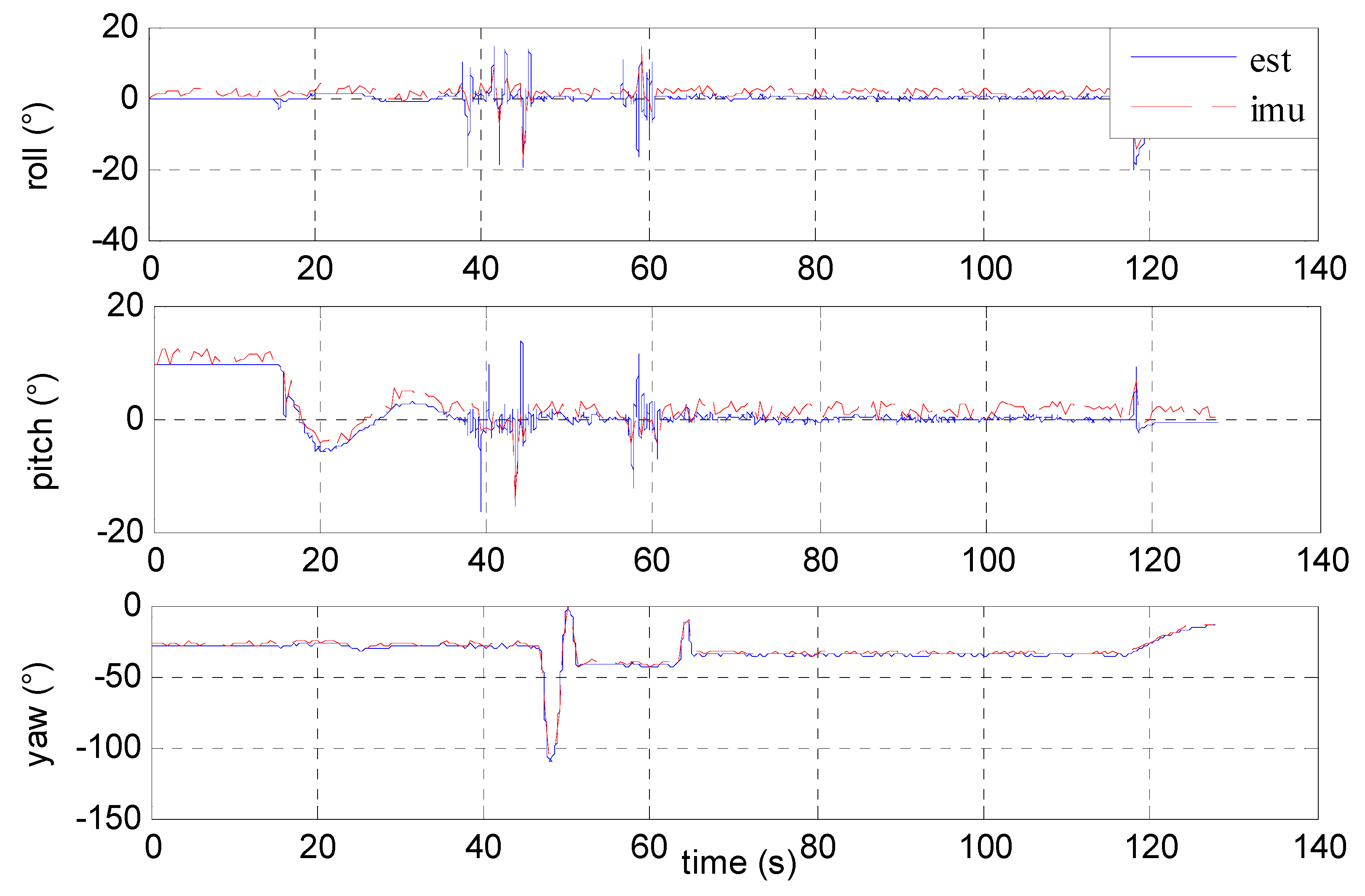

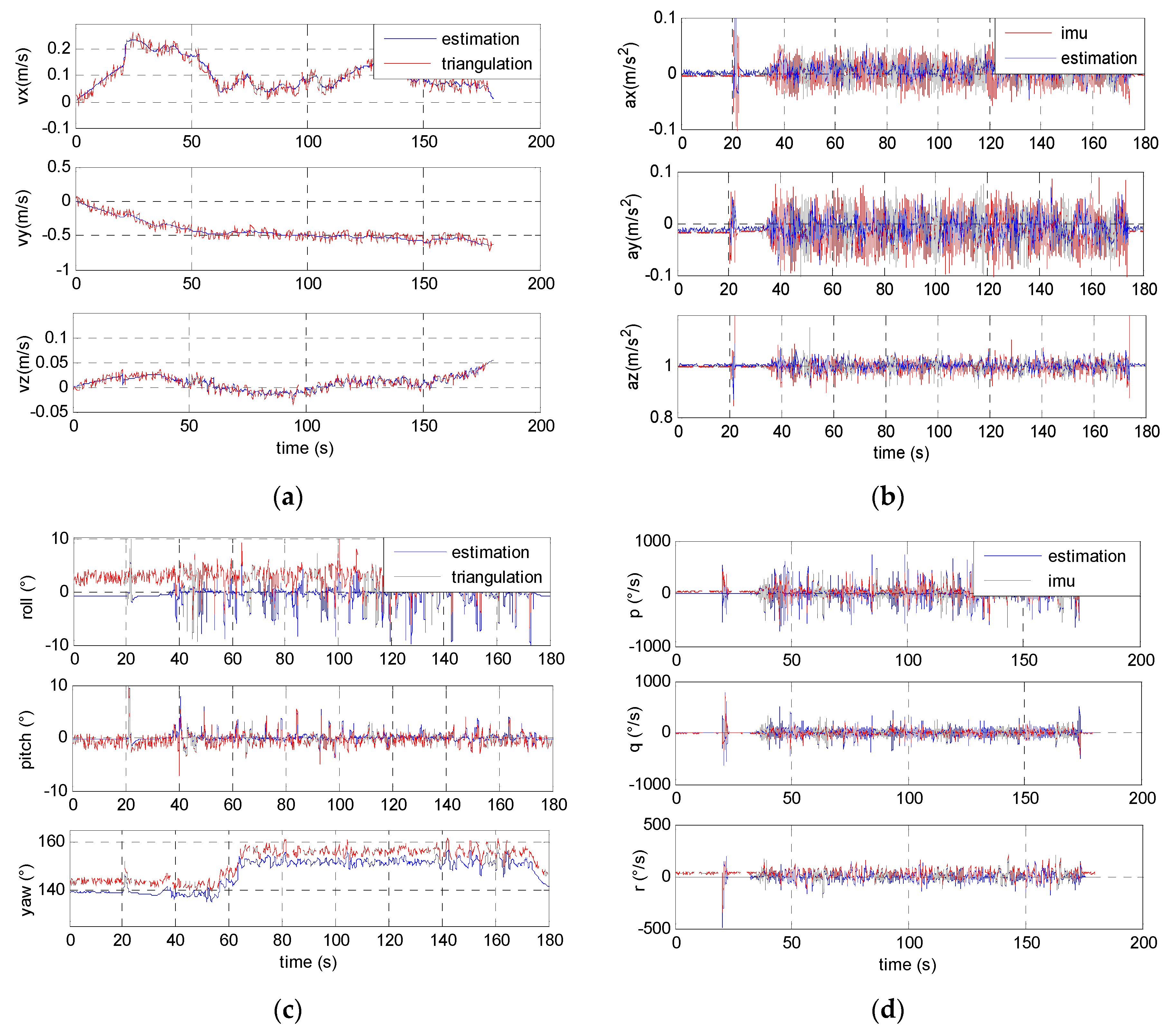

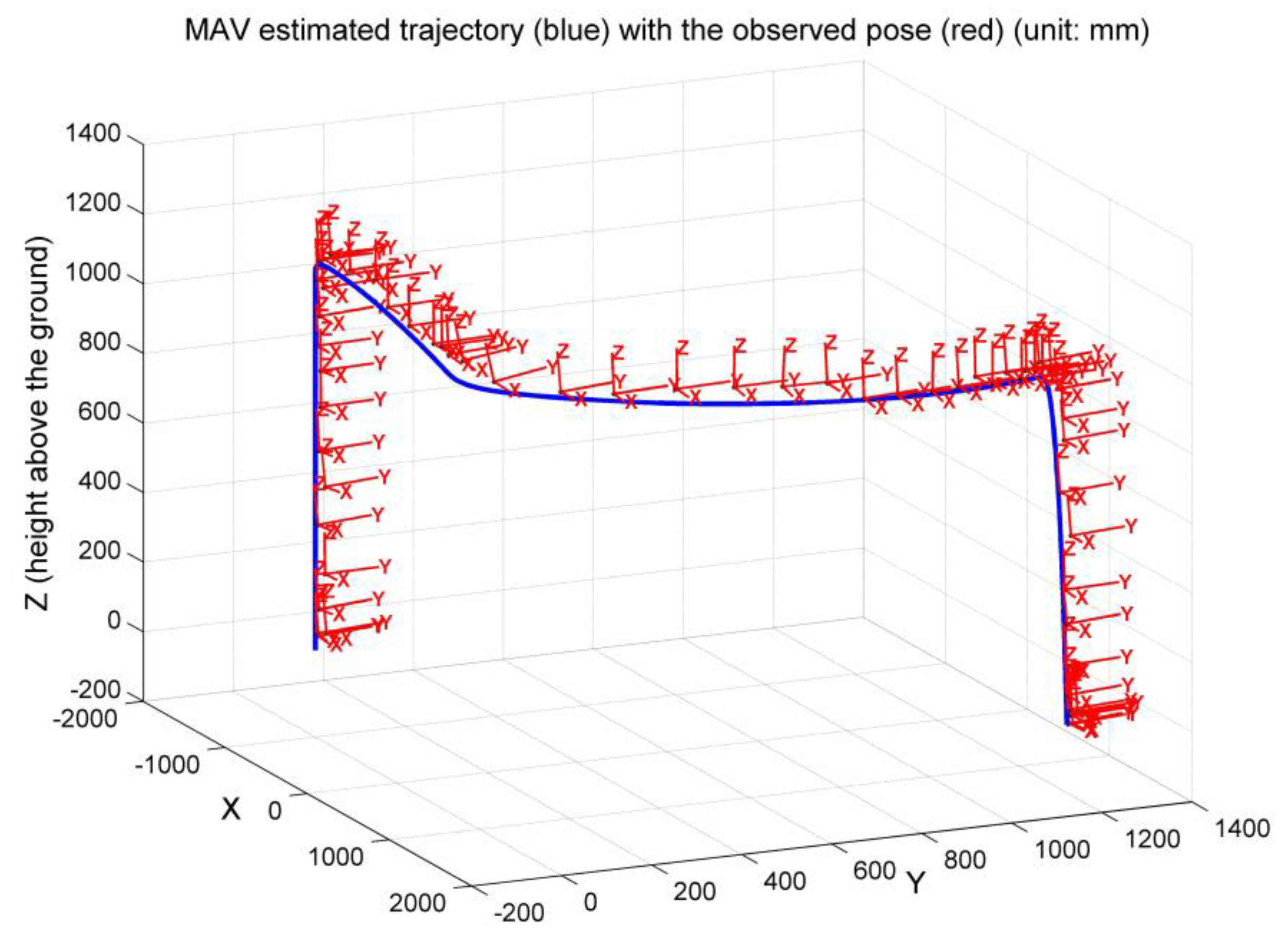

5.2. MAV Pose Estimation

6. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Yuan, H.; Xiao, C.; Xiu, S.; Wen, Y.; Zhou, C.; Li, Q. A New Combined Vision Technique for Micro Aerial Vehicle Pose Estimation. Robotics 2017, 6, 6. https://doi.org/10.3390/robotics6020006