1. Introduction

A signed distance field (SDF), sometimes referred to as a distance function, is an implicit surface representation that embeds geometry into a scalar field whose defining property is that its value represents the distance to the nearest surface of the embedded geometry. Additionally, the field is positive outside the geometry, i.e., in free space, and negative inside. SDFs have been extensively applied to, e.g., speeding up image-alignment [1] and raycasting [2] operations as well as collision detection [3], motion planning [4] and articulated-body motion tracking [5]. The truncated SDF [6] (TSDF), which is the focus of the present work, side-steps some of the difficulties that arise when fields are computed and updated based on incomplete information. This has proved useful in applications of particular relevance to the field of robotics research: accurate scene reconstruction ([7,8,9]) as well as rigid-body pose estimation ([10,11]).

The demonstrated practicality of distance fields and other voxel-based representations such as occupancy grids [12] and the direct applicability of a vast arsenal of image processing methods to such representations make them a compelling research topic. However, a major drawback in such representations is the large memory requirement for storage, which severely limits their applicability for large-scale environments. For example, a space measuring m mapped with voxels of 2 cm size requires at least 800 MB at 32 bits per voxel.
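The memory figure above can be checked with a short calculation. At 32 bits (4 bytes) per voxel, 800 MB holds 2 × 10^8 voxels, and at 2 cm per side each voxel covers 8 × 10^-6 m³, so the figure corresponds to a total volume of 1600 m³ (taking 1 MB as 10^6 bytes). The sketch below is illustrative only: the function name and the example extents are hypothetical, chosen so that the volume comes to 1600 m³.

```python
def dense_grid_bytes(extent_m, voxel_size_m, bits_per_voxel=32):
    """Memory needed by a dense voxel grid covering a box of the given extent."""
    voxels = 1
    for side in extent_m:
        # Number of voxels along this axis (rounded to absorb float error).
        voxels *= round(side / voxel_size_m)
    return voxels * bits_per_voxel // 8


# Hypothetical extents, chosen only so that the volume is 1600 m^3.
example_bytes = dense_grid_bytes((10.0, 10.0, 16.0), voxel_size_m=0.02)
print(example_bytes / 1e6, "MB")
```

Halving the voxel size multiplies the requirement by eight, which is why dense grids scale poorly to large environments.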

Mitigating strategies, such as cyclic buffers ([8,9]), octrees ([13,14]), and key-block swapping [15], have been proposed to limit the memory cost of using volumetric distance fields in very different ways. In the present work, we address the issue of volumetric voxel-based map compression by an alternative strategy. We propose encoding (and subsequently decoding) the TSDF in a low-dimensional feature space by projection onto a learned set of basis (eigen-) vectors derived via principal component analysis [16] (PCA) of a large data set of sample reconstructions. We also show that this compression method preserves important structures in the data while filtering out noise, allowing for more stable camera tracking to be done against the model, using the SDF Tracker [10] algorithm. We show that this method compares favorably to nonlinear methods based on auto-encoders (AE) in terms of compression, but slightly less so in terms of tracking performance.

The proposed compression strategies can be applied to scenarios in which robotic agents with limited on-board memory and computational resources need to map large spaces. The actively reconstructed TSDF need only be a small fraction of the size of the entire environment and be allowed to move. The regions that are no longer observed can be efficiently compressed into a low-dimensional feature space and re-observed regions can be decompressed back into the original TSDF representation. Although the compression is lossy, most of the lost content lies in the higher-frequency domain, which we show to have positive side effects in terms of noise removal. When a robot re-observes previously explored regions of a compressed map, the low-dimensional feature representation may serve as a descriptor, allowing the robot to decide, while still in descriptor space, to selectively decompress regions of the map that may be of particular interest. A proof of concept for this scenario and tracking performance evaluations on lossily reconstructed maps are presented in Section 5.

The remainder of the paper is organized as follows. An overview of related work is given in Section 2. In Section 3, we formalize the definition of TSDFs and present a brief introduction to the topics of PCA and AE networks. In Section 4, we elaborate on the training data used, followed by a description of our evaluation methodology. Section 5 contains experimental results, Section 6 presents our conclusions, and Section 7 presents some possible extensions to the present work.

2. Related Work

Our work is perhaps most closely related to sparse coded surface models [17] which use K-SVD [18] to reduce the dimensionality of textured surface patches. K-SVD is an algorithm that generalizes k-means clustering to learn a sparse dictionary of code words that can be linearly combined to reconstruct data. Another recent contribution in this category is the Active Patch Model for 2D images [19]. Active patches consist of a dictionary of data patches in input space that can be warped to fit new data. A low-dimensional representation is derived by optimizing the selection of patches and pre-defined warps that best use the patches to reconstruct the input. The operation on surface patches instead of volumetric image data is more efficient for compression for smooth surfaces, but may require an unbounded number of patches for arbitrarily complex geometry. As an analogy, our work can be thought of as an application of eigenfaces [20] to the problem of 3D shape compression and low-level scene understanding. Operating directly on a volumetric representation, as we propose, has the advantage of a constant compression ratio per unit volume, regardless of the surface complexity, as well as avoiding the problem of estimating the optimal placement of patches. The direct compression of the TSDF also permits the proposed method to be integrated into several popular algorithms that rely on this representation, with minimal overhead.

There are a number of data-compression algorithms designed for directly compressing volumetric data. Among these, we find video and volumetric image compression ([21,22]), including work dealing with distance fields specifically [23]. Although these methods produce high-quality compression results, they typically require many sequential operations and complex extrapolation and/or interpolation schemes. A side effect of this is that these compressed representations may require information far from the location that is to be decoded. They also do not generate a mapping to a feature space wherein similar inputs map to similar features, so possible uses as descriptors are limited at best.

4. Methodology

4.1. Training Data

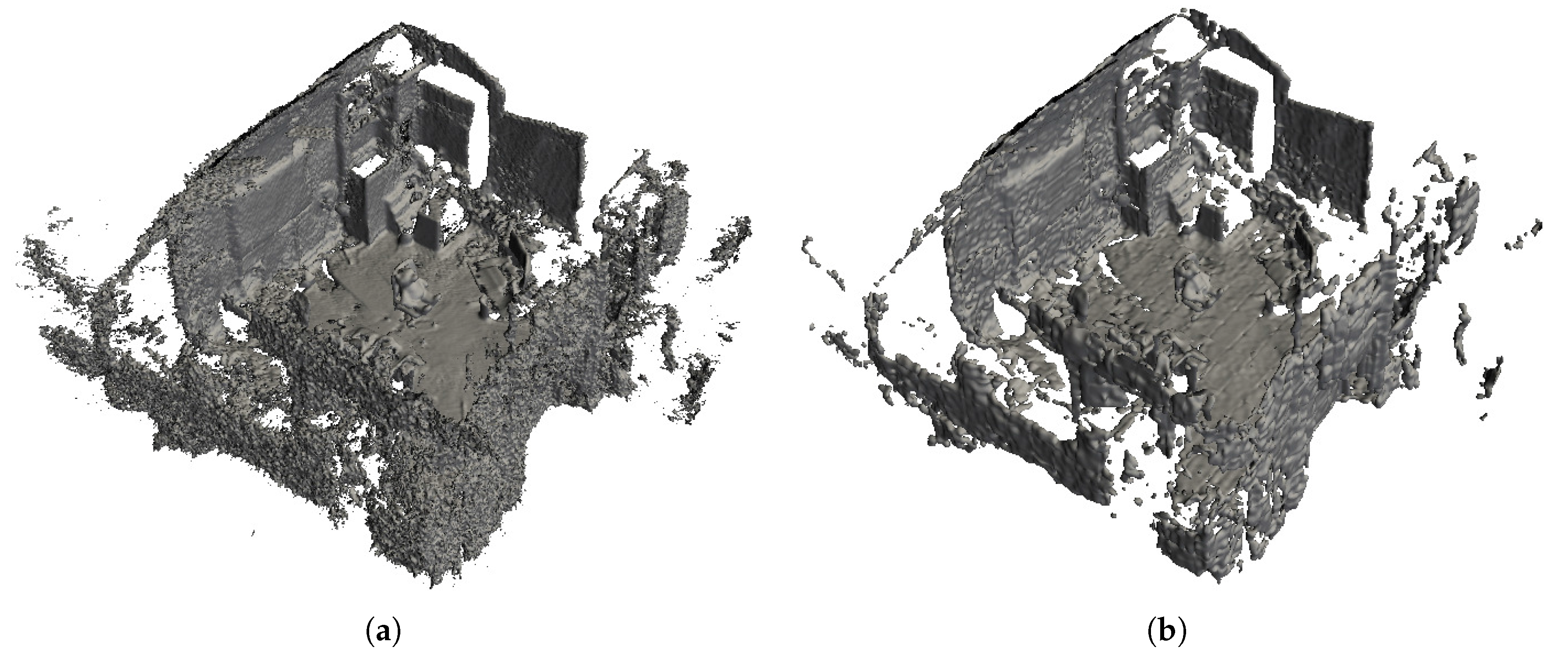

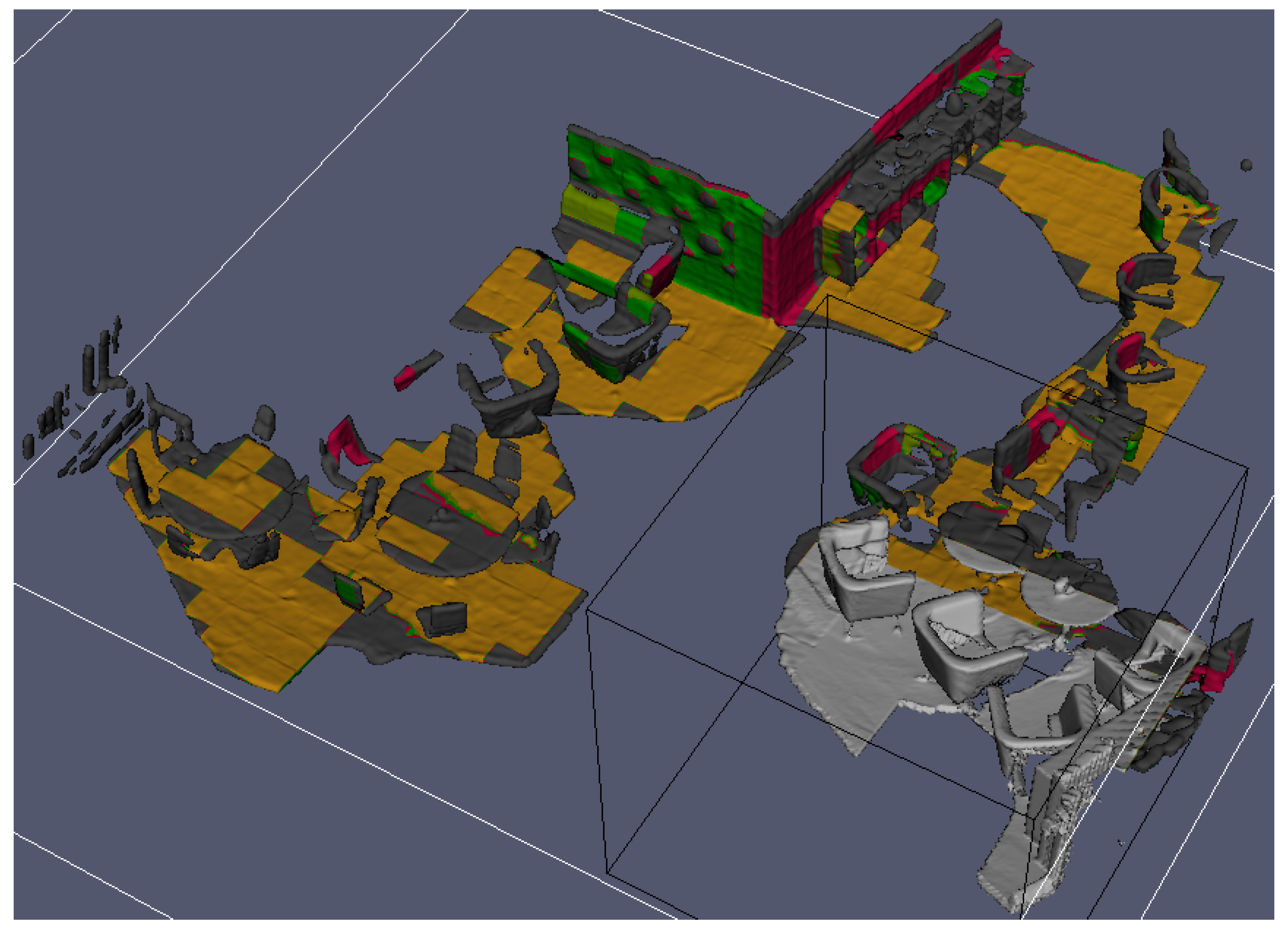

The data set used for training consists of sub-volumes sampled from 3D reconstructions of real-world industrial and office environments c.f.

Figure 1. These reconstructions are obtained by fusing sequences of depth images into a TSDF as described in [

6] with truncation limits set to

and

. Camera pose estimates were produced by the SDF Tracker algorithm (though any method with low drift would do just as well). The real-world data are augmented by synthetic samples of TSDFs, procedurally generated using

libsdf [

26], an open-source C++ library that implements simple implicit geometric primitives (as described in [

2,

27]). Some examples from our synthetic data set can be seen in

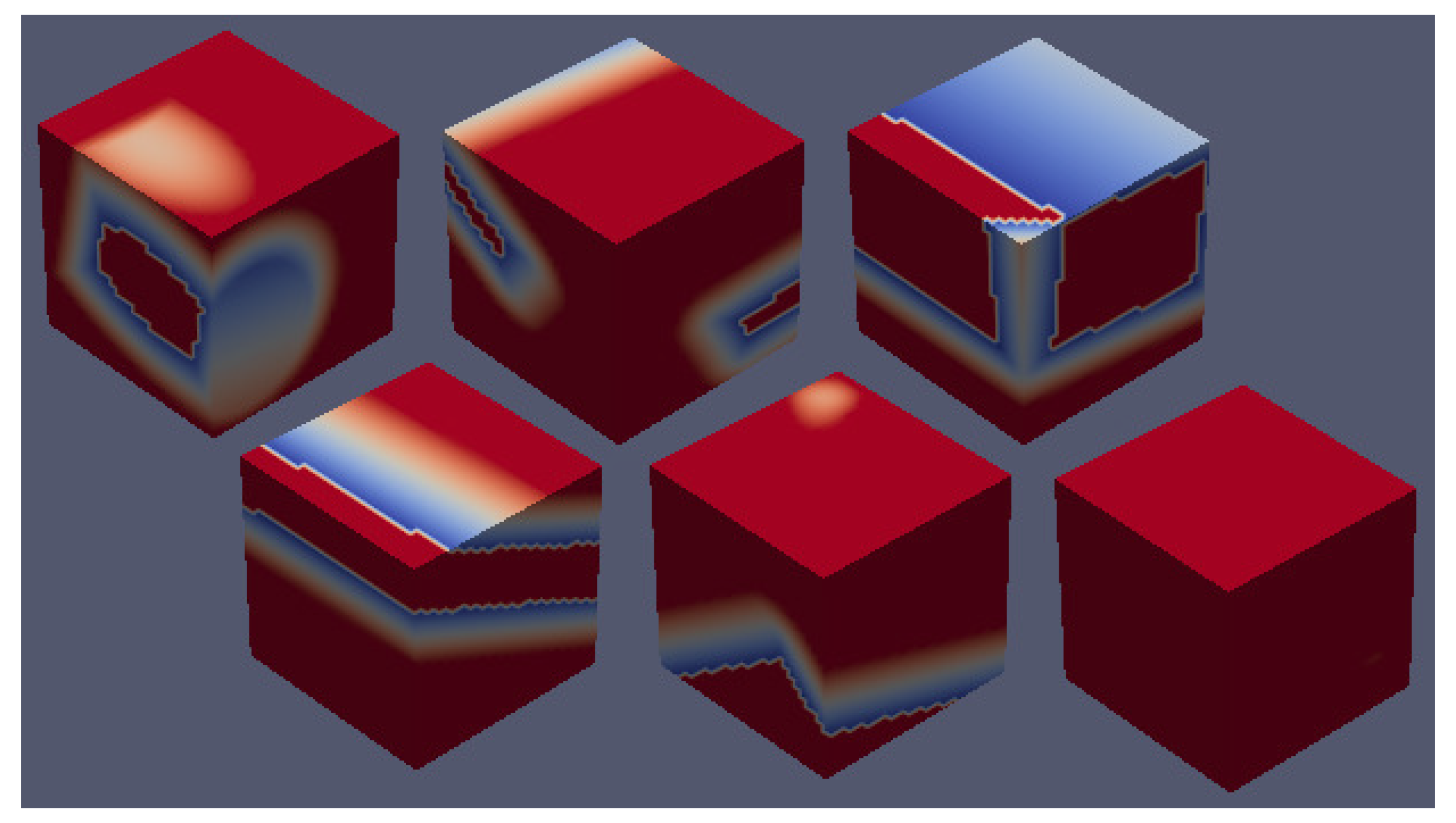

Figure 2.

Sub-volumes were sampled from the real-world reconstructions at every eighth voxel along each spatial dimension, and the indexing order along each axis was permuted for every sample to generate five additional reflections at each location. Distance values are then mapped from the interval to and saved. Furthermore, to avoid a disproportionate number of samples of empty space, sub-volumes for which the mean sum of normalized () distances is below are discarded, and a small proportion of empty samples is explicitly included instead.

The procedurally generated shapes were sampled in the same manner as the real-world data. They were generated by randomly picking a sequence of randomly parametrized and oriented shapes from several shape categories representing fragments of flat surfaces, concave edges, convex edges, concave corners, convex corners, rounded surfaces, and rounded edges. For non-oriented surfaces, convexity and concavity do not matter, since one is representable by a rotation of the other, but they need to be represented as separate cases here, since the sign is inverted in the TSDF. The distances are truncated and converted to the same intervals as with the real data. The use of synthetic data allows generating training examples in a vast number of poses, with a greater degree of geometric variation than would be feasible to collect manually through scene reconstructions alone.
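The sampling scheme described above can be sketched as follows. This is an illustration under stated assumptions, not the implementation used for the experiments: the block side of 16 voxels is taken from the 4096-voxel blocks mentioned in Section 5.4, and the "five additional" copies per location are interpreted here as the five non-identity orderings of the three axes. The function name `sample_subvolumes` and the random test volume are hypothetical, and the empty-space filter is omitted.

```python
from itertools import permutations

import numpy as np


def sample_subvolumes(tsdf, block=16, stride=8):
    """Yield block^3 sub-volumes at every `stride` voxels, plus axis permutations."""
    nx, ny, nz = tsdf.shape
    for x in range(0, nx - block + 1, stride):
        for y in range(0, ny - block + 1, stride):
            for z in range(0, nz - block + 1, stride):
                sub = tsdf[x:x + block, y:y + block, z:z + block]
                # Six axis orders: the identity plus five re-indexed copies.
                for axes in permutations(range(3)):
                    yield np.transpose(sub, axes).copy()


# Random stand-in for a reconstructed TSDF volume (assumption for illustration).
volume = np.random.default_rng(0).uniform(-1.0, 1.0, size=(32, 32, 32))
samples = list(sample_subvolumes(volume))
print(len(samples), samples[0].shape)
```

With a stride of half the block size, neighboring samples overlap, so the same surface fragment appears at several offsets within a block.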

The sub-volumes, when unrolled, define an input of a dimensionality equal to (i.e., ), and the combined number of samples number . Our data set is then .

4.2. Evaluation Methodology

Having defined methods for obtaining features, either using PCA or ANN, what is a good balance between compression ratio and map quality in the context of robotics? We explore this question by means of two quality measures: reconstruction fidelity and ego-motion estimation performance. To aid in our analysis, we use a publicly available RGB-D (color plus depth) data set [28] with ground truth pose estimates provided by an independent external camera-tracking system. Using the provided ground truth poses, we generate a map by fusing the depth images into a TSDF representation. This produces a ground truth map. We chose teddy, room, desk, desk2, 360 and plant from the freiburg-1 collection for evaluation, as these are representative of real-world challenges that arise in simultaneous localization and mapping (SLAM) and visual odometry, including motion blur, sensor noise and occasional lack of geometric structure needed for tracking. We do not use the RGB components of the data for any purpose in this work. We perform two types of experiments, designed to test the reconstruction fidelity and the reliability of tracking algorithms operating on the reconstructed volume.

As a measure for reconstruction error, we compute the mean squared errors of the decoded distance fields relative to the input. This metric is relevant to path planning, manipulation and object detection tasks since it indirectly relates to the fidelity of surface locations. For each data set, using each encoder/decoder, we compute a lossy version of the original data and report the average and standard deviation across all data sets.
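A minimal sketch of this error measure is given below. The function names and the numeric values are made up for illustration, and the use of the population standard deviation across data sets is an assumption, since the text does not say which estimator was used.

```python
from statistics import mean, pstdev


def mean_squared_error(original, decoded):
    """MSE between two equally long sequences of distance values."""
    if len(original) != len(decoded):
        raise ValueError("fields must have the same number of voxels")
    return sum((a - b) ** 2 for a, b in zip(original, decoded)) / len(original)


def summarize(per_dataset_mse):
    """Average and standard deviation of the per-data-set errors."""
    return mean(per_dataset_mse), pstdev(per_dataset_mse)


# Made-up values for illustration only.
errors = [
    mean_squared_error([0.0, 0.5, 1.0], [0.0, 0.25, 1.0]),
    mean_squared_error([1.0, -1.0], [0.5, -0.5]),
]
avg, std = summarize(errors)
```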

Ego-motion estimation performance is measured by the absolute trajectory error (ATE) [28]. The absolute trajectory error is the integrated distance between all pose estimates relative to the ground truth trajectory. The evaluations are performed by loading a complete TSDF map into memory and setting the initial pose according to ground truth. Then, as depth images are loaded from the RGB-D data set, we estimate the camera transformation that minimizes the point to model distance for each new frame. The evaluation was performed on all the data sets, processed through each compression and subsequent decompression method. As a baseline, we also included the original map, processed with a Gaussian blur kernel of size 9 × 9 × 9 voxels and a parameter of .
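The trajectory error can be summarized along the following lines. This sketch assumes that the estimated and ground truth positions are already associated frame by frame and expressed in a common coordinate frame; any alignment step performed by the benchmark tools is omitted. The function names and the toy trajectory are hypothetical.

```python
import math
from statistics import mean, median


def trajectory_errors(estimated, ground_truth):
    """Per-frame Euclidean distance between matched camera positions."""
    return [math.dist(p, q) for p, q in zip(estimated, ground_truth)]


def ate_summary(estimated, ground_truth):
    """Root-mean-square, mean and median of the per-frame position errors."""
    errors = trajectory_errors(estimated, ground_truth)
    rmse = math.sqrt(mean(e * e for e in errors))
    return {"rmse": rmse, "mean": mean(errors), "median": median(errors)}


# Toy trajectory: three accurate frames and one gross misalignment.
truth = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0), (3.0, 0.0, 0.0)]
estimate = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0), (3.0, 0.0, 4.0)]
summary = ate_summary(estimate, truth)
```

In the toy example a single outlier raises the mean and RMSE while the median stays at zero, which is the pattern to look for when tracking is mostly accurate but occasionally fails.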

Building on the experimental results, we are able to demonstrate two applications: selective map decompression based on descriptor matching and large-scale mapping in a dense TSDF space, by fast on-the-fly compression and decompression.

5. Experimental Results

The PCA basis was produced using the dimensionality reduction tools from the scikit-learn [29] library. Autoencoders were trained with pylearn2 [30], using batch gradient descent with the change in reconstruction error on a validation data set as a stopping criterion. The data set was split into 400 batches containing 500 samples each, of which 300 batches were used for training, 50 for testing, and 50 for validation. The networks use sigmoid activation units and contain nodes, with d representing the number of dimensions of the descriptor.
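For readers unfamiliar with PCA-based encoding, the projection and reconstruction steps can be sketched with plain NumPy. This is a stand-in for illustration, not the scikit-learn pipeline used in the experiments; the function names and the synthetic low-rank data are assumptions. Rows of the basis are principal axes ordered by decreasing variance, so a descriptor is the vector of projections of a mean-centered block onto those axes.

```python
import numpy as np


def fit_pca(samples, n_components):
    """Return the mean and the leading principal axes of row-vector samples."""
    mean = samples.mean(axis=0)
    # Rows of vt are the principal axes, ordered by decreasing variance.
    _, _, vt = np.linalg.svd(samples - mean, full_matrices=False)
    return mean, vt[:n_components]


def encode(block, mean, basis):
    """Project an unrolled block onto the basis to get its descriptor."""
    return basis @ (block - mean)


def decode(descriptor, mean, basis):
    """Reconstruct an approximate block from its descriptor."""
    return basis.T @ descriptor + mean


rng = np.random.default_rng(0)
# Synthetic stand-in data: 200 samples of dimension 64 lying in a 5-D subspace.
latent = rng.normal(size=(200, 5))
mixing = rng.normal(size=(5, 64))
data = latent @ mixing

mean, basis = fit_pca(data, n_components=5)
code = encode(data[0], mean, basis)
restored = decode(code, mean, basis)
```

Because the synthetic data has rank five, five components reconstruct it to within floating-point error; real TSDF blocks are not exactly low-rank, so reconstruction there is lossy.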

The runtime implementation for the encoder/decoder architectures was done using the cuBLAS [31] and Thrust [32] libraries, enabling matrix-vector and array computation on the graphics processing unit (GPU).

5.1. Reconstruction Error

We report the average reconstruction error over all non-empty blocks in all data sets and the standard deviation among data sets in Table 1. The reconstruction errors obtained strongly suggest that increasing the size of the codes for individual encoders yields better performance, though with diminishing returns. Several attempts were made to outperform the PCA approach using Artificial Neural Networks (ANN) trained as auto-encoders, but these were generally unsuccessful. PCA-based encoders, using 32, 64 and 128 components, produce better results than ANN encoders in all of our experiments.

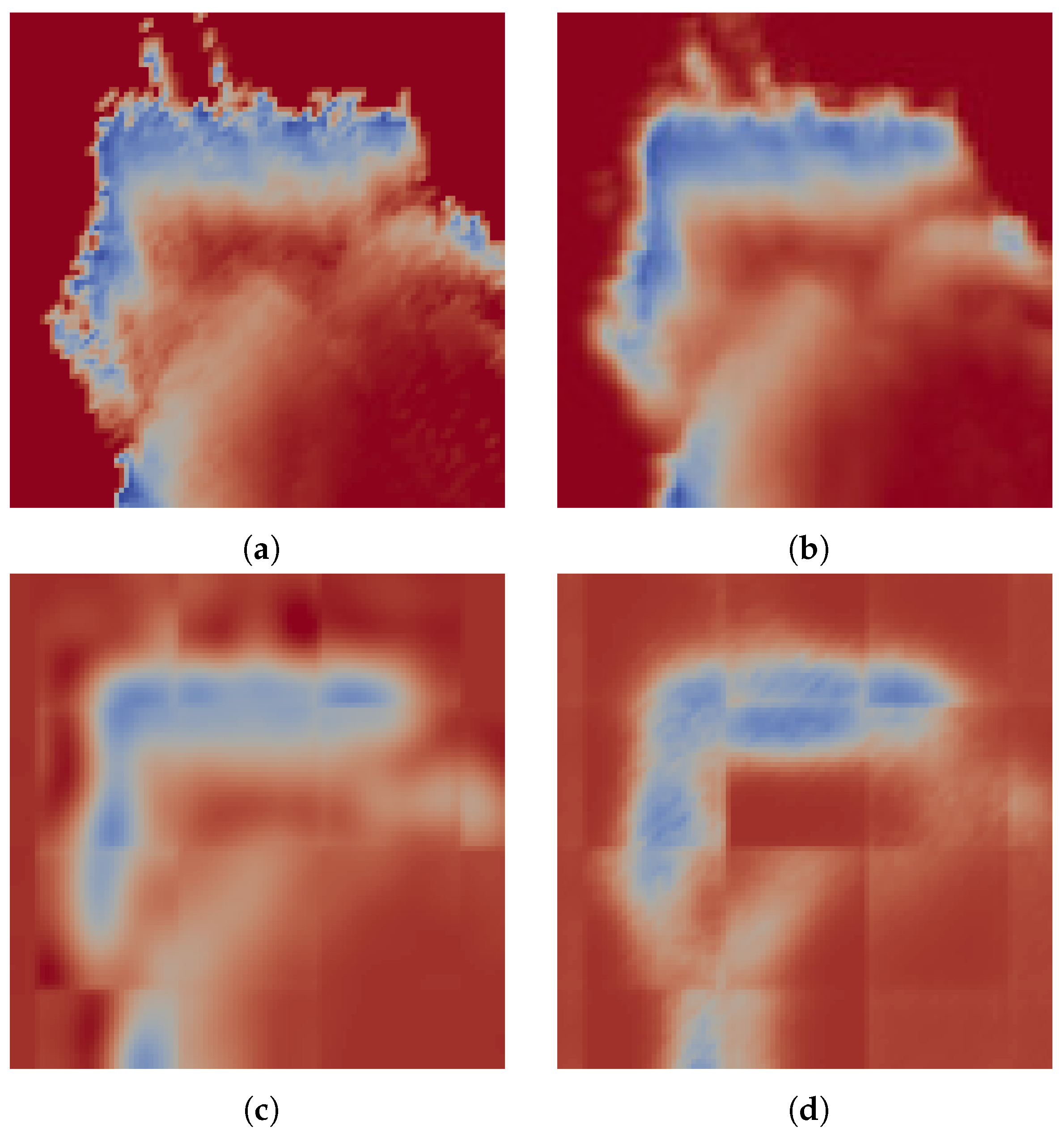

The best overall reconstruction performance is given by the PCA encoder/decoder, using 128 components. We illustrate this with an image from the teddy data set in Figure 3. Note that the decoded data set is smoother, so, in a sense, the measured discrepancy is partly related to a qualitative improvement.

5.2. Ego-Motion Estimation

The ego-motion estimation, performed by the SDF Tracker algorithm, uses the TSDF as a cost function to which subsequent 3D points are aligned. This requires that the gradient of the TSDF be of correct magnitude and point in the right direction. To get a good alignment, the minimum absolute distance should coincide with the actual location of the surface.
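The gradient requirement can be illustrated on an analytic distance field. The sketch below is not part of the SDF Tracker; it uses a hypothetical sphere SDF and a central-difference gradient to show that, for a true distance field, the gradient has unit magnitude and that stepping back along it by the field value lands on the surface.

```python
import math


def sphere_sdf(p, radius=1.0):
    """Signed distance to a sphere centered at the origin (negative inside)."""
    return math.sqrt(sum(c * c for c in p)) - radius


def numerical_gradient(field, p, h=1e-4):
    """Central-difference gradient of a scalar field at point p."""
    grad = []
    for axis in range(len(p)):
        forward = list(p)
        backward = list(p)
        forward[axis] += h
        backward[axis] -= h
        grad.append((field(forward) - field(backward)) / (2.0 * h))
    return grad


point = (2.0, 0.0, 0.0)
gradient = numerical_gradient(sphere_sdf, point)
magnitude = math.sqrt(sum(g * g for g in gradient))
# Stepping back along the gradient by the field value lands on the surface.
projected = [c - sphere_sdf(point) * g for c, g in zip(point, gradient)]
```

If a lossy decoder distorts the field so that the gradient is too short, too long, or points the wrong way, the same step over- or undershoots the surface, which is how reconstruction artifacts can degrade alignment.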

In spite of being given challenging camera trajectories, performance using the decoded maps is on average better than with the unaltered map. When the tracker keeps up with the camera motion, we have observed that the performance resulting from the use of each map is in the order of their respective reconstruction errors. In this case, the closer the surface is to the ground truth model, the better. However, tracking may fail for various reasons, e.g., when there is little overlap between successive frames, when the model or depth image contains noise or when there is not enough geometric variation to properly constrain the pose estimation. In some of these cases, the maps that offer simplified approximations to the original distance field fare better. The robustness in tracking is most likely due to the denoising effect of the encoding, as evidenced by the performance on the Gaussian-blurred map. Of the encoded maps, we see that the AE compression results in better pose estimation. In Figure 4, we see a slice through a volume color-coded by distance. Here, we note that, even though the PCA-based map is more similar to the original, on the left side of the image, it is evident that the field is not monotonically increasing away from the surface. Such artifacts cause the field gradient to point in the wrong direction, possibly contributing to failure in finding the correct alignment. The large difference between the median and mean values for the pose estimation errors is indicative of mostly accurate pose estimations, with occasional gross misalignments.

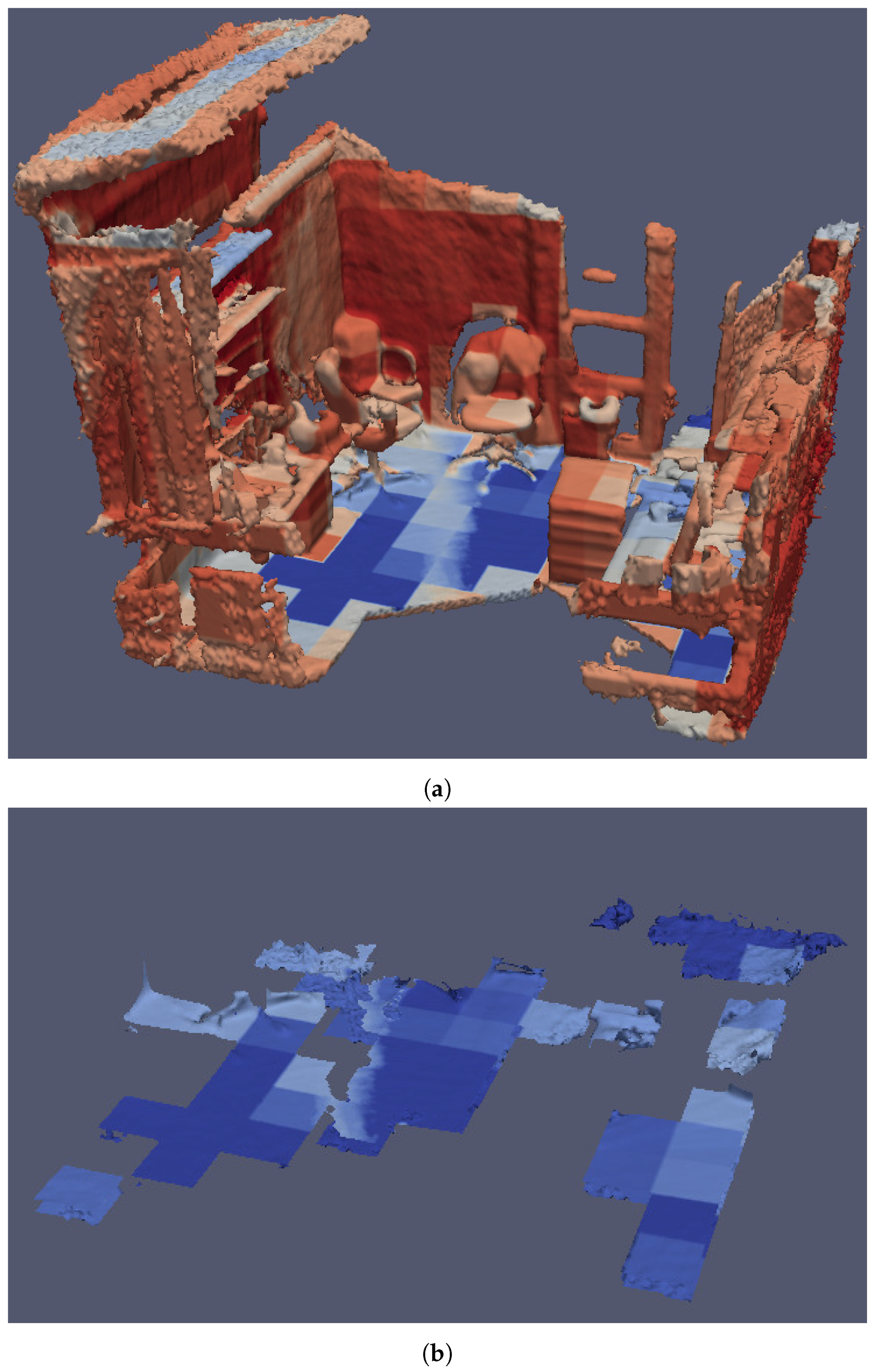

5.3. Selective Feature-Based Map Expansion

Although the descriptors we obtain are clearly not invariant to affine transformations (if they were, the decompression would not reproduce the field in its correct location/orientation), we can still create descriptor-based models for geometries of particular interest by sampling their TSDFs over the range of transformations to which we want the model to be invariant. If information about the orientation of the map is known a priori, e.g., some dominant structures are axis-aligned with the voxel lattice, or dominant structures are orthogonal to each other, the models can be made even smaller. In the example illustrated in Figure 5, a descriptor-based model for floors was first created by encoding the TSDFs of horizontal planes at 15 different offsets, generating one 64-element vector each. Each descriptor in the compressed map can then be compared to this small model by the squared norm of their differences, and only those whose difference falls below a threshold need to be considered for expansion. Here, an advantage of the PCA-based encoding becomes evident: since PCA generates its linear subspace in an ordered manner, feature vectors of different dimensionality can be tested for similarity up to the number of elements of the smallest, i.e., a 32-dimensional feature descriptor can be matched against the first half of a 64-dimensional feature descriptor. This property is useful in handling multiple levels of compression, for different applications, whilst maintaining a common way to describe them.
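The matching step described above amounts to a thresholded nearest-model test. The following sketch is illustrative only: the function names, the toy descriptors, and the threshold value are hypothetical. Comparing only the shared leading elements reflects the ordering of PCA components, which lets descriptors of different lengths be matched.

```python
def squared_distance(a, b):
    """Squared Euclidean distance over the shared leading elements."""
    n = min(len(a), len(b))  # PCA components are ordered, so prefixes are comparable
    return sum((x - y) ** 2 for x, y in zip(a[:n], b[:n]))


def blocks_to_expand(compressed_map, model, threshold):
    """Return keys of blocks whose descriptor is close to any model descriptor."""
    selected = []
    for key, descriptor in compressed_map.items():
        if any(squared_distance(descriptor, m) < threshold for m in model):
            selected.append(key)
    return selected


# Toy data: one 4-element model descriptor and three stored block descriptors.
floor_model = [[1.0, 0.0, 0.0, 0.0]]
stored = {
    (0, 0, 0): [1.0, 0.1],            # shorter descriptor, close to the model
    (1, 0, 0): [-1.0, 0.0],           # far from the model
    (2, 0, 0): [0.9, 0.0, 0.0, 0.0],  # same length, close to the model
}
matches = blocks_to_expand(stored, floor_model, threshold=0.05)
```

This prefix comparison would not be meaningful for auto-encoder descriptors, whose elements carry no inherent ordering.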

5.4. Large-Scale Mapping

By extending the SDF Tracker algorithm with a moving active volume centered around the camera translation vector, for every 16-voxel increment, we can encode the voxel blocks exiting the active TSDF on the lagging end (if not empty) and decode the voxel blocks entering the active TSDF on the front end (if they contain a previously stored descriptor). This allows mapping much larger areas, since each voxel block can be reduced from its 4096 voxels to the chosen size of a descriptor, or to a token if the block is empty. Since the actual descriptor computation happens on the GPU, the performance impact on the tracking and mapping part of the algorithm is not too severe. An example of this is shown in Figure 6.
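The bookkeeping for blocks leaving and re-entering the active volume can be sketched as a small store keyed by block index. This is a hypothetical data structure for illustration, not the authors' implementation: the class name, the `EMPTY` token, and the toy encoder, decoder and emptiness test are all assumptions.

```python
EMPTY = object()  # hypothetical token stored in place of a descriptor


class CompressedBlockStore:
    """Keeps descriptors for blocks that have left the active volume."""

    def __init__(self, encode, decode, is_empty):
        self._encode = encode
        self._decode = decode
        self._is_empty = is_empty
        self._descriptors = {}

    def block_exits(self, key, voxels):
        """Called for a block leaving the active volume on the lagging end."""
        if self._is_empty(voxels):
            self._descriptors[key] = EMPTY
        else:
            self._descriptors[key] = self._encode(voxels)

    def block_enters(self, key):
        """Return decoded voxels for a re-entering block, or None if there is nothing to decode."""
        descriptor = self._descriptors.get(key)
        if descriptor is None or descriptor is EMPTY:
            return None
        return self._decode(descriptor)


# Toy stand-ins: a 4-voxel "block" compressed to its mean value.
store = CompressedBlockStore(
    encode=lambda v: [sum(v) / len(v)],
    decode=lambda d: [d[0]] * 4,
    is_empty=lambda v: all(x >= 1.0 for x in v),  # all voxels at the far truncation limit
)
store.block_exits((0, 0, 0), [1.0, 1.0, 1.0, 1.0])
store.block_exits((1, 0, 0), [0.5, -0.5, 1.0, 1.0])
```

In a real system the encoder and decoder would be the PCA or auto-encoder mappings, and a returned `None` would leave the entering block initialized as unobserved or free space.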

The tracking and mapping algorithm itself is a real-time capable system with performance that scales with the size of the volume and resolution of the depth images given as input. While it only handles volumes of approximately voxels at QVGA resolution (320 × 240 pixels) and lower at 30 Hz, it does so solely on an Intel i7-4770K 3.50 GHz CPU (Örebro, Sweden). This leaves the GPU free to perform on-the-fly compression and decompression.

Timing the execution of copying data to the GPU, encoding, decoding and copying it back to the main CPU memory results in an average between 405 and 520 µs per block of voxels on a Nvidia GTX Titan GPU (Örebro, Sweden). For an active TSDF volume of 160 × 160 × 160 voxels, shifting one voxel-block sideways would result in 100 blocks needing to be encoded, 100 blocks decoded, and memory transfers in both directions, in the worst case. Our round-trip execution timing puts this operation at approximately 41 to 52 milliseconds.

The span in timings depends on the encoding method used, with PCA-based encoding representing the lower end and ANN the upper, for descriptors of 128 elements.
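The figures in this subsection can be reproduced with simple arithmetic. The sketch below reads the per-block round-trip time as 405 to 520 microseconds, which is the reading consistent with the stated total of roughly 41 to 52 ms for 100 blocks; the function names are hypothetical.

```python
def blocks_on_face(active_side_voxels=160, block_side_voxels=16):
    """Voxel blocks in one slab of the active volume (one block thick)."""
    per_axis = active_side_voxels // block_side_voxels
    return per_axis * per_axis


def shift_time_ms(blocks, round_trip_us):
    """Worst-case time to round-trip `blocks` blocks, in milliseconds."""
    return blocks * round_trip_us / 1000.0


def compression_ratio(block_side_voxels, descriptor_size):
    """Voxels per block divided by descriptor elements."""
    return block_side_voxels ** 3 / descriptor_size


slab = blocks_on_face()
fastest = shift_time_ms(slab, 405)
slowest = shift_time_ms(slab, 520)
ratios = [compression_ratio(16, d) for d in (32, 64, 128)]
```

The ratios for 32, 64 and 128 components come out as 128:1, 64:1 and 32:1, counting elements only and ignoring any difference in bit depth between voxels and descriptor elements.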

6. Conclusions

In this paper, we presented the use of dimensionality reduction of TSDF volumes, which lie at the core of many algorithms across a wide domain of applications with close ties to robotics. We proposed PCA and ANN encoding strategies and evaluated their performance with respect to a camera tracking application and to reconstruction error.

We demonstrate that we can compress volumetric data using PCA and neural nets to small sizes (between 128:1 and 32:1) and still use them in camera tracking applications with good results. We show that PCA produces superior reconstruction results and although neural nets have inherently greater expressive power, training them is not straightforward, often resulting in lower quality reconstructions but nonetheless offering slightly better performance in ego-motion estimation applications. Finally, we have shown that this entire class of methods can be successfully applied to both compress and imbue the data with some low-level semantic meaning and suggested an application in which both of these characteristics are simultaneously desirable.