Convolutional Neural Network based Estimation of Gel-like Food Texture by a Robotic Sensing System

Abstract

1. Introduction

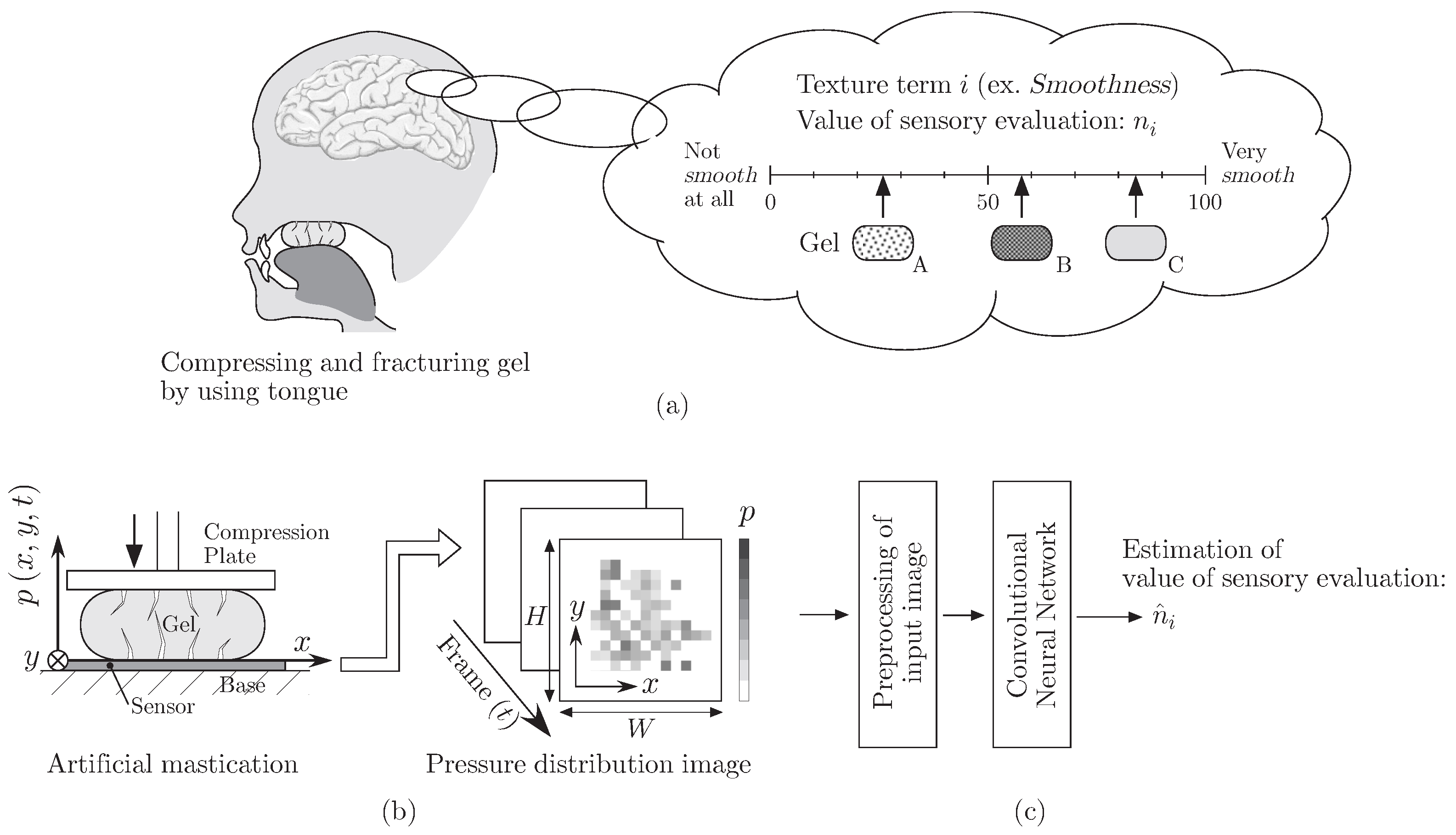

2. Outline of the Proposed Sensing System

3. Pressure Distribution Measurement

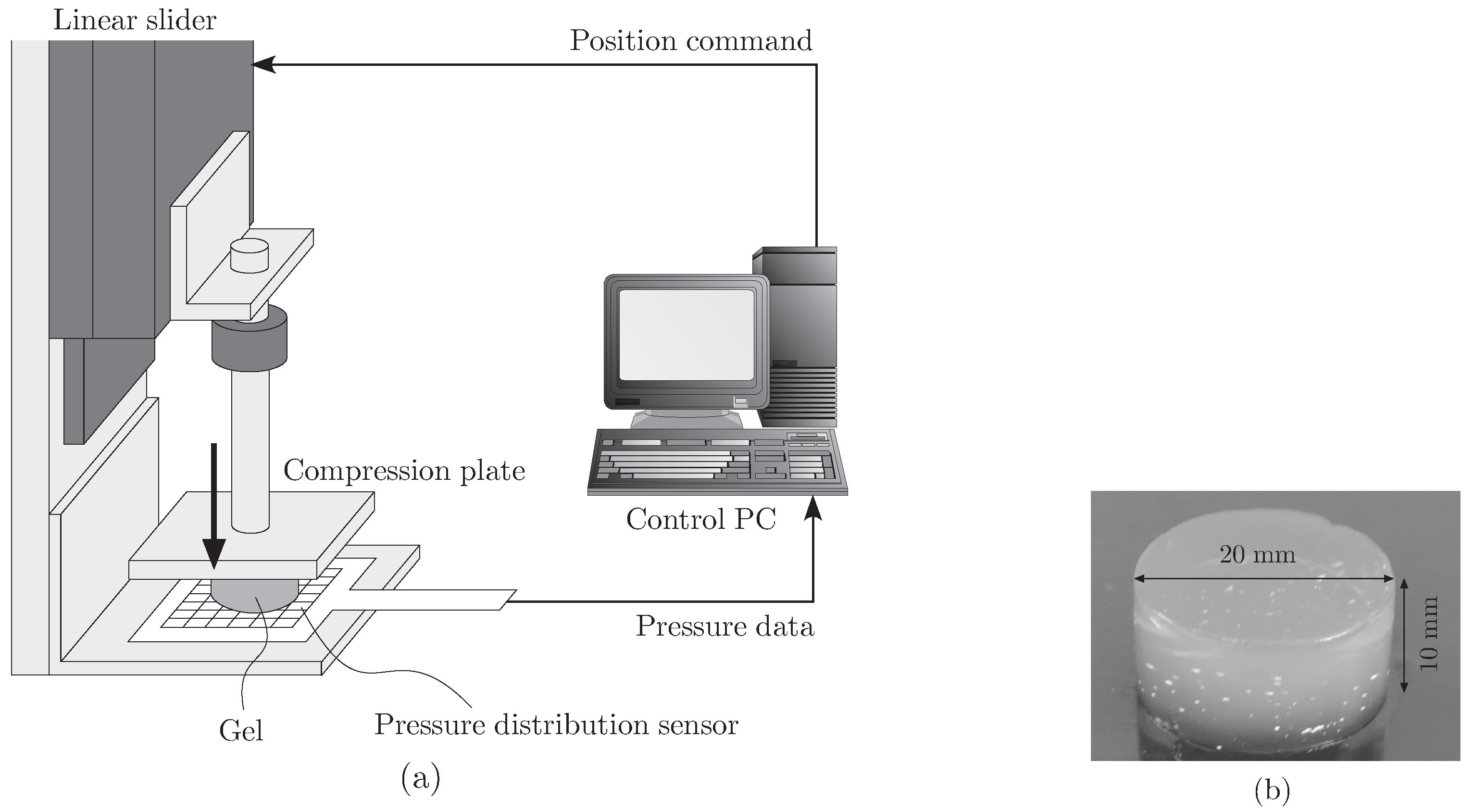

3.1. Artificial Mastication

3.2. Pressure Distribution Image

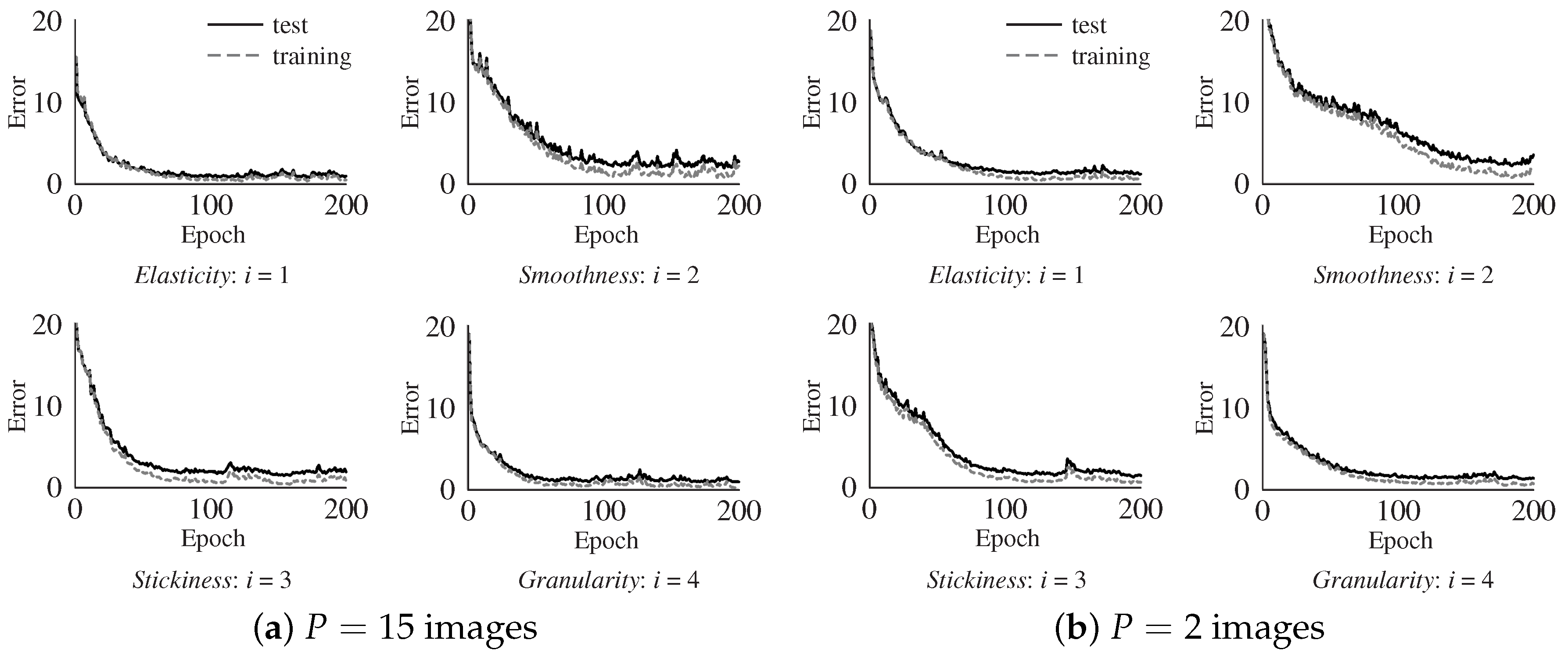

4. Texture Estimation Processing Using CNN

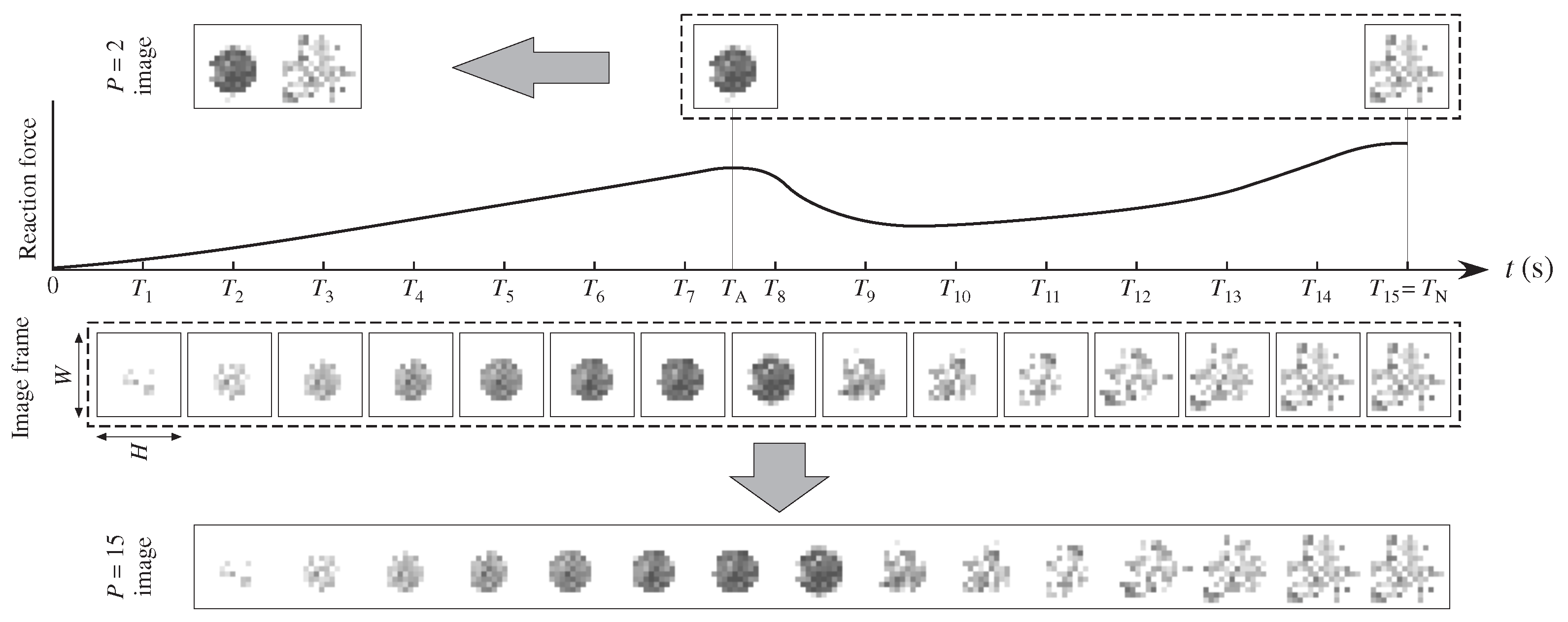

4.1. Input Image

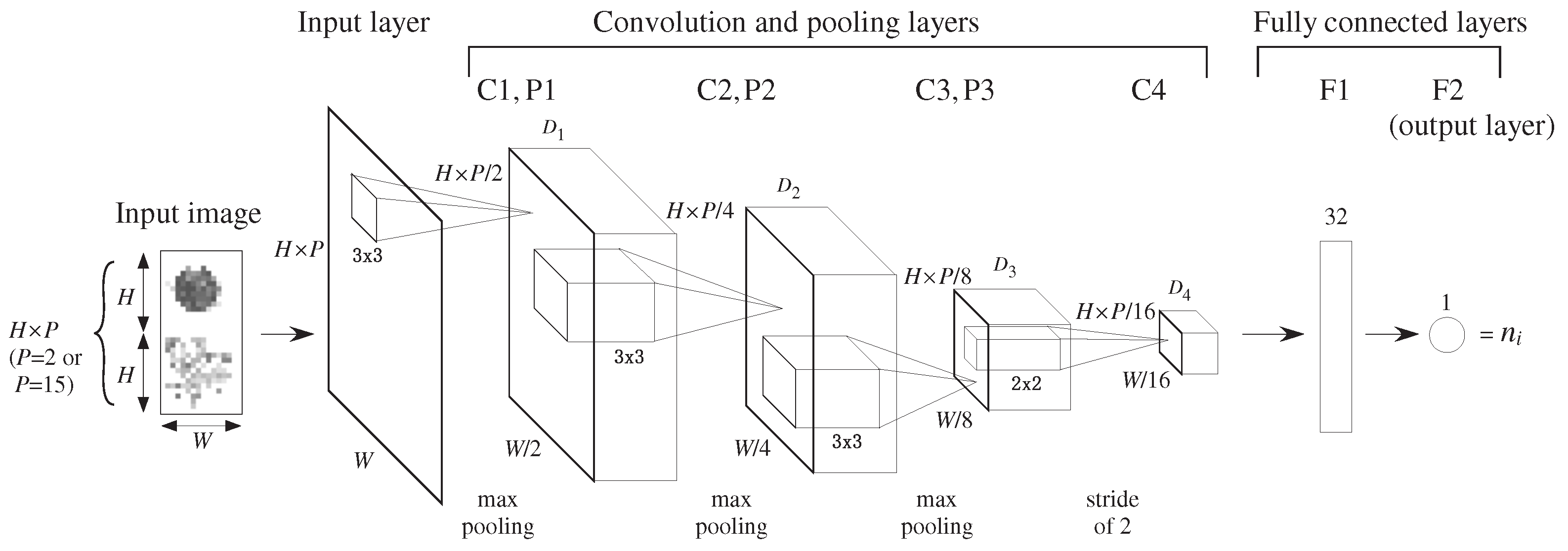

4.2. CNN Model

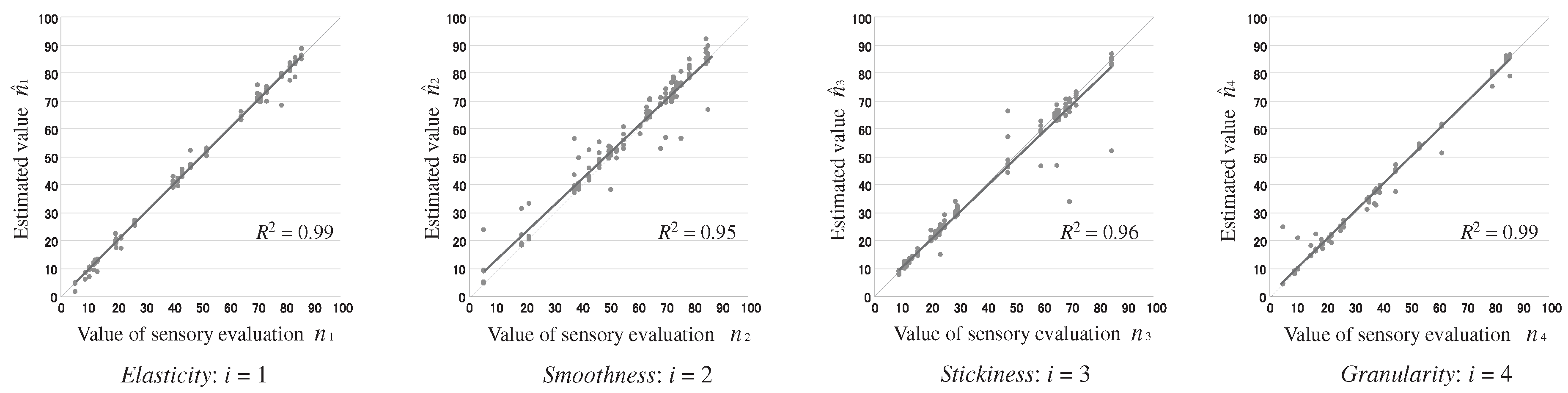

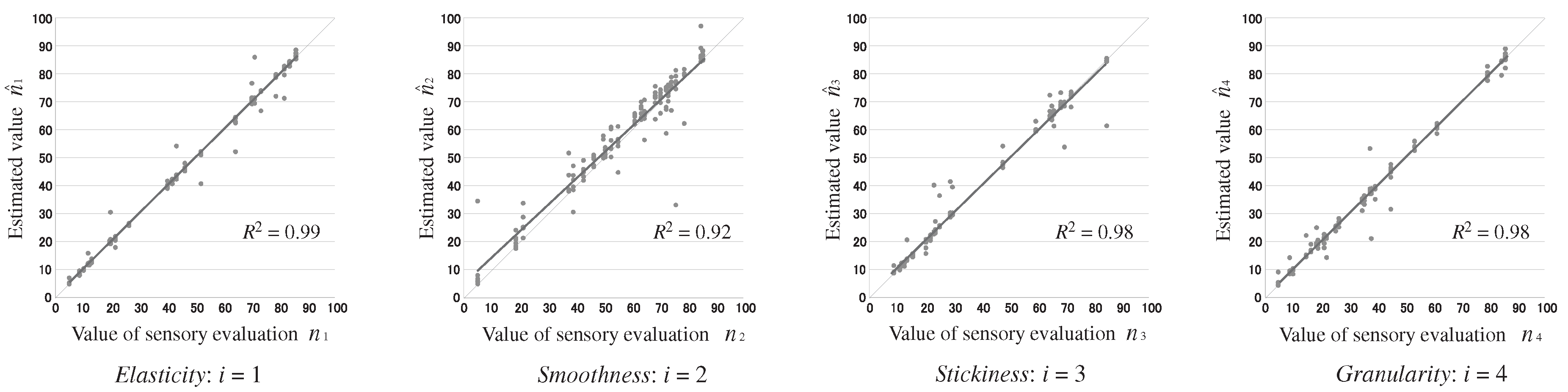

5. Experimental Validation

5.1. Materials and Method

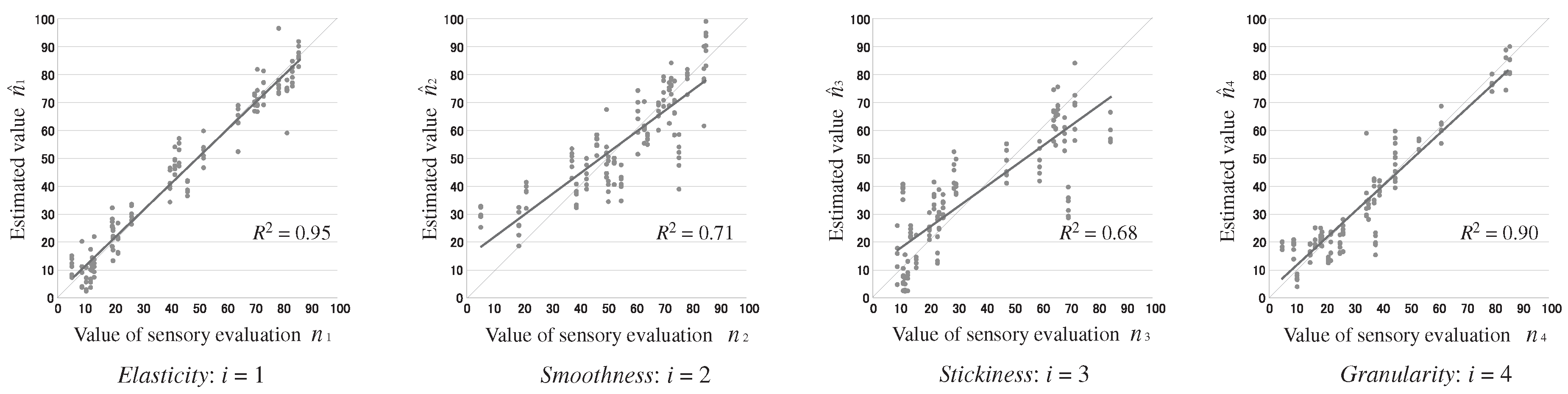

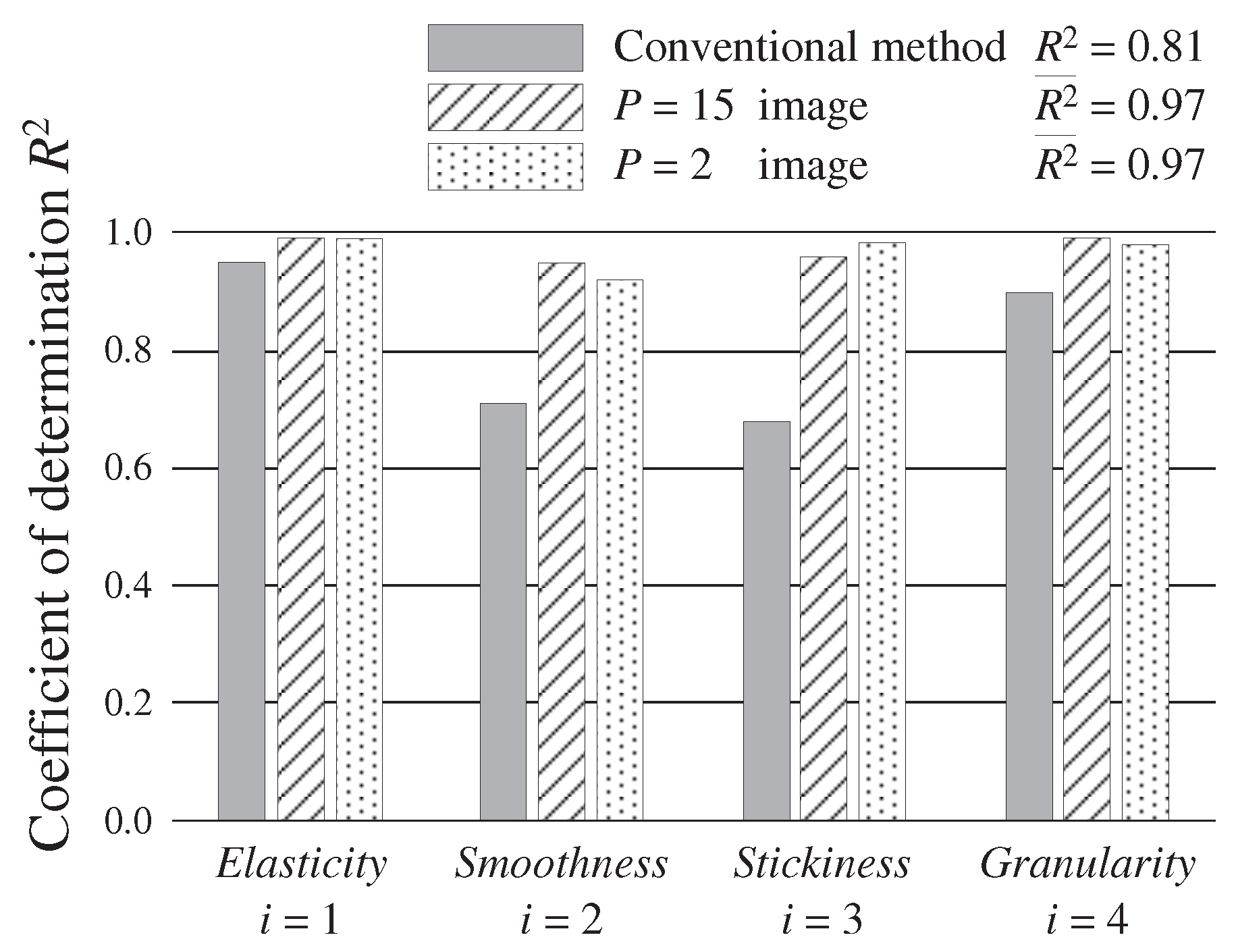

5.2. Results and Discussion

6. Conclusions

Author Contributions

Conflicts of Interest

References

- Lederman, S.J.; Jones, L.A. Tactile and Haptic Illusions. IEEE Trans. Haptics 2011, 4, 273–294.

- Jimenez, M.C.; Fishel, J.A. Evaluation of force, vibration and thermal tactile feedback in prosthetic limbs. In Proceedings of the IEEE Haptics Symposium, Houston, TX, USA, 23–26 February 2014; pp. 437–441.

- Hoshi, T.; Takahashi, M.; Iwamoto, T.; Shinoda, H. Noncontact Tactile Display Based on Radiation Pressure of Airborne Ultrasound. IEEE Trans. Haptics 2010, 3, 155–165.

- Nishinari, K. Texture and Rheology in Food and Health. Food Sci. Technol. Res. 2009, 15, 99–106.

- Funami, T. Next Target for Food Hydrocolloid Studies: Texture Design of Foods Using Hydrocolloid Technology. Food Hydrocoll. 2011, 25, 1904–1914.

- Nishinari, K. Rheology, Food Texture and Mastication. J. Texture Stud. 2004, 35, 113–124.

- Nishinari, K.; Hayakawa, F.; Xia, C.-F.; Huang, L.; Meullenet, J.-F.; Sieffermann, J.-M. Comparative Study of Texture Terms: English, French, Japanese and Chinese. J. Texture Stud. 2008, 39, 530–568.

- Szczesniak, A.S. Texture is a Sensory Property. Food Qual. Preference 2002, 13, 215–225.

- Bourne, M.C. Food Texture and Viscosity, Second Edition: Concept and Measurement; Academic Press: Cambridge, MA, USA, 2002; ISBN 978-0-12-119062-0.

- Stable Micro Systems Ltd. Available online: http://www.stablemicrosystems.com/ (accessed on 24 November 2017).

- Illinois Tool Works Inc. Available online: http://www.instron.com/ (accessed on 24 November 2017).

- Iwata, H.; Yano, H.; Uemura, T.; Moriya, T. Food Texture Display. In Proceedings of the 12th International Symposium on Haptic Interfaces for Virtual Environment and Teleoperator Systems, Chicago, IL, USA, 27–28 March 2004; pp. 310–315.

- Sun, C.; Bronlund, J.E.; Huang, L.; Morgenstern, M.P.; Xu, W.L. A Linkage Chewing Machine for Food Texture Analysis. In Proceedings of the 15th International Conference on Mechatronics and Machine Vision in Practice, Auckland, New Zealand, 2–4 December 2008; pp. 299–304.

- Xu, W.L.; Torrance, J.D.; Chen, B.Q.; Potgieter, J.; Bronlund, J.E.; Pap, J.S. Kinematics and Experiments of a Life-Sized Masticatory Robot for Characterizing Food Texture. IEEE Trans. Ind. Electron. 2008, 55, 2121–2132.

- Hoebler, C.; Karinthi, A.; Devaux, M.F.; Guillon, F.; Gallant, D.J.; Bouchet, B.; Melegari, C.; Barry, J.L. Physical and Chemical Transformations of Cereal Food During Oral Digestion in Human Subjects. Br. J. Nutr. 1998, 80, 429–436.

- Haralick, R.M.; Shanmugam, K.; Dinstein, I. Textural Features for Image Classification. IEEE Trans. Syst. Man Cybern. 1973, SMC-3, 610–621.

- Arvisenet, G.; Billy, L.; Poinot, P.; Vigneau, E.; Bertrand, D.; Prost, C. Effect of Apple Particle State on the Release of Volatile Compounds in a New Artificial Mouth Device. J. Agric. Food Chem. 2008, 56, 3245–3253.

- Tournier, C.; Grass, M.; Zope, D.; Salles, C.; Bertrand, D. Characterization of Bread Breakdown During Mastication by Image Texture Analysis. J. Food Eng. 2012, 113, 615–622.

- Kohyama, K.; Nishi, M.; Suzuki, T. Measuring Texture of Crackers with a Multiple-Point Sheet Sensor. J. Food Sci. 1997, 62, 922–925.

- Dan, H.; Azuma, T.; Kohyama, K. Characterization of Spatiotemporal Stress Distribution During Food Fracture by Image Texture Analysis Methods. J. Food Eng. 2007, 81, 429–436.

- Yamamoto, T.; Higashimori, M.; Nakauma, M.; Nakao, S.; Ikegami, A.; Ishihara, S. Pressure Distribution-Based Texture Sensing by Using a Simple Artificial Mastication System. In Proceedings of the 36th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Chicago, IL, USA, 27–31 August 2014; pp. 864–869.

- Johnson, M.K.; Adelson, E.H. Retrographic sensing for the measurement of surface texture and shape. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 1070–1077.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. In Proceedings of the Advances in Neural Information Processing Systems 25 (NIPS 2012), Lake Tahoe, NV, USA, 3–6 December 2012; pp. 1097–1105.

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2015, arXiv:1409.1556.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going Deeper with Convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2015), Boston, MA, USA, 7–12 June 2015.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2016), Las Vegas, NV, USA, 27–30 June 2016.

- I-Scan System. Available online: http://www.tekscan.com/products-solutions/systems/i-scan-system (accessed on 24 November 2017).

- Tran, D.; Bourdev, L.; Fergus, R.; Torresani, L.; Paluri, M. Learning Spatiotemporal Features With 3D Convolutional Networks. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 4489–4497.

- Saitoh, T.; Zhou, Z.; Zhao, G.; Pietikainen, M. Concatenated Frame Image Based CNN for Visual Speech Recognition. In Computer Vision – ACCV 2016 Workshops, Part II, Taipei, Taiwan, 20–24 November 2016; pp. 277–289.

- Wewers, M.E.; Lowe, N.K. A Critical Review of Visual Analogue Scales in the Measurement of Clinical Phenomena. Res. Nurs. Health 1990, 13, 227–236.

- Arlot, S. A Survey of Cross-Validation Procedures for Model Selection. Stat. Surv. 2010, 4, 40–79.

- Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. In Proceedings of the 3rd International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015.

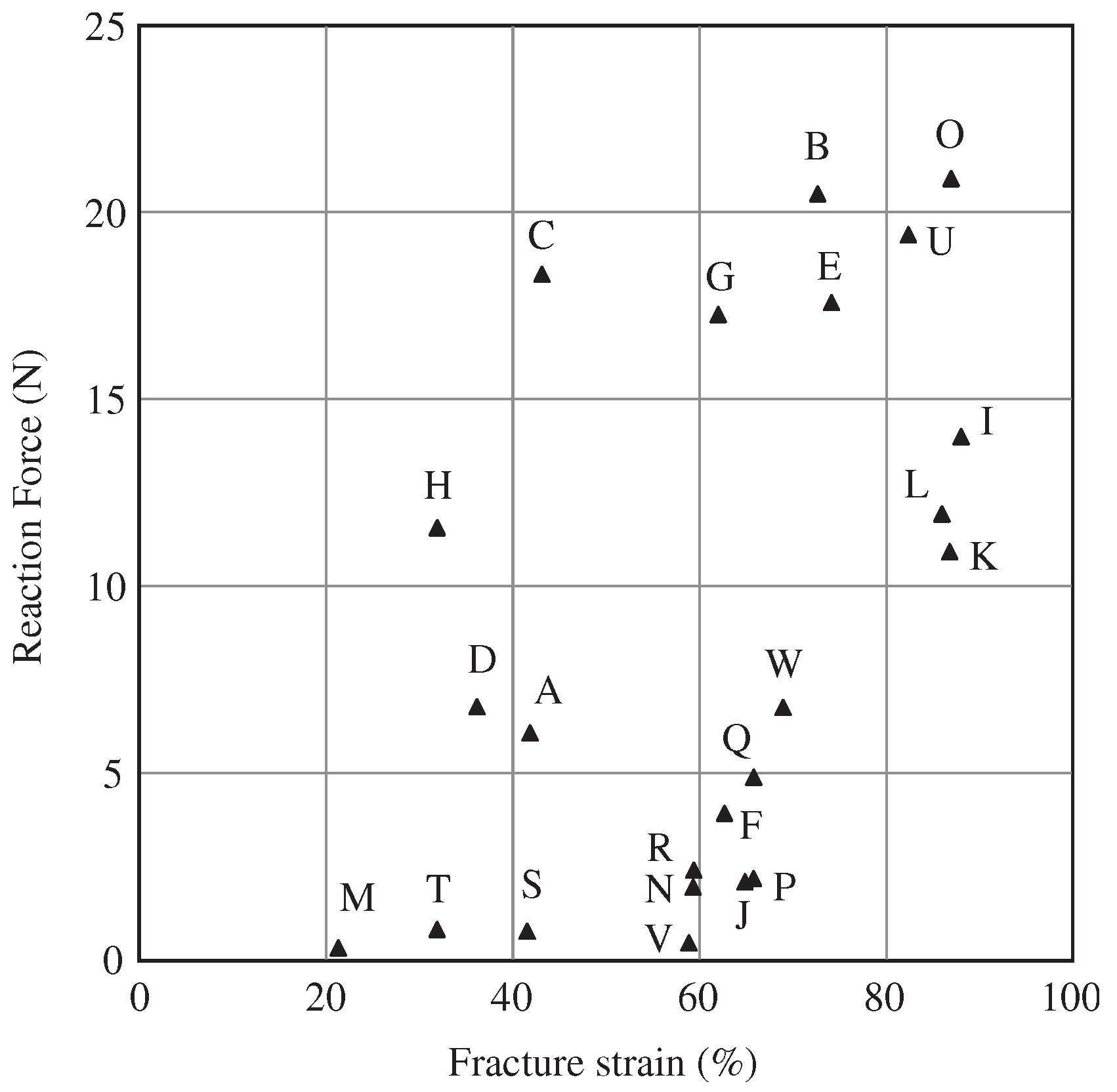

| Gel-Like Food | Elasticity | Smoothness | Stickiness | Granularity |
|---|---|---|---|---|
| A | 11.8 | 70.3 | 11.5 | 61.5 |
| B | 82.0 | 61.2 | 21.9 | 45.0 |
| C | 19.5 | 84.8 | 12.5 | 86.0 |
| D | 13.0 | 73.1 | 10.9 | 53.5 |
| E | 83.9 | 21.2 | 85.0 | 34.8 |
| F | 19.8 | 78.9 | 13.5 | 45.0 |
| G | 26.4 | 85.5 | 15.5 | 79.6 |
| H | 10.1 | 74.3 | 8.8 | 84.6 |
| I | 73.6 | 52.7 | 64.6 | 25.3 |
| J | 52.1 | 75.9 | 29.0 | 22.0 |
| K | 70.4 | 42.8 | 72.3 | 16.4 |
| L | 79.0 | 49.9 | 66.1 | 18.4 |
| M | 5.0 | 4.9 | 10.8 | 38.0 |
| N | 43.4 | 39.1 | 65.4 | 14.6 |
| O | 86.1 | 72.4 | 23.1 | 26.4 |
| P | 46.4 | 37.5 | 29.8 | 21.1 |
| Q | 41.9 | 63.5 | 20.3 | 39.4 |
| R | 40.1 | 64.6 | 25.1 | 18.9 |
| S | 12.3 | 55.2 | 69.9 | 4.6 |
| T | 8.6 | 50.6 | 23.6 | 8.8 |
| U | 71.4 | 68.5 | 47.8 | 37.5 |
| V | 21.5 | 18.5 | 59.6 | 9.9 |
| W | 64.5 | 46.4 | 68.6 | 35.5 |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Shibata, A.; Ikegami, A.; Nakauma, M.; Higashimori, M. Convolutional Neural Network based Estimation of Gel-like Food Texture by a Robotic Sensing System. Robotics 2017, 6, 37. https://doi.org/10.3390/robotics6040037
