1. Introduction

Object reconstruction is one of the primary tasks in remote sensing image interpretation. It yields useful object features, such as shape and size, and can thereby improve the accuracy and efficiency of target interpretation. However, owing to the special imaging mechanism, the main features of synthetic aperture radar (SAR) images are geometric features, such as double-bounce, layover and multi-bounce, and scattering centre features. Objects in SAR images are composed of a series of strong scattering points and differ from objects in natural or optical images. Therefore, it is difficult to use features such as texture for computer-assisted object reconstruction in SAR images.

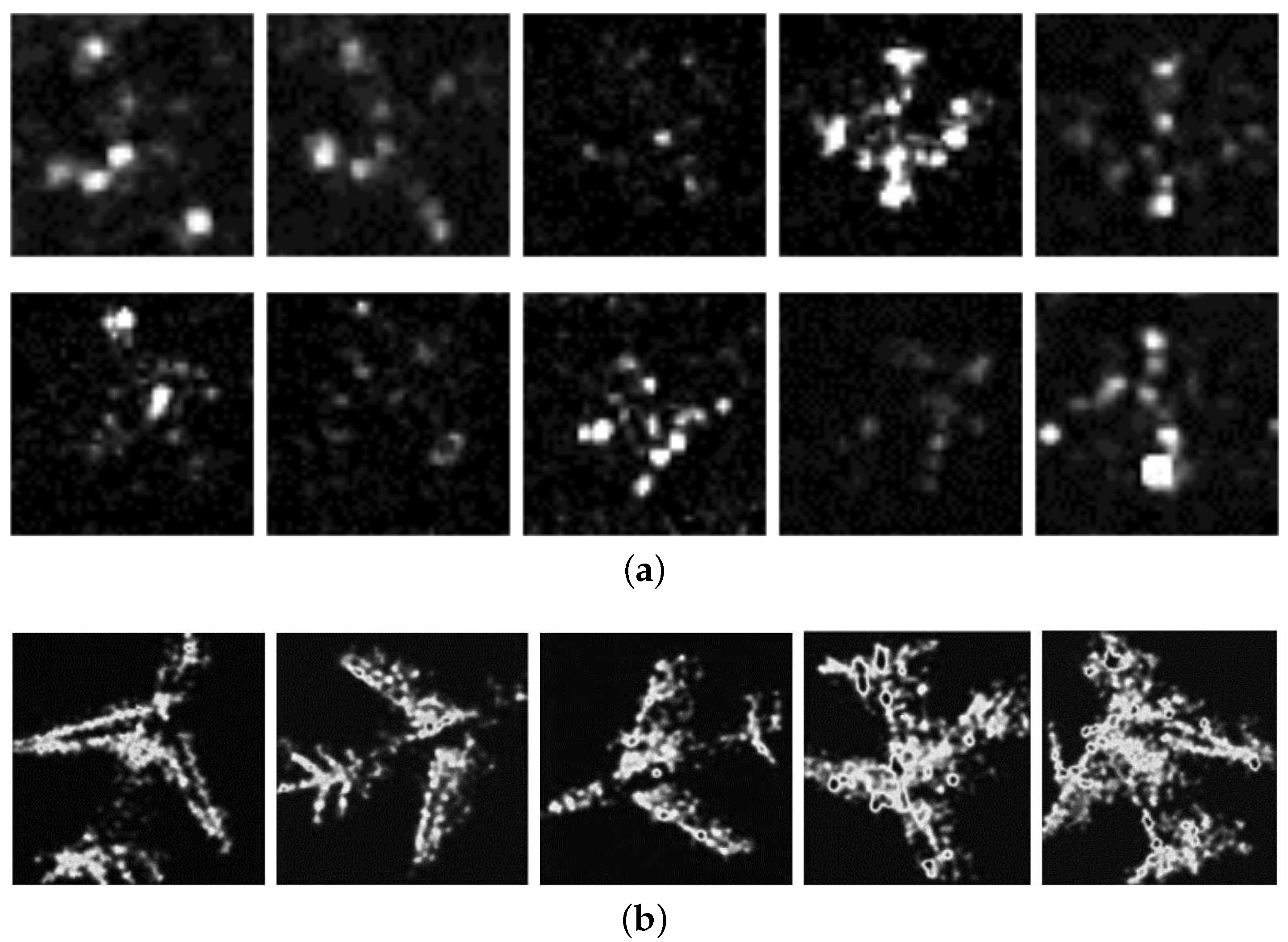

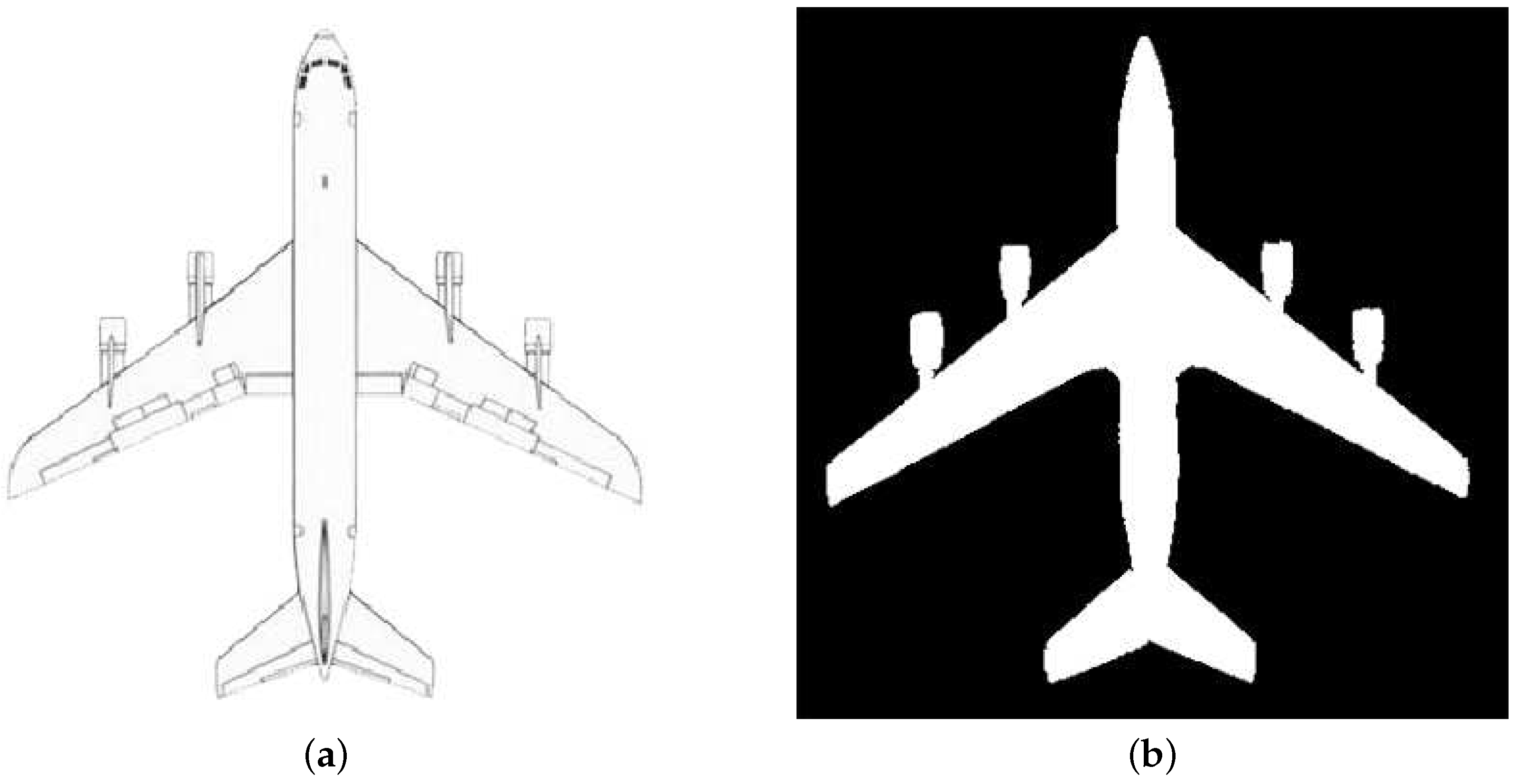

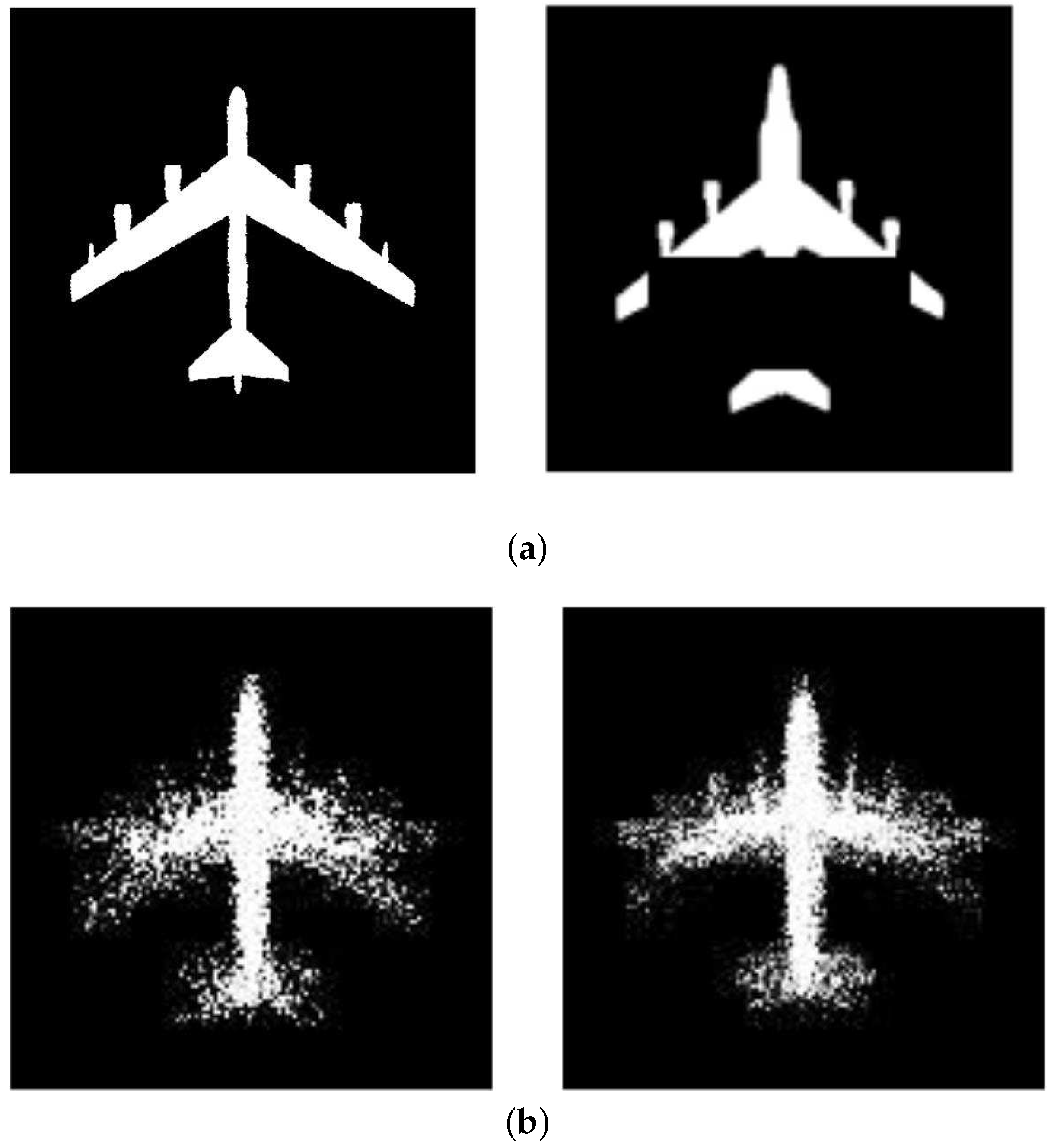

In low-resolution SAR images, as shown in Figure 1a, strong scattering points appear independent and are clearly affected by azimuth, incident angle, and other imaging conditions. However, in high-resolution SAR images, many parts of the target generate strong scattering points, which makes the target more obvious and reduces the effect of imaging conditions on its appearance. Therefore, the contour shape of the target is relatively stable under different imaging angles, as shown in Figure 1b. Traditionally, the contour shape feature can be extracted by computer vision methods, such as the graph cut method [1], the Chan-Vese segmentation method [2], the shape-based global minimization active contour model (SGACM) method [3], and so on.

However, taking aircraft as an example, scattering from oil patches on the ground and from maintenance or loading vehicles near the gate position may be mistaken for parts of the aircraft. Moreover, the scattering generated by some parts of the aircraft, such as smooth areas of the fuselage and planar areas of the wings, is weak, which causes parts of the object to be absent. Under the influence of this noise and these absent parts, the shape feature cannot be obtained directly, which makes accurate object interpretation, such as object detection and recognition, difficult. Therefore, accurate extraction of the contour shape feature is one of the key steps towards accurate object interpretation in SAR images.

For human beings, the interpretation of an object in SAR images is usually guided by prior knowledge. For example, based on the regularities in the distribution of strong scattering points, the shape, the size and other information about the object can be judged according to existing prior knowledge. The type of the object can then be recognized from this information. Therefore, it is crucial to use known prior information for computer-assisted SAR image interpretation.

In previous studies, the prior information used by researchers mainly included the distribution of strong scattering points, the intensity of scattering points, and the shape of the object. Chen et al. [4] put forward a method of SAR object recognition based on key point detection and a feature matching strategy. Chang et al. [5] and Tang et al. [6] proposed SAR object recognition methods by means of SAR image simulation and scattering information research. Although these methods can achieve certain results, the information of scattering points in SAR images often changes with the imaging conditions, and it is difficult to obtain robust results using only the scattering point information. Zhang et al. [7] proposed a top-down SAR image building reconstruction method. This method succeeded in integrating priors of roof shapes into the object reconstruction model and achieved successful results. In SAR images, most of the strong scattering points can be used to reconstruct the shape of the object. By using shape priors, the robustness of object interpretation can be greatly improved compared with methods that use only the scattering point information.

However, expressing the prior knowledge as a reasonable mathematical expression is a difficult problem. Simple shapes, such as building roofs, can be represented with simple mathematical models, but it is difficult to model shape priors for objects with complex shapes, such as aircraft. To address this issue, this paper presents a novel method of aircraft reconstruction in SAR images based on deep shape prior modelling. The innovations and contributions of this paper are summarized as follows:

Firstly, the shape prior is used in SAR aircraft reconstruction. To address the issue of modelling the complex shapes of aircraft, we propose training a generative deep Boltzmann machine (DBM) [8] to model global and local structures of shapes as well as shape variations. After training a DBM, deep shape priors are represented by the parameters of the DBM, which are integrated into the underlying energy function to achieve the goal of object reconstruction.

Secondly, objects in SAR images usually appear in different poses, which is an obstacle to reconstruction. Without processing, the pose solution space is [0, 360) degrees. Searching this wide space is time consuming and is easily trapped in local solutions, which lowers both efficiency and accuracy. We therefore propose a pose estimation scheme that combines a novel coarse pose estimation method with a fine local pose estimation technique in the reconstruction stage. The coarse pose estimation method contains two main steps. In the symmetric transform step, the image is transformed to a symmetric form using the transform invariant low-rank textures (TILT) method of Zhang et al. [9], so that the pose of the object is restricted to eight specific poses and an exhaustive search over [0, 360) is avoided. In the symmetric detection step, a correlation analysis is conducted to detect the symmetry type and obtain two candidate poses, which are used when performing object reconstruction. The fine local pose estimation technique is integrated into the optimization of the energy function and is detailed in Algorithm 1. Overall, the proposed pose estimation scheme can significantly improve the efficiency and accuracy of object reconstruction.

Thirdly, to integrate deep shape priors into the aircraft reconstruction stage, an energy function is defined that combines a scattering term and a shape term, where the shape term corresponds to the deep shape prior. The scattering term is formed from the scattering points, and their edge information, of the transformed image after coarse pose estimation. Finally, an improved iterative optimization method based on the split Bregman algorithm [10] is introduced to minimize the energy function. To the best of our knowledge, this is the first attempt at reconstructing complex objects and extracting information in SAR images using shape priors.

To verify the effectiveness of the proposed method, we carried out experiments on a TerraSAR-X data set with resolutions of 0.5 m and 1.0 m. Experimental results show that the proposed method can reconstruct the object more robustly. In addition, we compare the proposed method with conventional methods based on contour segmentation. The results show that the use of deep shape priors assists object reconstruction and greatly improves its accuracy.

3. Methods

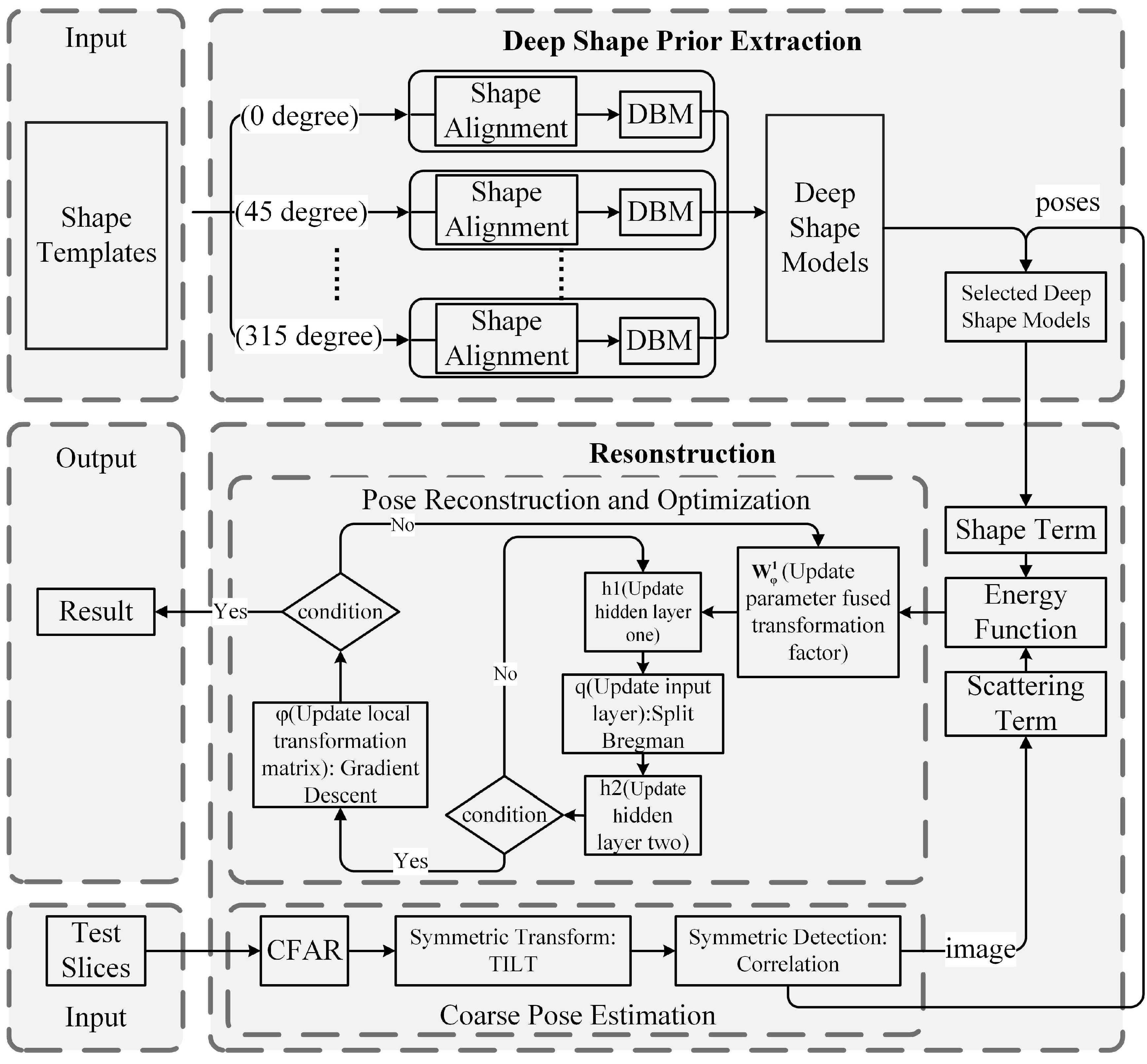

In this section, the ideas behind the proposed method and some implementation details are described. As shown in Figure 6, the proposed method contains two main stages: the deep shape prior modelling stage and the reconstruction stage. Specifically, the shape models pre-trained in the first stage are used as shape constraints in the second stage. To realize efficient object reconstruction, a coarse pose estimation method is applied at the beginning of the object reconstruction stage to obtain candidate poses and the processed symmetric image, which makes the proposed method suitable for objects in different orientations. Then, the selected shape priors with local transformation parameters and the processed SAR image are used to form the energy function. Finally, an iterative optimization algorithm is carried out to minimize the energy function.

Notably, the input data sets are preprocessed by the constant false alarm rate (CFAR) [13] method to remove background noise and improve the accuracy of coarse pose estimation and object reconstruction. More implementation details are summarized in the following subsections.

3.1. Deep Shape Prior Extraction

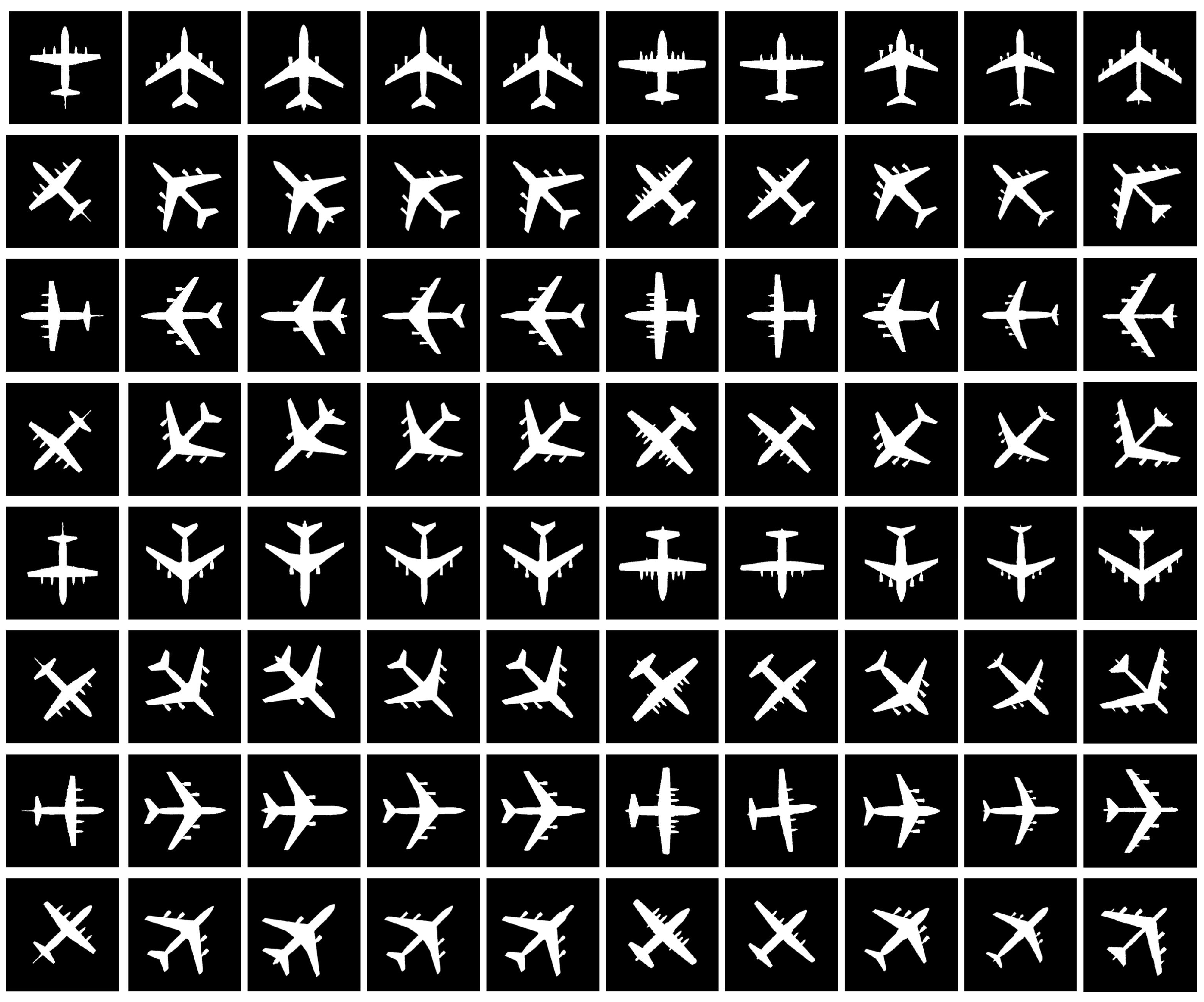

3.1.1. Shape Alignment

The shape is a typical feature of objects, which can characterize the distinctive structures of different objects or the detailed structures of each object. Since generative deep learning models such as the DBM have the specific property of generating realistic samples, they can generate samples which are different from the shapes in our training set [14]. Since the size of the shape is fixed according to the known resolution, we only need to perform the shape alignment. To this end, the method of Liu et al. [3] is applied to the binary shape templates, with the centre of gravity aligned by the formula defined as follows:

where

is the centroid of the shape template, while the variable

S denotes the pixel value of

. Then, the scale alignment is defined as follows:

where

and

represent normalization parameters, which are used to scale each shape template.

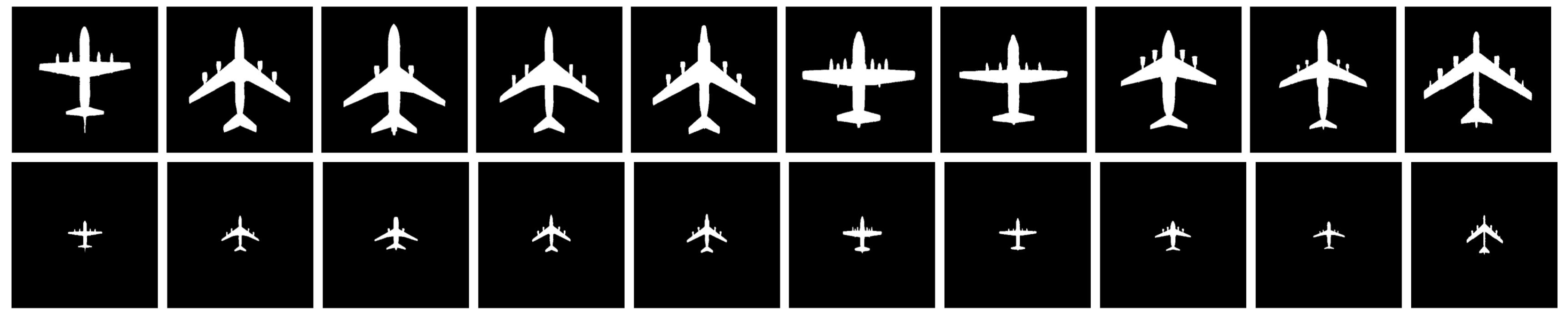

Figure 7 shows the results of the shape alignment. As shown in this figure, shape templates are centred on their centre of gravity and normalized to a fixed scale, which can be converted directly to other scales for different resolutions.
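The centre-of-gravity step can be illustrated with a short sketch. This is a minimal example under stated assumptions, not the alignment formula of [3]: the function names `centroid` and `align_to_centre` are hypothetical, the shift is rounded to whole pixels, and `np.roll` wraps around the border, which is only harmless when the template has an empty margin.

```python
import numpy as np

def centroid(shape):
    """Centre of gravity (row, col) of a binary shape template."""
    rows, cols = np.indices(shape.shape)
    total = shape.sum()
    return (rows * shape).sum() / total, (cols * shape).sum() / total

def align_to_centre(shape):
    """Shift the template by whole pixels so that its centroid lies on the
    image centre. Assumption: the template has enough empty margin that the
    wrap-around of np.roll never moves foreground pixels across the border."""
    cy, cx = centroid(shape)
    target_y = (shape.shape[0] - 1) / 2
    target_x = (shape.shape[1] - 1) / 2
    dy = int(round(target_y - cy))
    dx = int(round(target_x - cx))
    return np.roll(shape, (dy, dx), axis=(0, 1))

# Toy template: a 3x3 blob placed off-centre in a 9x9 image.
template = np.zeros((9, 9), dtype=np.uint8)
template[0:3, 1:4] = 1
aligned = align_to_centre(template)
```

Because the size of each template is fixed by the known resolution, no rescaling is included in this sketch.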

3.1.2. Shape Prior Models

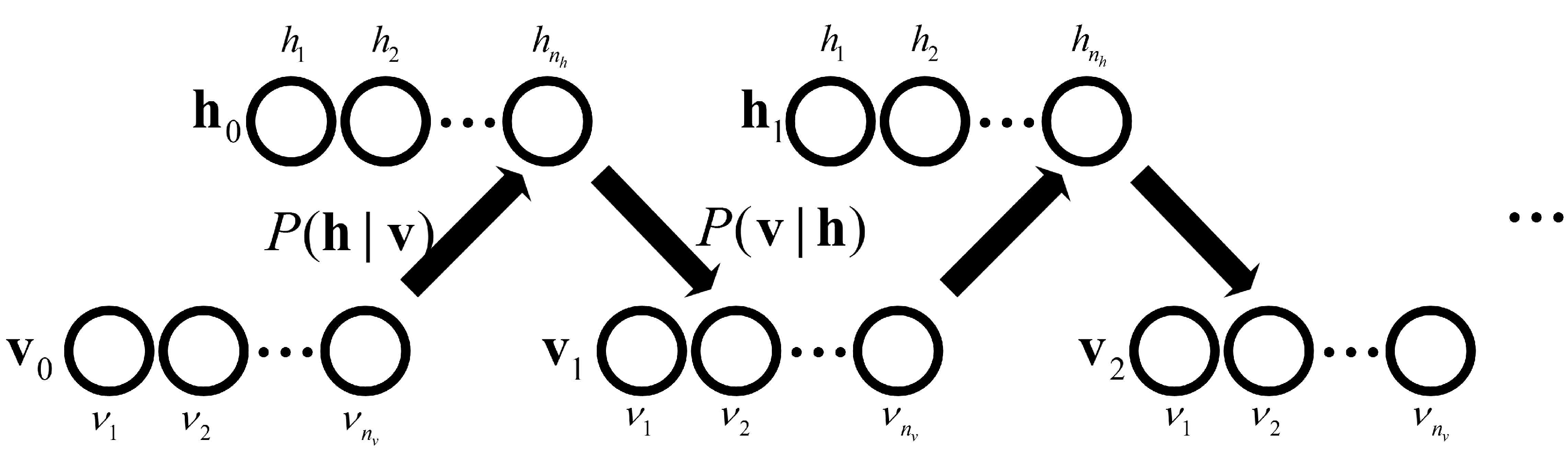

After the shape alignment, a three-layered DBM, which is shown in Figure 8, is used to model the shape priors by maximizing the probability of the visible layer, which is denoted by

. However, the calculation of

is difficult. Therefore, approximate inference is used via efficient Gibbs sampling, which is detailed in [8,12] and is shown in Figure 9. The energy function of the three-layered shape model is defined as:

where variables

,

and

are the first hidden layer, the second hidden layer and the visible layer of DBM, respectively. Parameters in

are DBM parameters, which can represent the deep shape prior, in which

and

are the weight matrices between the visible layer and the first hidden layer, and between the two hidden layers, respectively.

,

and

are the biases of the first hidden layer, the second hidden layer and the visible layer, respectively. Some of these variables and parameters are labelled in Figure 8.
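For reference, the energy of a three-layer DBM in its standard form from the DBM literature can be written as below. The symbols are assumed notation chosen to match the definitions above (visible layer, two hidden layers, two weight matrices, three bias vectors) and may differ from the symbols of the original equation.

```latex
% Assumed notation: v visible layer; h^1, h^2 first and second hidden layers;
% W^1 (visible to first hidden) and W^2 (first to second hidden) weight matrices;
% a^1, a^2 hidden biases; b visible bias; \theta = \{W^1, W^2, a^1, a^2, b\}.
E(\mathbf{v}, \mathbf{h}^1, \mathbf{h}^2; \theta)
  = -\mathbf{v}^{\top} W^{1} \mathbf{h}^{1}
    - (\mathbf{h}^{1})^{\top} W^{2} \mathbf{h}^{2}
    - (\mathbf{a}^{1})^{\top} \mathbf{h}^{1}
    - (\mathbf{a}^{2})^{\top} \mathbf{h}^{2}
    - \mathbf{b}^{\top} \mathbf{v}
```

In this form the first hidden layer is the only layer coupled to both of the others, which is why its conditional distribution depends on the visible layer and the second hidden layer at the same time.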

After training the three-layered DBM, the processed image is fed into the network to obtain the shape image via the sampling process, which is shown in Figure 9. The activation functions used are defined as follows:

where

is a sigmoid function. Variables listed above are also labelled in Figure 9, in which variables

,

, and

are elements of the visible layer, the first hidden layer, and the second hidden layer of DBM, respectively. Parameters

and

are elements of

and

in Function (3). Parameters

and

and

are elements of biases in the first hidden layer, the second hidden layer, and the visible layer, respectively.
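The layer-wise sampling described above can be sketched as follows. This is an illustrative example using the standard DBM conditionals, not the authors' implementation: the names `W1`, `W2`, `a1`, `a2`, `b` and the helper functions are assumptions, the visible size of 16 is a placeholder, and only the hidden layer sizes (100 and 300) are taken from the text.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

# Conditional activation probabilities of a three-layer DBM (standard form).
def p_h1(v, h2, W1, W2, a1):
    """First hidden layer: receives input from both v and h2."""
    return sigmoid(v @ W1 + W2 @ h2 + a1)

def p_h2(h1, W2, a2):
    """Second hidden layer: receives input from h1 only."""
    return sigmoid(h1 @ W2 + a2)

def p_v(h1, W1, b):
    """Visible layer: receives input from h1 only."""
    return sigmoid(W1 @ h1 + b)

def gibbs_sweep(v, h2, W1, W2, a1, a2, b, rng):
    """One Gibbs sweep: sample h1, then h2 and v, as binary states."""
    h1 = (rng.random(a1.shape) < p_h1(v, h2, W1, W2, a1)).astype(float)
    h2 = (rng.random(a2.shape) < p_h2(h1, W2, a2)).astype(float)
    v = (rng.random(b.shape) < p_v(h1, W1, b)).astype(float)
    return v, h1, h2

# Hypothetical sizes: 16 visible units; 100 and 300 hidden units as in the text.
rng = np.random.default_rng(0)
n_v, n_h1, n_h2 = 16, 100, 300
W1 = 0.01 * rng.standard_normal((n_v, n_h1))
W2 = 0.01 * rng.standard_normal((n_h1, n_h2))
a1, a2, b = np.zeros(n_h1), np.zeros(n_h2), np.zeros(n_v)
v0 = (rng.random(n_v) < 0.5).astype(float)
h2_0 = np.zeros(n_h2)
v1, h1_1, h2_1 = gibbs_sweep(v0, h2_0, W1, W2, a1, a2, b, rng)
```

Replacing the sampled binary states with the probabilities themselves turns the same three functions into a mean-field style update, which is one common way to carry out approximate inference in a DBM.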

In this paper, the sizes of the first and second hidden layers are set to 100 and 300, respectively, which gave the best performance. The parameter

, which responds to the input image, is visualized in Figure 10 by a calculation function in [15]. The figure shows an obvious cross structure, which is characteristic of aircraft.

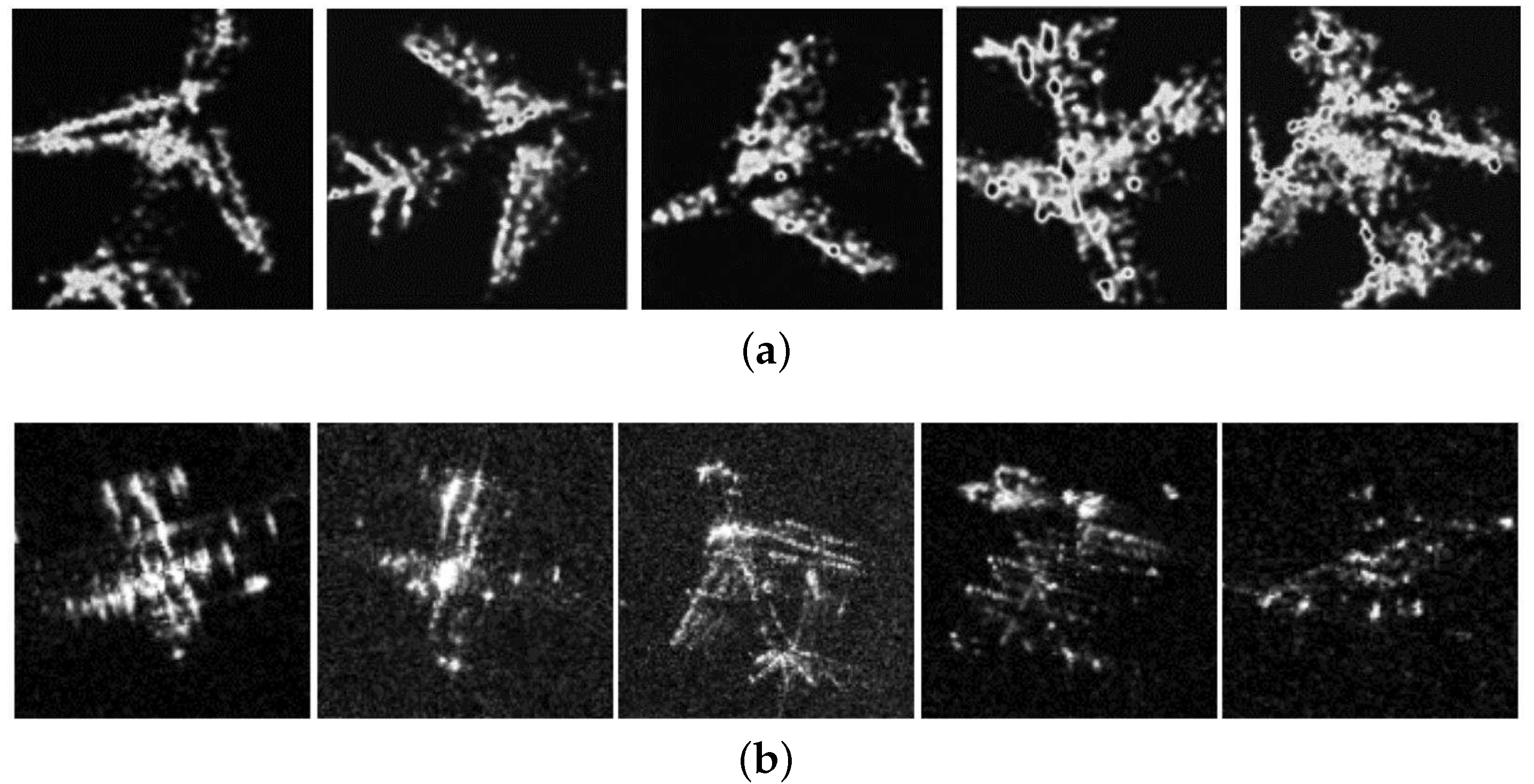

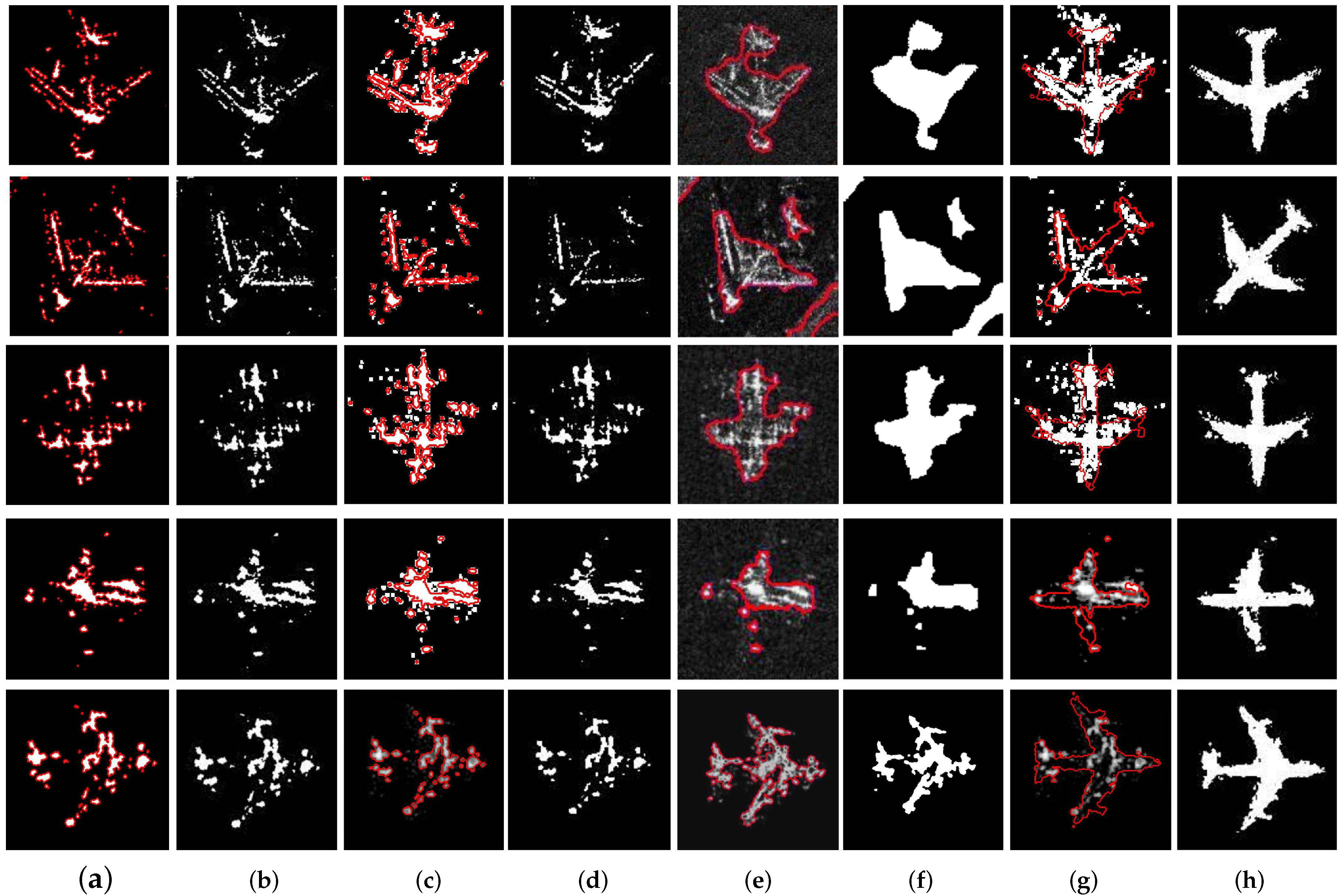

Owing to its representation of local and global features, the deep shape prior obtained above is robust to objects with absent parts, e.g., the second column in Figure 11a. As shown in Figure 11b, the deep shape prior is still complete although the object has absent parts. In SAR images, due to the special imaging mechanism, some parts of the objects are absent, as shown in Figure 2, which makes object reconstruction difficult. The deep shape prior can address this challenge because it is insensitive to absent components.

Up to this point, the DBM parameters, which are used as shape constraints in the reconstruction stage, can represent local and global features of shape templates.

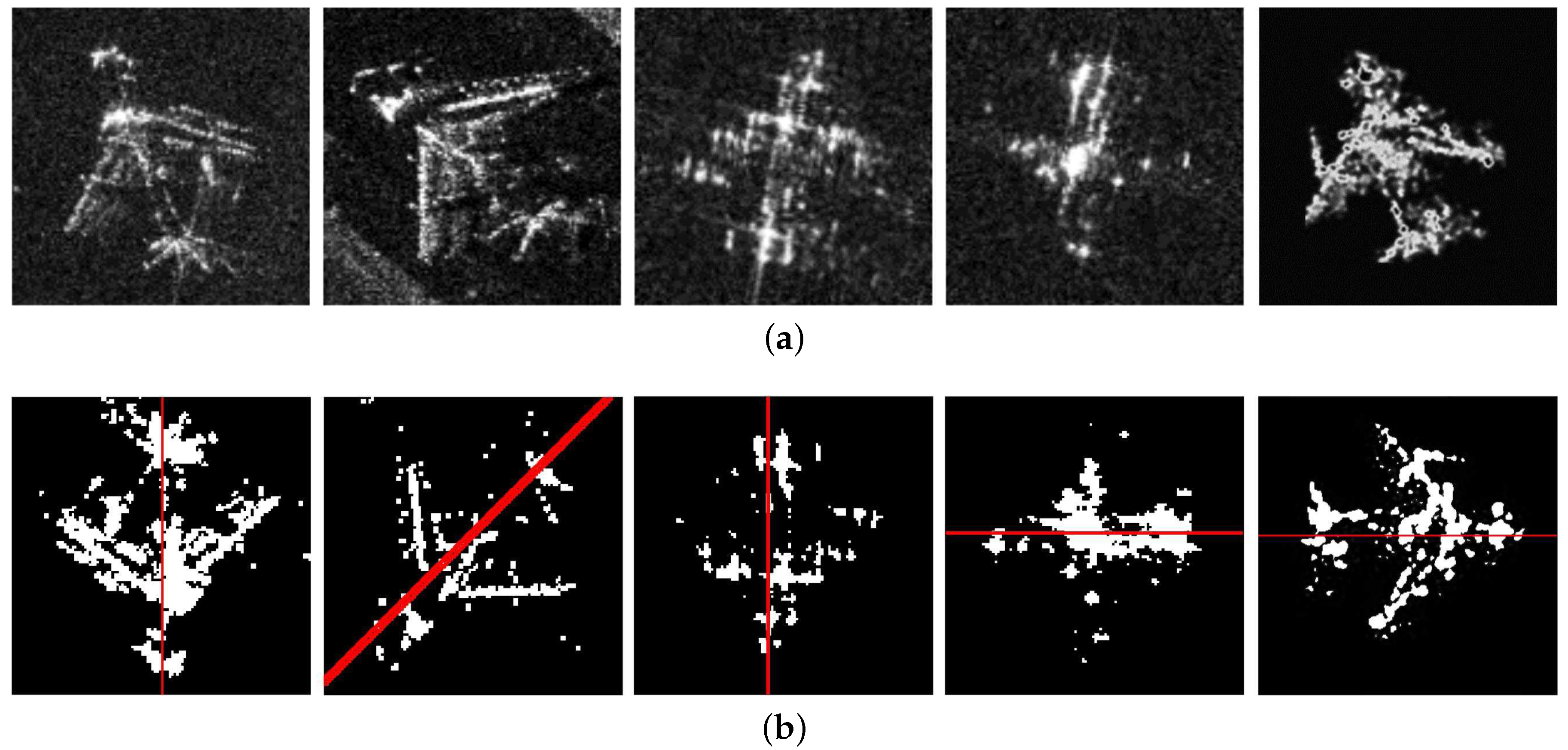

3.2. Coarse Pose Estimation

Objects in SAR images can be in any pose, which makes deep shape prior extraction a challenging task. To address this issue, a coarse pose estimation method is described in this subsection. The pose of an object is constrained to two specific values by means of a symmetric transformation and symmetric detection. Here, zygomorphic, laterally zygomorphic, principal-diagonal and counter-diagonal symmetry correspond to poses of 0 and 180, 90 and 270, 135 and 315, and 45 and 225 degrees, respectively. Each type of symmetry therefore yields only two candidate poses.

In the symmetric transform step, the input image is transformed by the TILT algorithm into an image (i.e., X) whose appearance is approximately symmetric in one of the above four symmetry types. As a result, only eight poses remain as candidate poses.

In the symmetric detection step, correlation coefficients of the image matrix are used to determine the type of symmetry, and are defined as:

where

is the correlation coefficient of

X and

.

S and

D denote the symmetric type and the translation distance, respectively, while

represents the translated image of

X with a certain symmetric type and translation distance.

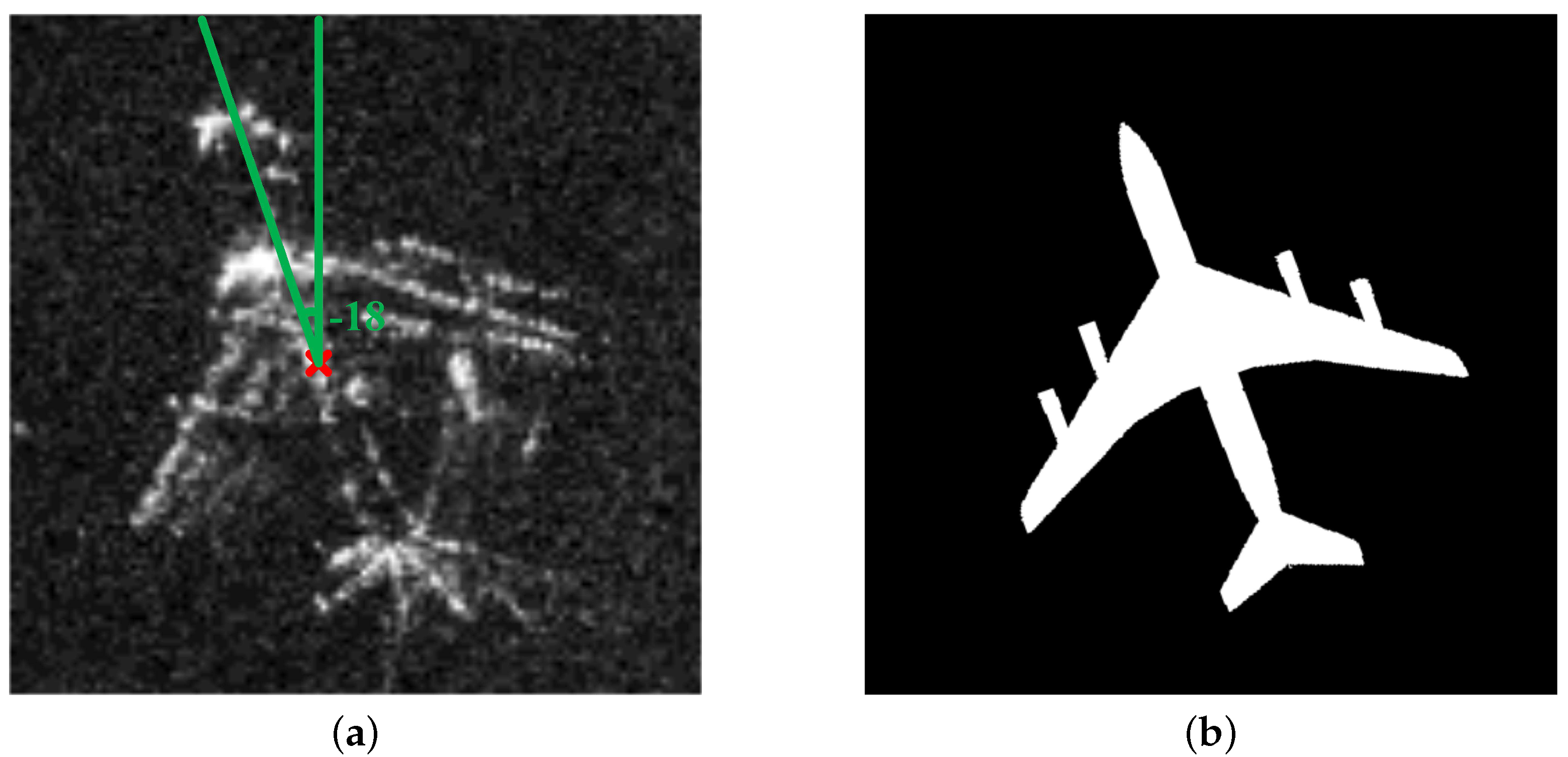

Finally, two candidate poses are determined according to the maximum of all correlation coefficients. At the same time, the object in the output image is translated according to parameter D. As shown in Figure 6, the outputs of the coarse pose estimation (i.e., the image and the poses) are used to construct the energy function.
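The symmetric detection step can be sketched as a search over symmetry types and translation distances. This is a minimal illustration under stated assumptions, not the paper's exact formula: the function names are hypothetical, "zygomorphic" is assumed to mean mirror symmetry about a vertical axis and "laterally zygomorphic" about a horizontal one, the image is assumed square, and `np.roll` wraps around, which is only harmless when the background is close to zero (for example after CFAR preprocessing).

```python
import numpy as np

# Symmetry type -> the two candidate poses (degrees) listed in the text.
CANDIDATE_POSES = {
    "zygomorphic": (0, 180),
    "laterally_zygomorphic": (90, 270),
    "principal_diagonal": (135, 315),
    "counter_diagonal": (45, 225),
}

def reflect(image, symmetry, d):
    """Mirror a square image about an axis of the given type, offset by d."""
    if symmetry == "zygomorphic":            # assumed: vertical mirror axis
        return np.roll(image[:, ::-1], d, axis=1)
    if symmetry == "laterally_zygomorphic":  # assumed: horizontal mirror axis
        return np.roll(image[::-1, :], d, axis=0)
    if symmetry == "principal_diagonal":
        return np.roll(image.T, (d, -d), axis=(0, 1))
    if symmetry == "counter_diagonal":
        return np.roll(image[::-1, ::-1].T, (d, d), axis=(0, 1))
    raise ValueError(symmetry)

def detect_symmetry(image, max_shift=5):
    """Return (symmetry type, translation distance, candidate poses) with the
    highest correlation between the image and its mirrored copy."""
    best_rho, best_sym, best_d = -np.inf, None, 0
    for symmetry in CANDIDATE_POSES:
        for d in range(-max_shift, max_shift + 1):
            mirrored = reflect(image, symmetry, d)
            rho = np.corrcoef(image.ravel(), mirrored.ravel())[0, 1]
            if rho > best_rho:
                best_rho, best_sym, best_d = rho, symmetry, d
    return best_sym, best_d, CANDIDATE_POSES[best_sym]

# Toy "aircraft": a fuselage (vertical bar) and wings (horizontal bar),
# placed two columns to the right of the image centre.
X = np.zeros((21, 21))
X[3:18, 12] = 1.0
X[7, 7:18] = 1.0
sym_type, shift, poses = detect_symmetry(X)
```

The returned translation distance plays the role of D: it tells how far the detected symmetry axis is from the image centre, so the object can be re-centred before reconstruction.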

3.3. Pose Reconstruction and Optimization

After the coarse pose estimation and the deep shape prior extraction, an energy function is defined by combining the deep shape prior and the processed image. Here, a classical probabilistic shape representation method [12,16] is used as the basic function and is modified by combining the shape term and the scattering term, which is defined as follows:

where

represent parameters of the shape prior model. Since researchers have confirmed that the localities and the intensities of strong scattering centres over a certain span of azimuth angles are statistically quasi-invariant [17], the weight

is modified as

, in which

represents the local transformation matrix and is defined as follows:

among which

are the local transformation parameters, i.e., the translations along the first and second dimensions, the scale, and the rotation angle, respectively.
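A transformation with these four parameters is commonly written as a 2D similarity transform in homogeneous coordinates. The sketch below assumes that form; the function name `local_transform`, the parameter order and the sign convention of the rotation are illustrative assumptions, not the matrix defined in the original equation.

```python
import numpy as np

def local_transform(x, y, h, angle_deg):
    """3x3 homogeneous similarity transform (assumed form):
    rotate by angle_deg, scale by h, then translate by (x, y)."""
    t = np.deg2rad(angle_deg)
    c, s = np.cos(t), np.sin(t)
    return np.array([
        [h * c, -h * s, x],
        [h * s,  h * c, y],
        [0.0,    0.0,   1.0],
    ])

# With zero translation, unit scale and zero rotation the transform is the identity.
identity = local_transform(0.0, 0.0, 1.0, 0.0)

# A point in homogeneous coordinates, rotated by 90 degrees, scaled by 2
# and shifted by (3, 4).
moved = local_transform(3.0, 4.0, 2.0, 90.0) @ np.array([1.0, 0.0, 1.0])
```

Keeping the four parameters small around the candidate pose is what makes this a local, fine adjustment on top of the coarse pose estimate.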

For the scattering term,

is the contour of the scattering region which is defined as

while

distinguishes the scattering region and the background, which is defined as follows:

where

and

are the contour indicator and the gradient of the scattering region, respectively, with

.

is the image space.

and

are the mean intensities of the scattering region and the background region, which are defined as

and

, respectively. Inside,

is the processed image and

is the scattering region, which is

with

a selected threshold.
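The two mean intensities can be computed as in the following sketch. It assumes that the scattering region is obtained by thresholding the current shape estimate, which is an interpretation of the text rather than a confirmed detail; the function name `region_means` and the argument names are hypothetical.

```python
import numpy as np

def region_means(image, shape_estimate, threshold=0.1):
    """Mean intensity inside and outside the scattering region.

    Assumption: the region is the set of pixels where `shape_estimate`
    exceeds `threshold`; everything else counts as background.
    """
    region = shape_estimate > threshold
    mean_in = image[region].mean() if region.any() else 0.0
    mean_out = image[~region].mean() if (~region).any() else 0.0
    return region, mean_in, mean_out

# Toy 2x2 example: the top row is the object, the bottom row the background.
image = np.array([[0.9, 0.8],
                  [0.1, 0.2]])
shape_estimate = np.array([[1.0, 0.6],
                           [0.05, 0.0]])
region, mean_in, mean_out = region_means(image, shape_estimate, threshold=0.1)
```

Both means have to be recomputed whenever the shape estimate changes, since the region they are taken over moves with it.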

Reconstruction results are obtained by optimizing the energy function with an iterative minimization procedure, in which the approximate inference of DBM [12] is used to calculate each layer of hidden units, and split Bregman, a classical algorithm for convex optimization, is used to calculate the shape. Details of the optimization process are summarized in Algorithm 1, while Algorithm 2 details the split Bregman method used to solve the problem of minimizing

in Algorithm 1 step 2.3.

As shown in Algorithm 1, parameters are initialized in advance. Since the resolution is a known number, the scale h is fixed. For objects that have already been transformed, x, y, and are set to be 0. Specifically, if the object in the slice is not centroid, x, y will be set to be values obtained by the symmetry axis in the coarse pose estimation. For example, if symmetry axis of the object in the slice is or , the initializations of x, y in Algorithm 1 for are set to be , or , . At the same time, the shape is set to be the mean shape of the templates while the hidden units are initialized by . Besides, the set of the learning rate in the gradient descent, which is used to update , is set to be small values for local adaption. The optimization will be continued until the energy difference is less than the threshold or the maximum number of iterations is reached.

After that, reconstruction results with candidate poses are obtained. The one with the minimum energy value is chosen as the final result.

| Algorithm 1 Optimization Algorithm |

| Input: Learned parameters fused by transformation factor , test image slice |

| Initialization: |

| Initialize

as mean shape of the data set, set , , , , and maximum numbers of iteration , . |

| Optimization: |

| Repeat 1 to 3 until

or the maximum number of iteration is reached. |

| 1. Calculate and let . |

| 2. Repeat 2.1 to 2.5 until or the maximum number of iteration is reached. |

| 2.1 |

| 2.2 |

| 2.3 |

| 2.4 |

| 2.5 Calculate by Function (9). |

| 3. Update using the gradient descent technique. |

| 3.1 Calculate using |

| |

| |

| |

| |

| 3.2 Calculate |

| Output: The latest shape . |

| Algorithm 2 Detailed steps in Algorithm 1 2.3 |

| Input: Parameters , , , . |

| Repeat 1 to 5 until or the maximum number of iterations is reached. |

| 1. Define: |

| 2. |

| 2.1 |

| |

| 2.2 |

| |

| 3. |

| 4. Find |

| 5. Renew |

| Output: The latest shape . |
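To make the role of split Bregman concrete, the sketch below applies it to a generic convex segmentation problem, minimizing total variation plus a weighted region map over shapes with values in [0, 1]. It follows the general scheme from the split Bregman literature (update the shape, shrink the auxiliary variable, update the Bregman variable) and is not a reconstruction of Algorithm 2: the region map `r`, the parameters `mu` and `lam`, periodic boundaries and the single Jacobi sweep per iteration are all assumptions made for this illustration.

```python
import numpy as np

def grad(u):
    """Forward differences with periodic boundaries."""
    return np.roll(u, -1, axis=1) - u, np.roll(u, -1, axis=0) - u

def div(px, py):
    """Backward-difference divergence (negative adjoint of grad)."""
    return (px - np.roll(px, 1, axis=1)) + (py - np.roll(py, 1, axis=0))

def shrink(sx, sy, gamma):
    """Isotropic shrinkage: reduce the vector length by gamma, floor at 0."""
    norm = np.sqrt(sx ** 2 + sy ** 2)
    scale = np.maximum(norm - gamma, 0.0) / np.maximum(norm, 1e-12)
    return sx * scale, sy * scale

def split_bregman_segment(r, mu=8.0, lam=1.0, n_iter=50, tol=1e-4):
    """Minimize TV(u) + mu * <u, r> over 0 <= u <= 1."""
    u = np.zeros_like(r, dtype=float)
    dx, dy = np.zeros_like(u), np.zeros_like(u)
    bx, by = np.zeros_like(u), np.zeros_like(u)
    for _ in range(n_iter):
        u_old = u
        # 1. Shape update: one Jacobi sweep, then projection onto [0, 1].
        nbrs = (np.roll(u, 1, axis=0) + np.roll(u, -1, axis=0)
                + np.roll(u, 1, axis=1) + np.roll(u, -1, axis=1))
        u = np.clip((nbrs - (mu / lam) * r - div(dx - bx, dy - by)) / 4.0,
                    0.0, 1.0)
        # 2. Auxiliary variable update by shrinkage.
        gx, gy = grad(u)
        dx, dy = shrink(gx + bx, gy + by, 1.0 / lam)
        # 3. Bregman variable update.
        bx, by = bx + gx - dx, by + gy - dy
        if np.abs(u - u_old).max() < tol:
            break
    return u

# Toy region map: negative inside a rectangle, positive outside.
truth = np.zeros((16, 16), dtype=bool)
truth[4:12, 5:11] = True
r = np.where(truth, -1.0, 1.0)
u = split_bregman_segment(r)
```

In the reconstruction setting the region map would also carry the shape term, so that the DBM prior and the scattering evidence are balanced within the same shape update.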

3.4. Parameter Selection

In this subsection, parameters in Algorithm 1 and Algorithm 2 are described. In practice, these parameters are set mainly based on experience. In our experiments, the convergence parameter

is set to

. The maximum numbers of iterations

,

, and

are set to be 10, 100, and 50, respectively. To achieve fine tuning, the learning rate of the transformation parameter is set to small numbers. In our experiment, it is set to be

. In addition, parameters

and

are related to each other. Among them,

represents the importance of the scattering edge term and is also the coefficient of the first part in Function (9), which is set to 1.0 by default. The other two parameters are set according to

.

represents the importance of the scattering region term, while

represents the importance of the deep shape term. In object reconstruction, the region term is the most important part. The shape term and the edge term are priors. In our experiments,

and

are set to be 100.0 and 1.0, respectively. In addition,

represents the threshold that distinguishes the inside of the object from the outside, which is set to 0.1 for SAR aircraft reconstruction.