1. Introduction

In man-made or natural environmental disasters, fast detection of the disaster and a short arrival time of the emergency forces are key elements that can make the difference between a small-scale disaster and a mass casualty incident. Knowing in real time the crucial physical components that affect the spread and extent of the disaster enables the emergency agencies to act faster and be better prepared, decreasing the number of casualties and the damage to property. To provide hazard warning, alongside information about the environmental conditions that continue to affect the disaster, physical sensors are deployed as part of an Environmental Sensor Network (ESN). ESNs, deployed over large areas, comprise devices containing sensors that collect physical data from the surrounding environment and are capable of transmitting them. Although ESNs are efficient in providing hazard warning, experience from major disasters indicates that conventional deployment of static physical sensors is often insufficient, and therefore might not provide adequate data for situation assessment and decision making, mainly due to limited coverage and low deployment level [1]. A solution to the inadequate geosensor network coverage problem is to rely on crowdsourced user-generated data, i.e., to make use of Volunteered Geographic Information (VGI), as a complementary data source for the task of weather data densification, or more generally, augmentation of an existing ESN deployment.

Crowdsourcing geographic user-generated data is the process of gathering and sharing geospatial and geographic data and information that originate from individuals, citizens, and communities who voluntarily participate in a specific task ([2,3]). Collecting sensory data via VGI working paradigms is an effective method that can be used for expanding the variety of data sources and enhancing the spatial resolution of sensor readings and reports. Thus, modern portable devices, such as smartphones and tablets, which are equipped today with modern sensors and designated applications (apps), have the potential to fill the knowledge gap associated with ESNs, building the capacity to enhance and enrich information, especially when the nature of the geographic information is dynamic. Since citizens’ motivation to participate and contribute continues to grow, coupled with advanced technology and communication capabilities, the use of contributed user-generated weather data for environmental processes is practical and beneficial.

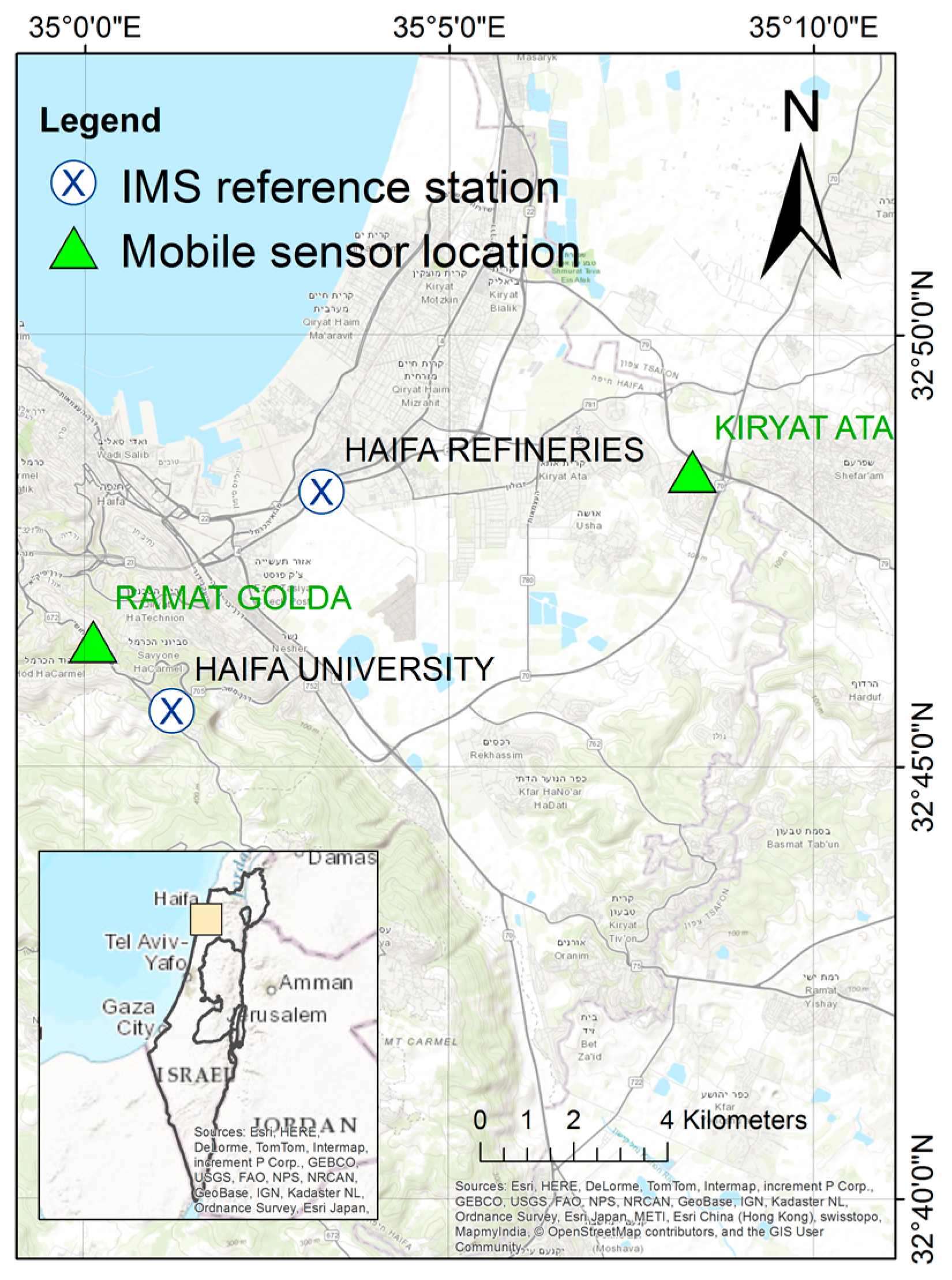

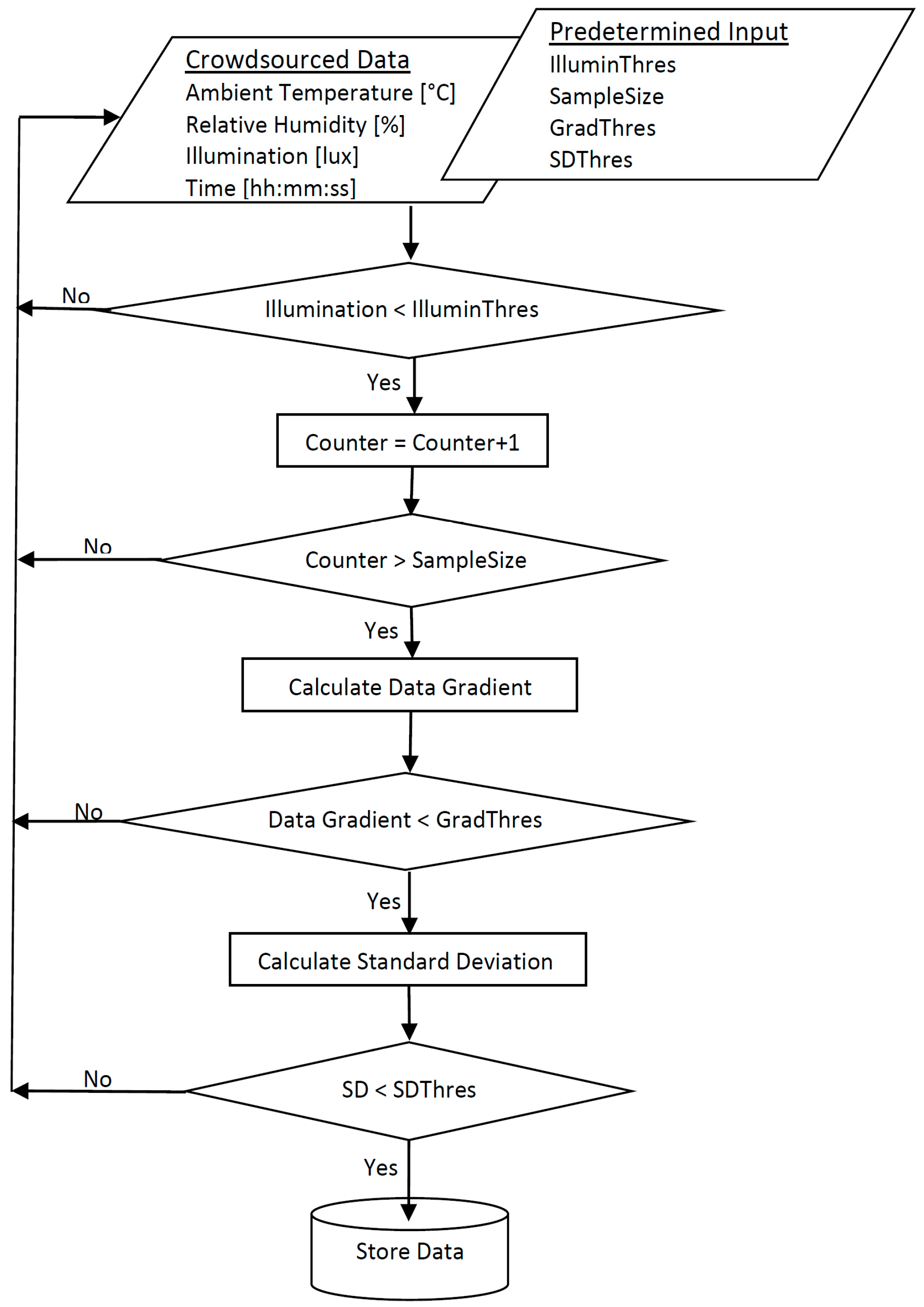

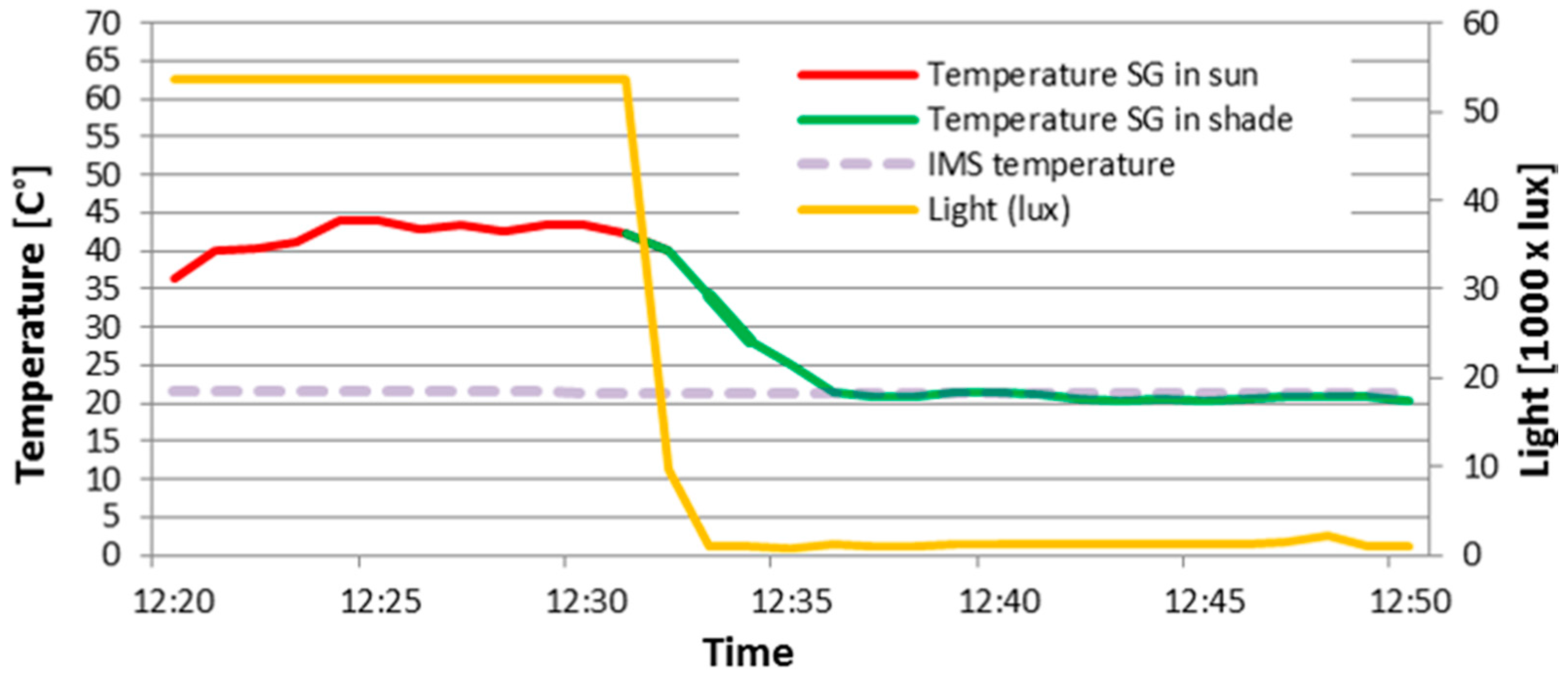

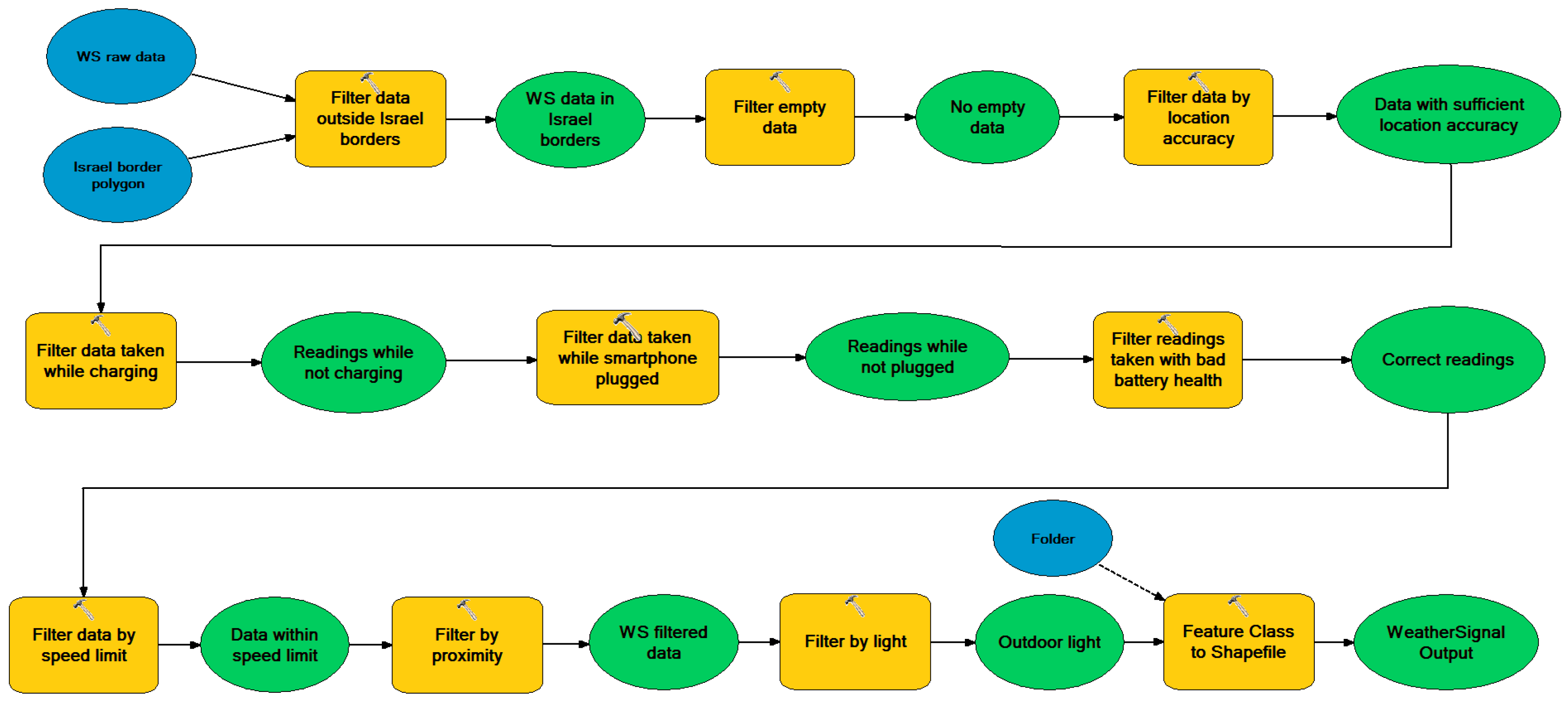

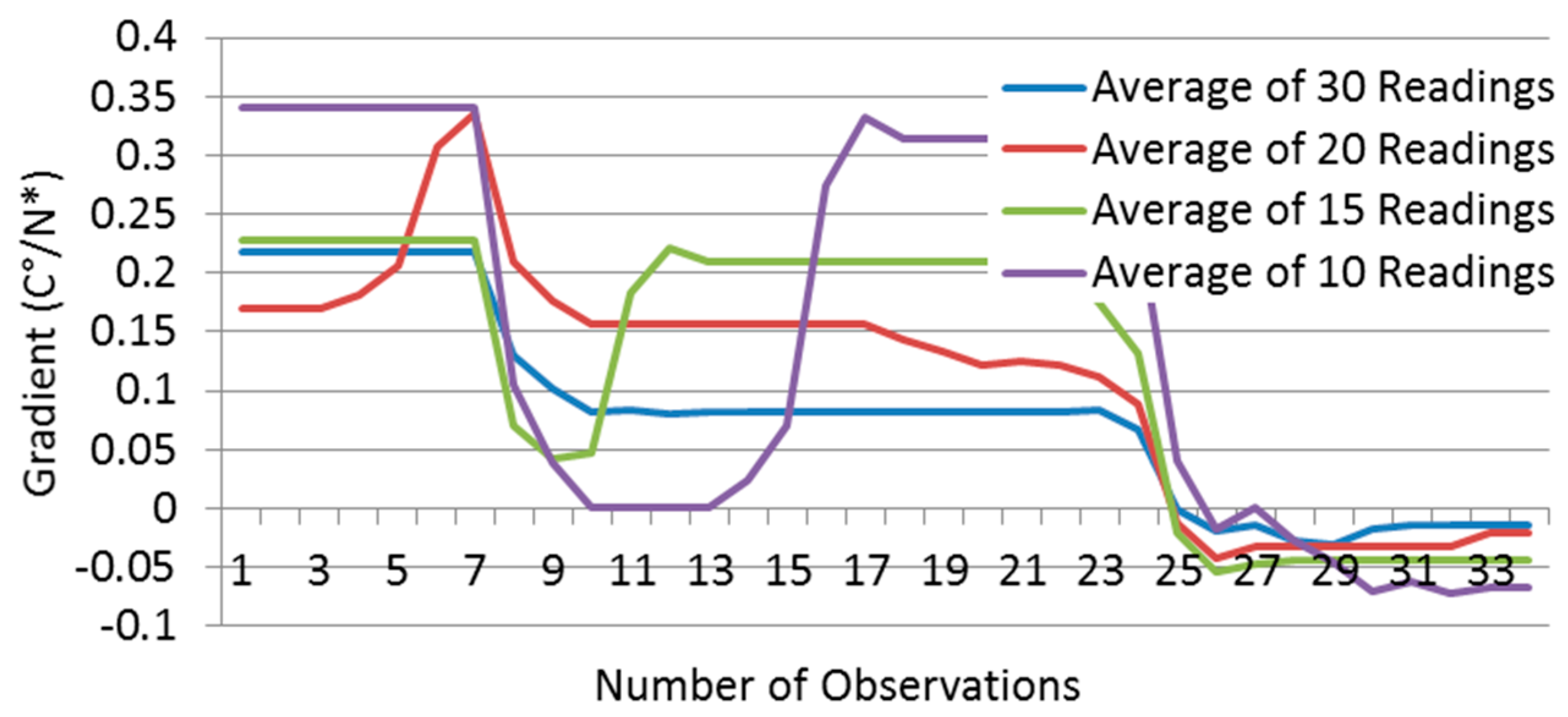

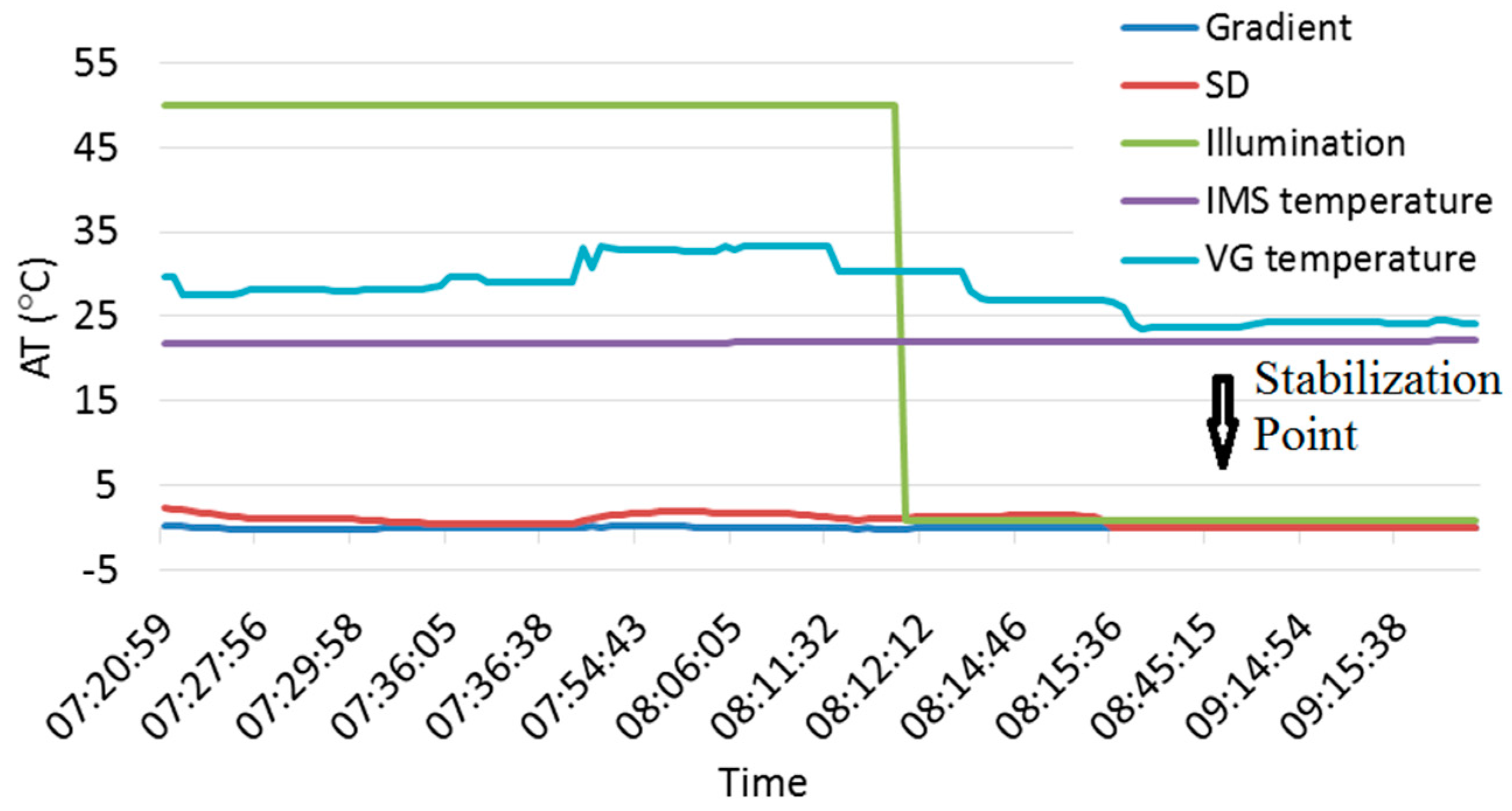

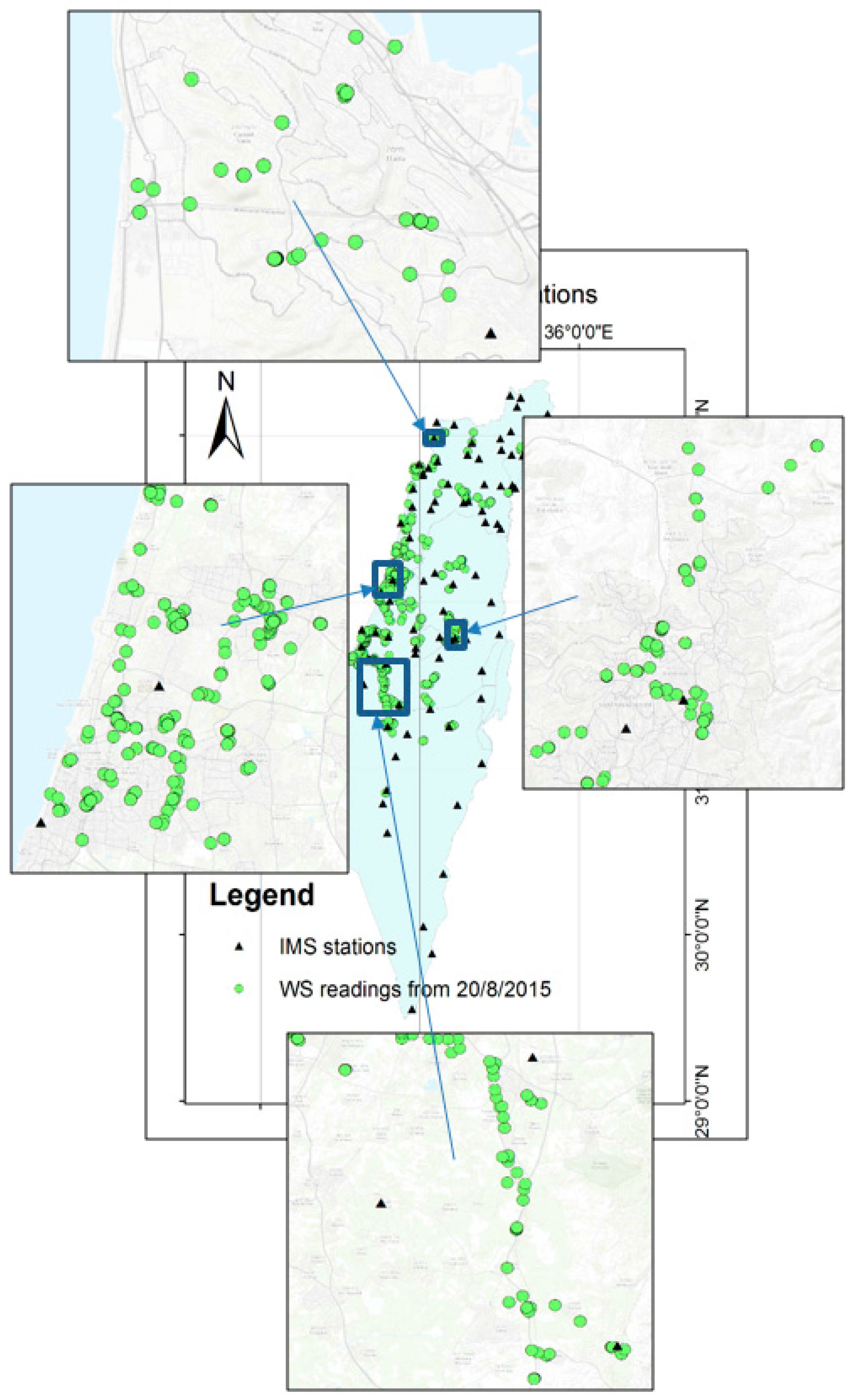

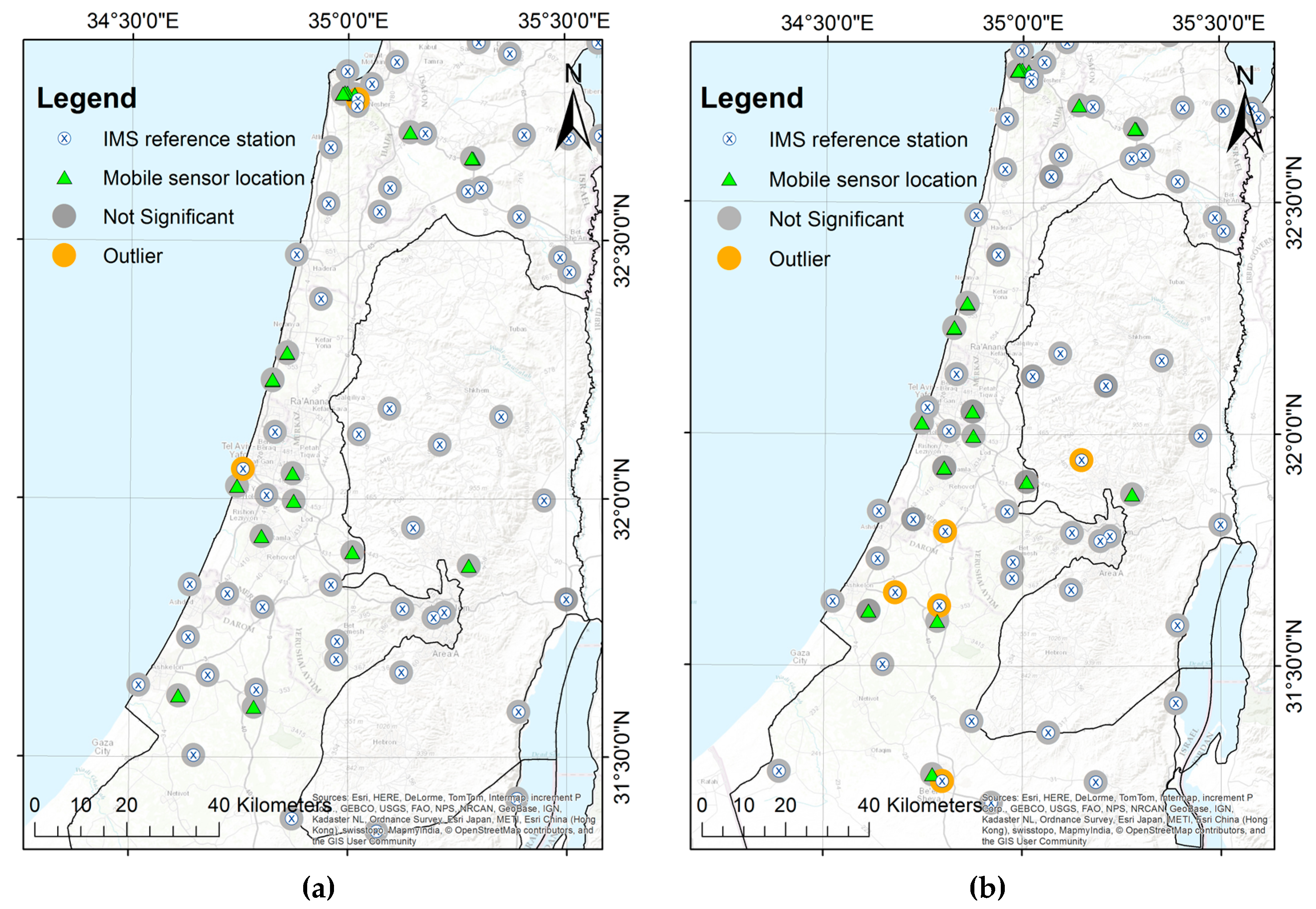

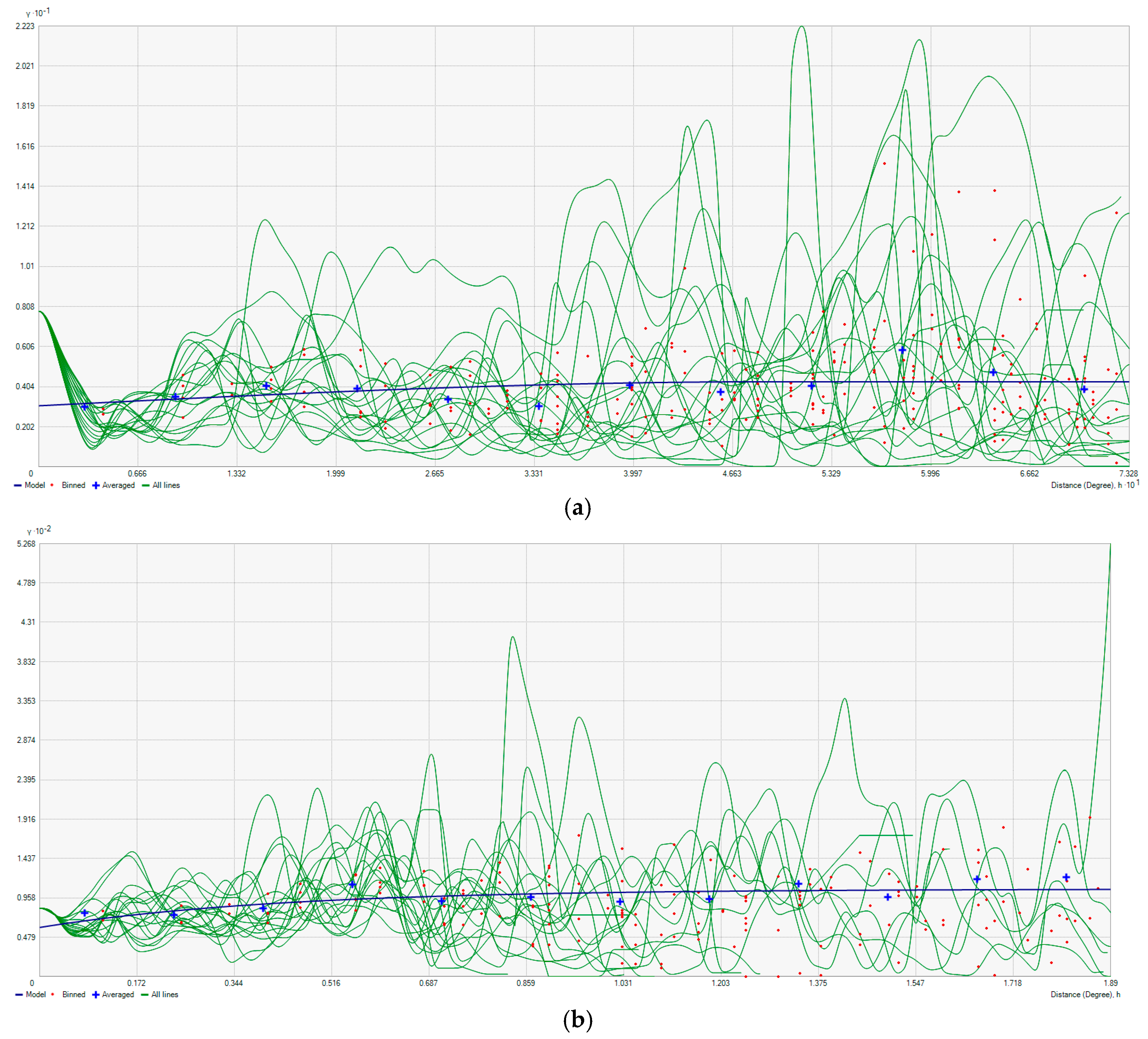

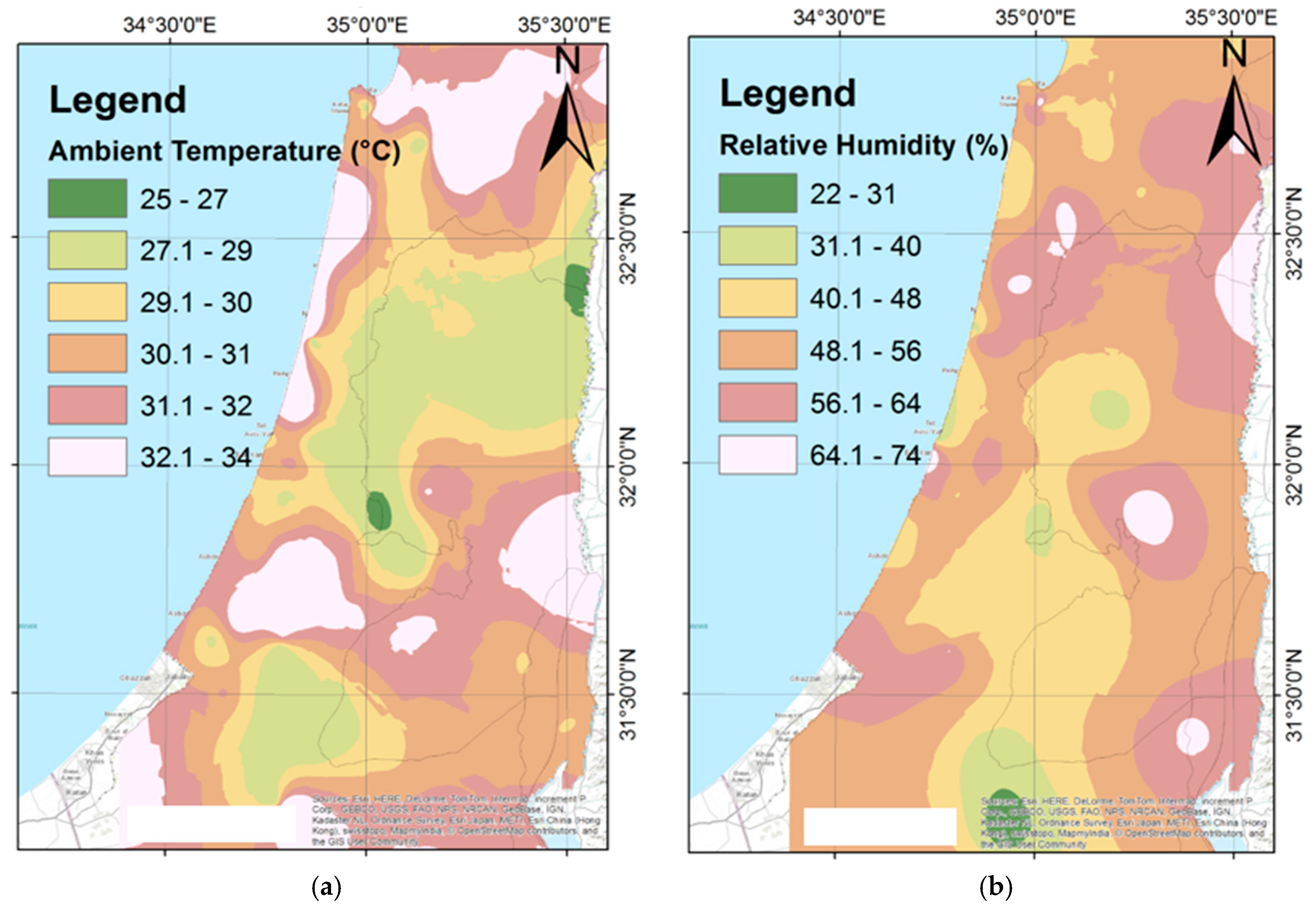

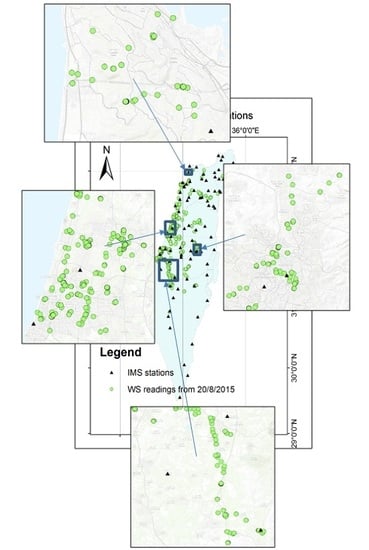

This paper investigates the use of crowdsourced user-generated weather data, namely ambient temperature and relative humidity, for the augmentation and densification of ESNs. The motivation is to facilitate a new source of real-time and accurate sensory data, contributing a practical solution for overcoming the physical limitations associated with ESNs. Two main geostatistical processes are handled here, stemming from issues related to collecting, fusing and disseminating user-generated crowdsourced data: (1) the determination of the accuracy, reliability and supplementary statistical characteristics of the contributed weather data. This is achieved by conducting statistical analysis on observations with respect to external authoritative reference data, coupled with the development of real-time algorithms for identifying stabilized non-biased data, thus eliminating the need for external data sources for data validation in real-time scenarios; and (2) the development of a methodology for densifying ESN observations with user-generated weather data, followed by a quantitative geostatistical evaluation of the overall environmental contribution.
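As a rough illustration of what comparing contributed observations with authoritative reference data can involve, the following minimal sketch computes the mean error (bias) and the root-mean-square error of paired readings. The function name `accuracy_stats` and the sample values are hypothetical; the sketch is not the statistical analysis used in this paper.

```python
import math
from statistics import mean


def accuracy_stats(observed, reference):
    """Return (bias, rmse) of paired observations against reference values.

    Both arguments are equal-length sequences of readings taken at the same
    times, e.g., temperatures in degrees Celsius. Hypothetical helper.
    """
    if len(observed) != len(reference) or not observed:
        raise ValueError("need two non-empty sequences of equal length")
    # Signed error of each contributed reading relative to the reference.
    errors = [o - r for o, r in zip(observed, reference)]
    bias = mean(errors)                            # systematic offset
    rmse = math.sqrt(mean(e * e for e in errors))  # overall error magnitude
    return bias, rmse


# Illustrative values only, not measurements from the experiments.
bias, rmse = accuracy_stats([21.0, 22.0, 23.0], [20.0, 22.0, 22.0])
```

A bias close to zero with a small RMSE would suggest that the contributed readings track the reference closely, whereas a large bias with a small spread would point to a systematic offset that could be calibrated out.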

The idea is that sizable countries, such as the US, with an average coverage area of approximately 3500 km² per single weather station ([4]), or Canada, with approximately 10,000 km² per single weather station, can benefit greatly. To demonstrate our methodology, we evaluate and validate the empirical results with respect to fire weather parameters, used for simulation and assessment of the geosensor weather network of Israel. The methodology and experimental results are presented, showing the effectiveness of the developed and implemented algorithms and the potential of the proposed augmentation and densification process.

2. Related Research

It is widely acknowledged that real-time geospatial data provide the best early warning source of information for damage and disaster management ([5]). Recent studies have shown that the public collaborates in sharing and collecting information (e.g., [6,7]), whereas in cases of emergencies and disasters, the public’s motivation for data collection is even greater (e.g., [8,9]). By implementing crowdsourcing working schemes, supported by modern and reliable physical sensors that are carried by citizens, user-generated data make it possible to reduce the dependency on experts while exploiting the fact that data can be collected or produced via diverse sources. The contribution of VGI to disaster situations in particular is implemented to some extent in various applications (e.g., [10,11]), where the latter showed that 26% of these applications are related to wildfire disaster management.

Weather and meteorological data and information can nowadays be obtained from various non-authoritative sources originating in citizens and communities (e.g., [12,13]). Aggregating these data via crowdsourcing techniques plays a vital role in collecting and assessing reliable data in real time, especially in densely populated areas or regions having sparse meteorological networks ([14]). Since predictions assert that extreme weather events are expected to increase in frequency, duration and magnitude (e.g., [15,16]), dense, high-resolution and real-time observations will be increasingly required to observe the meteorological conditions and weather phenomena needed for immediate detection and assessment.

Various examples of public crowdsourcing for collecting weather data (Citizen Science) exist. For example, the Community Collaborative Rain, Hail and Snow Network (CoCoRaHS) relies on a network of volunteers who measure and map precipitation to provide data for research, natural resource and education applications (e.g., [17,18]). The “Precipitation Identification Near the Ground” (PING) project, maintained by the National Oceanic and Atmospheric Administration (NOAA), is another example, where volunteers issue reports on the type of precipitation that is occurring in real time ([19]). Still, in the majority of such projects, public crowdsourcing involves the use of low-cost and amateur-level sensors deployed and handled by citizens, and not the passive and active use of mobile devices equipped with sensors. Since the number of sensors embedded in mobile devices is increasing, the collected data can be crowdsourced to serve as input for various applications and services, for example OpenSignal and PressureNet (e.g., [20,21,22]). However, physical weather variables can vary over small distances and with changing topographies, such that the reliability of these sensors in accurately capturing environmental conditions is still being investigated.

According to [23], densification of a static geosensor network can be achieved by two means: (1) using hardware devices, hence deploying more sensors (“Hard” densification); or (2) using software solutions without additional hardware (“Soft” densification). One can consider the densification of an ESN as analogous to the densification of a geodetic control network, using different statistical methods ([24]). As in the case of multi-sensor data fusion, these methods are derived from statistics and are probabilistic ([25]), such as Bayesian fusion, Extended and Unscented Kalman filters, and grid-based and Monte Carlo-based methods. The main disadvantage of fusion based on probabilistic methods is the inability to assess unknown conditions; hence they are less appropriate for disaster management and assessment situations, which can be characterized by anomalies in environmental conditions.
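To make the idea of probabilistic fusion concrete, the sketch below shows the simplest Bayesian case: combining two independent Gaussian estimates of the same quantity (for example, a station temperature and a crowdsourced temperature) by inverse-variance weighting. The function name `fuse_gaussian` and the example variances are assumptions made for illustration; the methods surveyed in the cited works are more elaborate.

```python
def fuse_gaussian(mean_a, var_a, mean_b, var_b):
    """Fuse two independent Gaussian estimates of the same quantity.

    Returns the posterior (mean, variance) obtained by inverse-variance
    weighting. Hypothetical helper for illustration only.
    """
    if var_a <= 0 or var_b <= 0:
        raise ValueError("variances must be positive")
    # The more certain estimate (smaller variance) gets the larger weight.
    weight_a = var_b / (var_a + var_b)
    fused_mean = weight_a * mean_a + (1.0 - weight_a) * mean_b
    # The fused variance is always smaller than either input variance.
    fused_var = var_a * var_b / (var_a + var_b)
    return fused_mean, fused_var


# Illustrative values: a precise station reading and a noisier phone reading.
fused_mean, fused_var = fuse_gaussian(20.0, 1.0, 24.0, 3.0)
```

The sketch also hints at the limitation noted above: the result is only as good as the assumed variances, so conditions that fall outside the assumed model are not handled.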

Fusion and densification of data from physical geosensors with data collected using crowdsourcing is an innovative concept (e.g., [25,26]). Related research in this field primarily focuses on improving the coverage of physical geosensors without using crowdsourced data. The problem of fusing data from fixed physical sensors with human (user-generated) sensors for the task of data quality improvement (to improve decision making) is referred to as the Symbiotic Data Fusion and Processing (SDFP) problem [1]. The authors established a crowdsourcing support system for disaster surveillance, suggesting a Centralized Decision Fusion (CDF) procedure for the platform, based on stochastic detection and estimation theory expressed in terms of binary hypothesis tests, using both value fusion and decision fusion. Another example is Social Fusion ([27]), a platform for fusing data from different sources and types (e.g., mobile data sensors, social networks and static network sensors), with the objective of creating context-aware applications. Fusion is done using a set of classifiers to extract meaningful contextual inferences from the data, while separating the data collection mechanism from the classification phase.
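The distinction between value fusion and decision fusion can be illustrated with a deliberately simplified sketch. Here value fusion averages the raw readings and applies a single threshold test, whereas decision fusion lets every sensor apply the test locally and then takes a majority vote. The function names, the threshold and the readings are hypothetical, and the simple rules stand in for the stochastic detection procedure of the cited system, which is not reproduced here.

```python
def value_fusion(readings, threshold):
    """Average the raw readings, then make one binary decision."""
    return sum(readings) / len(readings) > threshold


def decision_fusion(readings, threshold):
    """Let each sensor decide locally, then take a strict majority vote."""
    votes = sum(1 for r in readings if r > threshold)
    return votes > len(readings) / 2


# Illustrative readings: two ordinary values and one extreme value.
readings = [30.0, 31.0, 60.0]
alarm_by_value = value_fusion(readings, threshold=35.0)
alarm_by_decision = decision_fusion(readings, threshold=35.0)
```

With these values the two schemes disagree: the single extreme reading pulls the average above the threshold, while only one of three sensors votes for an alarm. This is one reason a platform may want both kinds of fusion available.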

6. Conclusions and Future Work

The concept of using crowdsourced user-generated weather sensor data from mobile devices for the augmentation of static geosensor weather networks was presented, accompanied by a developed methodology and tailored functionalities. Experiments made with the SG4 smartphone showed that, with the accuracies obtained, the collected data can be considered for a variety of applications. Certain issues and automatic procedures were addressed to guarantee the overall reliability, namely stabilization identification and geostatistical analysis, enabling real-time data collection without the need for reference data. The research showed that, with proper handling, the complementary crowdsourced user-generated data can be considered for the purpose of augmentation. Hypothesis tests showed statistically that user-generated weather data can be considered an integral part of the authoritative weather network, correlating with surrounding observations locally and globally.
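One simple way to think about stabilization identification is as a sliding-window test on the stream of readings: the sensor is treated as stabilized once the spread of the most recent readings falls within a tolerance. The sketch below follows that assumption; the function name `first_stable_index`, the window size and the tolerance are hypothetical, and this is not necessarily the algorithm developed in this research.

```python
def first_stable_index(readings, window=5, tolerance=0.2):
    """Return the start index of the first stable window, or None.

    A window is considered stable when the difference between its largest
    and smallest reading does not exceed `tolerance`. Hypothetical rule.
    """
    for end in range(window, len(readings) + 1):
        recent = readings[end - window:end]
        if max(recent) - min(recent) <= tolerance:
            return end - window
    return None  # the readings never settled within the tolerance


# Illustrative temperature stream that settles after an initial drift.
stream = [30.0, 27.0, 25.0, 24.0, 23.6, 23.5, 23.5, 23.4, 23.5]
start = first_stable_index(stream, window=3, tolerance=0.2)
```

Because the test uses only the device's own readings, it can run in real time without reference data, which is the property emphasized above.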

Future work will investigate the use of larger volumes of data collected in field experiments and communication protocols for observations in real time, together with an assessment of the contribution of user-generated data to actual systems. Work on the densification process is planned, taking into account additional factors, such as observation weights, network structure, and existing topography. Other physical sensory data, such as pressure, will also be investigated; since in Israel only 10 IMS stations are equipped with pressure sensors, user-generated data will have a higher influence and contribution.

In conclusion, the results of this research are positive, showing that sensors embedded in modern mobile devices can be used to collect weather data via a crowdsourcing process to augment static geosensor weather networks, providing more observations of weather parameters for network densification. It is believed that countries and regions with sparsely distributed static geosensor networks can benefit from these working methodologies, and that in the future, together with technological and communication developments, real-time user-generated weather data will be considered as reliable as authoritative ESN data.