Attribute Learning for SAR Image Classification

Abstract

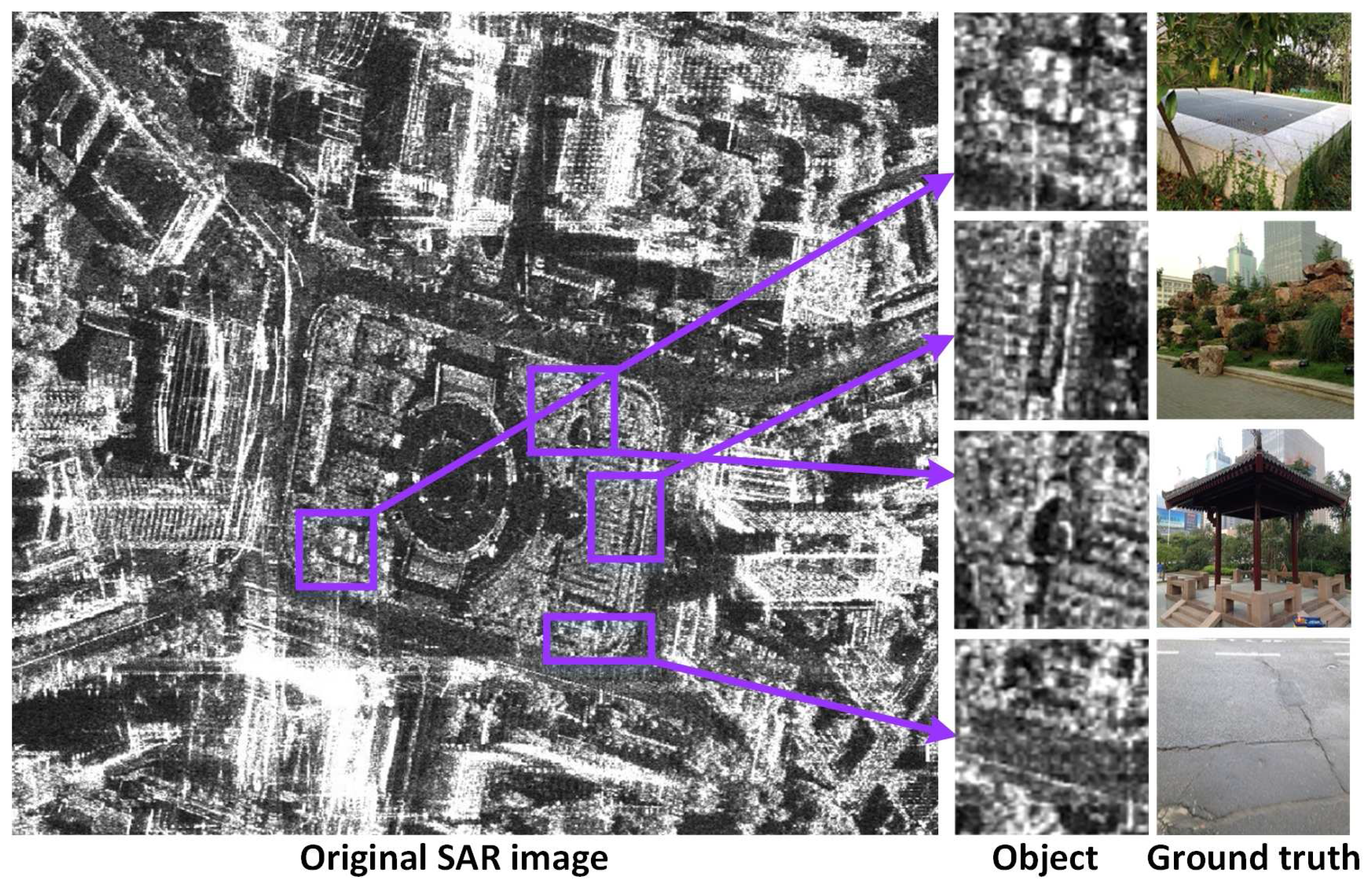

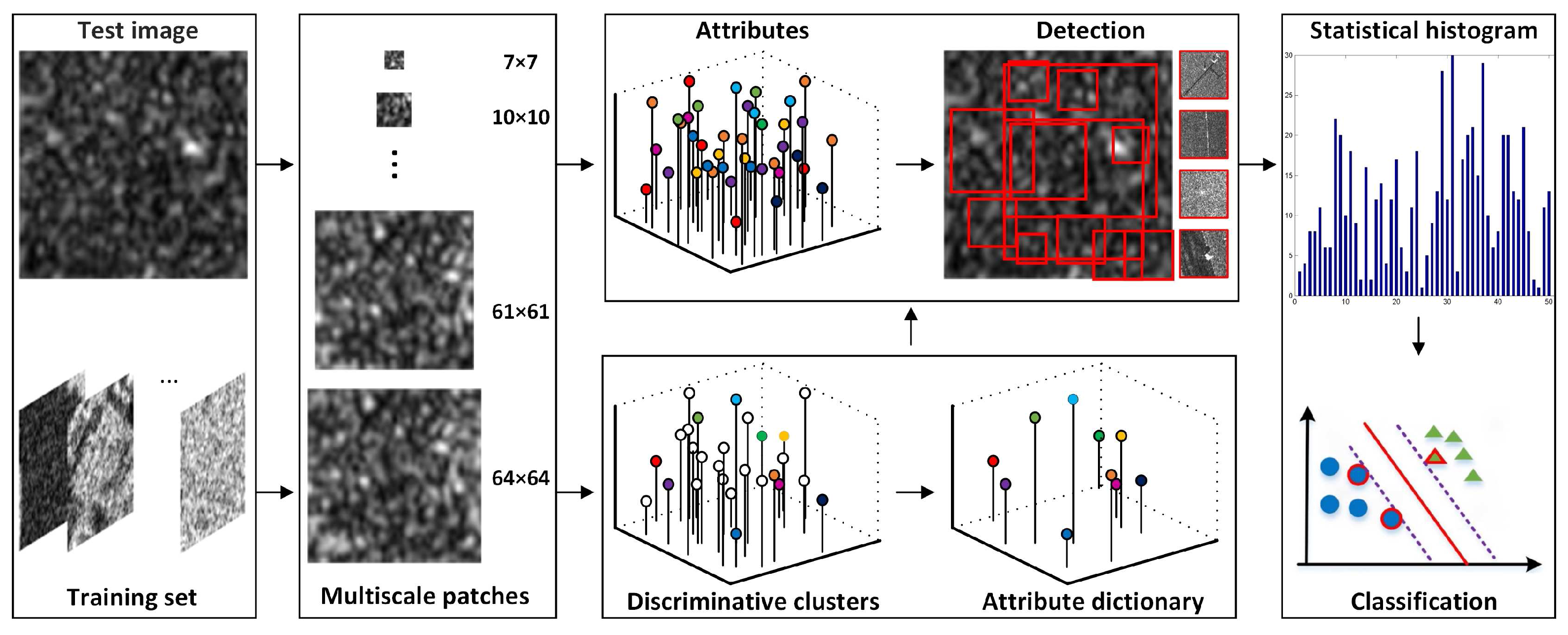

1. Introduction

2. Attribute Learning

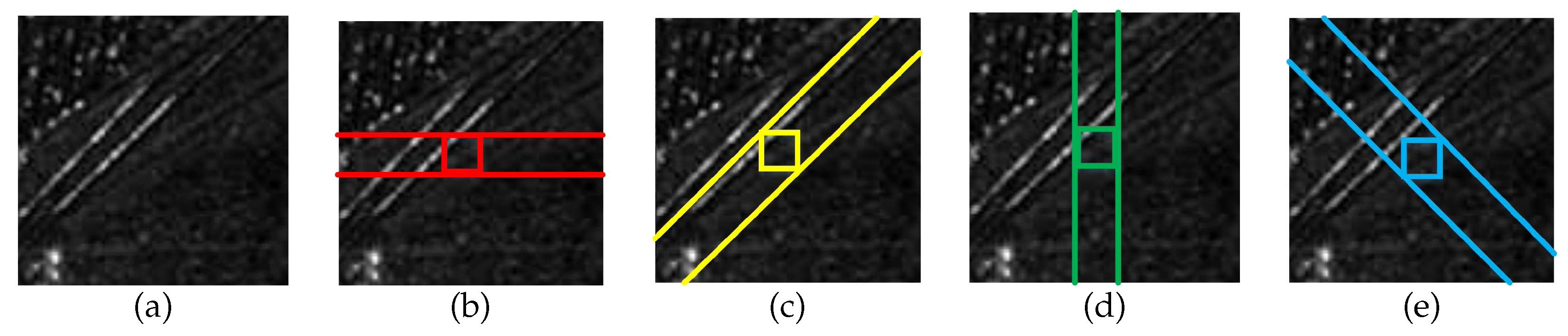

2.1. Low-Level Feature Extraction

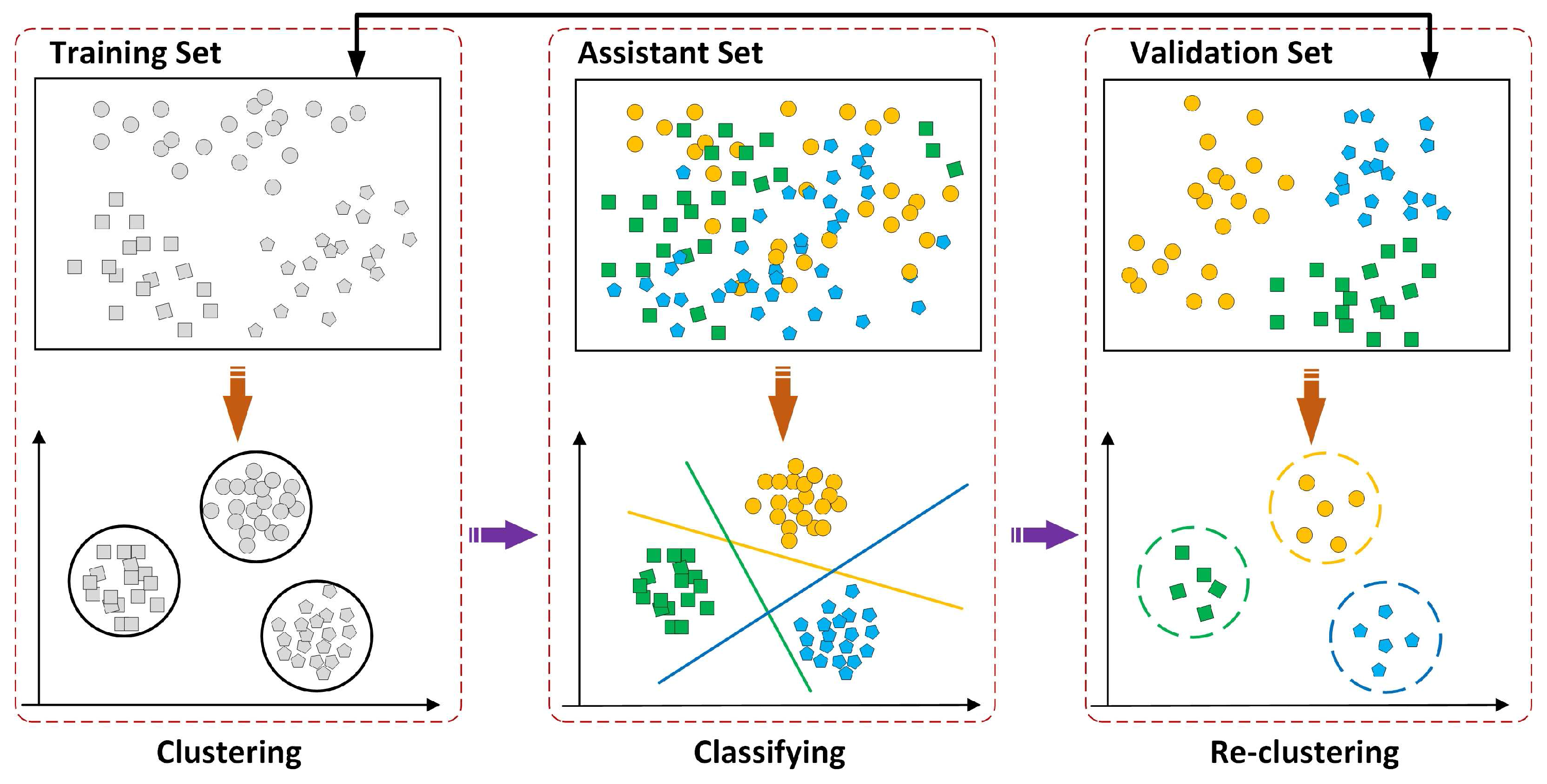

2.2. Attribute Learning

| Algorithm 1 Attribute learning |
|---|
| Input: Training set , validation set , assistant set |
| Output: Attributes |
| Step |
| 1: while not converged do |
| 2: Exchange: and |
| 3: Cluster on , cluster center , remove cluster centers with fewer than 3 members |
| 4: Train corresponding classifiers on with positive examples from step 3 |
| 5: Classify on , top 5 members are sorted out for each new cluster |
| 6: Swap |
| 7: Repeat steps 3 to 5 |
| 8: if members are not changed in each cluster center |
| 9: end while |
| 10: return Attributes |
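The loop in Algorithm 1 can be sketched in Python as follows. This is a minimal illustration under several assumptions, not the authors' implementation: the assistant set is assumed to supply the negative examples, a simple mean-difference linear scorer stands in for whatever classifier is trained in step 4, and the names `learn_attributes`, `train_scorer`, `kmeans`, `k`, and `max_rounds` are hypothetical.

```python
import random

def mean(vectors):
    """Component-wise mean of a non-empty list of equal-length vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]

def sqdist(a, b):
    """Squared Euclidean distance between two vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def kmeans(points, k, iters=20, seed=0):
    """Plain Lloyd k-means; returns one list of point indices per cluster."""
    rng = random.Random(seed)
    centers = [points[i] for i in rng.sample(range(len(points)), k)]
    groups = [[] for _ in range(k)]
    for _ in range(iters):
        groups = [[] for _ in range(k)]
        for i, p in enumerate(points):
            nearest = min(range(k), key=lambda c: sqdist(p, centers[c]))
            groups[nearest].append(i)
        # An empty cluster keeps its previous center.
        centers = [mean([points[i] for i in g]) if g else centers[c]
                   for c, g in enumerate(groups)]
    return groups

def train_scorer(positives, negatives):
    """Stand-in classifier (assumption): project onto mean(pos) - mean(neg)."""
    mp, mn = mean(positives), mean(negatives)
    w = [a - b for a, b in zip(mp, mn)]
    bias = -sum(wi * (a + b) / 2 for wi, a, b in zip(w, mp, mn))
    return lambda x: sum(wi * xi for wi, xi in zip(w, x)) + bias

def learn_attributes(train, val, assistant, k=4, min_members=3, top=5,
                     max_rounds=10):
    """Alternate between two sets until cluster membership stops changing."""
    sets = [train, val]
    cur = 0  # index of the set that currently defines the clusters
    # Step 3: cluster, then drop clusters with fewer than `min_members`.
    clusters = [g for g in kmeans(sets[cur], k) if len(g) >= min_members]
    history = {}  # last membership observed on each set
    for _ in range(max_rounds):
        other = 1 - cur
        new_clusters = []
        for members in clusters:
            # Step 4: one scorer per cluster, cluster members as positives.
            scorer = train_scorer([sets[cur][i] for i in members], assistant)
            # Step 5: keep the `top` highest-scoring items of the other set.
            ranked = sorted(range(len(sets[other])),
                            key=lambda i: scorer(sets[other][i]), reverse=True)
            new_clusters.append(sorted(ranked[:top]))
        # Step 6: swap the roles of the two sets.
        clusters, cur = new_clusters, other
        # Step 8: stop once membership on this set repeats.
        if history.get(cur) == clusters:
            break
        history[cur] = clusters
    return [[sets[cur][i] for i in c] for c in clusters]
```

Because the two sets swap roles every round, the sketch compares membership with the last result obtained on the same set rather than with the immediately preceding round.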

2.3. Attribute Dictionary Construction

3. Classification with Attributes

4. Experiment

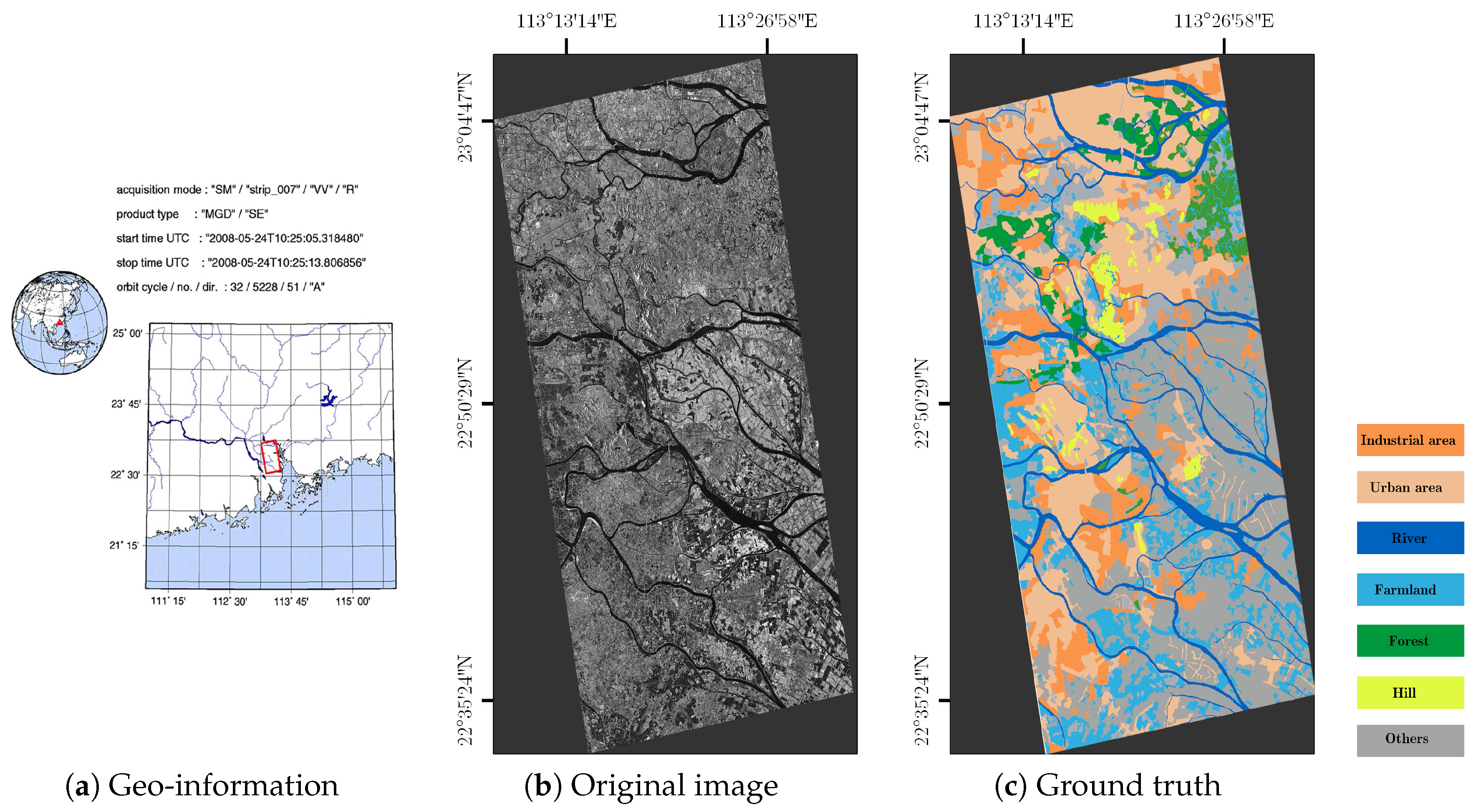

4.1. Data Set

4.2. Parameter Setting

4.3. Results and Analysis

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

| Parameter Index | Setting |
|---|---|
| patch size | from pixels to global size with 3 pixels shift |
| stride | 3 pixels |
| number of sampled patches | n |
| initial cluster center | |
| members forming final cluster center | |
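The stride setting above can be illustrated with a short sliding-window sketch. It assumes square patches taken from a single-channel image stored as a list of rows; the function name `extract_patches` and the patch size used in the example are hypothetical, since the table does not preserve the starting patch size.

```python
def extract_patches(image, patch_size, stride=3):
    """Collect square patches by sliding a window with the given stride."""
    rows, cols = len(image), len(image[0])
    patches = []
    for r in range(0, rows - patch_size + 1, stride):
        for c in range(0, cols - patch_size + 1, stride):
            # Slice `patch_size` rows, then `patch_size` columns of each row.
            patch = [row[c:c + patch_size] for row in image[r:r + patch_size]]
            patches.append(patch)
    return patches

# Hypothetical 10 x 10 image whose pixel value encodes its position.
image = [[10 * r + c for c in range(10)] for r in range(10)]
patches = extract_patches(image, patch_size=4, stride=3)
```

With a 10 x 10 image, a 4-pixel patch, and a 3-pixel stride, the window starts at offsets 0, 3, and 6 along each axis, which gives 9 patches.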

| Class/Method | GLCM | Gabor | GMRF | PFST | BoW-MV | AL |
|---|---|---|---|---|---|---|
| Forest | 78.33 | 53.33 | 86.67 | 79.00 | 80.12 | 92.37 |
| Hill | 78.33 | 28.33 | 68.33 | 81.00 | 39.13 | 81.45 |
| Industrial area | 55.00 | 50.00 | 46.67 | 63.00 | 59.01 | 78.66 |
| Farmland | 90.00 | 80.00 | 98.33 | 99.00 | 83.23 | 96.34 |
| River | 100.00 | 95.00 | 100.00 | 79.00 | 92.55 | 98.60 |
| Urban area | 70.00 | 30.00 | 68.33 | 100.00 | 75.78 | 92.66 |
| Others | 61.67 | 68.33 | 71.67 | 77.00 | 72.67 | 72.88 |
| A.A. | 76.19 | 57.86 | 77.14 | 82.57 | 72.61 | 89.27 |
| Kappa | 0.72 | 0.51 | 0.73 | 0.80 | 0.67 | 0.86 |
| Kappa C.I. | [0.67, 0.77] | [0.45, 0.56] | [0.69, 0.78] | [0.79, 0.80] | [0.66, 0.67] | [0.83, 0.89] |
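For readers unfamiliar with the summary rows, the sketch below shows how per-class accuracy and Cohen's kappa are commonly computed from a confusion matrix. The matrix is hypothetical and is not taken from the experiments reported here; the function names are illustrative only.

```python
def per_class_accuracy(cm):
    """Fraction of each true class (row) that was labeled correctly."""
    return [row[i] / sum(row) for i, row in enumerate(cm)]

def cohen_kappa(cm):
    """Cohen's kappa: agreement beyond what chance alone would produce."""
    total = sum(sum(row) for row in cm)
    observed = sum(cm[i][i] for i in range(len(cm))) / total
    col_sums = [sum(col) for col in zip(*cm)]
    # Chance agreement from the row (true) and column (predicted) marginals.
    expected = sum(sum(row) * col for row, col in zip(cm, col_sums)) / total ** 2
    return (observed - expected) / (1 - expected)

# Hypothetical two-class confusion matrix: rows are true labels,
# columns are predicted labels.
cm = [[50, 10],
      [5, 35]]
accuracies = per_class_accuracy(cm)
average_accuracy = sum(accuracies) / len(accuracies)
kappa = cohen_kappa(cm)
```

In this example the observed agreement is 0.85 and the chance agreement is 0.51, so kappa is 0.34 / 0.49, roughly 0.69.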

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

He, C.; Liu, X.; Kang, C.; Chen, D.; Liao, M. Attribute Learning for SAR Image Classification. ISPRS Int. J. Geo-Inf. 2017, 6, 111. https://doi.org/10.3390/ijgi6040111
