1. Introduction

Distinguishing Witness Accounts (WA) of events from social media is of significant interest for applications such as emergency services, marketing and journalism [

1]. WA can provide relevant and timely information about an unfolding crisis contributing to improved situation awareness for organizations responsible for response and relief efforts [

2]. They can be analyzed for feedback and sentiment related to consumer products or harvested to create event summaries [

3]. With their widespread popularity, social networks are a valuable potential source for identifying witnesses [

4]. For the purpose of this research, a WA is a micro-blog that contains a direct observation of the event, which may be expressed with text or linked image [

5]. Once a WA is identified, the inference that the micro-blogger is a Witness posting from the event, that they are on-the-ground (OTG), is enabled [

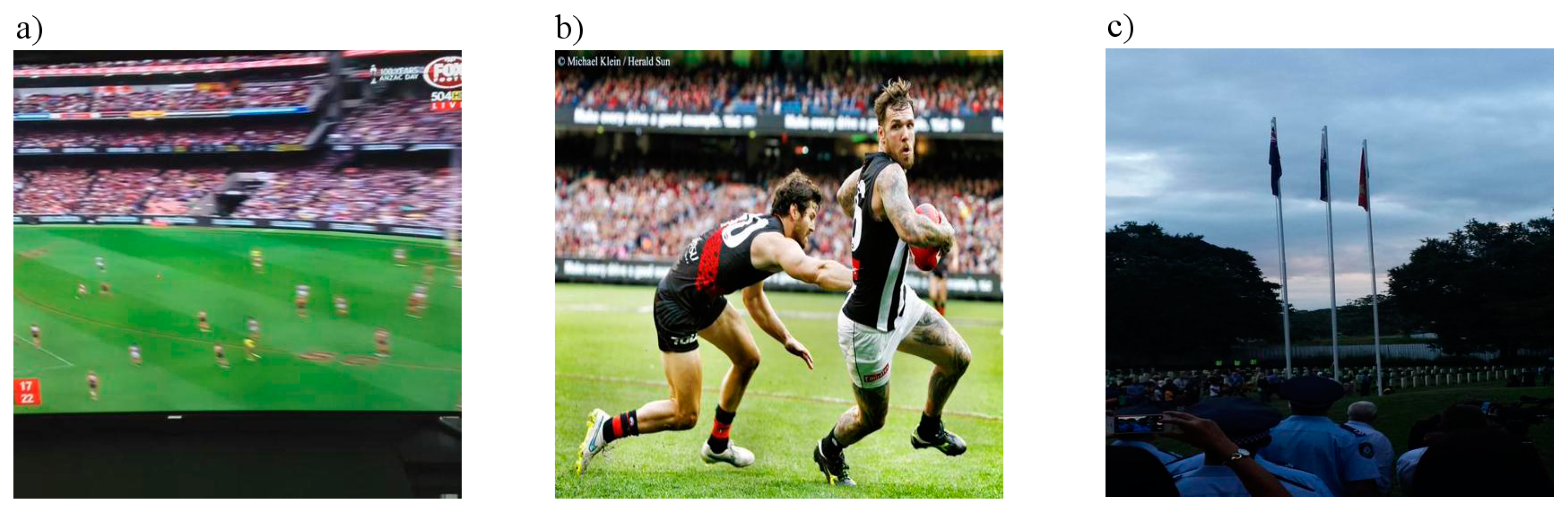

5]. For example, a micro-blogger at a football match linking to an image of the pre-game banner (see

Figure 1) is considered to have posted a WA from OTG.

The human practice of recording events by photography long pre-dates social media. However, since their introduction, social media platforms have been rapidly adopted as an information source. For example, journalists utilize social media such as Twitter and Instagram to identify potential witnesses to news-worthy stories [6], and seek permission to publish citizens' posts [7]. Images have numerous advantages for communicating observations of events: their information content is considered language independent [8], and they have been described as less subjective than text [9]. In the context of social media, linked images have been suggested as a feature for filtering relevant content for crisis events [10]. This relevance was further qualified for four case studies described by Truelove et al. [11], with more than 50% of WA linking to images containing observations of the event.

A primary motivation to identify features that can distinguish micro-blogs posted from an event is that only a fraction contain geotags [13]. Even when present, geotags can be unreliable for interpreting the location of the linked image [14]. For example, if a micro-blogger posts an image of the event while journeying home on the train at its conclusion, the geotag will reflect this posting location. Recent research highlights the interest in distinguishing witnesses [6,15] or micro-blogs posted from a region impacted by an event [16]. These studies have focused on the text content of micro-blogs, whereas this paper is focused on the image content linked to micro-blogs. Identifying image-based evidence of witnessing in addition to text-based evidence can enable testing whether in combination they corroborate the categorization of a WA or are in conflict [1], and can significantly increase the number of witnesses identified from geotags or text alone [1].

Although a large number of micro-blogs are posted to social media such as Twitter, the number of WA related to an event will be a small fraction of the total posts, diluted even further for events with national or global reach [4,17]. Additionally, images crowdsourced from social media such as Twitter are stripped of meta-data [18]. These factors indicate a need for an automated procedure for filtering relevant content that utilizes visual features derived directly from the image.

In recent years, machine learning methods have shown great promise in scene understanding and image category classification. In particular, the bag-of-words method for image representation has shown interesting results in image category classification [19,20,21]. In this method the algorithm, rather than a human analyst, determines which visual features are valuable for the classification. This is an expected strength because of the diversity of images that result from crowdsourcing efforts. Image diversity is predicted for many reasons: the subject of crowdsourced images will change as the event progresses, the distance and perspective from the subject will vary, and numerous lens types will be used.

The primary goal motivating the selection of the case study events, Australian Football League (AFL) games, was the need to study events that were broadcast live. Such events test inferences made in previous case studies [5,11], that micro-bloggers are OTG when they post observations of an event. That micro-bloggers can be watching, arguably witnessing, the event from some other place via a live broadcast introduces complexity to the problem of distinguishing WA from OTG [1]. A further motivation is that sporting events are a focus for a number of crowdsourcing applications, such as the creation of event summaries [3,22,23], and have been identified as one of the most popular social search topics for regular citizens [24]. Additionally, not all crisis events for which authorities need to prepare have natural causes; for example, scheduled mass gatherings have previously been the target of terrorist acts.

The contribution of this paper is to evaluate whether an established procedure for image categorization, the bag-of-words method, can categorize images which are WA for the case study events, describing the advancements from preliminary results reported by Truelove, Vasardani and Winter [1]. This paper questions whether the bag-of-words representation can be learned from a small set of manually labelled images to reliably categorize a larger set of images. It also questions whether a bag-of-words representation learned from images of one event can be transferred to another instance of the same event type. This tests a potential weakness of the method, namely limited transferability of training models to other event instances. Further contributions include analysis of micro-blogger posting behavior, enabling recommendations for collecting more relevant samples. Finally, the paper discusses whether the method proposed for this event type could be generically adopted for other event types. However, experimentation to test the applicability to dissimilar event types is left to future work.

The paper proceeds with a review of related work, including identifying WA in social media and image category classification. Section 3 presents the methodology adopted, including a description of the bag-of-words method. Results are presented in Section 4, followed by discussion and conclusions.

3. Materials and Methods

The fundamental objective of this research is to evaluate the bag-of-words method for automatically categorizing images which are WA posted to social media. Another objective is to evaluate transfer learning, in which a model devised from a training dataset is used to automatically categorize WA in other datasets, both the off-hash dataset for the same event and a new similar event. Two Twitter case studies, AFL matches played five months apart at the Melbourne Cricket Ground (MCG), were collected to provide the data for the experiments. The first event was used to evaluate the bag-of-words method and to investigate whether a satisfactory classification can be achieved by learning the classifier from a small subset of the samples. The second event was used to test whether a bag-of-words model learned from one event can be transferred to another similar event. Each image was manually analyzed and categorized by human annotators to create the training datasets and to support detailed characterization of the categories of images contributing to misclassification. Witness posting behavior was analyzed for those images identified as WA, in comparison to others in the dataset. This section details the methodology implemented to complete each experiment, including the creation of the case study datasets.

3.1. Events Description

A summary description of the case study events is presented in Table 1. The ANZAC Day (an Australian national holiday [51]) match typically records the highest attendance outside the Final Series. The Grand Final is the culmination of the season, celebrated and viewed by fans regardless of which club they support.

3.2. Data Collection

A number of considerations motivated the data collection methodology, including Twitter API rate limiting [55] and the reduction in the completeness of search results over time [56]. Therefore, any prior knowledge is leveraged to target the most relevant samples in a short space of time, including the fundamental inferential prerequisites of WA, which are that they contain original content, are on the topic of the event, and are posted by individuals [5]. Image content meeting these criteria is typically posted to Twitter or other social media such as Instagram. Additionally, previous case studies suggest witnesses do not limit their posting about the event to the duration of the event [11].

The datasets for the two case studies were collected in stages. The first stage was collecting a sample of on-topic micro-blogs with hashtags promoted by the AFL. The Twitter Streaming API [57] and Twitter Data Analytics software tools [58] were utilized to complete this task in real time. Within one hour of the event finishing, the sample of on-topic micro-blogs was used to establish a sample of micro-bloggers who had likely posted original content. For the ANZAC Day match the filter for micro-bloggers was set at one original micro-blog posted between 7:00 and 18:30. More restrictive sampling was required for the Grand Final match, a much larger dataset: micro-bloggers were restricted to those identified as having posted two original micro-blogs within a more constrained temporal period, from 11:00 until 18:00. A list of pre-determined non-individuals was additionally removed from the micro-blogger samples at this stage, including media outlets, gambling companies, the AFL clubs and administration, and the venue. All these non-individuals can be identified from their Twitter application user handles.
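The micro-blogger sampling rule described above can be illustrated with a minimal sketch. It assumes each collected micro-blog has already been reduced to a record with a user handle, a timestamp and a flag for original content; these field names, the handles and the placeholder date are hypothetical, and the threshold is read as "at least" the stated number of original micro-blogs.

```python
from collections import Counter
from datetime import datetime


def sample_microbloggers(posts, start, end, min_original, excluded_handles):
    """Return handles with at least `min_original` original posts in [start, end]."""
    counts = Counter(
        post["handle"]
        for post in posts
        if post["is_original"] and start <= post["time"] <= end
    )
    return {
        handle
        for handle, count in counts.items()
        if count >= min_original and handle not in excluded_handles
    }


def at(hour, minute):
    """Time of day on an arbitrary placeholder date (not the real match date)."""
    return datetime(2000, 1, 1, hour, minute)


# Hypothetical records; field names and handles are illustrative only.
posts = [
    {"handle": "fan_a", "time": at(12, 15), "is_original": True},
    {"handle": "fan_a", "time": at(14, 0), "is_original": True},
    {"handle": "fan_b", "time": at(12, 30), "is_original": True},
    {"handle": "fan_c", "time": at(19, 0), "is_original": True},   # outside both windows
    {"handle": "fan_d", "time": at(13, 0), "is_original": False},  # copied content
    {"handle": "media_outlet", "time": at(10, 0), "is_original": True},
]
excluded = {"media_outlet"}  # stands in for the pre-determined non-individuals

# One original post between 7:00 and 18:30 (the ANZAC Day rule).
one_post = sample_microbloggers(posts, at(7, 0), at(18, 30), 1, excluded)
# Two original posts between 11:00 and 18:00 (the Grand Final rule).
two_posts = sample_microbloggers(posts, at(11, 0), at(18, 0), 2, excluded)
```

In this toy sample the stricter rule keeps only the micro-blogger with two qualifying posts, which shows how the choice of threshold can shrink the set of candidate witnesses.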

Using the micro-bloggers identified in the previous stage, the Twitter Search API [56] and Twitter Data Analytics software tools [58] were used to collect all posting history for the day of the event. All micro-blogs identifiable as copied content, including those using the retweet convention, were removed from the sample, as they cannot support inferences as to the location of the micro-blogger. The remaining micro-blogs were separated: those containing the promoted hashtag became the on-hash datasets, and the remainder the off-hash datasets for each event. Differing temporal constraints were applied to these datasets, based on when the gates opened at the venue for the different matches. These datasets are summarized in Table 2.
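The separation into on-hash and off-hash datasets can be sketched as follows. This is an illustration only: the record fields, the `RT @` prefix test used as a stand-in for "the retweet convention", and the hashtag `#examplematch` are assumptions, not details taken from the collection software.

```python
def split_by_hashtag(microblogs, promoted_hashtag):
    """Drop retweets, then split the rest into on-hash and off-hash lists."""
    tag = promoted_hashtag.lower()
    on_hash, off_hash = [], []
    for blog in microblogs:
        if blog["is_retweet"] or blog["text"].startswith("RT @"):
            continue  # copied content cannot support location inferences
        hashtags = {
            word.lower().rstrip(".,!?")
            for word in blog["text"].split()
            if word.startswith("#")
        }
        (on_hash if tag in hashtags else off_hash).append(blog)
    return on_hash, off_hash


# Hypothetical micro-blogs; "#examplematch" is a made-up promoted hashtag.
microblogs = [
    {"text": "What a view from the stands #ExampleMatch", "is_retweet": False},
    {"text": "RT @someone: kick off! #examplematch", "is_retweet": False},
    {"text": "Train home is packed", "is_retweet": False},
    {"text": "Great goal #examplematch", "is_retweet": True},
]
on_hash, off_hash = split_by_hashtag(microblogs, "#examplematch")
```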

The final stage is to collect images linked to micro-blogs posted from Twitter or Instagram. Eliminating retweets has the effect of removing a large number of the copied images; however, copies will remain linked from micro-blogs with original text. If the micro-blogger maintains the link to the original image by posting the original URL, this can be identified in Twitter by testing the micro-blog's meta-data. However, if the micro-blogger copies the image and links from their own account, a copy cannot be identified. The reader is reminded that all meta-data for the image itself is stripped by many social networks. In summary, copied image content is removed wherever it can be identified from the micro-blog content and meta-data.

3.3. Training Data Creation

Preliminary annotation of the visual topics in the ADon dataset was completed by a single expert user, a method previously tested with multiple annotators with acceptable agreement [5]. The purpose was to describe the visual topics, and their sample sizes, to identify those estimated to be present in sufficient numbers for the bag-of-words method. The process was to manually inspect and document the dominant visual features in each image, including those that are observations of the event, and determine categories based on these features. Each visual topic category was then analyzed as to whether it provides evidence that supports the inference the micro-blogger was OTG at the event, counter-evidence that supports the inference the micro-blogger was not OTG at the event, or no evidence (NE) [1]. Finally, the visual topic categories that represent WA OTG were decided, and annotation was completed by two independent annotators to create the training data for the binary classification. Each image in the training datasets is categorized into one of two classes, WA OTG or other. Each annotator can be described as an expert in the domain, both having attended AFL matches at the MCG and watched live television broadcasts. During this process, the annotators also checked whether any identical copies of images remained in the dataset following pre-processing.

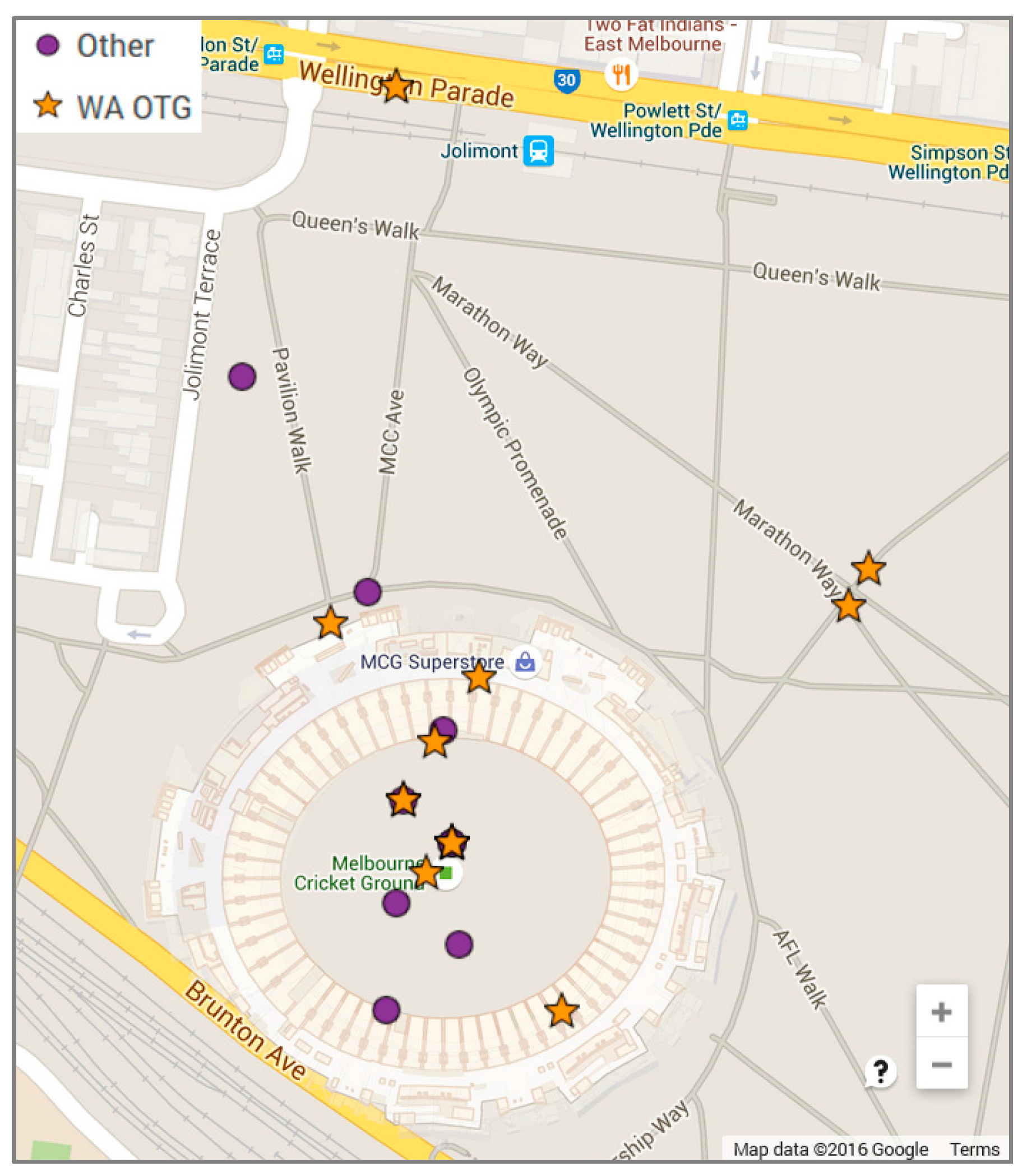

3.4. Posting Behaviour: Temporal and Spatial Analysis

An analysis of posting behavior for the ADon and GFon datasets includes a comparison of when micro-blogs were posted (using the timestamp) with the known schedule of the event. This enabled a temporal categorization of images labelled as WA relative to the progress of the event. Additionally, images linked to micro-blogs with geotags were visualized on a map. Though the number of micro-blogs with geotags was limited, their meaning could be explained in combination with the image's visual topic and temporal category.
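As an illustration of the temporal categorization, the sketch below assigns a micro-blog timestamp to a phase of a scheduled event. The category names, the schedule times and the placeholder date are assumptions made for the example; they are not the categories or times used in the case studies.

```python
from datetime import datetime


def temporal_category(posted, gates_open, match_start, match_end):
    """Place a posting time relative to a known event schedule."""
    if posted < gates_open:
        return "before gates open"
    if posted < match_start:
        return "pre-match"
    if posted <= match_end:
        return "during match"
    return "post-match"


def at(hour, minute):
    """Time of day on an arbitrary placeholder date."""
    return datetime(2000, 1, 1, hour, minute)


# Hypothetical schedule for a single afternoon match.
schedule = {"gates_open": at(12, 0), "match_start": at(14, 30), "match_end": at(17, 0)}
timestamps = [at(11, 0), at(13, 45), at(15, 10), at(18, 20)]
categories = [temporal_category(t, **schedule) for t in timestamps]
```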

3.5. Image Categorization by Bag-of-Words

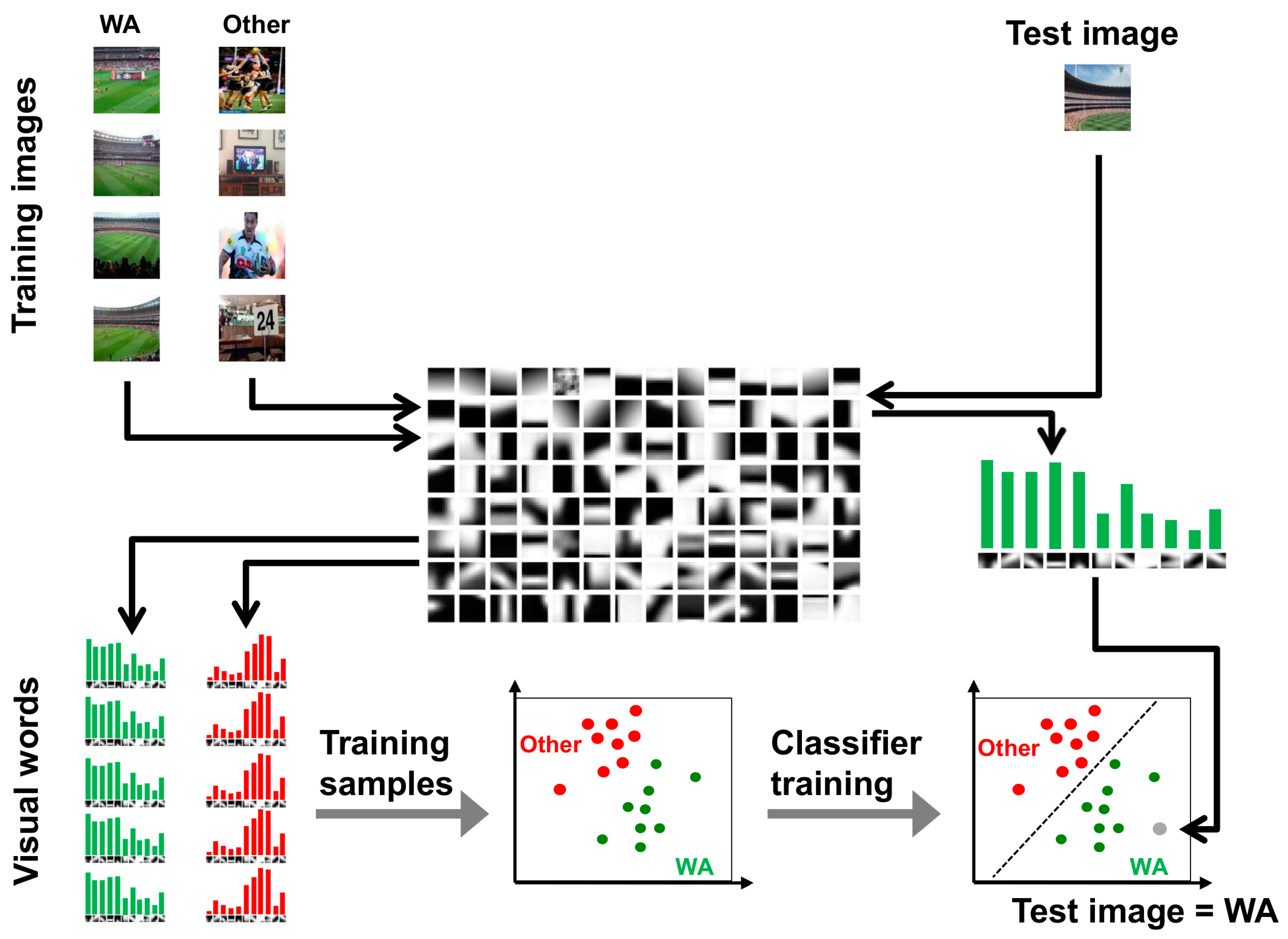

The general workflow of the bag-of-words approach for categorizing WA, presented in Figure 2, consists of the following steps (supported by Matlab software):

Extracting local image features.

Constructing a vocabulary of visual words.

Training a classifier using the vocabulary and a set of training images.

Evaluating the classifier on a set of test images.

3.5.1. Extracting Local Image Features

Local image features describe the characteristics of small image regions. A number of methods are available for detecting and describing local image features. We use Speeded Up Robust Features (SURF) [59] to detect image keypoints and compute their descriptors. An alternative is to define image patches on a regular grid, and compute the SURF descriptor for each patch. This latter approach is more suitable for images that do not have many distinct local features. Each SURF descriptor is a vector of length n describing the magnitude and orientation of gradients in a small neighborhood around the keypoint. For efficiency, in all our experiments we choose n = 64. This empirically configured value is selected to balance distinctiveness against computation time. Larger values of n may result in more distinctive descriptors but will also increase the computation time of feature extraction.
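The regular-grid alternative mentioned above can be sketched as a function that lists patch centres for an image of a given size. The step and patch size are hypothetical parameters chosen for illustration, and the SURF descriptor computation itself is not shown.

```python
def grid_patch_centres(width, height, step, patch_size):
    """Centres of square patches laid out on a regular grid inside the image."""
    half = patch_size // 2
    return [
        (x, y)
        for y in range(half, height - half + 1, step)
        for x in range(half, width - half + 1, step)
    ]


# A 64 x 48 pixel image covered by 16-pixel patches spaced 16 pixels apart.
centres = grid_patch_centres(width=64, height=48, step=16, patch_size=16)
```

Every patch lies fully inside the image, so a descriptor computed at each centre never reads pixels beyond the border.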

3.5.2. Vocabulary Construction

A vocabulary is defined by finding clusters in the 64-dimensional space formed by all local descriptors of all images. The clusters are found by a k-means clustering algorithm, with unsupervised seeding, with a predetermined number of clusters k. Each cluster center is the mean of a number of similar local descriptors, and can be interpreted as a visual word containing local information that may be found in many images.

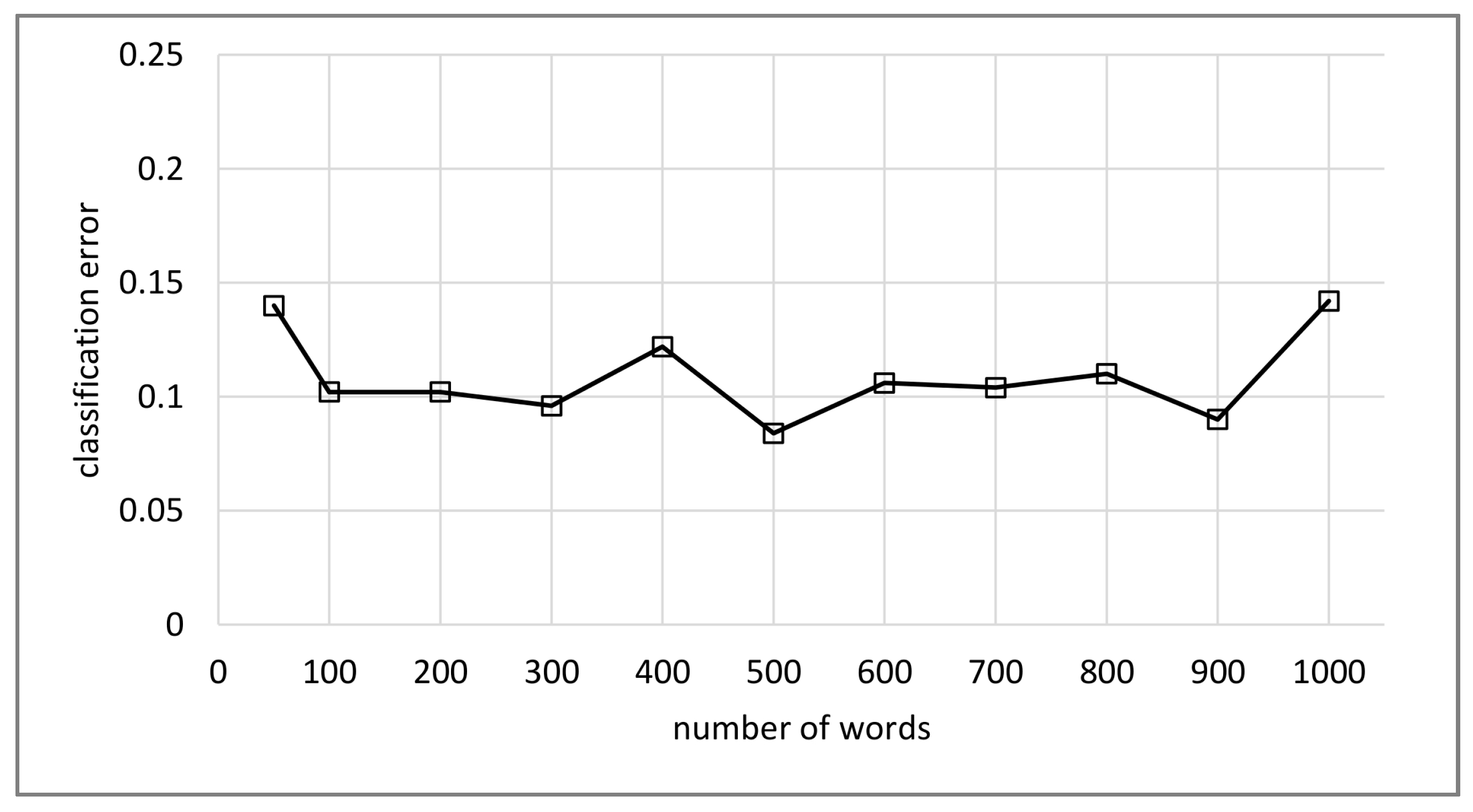

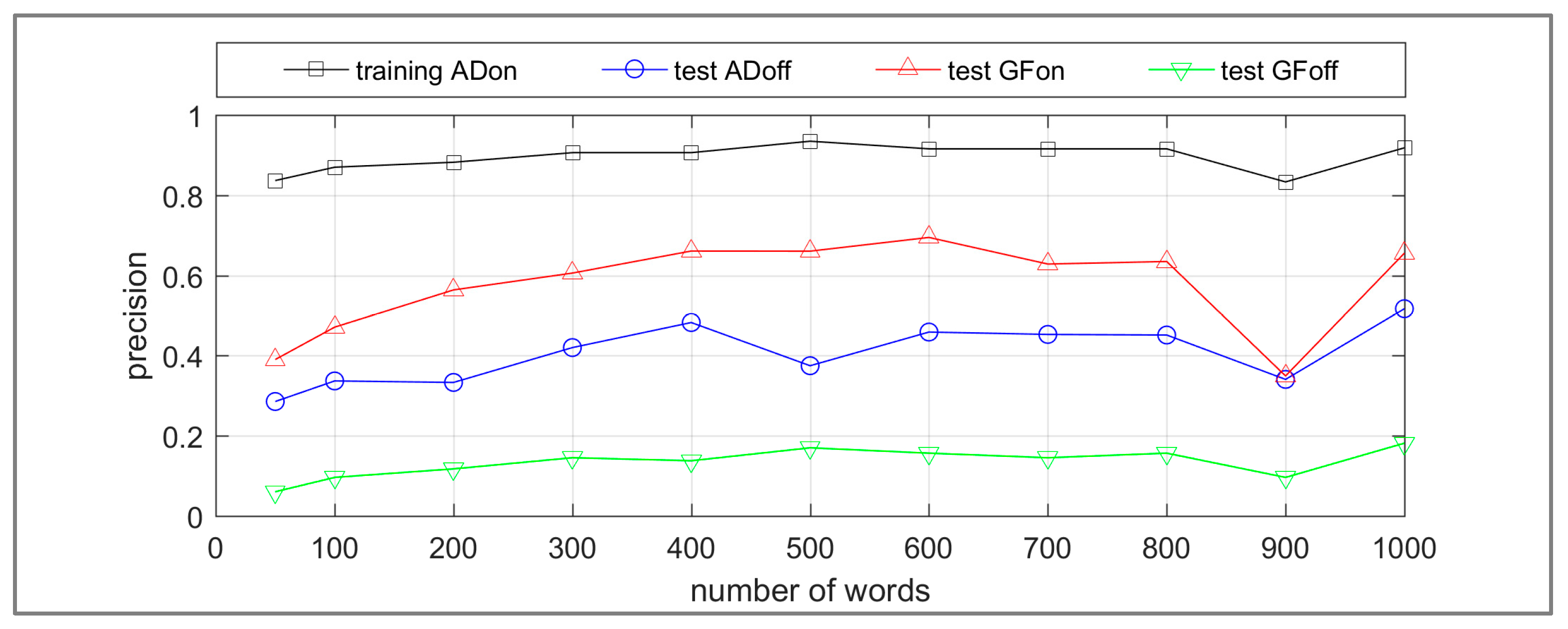

The choice of the number of visual words k in the vocabulary has an influence on the outcome of the image category classification. As mentioned in Section 2, there is no rule for choosing the number of words, and an appropriate choice is often made by evaluating the classification performance against a varying number of words.
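A minimal sketch of vocabulary construction by k-means clustering is given below. It is not the Matlab implementation used in the experiments: it uses two-dimensional toy descriptors instead of 64-dimensional SURF descriptors, and it seeds the cluster centres with evenly spaced descriptors, a simple deterministic choice assumed here for reproducibility.

```python
def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest_word(vocabulary, descriptor):
    """Index of the cluster centre closest to the descriptor."""
    return min(
        range(len(vocabulary)),
        key=lambda i: squared_distance(vocabulary[i], descriptor),
    )


def build_vocabulary(descriptors, k, iterations=20):
    """Cluster descriptors with k-means; each centre is one visual word."""
    step = len(descriptors) // k
    # Assumed seeding: evenly spaced descriptors (not the paper's seeding).
    centres = [list(descriptors[i * step]) for i in range(k)]
    for _ in range(iterations):
        members = [[] for _ in range(k)]
        for descriptor in descriptors:
            members[nearest_word(centres, descriptor)].append(descriptor)
        for i, group in enumerate(members):
            if group:  # keep the previous centre if a cluster is empty
                centres[i] = [sum(column) / len(group) for column in zip(*group)]
    return centres


# Toy descriptors pooled from all images: two tight groups.
descriptors = [(0, 0), (0, 1), (1, 0), (1, 1),
               (10, 10), (10, 11), (11, 10), (11, 11)]
vocabulary = build_vocabulary(descriptors, k=2)
```

With real data the result depends on the seeding and on k, which is why the number of words is usually selected by comparing classification performance across several values.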

3.5.3. Classifier Training and Evaluation

Once the vocabulary is constructed, each image can be represented by a histogram of word frequencies. This is simply done by counting for each cluster the number of member descriptors that are present in the image. The histogram representation results in a compact encoding of the image into a single feature vector of length k.
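The histogram encoding can be sketched in a few lines. The vocabulary and descriptors below are toy two-dimensional values assumed for illustration; in the experiments each descriptor has 64 dimensions and the vocabulary has k words.

```python
def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def encode_image(descriptors, vocabulary):
    """Count, for each visual word, the descriptors of one image assigned to it."""
    histogram = [0] * len(vocabulary)
    for descriptor in descriptors:
        word = min(
            range(len(vocabulary)),
            key=lambda i: squared_distance(vocabulary[i], descriptor),
        )
        histogram[word] += 1
    return histogram


# Toy vocabulary of k = 3 visual words and the descriptors of one image.
vocabulary = [(0.5, 0.5), (10.5, 10.5), (20.0, 20.0)]
image_descriptors = [(0, 1), (1, 1), (10, 10), (0, 0)]
feature_vector = encode_image(image_descriptors, vocabulary)
```

The resulting vector has length k regardless of how many descriptors the image produced, which is what allows images of differing content to be compared by a single classifier.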

With every image represented by a feature vector, a classifier can be learned from a set of labelled images. For the identification of WA we consider two categories of images, either confirming or not confirming that the poster was present at the event. With a set of training images for each category, a binary classifier is trained (a linear SVM is selected as a baseline), and the classification performance is evaluated using a set of labelled test images. The evaluation is based on overall accuracy, defined as the ratio of correctly classified images to the total number of images. Similarly, the classification error is defined as the ratio of misclassified images to the total number of images.
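The two evaluation measures defined above can be computed directly from the true and predicted labels. The labels in this sketch are invented for illustration (1 for WA OTG, 0 for other) and do not correspond to any result in the paper.

```python
def evaluate(true_labels, predicted_labels):
    """Return (overall accuracy, classification error) for a labelled test set."""
    total = len(true_labels)
    correct = sum(t == p for t, p in zip(true_labels, predicted_labels))
    return correct / total, (total - correct) / total


# Hypothetical test set of five images.
true_labels = [1, 1, 0, 0, 0]
predicted_labels = [1, 0, 0, 0, 1]
accuracy, error = evaluate(true_labels, predicted_labels)
```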

In addition to Matlab software, WEKA [60] is utilized for the classification tasks.

3.5.4. Practical Considerations

In essence, the bag-of-words method for identifying WA involves a supervised classification, requiring a human annotator to manually assign labels to a set of training images. In practice, we would like to eliminate or minimize the level of manual interaction. Two scenarios investigated to minimize manual labelling in the bag-of-words framework are the following:

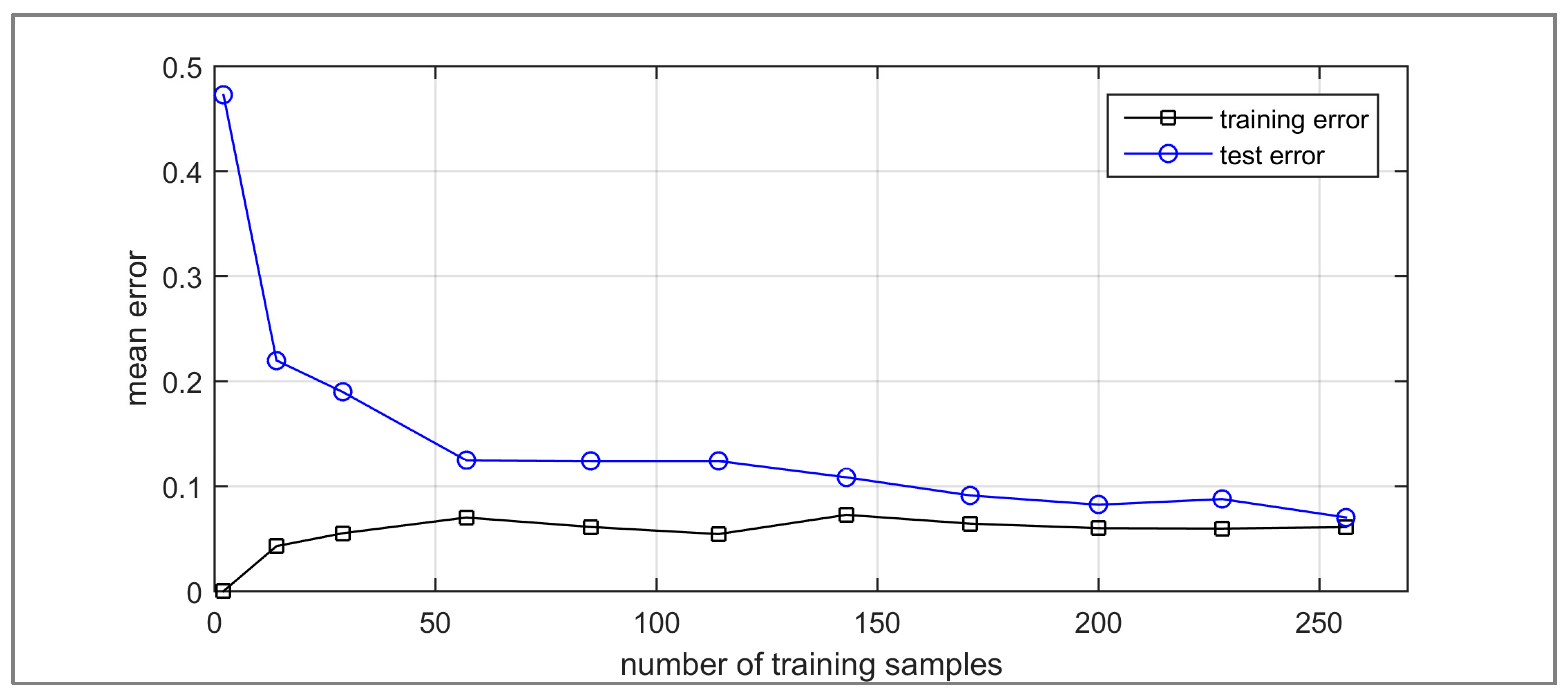

Training a classifier with a small set of labelled images, and applying it to a larger set. This requires finding the minimum number of training images needed to sufficiently train the classifier, which can be achieved through a learning curve analysis [61,62].

Training a classifier with the labelled images of one event, and applying it to the images of other similar events. In machine learning this is usually referred to as transfer learning [63]. A representative approach to transfer learning is adopted in this research.
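The first scenario, a learning curve analysis, can be sketched as follows. To keep the example self-contained it substitutes a nearest-centroid classifier for the linear SVM and uses synthetic two-bin histograms, so both the classifier and the data are stand-ins assumed for illustration. The same structure applies to the second scenario if the training and test sets are drawn from different events.

```python
import random


def train(samples):
    """Nearest-centroid stand-in classifier: one mean vector per class."""
    by_class = {}
    for vector, label in samples:
        by_class.setdefault(label, []).append(vector)
    return {
        label: [sum(column) / len(vectors) for column in zip(*vectors)]
        for label, vectors in by_class.items()
    }


def predict(model, vector):
    return min(
        model,
        key=lambda label: sum((a - b) ** 2 for a, b in zip(model[label], vector)),
    )


def error_rate(model, samples):
    wrong = sum(predict(model, vector) != label for vector, label in samples)
    return wrong / len(samples)


def learning_curve(train_set, test_set, sizes):
    """Training and test error for classifiers trained on the first n samples."""
    curve = []
    for n in sizes:
        subset = train_set[:n]
        model = train(subset)
        curve.append((n, error_rate(model, subset), error_rate(model, test_set)))
    return curve


def make_samples(count, rng):
    """Synthetic, overlapping two-class data; classes alternate so every prefix has both."""
    samples = []
    for i in range(count):
        label = "WA OTG" if i % 2 == 0 else "other"
        centre = (8.0, 2.0) if label == "WA OTG" else (2.0, 8.0)
        samples.append(([c + rng.gauss(0.0, 3.0) for c in centre], label))
    return samples


rng = random.Random(0)
train_set = make_samples(64, rng)
test_set = make_samples(200, rng)
sizes = [2, 4, 8, 16, 32, 64]
curve = learning_curve(train_set, test_set, sizes)
```

Plotting the two error columns against n and looking for the point where they stop changing gives an estimate of the minimum number of labelled images required.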

5. Discussion

This research confirms that only a fraction of micro-bloggers posting about an event can be described as witnesses OTG, and that this imbalance is further compounded for events that are broadcast live through a variety of mediums. The ANZAC Day and Grand Final AFL matches had relatively similar size audiences OTG, but the Grand Final had a significantly greater broadcast audience (see Table 1). It was predicted that the number of WA OTG for the Grand Final would be similar to or exceed that for the match held on ANZAC Day; however, this was not the result, with 54 images detected versus 97 images. It is concluded that this result was unlikely to be due to varying audience characteristics but rather to differences in sampling. For the Grand Final, micro-bloggers were restricted to those who had posted two original micro-blogs rather than one, which was the criterion for the ANZAC Day game. This result suggests that witnesses are situated in the long-tail, and a significant number will only post one original micro-blog about an event they are attending. This finding should be assumed generic to all events. A difference in temporal sampling criteria is also likely to have contributed, with confirmation that witnesses OTG are just as likely to post before the event, during the pre-match entertainment, as during the main attraction. Therefore, it is recommended that the optimal temporal extent to sample for WA OTG is from the earliest time witnesses have access to the venue, including queuing outside, until they have travelled home. For the Grand Final, with extended access to the venue and extended pre- and post-game entertainment, the sampling time did not encompass the full temporal extent now recommended.

Temporal posting behavior was additionally analyzed for varying patterns between WA OTG and other categories, which could be developed as features to assist machine learning. However, it was found that though the volume of micro-blogs categorized as WA OTG varied significantly from other image categories, their temporal posting pattern did not. As the temporal pattern of posting was comparable between both case studies, it is suggested that the posting behavior in these case studies can be assumed for other scheduled event types.

The mapped locations of images with geotags enabled analysis of the relationship between these content sources, despite limited numbers. The analysis demonstrated that not all images located at the MCG were categorized as WA OTG, and that images categorized as WA OTG were posted from locations outside the venue. The implication of these findings for all event research is that humans post about topics beyond their immediate spatial and temporal context, even while participating in events. The geotag, and what witnessing inferences it supports, need careful consideration, and will vary depending on the spatial and temporal extents of the event and its effects. For example, a geotag at the dedicated venue posted during the event is strong evidence supporting the inference that the micro-blogger is a Witness, even if observations are absent. However, for an event affecting a large geographic area, the inference of witnessing from a geotag is less certain.

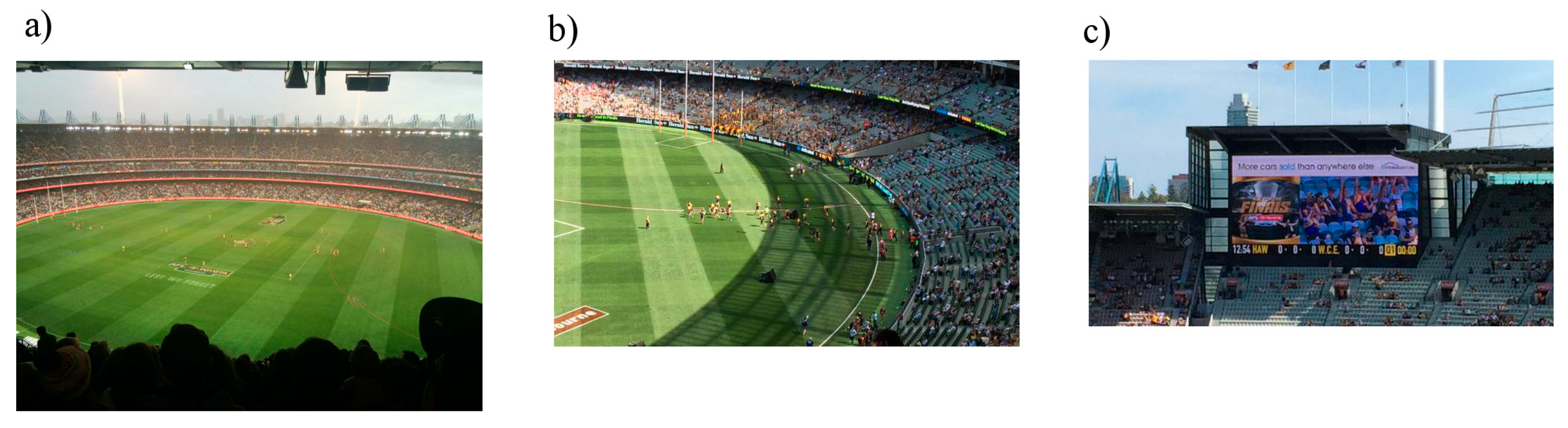

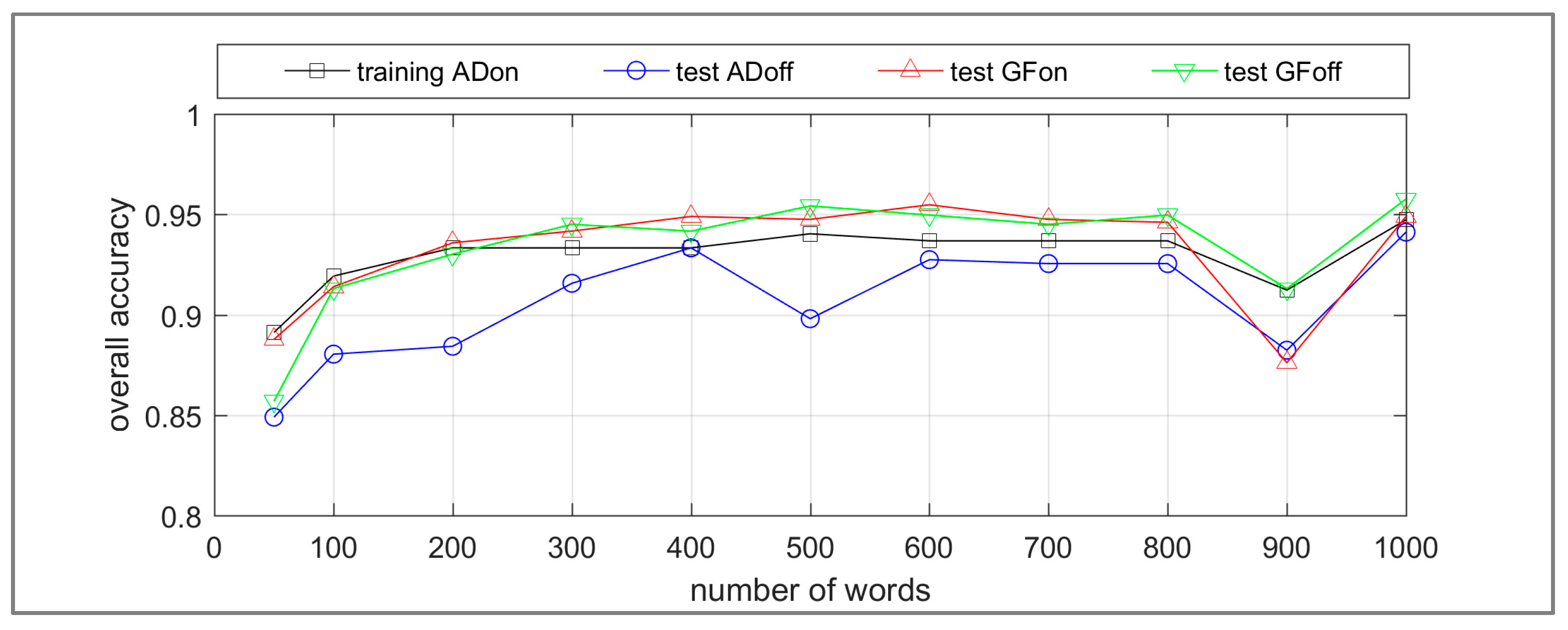

To support all experiments a definition of images which could be classified as WA OTG was described. For each image in the ADon dataset, the dominant visual topic was identified and categorized, and observations of the event included views of the venue, both of the arena and the exterior. However, only those inside the arena were estimated in sufficient numbers required to support the bag-of-words methodology, and adopted to represent the category WA OTG. This limitation is present for all machine learning approaches and the number of samples required depends on numerous factors, for example the number of classes, the number and proportion of samples in each class, and the number of features representing each sample. The implications of minimum sample requirements for classifying images which are WA, is that dominant visual observation topics must emerge for each event. A learning curve experiment was completed for the case study finding the training and test error converged at approximately 50 images. This number provides guidance as to sample sizes required for similar events and datasets. Additionally, it provides guidance as to the sample of manually annotated images that are required to create a training model to automate subsequent image categorization.

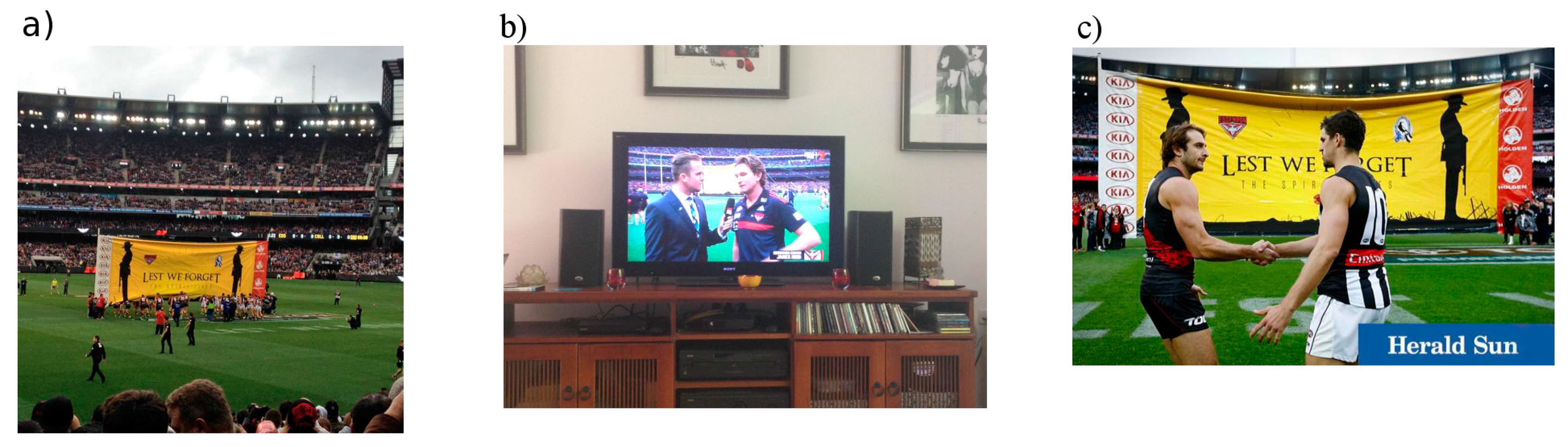

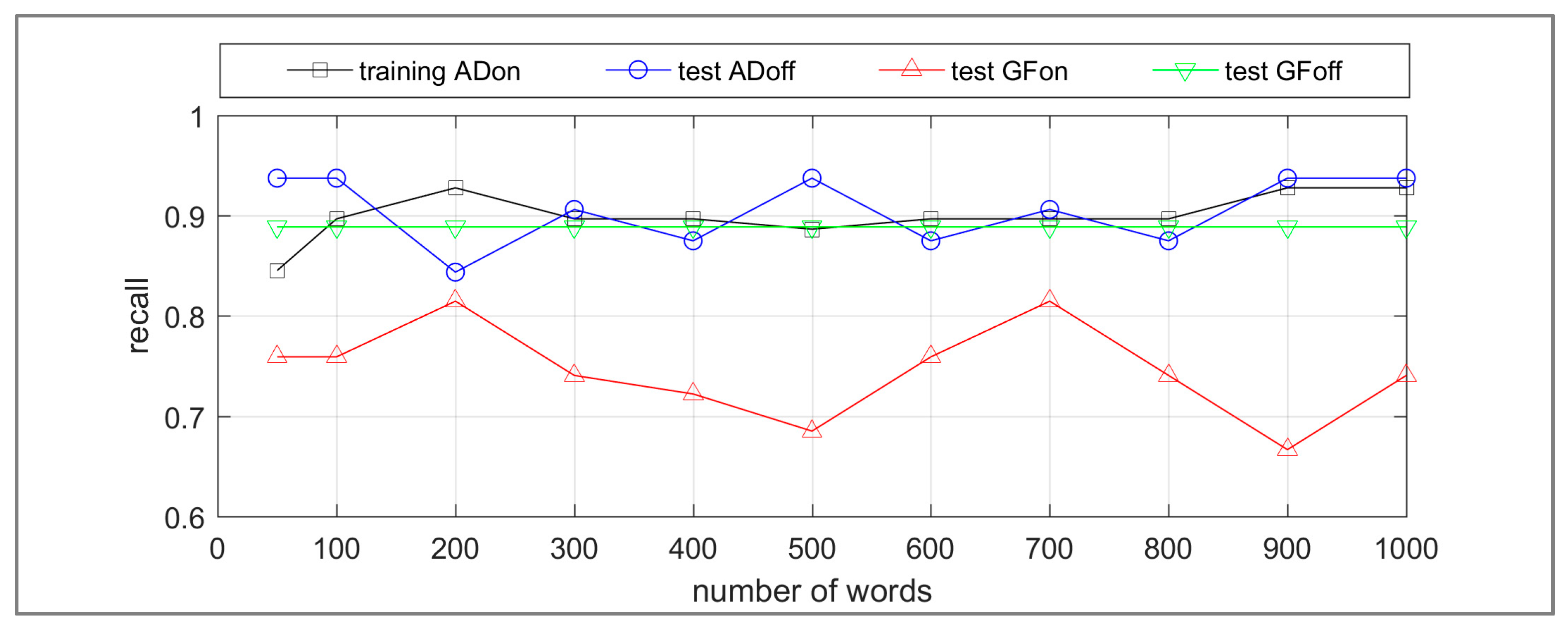

Another methodology to minimize manual human annotation is transfer learning, with the results indicating the potential of this method for the application. However, further analysis of misclassified images indicates areas where improvements are required, in particular to reduce the number of false positives. Even a false positive error as low as 0.05 may not be sufficient, as the number of false positives may then be comparable to the number of true positives, due to the relative size of the classes in the datasets. Recommendations to reduce the impact of this scenario include increased filtering to improve the representation of the WA OTG class in unbalanced datasets, experimentation with classifiers developed specifically for unbalanced datasets, and improved representation of the categories in the training dataset. The misclassification analysis confirmed scenarios where additional filtering would be beneficial. Techniques that could identify non-real world scenes for removal [76] would contribute to reducing the imbalance in the dataset classes, and reduce false positive error. Such techniques are likely to contribute to a variety of event types, as evidenced by previous case studies [11]. Identification and removal of images with overlays placed by broadcasters and mobile applications [46] would contribute similarly to event types which are broadcast live. A particular issue identified in the ADoff dataset was the misclassification of images posted about similar events, namely other football matches scheduled on the same day. A less sophisticated but equally effective way to identify these images for removal is to search for the promoted hashtags of these other games.
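The effect of class imbalance on false positives can be made concrete with a short calculation. The class sizes and recall below are hypothetical values chosen for illustration; only the false positive rate of 0.05 is taken from the discussion above.

```python
def expected_precision(n_positive, n_negative, recall, false_positive_rate):
    """Precision implied by a recall and false positive rate at given class sizes."""
    true_positives = recall * n_positive
    false_positives = false_positive_rate * n_negative
    return true_positives / (true_positives + false_positives)


# Hypothetical unbalanced dataset: 50 WA OTG images among 1000 other images.
unbalanced = expected_precision(50, 1000, recall=0.9, false_positive_rate=0.05)
# The same classifier on a hypothetical balanced dataset of 50 images per class.
balanced = expected_precision(50, 50, recall=0.9, false_positive_rate=0.05)
```

In the unbalanced case the 50 expected false positives outnumber the 45 expected true positives, so fewer than half of the images flagged as WA OTG are correct, whereas the balanced case yields a precision of about 0.95 with the same classifier.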

The misclassification analysis also identified sub-categories of WA OTG that were overrepresented in the false negatives. For the training datasets, all images which were identified by a human annotator as being of the arena at the MCG were included in the category WA OTG. But as the false negative results in Figure 13 indicate, sub-categories such as selfies and close-ups of the scoreboard are more likely to be misclassified. A simple solution would be to eliminate non-typical samples such as scoreboard close-ups. However, the implications of this action for each case should be considered. For example, the topic of selfies or portraits is more complex. The results for the ADon dataset suggest a linked selfie or portrait does not provide strong evidence that the micro-blogger is a Witness to that event, with 40 selfies or portraits at indeterminate locations compared to eight identified as at the MCG. Additionally, the appearance of portraits and selfies in the off-hash datasets indicates they are an enduring characteristic well represented in social media in general. Therefore, an application may choose to eliminate them from training datasets representing WA, or ensure they are adequately represented and visually distinguishable from selfies generally.

Media photography was not represented in the false positives to the degree expected, suggesting that this category of images was both visually distinctive and adequately represented in the training datasets. In comparison, images of a screen relaying the broadcast did prove problematic. Previous research indicated that the sample for the single dataset ADon was not adequate to represent this class [1]. An approach that may provide improvements is to increase the number of samples by combining those collected for multiple event instances. Essentially, the recommendation to improve results is to move beyond the constraints of a particular event instance when collecting training samples to optimize category representation.

This approach may also improve results for transfer learning. The false negatives for the GFon dataset suggest that misclassifications occurred which cannot be attributed to the training errors, but perhaps to overfitting to the ANZAC Day event. To reduce the chance of overfitting to a particular event instance, the training dataset for a real-world application of the methodologies demonstrated in this research might include images taken from a range of event instances. Additionally, this approach may contribute to an improved representation of the diversity of visual topics in off-hash datasets. Finally, the greatest benefit of this approach may be to support event types for which insufficient sample numbers of the dominant visual observation topics are likely to be present for a single event instance. This scenario may arise due to the characteristics of the event itself, such as limited effects which humans can observe, or limited human sensors in the place where the event is occurring.

6. Conclusions

Contributions of this research include a comprehensive evaluation of automatic image category classification using the bag-of-words method for case study events, demonstrating it is possible to identify images that are Witness Accounts posted from on-the-ground. A comparison of classifiers showed a linear SVM achieved an overall accuracy of 90% and precision and recall for both classes exceeding 83%. Further, results from learning curve experiments found that identifying Witness Accounts for the case study event was also possible by training the classifier using a relatively small subset of the images. This is a significant finding in the context of social media, as an automatic method is required due to the large number of images posted about events.

Transfer learning experiments identified the potential to identify Witness Accounts for different instances of the same event type with acceptable recall results; however, low precision results indicate a need to further address class imbalance and false positive error, particularly for off-hash datasets. Numerous methods to achieve this goal were described for future research, including additional pre-classification filtering to enable removal of images without real life scenes, and improving the representation of categories to be classified by combining samples from different event instances. This would also allow the approach in this research to be extended from event types held in dedicated venues to those which are not. For many event types an adequate sample of training images capturing the dominant visual observation topics would only be possible by collating images from multiple event instances. Testing this enhancement towards generalization of the method to a range of event types dissimilar from the case study is left to future research. Additionally, due to the relatively balanced ADon training dataset used for classifier comparison, algorithms designed or adapted for improved performance with unbalanced datasets were not explored. Such classifiers may have proved beneficial alternatives in the transfer learning experiments. Approaches to be considered are hybrid classifiers designed for the task, for example RUSBoost [77], or the introduction of a misclassification cost with an SVM classifier, an approach adopted by Starbird, Grace and Leysia [17].
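The idea of a misclassification cost can be illustrated with a class-weighted hinge loss, the quantity a cost-sensitive linear SVM would minimize (together with a regularization term, omitted here). The weights, samples and costs below are invented for illustration and do not reproduce the settings of the cited work or of RUSBoost.

```python
def weighted_hinge_loss(weights, bias, samples, class_cost):
    """Sum of hinge losses, each scaled by the cost of its true class (+1 or -1)."""
    total = 0.0
    for features, label in samples:
        score = sum(w * x for w, x in zip(weights, features)) + bias
        total += class_cost[label] * max(0.0, 1.0 - label * score)
    return total


# Hypothetical linear model and four labelled samples (+1 = WA OTG, -1 = other).
weights, bias = [1.0, -1.0], 0.0
samples = [
    ([2.0, 0.0], +1),  # correctly classified with margin: no loss
    ([0.0, 1.0], +1),  # missed positive: hinge loss 2
    ([0.0, 2.0], -1),  # correctly classified with margin: no loss
    ([1.0, 0.0], -1),  # false positive: hinge loss 2
]

equal_cost = weighted_hinge_loss(weights, bias, samples, {+1: 1.0, -1: 1.0})
minority_cost = weighted_hinge_loss(weights, bias, samples, {+1: 5.0, -1: 1.0})
```

Raising the cost of one class makes errors on that class dominate the objective, so training shifts the decision boundary in its favor; which class should carry the higher cost depends on whether missed witnesses or false positives are more damaging for the application.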

The bag-of-words method is a relatively simple method for extracting local image features and encoding them into global features for image category classification. In future, we will investigate models for incorporating spatial relationships between words, and the burstiness of word configurations. Another direction for future research is to investigate recent developments in image description by deep convolutional neural networks, which have recently achieved state of the art performance on a range of image categorization tasks.

Further contributions include an analysis of posting behavior that provides recommendations to maximize Witness Accounts in collected samples for scheduled event types held in dedicated venues. These rest on the findings that a significant number of witnesses will only post one original micro-blog about an event, and that witnesses are just as likely to post before the scheduled start of the event as during the event. These recommendations are critical because Witness Accounts are typically only a fraction of micro-blogs posted, particularly if the event is broadcast live through a variety of mediums.