Disaster Hashtags in Social Media

Abstract

1. Introduction

2. Materials and Methods

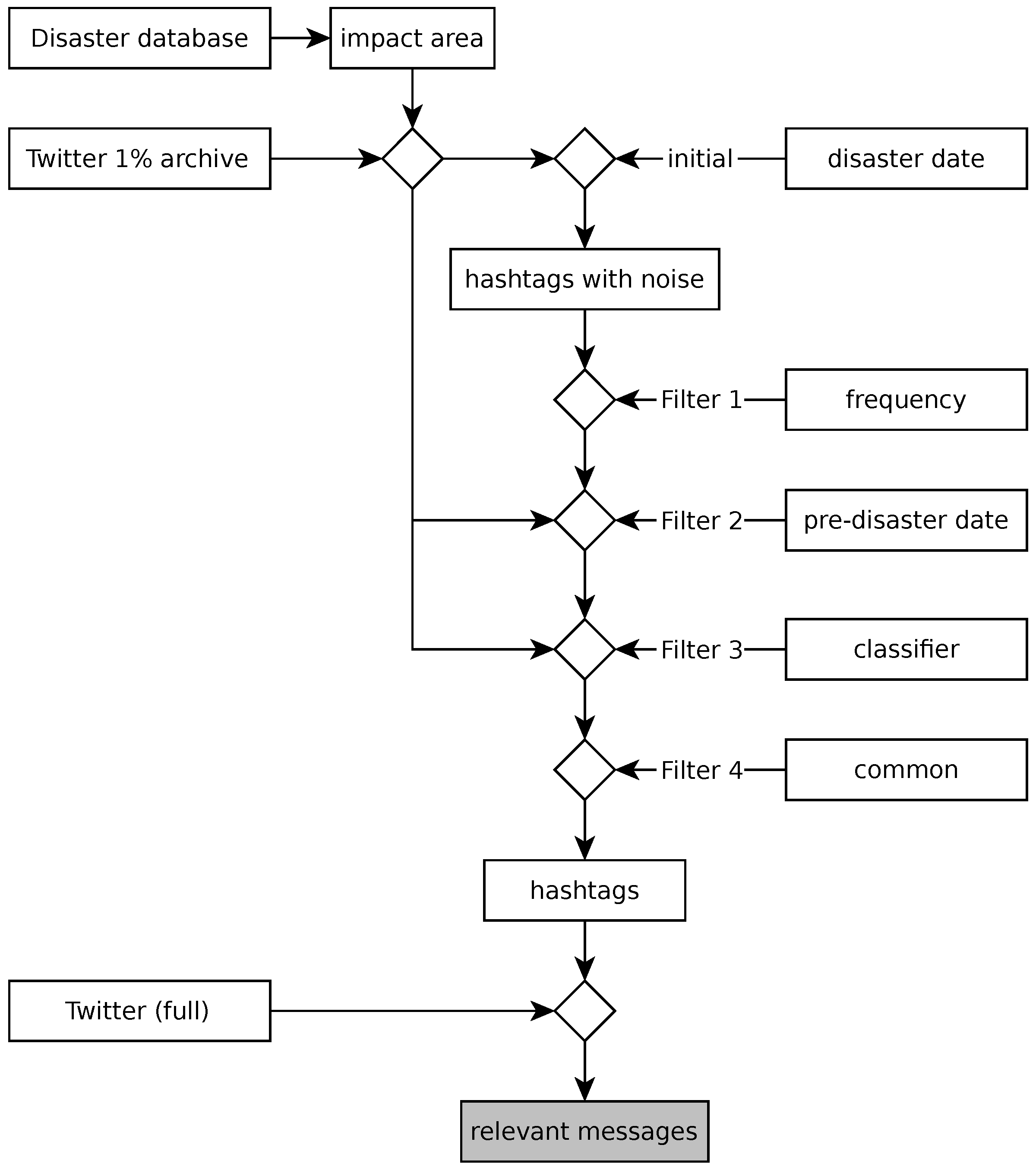

2.1. Method Overview

- Typhoon #Haiyan has displaced at least 800,000 people, U.N. estimates.

- Typhoon #Haiyan deaths likely 2000 to 2500—not 10,000, Philippine president tells

2.2. Datasets

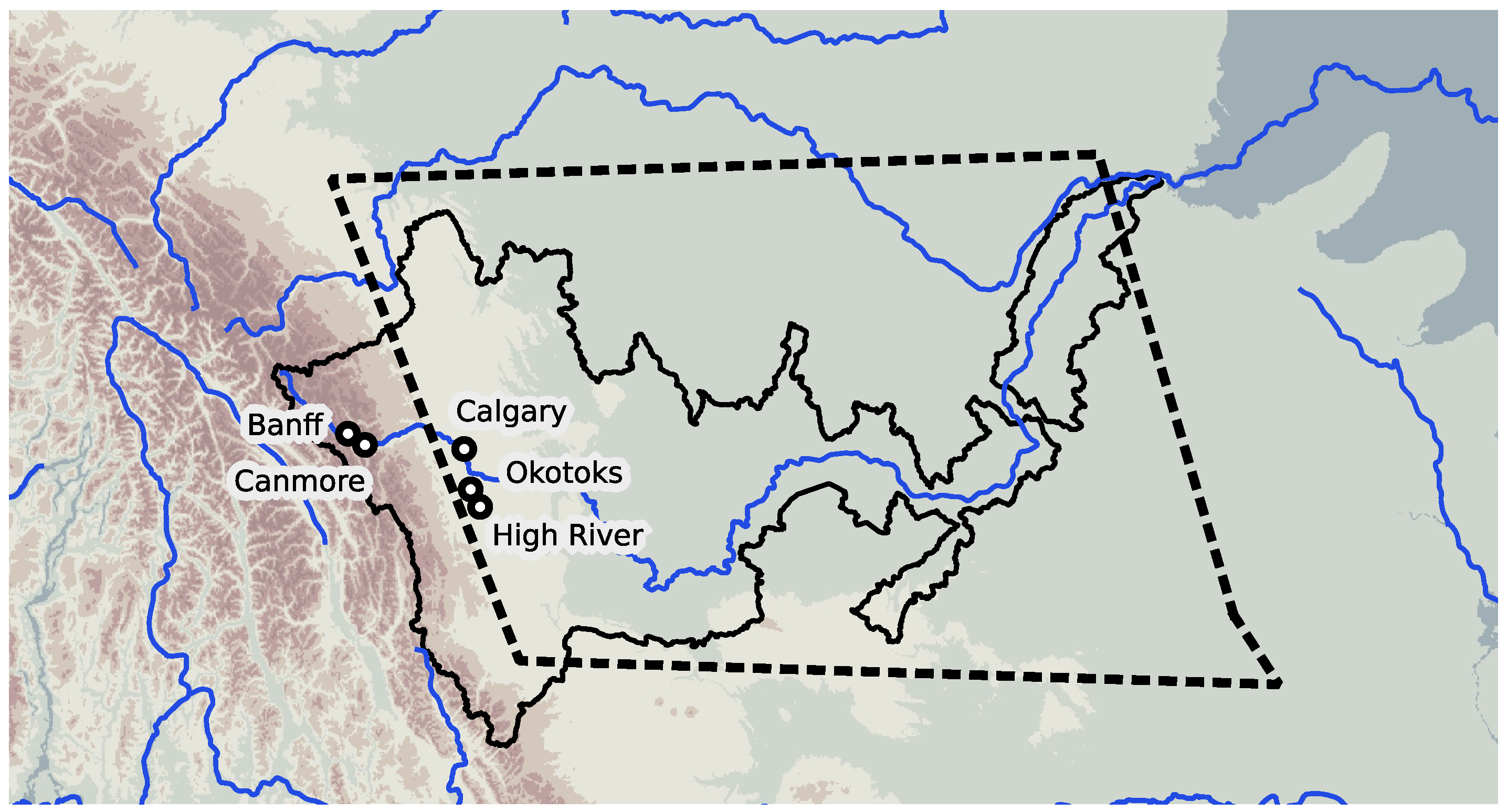

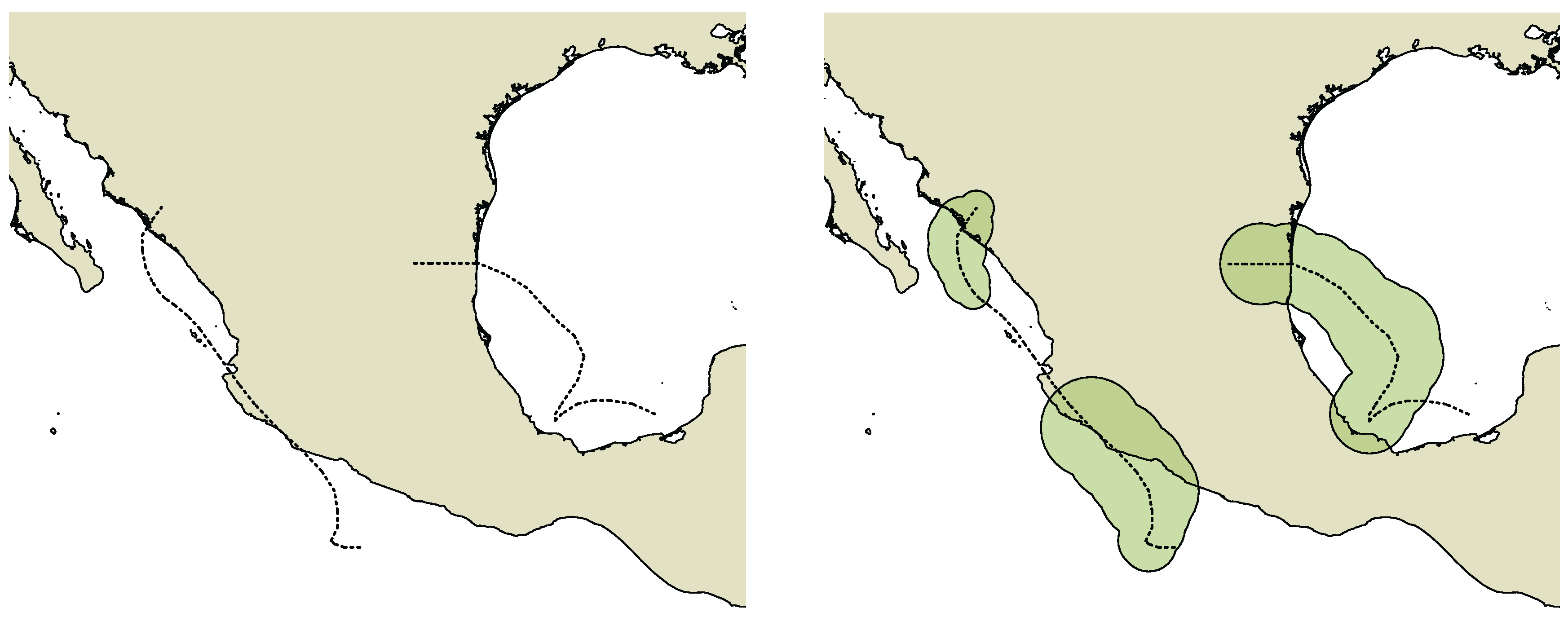

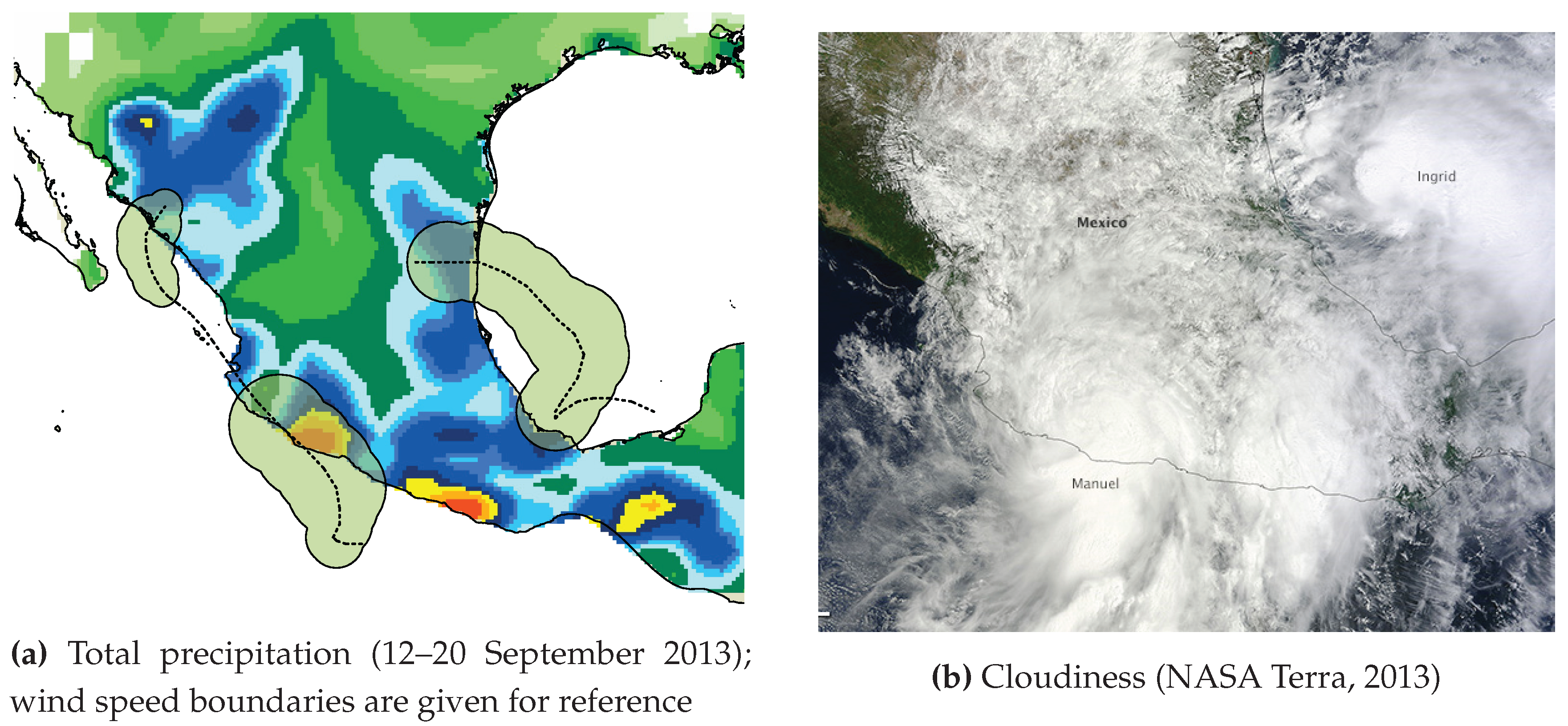

2.3. Impact Area

2.4. Classifier

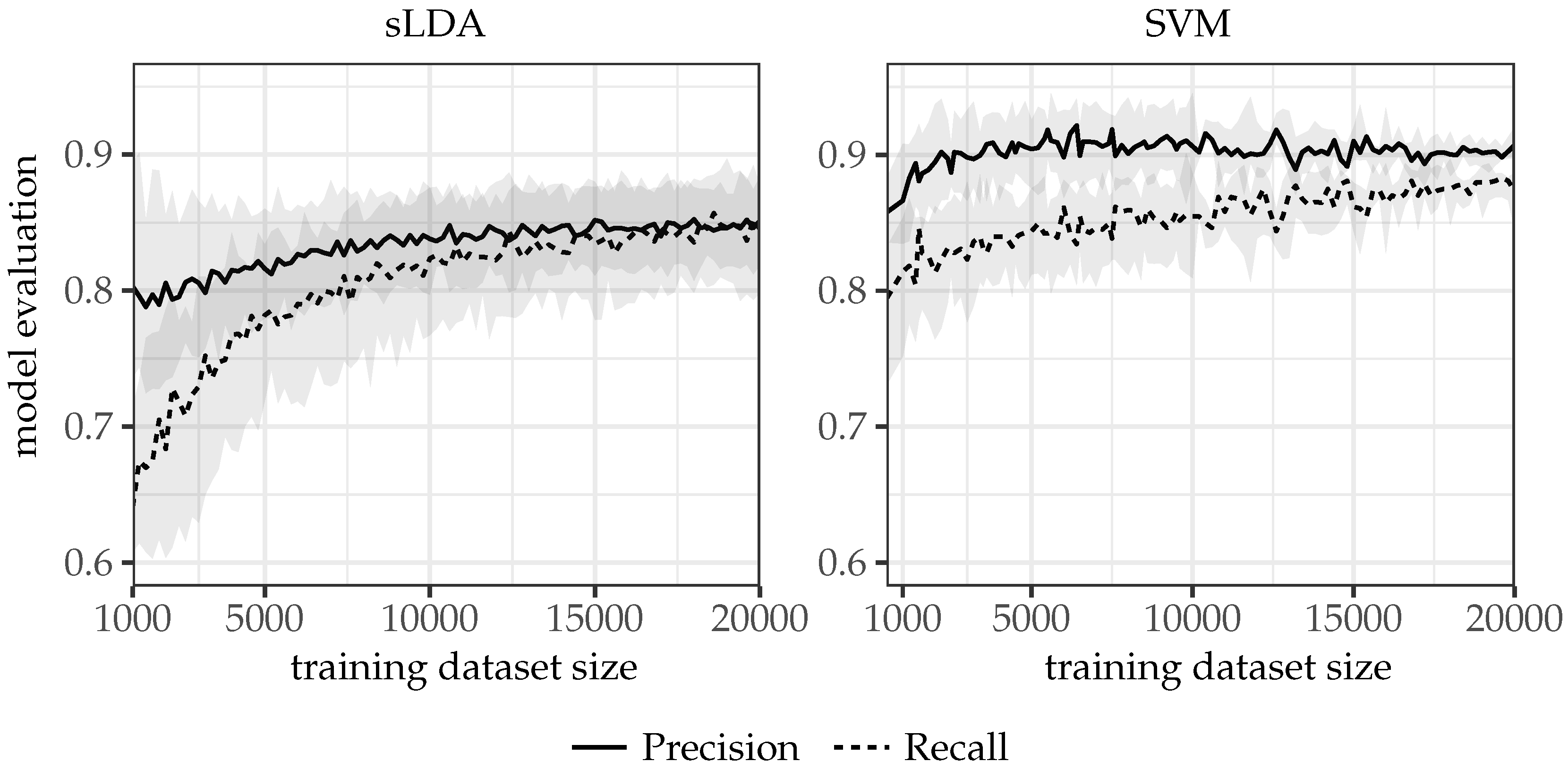

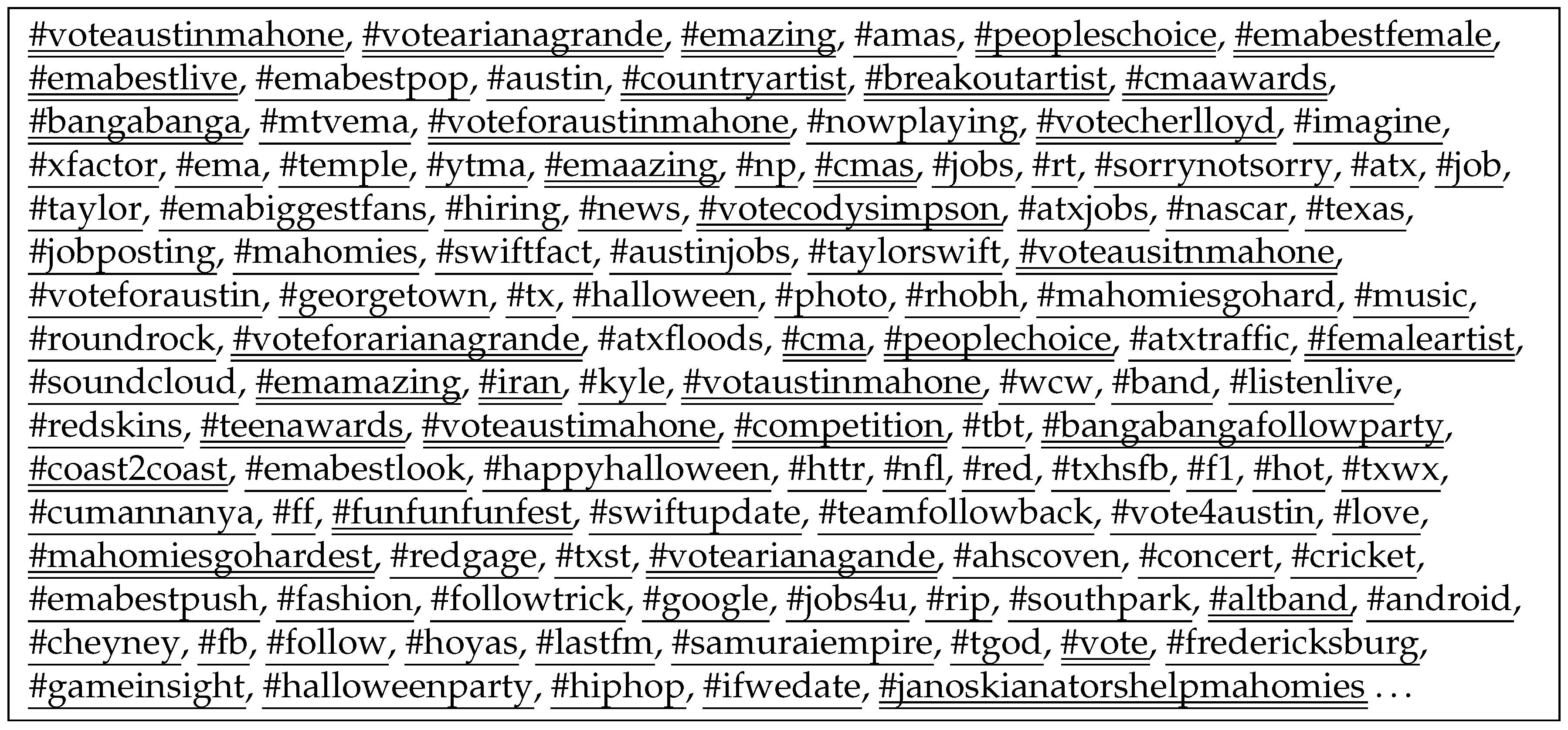

2.5. Hashtag Extraction and Filtering

- #EMABestFemale Selena Gomez . my queen

- Everyone RT this. #EMABestFemale Miley Cyrus

- omg i woke up at 6:30am today and im just dead #votearianagrande

- #Iraq’i government complicit in deadly attack at #CampAshraf on #Iran’ian refugees
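
The extraction step can be illustrated on the example messages above. This is a minimal sketch, not the authors' implementation: it assumes a hashtag is a `#` followed by word characters and that hashtags are compared case-insensitively. The pattern `HASHTAG_RE` and the function `extract_hashtags` are hypothetical names introduced here, and the filtering criteria are not covered.

```python
import re

# Assumption: a hashtag is '#' followed by one or more word characters.
# The typographic apostrophe in "#Iraq’i" is not a word character,
# so the match stops at "Iraq".
HASHTAG_RE = re.compile(r"#(\w+)")


def extract_hashtags(text):
    """Return the lowercased hashtags of a message, without the leading '#'."""
    return [tag.lower() for tag in HASHTAG_RE.findall(text)]


messages = [
    "#EMABestFemale Selena Gomez . my queen",
    "Everyone RT this. #EMABestFemale Miley Cyrus",
    "omg i woke up at 6:30am today and im just dead #votearianagrande",
    "#Iraq’i government complicit in deadly attack at #CampAshraf on #Iran’ian refugees",
]

for message in messages:
    print(extract_hashtags(message))
```

Lowercasing is a design choice made for this sketch so that variants such as `#Haiyan` and `#haiyan` are treated as one hashtag.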

3. Results

- Group 1:
  - Nari: #walangpasok, #santiph
  - Phailin: #phailin, #cyclonephailin

- Group 2:
  - Flood in Texas: #atxfloods, #atxwx
  - Haiyan: #yolandaph, #haiyan, #tacloban, #prayforthephilippines, #prayfortacloban, #rescueph, #yolanda, #tracingph, #reliefph, #ormoc, #typhoonyolanda, #ugc, #typhoonhaiyan, #yolandaupdates, #prayfortheph, #prayforphilippines, #surigaodelnorte, #bangonvisayas, #strongerph, #zoraidaph

4. Conclusions and Discussion

Supplementary Materials

Acknowledgments

Author Contributions

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| AIDR | Artificial Intelligence for Disaster Response |
| API | Application Programming Interface |
| AUC | Area Under the Curve |
| EM-DAT | Emergency Events Database |
| GAALFE | Global Active Archive of Large Flood Events |
| IBTrACS | International Best Track Archive for Climate Stewardship |
| POS | Parts Of Speech |
| sLDA | Supervised LDA |
| SVM | Support Vector Machine |

References

- Internet Archive. Available online: https://archive.org/details/twitterstream (accessed on 25 January 2017).

- Okolloh, O. Ushahidi or ‘testimony’: Web 2.0 tools for crowdsourcing crisis information. Particip. Learn. Action 2009, 59, 65–70.

- Haustein, S.; Bowman, T.D.; Holmberg, K.; Tsou, A.; Sugimoto, C.R.; Larivière, V. Tweets as impact indicators: Examining the implications of automated “bot” accounts on Twitter. J. Assoc. Inf. Sci. Technol. 2015, 67, 232–238.

- Imran, M.; Castillo, C.; Lucas, J.; Meier, P.; Vieweg, S. AIDR: Artificial Intelligence for Disaster Response. In Proceedings of the 23rd International Conference on World Wide Web, Seoul, Korea, 7–11 April 2014; International World Wide Web Conferences Steering Committee: Seoul, Korea, 2014; pp. 159–162.

- Imran, M.; Castillo, C.; Diaz, F.; Vieweg, S. Processing Social Media Messages in Mass Emergency: A Survey. ACM Comput. Surv. 2015, 47.

- Xiong, F.; Liu, Y.; Zhang, Z.; Zhu, J.; Zhang, Y. An information diffusion model based on retweeting mechanism for online social media. Phys. Lett. A 2012, 376, 2103–2108.

- Xiong, F.; Liu, Y.; Zhang, H.F. Multi-source information diffusion in online social networks. J. Stat. Mech. Theory Exp. 2015, 2015, 07008.

- Friggeri, A.; Adamic, L.A.; Eckles, D.; Cheng, J. Rumor Cascades. In Proceedings of the 8th International AAAI Conference on Weblogs and Social Media, Ann Arbor, MI, USA, 1–4 June 2014; pp. 101–110.

- Olteanu, A.; Vieweg, S.; Castillo, C. What to Expect When the Unexpected Happens: Social Media Communications Across Crises. In Proceedings of the 18th ACM Conference on Computer Supported Cooperative Work & Social Computing, Vancouver, BC, Canada, 14–18 March 2015; ACM: Vancouver, BC, Canada, 2015; pp. 994–1009.

- Olteanu, A.; Castillo, C.; Diaz, F.; Vieweg, S. CrisisLex: A Lexicon for Collecting and Filtering Microblogged Communications in Crises. In Proceedings of the 8th International Conference on Weblogs and Social Media (ICWSM), Ann Arbor, MI, USA, 1–4 June 2014; AAAI Press: Ann Arbor, MI, USA, 2014; pp. 376–385.

- Twubs: Hashtag Directory. Available online: http://twubs.com/p/hashtag-directory (accessed on 25 January 2017).

- Han, B.; Baldwin, T. Lexical Normalisation of Short Text Messages: Makn Sens a #Twitter. In Proceedings of the 49th Annual Meeting of the Association for Computational Linguistics: Human Language Technologies, Portland, OR, USA, 19–24 June 2011; Association for Computational Linguistics: Portland, OR, USA, 2011; Volume 1, pp. 368–378.

- Ahmed, B. Lexical normalisation of Twitter Data. In Proceedings of the 2015 Science and Information Conference, London, UK, 28–30 July 2015; Institute of Electrical and Electronics Engineers (IEEE): London, UK, 2015.

- Sridhar, V.K.R. Unsupervised text normalization using distributed representations of words and phrases. In Proceedings of the NAACL-HLT, Denver, CO, USA, 31 May–5 June 2015; Association for Computational Linguistics: Denver, CO, USA, 2015; pp. 8–16.

- De Albuquerque, J.P.; Herfort, B.; Brenning, A.; Zipf, A. A geographic approach for combining social media and authoritative data towards identifying useful information for disaster management. Int. J. Geogr. Inf. Sci. 2015, 29, 1–23.

- Morstatter, F.; Pfeffer, J.; Liu, H. When is It Biased? Assessing the Representativeness of Twitter’s Streaming API. In Proceedings of the 23rd International Conference on World Wide Web, Seoul, Korea, 7–11 April 2014; ACM: Seoul, Korea, 2014; pp. 555–556.

- Guha-Sapir, D.; Below, R.; Hoyois, P.; Université Catholique de Louvain, Brussels, Belgium. EM-DAT: International Disaster Database. Available online: http://www.emdat.be/ (accessed on 25 January 2017).

- Knapp, K.R.; Applequist, S.; Diamond, H.J.; Kossin, J.P.; Kruk, M.; Schreck, C. NCDC International Best Track Archive for Climate Stewardship (IBTrACS) Project, Version 3.

- Knapp, K.R.; Kruk, M.C.; Levinson, D.H.; Diamond, H.J.; Neumann, C.J. The International Best Track Archive for Climate Stewardship (IBTrACS). Bull. Am. Meteorol. Soc. 2010, 91, 363–376.

- Brakenridge, G.R.; Dartmouth Flood Observatory, University of Colorado. Global Active Archive of Large Flood Events. Available online: http://floodobservatory.colorado.edu/Archives/index.html (accessed on 25 January 2017).

- Ramm, F. OpenStreetMap Data in Layered GIS Format. Version 0.6.7. Available online: https://www.geofabrik.de/data/geofabrik-osm-gis-standard-0.6.pdf (accessed on 25 January 2017).

- Pomeroy, J.W.; Stewart, R.E.; Whitfield, P.H. The 2013 flood event in the South Saskatchewan and Elk River basins: Causes, assessment and damages. Can. Water Resour. J. Rev. Can. Ressour. Hydr. 2015, 41, 105–117.

- Milrad, S.M.; Gyakum, J.R.; Atallah, E.H. A Meteorological Analysis of the 2013 Alberta Flood: Antecedent Large-Scale Flow Pattern and Synoptic-Dynamic Characteristics. Mon. Weather Rev. 2015, 143, 2817–2841.

- Natural Resources Canada (NRCan). Atlas of Canada 1,000,000 National Frameworks Data, Hydrology—Major River Basin. v6.0. Dataset; 2003. Available online: http://geogratis.gc.ca/api/en/nrcan-rncan/ess-sst/14f77ebc-5600-5e33-9565-1bd58086f98d.html (accessed on 25 January 2017).

- Dee, D.P.; Uppala, S.M.; Simmons, A.J.; Berrisford, P.; Poli, P.; Kobayashi, S.; Andrae, U.; Balmaseda, M.A.; Balsamo, G.; Bauer, P.; et al. The ERA-Interim reanalysis: Configuration and performance of the data assimilation system. Q. J. R. Meteorol. Soc. 2011, 137, 553–597.

- Blake, E.S. The 2013 Atlantic Hurricane Season: The Quietest Year in Two Decades. Weatherwise 2014, 67, 28–34.

- Kimberlain, T.B. The 2013 Eastern North Pacific Hurricane Season: Mexico Takes the Brunt. Weatherwise 2014, 67, 35–42.

- Ashktorab, Z.; Brown, C.; Nandi, M.; Culotta, A. Tweedr: Mining Twitter to Inform Disaster Response. In Proceedings of the 11th International ISCRAM Conference, University Park, PA, USA, 18–21 May 2014; Hiltz, S.R., Pfaff, M.S., Plotnick, L., Shih, P.C., Eds.; 2014; pp. 354–358.

- Cobo, A.; Parra, D.; Navón, J. Identifying Relevant Messages in a Twitter-based Citizen Channel for Natural Disaster Situations. In Proceedings of the 24th International Conference on World Wide Web, Florence, Italy, 18–22 May 2015; pp. 1189–1194.

- Roy Chowdhury, S.; Imran, M.; Asghar, M.R.; Amer-Yahia, S.; Castillo, C. Tweet4act: Using Incident-Specific Profiles for Classifying Crisis-Related Messages. In Proceedings of the 10th International ISCRAM Conference, Baden-Baden, Germany, 12–15 May 2013; Comes, T., Fiedrich, F., Fortier, S., Geldermann, J., Müller, T., Eds.; 2013; p. 159.

- Li, H.; Guevara, N.; Herndon, N.; Caragea, D.; Caragea, K.N.C.; Squicciarini, A.; Tapia, A.H. Twitter Mining for Disaster Response: A Domain Adaptation Approach. In Proceedings of the 12th International Conference on Information Systems for Crisis Response and Management (ISCRAM 2015), Kristiansand, Norway, 24–27 May 2015; Palen, L., Buscher, M., Comes, T.A.H., Eds.; 2015.

- Meier, P.P.; Castillo, C.; Imran, M.; Elbassuoni, S.M.; Diaz, F. Extracting Information Nuggets from Disaster-Related Messages in Social Media. In Proceedings of the 10th International ISCRAM Conference, Baden-Baden, Germany, 12–15 May 2013; Comes, T., Fiedrich, F., Fortier, S., Geldermann, J., Müller, T., Eds.; p. 129.

- Jurka, T.P.; Collingwood, L.; Boydstun, A.E.; Grossman, E.; van Atteveldt, W. RTextTools: Automatic Text Classification via Supervised Learning, R Package Version 1.4.2; 2014. Available online: https://CRAN.R-project.org/package=RTextTools (accessed on 25 January 2017).

- Meyer, D.; Dimitriadou, E.; Hornik, K.; Weingessel, A.; Leisch, F. e1071: Misc Functions of the Department of Statistics, Probability Theory Group (Formerly: E1071), TU Wien, R Package Version 1.6-7; 2015. Available online: https://CRAN.R-project.org/package=e1071 (accessed on 25 January 2017).

- Chang, J. lda: Collapsed Gibbs Sampling Methods for Topic Models, R Package Version 1.3.2; 2012. Available online: https://CRAN.R-project.org/package=lda (accessed on 25 January 2017).

- Cao, J.; Xia, T.; Li, J.; Zhang, Y.; Tang, S. A density-based method for adaptive LDA model selection. Neurocomputing 2009, 72, 1775–1781.

- Griffiths, T.L.; Steyvers, M. Finding scientific topics. Proc. Natl. Acad. Sci. USA 2004, 101, 5228–5235.

- Murzintcev, N. ldatuning: Tuning of the Latent Dirichlet Allocation (LDA) Models Parameters, R Package Version 0.1.9000; 2015. Available online: https://CRAN.R-project.org/package=ldatuning (accessed on 25 January 2017).

- Kireyev, K.; Palen, L.; Anderson, K. Applications of topics models to analysis of disaster-related twitter data. In NIPS Workshop on Applications for Topic Models: Text and Beyond; NIPS Workshop: Whistler, BC, Canada, 2009.

- Earl, R.A.; Jordan, T.R.; Scanes, D.M. Halloween 2013: Another “100-Year” Storm and Flood in Central Texas. Pap. Appl. Geogr. 2015, 1, 342–347.

- Gochis, D.; Schumacher, R.; Friedrich, K.; Doesken, N.; Kelsch, M.; Sun, J.; Ikeda, K.; Lindsey, D.; Wood, A.; Dolan, B.; et al. The Great Colorado Flood of September 2013. Bull. Am. Meteorol. Soc. 2015, 96, 1461–1487.

- Graham, C.; Thompson, C.; Wolcott, M.; Pollack, J.; Tran, M. A guide to social media emergency management analytics: Understanding its place through Typhoon Haiyan tweets. Stat. J. IAOS 2015, 31, 227–236.

- Beigi, G.; Hu, X.; Maciejewski, R.; Liu, H. An Overview of Sentiment Analysis in Social Media and Its Applications in Disaster Relief. In Sentiment Analysis and Ontology Engineering: An Environment of Computational Intelligence; Pedrycz, W., Chen, S.M., Eds.; Springer: Cham, Switzerland, 2016; pp. 313–340.

- Lu, Y.; Hu, X.; Wang, F.; Kumar, S.; Liu, H.; Maciejewski, R. Visualizing Social Media Sentiment in Disaster Scenarios. In Proceedings of the 24th International Conference on World Wide Web, Florence, Italy, 18–22 May 2015; ACM: New York, NY, USA, 2015; pp. 1211–1215.

- Gallegos, L.; Lerman, K.; Huang, A.; Garcia, D. Geography of Emotion: Where in a City Are People Happier? In Proceedings of the 25th International Conference Companion on World Wide Web, Montreal, QC, Canada, 11–15 April 2016; International World Wide Web Conferences Steering Committee: Geneva, Switzerland, 2016; pp. 569–574.

| Category | Messages | Events |
|---|---|---|
| earthquake | 3689 | 5 |
| geophysical | 648 | 2 |
| flood | 11,221 | 6 |
| storm | 11,263 | 5 |
| wildfire | 1678 | 2 |
| technological | 7613 | 8 |
| Total | 36,112 | 28 |

| Events | SVM Precision (%) | SVM Recall (%) | SVM AUC | sLDA Precision (%) | sLDA Recall (%) | sLDA AUC |
|---|---|---|---|---|---|---|
| earthquake | 90.5 | 90.2 | 0.946 | 84.1 | 89.8 | 0.920 |
| flood | 88.9 | 84.3 | 0.928 | 84.7 | 82.2 | 0.896 |
| storm | 90.9 | 88.1 | 0.954 | 84.7 | 88.4 | 0.925 |
| technological | 90.4 | 95.7 | 0.976 | 84.8 | 99.1 | 0.974 |
| wildfires | 87.0 | 78.9 | 0.908 | 81.3 | 81.4 | 0.877 |
| all-in-one | 89.3 | 85.4 | 0.937 | 83.7 | 86.1 | 0.909 |
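
For readers less familiar with the precision and recall columns above, the standard definitions can be written out as a short sketch. The function name `precision_recall` and the counts used below are hypothetical and chosen only to illustrate the arithmetic; they are not taken from the experiments.

```python
def precision_recall(tp, fp, fn):
    """Standard definitions: precision = TP / (TP + FP), recall = TP / (TP + FN).

    tp: on-topic messages correctly labeled on-topic (true positives)
    fp: off-topic messages wrongly labeled on-topic (false positives)
    fn: on-topic messages wrongly labeled off-topic (false negatives)
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Hypothetical counts, not results from the paper.
p, r = precision_recall(tp=90, fp=10, fn=20)
print(f"precision = {p:.1%}, recall = {r:.1%}")
```

A classifier can trade one measure against the other, which is why the table reports both alongside AUC.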

| Model | Training messages | Training events | Precision (%) | Recall (%) | AUC |
|---|---|---|---|---|---|
| Ashktorab et al. [28] | 1049 | 12 | 78.0 | 57.0 | 0.880 |
| Cobo et al. [29] | 2187 | 1 | 80.7 | 67.3 | 0.844 |
| new model sLDA | 73,698 | 28 | 83.7 | 86.1 | 0.909 |
| new model SVM | 73,698 | 28 | 89.3 | 85.4 | 0.937 |

| Hashtag | Messages | Hashtag | Messages |
|---|---|---|---|
| yolandaph | 109,852 | prayfortacloban | 4373 |
| prayforthephilippines | 104,280 | bangonvisayas | 4147 |
| haiyan | 56,218 | tracingph | 3610 |
| typhoonhaiyan | 21,225 | supertyphoon | 2660 |
| yolanda | 17,299 | strongerph | 2160 |
| reliefph | 16,758 | yolandaupdates | 1716 |
| rescueph | 8607 | ugc | 810 |
| prayforphilippines | 8229 | ormoc | 806 |
| tacloban | 7825 | prayfortheph | 471 |
| typhoonyolanda | 5184 | safenow | 139 |
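
Per-hashtag message counts like those in the table above could be produced along the following lines. This is a sketch under stated assumptions: that the Messages column counts messages containing the hashtag at least once, and that hashtags are matched case-insensitively. The function `messages_per_hashtag`, the regular expression, and the second and third sample messages are hypothetical.

```python
import re
from collections import Counter

# Assumption: a hashtag is '#' followed by one or more word characters.
HASHTAG_RE = re.compile(r"#(\w+)")


def messages_per_hashtag(messages):
    """Count, for each hashtag, the number of messages that contain it."""
    counts = Counter()
    for text in messages:
        # A set makes a hashtag repeated within one message count only once.
        counts.update({tag.lower() for tag in HASHTAG_RE.findall(text)})
    return counts


sample = [
    "Typhoon #Haiyan has displaced at least 800,000 people, U.N. estimates.",
    "Stay safe everyone #YolandaPH #Haiyan #haiyan",  # hypothetical message
    "Donations needed #ReliefPH #YolandaPH",          # hypothetical message
]

counts = messages_per_hashtag(sample)
for tag, n in counts.most_common():
    print(tag, n)
```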

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Murzintcev, N.; Cheng, C. Disaster Hashtags in Social Media. ISPRS Int. J. Geo-Inf. 2017, 6, 204. https://doi.org/10.3390/ijgi6070204
