Three-Dimensional Modeling and Indoor Positioning for Urban Emergency Response

Abstract

1. Introduction

2. 3DM Technology for City Emergency Response

2.1. Rapid Acquisition and Processing of Three-Dimensional, Low-Altitude Remote-Sensing Data with Low Cost and High Precision

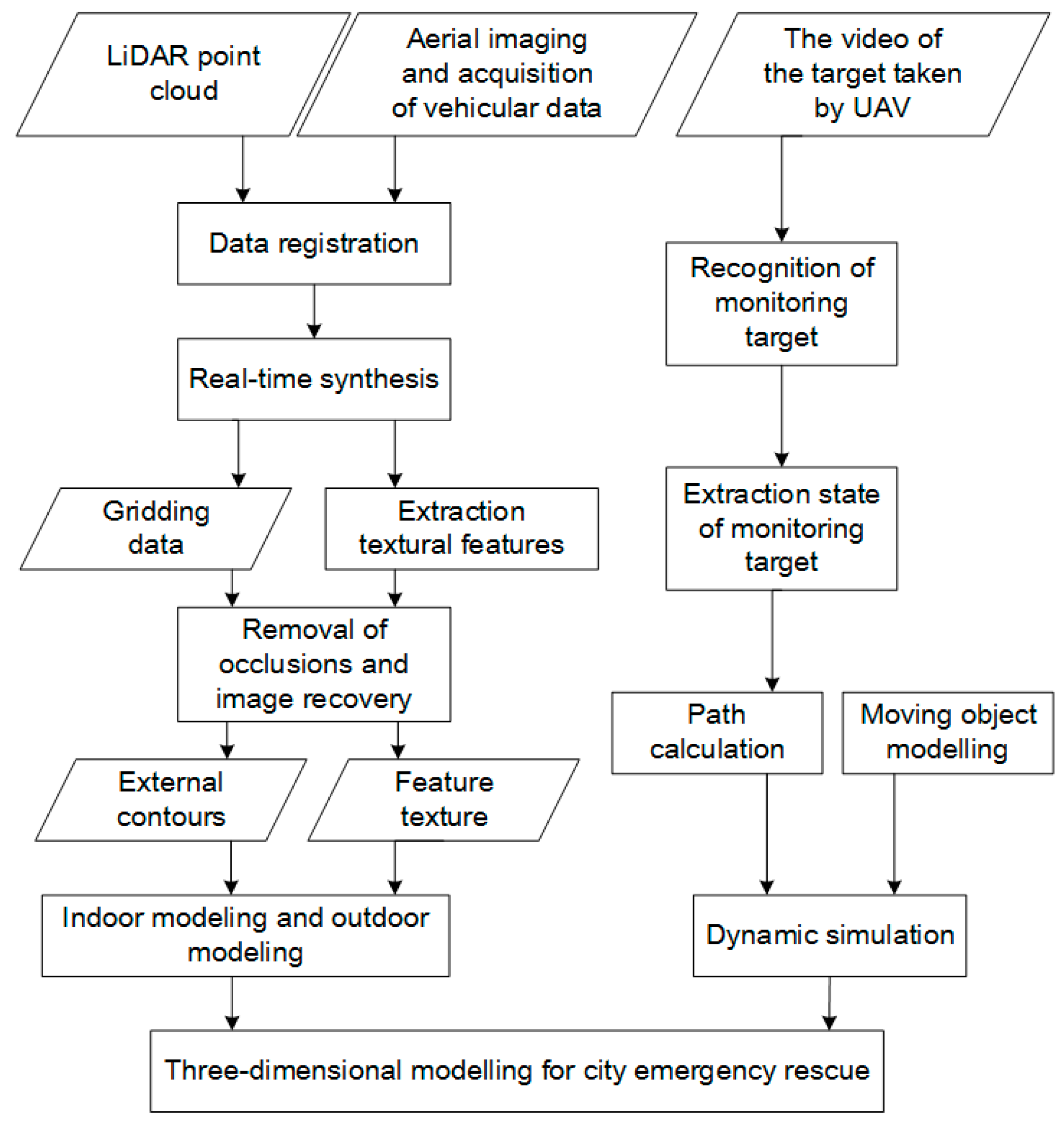

2.2. Technical Scheme of the Three-Dimensional Scene Reconstruction

2.2.1. The Acquisition and Processing of Point-Cloud and Image Data

2.2.2. The Extraction of Surface Texture Features and the Establishment of the Texture Atlas

1. Filtering by location

2. Filtering by angle

3. Filtering by area

2.2.3. Foreground Removal and Restoration Algorithms

- Foreground trees are removed using hue and parallel-line information. Leaves are usually green or close to green, so a hue range of 80 to 200 is used to decide whether a pixel lies in an occluded area. In the image, lines on the building wall correspond to lines parallel to the X-axis or the Y-axis of the object coordinate system and are arranged in a regular order, whereas the line segments detected on trees have no regular direction. The foreground can therefore be removed by combining parallel-line and color information.

- The foreground restoration algorithm is based on stereo matching with image segmentation. The method builds on the adaptive-weight matching algorithm for color pixels, which reduces the influence of sampling noise. The energy function is defined from the matching cost of visible pixels, the occluded (blocked) pixels and the parallax smoothness, and it is used to determine the occluded region.

- The image is divided into a rectangular grid of a fixed cell size, and the density of parallel lines in each cell is calculated. If the density of a cell is below a given threshold, the cell is considered occluded; otherwise, it is considered unoccluded. The result will include some incorrectly segmented cells, which generally either contain few linear features or are covered by a substantial number of advertising signs.

- Image segmentation methods are applied to zone-element images. For a local area to be filled, the closest matching blocks are concentrated in zones of similar texture, so candidate blocks are searched for only in the neighboring source areas. This approach both preserves the linear structures of the image and shortens the search time.

- For planar occlusions, the template-matching margin protection method is applied to restore the textures hidden by the foreground. For simple linear vertical foregrounds, one-dimensional average-value interpolation and block filling are used to recover the occluded textures. When the extracted margin width and the surrounding textures satisfy certain conditions, one-dimensional average-value interpolation is applied. Otherwise, an affine transformation is used to recover the texture.

- The image-texture substitution method is based on grid optimization. First, the target region of the original image is divided into a two-dimensional grid whose shape is consistent with the geometry of the target area. Second, for each point on the texture plane, the corresponding sampling point in the target area is found. Third, color-space conversion and transfer are performed to preserve the brightness and shadow information of the original image. This method replaces the texture of the target area in the original image with a new texture while maintaining the lighting effects of the original image.
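The hue test and the grid-density test described above can be sketched as follows. This is a minimal illustration rather than the authors' implementation. It assumes that the 80 to 200 hue range is expressed in degrees on a 0 to 360 hue wheel, that "line density" means the fraction of pixels in a cell that lie on detected parallel lines, and that a saturation floor is useful for ignoring near-grey pixels. The function names, the saturation floor and the threshold value are all hypothetical.

```python
import colorsys

# Assumption: the hue range 80-200 from the text is in degrees (0-360 wheel).
HUE_MIN_DEG, HUE_MAX_DEG = 80.0, 200.0


def is_vegetation_hue(rgb, min_saturation=0.2):
    """Return True if an (R, G, B) pixel with 0-255 channels lies in the green hue band."""
    r, g, b = (channel / 255.0 for channel in rgb)
    hue, saturation, _value = colorsys.rgb_to_hsv(r, g, b)
    # Hue is unstable for near-grey pixels, so a saturation floor is applied
    # (hypothetical value, not taken from the paper).
    return saturation >= min_saturation and HUE_MIN_DEG <= hue * 360.0 <= HUE_MAX_DEG


def line_density_grid(line_mask, cell):
    """Fraction of line pixels in each cell-by-cell block of a binary mask (list of rows)."""
    rows, cols = len(line_mask), len(line_mask[0])
    grid = []
    for top in range(0, rows, cell):
        grid_row = []
        for left in range(0, cols, cell):
            block = [line_mask[y][x]
                     for y in range(top, min(top + cell, rows))
                     for x in range(left, min(left + cell, cols))]
            grid_row.append(sum(block) / len(block))
        grid.append(grid_row)
    return grid


def occluded_cells(density_grid, threshold):
    """Flag cells whose line density is below the threshold as occluded."""
    return [[density < threshold for density in row] for row in density_grid]
```

In practice the binary line mask would come from a line detector applied to the facade image, and cells flagged by both tests would be the candidates for foreground removal; the misclassified cells mentioned above (few linear features, advertising signs) would still need separate handling.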

2.2.4. Information Fusion of Outer Contours and Textural Features of Urban Targets

2.3. Dynamic Simulation of an Emergency Response for Urgent Situations

3. Indoor 3DM and Positioning

3.1. Indoor 3DM

- A base map of the building is drawn in Autodesk AutoCAD and imported into SketchUp.

- The imported base map is used to produce the foundational data for the building. The building is split into parts by adding lines, and the bottom face of each part is pulled up to the corresponding height to form the basic framework.

- The window and door models of the building are created and divided into groups. Windows and doors are outlined with lines on the basic framework of the building and hollowed out. The window and door groups are then placed into the hollowed parts of the building by repeated move and copy operations.

- The model is refined by segmentation and rotation, and the special parts of the building are constructed. The 3D framework is then generated.

- The background and clusters of the images are removed, and the intensity, contrast and angular deviation of the images are adjusted. The images are then cut, spliced and corrected. After these treatments, the textural information of the building is formed.

- After the textural position on the building is checked, the treated textural information is added to the corresponding position of the model. It is then processed into a realistic model texture using treatments such as scaling and rotation, and the indoor 3DM is formed. Finally, the model is exported and provides the basis for the subsequent processes.
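The "pull up to a height" step in the workflow above is a manual SketchUp operation, but its geometric effect can be illustrated in a few lines. The sketch below is not the SketchUp API; it is a hypothetical stand-alone function that extrudes a 2D footprint polygon (assumed to be listed counter-clockwise) into a prism, which is the basic framework that the later window, door and texture steps decorate.

```python
def extrude_footprint(footprint, height):
    """Extrude a 2D polygon into a prism.

    footprint: list of (x, y) vertices in counter-clockwise order (assumption).
    Returns (vertices, faces), where vertices are (x, y, z) tuples and each
    face is a list of vertex indices.
    """
    n = len(footprint)
    if n < 3:
        raise ValueError("a footprint needs at least three vertices")
    bottom = [(x, y, 0.0) for x, y in footprint]
    top = [(x, y, float(height)) for x, y in footprint]
    vertices = bottom + top
    faces = [
        list(range(n - 1, -1, -1)),  # floor, wound so that it faces downward
        list(range(n, 2 * n)),       # roof, wound so that it faces upward
    ]
    for i in range(n):
        j = (i + 1) % n
        faces.append([i, j, n + j, n + i])  # one outward-facing wall per edge
    return vertices, faces
```

For example, `extrude_footprint([(0, 0), (10, 0), (10, 5), (0, 5)], 3)` yields eight vertices and six faces: a floor, a roof and four walls.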

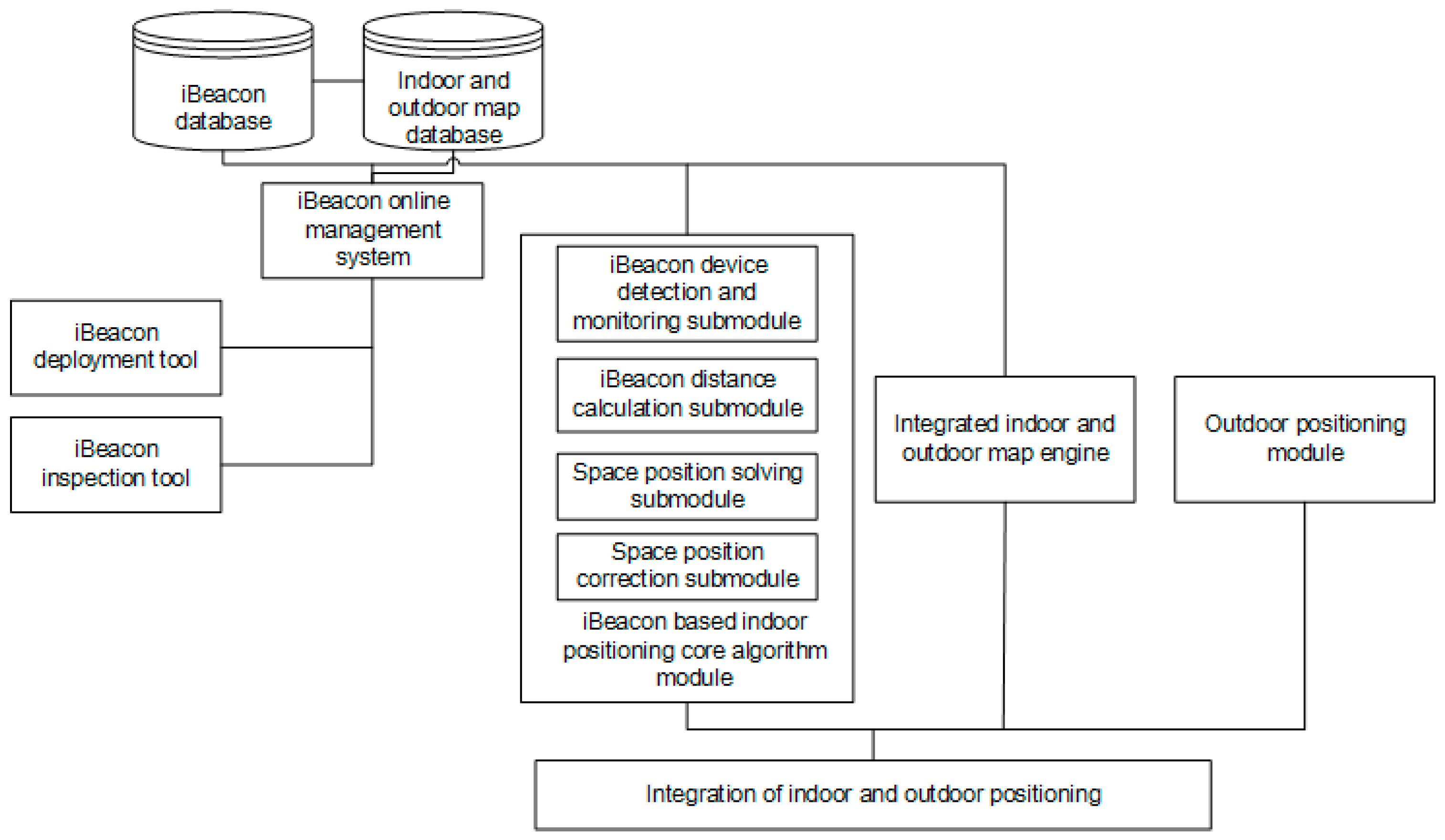

3.2. Indoor Positioning Technology

- An iBeacon database and an indoor map database provide the iBeacon and map data services for indoor and outdoor positioning.

- An iBeacon online management system allows the iBeacons and the indoor and outdoor maps to be accessed and managed online.

- An iBeacon deployment tool and an iBeacon inspection tool are used to deploy and monitor iBeacons.

- An iBeacon-based indoor positioning core algorithm module provides the indoor positioning functions. This module has four core submodules: the iBeacon device-detection and monitoring submodule, the iBeacon distance calculation submodule, the spatial position-solving submodule and the spatial position correction submodule.

- An integrated indoor and outdoor map engine provides indoor and outdoor map services.

- An outdoor positioning module uses GPS to provide an outdoor positioning service.

- An indoor and outdoor positioning integration service combines the indoor and outdoor services. It is based on the iBeacon indoor positioning core algorithm module and the outdoor positioning module.
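The distance calculation and position-solving submodules listed above can be sketched as follows. The text does not state which ranging model or solver the system uses, so this example makes two assumptions: distance is estimated from received signal strength with the common log-distance path-loss model, and the position is solved in 2D from exactly three beacons by linearizing the circle equations. The function names and the default values of `tx_power` and `path_loss_exponent` are illustrative only.

```python
def rssi_to_distance(rssi, tx_power=-59.0, path_loss_exponent=2.0):
    """Estimate distance in metres from RSSI in dBm (log-distance path-loss model).

    tx_power is the expected RSSI at 1 m. Both defaults are illustrative and
    would need calibration for a real deployment.
    """
    return 10.0 ** ((tx_power - rssi) / (10.0 * path_loss_exponent))


def trilaterate(beacons, distances):
    """Solve for (x, y) given three beacon positions and three ranges.

    Subtracting the first circle equation from the other two removes the
    quadratic terms and leaves a 2x2 linear system, solved by Cramer's rule.
    """
    (x1, y1), (x2, y2), (x3, y3) = beacons
    d1, d2, d3 = distances
    a11, a12 = 2 * (x2 - x1), 2 * (y2 - y1)
    a21, a22 = 2 * (x3 - x1), 2 * (y3 - y1)
    b1 = d1**2 - d2**2 + x2**2 - x1**2 + y2**2 - y1**2
    b2 = d1**2 - d3**2 + x3**2 - x1**2 + y3**2 - y1**2
    det = a11 * a22 - a12 * a21
    if abs(det) < 1e-12:
        raise ValueError("beacons are collinear; the position is not unique")
    return ((b1 * a22 - a12 * b2) / det, (a11 * b2 - a21 * b1) / det)
```

With noisy ranges from more than three beacons, a least-squares solve over all beacon pairs would be the usual extension, and the position correction submodule would then constrain the result, for example against the indoor map. Neither step is shown here because the source does not describe them.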

4. A Prototype System Study: City Firefighting Rescue Application of the Indoor and Outdoor Positioning Service

5. Discussion

6. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

| Compared Aspect | 3D Modeling Using the Technologies Proposed in This Paper | Modeling with 3DMAX and SketchUp |
|---|---|---|
| Area | 50 km² | 5 km² |
| Number of buildings | 4930 | 642 |
| Ground precision | 10 cm | Cannot be fused with the ground |
| Time | 75 h | 20 days |
| Number of computer cluster nodes | 4 | 2 |
| Personnel | 1 | 2 |
| Elevation precision | 20 cm | 2 m |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhang, X.; Chen, Y.; Yu, L.; Wang, W.; Wu, Q. Three-Dimensional Modeling and Indoor Positioning for Urban Emergency Response. ISPRS Int. J. Geo-Inf. 2017, 6, 214. https://doi.org/10.3390/ijgi6070214