1. Introduction

Social researchers take a great interest in household practices, including family dynamics and child-rearing (e.g., [1,2]), practices around meals [3], sleep [4], assisted living arrangements and mobile health solutions (e.g., [5,6]), homeworking [7] and energy-related practices [8]. Existing social research methods are both qualitative and quantitative, and a combination of the two is often used for pragmatic and constructivist purposes [9].

Qualitative methods are used to acquire rich, in-depth data. Observations and open-ended interviews are particularly effective in capturing the meanings participants attach to various aspects of their everyday lives and relations (e.g., [10]). Quantitative methods such as questionnaires and surveys capture qualitative information in formalised ways for computational processing, and are widely used in large-scale studies on demographics, household economics and social attitudes (e.g., [11,12]). Time use diaries are also used to log activity sequences [13] and to seek evidence of life changes and social evolution [14]. Efforts to harmonise time use surveys across Europe have delivered guidelines on activity coding for analysing time use data (Harmonised European Time Use Surveys, HETUS) [15]. Nevertheless, interviews and observations, sometimes supported by activity sensors and video cameras [16], are commonly used to cross-validate what goes on and to calibrate and amplify the meaning of the diary evidence.

Sensor-generated data are becoming widely available, and activity recognition [17] has thrived as a research topic in recent years, with applications in areas such as smart homes and assisted living. Researchers have investigated activity recognition methods using data obtained from various types of sensors, for instance, video cameras [18], wearables [19] and sensors embedded in smartphones [20]. Such rich contextual information has been used in various activity-specific studies. For example, Williams et al. [4] discuss the use of accelerometers to study people’s sleep patterns. Amft and Tröster [21] study people’s dietary behaviour by using inertial sensors to recognise movements, a sensor collar to recognise swallowing and an ear microphone to recognise chewing. Wang et al. [22] detect accidental falls of elderly people using accelerometers and cardiotachometers.

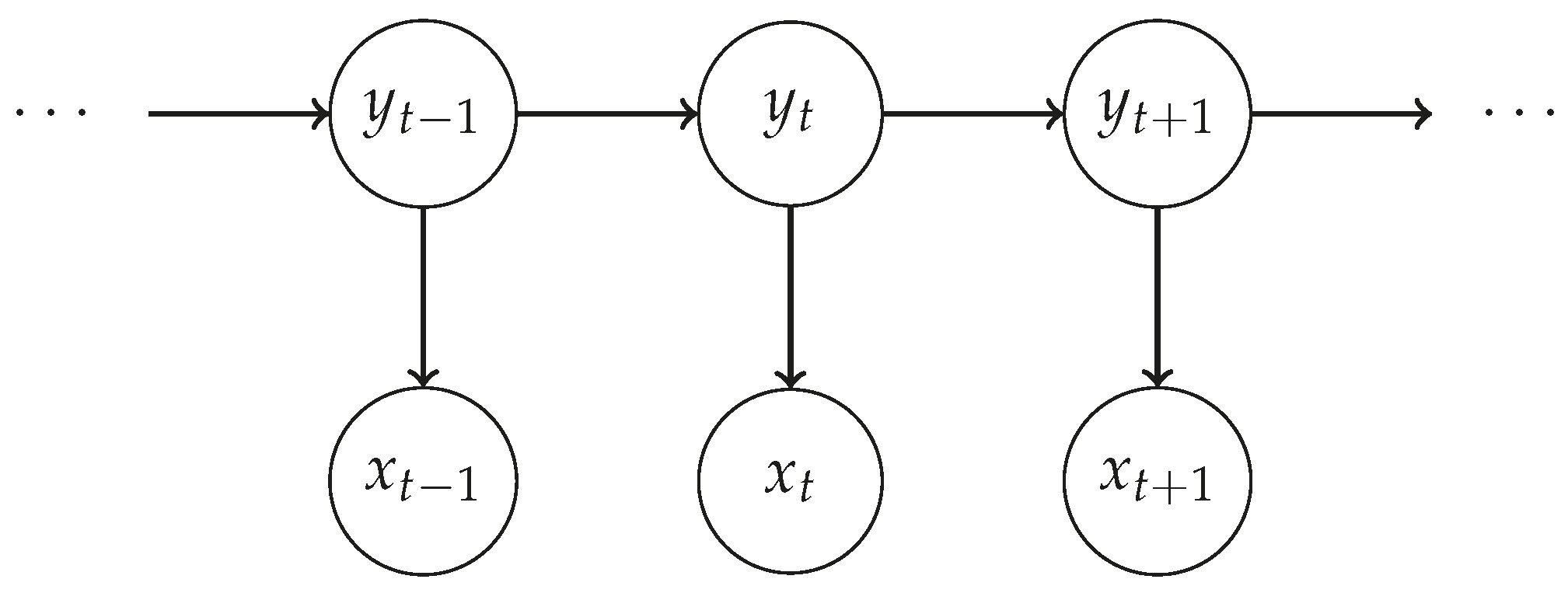

Numerous algorithms have been proposed for general activity recognition in the literature, most of which rest on the assumption that, by sensing the environment, it is possible to infer which activities people are performing. Dynamic Bayesian Networks (e.g., [23]), Hidden Markov Models (e.g., [24]) and Conditional Random Fields (e.g., [25]) are popular because they can infer latent random variables from observed sequences of sensor-generated data. Other approaches rely on Artificial Neural Networks (e.g., [26]). A more detailed discussion is given in Section 2.

To evaluate the adequacy of inferences about activities derived from sensor data, records of the activities taking place, obtained by direct observation, can be used as the so-called “ground truth”. In the literature, there are three main types of approaches. The first relies on video cameras to record what participants are doing during an experiment. For example, Lin and Fu [23] use multiple cameras and floor sensors to track their participants. Although data quality can be guaranteed in a controlled lab, this method is very intrusive and difficult to deploy in an actual home. A second common way of establishing ground truth is to ask participants to carry out a predefined list of tasks, again in a controlled environment. For example, Cook et al. [27] ask their participants to carry out scripted activities, which are predetermined and performed repeatedly. Both of these methods correspond to social research methods, such as questionnaires, surveys and interviews, in generating what Silverman calls “researcher-provoked” data [28]. The outcomes may suffer from bias introduced by the researchers provoking participants’ activities rather than observing them without interference. The third type of approach relies on human annotators to label sensor-generated data manually. For example, Wang et al. [29] conducted a survey with their participants to obtain a self-reported record of their main activities, and compared it with data annotated from video recordings. This type of approach relies heavily on the annotator’s knowledge of participants’ activities and understanding of their everyday practices, and may also be challenged by discrepancies between the researcher-provoked survey and video data and the non-provoked sensor-generated data.

In this study, we consider two data sources. The first is “digital sensors” that generate activity data based on activity recognition derived from sensor-generated environment data (referred to as sensor data or sensor-generated data in the rest of the paper). The second is “human sensors” that generate activity data from participants’ self-reported time-use diaries (referred to as time use diary or self-reported data in the rest of the paper). To make inferences from sensor data about human activities, it is first necessary to calibrate the sensor data by matching the data to activities identified by the self-reported data. The calibration involves identifying the features in the sensor data that correlate best with the self-reported activities. This in turn requires a good measure of the agreement between the activities detected from sensor-generated data and those recorded in self-reported data.

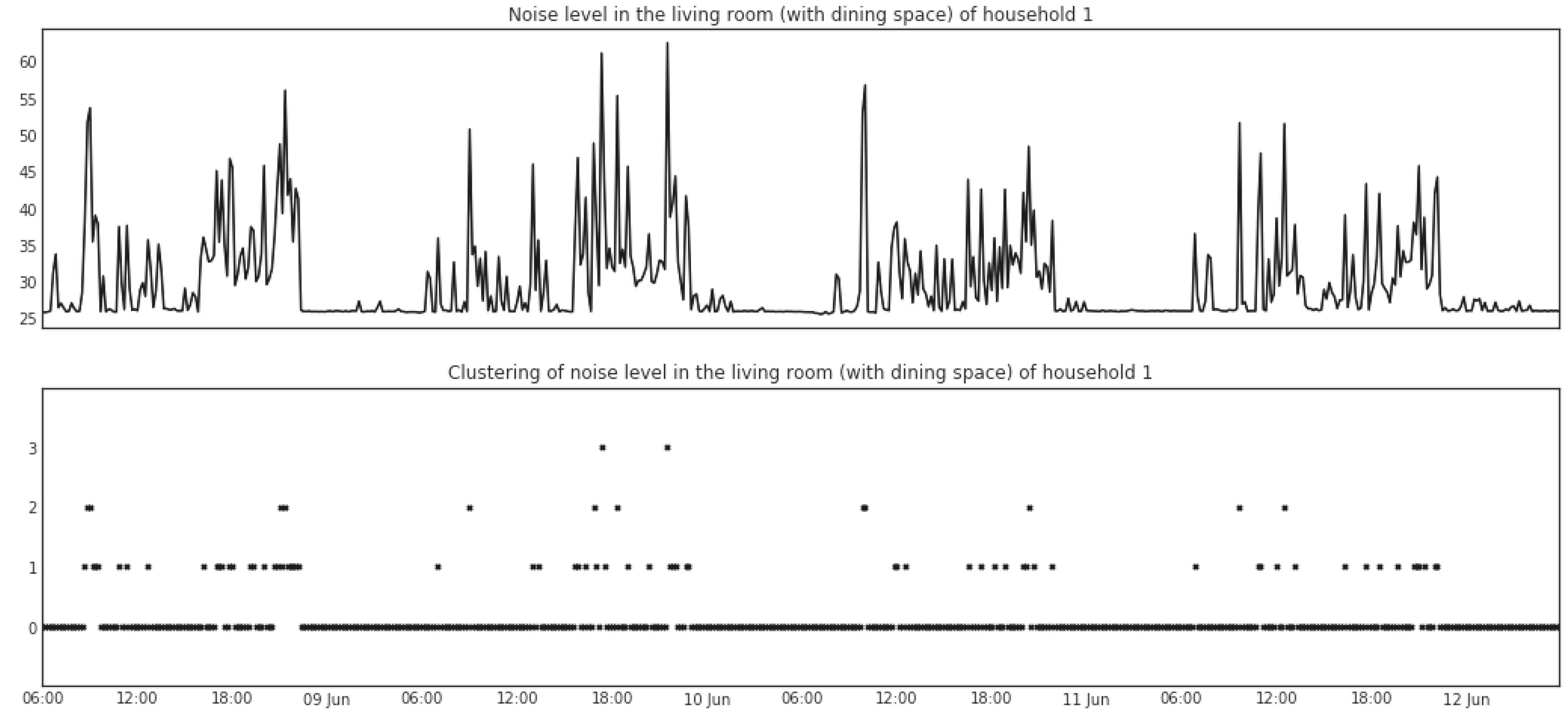

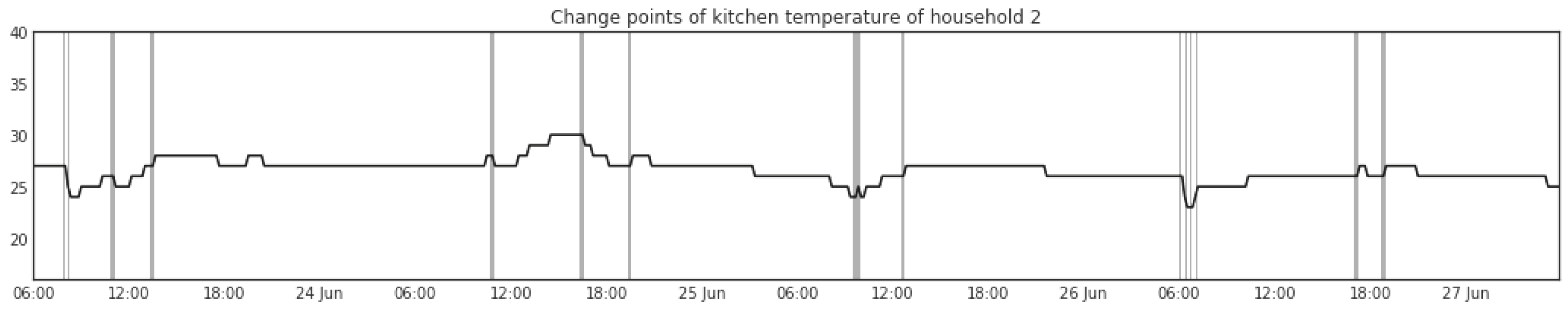

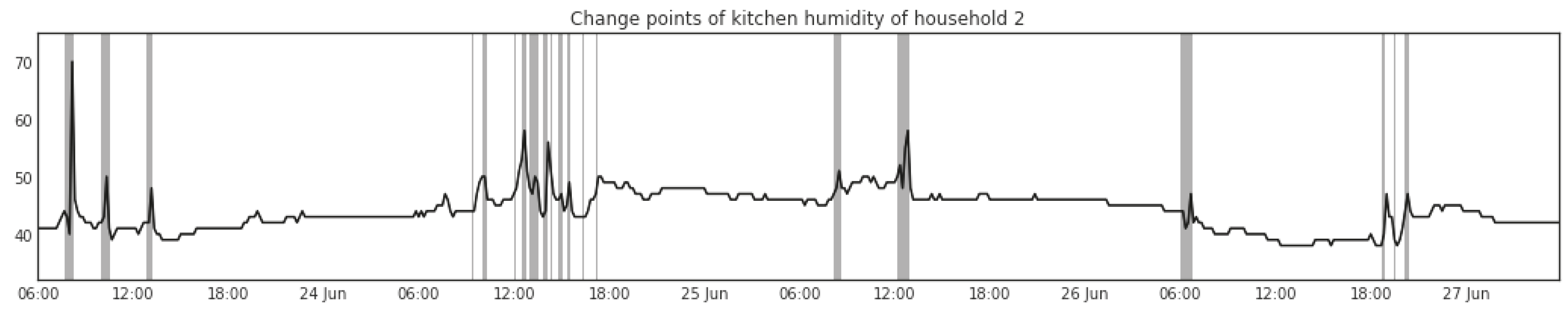

To illustrate how this can be done, we conducted a trial in three residential houses, collecting data over four consecutive days from a set of sensors and from a time use diary kept by the occupant (the human sensor) of each house. The sensors captured temperature, humidity, range (detecting movements in the house), noise (decibel levels), ambient light intensity (brightness) and energy consumption. For activity recognition, we adopt an unsupervised learning approach based on a Hidden Markov Model (HMM), i.e., only sensor-generated data are used to fit the model, which allows the model to discover patterns by itself rather than being fitted to unreliable labels from the time use diaries. We apply mean shift clustering [30] and change point detection [31] to extract features. To reduce computational cost, we adopt a correlation-based approach [32] for feature selection. To compare the data generated by the two types of sensors, we propose a method for measuring the agreement between them based on the Levenshtein distance [33].
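The correlation-based selection step of [32] is not detailed here. As a hedged illustration only, the Python sketch below assumes the common correlation-based feature selection (CFS) merit heuristic, which favours feature subsets whose members correlate with the activity label but not with one another. It also assumes absolute Pearson correlation as the correlation measure and a greedy forward search; the function names, feature names and data are all hypothetical.

```python
import math
from itertools import combinations
from typing import Mapping, Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient; returns 0.0 if either input is constant."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0
    return sxy / math.sqrt(sxx * syy)


def cfs_merit(features: Mapping[str, Sequence[float]],
              target: Sequence[float],
              subset: Sequence[str]) -> float:
    """CFS merit: k * mean|r_cf| / sqrt(k + k(k-1) * mean|r_ff|)."""
    k = len(subset)
    # mean feature-to-label correlation (relevance)
    r_cf = sum(abs(pearson(features[f], target)) for f in subset) / k
    # mean feature-to-feature correlation (redundancy)
    pairs = list(combinations(subset, 2))
    r_ff = (sum(abs(pearson(features[a], features[b])) for a, b in pairs)
            / len(pairs)) if pairs else 0.0
    return k * r_cf / math.sqrt(k + k * (k - 1) * r_ff)


def forward_select(features: Mapping[str, Sequence[float]],
                   target: Sequence[float]) -> list[str]:
    """Greedily add the feature that most increases the merit; stop when none does."""
    selected: list[str] = []
    best = 0.0
    remaining = sorted(features)
    while remaining:
        candidate = max(remaining,
                        key=lambda f: cfs_merit(features, target, selected + [f]))
        merit = cfs_merit(features, target, selected + [candidate])
        if merit <= best:
            break
        selected.append(candidate)
        remaining.remove(candidate)
        best = merit
    return selected


# Hypothetical data: 1 = activity recorded in a time slice, 0 = not recorded.
activity = [0, 0, 1, 1, 0, 1]
feats = {
    "kitchen_energy_change": [0, 0, 1, 1, 0, 1],  # tracks the activity exactly
    "hall_light_level": [1, 0, 1, 0, 0, 0],       # uncorrelated with the activity
}
print(forward_select(feats, activity))  # ['kitchen_energy_change']
```

Adding the uncorrelated feature lowers the merit from 1 to about 0.71, so the search stops. For n candidate features, this greedy search evaluates at most n(n+1)/2 subsets rather than all 2^n − 1 non-empty ones, which is where the saving in computational cost would come from.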

The contributions of this paper are three-fold. First, we present a new data collection framework for recognising activities at home, i.e., a mixed-methods approach that combines computational and qualitative types of non-provoked data: sensor-generated data and time use diaries. Second, we investigate the application of several feature extraction and feature selection methods for activity recognition using sensor-generated data. Third, we propose an evaluation method for measuring the agreement between sensor-supported activity recognition algorithms and the human-constructed diary. Compared with our previous work [34], this paper makes the following extensions: (1) we illustrate the trial setup procedure and discuss its design; (2) we use a larger data set of three households and investigate three additional activity types; (3) we demonstrate the use of feature selection in activity recognition to make the exploration of feature combinations tractable; and (4) we extend the analysis of results by triangulating with evidence from household interviews.

The rest of the paper is organised as follows. In Section 2, we discuss related work. In Section 3, we introduce the home settings. Thereafter, in Section 4, we describe the data collected for this study, including both the sensor data and the time use diary data. In Section 5, we show how features are extracted and selected and introduce our activity recognition algorithm. In Section 6, we present the metric for evaluating the agreement between the activities recognised from sensor-generated data and those reported by the participants, and analyse the results using evidence gathered from household interviews. Finally, we conclude with some possible extensions in Section 7.

2. Related Work

In this section, we discuss recently published work on automated activity recognition in home-like environments in terms of the sensors used, the activities detected, and the recognition methods adopted or proposed.

Early works (e.g., [23,35]) were concerned with designing frameworks for providing services based on predictions of residents’ actions. For example, Lin and Fu [23] leveraged K-means clustering and domain knowledge to create context out of raw sensor data and combined this with Dynamic Bayesian Networks (DBNs) to learn multi-user preferences in a smart home. Their testbed allows location tracking and activity recognition via cameras, floor sensors, motion detectors, and temperature and light sensors in order to recommend services to multiple residents, such as turning on the TV or lights in various locations or playing music.

More recently, the CASAS smart home project [36] enabled the detection of multi-resident activities and interactions in a testbed featuring motion, temperature, water and stove (ad-hoc) usage sensors, energy monitors, lighting controls and contact detectors for cooking pots, phone books and medicine containers. Using the testbed, Singla et al. [37] applied Hidden Markov Models (HMMs) to perform real-time recognition of activities of daily living. Their work explores seven types of individual activities (filling a medication dispenser, hanging up clothes, reading a magazine, sweeping the floor, setting the table, watering plants and preparing dinner) and four types of cooperative activities (moving furniture, playing checkers, paying bills, and gathering and packing picnic food). Validated against the same data set, Hsu et al. [25] employed Conditional Random Fields (CRFs) with iterative and decomposition inference strategies. They found that data association of non-obtrusive sensor data is important for improving activity recognition performance in a multi-resident environment. Chiang et al. [38] further improved on the work in [25] with DBNs that extend coupled HMMs by adding vertices to model both individual and cooperative activities.

The single-occupancy datasets from CASAS have also attracted many researchers. For example, Fatima et al. [39] adopted a Support Vector Machine (SVM) based kernel fusion approach for activity recognition and evaluated it on the Milan2009 and Aruba datasets. Fang et al. [40] evaluated a neural network for activity recognition on a dataset of two volunteers. Krishnan and Cook [41] evaluated an online sliding-window based approach for activity recognition on a dataset of three single-occupancy houses. The authors showed that weighting sensor events by mutual information and adding past contextual information to the feature vector lead to better performance. To analyse possible changes in cognitive or physical health, Dawadi et al. [42] introduced the notion of an activity curve and proposed a permutation-based algorithm for detecting changes in activity routines. The authors validated their approach with two years of smart home sensor data. In these works, different kinds of sensors were used, such as temperature and light sensors, and motion and water/stove usage sensors. The recognised activities range from common ones such as eating and sleeping to more specific ones such as taking medication.

ARAS [43] is a smart home data set collected from two houses with multiple residents. The two houses were equipped with force-sensitive resistors, pressure mats, contact sensors, proximity sensors, sonar distance sensors, photocells, temperature sensors and infrared receivers. Twenty-seven types of activities were labelled, such as watching TV, studying, using the internet/telephone/toilet and preparing/having meals. With the ARAS dataset, Prossegger and Bouchachia [44] illustrated the effectiveness of an extension to the incremental decision tree algorithm ID5R, which induces decision trees with leaf/class nodes augmented by contextual information in the form of activity frequency.

With a focus on elderly care, the CARE project [45] has carried out research on automatic monitoring of human activities in domestic environments. For example, Kasteren et al. [46] investigated two probabilistic models, HMMs and CRFs, for activity recognition in a home setting and proposed using Bluetooth headsets for data annotation. Fourteen state-change sensors were placed on the doors, cupboards, refrigerator and toilet flush. Seven types of activities were annotated by the participants themselves: sleeping, having breakfast, showering, eating dinner, drinking, toileting and leaving the house. Kasteren et al. [24] provided a summary of probabilistic models used in activity recognition and evaluated their performance on datasets of three single-occupant households. Further work by Ordonez et al. [47] evaluated transfer learning with HMMs on a dataset of three houses with the same sensor deployment and labelled activity types, showing the potential of reusing experience in new target houses where little annotated data is available.

Besides probabilistic models, neural network models are becoming popular for recognising human activities. For example, Fan et al. [26] studied three neural network structures (Gated Recurrent Unit, Long Short-Term Memory and Recurrent Neural Network) and showed that a simple structure that remembers history as meta-layers outperformed recurrent networks. The sensors they used include a grid-eye infrared array, force and noise sensors, and electrical current detectors. For model training, the participants performed scripted activities in a home testbed: eating, watching TV, reading books, sleeping and friends visiting. Singh et al. [48] showed that Long Short-Term Memory classifiers outperformed probabilistic models such as HMMs and CRFs when raw sensor data were used. Laput et al. [49] proposed a general-purpose sensing approach with a single sensor board capable of detecting temperature, humidity, light intensity and colour, motion, sound, air pressure, WiFi RSSI, magnetism (magnetometer) and electromagnetic interference (EMI sensor). The sensors were deployed in five different locations: a kitchen, an office, a workshop, a common area and a classroom. For activity recognition, a supervised approach based on SVM and a two-stage clustering approach with an AutoEncoder were used. The authors showed the merit of the sensor features with respect to their contribution to the recognition of 38 types of activities.

While several other approaches exist for activity recognition and capture, they mostly employ only wearable sensors (see [19] for a recent survey) and thus cannot be applied in multi-modal smart-home scenarios with fixed, unobtrusive and ambient sensors. In addition, because activity recognition in the home environment is a time-series problem, supervised algorithms that do not incorporate temporal dependence may perform poorly, so such works are not reviewed here.

Time use diaries are widely used for collecting activity data. To validate time use diaries, Kelly et al. [16] tested the feasibility of using wearable cameras. Participants were asked to wear a camera while keeping a record of their time use over a 24-hour period. During an interview with each participant afterwards, the images were used as prompts to reconstruct the activity sequences and improve upon the activity record. No significant differences were found between the diary and camera data with respect to the aggregate totals of daily time use. However, for discrete activities, the diaries recorded a mean of 19.2 activities per day, while the image-prompted interviews revealed 41.1 activities per day. This raises concerns about using data collected from time use diaries directly to train activity recognition models.

In this work, we use a suite of fixed sensors. For activity recognition, we build our model on HMMs. In particular, we investigate the use of mean shift clustering and change point detection techniques for feature extraction. A correlation-based feature selection method is applied to reduce computational cost. Our work differs from similar studies in that we adopt a mixed-methods approach to the problem of recognising activities at home and evaluate its effectiveness using a formal framework.

6. Agreement Evaluation

6.1. Evaluation Metric

In the previous section, we introduced the activity recognition framework. By fitting an HMM to the data generated by the sensors, sequences of hidden states can be extracted. In this section, we illustrate how to evaluate the agreement between the state sequences generated by the HMMs and the activity sequences recorded in the time use diary, which quantifies inter-rater/observer reliability.
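How the hidden state sequences are decoded is not spelled out here. A common choice, assumed in the Python sketch below, is Viterbi decoding, which returns the single most likely state path under given HMM parameters. The two-state model, its probabilities and the binarised observations are hypothetical placeholders rather than values fitted in the trial, and the fitting step itself (for example, Baum–Welch) is omitted.

```python
import math
from typing import Sequence


def _log(p: float) -> float:
    # log-probabilities avoid underflow on long sequences; log(0) -> -inf
    return math.log(p) if p > 0 else -math.inf


def viterbi(obs: Sequence[int],
            start: Sequence[float],
            trans: Sequence[Sequence[float]],
            emit: Sequence[Sequence[float]]) -> list[int]:
    """Most likely hidden-state path of an HMM with discrete emissions."""
    n = len(start)
    score = [_log(start[k]) + _log(emit[k][obs[0]]) for k in range(n)]
    back: list[list[int]] = []
    for o in obs[1:]:
        prev, score, ptr = score, [], []
        for k in range(n):
            # best predecessor state for landing in state k at this step
            best = max(range(n), key=lambda j: prev[j] + _log(trans[j][k]))
            score.append(prev[best] + _log(trans[best][k]) + _log(emit[k][o]))
            ptr.append(best)
        back.append(ptr)
    # trace back from the most likely final state
    state = max(range(n), key=lambda k: score[k])
    path = [state]
    for ptr in reversed(back):
        state = ptr[state]
        path.append(state)
    return path[::-1]


# Hypothetical model: state 0 = "activity absent", state 1 = "activity present";
# observation 0/1 = low/high value of one binarised sensor feature.
start = [0.8, 0.2]
trans = [[0.9, 0.1], [0.2, 0.8]]
emit = [[0.9, 0.1], [0.2, 0.8]]
print(viterbi([0, 0, 1, 1, 1, 0, 0], start, trans, emit))  # [0, 0, 1, 1, 1, 0, 0]
```

In an unsupervised setting, the hidden states carry no labels, so deciding which state stands for "activity present" is a separate step that this sketch simply assumes.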

A time use diary may contain misreports in several ways. First, the start and end times of individual activities may not be recorded accurately, i.e., they may be earlier or later than the actual occurrence. This is called time shifting. Second, some activities may have occurred but not been recorded, which results in missing values. Pairwise comparisons such as precision and recall may exaggerate the dissimilarity introduced by such noise. Thus, we need an agreement evaluation metric that alleviates these effects.
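To make the point about time shifting concrete, the Python sketch below uses hypothetical sequences with one element per time slice. It compares a diary episode with a predicted episode of the same duration that starts one slice late. Slice-by-slice precision and recall both fall to 2/3, even though the two episodes differ only by the shift.

```python
from typing import Sequence


def precision_recall(predicted: Sequence[int],
                     recorded: Sequence[int]) -> tuple[float, float]:
    """Slice-by-slice precision and recall of a binary predicted sequence."""
    pairs = list(zip(predicted, recorded))
    tp = sum(1 for p, r in pairs if p == 1 and r == 1)
    fp = sum(1 for p, r in pairs if p == 1 and r == 0)
    fn = sum(1 for p, r in pairs if p == 0 and r == 1)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


recorded = [0, 0, 1, 1, 1, 0, 0, 0]   # diary: activity in slices 2 to 4 (0-based)
predicted = [0, 0, 0, 1, 1, 1, 0, 0]  # sensors: same duration, one slice late
print(precision_recall(predicted, recorded))  # (0.6666666666666666, 0.6666666666666666)
```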

A suitable metric for this task is the Levenshtein distance (LD), also known as edit distance [33], which has been widely used for measuring the similarity between two sequences. It is defined as the minimum sum of weighted operations (insertions, deletions and substitutions) needed to transform one sequence into the other. Compared with pairwise evaluation of sequence data, an LD-based method can deal with the misreports described above. However, it is more computationally intensive.

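Formally, given two sequences $s$ and $q$, with $s_i$ denoting the $i$-th element of $s$ and $q_j$ the $j$-th element of $q$, the Levenshtein distance between these two sequences, $\mathrm{LD}(s,q) = \mathrm{lev}_{s,q}(|s|,|q|)$, is defined by:

$$
\mathrm{lev}_{s,q}(i,j) =
\begin{cases}
\sum_{k=1}^{i} c_d(s_k) & \text{if } j = 0,\\
\sum_{k=1}^{j} c_i(q_k) & \text{if } i = 0,\\
\min
\begin{cases}
\mathrm{lev}_{s,q}(i-1,j) + c_d(s_i)\\
\mathrm{lev}_{s,q}(i,j-1) + c_i(q_j)\\
\mathrm{lev}_{s,q}(i-1,j-1) + c_s(s_i,q_j)\,\mathbb{1}_{(s_i \neq q_j)}
\end{cases}
& \text{otherwise,}
\end{cases}
$$

where $\mathbb{1}_{(s_i \neq q_j)}$ is an indicator function that equals 0 when $s_i = q_j$ and equals 1 otherwise. The three lines in the inner bracket correspond to the three operations transforming $s$ into $q$, i.e., deletion, insertion and substitution. $c_d$, $c_i$ and $c_s$ are respectively the costs associated with the deletion, insertion and substitution operations; they may depend on the elements involved.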
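The Levenshtein recurrence can be evaluated with a dynamic-programming table. The Python sketch below is a stand-alone illustration, not the weighted-levenshtein package used in this work. It fills the table row by row, and the deletion, insertion and substitution costs are passed in as functions; the function and parameter names are hypothetical. With unit costs, it reduces to the classic edit distance.

```python
from typing import Callable, Hashable, Sequence

Cost1 = Callable[[Hashable], float]
Cost2 = Callable[[Hashable, Hashable], float]


def weighted_levenshtein(s: Sequence[Hashable], q: Sequence[Hashable],
                         del_cost: Cost1, ins_cost: Cost1, sub_cost: Cost2) -> float:
    """Minimum total cost of deletions, insertions and substitutions turning s into q."""
    n, m = len(s), len(q)
    # d[i][j] = cost of turning the first i elements of s into the first j of q;
    # Python indices are 0-based, so s[i - 1] is the i-th element of s.
    d = [[0.0] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        d[i][0] = d[i - 1][0] + del_cost(s[i - 1])  # delete all of s[:i]
    for j in range(1, m + 1):
        d[0][j] = d[0][j - 1] + ins_cost(q[j - 1])  # insert all of q[:j]
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            a, b = s[i - 1], q[j - 1]
            d[i][j] = min(
                d[i - 1][j] + del_cost(a),                             # deletion
                d[i][j - 1] + ins_cost(b),                             # insertion
                d[i - 1][j - 1] + (sub_cost(a, b) if a != b else 0.0),  # substitution
            )
    return d[n][m]


def unit_cost(*_: Hashable) -> float:
    """Every operation costs 1 (classic, unweighted edit distance)."""
    return 1.0


print(weighted_levenshtein("kitten", "sitting", unit_cost, unit_cost, unit_cost))  # 3.0
```

The table has (|s| + 1)(|q| + 1) cells, each filled in constant time, which is the extra computation an LD-based comparison incurs relative to a single slice-by-slice pass.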

In our case, the inputs to the Levenshtein distance function are two sequences of labels for a given type of activity: one generated by the HMMs and the other consisting of the corresponding activity labels recorded in the time use diary. The elements of both sequences take one of two values: “0”, indicating the absence of the activity, and “1”, indicating its presence. In our agreement evaluation, we aim to minimise the difference introduced by slight time shifting and mis-recording of activities. For these reasons, we set the costs of the deletion, insertion and substitution operations as follows. The cost of substituting “1” with “0” is set lower than that of substituting “0” with “1”. This gives less penalty to false positives than to false negatives, i.e., the agreement is lower when the activities recorded in the time use diaries are not recognised from the sensor data. The costs of inserting and deleting “0” are chosen to reduce the penalty introduced by time shifting. We set the cost of inserting and deleting “1” to 100, which effectively disables these two operations. The output is the minimum cost of the operations needed to transform the predicted sequences into those recorded in the time use diaries; lower values indicate higher agreement. The implementation is based on the Python package weighted-levenshtein [66].
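To see why operations on “0” can absorb time shifting, consider a hedged worked example. Write $\varepsilon$ for the cost of inserting or deleting “0”, and write $c_{1\to 0}$ and $c_{0\to 1}$ for the costs of substituting “1” with “0” and “0” with “1”. This notation is introduced here only. Assume that 100 exceeds both $2\varepsilon$ and $c_{1\to 0} + c_{0\to 1}$. Let the predicted sequence $s$ contain the diary episode $q$ shifted one time slice earlier:

$$
s = (0,1,1,0), \qquad q = (0,0,1,1),
$$

$$
\mathrm{LD}(s,q) = \min\Bigl(\underbrace{c_{1\to 0} + c_{0\to 1}}_{\text{substitute } s_2 \text{ and } s_4},\ \underbrace{2\varepsilon}_{\text{insert a leading } 0,\ \text{delete the trailing } 0}\Bigr).
$$

Because $|s| = |q|$, any edit script contains as many insertions as deletions. If it contains none, positions 2 and 4 must both be substituted. Otherwise, it contains at least one insertion and one deletion, which costs at least $2\varepsilon$ when both act on “0” and at least 100 otherwise. By contrast, a slice-by-slice comparison of the same pair counts one false positive and one false negative.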

The costs of deletion, insertion and substitution are chosen to differentiate the influence of each operation on the perceived agreement. The effect of the magnitude of the differences between these costs will be investigated in future work.

6.2. Results and Analysis

In this section we discuss the results from applying the aforementioned activity recognition method to the collected data and compare them to the interview data of the corresponding households.

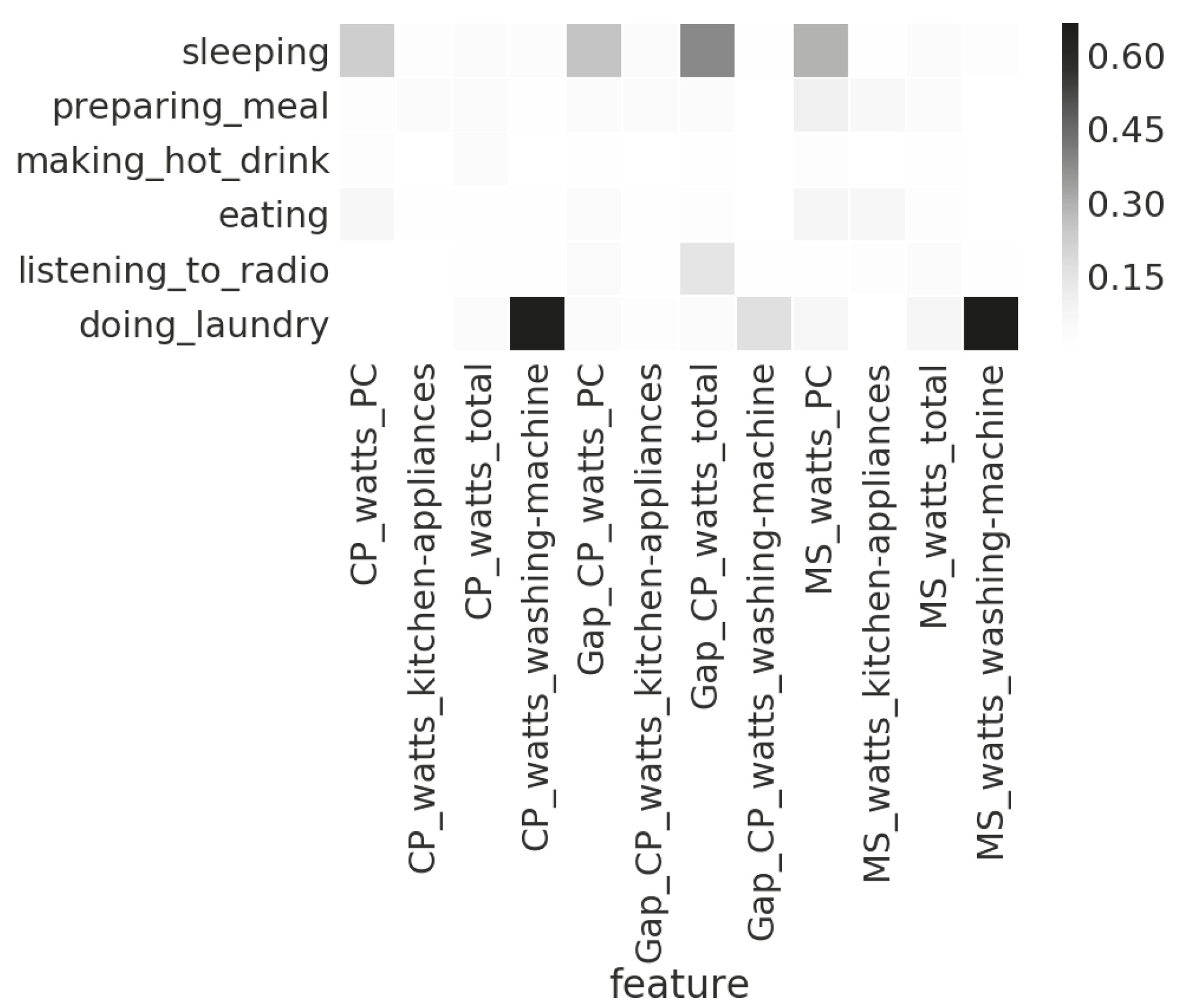

Table 7 lists the set of features that achieves the best agreement, in terms of the Levenshtein distance (LD), between the activity sequences generated by the HMMs and those recorded in the time use diary.

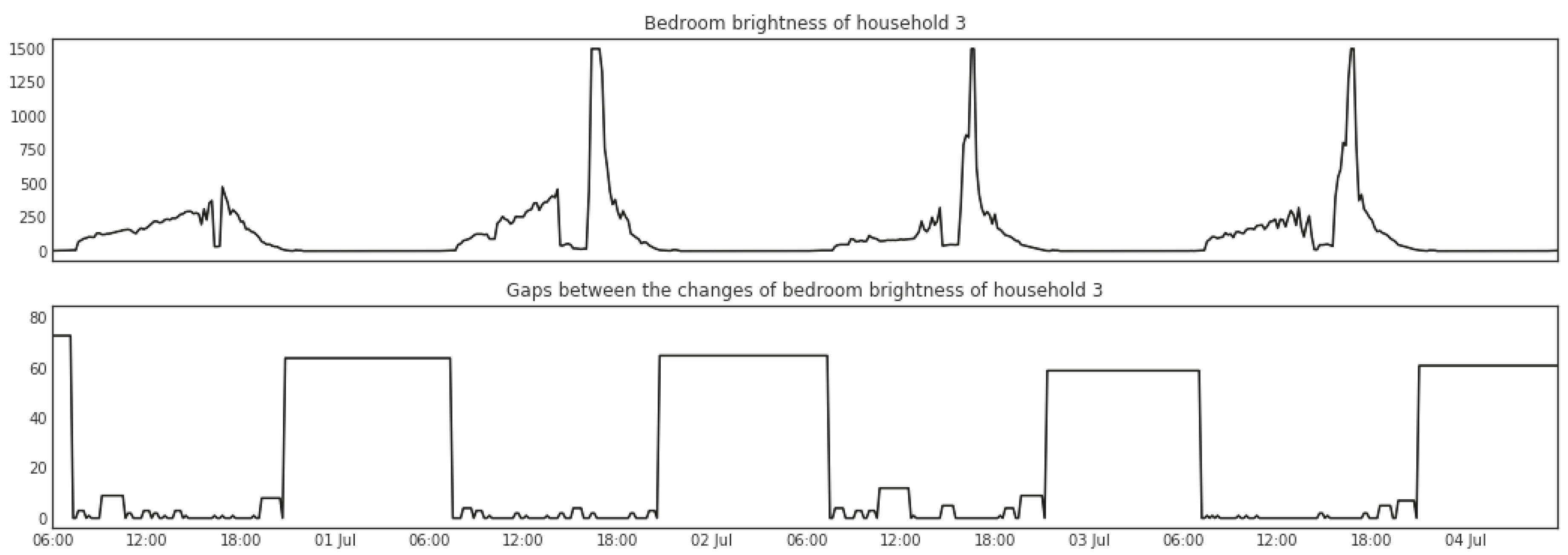

For the sleeping activity of Household 3, the best agreement is achieved by the feature capturing the gaps between changes of movement in the living room. The interview data indicate that the living room is the geographical centre of the house, i.e., the passage between the kitchen, the dining/study area and the sleeping area. The occupant keeps busy at home and spends a lot of time in the dining/study area and the living area.

For the activity of making hot drinks, the feature identifying humidity changes in the utility room and bedroom of Household 3 is contained in the subset of features that achieves the best agreement. A closer look at the time use diary shows that, when making coffee in the kitchen in the mornings, the occupant shaves in the bathroom, which is located next to the utility room and bedroom. The humidity change in this case is very likely caused by the humid bathroom.

The overlaps between the subsets of features that achieve the best agreement in recognising meal preparation and eating reflect the close relation between these two types of activities. This suggests that activities to which the sensors are insensitive may be recognised via sensor readings from causally related or correlated activities. In this case, recognising meal preparation can help recognise eating.

For the laundry activity, since an energy monitor was attached to the washing machine of each household, we expected the features capturing the energy consumption of the washing machine to achieve the best agreement. However, for Household 2, the feature subset that achieves the best agreement also contains the changes in the energy consumption of the kitchen appliances as well as in the total energy consumption. A closer look at the time use diary shows that, when doing laundry, the occupant quite often cooks or makes coffee around the same time. Notably, among the three households, the agreement between the HMM and the time use diary for the laundry activity is lowest in Household 2, which may indicate misreporting or that the reported laundry activities involve other elements, such as sorting, hanging out and folding.

Among the seven types of activities, listening to the radio has the lowest agreement (the largest LD) between the detections from the sensor-generated data and the records in the time use diaries. In the case of Household 2, the time use diary tells us that the occupant listens to the radio in the bedroom while getting up, in the kitchen while preparing meals and in the dining area when having meals. Similarly, the occupant in Household 3 listens to the radio in the kitchen when preparing meals and in the living room when relaxing. In both cases, the occupant uses more than one device for listening to the radio, in more than one place.

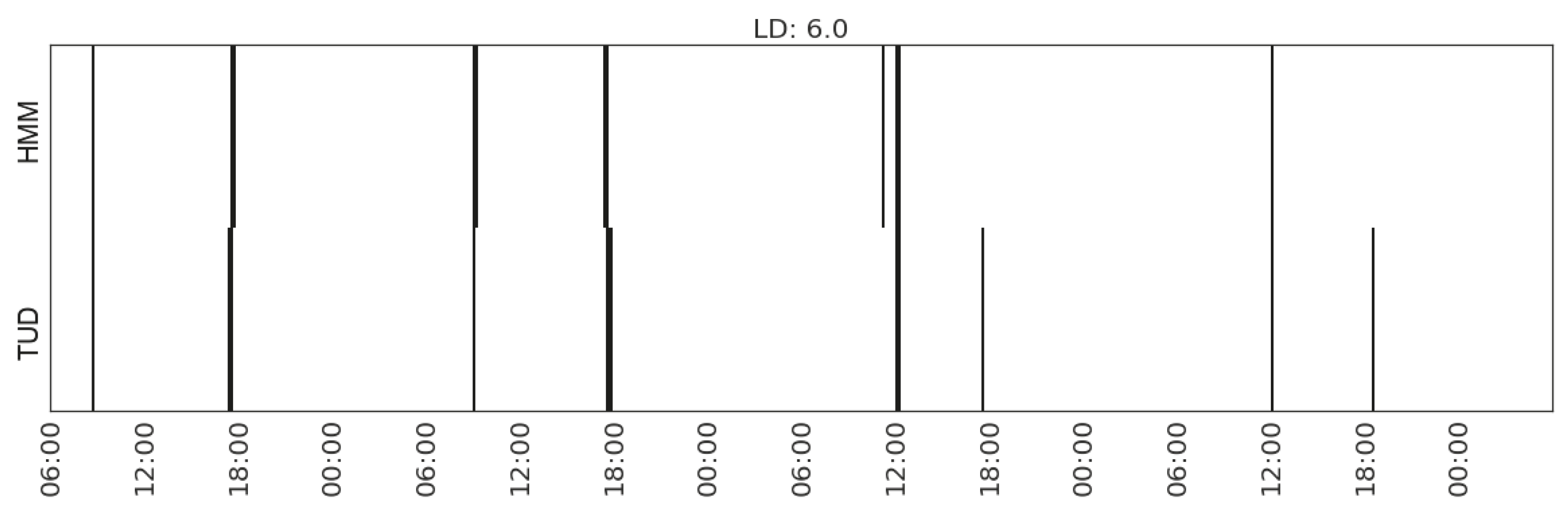

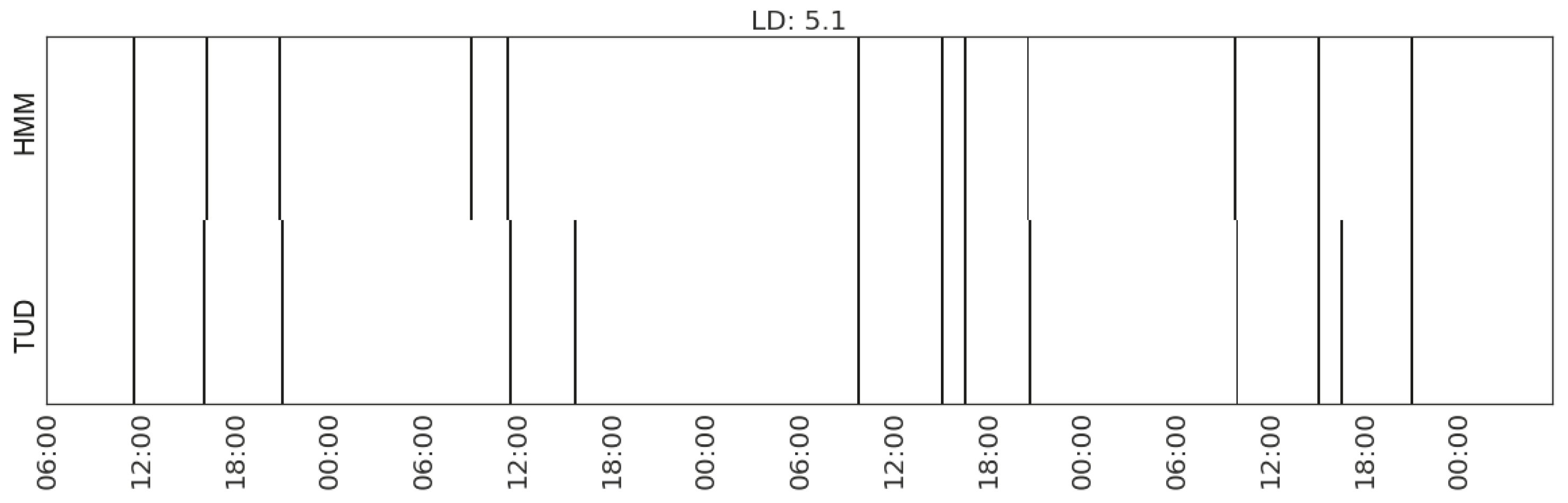

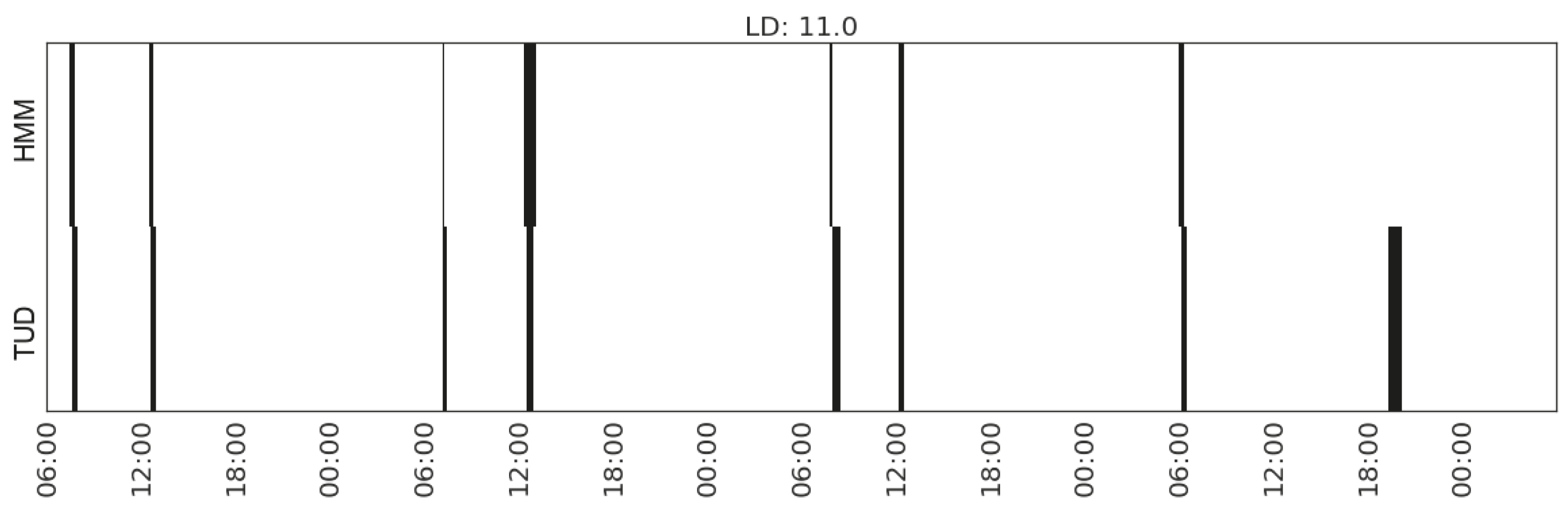

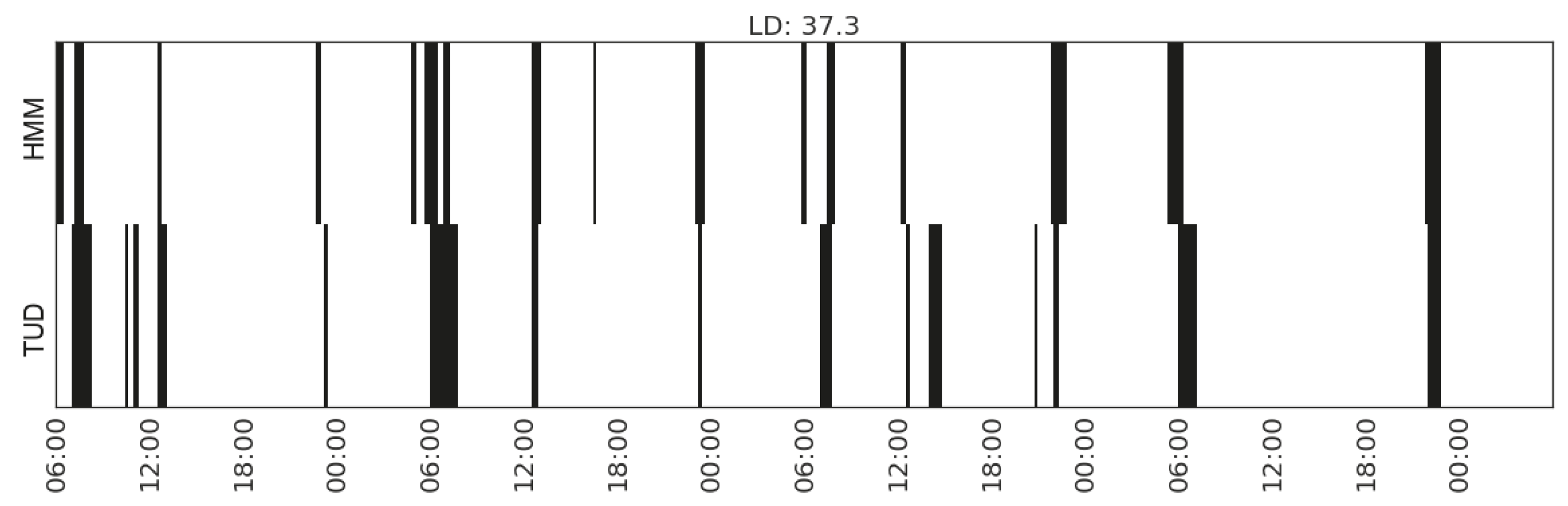

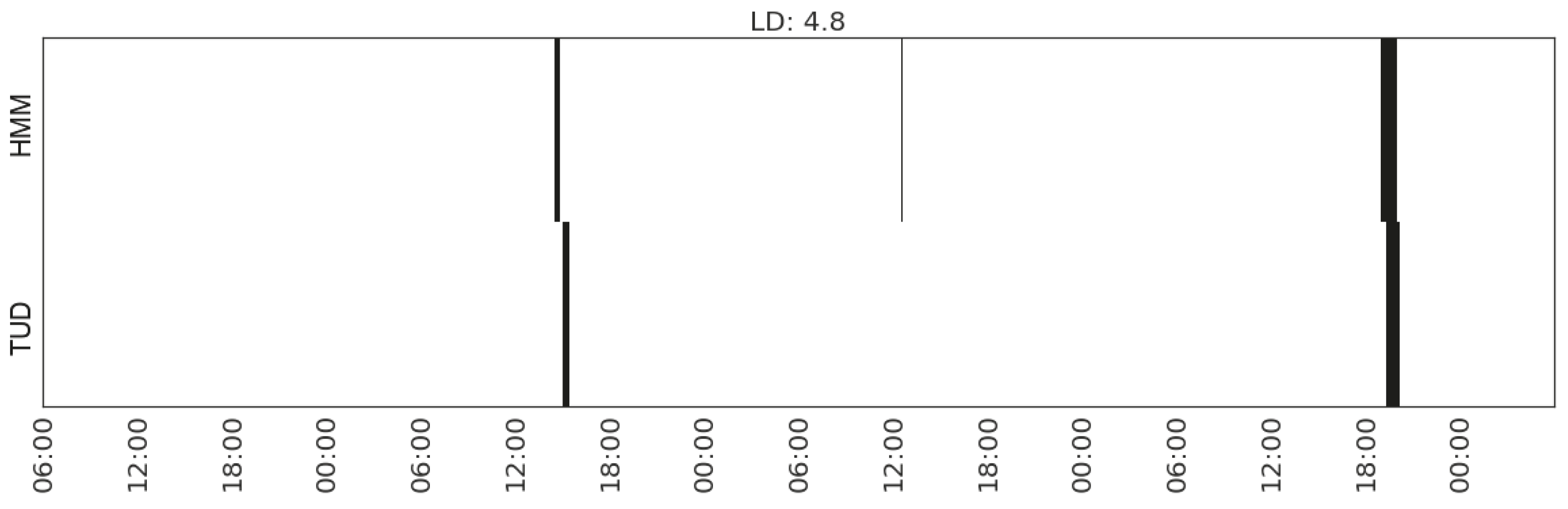

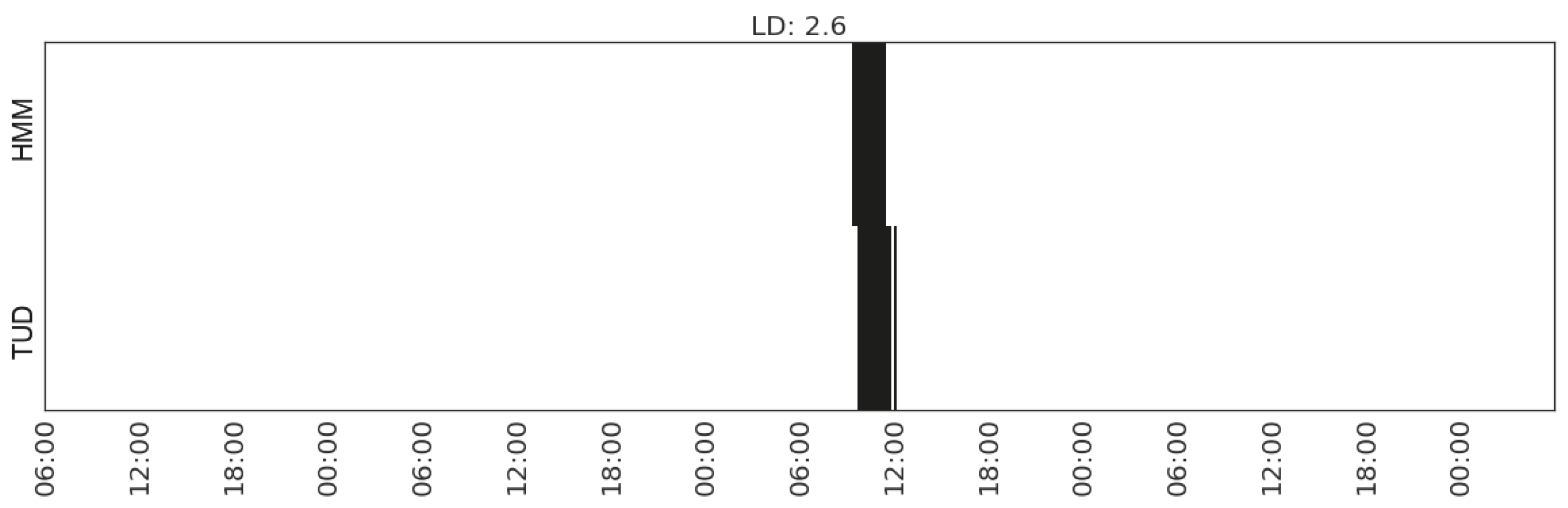

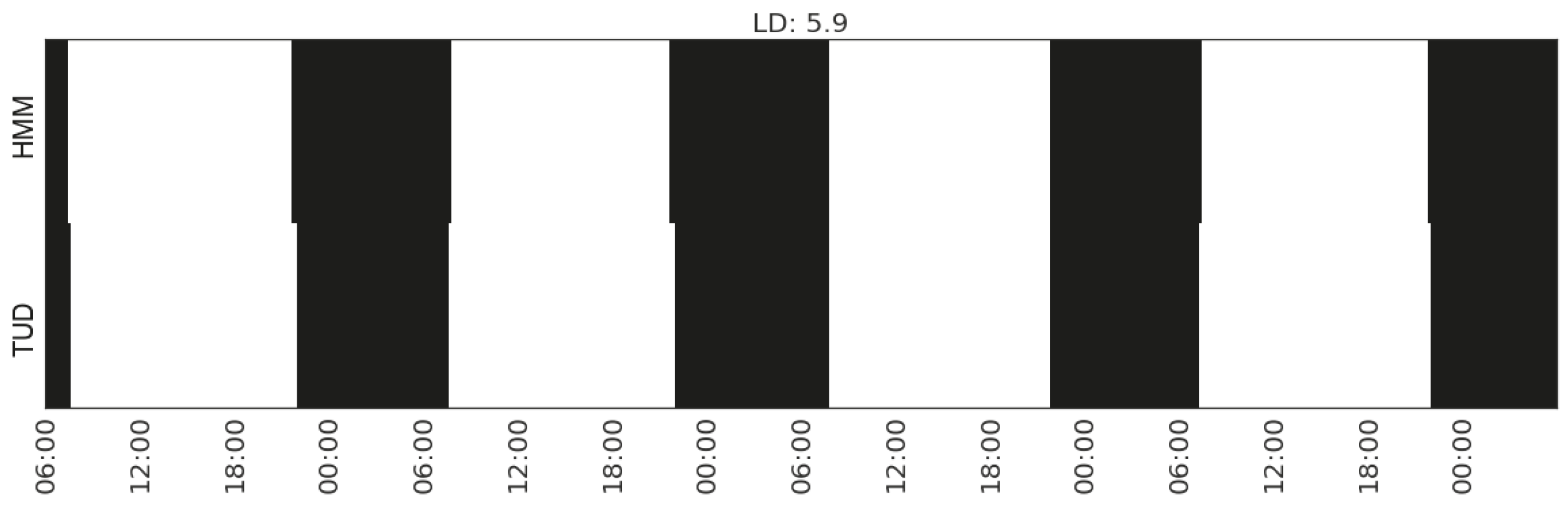

For illustration, we plot each type of activity detected from the sensor data against that recorded in the time use diaries (Figures 12–18). In each figure, the upper part shows the state sequences generated by an HMM using the selected set of features, and the lower part shows the activity sequences recorded in the time use diary (TUD). The black bins represent the time slices in which a particular activity is detected/recorded.

The activity durations generated by the HMMs mostly overlap with those recorded in the time use diary, with some local shifts along the timeline. The only exception in these seven plots is listening to the radio in Household 2, which suggests that the features used are not sufficient to distinguish occurrences of this activity. In some cases, the radio is used as background sound, which does not necessarily constitute the activity of listening to the radio.

7. Conclusions

In this paper, we presented a mixed-methods approach for recognising activities at home. In particular, we proposed a metric for evaluating the agreement between the activities predicted by models trained on sensor data and the activities recorded in a time use diary. We also investigated ways of extracting and selecting features from sensor-generated data for activity recognition.

The focus of this work is not on improving the recognition performance of particular models but on presenting a framework for quantifying how activity recognition models trained on sensor-generated data can be evaluated on the basis of their agreement with activities recorded in time use diaries. We demonstrate the usefulness of this framework through an experiment involving three trial households. The evaluation results can provide evidence about which types of sensors are more effective for detecting certain types of activities in a household. This may further help researchers understand the occupants’ daily activities and the contexts in which certain activities occur. The agreement between the sensor-generated data and the time use diary may also help to validate the quality of the diary.

This is ongoing research investigating the use of digital sensors for social research, using household practices as a testbed. At the time of writing, we are collecting data from three types of households: single occupants, families with children, and households of two or more adults. There are several directions for extending this work. We are adding a wearable wristband sensor to the setting to detect the proximity of participants to each sensor box via Bluetooth RSSI (received signal strength indicator). Such data will give us a more accurate reading of the presence and co-presence of particular occupants in different parts of their home, while also helping us obtain more accurate start and end times of certain activities. We will continue to investigate other activity recognition methods and feature selection techniques. In addition, we are interested in employing post hoc and assisted labelling mechanisms, for example, by asking participants to assign an agreement score to the activity sequences generated by our activity recognition models. In this way, another layer of agreement can be added to the evaluation.

References