The Univariate Collapsing Method for Portfolio Optimization

Abstract

1. Introduction

2. The Univariate Collapsing Method

2.1. Motivation

2.2. Model

2.3. Model Estimation and ES Computation

3. Sampling Portfolio Weights

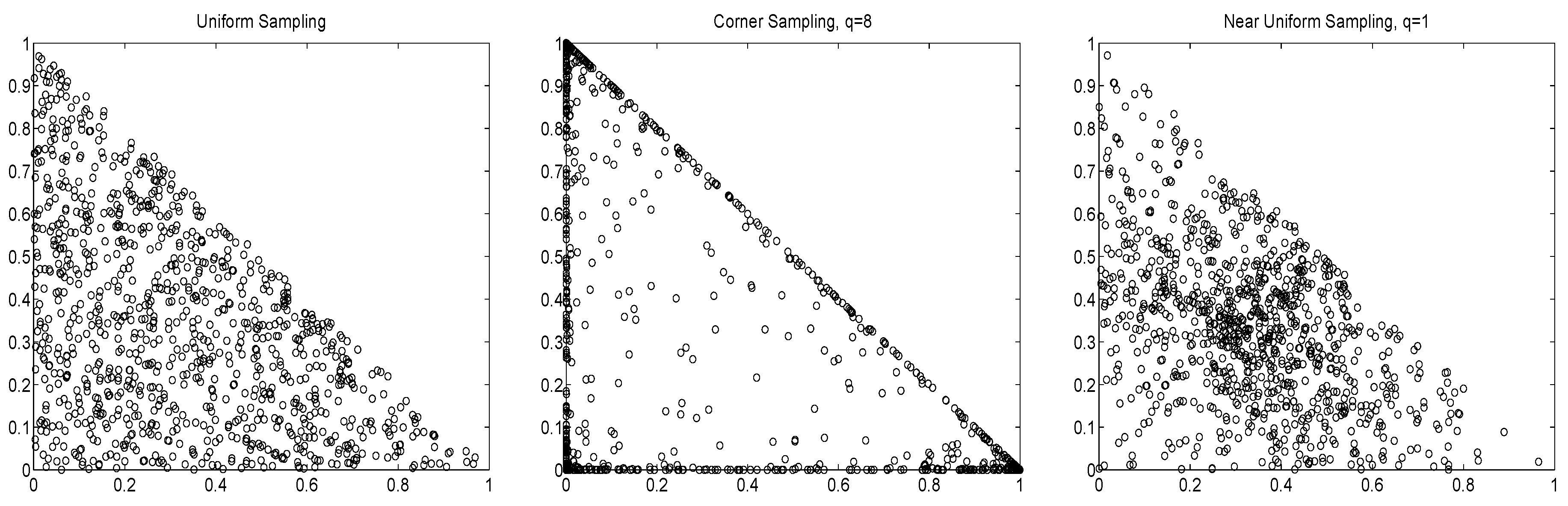

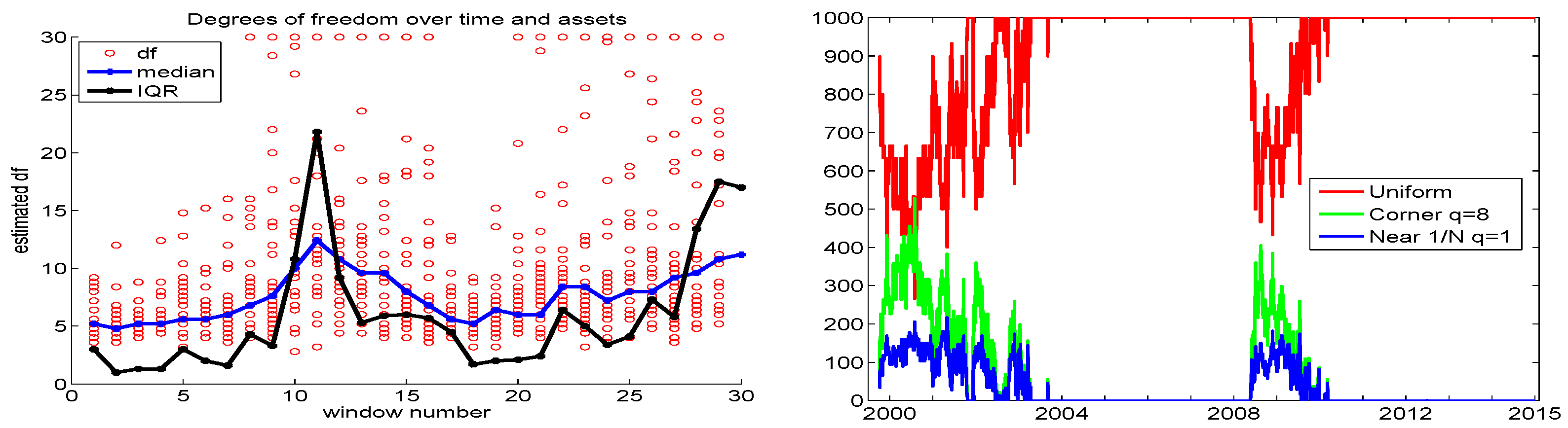

3.1. Uniform, Corner, and Near-Equally-Weighted

3.2. Objective, and First Illustration

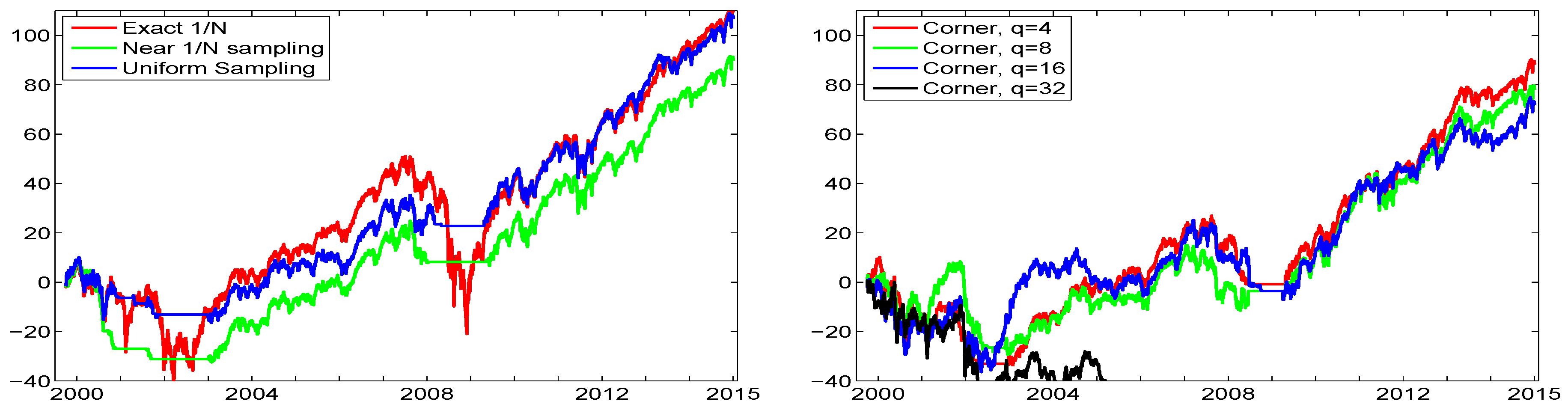

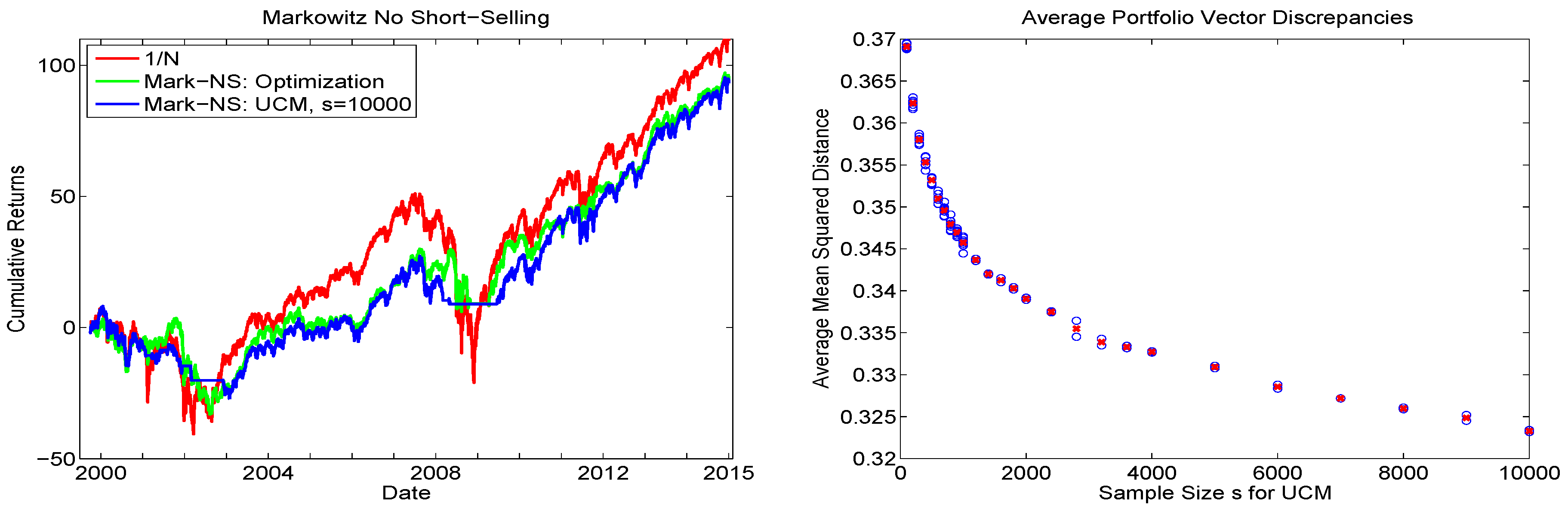

3.3. Sample Size Calibration via Markowitz

3.4. Data-Driven Sampling

3.5. Methodological Assessment

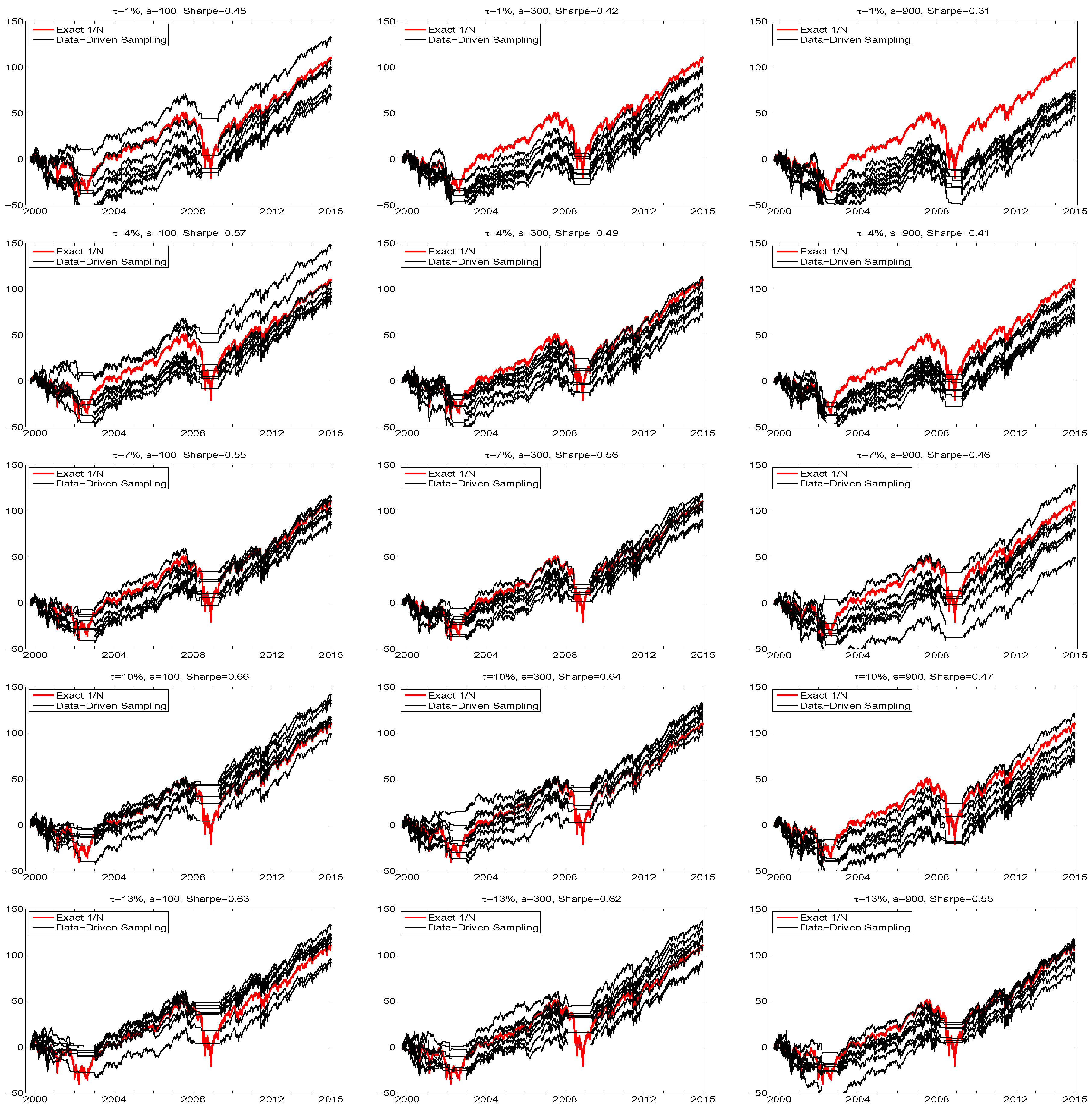

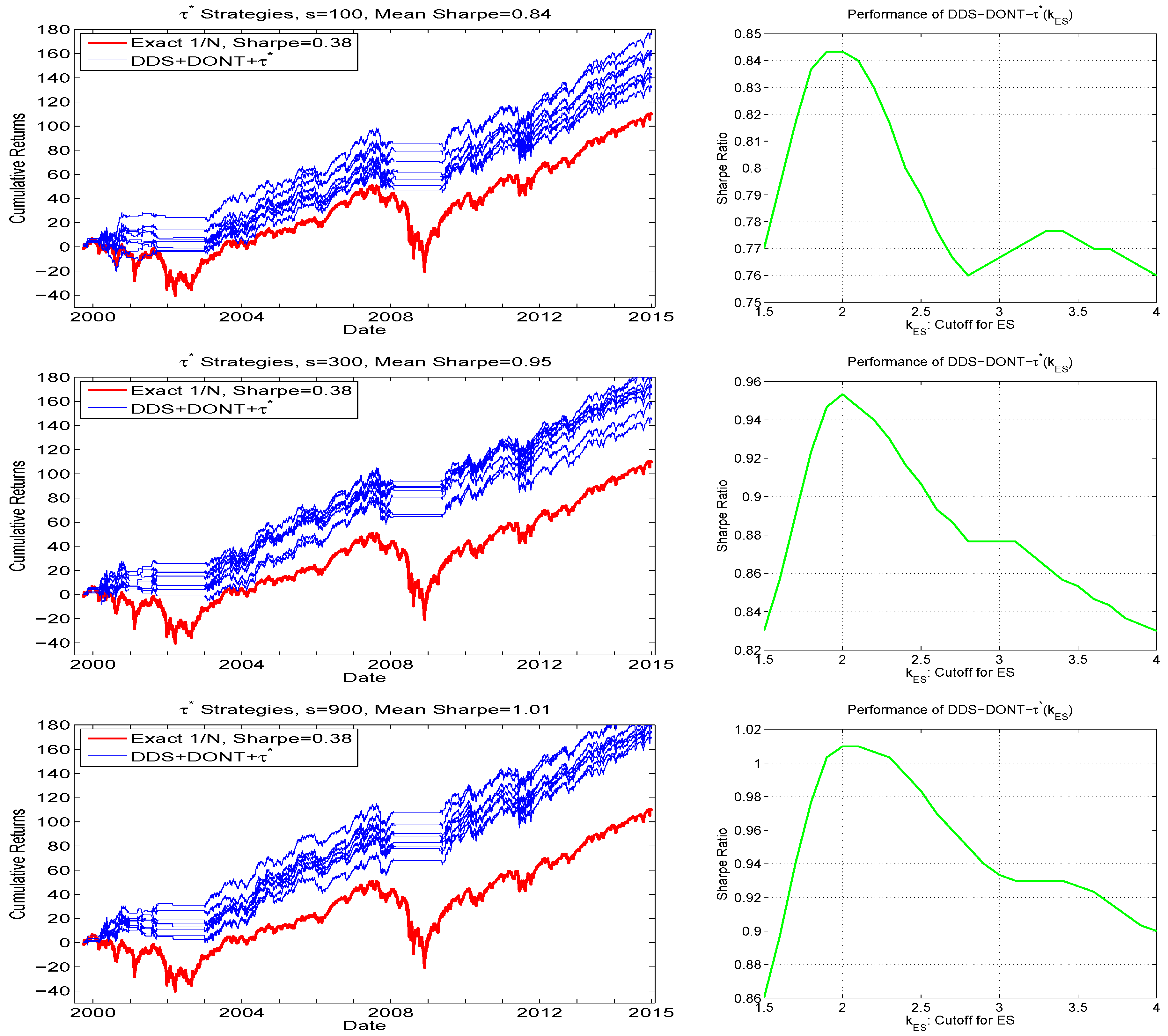

3.5.1. Performance Variation: Use of Hair Plots

3.5.2. Varying the Values of and s

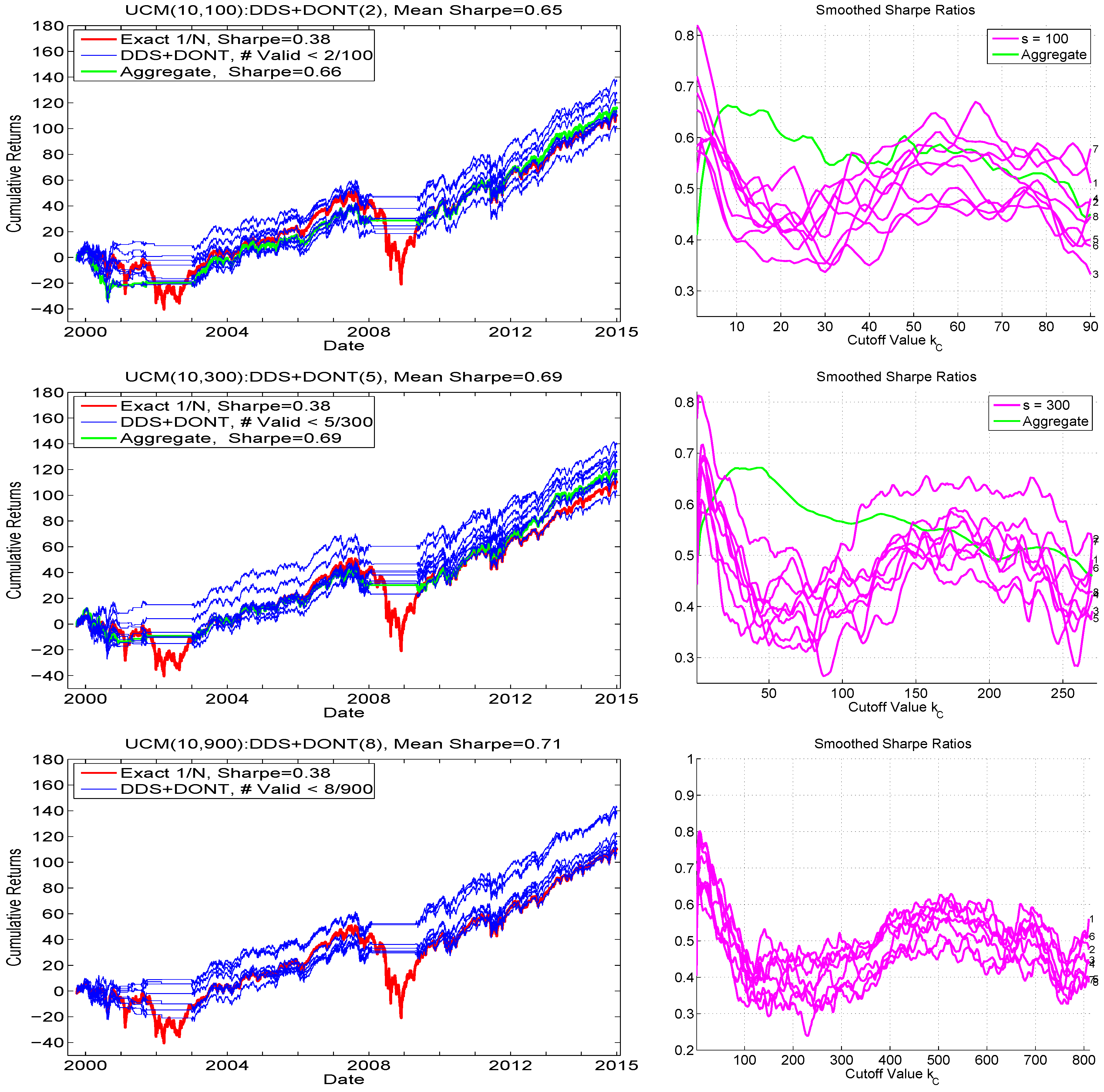

3.6. The DDS-DONT Sampling Method

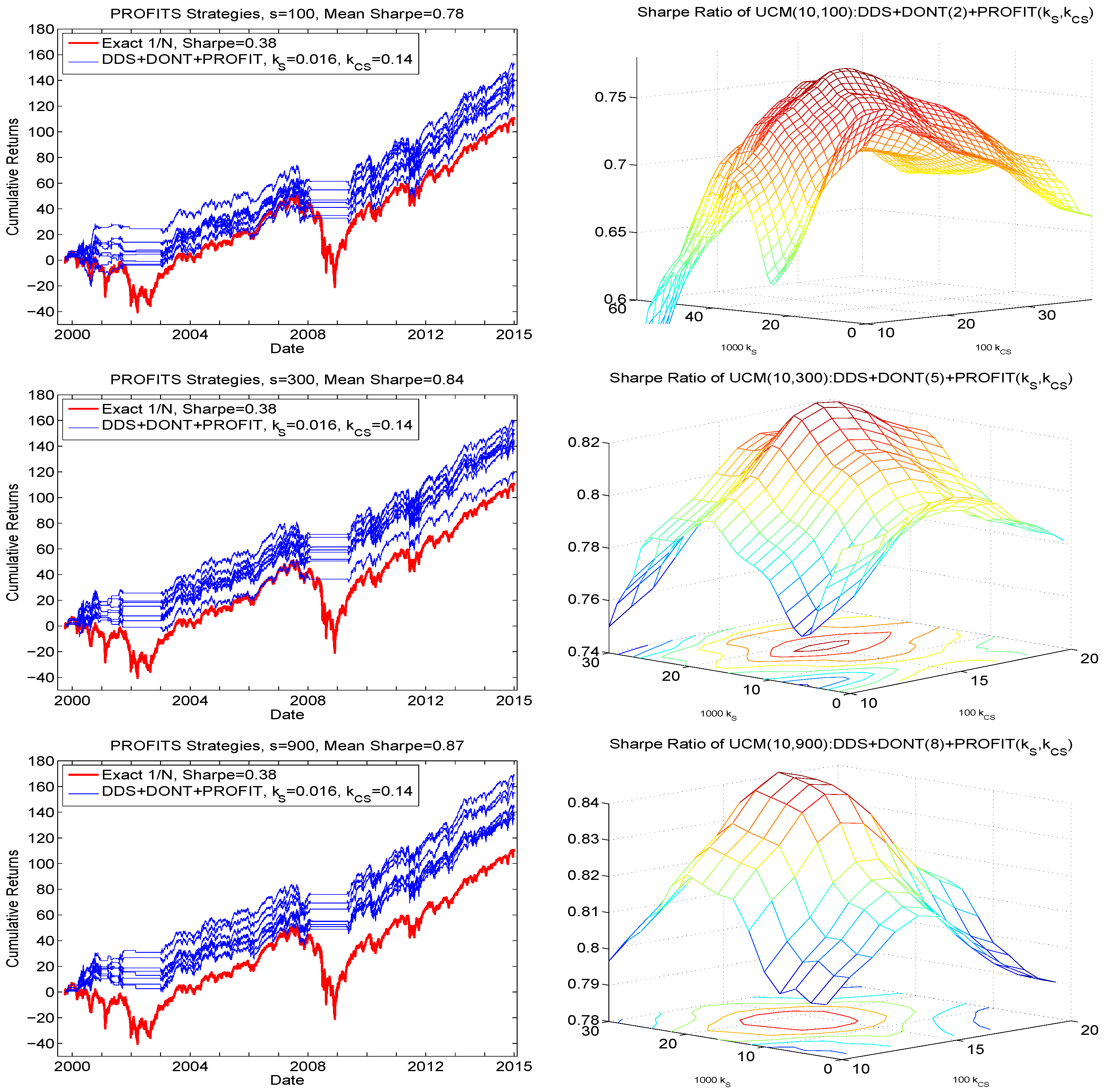

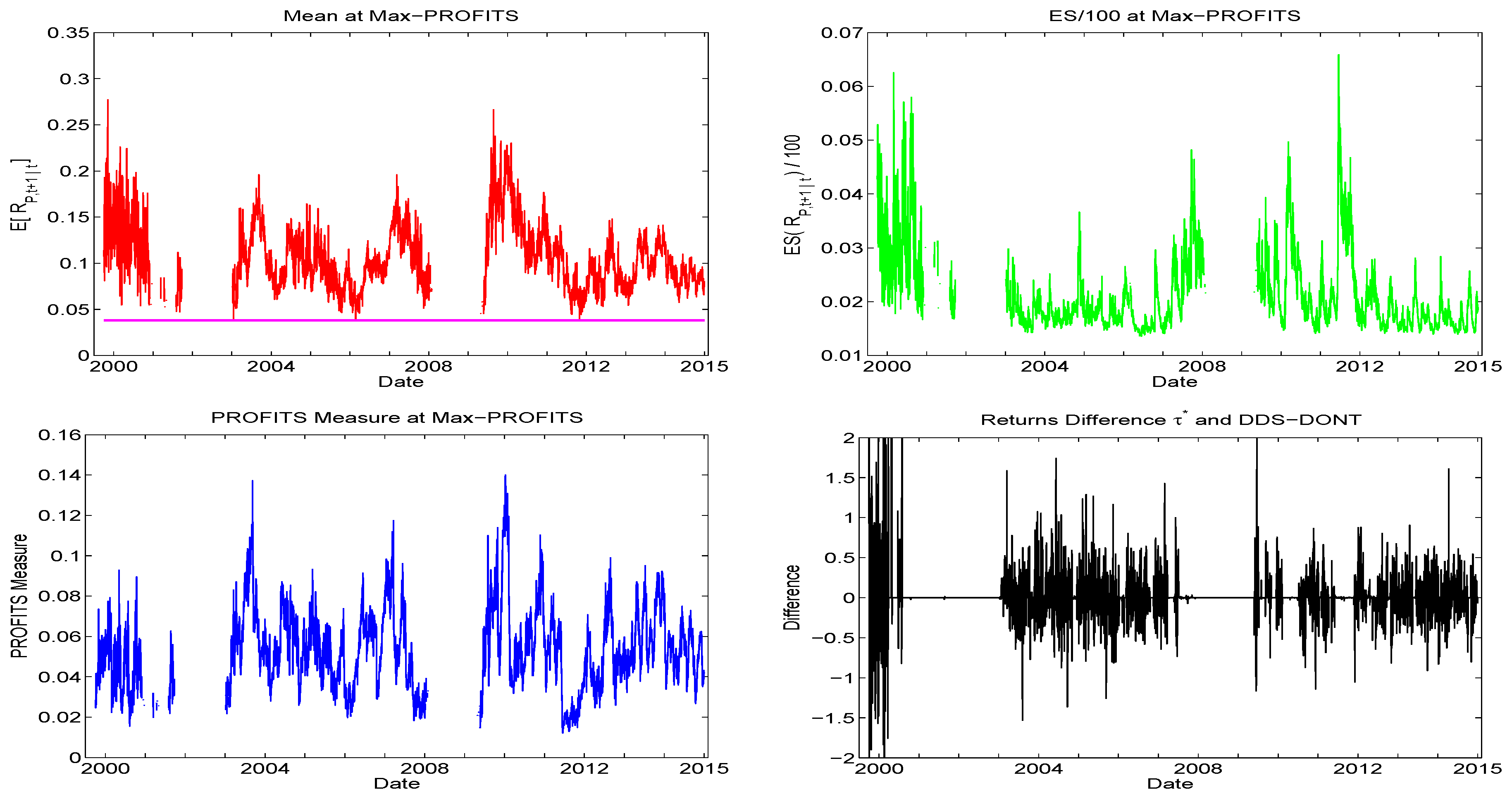

4. Enhancing Performance with PROFITS

4.1. PROFITS-Weighted Approach

4.2. Increasing Amid Favorable Conditions

- the number of random portfolios satisfying the mean constraint exceeds , and

- the ES corresponding to is less than a particular cutoff value, say ,

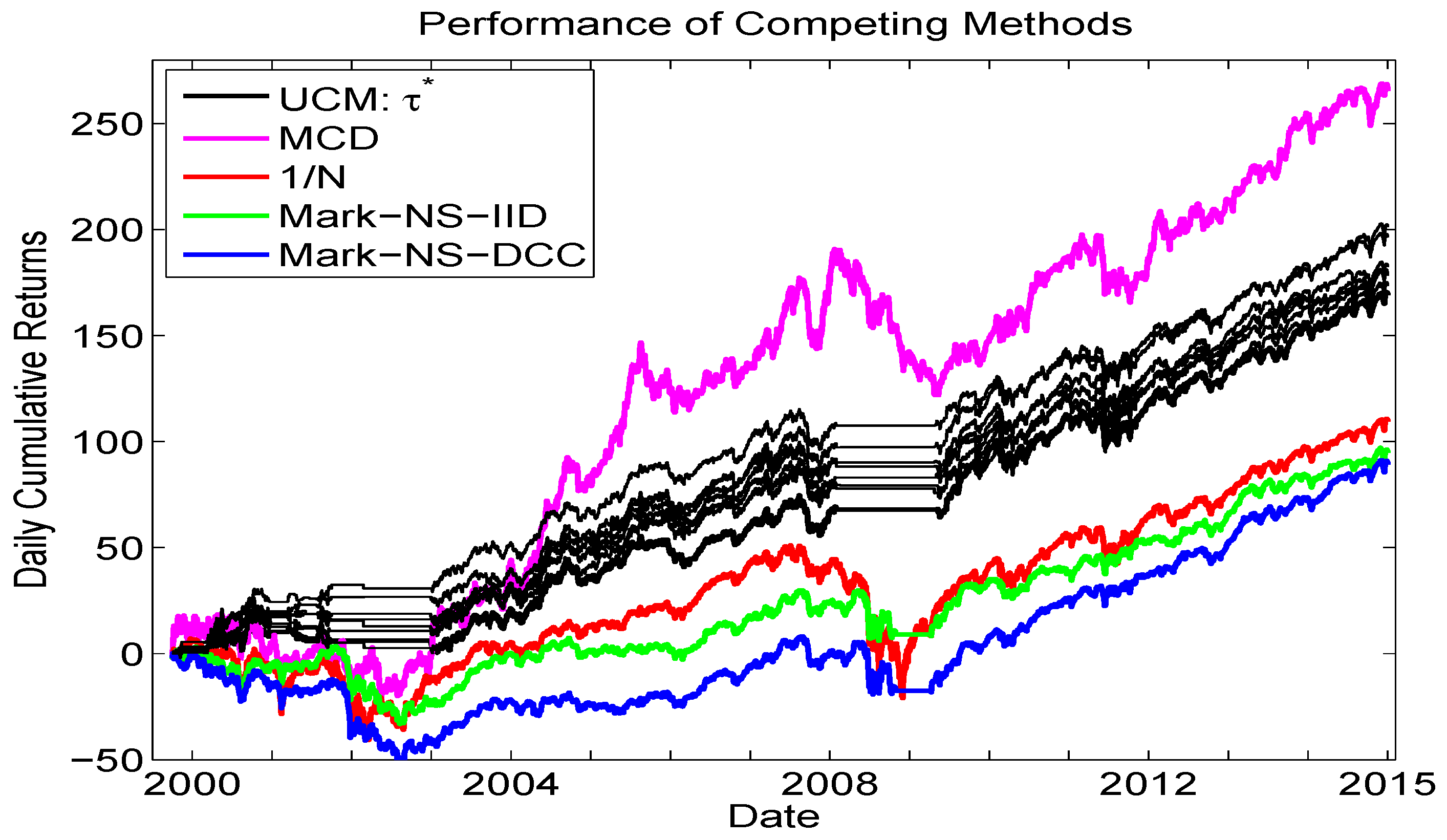

5. Performance Comparisons across Models

6. Conclusions

Acknowledgments

Conflicts of Interest

Appendix A. Mean Signal Improvement

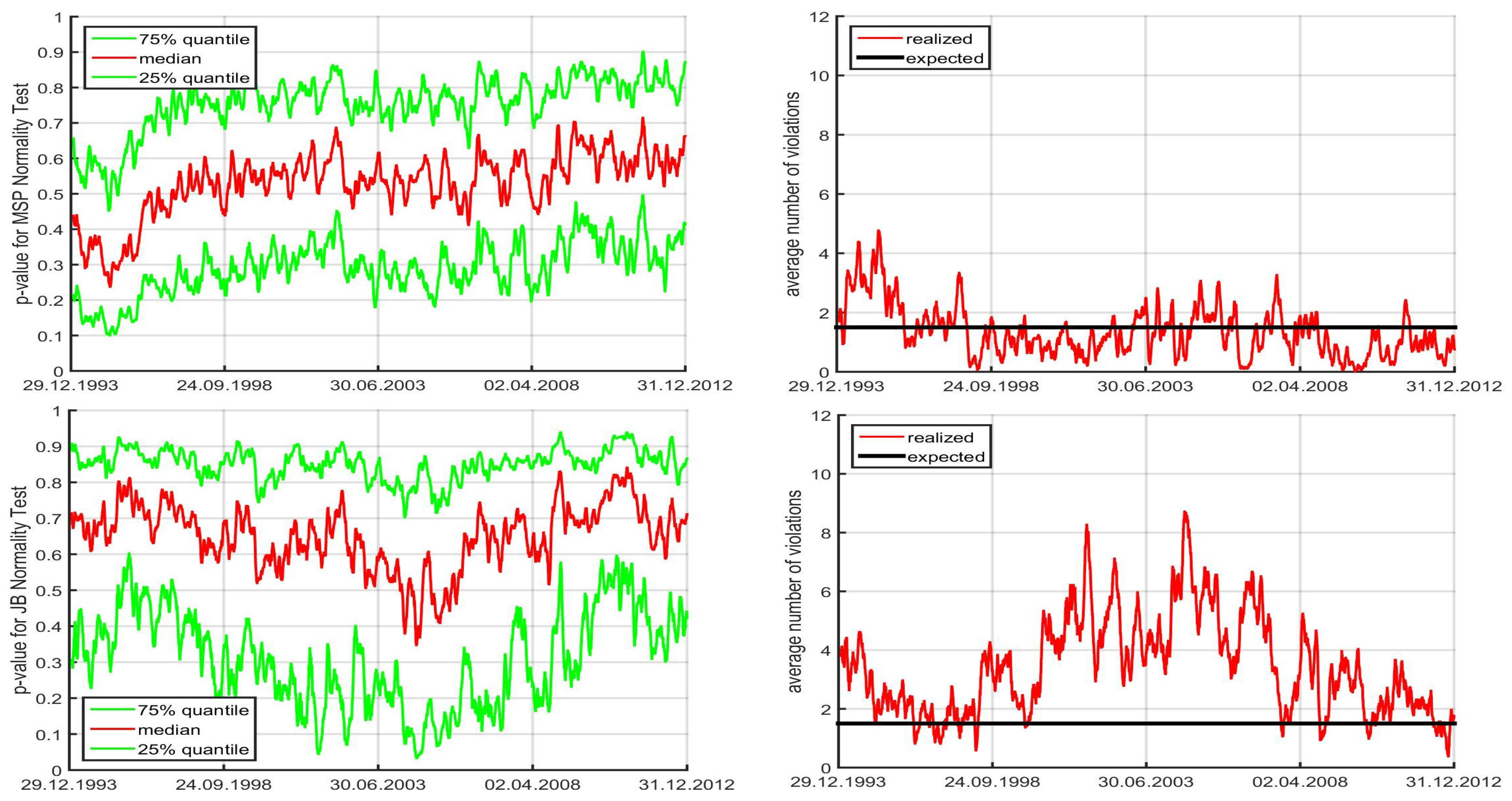

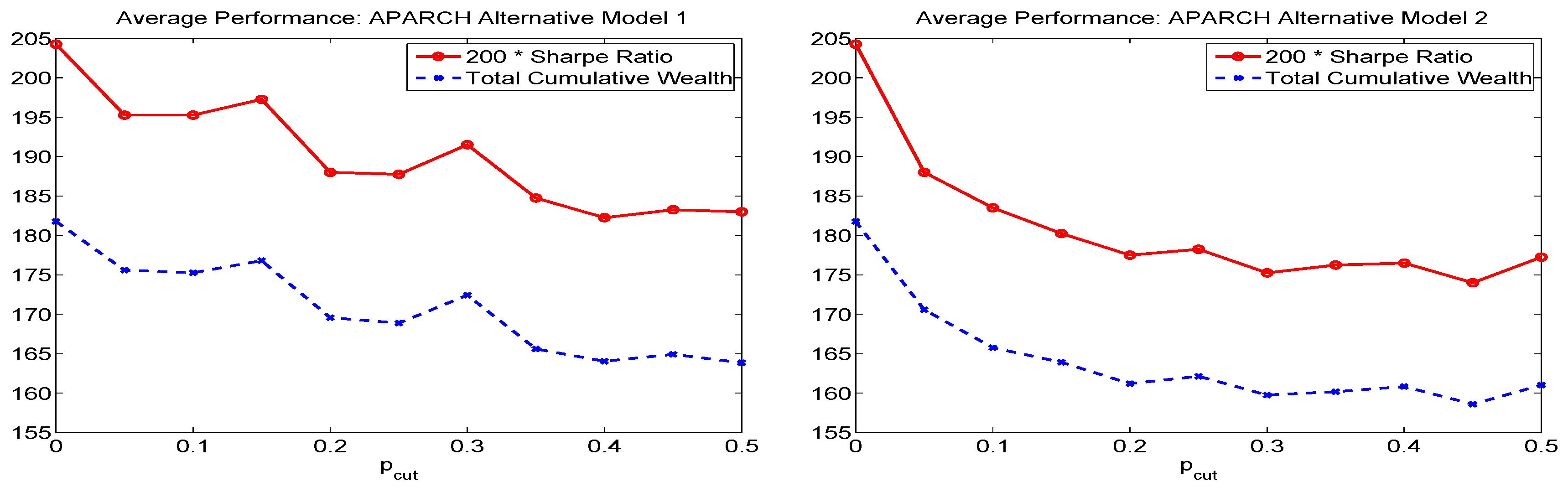

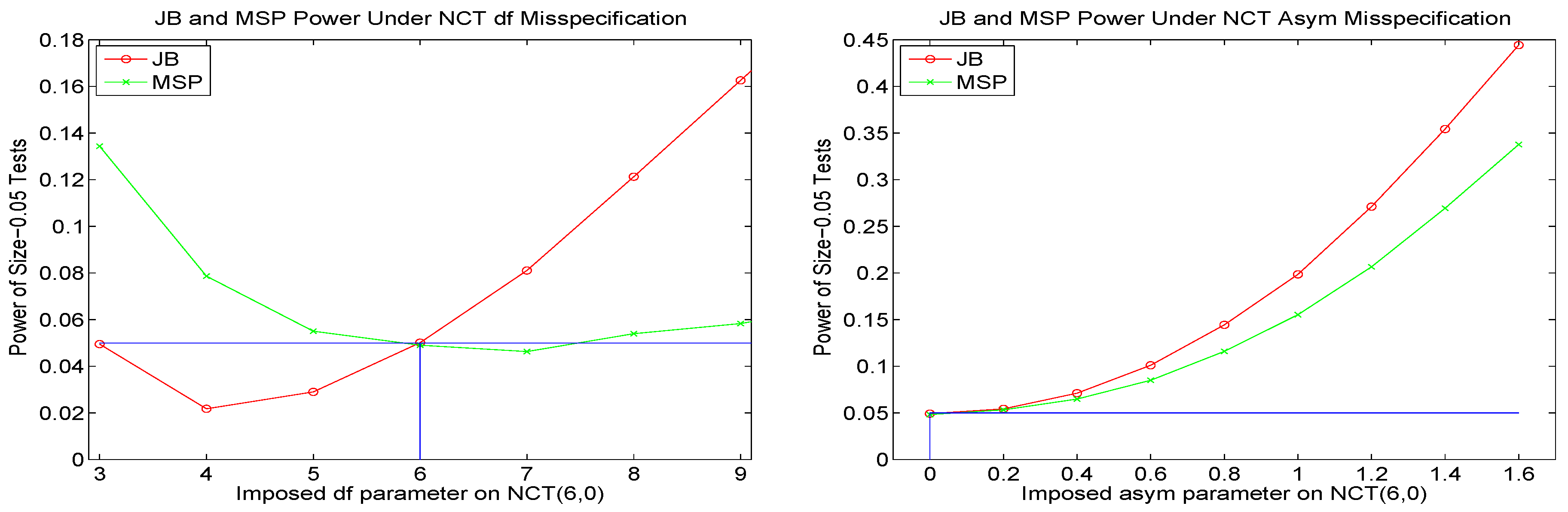

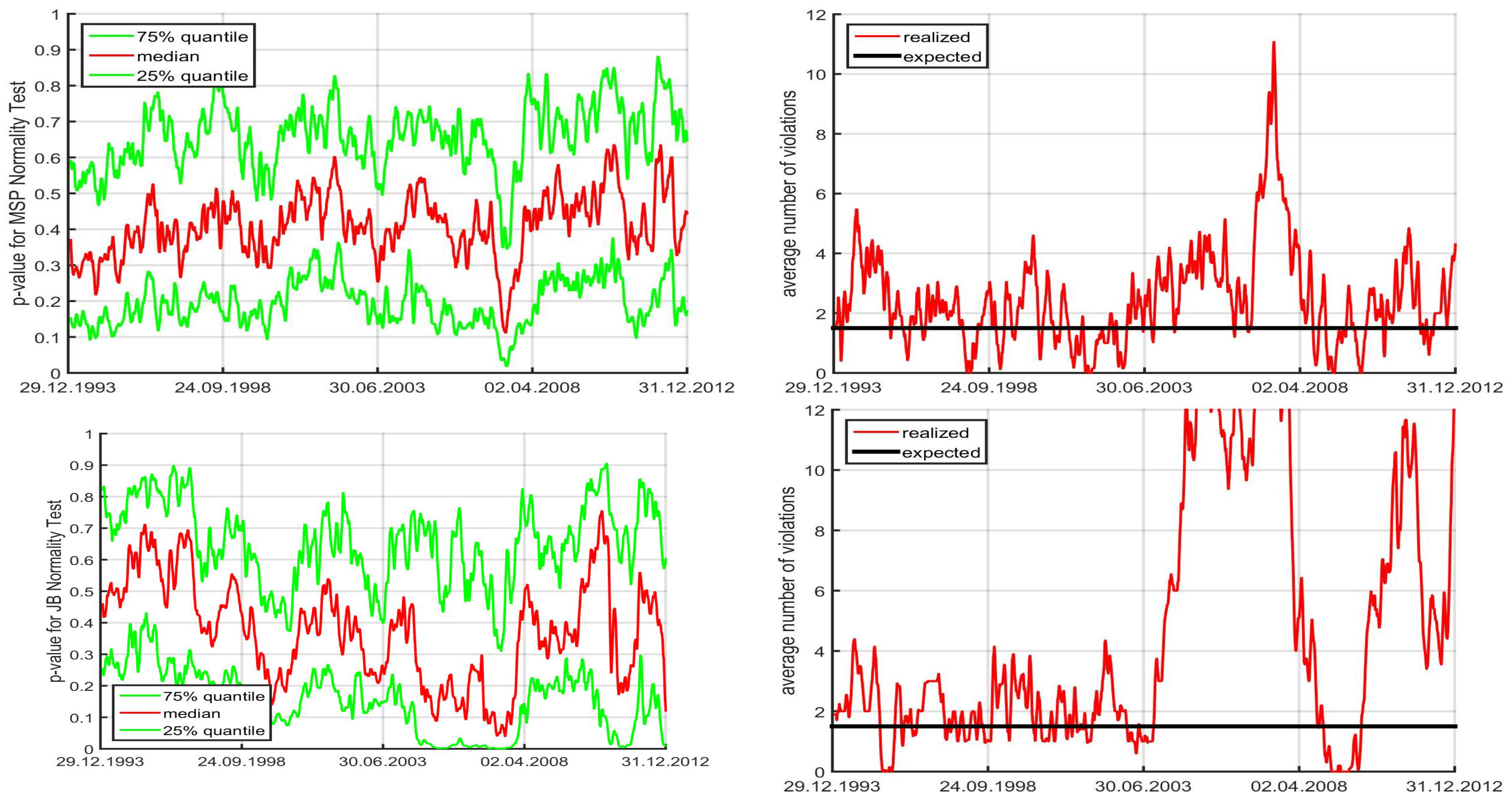

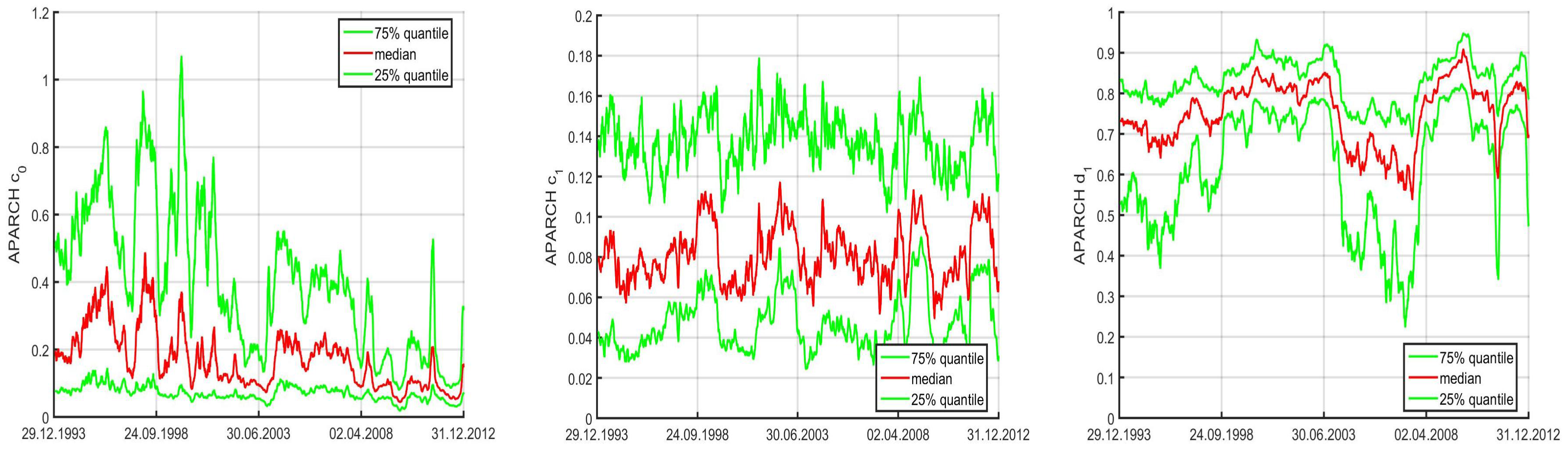

Appendix B. Model Diagnostics and Alternative APARCH Specifications

© 2017 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Paolella, M.S. The Univariate Collapsing Method for Portfolio Optimization. Econometrics 2017, 5, 18. https://doi.org/10.3390/econometrics5020018
