1. Introduction

In the Northwestern area of Iran about half of the annual precipitation occurs between months of March and May. Less than 4% of the annual precipitation occurs in summer [

1], typically with heavy convective rainfall. Topography and other factors provide favorable conditions for the occurrence of flood in this area in summer. There is a rising cost and social impact associated with extreme weather, not to mention the loss of human life [

2]. These events have major effects on the society, economy and the environment [

3], and have a direct impact on people, countries and vulnerable regions [

4]. Records show that these events are increasing [

5]. There are several methods to predict rainfall-runoff. Rainfall-runoff modeling was improved in many researches [

6,

7,

8,

9,

10].

An important thing to predict flash flood is heavy rainfall forecasting. If we can predict rainfall, it will be very useful to determine flash flood. An attempt to seek a relatively optimal data-driven model for rainfall forecasting from three aspects: model inputs, modeling methods, and data-preprocessing techniques. A proposed model, modular artificial neural network (MANN), is compared with three benchmark models, viz. artificial neural network (ANN), K-nearest-neighbors (K-NN), and linear regression (LR). The proposed optimal rainfall forecasting model can be derived from MANN coupled with SSA [

11]. In this study, Numerical weather prediction (NWP) was used to improve rainfall simulation.

According to statistical data from 87 meteorological stations, 132 cases have been recorded with daily rainfall total over 20 mm in this region during the summer since 1954 to 2015, and 41 cases have recorded in the last 5 years. These few events during the summer since 1954 to 2015 because there were a few stations before 2000 and the most of weather stations were stablished after that. For example 41 events were recorded in 5 years between 2010 and 2015. All 87 weather stations had data during 2010 to 2015 and their data is reliable [

12], then historical records of 2010 to 2015 are taken.

Most of these events have not been predicted. Then we need to improve our rainfall simulation. The WRF system is a mesoscale numerical weather prediction system used for operational forecasting, which allows users to make adjustments for a specific scale and geographical location [

13]. Four different WRF microphysics schemes have been tested [

14] over southeast India (Thompson, Lin, WRF Single-Moment 6 class (WSM6) and Morrison). While the Thompson scheme simulated surface rainfall distribution closer to observations, the other three schemes overestimated observed rainfall. Furthermore [

15], Austral summer rainfall over the period 1991/1992 to 2010/2011 was dynamically downscaled by the WRF model at 9 km resolution for South Africa, and utilized three different convective parameterization schemes, namely the (1) Kain-Fritsch (KF), (2) Betts-Miller-Janjic (BMJ) and (3) Grell-Devenyi-ensemble (GDE) schemes. All three schemes have generated positive rainfall biases over South Africa, with the KF scheme producing the largest biases and mean absolute errors. Only the BMJ scheme could reproduce the intensity of rainfall anomalies, and also exhibited the highest correlation with the observed internal summer rainfall variability. In another study [

16], simulations of a 15 km grid resolution were compared using five different cumulus parameterization schemes for three flooding events in Alberta, Canada. The Kain-Fritsch and explicit cumulus parameterization schemes were found to be the most accurate when simulating precipitation across three summer events.

The accuracy of South East of United State (SE US) summer rainfall simulations at 15 km resolution were evaluated [

17] using (WRF) model and were compared with those at the 3 km resolution. Results indicated that the simulations at the 3 km resolution do not show significant advantages over those at the 15 km resolution using the Zhang-McFarlane cumulus scheme. In this study it was suggested that to obtain an acceptable simulation, selection of a suitable cumulus scheme that realistically represents the convective rainfall triggering mechanism is more important than just increasing the model resolution. Another study [

18] has evaluated the WRF model for regional climate applications over Thailand, focusing on simulated precipitation using various convective parameterization schemes possible in WRF. Experiments were carried out for the year 2005 using four convective cumulus parameterization schemes, namely, Betts-Miller-Janjic (BMJ), Grell-Devenyi (GD), improved Grell-Denenyi (G3D) and Kain-Fritsch (KF) with and without nudging applied to the outermost nest. The results showed that the experiments with nudging generally performed better than un-nudged experiments and that the BMJ cumulus scheme with nudging provided the smallest bias relative to the observations. Another study [

19] that examined the sensitivity of the WRF model performance using three different PBL schemes Mellor-Yamada-Janjic (MYJ), Yonsei University (YSU), and the asymmetric convective model, version 2 (ACM2) WRF simulations with different schemes over Texas in July–September 2005 showed that the simulations with the YSU and ACM2 schemes gave much less bias compared to the MYJ scheme. An examination combined different physical scheme for simulation series of rainfall events near the southeast coast of Australia known as East Coast Lows. The study [

13] was made using a thirty-six member multi-physics ensemble such that each member had a unique set of physics parametrisations. These results suggested that the Mellor-Yamada-Janjic planetary boundary layer scheme and the Betts-Miller-Janjic cumulus scheme can be used with some level of robustness. The results also suggested that the Yonsei University planetary boundary layer scheme, Kain-Fritsch cumulus scheme and Rapid Radiative Transfer Model for General circulation model (RRTMG) radiation scheme should not be used in combination for that region. In other research [

20], a matrix of 18 WRF model configurations were created using different physical scheme combinations, ran with 12 km grid spacing for eight International H

2O Project (IHOP) mesoscale convective system (MCS) cases. For each case, three different treatments of convection, three different microphysical schemes, and two different planetary boundary layer schemes were used. The greatest variability in forecasts was found to come from changes in the choice of convective scheme, while notable impacts also occurred by changes in the microphysics and PBL schemes. On the other hand [

21], 3 km resolution WRF model was simulated with four different microphysics schemes and two different PBL schemes. The results showed that simulated rain volume was particularly affected by changes in microphysics schemes for both initializations. The change in the PBL scheme and corresponding synergistic terms (which corresponded to the interactions between different microphysical and PBL schemes) resulted in a statistically significant impact on rain volume.

A simulation [

22] of West Africa used combinations of three convective parameterization schemes (CPSs) and two planetary boundary layer schemes (PBLSs). The different parameterizations tested showed that the PBLSs have the largest effect on temperature, humidity, vertical distribution and rainfall amount. Whereas dynamics and precipitation variability are strongly influenced by CPSs. In particular, the Mellor-Yamada-Janjic PBLS provided more realistic values of humidity and temperature. Combined with the Kain-Fritsch CPS, the West African monsoon (WAM) onset is well represented.

More recently [

23], several aspects of the WRF modelling systems including two land surface models and two cumulus schemes have been tested for 4 months at 30 km resolution over the USA. The two cumulus schemes were found to perform similarly in terms of mean precipitation everywhere except over Florida where the Kain-Fritsch scheme performed better than the Betts-Miller-Janjic scheme and hence was chosen for future studies.

The results of research discussed above were used to select convective and planetary boundary layer schemes for this study. Some new schemes used in WRF 3.8 were also considered. For more information, visit the WRF USERS PAGE, 2016. Although a unique combination of different schemes cannot work well to give accurate forecasts for all atmospheric conditions, the best combinations from the many choices available on WRF could be investigated. But final choices would certainly depend on geographical area of study and season.

WRF was simulated of four summer rainfall events in NWI. For each event the simulations were repeated with 26 different model physics configurations. Combinations of 5 cumulus convection schemes, 4 planetary boundary layer schemes and 2 microphysics schemes were tested. Each simulation ran for 48 h, and was repeated in two domains; a larger one with 15 km grid spacing and smaller domain with 5 km grid spacing. The simulation results were compared with a range of indices based on contingency tables, and also a comparison of standard deviations, root mean square errors and correlation coefficients. The rainfall patterns from the simulations were also compared qualitatively with observed rainfall patterns. The overall result of the study is that, in general, the simulations give a poor representation of the convective rainfall events, but that specific combinations of the physics schemes perform slightly less poorly.

2. Data and Model Configuration

This study used the rainfall data from the NWI. This area covers four provinces and total area of 127,394 square kilometers. The area of study is located between latitude 35°32′54″ and 39°46′36″ North and longitude 44°2′5″ and 49°26′27″ East. The highest elevation is over 4500 m above sea level. In this area heavy convective rainfall can’t predict well. That is why this area selected to this study.

Synoptic systems are generally associated with shallow and weak troughs in the level of 500 mb in selected events. It causes cold air advection from higher latitudes regions to this area. Black sea is a source of moisture for these synoptic systems. Positive vorticity increases the instability in front of 500 mb troughs in the reign. These synoptic systems can only increase the amounts of cloud and wind speed due to the shortage of humidity and weak trough. The mountain effect intensifies the instability, so heavy precipitation occurs in some parts of NWI. The altitude of 4.32% of NWI is between 1600 and 2000 m [

24]. In this research, different models were tested to simulate precipitation by changing convective parameters. However WRF model cannot fully show the effects of the mountains well.

The models were generated using the WRF system with Advanced Research WRF (ARW) version 3.8 hosted at the National Center of Atmospheric Research (NCAR) [

25]. The WRF model has been updated to version 3.8 on 8 April 2016. It was the last version of WRF, when this study was done.

It is necessary to choose between many parametrizations for each physics option to run WRF. The wide of applications is possible due to the presence of multiple options for the physics and dynamics of WRF, enabling the user to optimize WRF for specific scales, geographical locations and applications. Determining the optimal combination of physics parameterizations to use is an increasingly difficult task as the number of parameterizations increases [

13]. A range of physics combinations are used to simulate rainfall events for the purpose of optimizing WRF for dynamical downscaling in this region. There are 15 cumulus schemes, 14 PBL schemes and 23 microphysics scheme options in WRF 3.8. According to most studies, cumulus schemes are very important on summer models. Therefore five different cumulus schemes were used. Kain-Fritsch (new Eta) (KF) (cu = 1) and Betts-Miller-Janjic (BMJ) (cu = 2) were selected due to result of the recent research some of them mentioned in introduction. Modified Kain-Fritsch (MKF) (cu = 10) which is new in WRF 3.8 was also selected as a candidate. This scheme modifies the Kain-Fritsch ad-hoc trigger function with one linked to boundary layer turbulence via probability density function using the cumulus potential scheme of Berg and Stull (2005) [

26]. Multi-scale Kain-Fritsch (MsKF) (cu = 11) and newer Tiedtke (NT) (cu = 16) were selected, as well [

27]. Three planetary boundary layer schemes (PBL) were selected based on the results from a previous researches as mentioned in introduction. Mellor-Yamada-Janjic (MYJ) (pbl = 2), Shin-Hong ‘scale-aware’ (SHsa) (pbl = 11) and Medium Range Forecast (MRF) (pbl = 99) were selected. MsKF cumulus scheme had to combine with YSU (pbl = 1) planetary boundary layer scheme, then this PBL was used. Based on the results of previous researches, microphysics scheme are less sensitive. Therefore, Kessler (mc = 1) and WSM 3 class simple ice (WSM3) (mc = 3) were used. Finally five cumulus, four PBL and two microphysics schemes were selected as shown in

Table 1. Combination of these schemes lead to 26 different configurations.

Four events were selected randomly from forty-one events with more than 20 mm of daily rainfall total in summer in the last five years (2010–2015), see

Table 2.

4. Verification

There are many methods for forecast verification, including their characteristics, pros and cons. Although the correct term is simulation, the words “forecast” and “simulation” are used interchangeably here for convenience. The methods range from simple traditional statistics and scores, to methods with more detailed diagnostic and scientific verification. The forecast is compared, or verified, against a corresponding observation of what actually occurred, or some good estimate of the true outcome [

29].

In this work, several methods were used to verify the models. These method included all of Standard verification methods [

30]. Methods for dichotomous (yes/no) forecasts, Methods for ulti-category forecasts, Methods for forecasts of continuous variables, Methods for probabilistic forecasts and Taylor diagram were used for verification. Observation data was collected from 87 stations gridded by kriging method [

31] to produce 5 km and 15 km grids to match the WRF output.

Several statistical tests have been developed to detect significance of the contingency. The chi-square contingency test [

32] is used for goodness-of-fit when there are one nominal variable with two or more values [

33]. Therefore, Chi-square contingency test was ran for the models. The results were significance. Categorical statistics that could be computed from the yes/no-contingency table. The indexes were determined from

Table 4. Result of seven Scalar quantities and five skill scores that were computed from yes/no-contingency table and Root Mean Square Error (RMSE) are shown in

Table 5 and

Table 6.

The scalar quantity indexes are included Proportion Correct (PC) [

34], Bias score (B), Treat Score (TS) or Critical Success Index [

35], odds ratio (

θ) [

36], False Alarm Ratio (FAR), Hit Rate (H) and False Alarm Rate (F).

Heidke skill score (HSS) [

37,

38], Peirce skill score (PSS) [

39,

40], Clayton skill score (CSS), Gilbert skill score (GSS) [

41] and Q skill score (Q) [

42,

43] are skill scores indexes.

These Indexes, mentioned above, were obtained from the following formula:

RMSE = Root Mean Square Error, (Hydman and Koehler 2006), F = forecast and O = observation, PC = indicate percent of correct forecasts.

Models and observation were compared spatially with among of daily rainfall total. Number of comparison in a, b, c and d indexes in

Table 5 and

Table 6 are different because number of grids in 5 km resolution are more than 15 km resolution.

Green and red cells in the tables represent the best models verified. The smallest value represents the best model as shown by green cells for some indexes. For other indexes, the highest value represents the best model as shown by red cells.

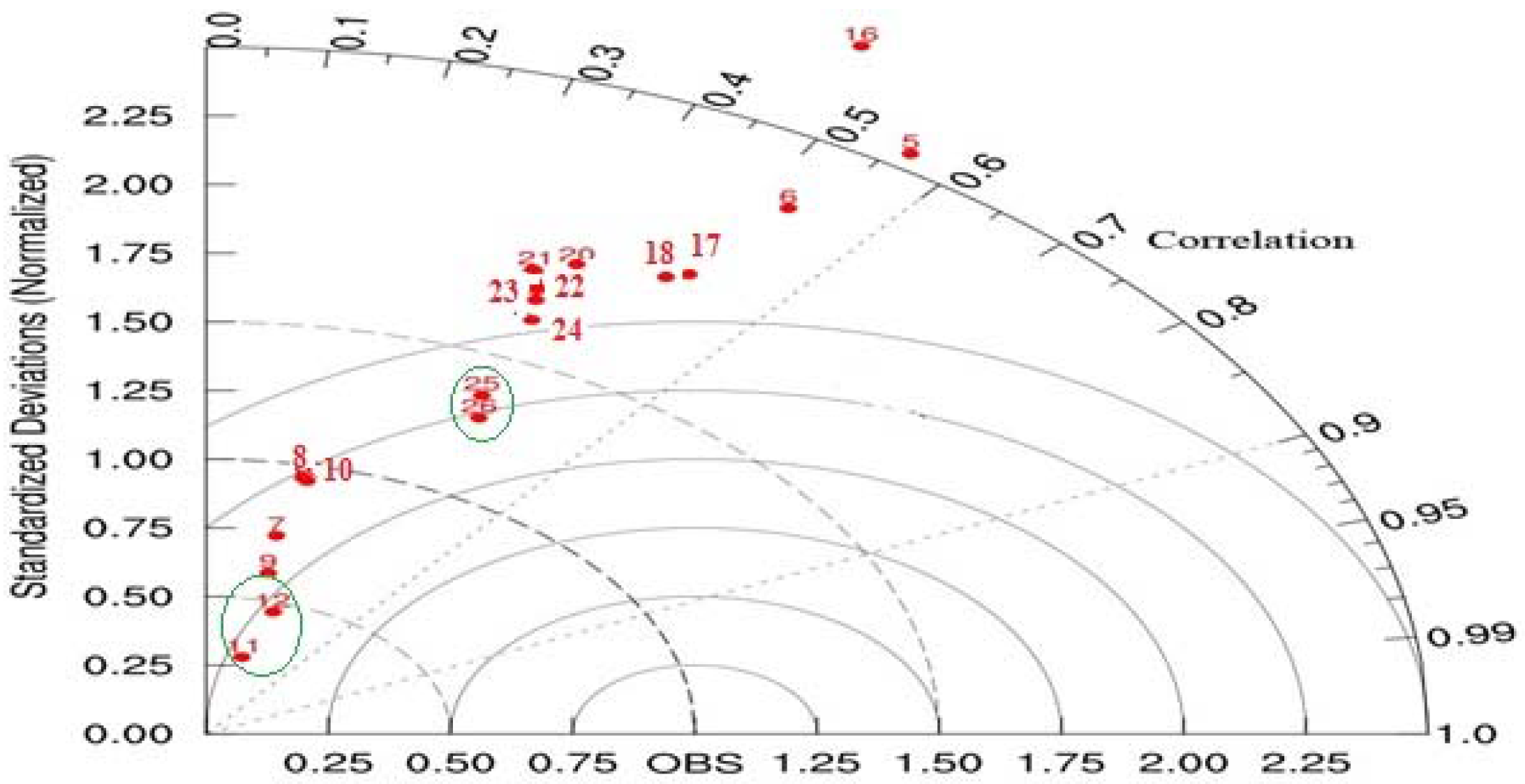

Verification by Taylor diagram [

44] is illustrated in

Figure 2 and

Figure 3. Taylor diagrams provide a way of graphically summarizing how closely a pattern matches observations. The similarity between two patterns is quantified in terms of their correlation, their centered root-mean-square difference and the amplitude of their variations (represented by their standard deviations). These diagrams are especially useful in evaluating multiple aspects of complex models or in gauging the relative skill of many different models [

45]. The position of each point appearing on the plot quantifies how closely that model’s simulated precipitation pattern matches observations. The reason that each point in the two-dimensional space of the Taylor diagram can represent three different statistics simultaneously (the centered RMS difference, the correlation, and the standard deviation) is that these statistics are related by the following formula:

where

R is the correlation coefficient between the forecast and observation fields,

E’ is the centered RMS difference between the fields, and

and

are the variances of the forecast and observation fields, respectively. Given a “forecast” field (

f) and a observation field (

O), the formulas for calculating the correlation coefficient (

R), the centered RMS difference (

E’), and the standard deviations of the “forecast” field (

) and the observation field (

) are given below:

5. Results

Based on results of a, b, c and d indexes were shown in

Table 5 and

Table 6 configuration NT cumulus, MYJ pbl and WSM3 or Kessler microphysics have most correct forecast frequency. This result is accepted by PC, TS, odds ratio, H, HSS, PSS, CSS, GSS and Q indexes. These models are shown with c16-p2-m1 and c16-p2-m3 symbols. Also combination BMJ (cu = 2) cumulus, MRF pbl, WSM 3 and Kessler microphysics schemes as shown with c2-p99-m1 and c2-p99-m3 models (cu = 2, pbl = 99, mc = 1 and mc = 3) show good results. RMSE results represented in c2-p99-m1 and c2-p99-m3 appear better than other models.

Looking at

Table 6, it is apparent that for 5 km resolution, KF cumulus (cu = 1), MYJ (pb l = 2) planetary boundary layer and Kessler (c1-p2-m1 model) gives good results in odds ratio, FAR, HSS, PSS, CSS, GSS and Q. However, the best answer is achieved by models c16-p2-m1 and c16-p2-m3 (cu = 2, pbl = 99, mc = 1 and mc = 3). Also Models c2-p11-m1 and c2-p99-m1 show the lowest RMSE.

Model c2-p99-m1 had the best RMSE. It is 1.96 for 15 km resolution and 1.75 for 5 km resolution. It indicates the absolute fit of the model to the data—how close the observed data points are to the model’s predicted values. This model has the lowest standard deviation as shown in

Figure 4 and

Figure 5. 81.86% of the simulated values are within +/−1 mm deviation from the interpolated values from observation data.

PC values indicating that more than 62% of all forecasts were correct in models c16-p2-m1 and c16-p2-m3. B index indicates forecast with these models have a slight tendency to under forecasting in 15 km resolution, while the best choosing model according this index in 5 km resolution is c2-p99-m1 with slight over forecasting of rain frequency. H index value show that roughly 3/4 of the observed rain events were correctly predicted with c16-p2-m1 and c16-p2-m3 models in the both resolutions. FAR index indicating that in roughly 1/3 of the forecast rain events, rain was not observed with c2-p99-m1 and c2-p99-m3. F index representing that for 36% of the observed “no rain” events the forecasts were incorrect with c2-p99-m1 and c2-p99-m3 models in 15 resolution and it is 22% with c10-p99-m1 model in 5 resolution. TS index value in c16-p2-m1 and c16-p2-m3 models meaning that approximately half of the “rain” events (observed and/or predicted) were correctly forecast. The GSS index is often used in the verification of rainfall in NWP models because its “equitability” allows scores to be compared more fairly across different regimes. It does not distinguish the source of forecast error. GSS in models c16-p2-m1, c16-p2-m1 and c1-p2-m1 have best results and gives a lower score than TS. PSS score may be more useful for more frequent events. Can be expressed in a form similar to the GSS [

46]. Range of values are between −1 and 1. Zero indicates no skill. Perfect score is one. The best model according this index is c16-p2-m1.

According

Figure 2 in 15 km resolution, Models with number 25 and 26 (c11-p1-m1 and c11-p1-m3) show better correlation than other models. However, models 11 and 12 (c2-p99-m1 and c2-p99-m3) show correlation similar to those of models 25 and 26, and better result in RMSE and Standard deviations.

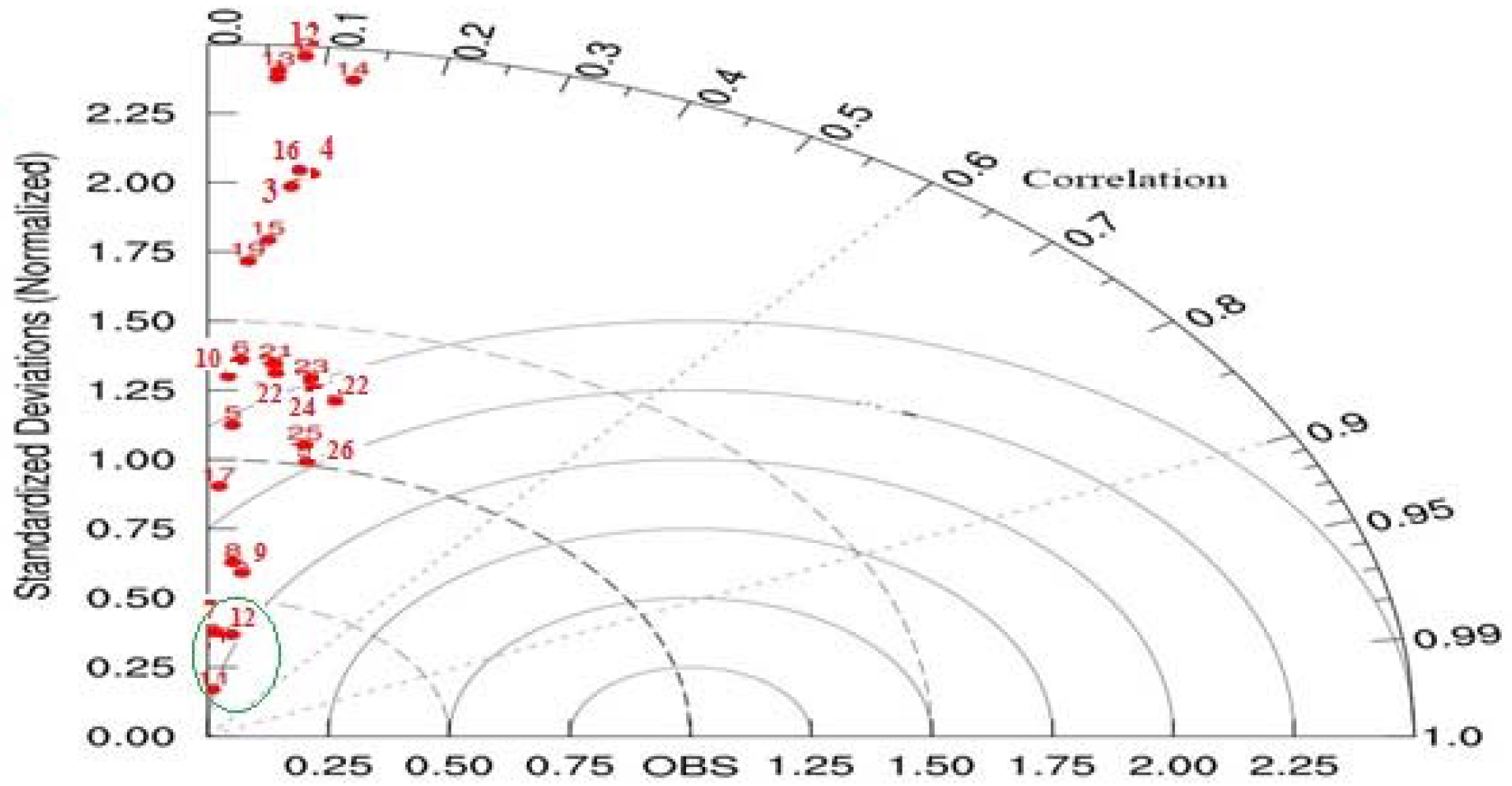

Taylor diagram in

Figure 3 shows that models 11 and 12 (c2-p99-m1 and c2-p99-m3) Are more suitable than other models in the 5 km resolution.

To summarize the results of verifications indexes made here, the scalar quantity, the skill scores of contingency table (2 × 2), the RMSE and the Taylor diagrams are summarized in the

Table 7 and

Table 8. In each verification method, two of the best models were identified. Four of the best models are also identified in Taylor diagram maximum. The best models are scored 1 in each verification method and the others are scored 0.

For resolution 15 km: c16-p2-m1, c16-p2-m3 models have the highest total scores (11 points). C2-p99-m1 and c2-p99-m3 models are the runner ups (7 points).

For resolution 5 km: c16-p2-m1 (with 9 points), c1-p2-m1 (with 7 points) and c16-p2-m3 (with 6 points) are the optimum models. The model c2-p99-m1 has the best rating from RMSE and Taylor diagram.

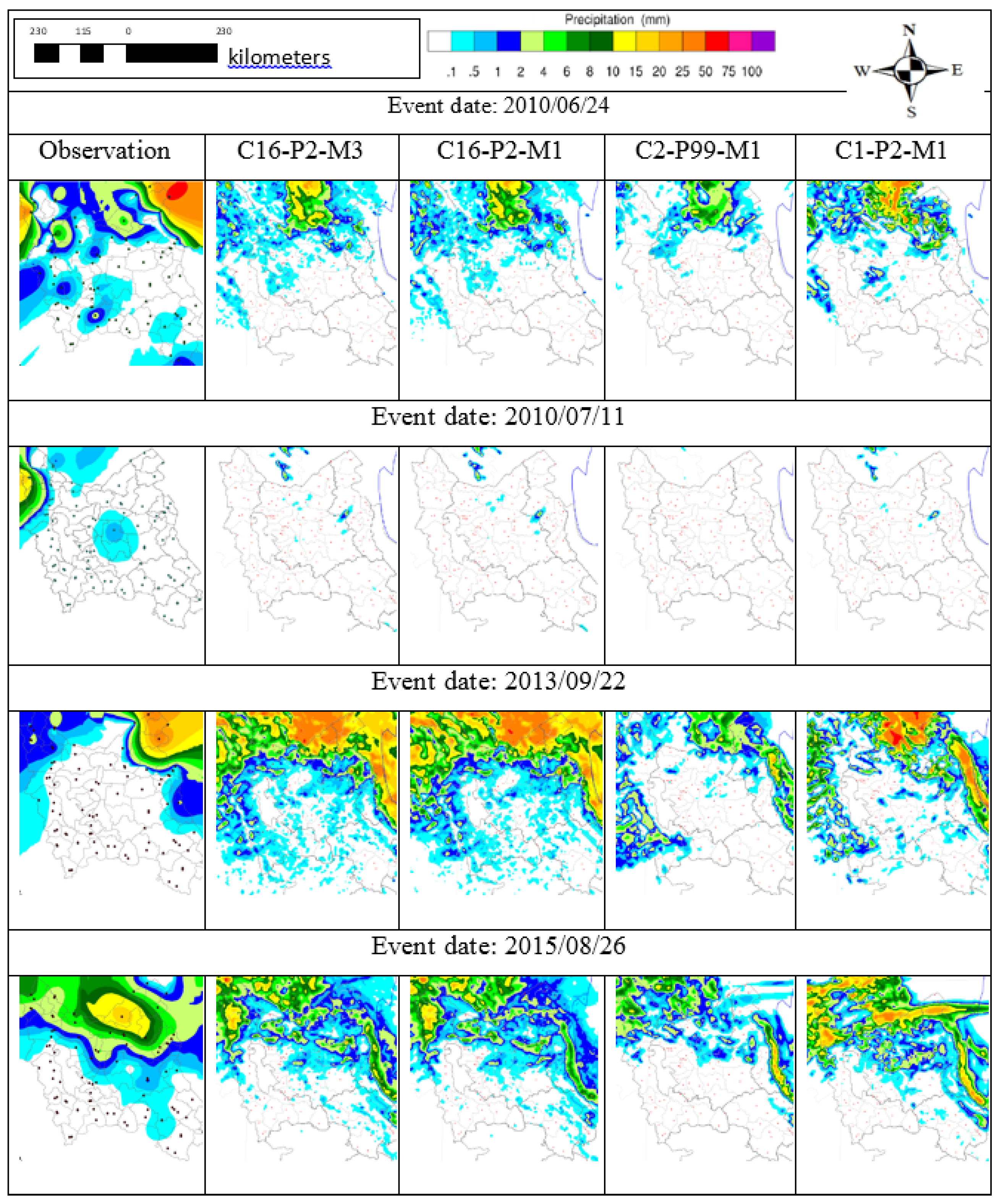

Finally, the results were checked by eyeball verification to identify the best model amongst the models selected by statistical methods. The output of these models was compared with observations obtained by interpolation with kriging in two resolutions (5 km & 15 km).

Figure 4 and

Figure 5 display the result of models and observation in four events. One of the oldest and best verification methods is the good old fashioned visual, or “eyeball”, method. Comparison the results, show that heavy rain in large area Conformity with the models, except of event on date of 7 November 2010. For example, Models C16-P2-M3 and C16-P2-M1 were simulated heavy rainfall in the upper right of area in event date 22 September 2013 in

Figure 4. There is a clear difference in rainfall amount in the north central region between the c16 models and c2 models at 15 km resolution. It is shown c16 models are closer than c2 models to observations. Also,

Figure 4, especially in event 26 August 2015, are shown that precipitation are simulated better by c16 models in the south central. Finally, comparing the model output images with map observations leads to a final decision on the best model. According to

Figure 4 and

Figure 5, models c16-p2-m1 and c16-p2-m3 show a very close similarity to the observations in both 5 and 15 km resolutions. They have used newer Tiedtke cumulus, Mellor-Yamada-Janjic PBL, WSM3 and Kessler microphysical schemes.

Since there are only 87 actual data points, the interpolated grid points are not all statistically independent of each other as there must be a spatial correlation between the interpolated points that is related to the average spacing of the original stations. It is suggested that in additional studies, radar data or more events can be used to more accurately control the models, then the comparison would be improved if the simulation data are interpolated to the station positions.

6. Conclusions

Most rainfalls in the Northwest of Iran in summer season are convective, with heavy rainfall occurring in some smaller areas. The results of verification by all of the methods clearly shows that NWP models cannot accurately predict this type of precipitation.

On the other hand, the result in

Table 4 and

Table 5 indicate that cumulus schemes are the most sensitive. Microphysical schemes are the least sensitive. This is also illustrated in the Taylor diagrams, in

Figure 2 and

Figure 3. In the Tayor diagrams, the models with the same cumulus and different PBL and microphysics show very similar outcomes. Comparison of 15-km resolution simulation with 5 km resolution simulation does not show obvious advantages, according to the verification score.

Configuration with the newer Tiedtke cumulus, Mellor-Yamada-Janjic PBL, WSM3 and Kessler microphysics schemes demonstrate the best results in both resolutions (5 and 15 km). There is little difference in the results between WSM3 and Kessler microphysics schemes. However, the results of WSM3 microphysics scheme are better than Kessler microphysical scheme.

No single configuration of the multi-physics performed best for all cases. In the first 24 h forecast of these 26 configurations, it has been observed, based on the output image of models and statistical methods, that convective rainfall in the NWI could not be simulated with high accuracy using WRF model in summer. This conclusion is based on statistical indexes and Eyeball verifications. Therefore, accuracy in the first 24 h forecast with the other methods needs to be improved. A method such as extrapolation of observation data using satellite and radar data can be used to improve forecast in first 24 h.