Emergency Response Cyber-Physical Framework for Landslide Avoidance with Sustainable Electronics †

Abstract

1. Introduction

- (i)

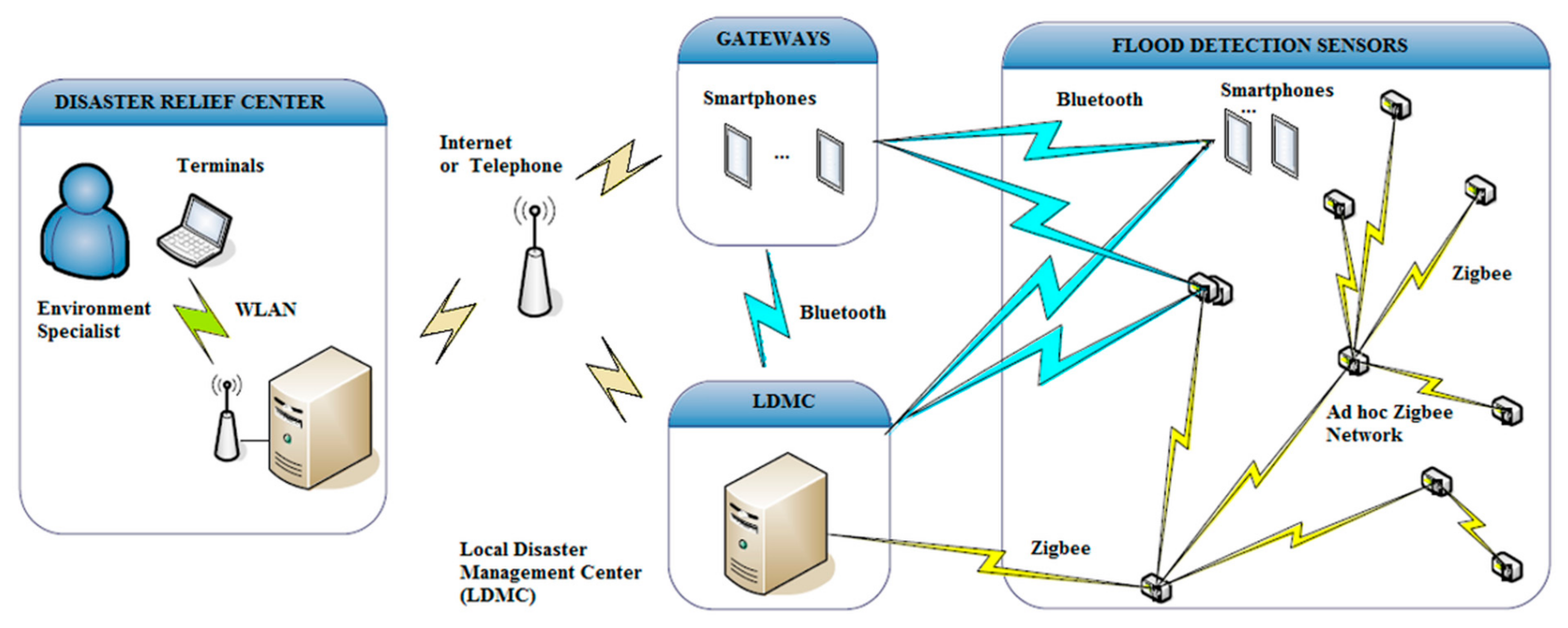

- Cold advection involves water descending due to the gravity force to a deep lower level; and

- (ii)

- Hot advection comprises condensation of hot magmatic gases.

- Geometric techniques alter the slope geometry;

- Hydrogeological approaches attempt to lower the groundwater level, reducing the water content (humidity) of the problematic area; and

- Mechanical and chemical procedures try to increase the shear strength of the unstable mass. Active forces from rock anchors and ground nailing, and passive forces from structural wells, piles, and reinforced soil, to cite a few, can be employed to counteract the destabilizing forces.
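Why these measures help can be illustrated with the classical infinite-slope model, a textbook simplification assumed here rather than taken from this paper. The factor of safety is FS = [c' + (γ z cos²β − u) tan φ'] / (γ z sin β cos β), where the pore-water pressure u = γ_w m z cos²β grows with the saturated fraction m of the soil column above the slip plane. Hydrogeological measures lower m (and hence u), while mechanical and chemical measures raise the effective cohesion c' or friction angle φ'; both increase FS, and FS < 1 indicates failure. All parameter values in the example are hypothetical.

```python
import math

GAMMA_W = 9.81  # unit weight of water, kN/m^3


def infinite_slope_fs(c_eff: float, phi_eff_deg: float, gamma: float,
                      z: float, beta_deg: float, m: float) -> float:
    """Factor of safety of an infinite slope with slope-parallel seepage.

    c_eff       effective cohesion c' (kPa)
    phi_eff_deg effective friction angle phi' (degrees)
    gamma       soil unit weight (kN/m^3)
    z           vertical depth of the slip surface (m)
    beta_deg    slope angle (degrees)
    m           saturated fraction of z (0 = dry, 1 = water table at surface)
    """
    beta = math.radians(beta_deg)
    phi = math.radians(phi_eff_deg)
    normal = gamma * z * math.cos(beta) ** 2               # total normal stress
    u = GAMMA_W * m * z * math.cos(beta) ** 2              # pore-water pressure
    shear = gamma * z * math.sin(beta) * math.cos(beta)    # driving shear stress
    return (c_eff + (normal - u) * math.tan(phi)) / shear


# Hypothetical 30-degree slope, slip plane 3 m deep: saturated vs drained.
print(infinite_slope_fs(5.0, 30.0, 19.0, 3.0, 30.0, m=1.0))  # about 0.69
print(infinite_slope_fs(5.0, 30.0, 19.0, 3.0, 30.0, m=0.0))  # about 1.20
```

In this hypothetical case, drainage alone moves the slope from FS below 1 to FS above 1, which is the mechanism the hydrogeological approaches rely on.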

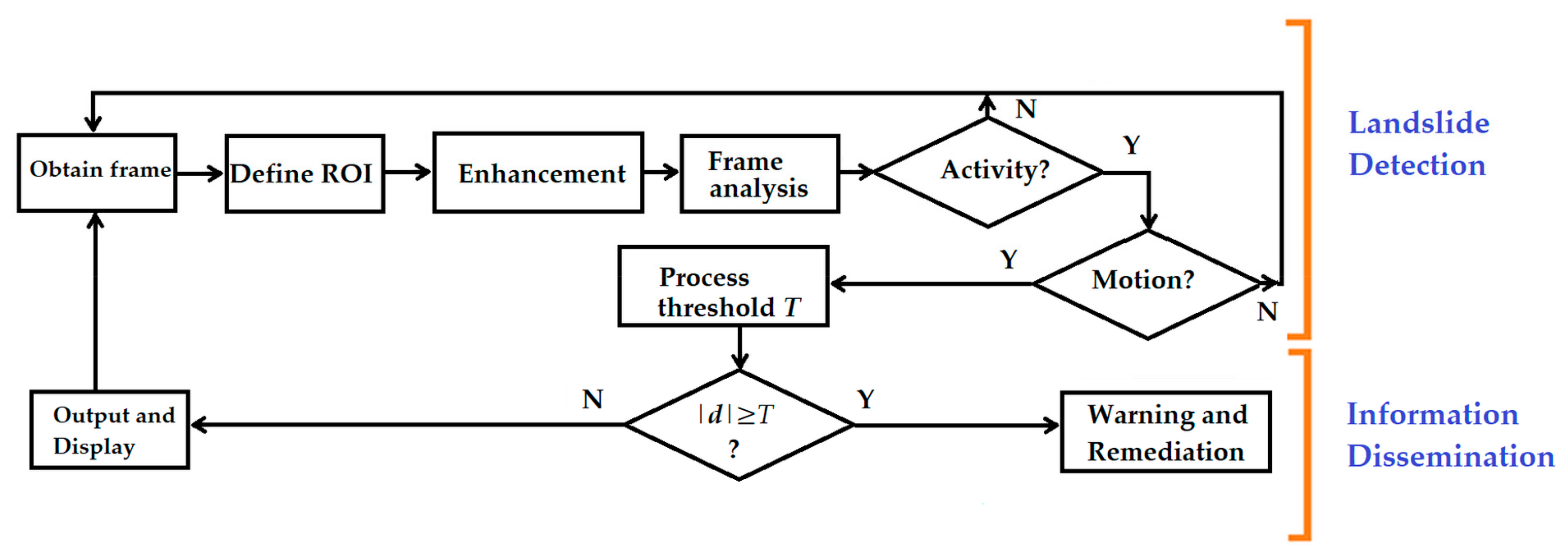

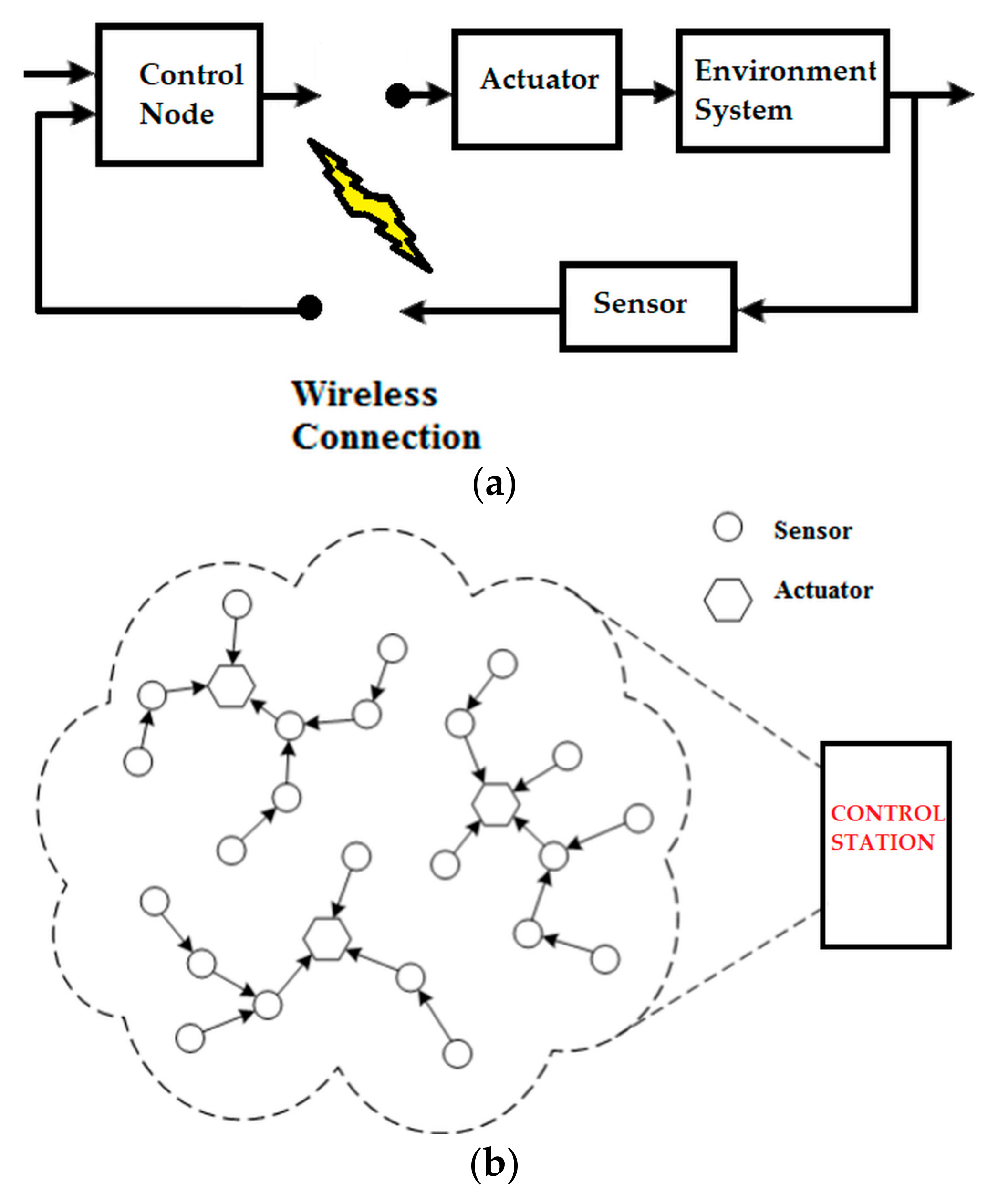

- Process models: Signal processing and control system theory can help model an LD system in which sensors estimate errors or deviations from normal conditions, and these deviations are processed by an actuator subsystem that produces the feedback necessary to restore system stability.

- Parameter and state estimation: The fault detection system needs to acquire and process signals to generate the data for decision-making.

- Performance Control: Fault detection strategies must be sensitive to faults while remaining robust to noise, modeling errors, changes in operating points, normal signal fluctuations, and so on. These requirements can conflict:

- (i) The fault size must be sensed in accordance with the required detection time;

- (ii) The LD time must be compatible with the speed at which the fault appears;

- (iii) The speed of fault appearance must determine an adequate actuator response time;

- (iv) Parameter changes must be tracked and handled in a timely manner; and

- (v) The detection time must be extremely short while the false alarm rate is kept small.

- Redundancy: Several detection methods may be used simultaneously to guarantee redundancy in LD systems.

- Reliability: An LD system must be reliable, with safe backup structures that guarantee smooth operation, avoiding false alarms while keeping the probability of missing true alarms low.

- Self-Testing: An LD system must check whether it is working correctly via error detection and fault identification techniques, improving overall system reliability and safety.
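The conflict between fast detection and a small false alarm rate in item (v) can be illustrated with a one-sided cumulative sum (CUSUM) test applied to the residual between a measured displacement and a process-model prediction. CUSUM is a generic fault-detection technique chosen here for illustration, not necessarily the detector used in this framework; the drift allowance, the thresholds, and the residual sequence are all hypothetical.

```python
from typing import Iterable, Optional


def cusum_alarm(residuals: Iterable[float], drift: float,
                threshold: float) -> Optional[int]:
    """One-sided CUSUM test on residuals r_k = measured - predicted.

    S_k = max(0, S_{k-1} + r_k - drift). An alarm is raised at the first k
    with S_k > threshold; returns that k, or None if no alarm is raised.
    """
    s = 0.0
    for k, r in enumerate(residuals):
        s = max(0.0, s + r - drift)
        if s > threshold:
            return k
    return None


# Hypothetical residuals (mm): small noise around zero, then a 1.0 mm shift
# (the simulated fault) starting at k = 20.
noise = [0.2, -0.3, 0.1, 0.4, -0.2] * 4
residuals = noise + [1.0 + n for n in noise]

for h in (1.0, 3.0, 6.0):
    print(h, cusum_alarm(residuals, drift=0.5, threshold=h))
```

The fault starts at k = 20, and the alarm fires at k = 22, 25, and 30 for thresholds 1, 3, and 6. A higher threshold tolerates larger noise excursions before raising a false alarm, but it lengthens the detection delay, which is exactly the trade-off listed above.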

2. Optical and IR Sensors for In Situ Motion Detection

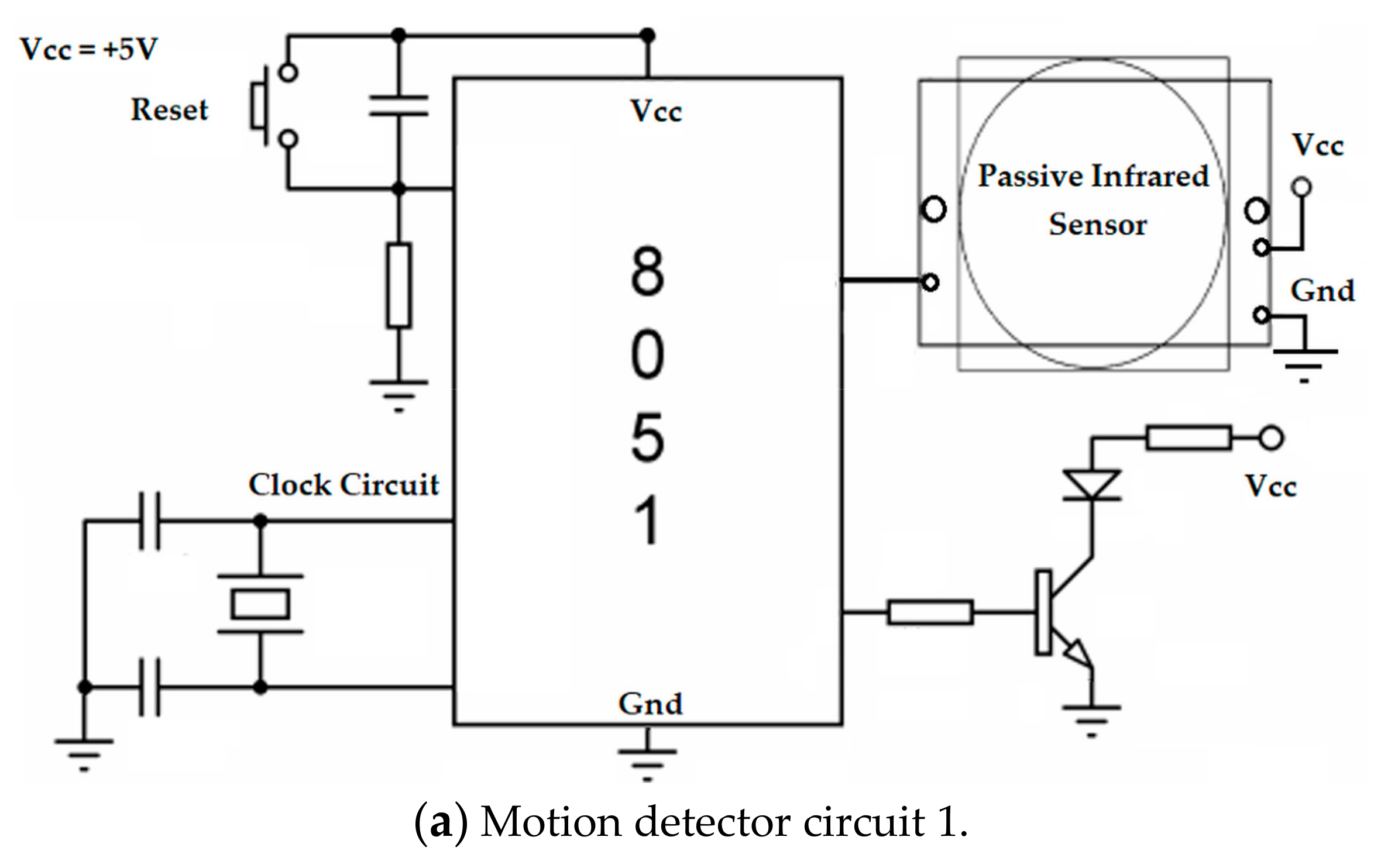

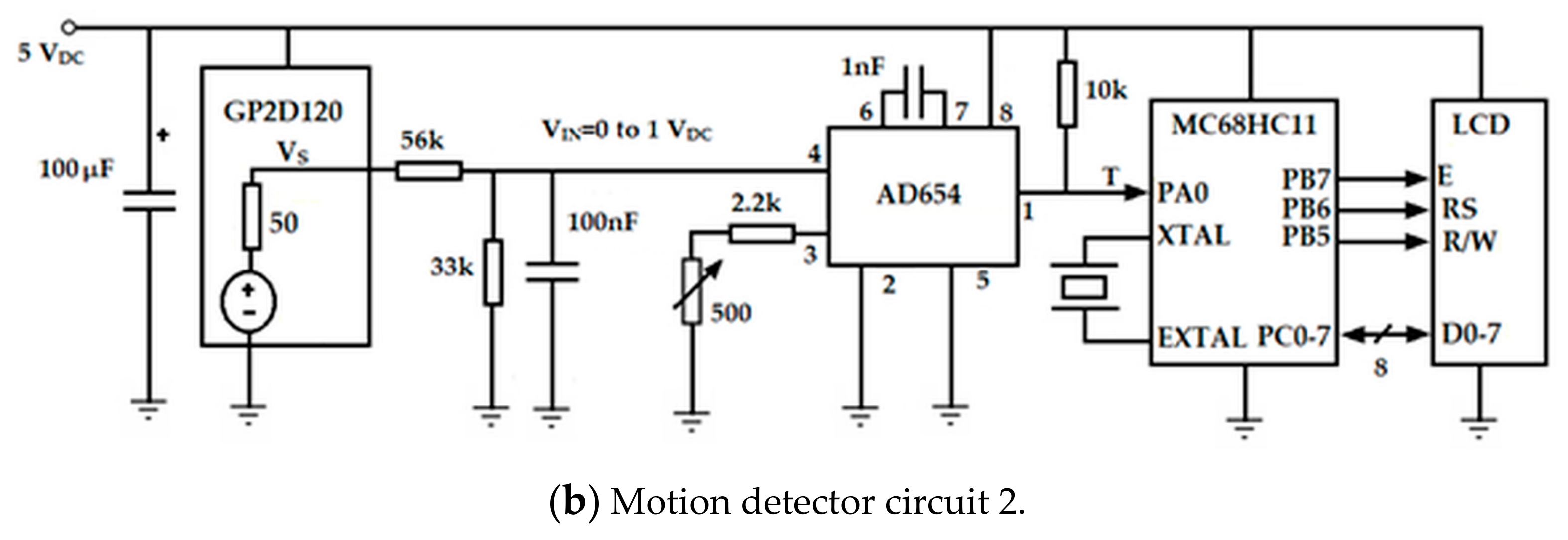

2.1. Types of Motion Sensors

2.1.1. Passive Optical Devices

2.1.2. Optical and IR Video Cameras

2.2. Motion Detection Hardware Rationale

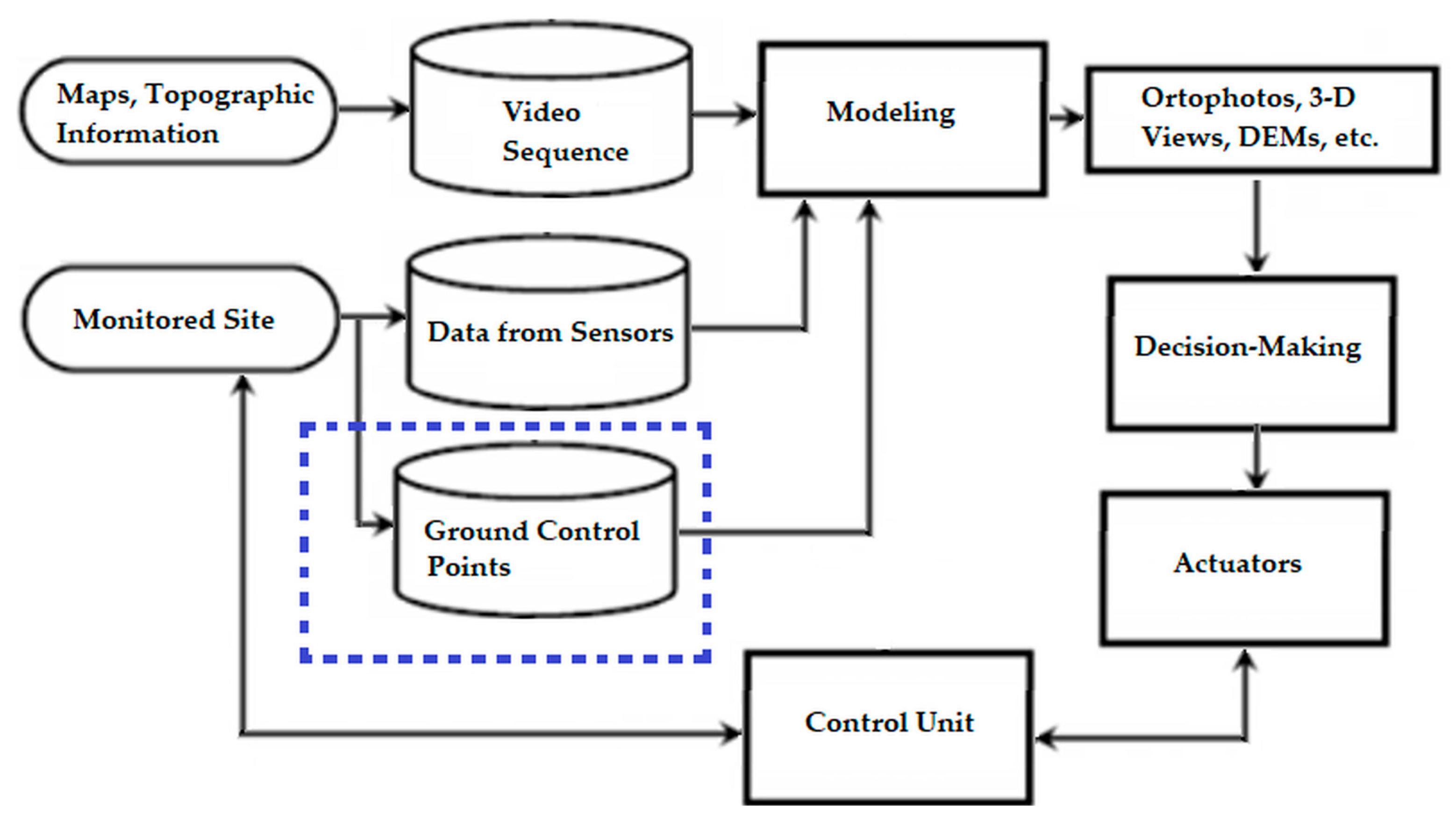

3. Optical Flow Applications in Terrain Modeling and Analysis

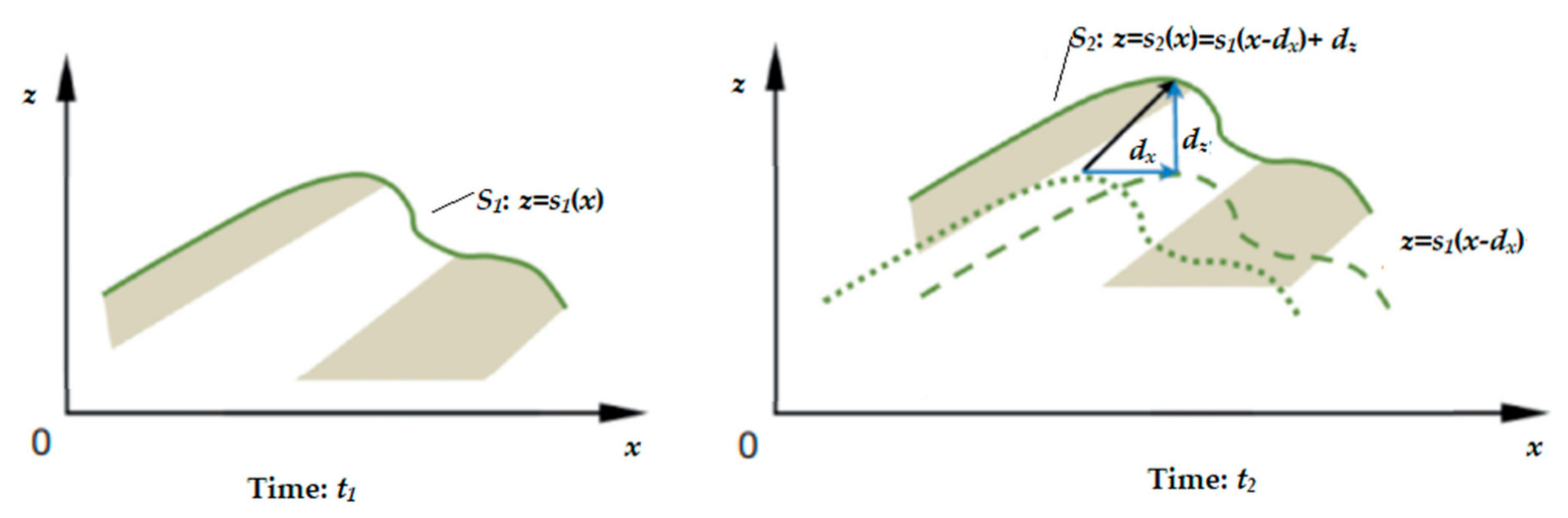

3.1. Optical Flow
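As a minimal illustration of optical flow (OF), the sketch below implements the basic Lucas and Kanade least-squares estimate over a single window. It assumes brightness constancy and a small displacement shared by every pixel in the window, and it is not necessarily the OF variant used in this framework. The Gaussian test pattern and the shift are hypothetical.

```python
import math
from typing import List, Tuple

Image = List[List[float]]


def lucas_kanade_window(f0: Image, f1: Image) -> Tuple[float, float]:
    """Estimate one displacement (u, v), in pixels, shared by the whole window.

    f0, f1: equal-size frames indexed [row][col], i.e. [y][x].
    Minimises sum (Ix*u + Iy*v + It)^2 over interior pixels, with spatial
    gradients taken by central differences on the mean of the two frames.
    """
    def mean(y: int, x: int) -> float:
        return 0.5 * (f0[y][x] + f1[y][x])

    sxx = sxy = syy = sxt = syt = 0.0
    for y in range(1, len(f0) - 1):
        for x in range(1, len(f0[0]) - 1):
            ix = 0.5 * (mean(y, x + 1) - mean(y, x - 1))
            iy = 0.5 * (mean(y + 1, x) - mean(y - 1, x))
            it = f1[y][x] - f0[y][x]
            sxx += ix * ix
            sxy += ix * iy
            syy += iy * iy
            sxt += ix * it
            syt += iy * it

    det = sxx * syy - sxy * sxy
    if abs(det) < 1e-12:
        raise ValueError("aperture problem: window lacks texture in two directions")
    # Cramer's rule for [[sxx, sxy], [sxy, syy]] [u, v]^T = -[sxt, syt]^T
    u = (-sxt * syy + syt * sxy) / det
    v = (-syt * sxx + sxt * sxy) / det
    return u, v


def gaussian_blob(cx: float, cy: float, size: int = 64, sigma: float = 6.0) -> Image:
    """Synthetic smooth test pattern centred at (cx, cy)."""
    return [[math.exp(-((x - cx) ** 2 + (y - cy) ** 2) / (2 * sigma ** 2))
             for x in range(size)] for y in range(size)]


# Pattern moved by (+0.3, -0.2) pixels between frames.
print(lucas_kanade_window(gaussian_blob(32, 32), gaussian_blob(32.3, 31.8)))
```

The printed estimate is close to (0.3, -0.2); a practical OF estimator would repeat this over many windows, typically with image pyramids for larger motions.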

3.2. Measurement Using OF
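Turning an OF vector measured in pixels into a displacement on the slope requires a camera model. A common simplification, assumed here and not taken from this paper, is the pinhole model with the observed surface roughly perpendicular to the optical axis, so that one pixel spans a ground sampling distance GSD = distance × pixel pitch / focal length. Lens distortion, terrain inclination, and perspective effects are ignored, and the camera parameters in the example are hypothetical.

```python
import math
from typing import Tuple


def pixel_to_ground(du_px: float, dv_px: float, distance_m: float,
                    focal_mm: float, pixel_pitch_um: float) -> Tuple[float, float, float]:
    """Scale an OF vector (pixels) to displacement on the target (metres).

    Pinhole model with the target plane perpendicular to the optical axis:
    GSD = distance * pixel_pitch / focal_length.
    Returns (dx_m, dy_m, magnitude_m).
    """
    gsd_m = distance_m * (pixel_pitch_um * 1e-6) / (focal_mm * 1e-3)
    dx_m, dy_m = du_px * gsd_m, dv_px * gsd_m
    return dx_m, dy_m, math.hypot(dx_m, dy_m)


# Hypothetical set-up: camera 100 m from the slope, 50 mm lens, 5 um pixels.
print(pixel_to_ground(3.0, 4.0, distance_m=100.0, focal_mm=50.0, pixel_pitch_um=5.0))
```

Here one pixel corresponds to 1 cm on the slope, so a (3, 4) pixel flow vector maps to roughly 5 cm of motion; sub-pixel OF accuracy therefore translates directly into millimetre-level displacement resolution.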

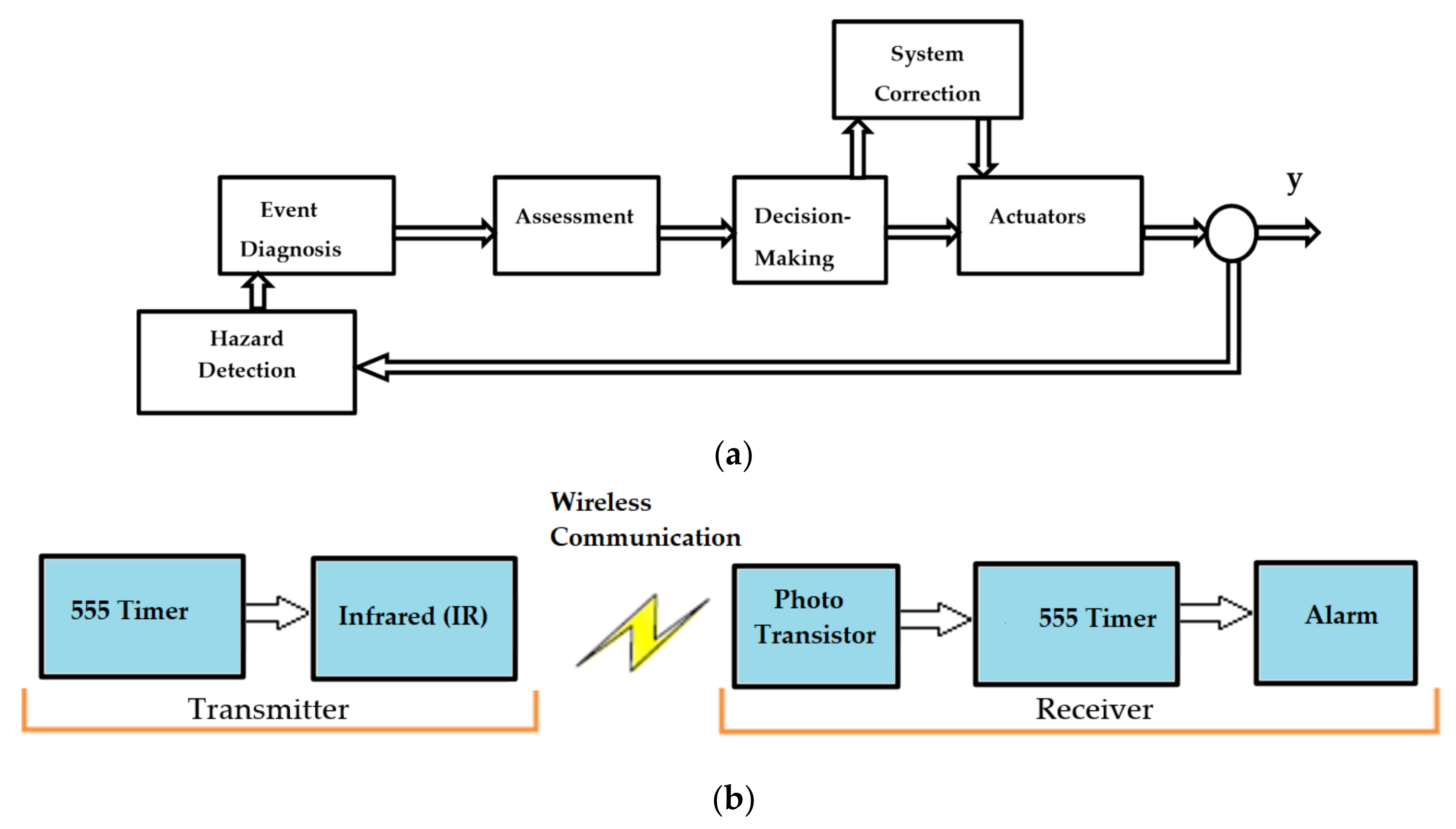

4. Emergency Response (ER) System Design Issues

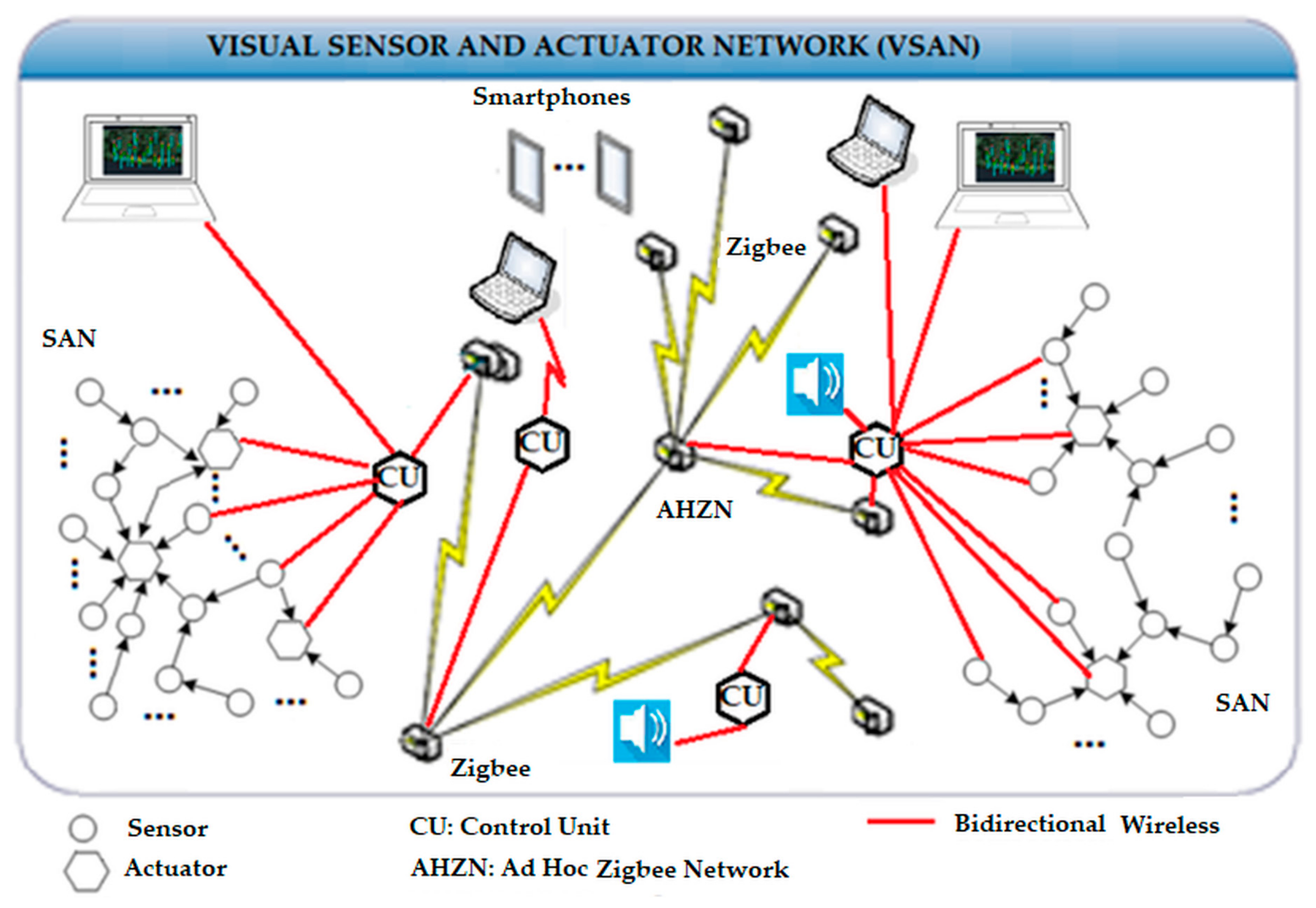

5. Design Considerations for Visual Sensor Actuator Nodes (VSANs)

5.1. Sensors

5.2. Actuators

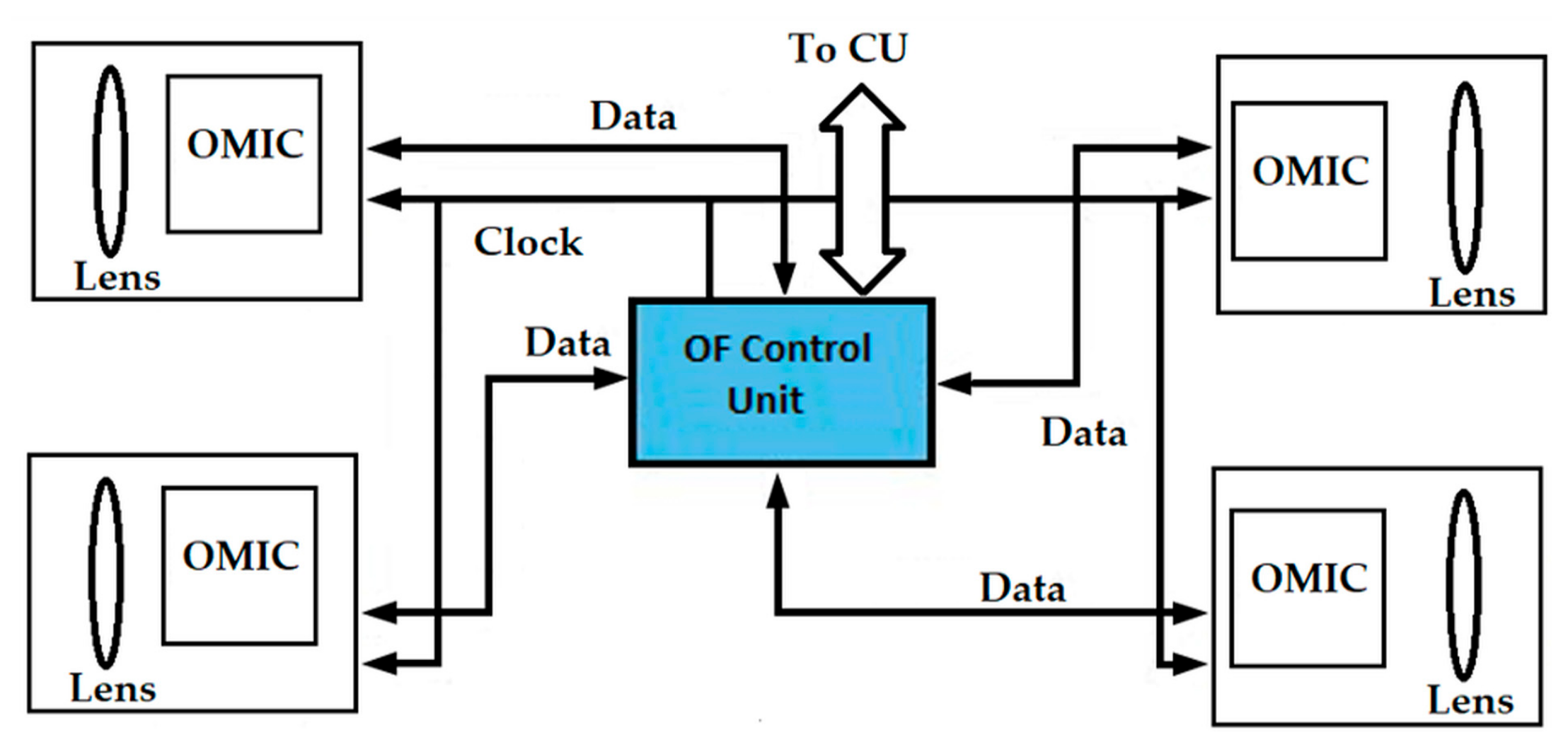

5.3. Video Camera and OMICs Motion Detection
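An optical mouse integrated circuit (OMIC) reports motion as delta counts accumulated since its previous read. The sketch below integrates those deltas into a displacement. It assumes a chip that exposes signed 8-bit delta registers (as many mouse sensors do), so it must be polled often enough that the deltas do not saturate, and it assumes a nominal resolution in counts per inch (CPI). The `scale` calibration factor for any added optics, the CPI value, and the raw readings are hypothetical.

```python
from dataclasses import dataclass
from typing import Tuple

MM_PER_INCH = 25.4


def to_signed8(raw: int) -> int:
    """Interpret an 8-bit register value as a two's-complement delta."""
    return raw - 256 if raw > 127 else raw


@dataclass
class OmicOdometer:
    """Accumulates the delta counts reported by one OMIC.

    cpi   -- nominal chip resolution in counts per inch (hypothetical value)
    scale -- calibration factor for any added optics; 1.0 means the chip's
             nominal resolution holds, otherwise it must be measured on site
    """
    cpi: float
    scale: float = 1.0
    x_counts: int = 0
    y_counts: int = 0

    def update(self, raw_dx: int, raw_dy: int) -> None:
        # Deltas are relative to the previous read, so they are summed.
        self.x_counts += to_signed8(raw_dx)
        self.y_counts += to_signed8(raw_dy)

    def displacement_mm(self) -> Tuple[float, float]:
        mm_per_count = MM_PER_INCH / self.cpi * self.scale
        return self.x_counts * mm_per_count, self.y_counts * mm_per_count


odo = OmicOdometer(cpi=400)
for raw_dx, raw_dy in [(5, 254), (10, 0), (85, 255)]:
    odo.update(raw_dx, raw_dy)
print(odo.displacement_mm())
```

The three hypothetical reads sum to 100 counts in x and -3 counts in y, that is, approximately 6.35 mm and -0.19 mm at 400 CPI.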

5.4. Visual Sensor Actuator Nodes (VSANs)

6. Case Studies

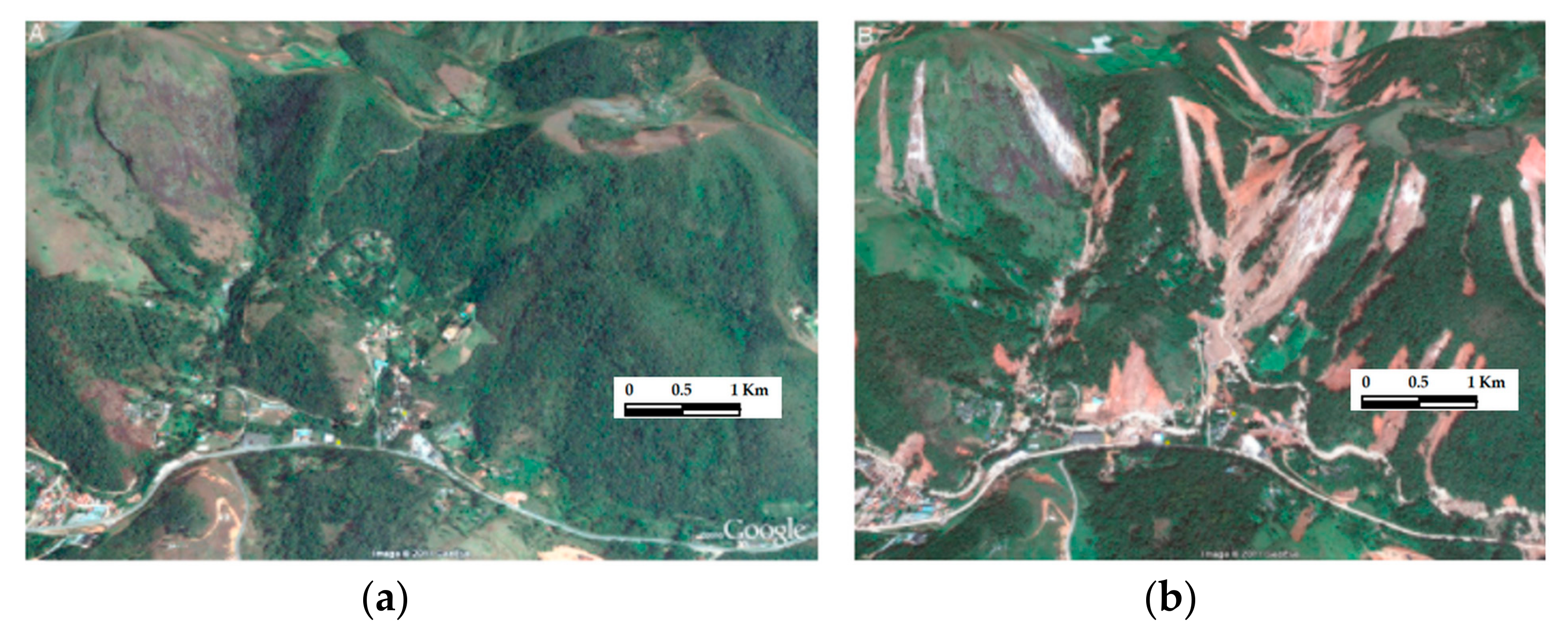

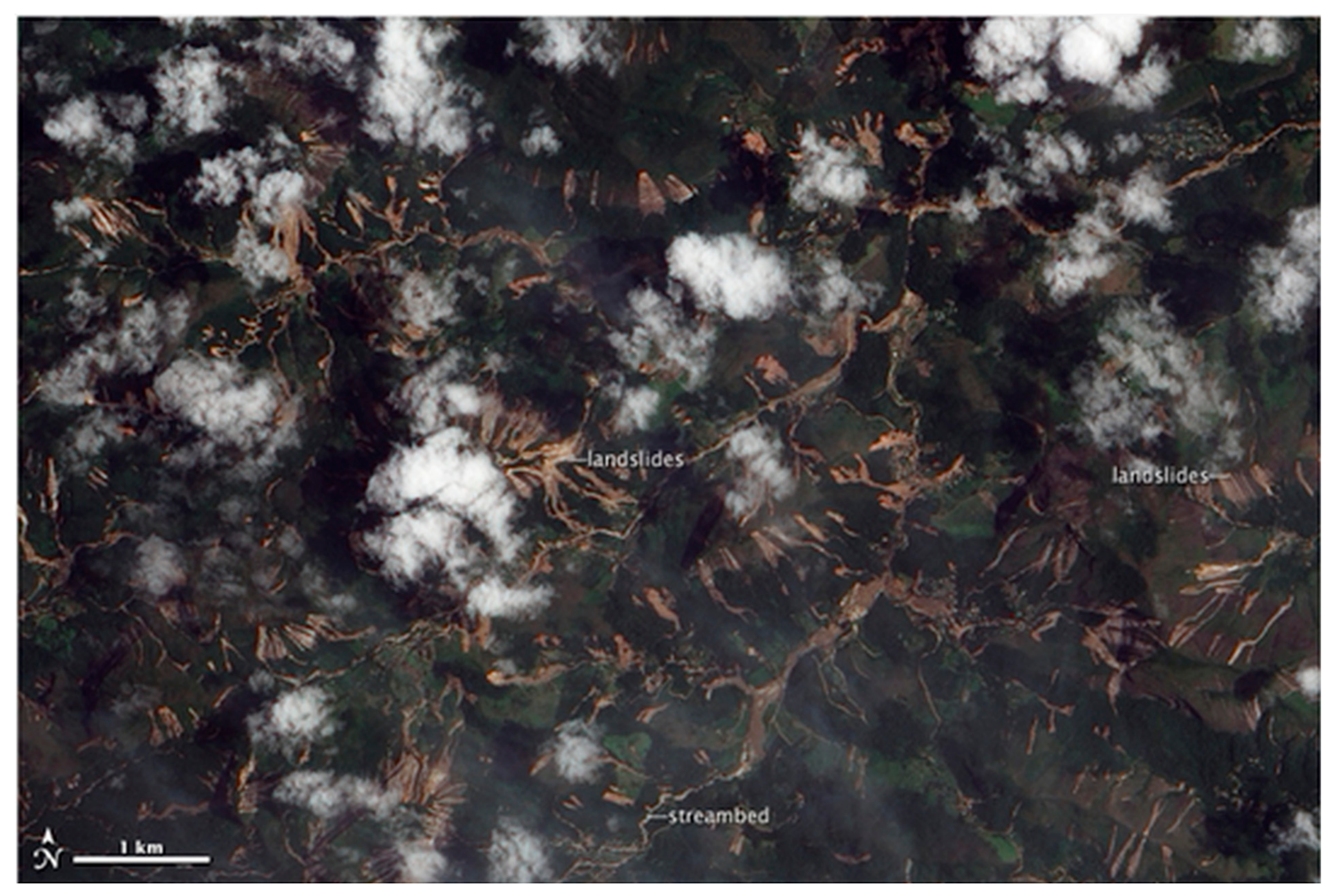

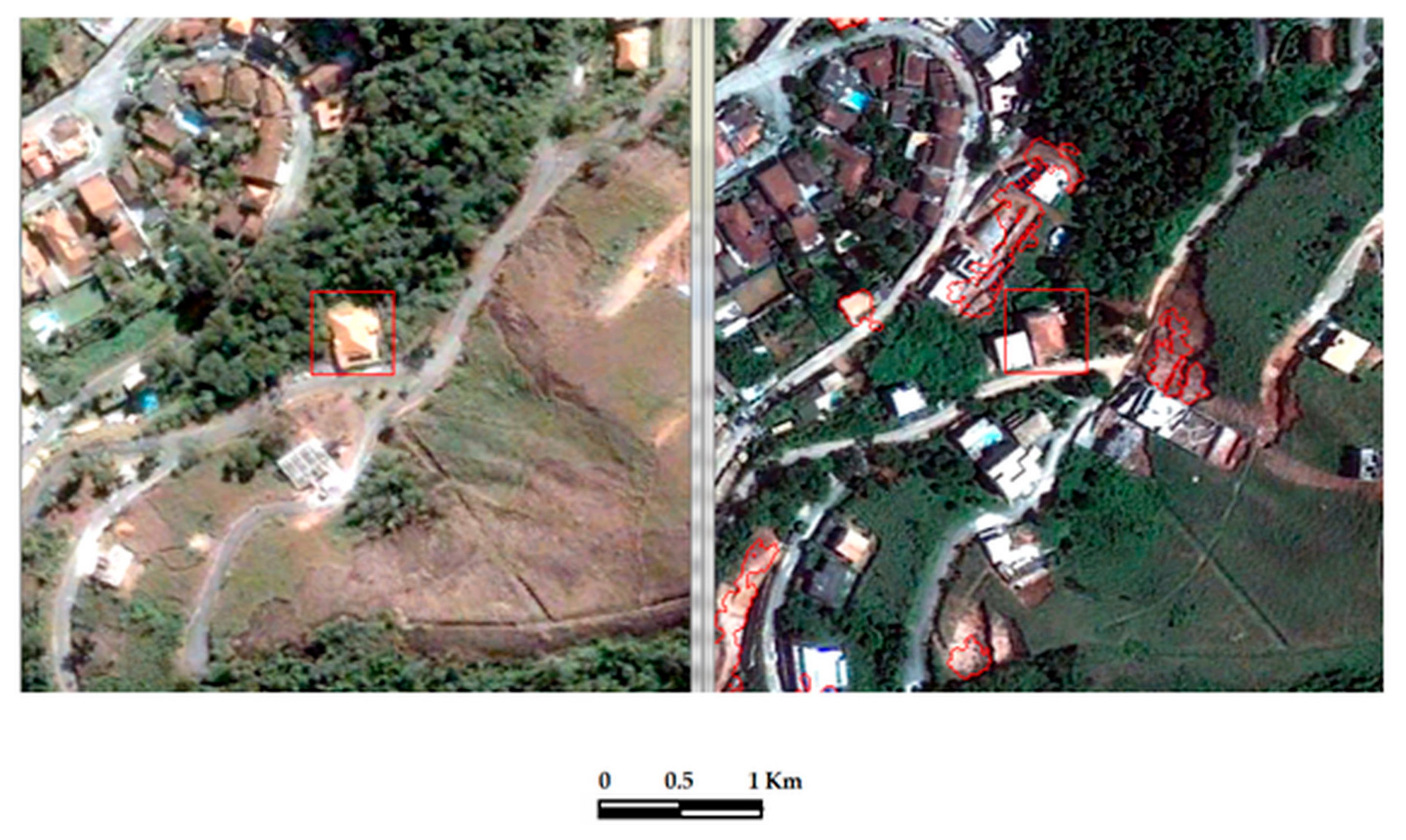

6.1. Case Study 1: Mudslides in the Mountainous Region of Rio de Janeiro State

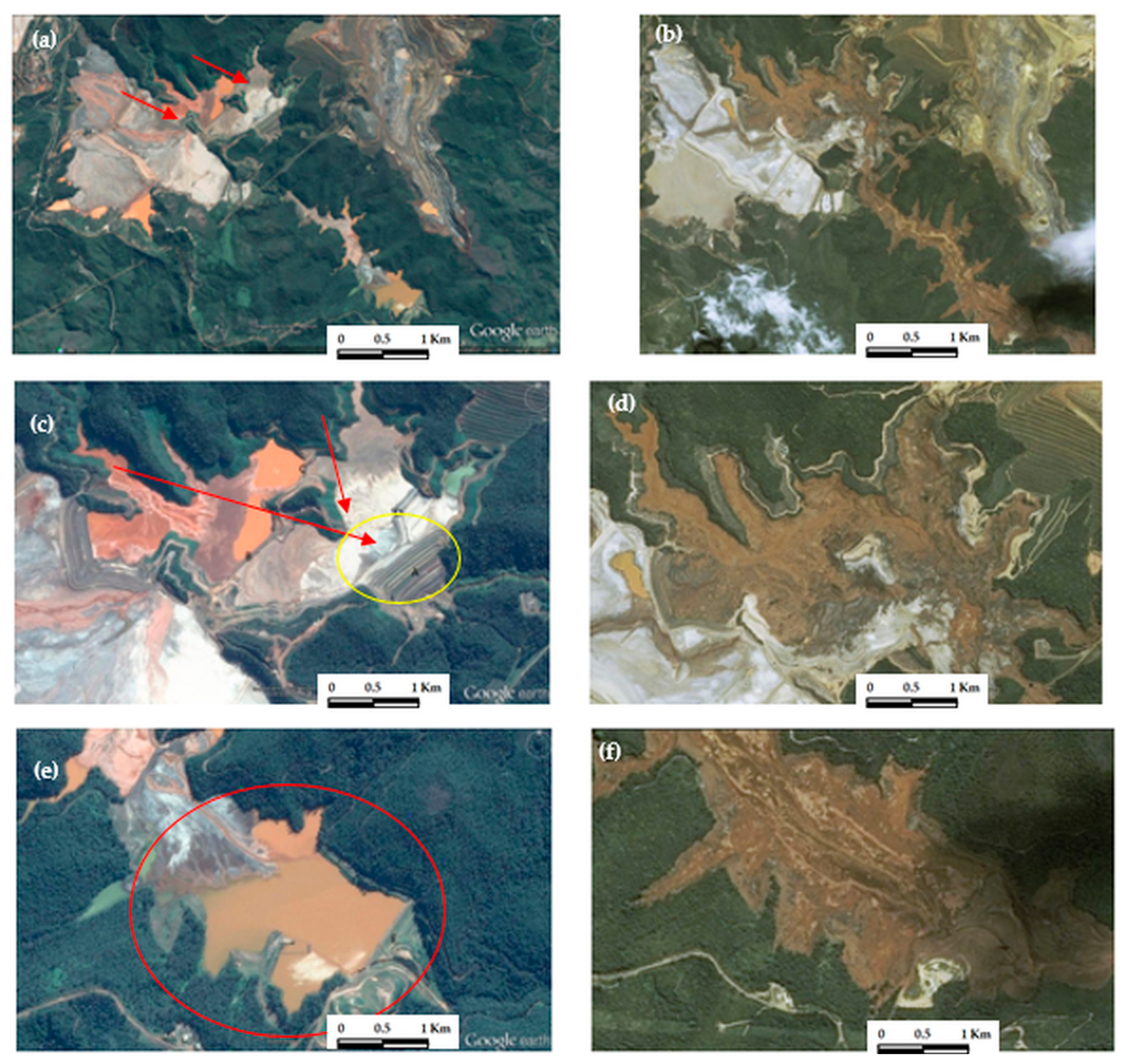

6.2. Case Study 2: The Mariana Disaster, Also Known as the Samarco Tailings Dam Disaster, in Bento Rodrigues

- The urgency to mine rapidly implies low design and construction standards;

- Rapid staff turnover as new, more lucrative opportunities appear;

- The boom pushes resource extraction into regions with difficult conditions;

- After the boom, as commodity prices fall, expenses are reduced;

- The boom encourages the acquisition of inappropriate projects imported from elsewhere;

- Independent reviews become less frequent, probably to avoid the associated delays and costs.

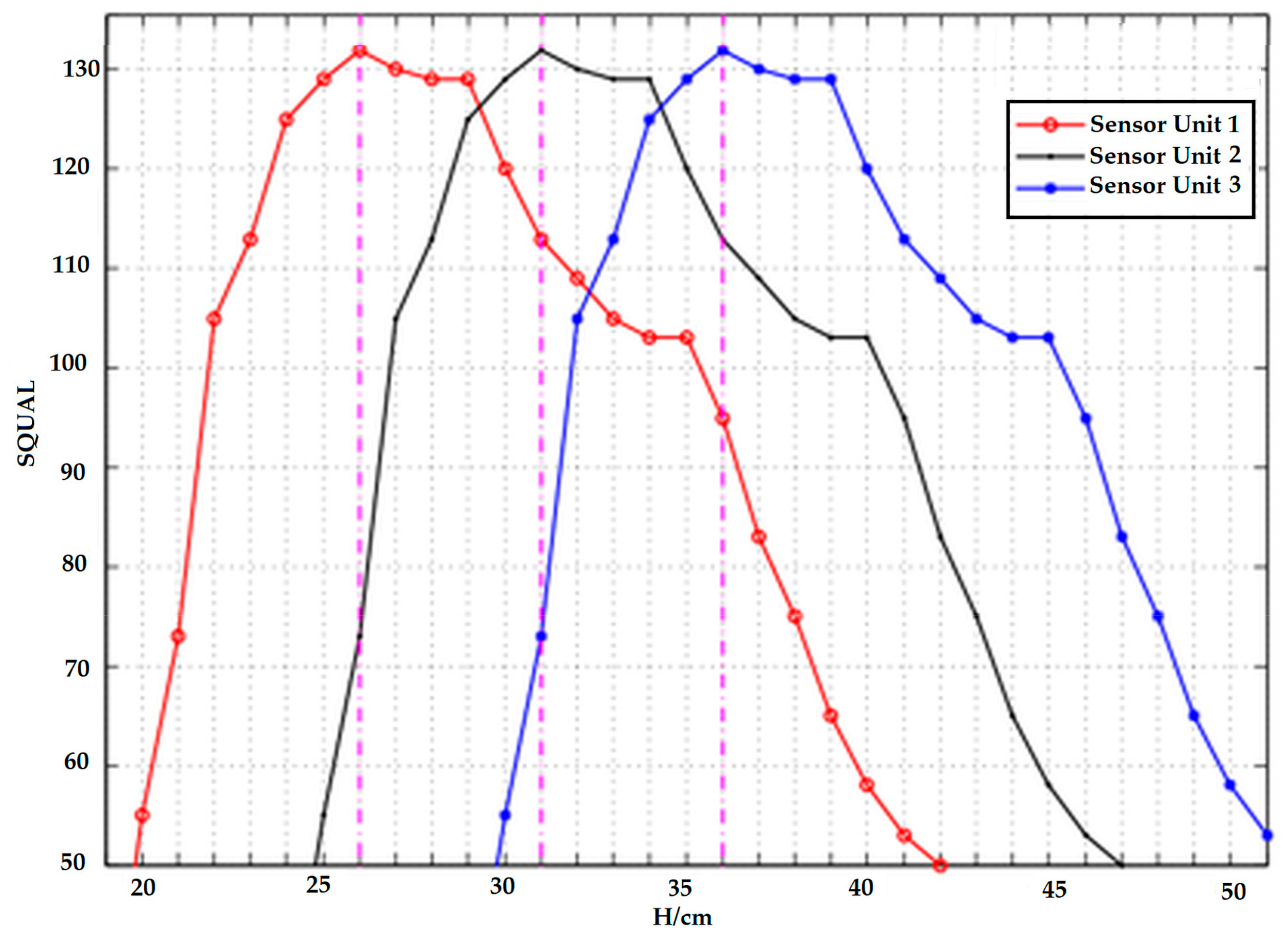

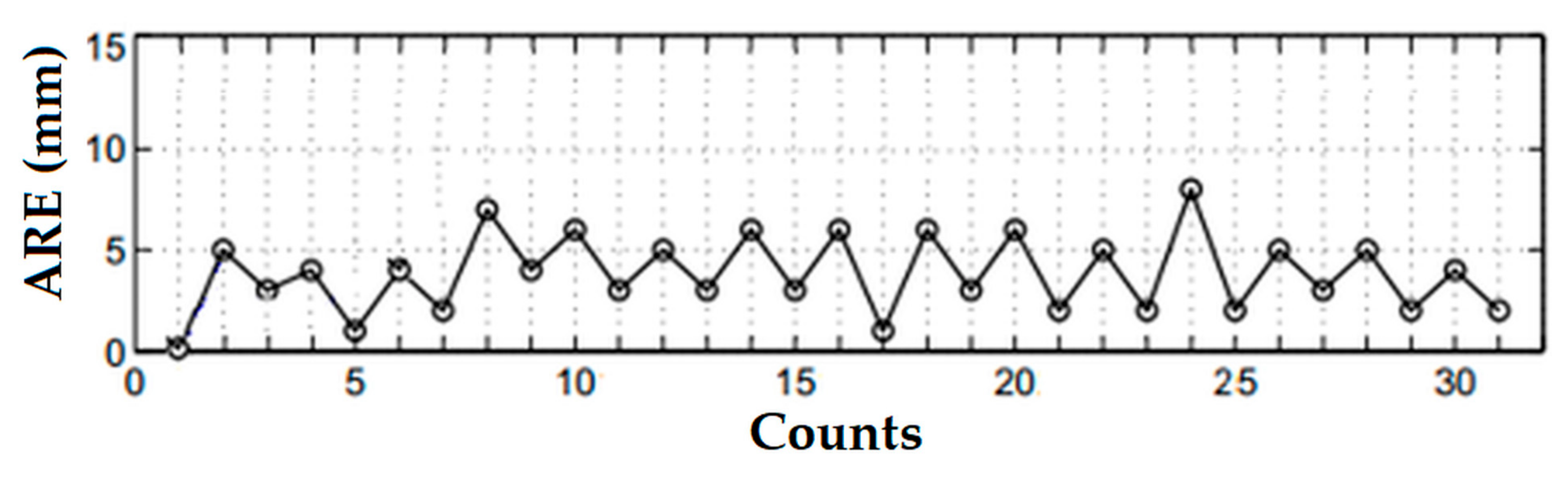

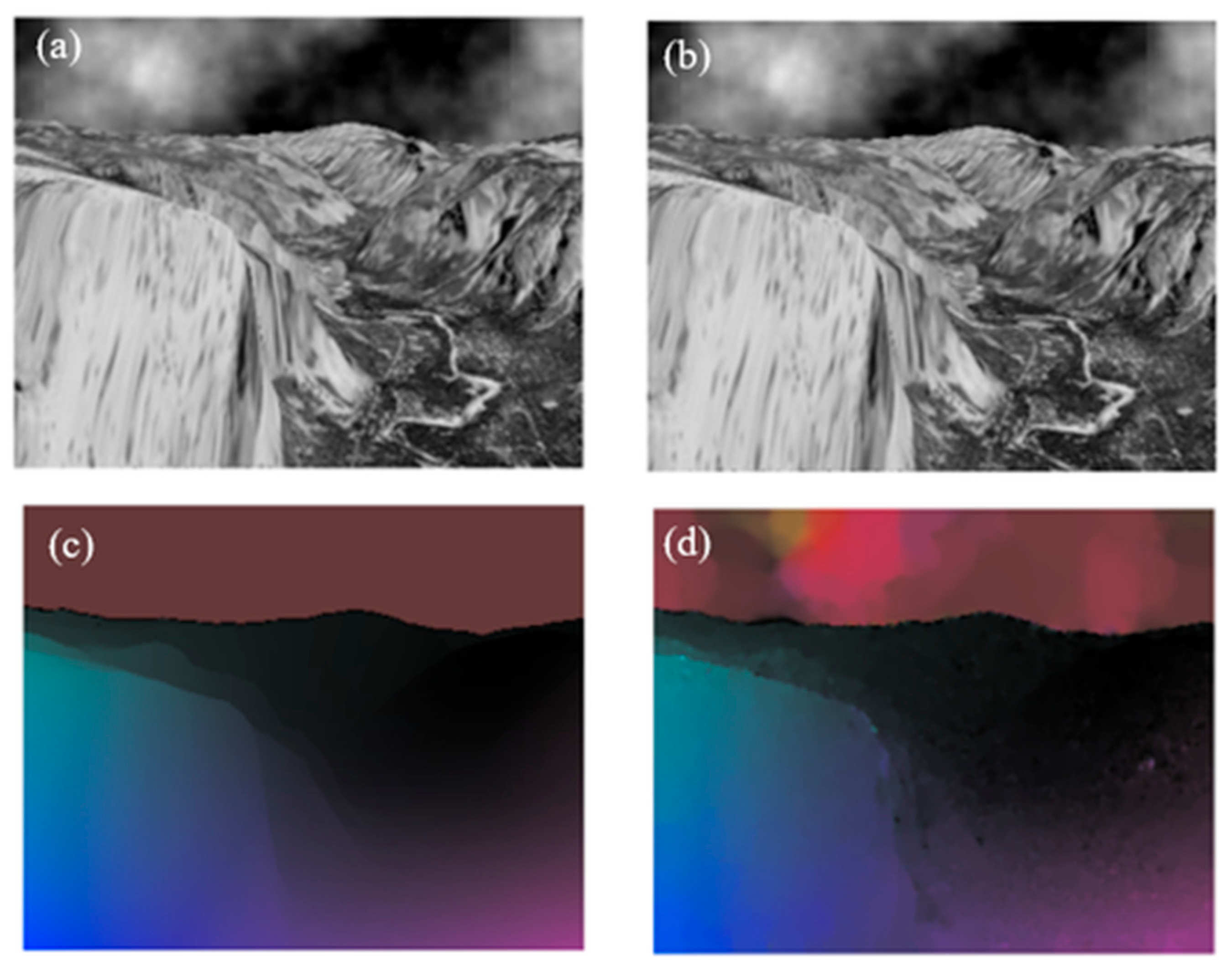

6.3. Preliminary Experiments

6.3.1. Experiment 1: System with OMICs for Displacement Estimation

6.3.2. Experiment 2: Two Views of the Same Site

7. Discussion

7.1. Combination of Detectors
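One simple way to combine detectors, shown here as an illustration rather than the combination used in this work, is k-out-of-n voting: the fused system raises an alarm only when at least k of its n detectors fire. Under the strong (and loudly flagged) assumption that detectors fail independently with the same false-alarm probability p, the fused false-alarm probability follows the binomial tail. The readings and the value of p are hypothetical.

```python
import math
from typing import Sequence


def k_out_of_n_alarm(votes: Sequence[bool], k: int) -> bool:
    """Fused decision: alarm only when at least k of the n detectors fire."""
    if not 1 <= k <= len(votes):
        raise ValueError("k must lie between 1 and the number of detectors")
    return sum(votes) >= k


def k_out_of_n_false_alarm_prob(p: float, n: int, k: int) -> float:
    """Probability that at least k of n detectors false-alarm together,
    ASSUMING independent detectors with identical false-alarm probability p."""
    return sum(math.comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


# Three hypothetical detectors, two of which currently report motion.
print(k_out_of_n_alarm([True, False, True], k=2))
print(k_out_of_n_false_alarm_prob(0.05, n=3, k=2))
```

With p = 0.05, 2-out-of-3 voting lowers the fused false-alarm probability to about 0.007. The price is that a true event must now be seen by two detectors, so the probability of a missed alarm rises, and correlated failures (for example, fog blinding every camera) break the independence assumption.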

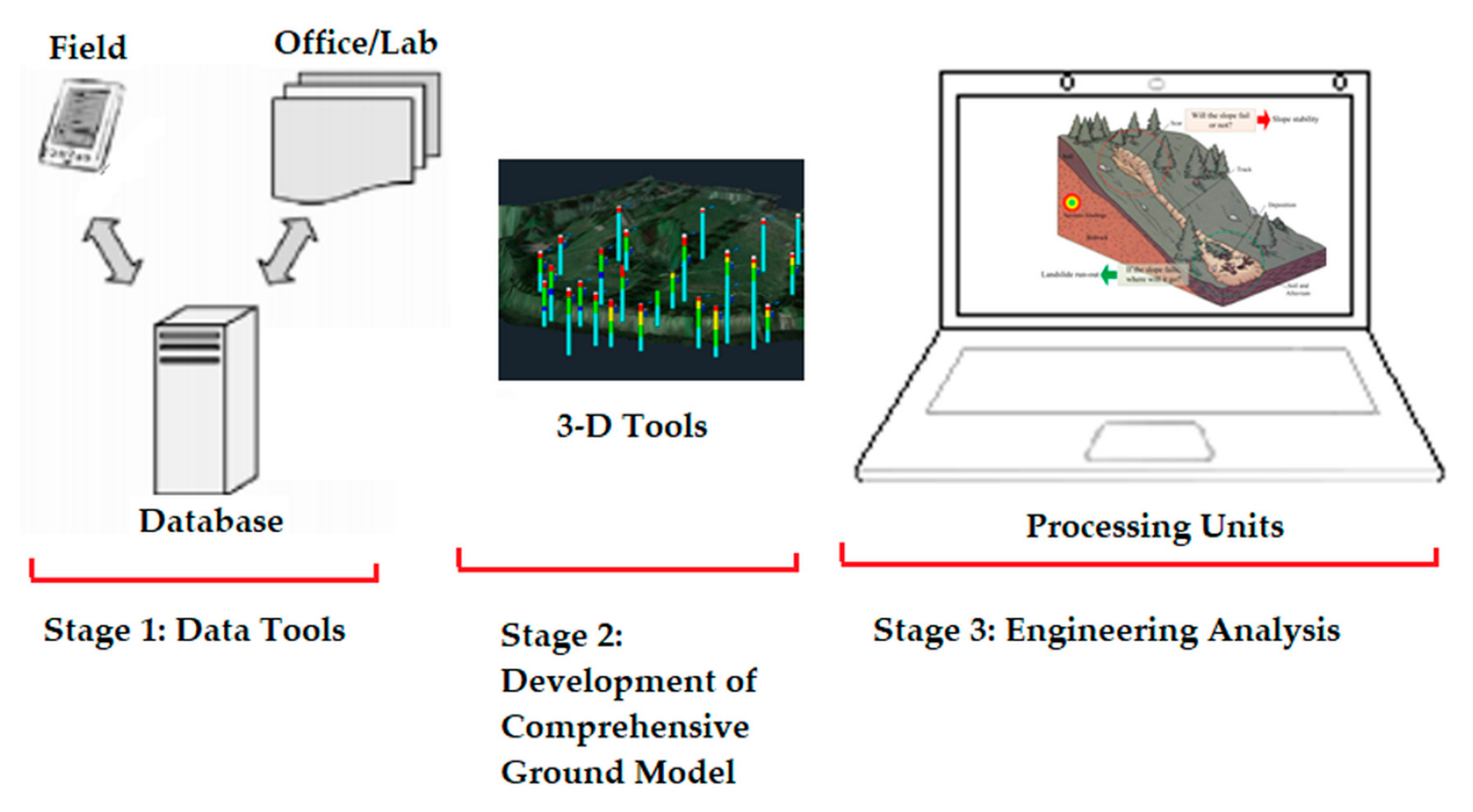

7.2. Fusion of In Situ and GIS Information

7.3. Economic Indicators and Evaluation Tools for Sustainability

- Economic advantages of preventing and remediating disasters.

- Economic advantages of using salvaged components, carbon credits, etc.

- Energy savings.

- Other factors.

7.4. On Displacement Detection Robustness

7.5. Sensor Placement
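As a simple baseline for sensor placement, the sketch below uses a greedy maximum-coverage heuristic under a binary disk sensing model: each step picks the candidate site that covers the most still-uncovered target points within a sensing radius. This is a swapped-in textbook technique, not the placement method of this work. Greedy selection is known to achieve at least a (1 - 1/e) fraction of the optimal coverage for this problem. The sites, targets, and radius are hypothetical.

```python
import math
from typing import List, Sequence, Set, Tuple

Point = Tuple[float, float]


def greedy_placement(candidates: Sequence[Point], targets: Sequence[Point],
                     radius: float, budget: int) -> List[int]:
    """Greedy maximum-coverage placement with a binary disk sensing model.

    Picks up to `budget` candidate sites, each time choosing the one that
    covers the most still-uncovered targets within `radius`, and stops early
    when no site adds coverage. Returns indices into `candidates`.
    """
    covers = [
        {j for j, t in enumerate(targets) if math.dist(c, t) <= radius}
        for c in candidates
    ]
    uncovered: Set[int] = set(range(len(targets)))
    chosen: List[int] = []
    for _ in range(budget):
        best = max(range(len(candidates)), key=lambda i: len(covers[i] & uncovered))
        gain = covers[best] & uncovered
        if not gain:
            break
        chosen.append(best)
        uncovered -= gain
    return chosen


# Hypothetical points of interest along a slope and three possible sites.
targets = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (5.0, 0.0), (6.0, 0.0)]
candidates = [(1.0, 0.0), (5.5, 0.0), (3.0, 0.0)]
print(greedy_placement(candidates, targets, radius=1.0, budget=2))
```

The first site covers three targets and the second covers the remaining two, so the third site is never needed.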

8. Conclusions

Author Contributions

Conflicts of Interest


© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Estrela, V.V.; Saotome, O.; Loschi, H.J.; Hemanth, J.; Farfan, W.S.; Aroma, J.; Saravanan, C.; Grata, E.G.H. Emergency Response Cyber-Physical Framework for Landslide Avoidance with Sustainable Electronics †. Technologies 2018, 6, 42. https://doi.org/10.3390/technologies6020042
