1. Introduction

Recent international reports advocate for the engagement of undergraduate students in authentic research in preparation for the science, technology, engineering, and mathematics (STEM) workforce and to face the global challenges of the 21st century as scientifically literate citizens [1,2,3,4,5]. Reflecting this emphasis, as well as the substantial resources (e.g., funding, faculty, and student time) made available to support undergraduate research (UR), students are gaining greater access to these high-impact learning practices through apprenticeship-like undergraduate research experiences (UREs) and course-based undergraduate research experiences (CUREs). A recent US survey of student engagement found that approximately one in two senior life science majors participated in some form of faculty-sponsored research during their educational careers [6], and the number of students engaged in research experiences is likely to continue to grow with the increasing popularity of UR as part of both majors’ and non-majors’ post-secondary science curricula [7].

Undergraduate research “provides a window on science making, allowing students to participate in scientific practices such as research planning, modeling of scientific observations, or analysis of data” [8]. In contrast to “cookbook” lab activities that may not engage students in authentic scientific inquiry, UR experiences involve students in the process of doing science through iterative, collaborative, and discovery-based research efforts that rely on disciplinary research practices [7,9,10]. As a form of situated learning [9,11], UR embeds learning in authentic situations, honing students’ cognitive skills through use and supporting their growing ability to interact as members of the research community [12].

Scholarly attention to the efficacy and impact of UR has increased over the past two decades, both for accountability in reporting to funding agencies [13] and to document and refine key design features that support educational outcomes [7,14]. Recently, an emerging body of educational research has reported numerous short- and long-term personal and professional benefits for students engaging in UR [15]. Commonly identified positive outcomes include increased research-related skills [13,16,17,18], familiarity with the research process [17], positive affect toward science (e.g., self-confidence; valuing science) [9,19], degree completion [20], intentions to pursue graduate school and careers in STEM [21], and understanding of the culture and norms of science [9,22]. Evidence also suggests that UR supports the retention and advancement of underrepresented groups in STEM and professional careers [23,24,25].

Most commonly, evidence of student outcomes is collected using self-report survey data after completion of the UR experience [8,26]. UR survey studies often rely on poorly developed measures that limit the valid assessment of cognitive gains, a point raised by other researchers [8,27,28]. In particular, UR studies regularly use Likert-scale ratings that offer unequal or coarse-grained categories (e.g., [13,29]) and require respondent interpretation, increasing the potential for bias [30,31]. For example, it may be difficult to ensure that UR students perceive the difference between “little gain” and “moderate gain” as equal to the difference between “moderate gain” and “good gain” when evaluating their respective proficiencies. Most current UR studies also rely on a single post-experience administration (e.g., [16,28,32]) because the surveys are designed to measure only the gains after UR experiences. Therefore, currently available assessments of students’ perceived research skills do not allow baseline and post-intervention data to be compared to estimate changes in student outcomes [33]. Additionally, given the ubiquity and ease of use of Likert-type items, insufficient attention may have been paid to rigorously evaluating survey psychometrics [34].

While most research suggests that students benefit from engaging in UR activities, recent calls have been made for improvements in documenting participant progress to strengthen the research base [8]. Despite the recognized challenges of self-reports [28] and the questionable value of these data in studying UR [8], surveys are of particular practical use in evaluating research experiences because they: (a) require lower investments of time, resources, and assessment expertise to collect and analyze than other data forms (e.g., interviews, observations) [35]; (b) can be used to collect information from large samples of UR participants [28]; (c) lend insight into student competencies (e.g., the ability to use lab techniques) that may not be easily assessed using direct measures (e.g., evaluation of oral presentations, performance tests) alone [36]; and (d) have been justified as a general measure of achievement [34]. Given this, there is a need for generalizable, well-designed, and validated surveys that document UR student progress and program efficacy.

This article describes the development and testing of the Undergraduate Scientists—Measuring Outcomes of Research Experiences student survey (USMORE-SS). As part of a large national project focused on understanding how students benefit from UREs, the USMORE-SS was designed to include a common set of items (e.g., self-reported research-related proficiencies, views of UREs) that track student progress over their research experience(s) and permit generalizable comparisons across institutions, as well as site-specific questions that inform departments and administrators about program features of interest (e.g., professional development activities). Although a discussion of the broader range of survey results is outside the scope of this article, we provide evidence here for the validity of the USMORE-SS in documenting undergraduate researchers’ self-reported skills. Because our colleagues in the sciences are regularly asked to provide evidence of programmatic effectiveness for quality assurance and refinement, the aim of this article is to report on a validated self-report survey tool that can be used to measure student outcomes with confidence.

1.1. Instrument Development and Administration

The USMORE-SS was developed through an iterative process in which we wrote new items or adapted items from earlier assessments of UR [13,16,37,38]. Following a multi-step design approach to survey development [39], we collected data on the experiences of URE students and research mentors to establish content validity. Multiple revisions occurred throughout the design process based on field-testing and faculty feedback.

In order to collect greater detail about the cognitive and affective gains students believe they make during UR, survey items were constructed based on research from two national mixed-methods studies. The first investigated UR as part of the transition from student to practicing scientist in the fields of chemistry and physics (Project Crossover NSF DUE 0440002). Respondent survey (n = 3014) and interview (n = 86) data were used to outline long-term outcomes conferred on UR participants from the perspective of individuals who went on to research-related careers [32]. Those data, drawn from a subset of items, provided some background on the residual benefits of early research training. The second, the USMORE study, focused specifically on UREs and how they lead to learning and the development of career interest. Since 2012, data gathering has been continuous over the 5-year study, with a sample of more than 30 colleges and universities that support UREs in the natural sciences through formal or informal research programs. To provide an encompassing view of UREs, data for all aspects of the project were collected from more than 750 student researchers and from more than 100 faculty, postdoctoral researchers, graduate student mentors, and program administrators, using multiple forms of quantitative and qualitative data to allow triangulation of data sources [40]. Data collection included pre/post-experience surveys and semi-structured interviews, weekly electronic journals, in situ observations using student-borne point-of-view (POV) video, and performance data to assess student learning progressions [36].

During our initial investigations, we asked student researchers about the gains they expected or received from involvement in their UREs. In addition, we asked lab mentors (faculty, postdocs, graduate students) about their expectations and evaluations of the gains their student researchers made during the experience. Themes emerged early that helped us make sense of our initial data on participant outcomes. Similar to faculty accounts reported at four liberal arts institutions, student research participants were expected to gain knowledge, skills, or confidence related to: (a) understanding the scientific process and research literature; (b) operating technical equipment and doing computer programming; (c) collecting and analyzing data; and (d) interacting and building networks in the professional community. Through the first year of the study, we collected information on these gains through responses to open-ended interview and survey questions for each of the categories we identified. We were unsure whether each category for which we developed items would hold up under factor analysis, but we knew we were capturing the most common activities occurring in these experiences. By summer 2013, we had finalized these items, and we have used the same set in data collection since then. Items fell into seven broad categories nested within the four themes above: reading of primary literature, data collection, data analysis, data interpretation, scientific communication skills, understanding of research/field, and confidence. Item stems for each of these categories are listed in Appendix A.

As mentioned, a limitation of existing UR evaluation methods is the use of coarsely defined rating scales that require respondent interpretation, which can inadvertently increase bias [34]. To address this early in the study, students and research mentors were asked in the pre-surveys and interviews about the greatest anticipated gain and what types of evidence would demonstrate whether the gain(s) had been made. Participants often identified the degree of independence in task completion as a key indicator of skill proficiency. To align with this, we created a scale with response options based on level of independence: No Experience (lowest), Not Comfortable, Can complete with substantial assistance, Can complete independently, and Can instruct others how to complete (highest). For the knowledge (or understanding) and confidence items, which would not be appropriately represented by this scale, the options were: No [understanding or confidence], Little, Some, Moderate, and Extensive. To increase scale reliability with these options, the knowledge and confidence items were written as actions (e.g., rating their confidence to “Work independently to complete ‘basic’ research techniques such as data entry, weighing samples, etc.”). Prior to use in the formal study, feedback from research mentors (n = 7) was solicited to evaluate the appropriateness and clarity of the scales, and slight modifications were made accordingly.

The USMORE-SS was developed to include partnered pre- and post-URE versions to provide information on students’ expected and actual experiences, as well as changes in research-related skills and knowledge and in academic or career intentions. Although students may have difficulty realistically assessing their abilities upon entering a research project because they lack professional experience [28], baseline and post-intervention data offer greater fidelity in measuring the effects of UREs by: (a) taking students’ background or beginning skills into account to estimate net gains [34], and (b) providing multiple estimates of (then) current aptitudes rather than a single reflection on gains over time [41]. Like other self-report data [13,17,28], the USMORE-SS provides a broad indicator of the cognitive, affective, and conative impact of UREs on participants, rather than a substitute for direct performance data (e.g., tests, skill demonstration). More broadly, we believe this tool should be used with complementary direct and indirect (e.g., interviews, observations) measures to evaluate the nature of these experiences and how they lead to learning [42]. The results reported here are based on data from students who completed the partnered pre- and post-experience surveys with the finalized survey items. The finalized USMORE-SS includes 38 items intended to quantify student gains in seven areas of research skills developed through UREs. For this tool to be useful to the broad community of those running UREs, its structural validity must be tested.
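As a purely descriptive illustration of the partnered pre/post design, the sketch below codes the two five-point response scales as integers and computes one student's raw pre/post difference on a subscale. The 1 to 5 codes, the dictionary and function names, and the example responses are hypothetical assumptions; the analyses reported in this article compare latent means within a CFA framework rather than raw differences.

```python
# Hypothetical numeric coding of the two five-point USMORE-SS response scales.
# The 1-5 codes are an assumption; the survey itself defines only the ordered labels.
INDEPENDENCE_SCALE = {
    "No Experience": 1,
    "Not Comfortable": 2,
    "Can complete with substantial assistance": 3,
    "Can complete independently": 4,
    "Can instruct others how to complete": 5,
}
# "No" stands in for the survey label "No [understanding or confidence]".
KNOWLEDGE_CONFIDENCE_SCALE = {
    "No": 1,
    "Little": 2,
    "Some": 3,
    "Moderate": 4,
    "Extensive": 5,
}


def subscale_score(responses, scale):
    """Mean of the coded responses for one subscale (a list of response labels)."""
    codes = [scale[label] for label in responses]
    return sum(codes) / len(codes)


def observed_gain(pre_responses, post_responses, scale):
    """Post-minus-pre difference in subscale means for one student (descriptive only)."""
    return subscale_score(post_responses, scale) - subscale_score(pre_responses, scale)


# Example: a hypothetical student's three data-collection items before and after a URE.
pre = ["Not Comfortable", "Can complete with substantial assistance", "No Experience"]
post = ["Can complete independently", "Can complete independently",
        "Can complete with substantial assistance"]
print(observed_gain(pre, post, INDEPENDENCE_SCALE))  # a gain of about 1.67 scale points
```

Averaging coded labels treats the five options as equally spaced, which is the same working assumption the article makes when it analyzes the items as continuous.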

1.2. Study Aim

While the goal of the larger study is to increase our understanding of how undergraduate research experiences influence students’ skills and academic/career choices, the main goal of this paper is to share the survey instrument (USMORE-SS) with others interested in evaluating their research programs and to provide strong support for its use. In this paper, we investigate several psychometric properties of the USMORE-SS, including reliability, structural validity, and longitudinal measurement invariance.

2. Materials and Methods

In this section, we describe the sample of UR participants from whom we collected data and our factor analysis of the scales included in the USMORE-SS instrument. The intent is to present the structure of the scales so that others can use them to evaluate their UR programs. All subjects gave their informed consent for inclusion before they participated in the study. The study was conducted in accordance with the Declaration of Helsinki, and the protocol was approved by the Ethics Committee of Indiana University (Protocol 11120007580).

2.1. Sample

Data gathering with the surveys was continuous between summer 2013 and summer 2016, with a total of 507 undergraduate researchers from 43 different institutions completing the USMORE-SS. For generalizability, institutions were selected to provide diversity in educational mission (n = 6 liberal arts institutions, n = 7 Master’s-granting institutions, n = 25 doctoral/research institutions, n = 4 research organizations), geographic location, academic field, timing (i.e., summer and academic year), and program type (e.g., externally and internally funded student research programs). In terms of sample demographics, roughly half (48%) were female, and 59% self-identified as Caucasian, 9% as Asian, 8% as Black/African-American, 6% as Latino/a, 1% as American Indian, and 18% as multiple ethnicities. Nearly 85% of respondents were in their third or fourth year as undergraduates at the time of the survey, and 44% had previously participated in at least one URE. Participants’ majors were physics (25%), biology (23%), chemistry (19%), multiple STEM majors (19%), other STEM majors (e.g., engineering, Earth science; 16%), and non-STEM majors (2%).

2.2. Data Collection

Student participants across the US were recruited through faculty or administrators working with formal programs (e.g., NSF REUs, university-sponsored initiatives) or informal URE opportunities (e.g., student volunteers). Faculty and administrators interested in having their students participate in the pre/post-survey study could choose either to (a) provide their students’ contact information so that we could solicit participation and distribute the surveys directly, or (b) distribute the surveys themselves via an email link. The survey was hosted on Qualtrics, an online survey platform. Students who completed the survey received a $5 gift card per administration for their participation.

2.3. Analytic Procedure

We evaluated the USMORE-SS in two phases. First, we examined descriptive statistics of the pre- and post-survey items to explore possible outliers and the proportion of missing data. We conducted Kolmogorov-Smirnov tests to examine the normality of the items using IBM SPSS 23. This phase was intended to determine which estimation method was most appropriate for testing structural validity and measurement invariance with our data. For example, robust maximum likelihood estimation is more appropriate than standard maximum likelihood estimation for data that violate the normality assumption, because it avoids underestimating standard errors [43,44,45,46]. We also examined the internal consistency reliability of the whole instrument and of each hypothesized sub-construct using Cronbach’s α.
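Cronbach’s α for a set of k items is k/(k − 1) multiplied by one minus the ratio of the summed item variances to the variance of the total score. The minimal sketch below computes it from a respondents-by-items array; dropping incomplete rows (listwise deletion) is an assumption made here for simplicity, and the demonstration responses are made up.

```python
import numpy as np


def cronbach_alpha(items):
    """Cronbach's alpha for an (n_respondents, k_items) array of item scores.

    Rows containing any NaN are dropped (listwise deletion); this is an
    assumption of the sketch, not necessarily how the study handled missing data.
    """
    x = np.asarray(items, dtype=float)
    x = x[~np.isnan(x).any(axis=1)]          # listwise deletion
    k = x.shape[1]
    if k < 2:
        raise ValueError("alpha needs at least two items")
    item_var = x.var(axis=0, ddof=1)          # sample variance of each item
    total_var = x.sum(axis=1).var(ddof=1)     # sample variance of the summed score
    return k / (k - 1) * (1 - item_var.sum() / total_var)


# Hypothetical 1-5 responses from five students on a three-item subscale.
demo = [[1, 2, 2],
        [2, 2, 3],
        [3, 3, 3],
        [4, 4, 5],
        [5, 4, 5]]
print(round(cronbach_alpha(demo), 3))  # 0.963
```

The same function can be applied to the full item set and to each subscale's columns separately, mirroring the whole-instrument and sub-construct reliabilities described above.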

The second phase of analysis consisted of three steps: (1) testing the adequacy of the hypothesized seven-factor model for the pre- and post-surveys; (2) testing longitudinal measurement invariance between the pre- and post-surveys; and (3) comparing latent means between the pre- and post-surveys, only if the finally established measurement invariance model is adequate for doing so.

The first step provides evidence for the adequacy of using the subscale scores, which represent different aspects of research-related skills. The second step is necessary before the latent means of each subscale can be compared. If the results of the second step are adequate for moving on to the third step, we can validly test the change (ideally, gain) in students’ research skills after a URE. All steps in the second phase were conducted using Mplus 7.0. Although the survey items in the USMORE-SS are ordered categorical variables, they were treated as continuous because they have five response options [47].

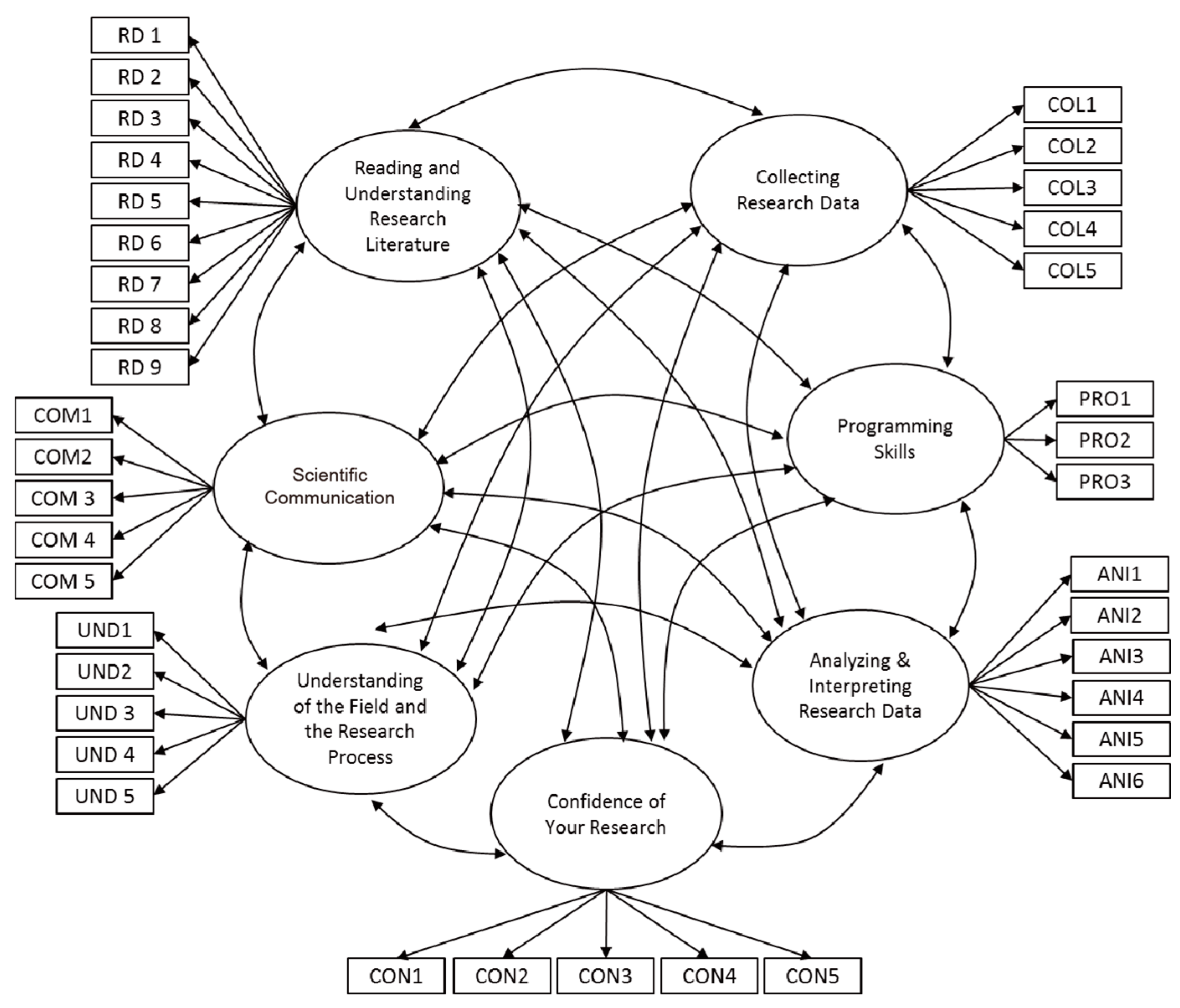

2.3.1. Confirmatory Factor Analysis and Longitudinal Measurement Invariance

Similar to Weston and Laursen’s [28] analysis of the URSSA instrument, we selected the confirmatory factor analysis (CFA) framework for Phase 2. Compared to the exploratory factor analysis (EFA) used by many researchers when developing scales [48,49], CFA provides a stronger framework grounded in theory rather than relying on the data alone [50,51]. In this study, we identified a plausible factor structure of the USMORE-SS in advance, based on the interviews and survey content; thus, EFA was not considered. In the current version of the instrument, seven constructs were formalized: reading and understanding of primary literature, collecting research data, analysis and interpretation of research data, programming skills, scientific communication, understanding of the field and research process, and confidence in research-related activities. In our original conceptualization, we expected programming skills to be part of analysis and interpretation of research data, but our early analyses showed that these measure related but separate constructs. CFA also provides a more trustworthy solution for evaluating instruments that include multiple constructs, because the adequacy of the hypothesized factor structure can be tested directly with the range of fit indices CFA provides [50]. CFA also makes it possible to test method effects that cannot be tested under the EFA framework, for example, by specifying correlations between error terms [52]. In addition, CFA is more flexible than EFA for testing every aspect of measurement invariance [53].

In practice, CFA describes the linear relationship between hypothesized construct(s) and multiple observed indicator variables. Suppose that the number of observed indicators is p and the number of hypothesized constructs is k (always < p). A CFA model for this case can be expressed as:

$$\mathbf{O} = \boldsymbol{\tau} + \boldsymbol{\Lambda}\boldsymbol{\xi} + \boldsymbol{\delta} \qquad (1)$$

Here, O denotes a p × 1 vector of observed scores on the multiple indicators, while ξ denotes a k × 1 vector of the hypothesized constructs. τ, Λ, and δ represent a p × 1 vector of intercepts, a p × k matrix of factor loadings, and a p × 1 vector of unique factor scores, respectively. Equation (1) is analogous to a linear regression model (Y = a + bX + e; Y: outcome variable, X: predictor, a: regression intercept, b: regression weight, and e: random error). An intercept represents the expected score on an item when the latent construct score is zero, while a factor loading indicates the strength of the relationship between the latent construct and each observed item. Each unique factor score includes both random error and the specific (unique) part of each item.
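Under the usual CFA assumptions (δ has mean zero, is uncorrelated with ξ, and has a diagonal covariance matrix Θ), the model O = τ + Λξ + δ implies that the indicators have mean τ + Λκ and covariance ΛΦΛᵀ + Θ, where κ and Φ are the latent means and covariances. The symbols κ and Φ are introduced here for illustration and do not appear in the text. The sketch below computes these model-implied moments for a made-up one-factor, four-indicator model and checks them by simulation; it illustrates the measurement model only, not how Mplus estimates it.

```python
import numpy as np


def implied_moments(tau, lam, kappa, phi, theta):
    """Model-implied mean vector and covariance matrix of O = tau + lam @ xi + delta.

    Assumes E[xi] = kappa, Cov(xi) = phi, E[delta] = 0, Cov(delta) = theta
    (diagonal), and delta uncorrelated with xi.
    """
    mu = tau + lam @ kappa
    sigma = lam @ phi @ lam.T + theta
    return mu, sigma


# Made-up values: one factor (k = 1) measured by four items (p = 4).
tau = np.array([2.0, 2.2, 1.8, 2.5])            # p x 1 intercepts
lam = np.array([[0.8], [0.7], [0.9], [0.6]])    # p x k factor loadings
kappa = np.array([0.0])                         # latent mean, fixed to 0 here
phi = np.array([[1.0]])                         # latent variance, fixed to 1 here
theta = np.diag([0.36, 0.51, 0.19, 0.64])       # unique variances (items get variance 1)

mu, sigma = implied_moments(tau, lam, kappa, phi, theta)

# Simulation check: draw xi and delta, build O from Equation (1), compare moments.
rng = np.random.default_rng(0)
n = 200_000
xi = rng.multivariate_normal(kappa, phi, size=n)              # n x k
delta = rng.multivariate_normal(np.zeros(4), theta, size=n)   # n x p
O = tau + xi @ lam.T + delta                                  # n x p
print(np.round(sigma, 2))
print(np.abs(np.cov(O, rowvar=False) - sigma).max())          # small: sampling error only
```

CFA estimation works in the opposite direction: it searches for τ, Λ, Φ, and Θ whose implied moments best reproduce the observed means and covariances, and the fit indices summarize how close that reproduction is.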

These three terms (τ, Λ, and δ, the last through its variances) are the main focus of factorial invariance, which is a special case of measurement invariance within the factor analysis framework. To study longitudinal change across our two waves of data collection, factorial invariance can be tested by including both the pre- and post-survey in a single model [54,55,56].

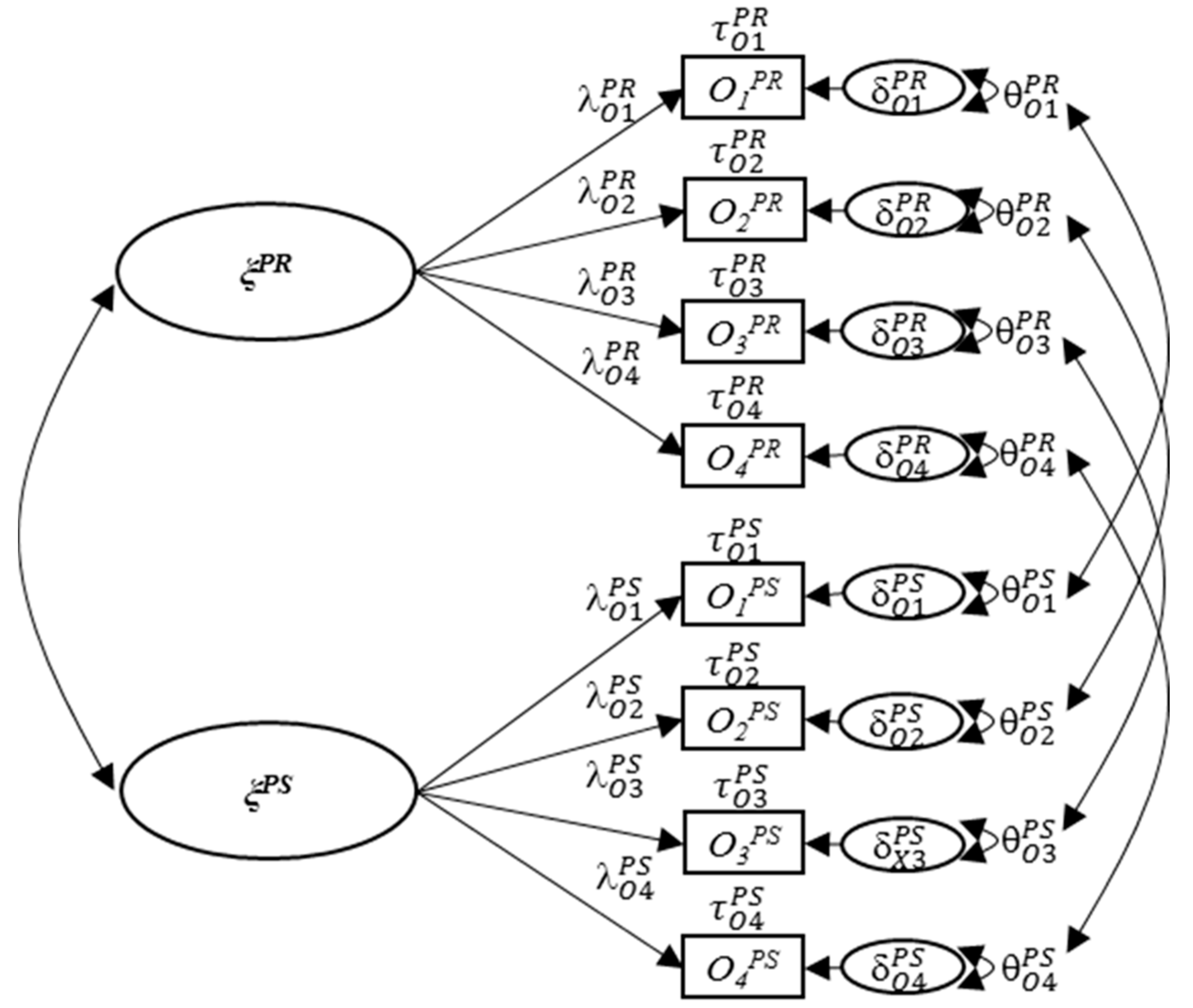

Figure 1 illustrates a longitudinal factorial invariance model of one factor with four indicators for the pre- and post-survey conditions. Factorial invariance is established when τ, Λ, and Θ (a diagonal matrix of unique variances, i.e., the variances of δ) are equivalent across time points. The change in the observed scores (O) can then be attributed to change in the latent constructs (ξ) without any differential functioning of the measurement. Typically, factorial invariance is investigated by comparing four hierarchically nested models: (1) configural (equal structure); (2) metric (equal factor loadings); (3) scalar (equal intercepts); and (4) strict invariance (equal unique variances) models [53,57,58]. We followed this sequence of models to test longitudinal measurement invariance between the pre- and post-surveys.
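As a compact summary of the nested sequence, the constraints can be written as follows, with t indexing the two waves and Θ denoting the covariance matrix of δ. The superscript notation and κ = E[ξ] are our own labels for this summary, not the article's.

```latex
\begin{aligned}
&\mathbf{O}^{(t)} = \boldsymbol{\tau}^{(t)} + \boldsymbol{\Lambda}^{(t)}\boldsymbol{\xi}^{(t)} + \boldsymbol{\delta}^{(t)}, \qquad t \in \{\text{pre}, \text{post}\} \\[4pt]
&\text{Configural: same pattern of fixed and free loadings in } \boldsymbol{\Lambda}^{(\text{pre})} \text{ and } \boldsymbol{\Lambda}^{(\text{post})} \\
&\text{Metric: } \boldsymbol{\Lambda}^{(\text{pre})} = \boldsymbol{\Lambda}^{(\text{post})} = \boldsymbol{\Lambda} \\
&\text{Scalar: metric and } \boldsymbol{\tau}^{(\text{pre})} = \boldsymbol{\tau}^{(\text{post})} = \boldsymbol{\tau} \\
&\text{Strict: scalar and } \boldsymbol{\Theta}^{(\text{pre})} = \boldsymbol{\Theta}^{(\text{post})} \\[4pt]
&\text{Under scalar invariance: } \operatorname{E}\big[\mathbf{O}^{(\text{post})}\big] - \operatorname{E}\big[\mathbf{O}^{(\text{pre})}\big] = \boldsymbol{\Lambda}\big(\boldsymbol{\kappa}^{(\text{post})} - \boldsymbol{\kappa}^{(\text{pre})}\big), \quad \boldsymbol{\kappa}^{(t)} = \operatorname{E}\big[\boldsymbol{\xi}^{(t)}\big]
\end{aligned}
```

The last line shows why at least scalar invariance is needed before latent means are compared: once loadings and intercepts are shared across waves, any shift in the item means can only come from a shift in the latent means.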

2.3.2. Evaluating Structural Validity and Measurement Invariance

In order to evaluate the adequacy of the tested CFA model, we referred to commonly used model fit indices: (1) the chi-square statistic (χ²) at the α = 0.05 level; (2) the comparative fit index (CFI); (3) the root mean square error of approximation (RMSEA); and (4) the standardized root mean square residual (SRMR). A non-significant χ² (p > 0.05) indicates good model fit [59]; however, χ² tends to reject adequate models when the sample size is large [50,60,61]. The other fit indices are less dependent on sample size in indicating how adequate the tested model is. The criteria for acceptable model fit are RMSEA ≤ 0.08, SRMR ≤ 0.08, and CFI ≥ 0.90 [50,62].
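RMSEA and CFI are both computed from the model χ² and its degrees of freedom. CFI additionally uses the χ² of a baseline (independence) model. The sketch below uses the common textbook point-estimate formulas; the (n − 1) denominator in RMSEA is one convention (some programs use n). SRMR depends on the residual correlation matrix, so it is taken as an input here. The χ² values in the example are hypothetical, not results from this study.

```python
import math


def rmsea(chi2, df, n):
    """Point estimate of RMSEA from a model chi-square, its df, and sample size n.

    Uses the (n - 1) denominator; some software uses n instead.
    """
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


def cfi(chi2_model, df_model, chi2_base, df_base):
    """Comparative fit index relative to the baseline (independence) model."""
    d_model = max(chi2_model - df_model, 0.0)
    d_base = max(chi2_base - df_base, d_model)   # max of baseline, model, and 0
    return 1.0 if d_base == 0 else 1.0 - d_model / d_base


def acceptable_fit(rmsea_value, srmr_value, cfi_value):
    """Cutoffs stated in the text: RMSEA <= .08, SRMR <= .08, CFI >= .90."""
    return rmsea_value <= 0.08 and srmr_value <= 0.08 and cfi_value >= 0.90


# Hypothetical values, not results from this study.
r = rmsea(chi2=250.0, df=100, n=501)
c = cfi(250.0, 100, 2600.0, 120)
print(round(r, 4), round(c, 4), acceptable_fit(r, 0.05, c))  # 0.0548 0.9395 True
```

Because both indices subtract the degrees of freedom from χ² before scaling, a model whose χ² merely reflects a large n with small misfit can still fall inside the stated cutoffs.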

Using the established CFA model for both the pre- and post-surveys, we sequentially tested configural, metric, scalar, and strict invariance models. Each pair of nested models (e.g., configural vs. metric invariance models) is typically compared using the difference in χ² fit statistics as well as the differences in CFI, RMSEA, and SRMR (ΔCFI, ΔRMSEA, and ΔSRMR). In this study, we report the Satorra-Bentler scaled χ² difference test because we used the maximum likelihood-robust (MLR) estimation method [63]. Because the χ² difference test shares the problem of the χ² test (i.e., falsely rejecting an adequate model with large samples), we consulted the ΔCFI, ΔRMSEA, and ΔSRMR criteria suggested by Chen [60]. Even when χ² rejects the tested invariance model, we can proceed to the next higher-level invariance model based on ΔCFI, ΔRMSEA, and ΔSRMR. When the sample size is greater than 300, as in this study, the model with more invariance constraints is rejected when ΔCFI ≤ −0.010 and ΔRMSEA ≥ 0.015, regardless of the tested parameters. However, the SRMR criterion differs between metric invariance (ΔSRMR ≥ 0.030) and scalar/strict invariance (ΔSRMR ≥ 0.010). To summarize, we used the ΔCFI, ΔRMSEA, and ΔSRMR criteria suggested by Chen [60] to determine the adequacy of each tested invariance model instead of relying on chi-square difference tests, which are known to be overly sensitive to negligible misfit.
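The two comparison rules can be sketched in code. The scaled difference follows the Satorra and Bentler (2001) formula as documented for Mplus. Its inputs are the scaled χ² values and scaling correction factors that MLR output reports for the nested (more constrained) model 0 and the less constrained model 1. The Chen check encodes the cutoffs stated above, with each Δ taken as the constrained model minus the less constrained one. Combining them as "a CFI drop supplemented by an RMSEA or SRMR rise" is an assumption of this sketch, and the function names and example numbers are hypothetical.

```python
from scipy.stats import chi2


def sb_scaled_diff(t0, c0, d0, t1, c1, d1):
    """Satorra-Bentler (2001) scaled chi-square difference test.

    Model 0 is the more constrained (nested) model; model 1 is less constrained.
    t0, t1: scaled chi-square values as reported under MLR
    c0, c1: the corresponding scaling correction factors
    d0, d1: degrees of freedom, with d0 > d1
    Returns (TRd, df, p).
    """
    df = d0 - d1
    cd = (d0 * c0 - d1 * c1) / df          # scaling correction for the difference
    if cd <= 0:
        raise ValueError("non-positive scaling correction; test not interpretable")
    trd = (t0 * c0 - t1 * c1) / cd         # t * c recovers the uncorrected ML chi-square
    return trd, df, chi2.sf(trd, df)


def chen_noninvariant(d_cfi, d_rmsea, d_srmr, level):
    """Flag non-invariance from fit changes (constrained minus less constrained model).

    Cutoffs for n > 300 follow the text: CFI drop of .010, RMSEA rise of .015,
    SRMR rise of .030 (metric) or .010 (scalar/strict). Requiring the CFI drop
    plus an RMSEA or SRMR rise is an assumption about how they are combined.
    """
    if level not in ("metric", "scalar", "strict"):
        raise ValueError("level must be 'metric', 'scalar', or 'strict'")
    srmr_cut = 0.030 if level == "metric" else 0.010
    return d_cfi <= -0.010 and (d_rmsea >= 0.015 or d_srmr >= srmr_cut)


# Hypothetical metric (model 0) vs. configural (model 1) comparison.
trd, df, p = sb_scaled_diff(t0=520.0, c0=1.20, d0=230, t1=480.0, c1=1.22, d1=210)
print(round(trd, 2), df, p < 0.05)                         # scaled test rejects...
print(chen_noninvariant(-0.004, 0.002, 0.006, "metric"))   # ...Chen criteria do not
```

The example reproduces the situation described in the text: the scaled χ² difference is significant, yet the changes in CFI, RMSEA, and SRMR are small enough that the more constrained model is retained.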

4. Discussion

In this manuscript, we present and statistically evaluate the survey we developed to provide reliable self-report data for measuring changes in students’ skills through participation in undergraduate research. We contend that our instrument addresses common limitations of extant survey tools. Rather than using Likert scales that offer unequal or coarse-grained categories, our response scales are defined by the level of competence students have for each skill. The definition of these scales was informed by faculty perceptions of student expertise in the research setting and by extensive interviews with students, mentors, and faculty across various science disciplines. With these scales, respondents can more easily identify their competencies with less potential for interpretive bias. In contrast to tools intended only for a single post-experience administration (e.g., URSSA), our instrument was developed as a pre/post measure to establish baseline information and monitor self-reported changes in students’ skills over the course of a single or multiple research experiences. The longitudinal measurement invariance test results also indicate that the survey items were interpreted in the same way before and after the URE. Hence, future studies can confidently use the USMORE-SS to measure students’ research skills before and after UREs and compare the scores legitimately. Finally, few other instruments have demonstrated their suitability for assessing student skills or growth resulting from participation in UREs. We employed a rigorous CFA-based analysis to examine the psychometric properties of the USMORE-SS, and the results presented here indicate that the instrument is suitable for estimating URE students’ self-rated abilities.

4.1. Limitations

The most significant limitation of this tool, as of most others, is that it relies on self-report to measure student gains. While we do have data from graduate student and faculty mentors, we believe these measures are also flawed, in that mentors lack prior knowledge of students’ skills, and such data are significantly more difficult to collect. As this research continues, we plan to compare student and mentor data to investigate any differences in ratings between these groups. Additionally, because our items measure students’ levels of confidence in given skills, students with the same skills may rate their comfort in teaching a skill to others differently depending on their levels of confidence.

4.2. Future Work

Successfully implementing educational practices requires the articulation of measurable goals, followed by rigorous evaluation that documents effectiveness and impact and guides future decision making [71]. With the increased international emphasis on UR in higher education (e.g., [1,5]), it is essential that reliable assessment tools be made available to faculty and program administrators to monitor student development. The USMORE-SS is a generalizable and validated instrument designed to offset common limitations of existing UR survey data, allowing for better estimates of student skill trajectories. While self-report indicators should be complemented with other, more direct measures where possible for a more comprehensive understanding of student outcomes [16,36], this study demonstrates the value of the USMORE-SS in providing insight into self-perceived gains, and the instrument can help inform faculty about how to “best” support participant learning.

Additionally, we plan a number of analyses to address the noted limitations and to extend this research. We are actively working on analyses that compare results from these survey items with an assessment tool we developed to provide direct evidence of the development of students’ experimental problem-solving skills over a research experience [42]. As mentioned, we also plan to pursue a detailed comparison of student ratings and mentor/faculty ratings. Future extensions of this work will involve specific analyses to identify the association between programmatic characteristics (e.g., mentorship strategies, day-to-day activities) and student gains.