This section is the technical heart of the paper. It investigates the definition of derivative for a real function and considers how it could be defined on more general spaces, such as the space of random variables. It explains how Lévy processes can be used to define "direction" and how the infinitesimal generator of a Lévy process relates to derivatives. This allows us to pinpoint the difference between the derivatives of an insurance process and of an asset process.

6.1. Defining the Derivative

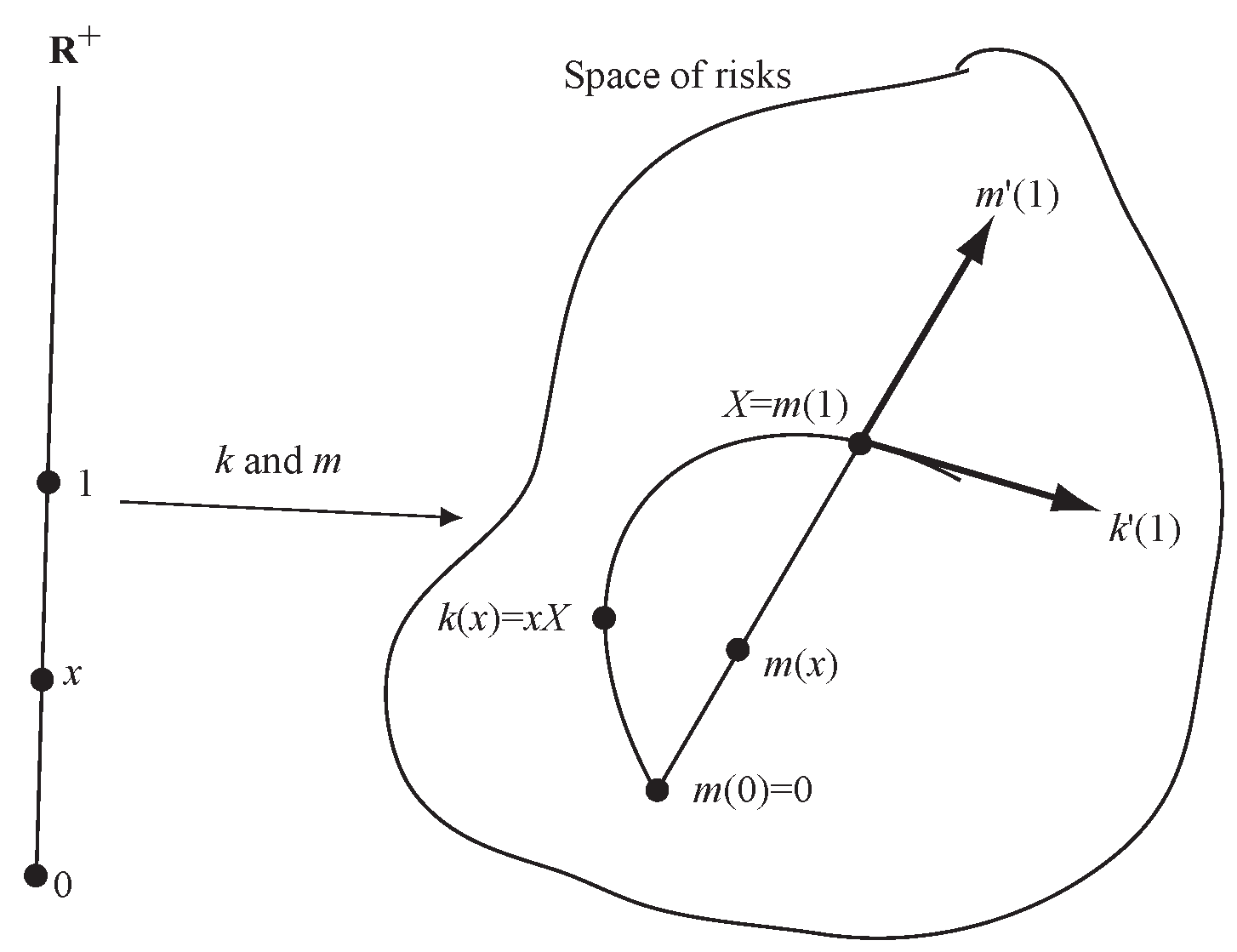

When $\rho:\mathbb{R}^n\to\mathbb{R}$ the meaning of $\partial\rho/\partial X_i$ is clear. However, we want to consider $\rho:\mathcal{L}\to\mathbb{R}$, where $\mathcal{L}$ is the more complicated space of random variables. We need to define the derivative mapping $D\rho_X$ as a real-valued linear map on tangent vectors or “directions” at $X\in\mathcal{L}$. Meyers’ example shows the asset/return model and an insurance growth model correspond to different directions.

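A direction in the space $\mathcal{L}$ of random variables can be identified with the derivative of a coordinate path $x:U\to\mathcal{L}$ where $U\subset\mathbb{R}$. Composing $\rho$ and $x$ results in a real valued function of a real variable $\rho\circ x:U\to\mathbb{R}$, $t\mapsto\rho(x(t))$, so standard calculus defines $d\rho(x(t))/dt$. The derivative of $\rho$ at $x(t)$ in the direction defined by the derivative $\dot{x}(t)$ of $x$ is given by

$$D\rho_{x(t)}(\dot{x}(t)) := \frac{d}{dt}\rho(x(t)). \tag{22}$$

The surprise of Equation (22) is that the two complex objects on the left combine to the single, well-understood object on the right. The exact definitions of the terms on the left will be discussed below.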

Section 4 introduced two important coordinates. The first is $k:\mathbb{R}_+\to\mathcal{L}$, $k(u)=uX$ for some fixed random variable $X\in\mathcal{L}$. It is suitable for modeling assets: $u$ represents position size and $X$ represents the asset return. The second coordinate is $m:\mathbb{R}_+\to\mathcal{L}$, $m(u)=X_u$, where $X_u$ is a compound Poisson distribution with frequency mean $u$ and severity component $Z$. It is suitable for modeling aggregate losses from an insurance portfolio. (There is a third potential coordinate path $u\mapsto B_u$ where $B_u$ is a Brownian motion, but because it always takes positive and negative values it is of less interest for modeling losses.)

An issue with the asset coordinate in an insurance context is the correct interpretation of $uX$. For $0\le u\le 1$, $uX$ can be interpreted as a quota share of total losses, or as a coinsurance provision. However, for $u<0$ or $u>1$, $uX$ is generally meaningless due to policy provisions, laws on over-insurance, and the inability to short insurance. The natural way to interpret a doubling in volume (“$2X$”) is as $X_1+X_2$ where $X$, $X_1$ and $X_2$ are identically distributed random variables, rather than as a policy paying \$2 per \$1 of loss. This interpretation is consistent with doubling volume since $E(X_1+X_2)=2E(X)$. Clearly $2X$ has a different distribution to $X_1+X_2$ unless $X_1$ and $X_2$ are perfectly correlated. The insurance coordinate has exactly this property: $m(2)$ is the sum of two independent copies of $m(1)$ because of the additive property of the Poisson distribution.
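The contrast between the two readings of a doubled volume can be illustrated with a small exact calculation. The sketch below is only an illustration: the three-point loss distribution and all names in it are hypothetical, and the independent case is used for the sum of two copies.

```python
import numpy as np

# Hypothetical loss distribution for X on the integer points 0, 1, 2.
support = np.array([0, 1, 2])
pmf_x = np.array([0.5, 0.3, 0.2])

def mean_var(values, probs):
    """Mean and variance of a discrete distribution."""
    mean = float(np.dot(values, probs))
    var = float(np.dot((values - mean) ** 2, probs))
    return mean, var

# Asset-style doubling: the single variable 2X takes the values 0, 2, 4.
mean_2x, var_2x = mean_var(2 * support, pmf_x)

# Insurance-style doubling: X1 + X2 with X1, X2 independent copies of X.
# The pmf of a sum of independent integer variables is the convolution.
pmf_sum = np.convolve(pmf_x, pmf_x)
mean_sum, var_sum = mean_var(np.arange(len(pmf_sum)), pmf_sum)

print(mean_2x, mean_sum)  # the two means agree
print(var_2x, var_sum)    # the variance of 2X is twice that of X1 + X2
```

Both readings double the expected loss, but the variance is quadrupled for the scaled variable and only doubled for the independent sum, so the two distributions have different shapes.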

To avoid misinterpreting $uX$ it is safer to regard insurance risks as probability measures (distributions) $\mu$ on $\mathbb{R}$. The measure $\mu$ corresponds to a random variable X with distribution $\Pr(X<x)=\mu(-\infty,x)$. Now there is no natural way to interpret $2\mu$. Identify $\mathcal{L}$ with $\mathcal{M}(\mathbb{R})$, the set of probability measures on $\mathbb{R}$. We can combine two elements of $\mathcal{M}(\mathbb{R})$ using convolution: the distribution of the sum of the corresponding random variables. Since the distribution of $X+Y$ is the same as the distribution of $Y+X$, the order of convolution does not matter. Now in our insurance interpretation, $2X$ corresponds to $\mu\star\mu$, where ⋆ represents convolution, and we are not led astray.

We still have to define “directions” in $\mathcal{L}$ and $\mathcal{M}(\mathbb{R})$. Directions should correspond to the derivatives of curves. The simplest curves are straight lines. A straight line through the origin is called a ray.

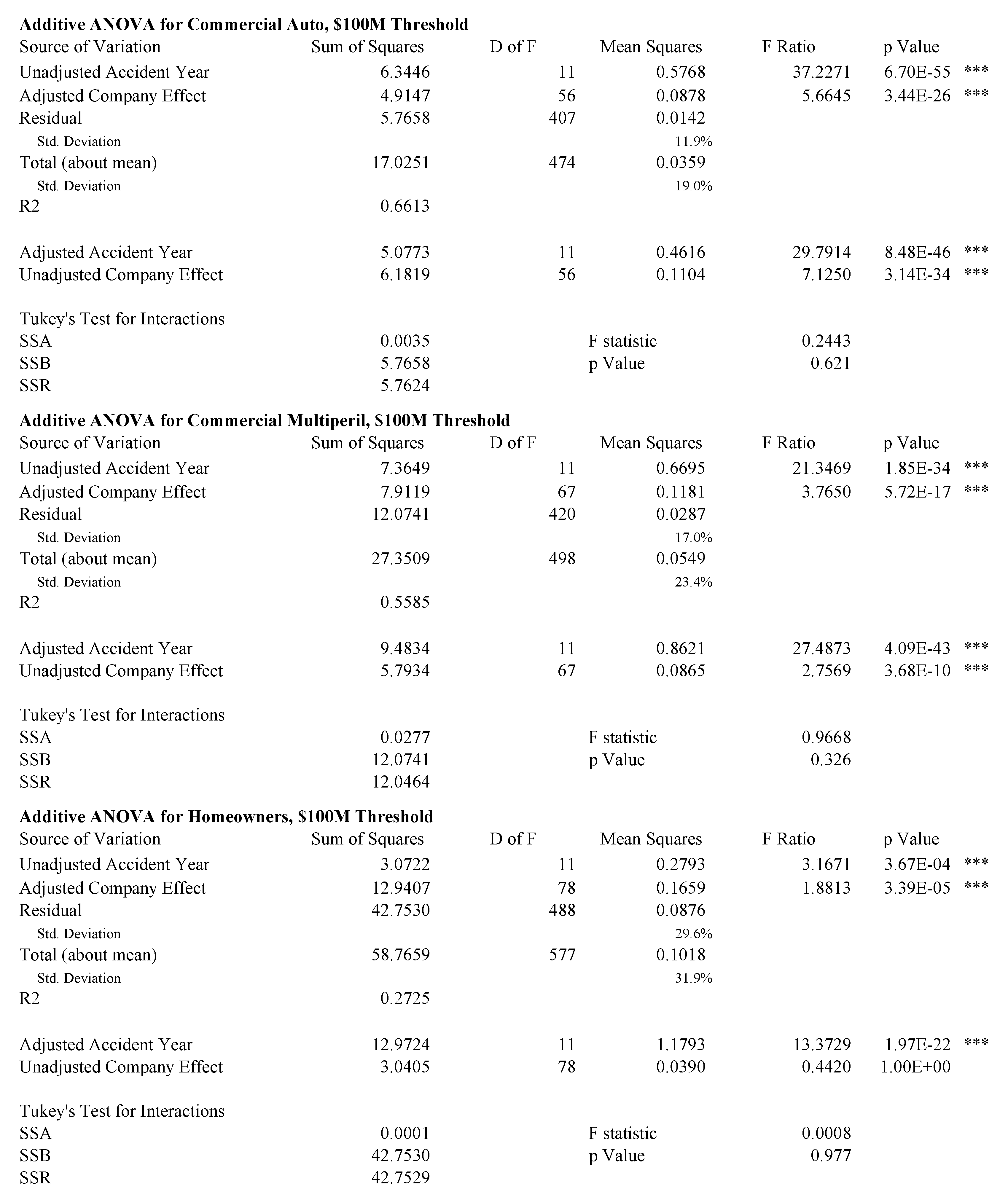

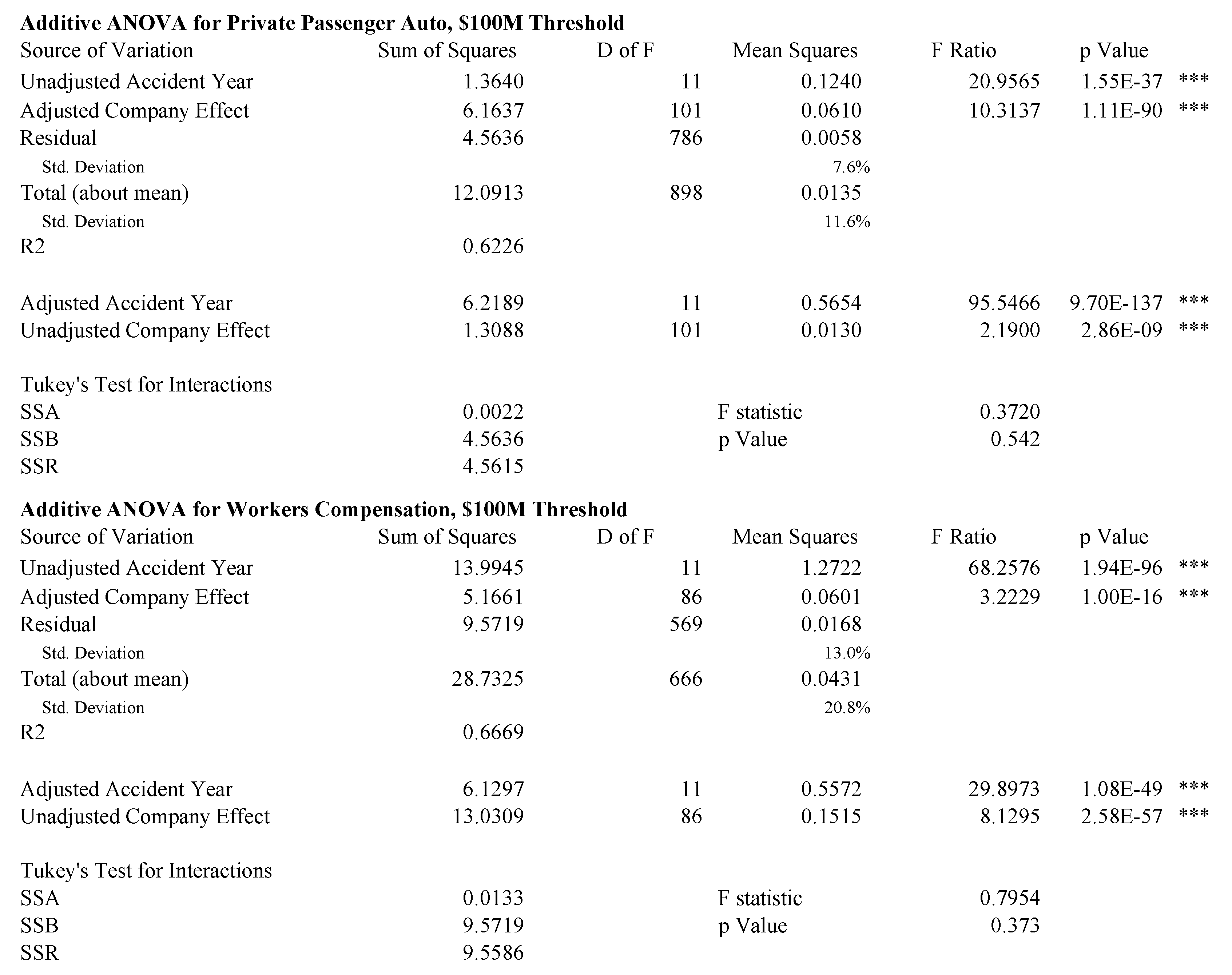

Table 2 shows several possible characterizations of a ray in $\mathbb{R}^n$, each of which uses a different aspect of the rich mathematical structure of $\mathbb{R}^n$, and which could be used as characterizations in $\mathcal{M}(\mathbb{R})$.

The first two use properties of $\mathbb{R}^n$ that require putting a differential structure on $\mathcal{L}$, which is very complicated. The third corresponds to the asset volume/return model and uses the identification of the set of possible portfolios with the $\mathbb{R}$ vector space $\mathbb{R}^n$. This leaves the fourth approach: a ray is characterized by the simple relationship $\alpha(s+t)=\alpha(s)+\alpha(t)$. This definition only requires the ability to add for the range space, which we have on $\mathcal{L}$. It is the definition adopted in Stroock (2003).

Therefore rays in $\mathcal{L}$ should correspond to families of random variables satisfying $X_{s+t}=X_s+X_t$ (or, equivalently, in $\mathcal{M}(\mathbb{R})$ to families of measures satisfying $\mu_{s+t}=\mu_s\star\mu_t$), i.e., to Lévy processes. Since $X_0=X_0+X_0$, a ray must start at 0, the random variable taking the value 0 with probability 1. Straight lines correspond to translations of rays: a straight line passing through the point $Y\in\mathcal{L}$ is a family $Y+X_t$ where $X_t$ is a ray (resp. passing through $\nu\in\mathcal{M}(\mathbb{R})$ is $\nu\star\mu_t$ where $\mu_t$ is a ray). Directions in $\mathcal{L}$ are determined by rays. By providing a basis of directions in $\mathcal{L}$, Lévy processes provide the insurance analog of individual asset return variables.
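The additive relation that defines a ray can be checked directly for the simplest example, the Poisson process. The following sketch is an illustration under stated assumptions: the parameters `s`, `t` and the truncation point `size` are arbitrary choices, and the helper `poisson_pmf` is a hypothetical name.

```python
import math
import numpy as np

def poisson_pmf(mean, size):
    """First `size` probabilities of a Poisson distribution with the given mean."""
    return np.array([math.exp(-mean) * mean ** k / math.factorial(k)
                     for k in range(size)])

size = 30          # number of support points 0, 1, ..., size - 1 that are compared
s, t = 0.7, 1.8    # two arbitrary points along the ray

mu_s = poisson_pmf(s, size)
mu_t = poisson_pmf(t, size)

# Convolution of measures = distribution of the sum of independent variables.
# The first `size` terms of the truncated convolution are exact.
conv = np.convolve(mu_s, mu_t)[:size]
mu_st = poisson_pmf(s + t, size)
max_gap = float(np.max(np.abs(conv - mu_st)))

# The ray starts at the point mass at 0.
mass_at_zero = poisson_pmf(0.0, size)

print(max_gap)           # of the order of floating point rounding error
print(mass_at_zero[:3])  # all mass sits on the value 0
```

The convolution of the laws at `s` and `t` reproduces the law at `s + t`, and the family starts at the degenerate distribution at 0, which are the two properties used above to characterize a ray.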

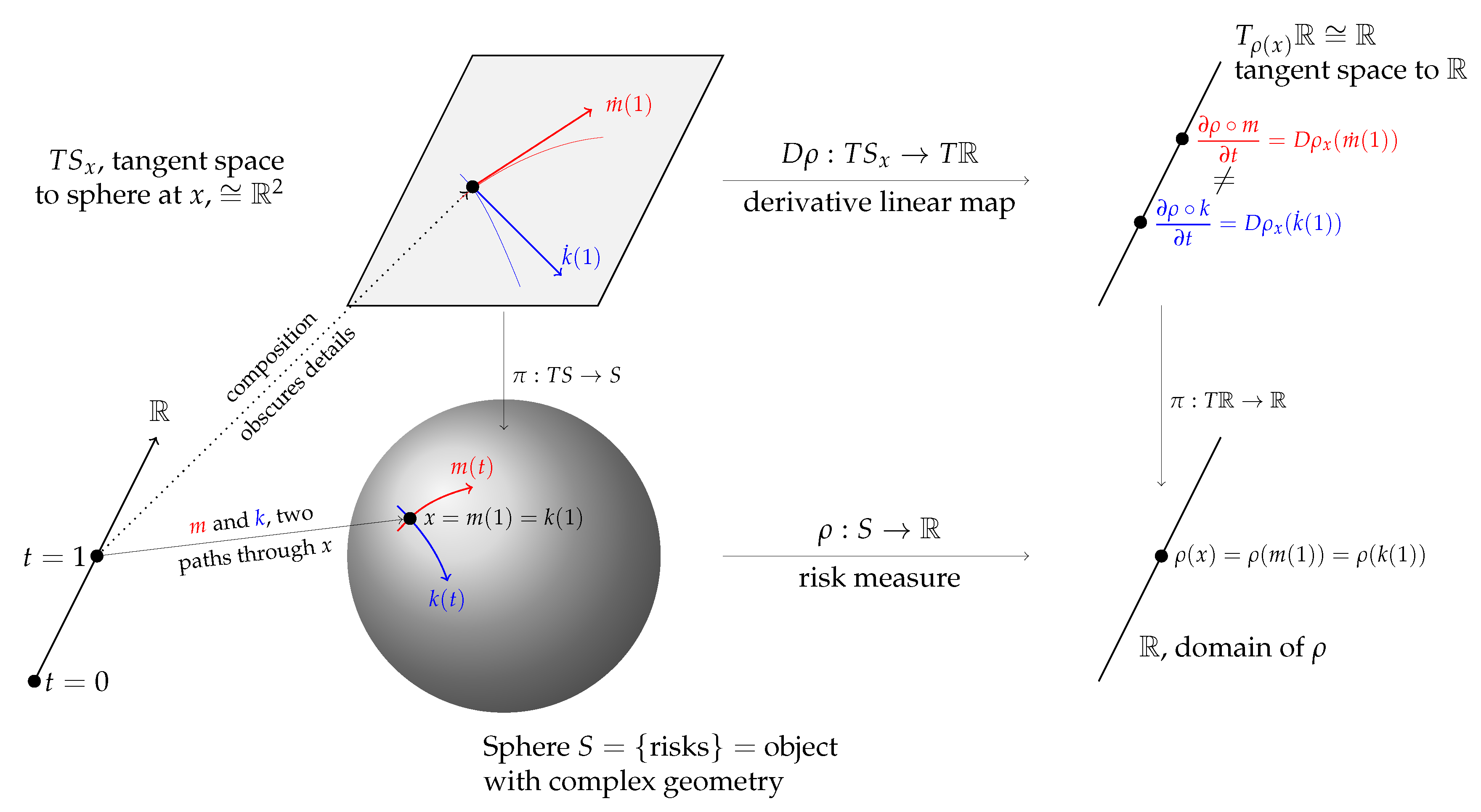

We now think about derivatives in a more abstract way. Working with functions on $\mathbb{R}^n$ obscures some of the complication involved in working on more general spaces (like $\mathcal{L}$) because the set of directions at any point in $\mathbb{R}^n$ can naturally be identified with a point in $\mathbb{R}^n$. In general this is not the case; the directions live in a different space. A familiar non-trivial example of this is the sphere in $\mathbb{R}^3$. At each point on the sphere the set of directions, or tangent vectors, is a plane. The collection of different planes, together with the original sphere, can be combined to give a new object, called the tangent bundle over the sphere. A point in the tangent bundle consists of a point on the sphere and a direction, or tangent vector, at that point.

There are several different ways to define the tangent bundle. For the sphere, an easy method is to set up a family of local charts, where a chart is a differentiable bijection from a subset of $\mathbb{R}^2$ to a neighborhood of each point. Charts must be defined at each point on the sphere in such a way that they overlap consistently, producing an atlas, or differentiable structure, on the sphere. Charts move questions of tangency and direction back to functions on $\mathbb{R}^2$ where they are well understood. This is called the coordinate approach.

Another way of defining the tangent bundle is to use curves, or coordinate paths, to define tangent vectors: a direction becomes the derivative of a curve. The tangent space can be defined as the set of curves through a point, with two curves identified if they are tangent (agree to degree 1). In the next section we will apply this approach to $\mathcal{L}$. A good general reference on the construction of the tangent bundle is Abraham et al. (1988).

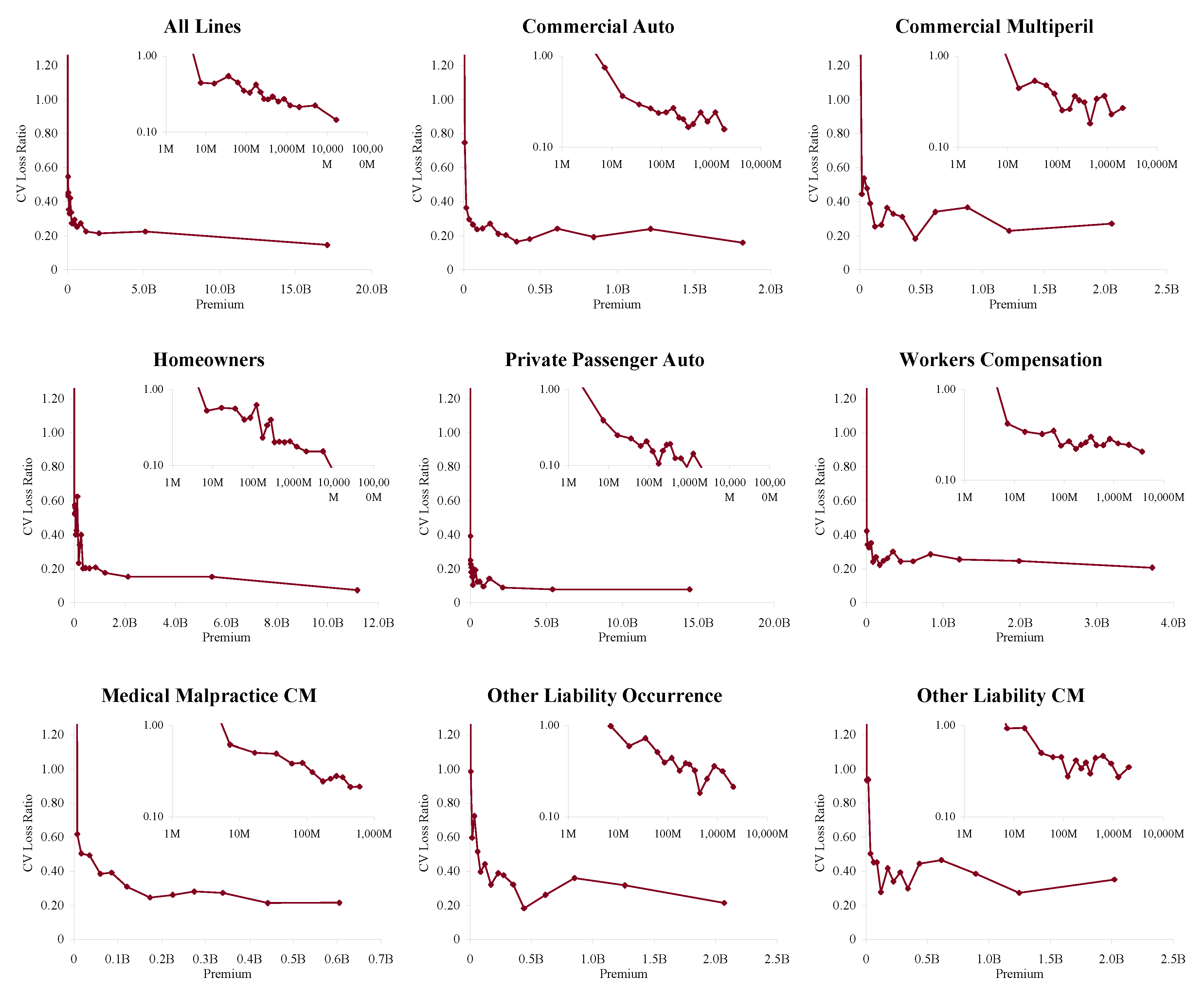

Figure 2 is an illustrative schematic. The sphere S is used as a proxy for $\mathcal{L}$, an object with more complex geometry than flat Euclidean space. The two paths m and k are shown as the red and blue lines, passing through the same point (distribution) x on the sphere at $t=1$. The red line is part of a great circle geodesic (the analog of a straight line on a sphere), whereas the blue line is not. Above x is the tangent plane (isomorphic to $\mathbb{R}^2$) to the sphere at x, $TS_x$; $\pi$ is the projection from the tangent bundle $TS$ to S. The derivative of $\rho$ at x is a linear map $D\rho_x:TS_x\to T\mathbb{R}_{\rho(x)}$. For Euclidean spaces we can identify the tangent bundle with the space, so $T\mathbb{R}_{\rho(x)}=\mathbb{R}$. Although $m(1)=k(1)=x$ they have different derivatives (define different vectors in $TS_x$), $\dot{m}(1)\neq\dot{k}(1)$ at $t=1$.

The derivative of a risk measure $\rho$, $\partial\rho/\partial X$, is the evaluation of the linear differential $D\rho$ on a tangent vector in the direction X. Meyers’ embedding m corresponds to $t\mapsto X_t$ whereas Kalkbrener’s corresponds to $t\mapsto tX$. As demonstrated in Section 4 these derivatives are not the same, just as the schematic leads us to expect, because the direction $\dot{m}(1)$ is not the same as the direction $\dot{k}(1)$.

The difference between $\dot{k}(1)$ and $\dot{m}(1)$ is a measure of the diversification benefit given by m compared to k. The embedding k maps $u\mapsto uX$ and so offers no diversification to an insurer. Again, this is correct for an asset portfolio (you don’t diversify a portfolio by buying more of the same stock) but it is not true for an insurance portfolio. We will describe the analog of $TS_x$ next.

6.2. Directions in the Space of Actuarial Random Variables

We now show how Lévy processes provide a description of “directions” in the space $\mathcal{L}$ of random variables. The analysis combines three threads:

1. The notion that directions, or tangent vectors, live in a separate space called the tangent bundle.
2. The identification of tangent vectors as derivatives of curves.
3. The idea that Lévy processes, characterized by the additive relation $X_{s+t}=X_s+X_t$, provide the appropriate analog of rays to use as a basis for insurance risks.

The program is to compute the derivative of the curve $t\mapsto X_t\in\mathcal{L}$ defined by a Lévy process family of random variables (or $t\mapsto\mu_t\in\mathcal{M}(\mathbb{R})$ defined by an additive family of probability distributions on $\mathbb{R}$). The ideas presented here are part of a general theory of Markov processes. The presentation follows the beautiful book by Stroock (2003). We begin by describing a finite sample space version of $\mathcal{M}(\mathbb{R})$ which illustrates the difficulties involved in regarding it as a differentiable manifold.

To see that the construction of tangent directions in $\mathcal{M}(\mathbb{R})$ may not be trivial, consider the space M of probability measures on $\mathbb{Z}/n$, the integers $\{0,1,\dots,n-1\}$ with + given by addition modulo n. An element $\mu\in M$ can be identified with an n-tuple of non-negative real numbers $p_0,\dots,p_{n-1}$ satisfying $\sum_i p_i=1$. Thus elements of M are in one to one correspondence with elements of the $n-1$ dimensional simplex $\Delta_{n-1}=\{(p_0,\dots,p_{n-1}) : \sum_i p_i=1,\ p_i\ge 0\}\subset\mathbb{R}^n$. $\Delta_{n-1}$ inherits a differentiable structure from $\mathbb{R}^n$ and we already know how to think about directions and tangent vectors in Euclidean space. However, even thinking about $\Delta_2$ shows M is not an easy space to work with. $\Delta_2$ is a plane triangle; it has a boundary of three edges and each edge has a boundary of two vertices. The tangent spaces at each of these boundary points are different, and different again from the tangent space in the interior of $\Delta_2$. As n increases the complexity of the boundary increases and, to compound the problem, every point in the interior gets closer to the boundary. For measures on $\mathbb{R}$ the boundary is dense.
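The finite model can be made concrete for $n=3$. The sketch below is illustrative only: the function names `cyclic_convolve` and `in_simplex` and the particular probability vectors are hypothetical. It represents measures on the integers modulo 3 as points of the triangle and combines them by convolution with addition modulo n.

```python
import numpy as np

def cyclic_convolve(p, q):
    """Convolution of two measures on Z/n, where + is addition modulo n."""
    n = len(p)
    out = np.zeros(n)
    for i in range(n):
        for j in range(n):
            out[(i + j) % n] += p[i] * q[j]
    return out

def in_simplex(p, tol=1e-12):
    """True if p is a probability vector, i.e. a point of the simplex."""
    return bool(np.all(p >= -tol) and abs(p.sum() - 1.0) < tol)

interior = np.array([0.2, 0.5, 0.3])   # a point in the interior of the triangle
edge = np.array([0.4, 0.6, 0.0])       # a point on an edge (one coordinate is 0)
vertex = np.array([1.0, 0.0, 0.0])     # the point mass at 0, a vertex

product = cyclic_convolve(interior, edge)
print(product, in_simplex(product))        # convolution stays inside the simplex
print(cyclic_convolve(interior, vertex))   # the point mass at 0 is the identity
```

Interior points, edge points and vertices are all legitimate measures, which is why the boundary cannot simply be ignored when describing tangent directions.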

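Let $\delta_x\in\mathcal{M}(\mathbb{R})$ be the measure giving probability 1 to $x\in\mathbb{R}$. We will describe the space of tangent vectors to $\mathcal{M}(\mathbb{R})$ at $\delta_0$. By definition, all Lévy processes $X_t$ have distribution $\delta_0$ at $t=0$. Measures $\mu\in\mathcal{M}(\mathbb{R})$ are defined by their action on functions f on $\mathbb{R}$. Let $\langle f,\mu\rangle=\int_{\mathbb{R}}f(x)\,\mu(dx)=E(f(X))$, where X has distribution $\mu$. In view of the fundamental theorem of calculus, the derivative $\dot\mu_t$ of $\mu_t$ should satisfy

$$\langle f,\mu_t\rangle-\langle f,\mu_0\rangle=\int_0^t\langle f,\dot\mu_\tau\rangle\,d\tau \tag{23}$$

with $\dot\mu_t$ a linear functional acting on f, i.e., $\langle f,\dot\mu_t\rangle\in\mathbb{R}$ and $f\mapsto\langle f,\dot\mu_t\rangle$ is linear in f. Converting Equation (23) to its differential form suggests that

$$\langle f,\dot\mu_0\rangle=\lim_{t\downarrow 0}\frac{\langle f,\mu_t\rangle-\langle f,\mu_0\rangle}{t}=\lim_{t\downarrow 0}\frac{E(f(X_t))-E(f(X_0))}{t} \tag{25}$$

where $X_t$ has distribution $\mu_t$.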

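We now consider how Equation (25) works when $X_t$ is related to a Brownian motion or a compound Poisson, the two building block Lévy processes. Suppose first that $X_t$ is a Brownian motion with drift $\gamma$ and standard deviation $\sigma$, so $X_t=\gamma t+\sigma B_t$ where $B_t$ is a standard Brownian motion. Let f be a function with a Taylor’s expansion about 0. Then

$$\langle f,\dot\mu_0\rangle=\lim_{t\downarrow 0}\frac{1}{t}\left[E\left(f(0)+X_tf'(0)+\frac{X_t^2}{2}f''(0)+o(X_t^2)\right)-f(0)\right]=\lim_{t\downarrow 0}\frac{1}{t}\left[\gamma tf'(0)+\frac{\sigma^2t}{2}f''(0)+o(t)\right]$$

because $E(X_t)=\gamma t$ and $E(B_t^2)=t$ and so $E(X_t^2)=\gamma^2t^2+\sigma^2t$ and $E(o(X_t^2))=o(t)$. Thus $\dot\mu_0$ acts as a second order differential operator evaluated at $x=0$ (because we assume $X_0=0$):

$$\langle f,\dot\mu_0\rangle=\gamma f'(0)+\frac{\sigma^2}{2}f''(0).$$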
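The Brownian motion limit can be checked numerically. The sketch below is an illustration under stated assumptions: the drift `gamma`, the volatility `sigma` and the test function `f` are hypothetical choices, and the expectation is computed by Gauss-Hermite quadrature rather than by simulation. It compares the difference quotient $(E(f(X_t))-f(0))/t$ for a Brownian motion with drift against the second order operator (drift times $f'(0)$ plus half the variance rate times $f''(0)$).

```python
import numpy as np
from numpy.polynomial.hermite_e import hermegauss

gamma, sigma = 0.3, 0.8            # hypothetical drift and standard deviation
nodes, weights = hermegauss(40)    # quadrature rule for the weight exp(-z^2 / 2)
weights = weights / np.sqrt(2.0 * np.pi)   # normalise to the N(0, 1) density

def f(x):
    return np.cos(x) + x           # f(0) = 1, f'(0) = 1, f''(0) = -1

def expected_f(t):
    """E f(X_t) for X_t = gamma * t + sigma * B_t, where B_t ~ N(0, t)."""
    return float(np.dot(weights, f(gamma * t + sigma * np.sqrt(t) * nodes)))

def difference_quotient(t):
    return (expected_f(t) - f(0.0)) / t

# Second order operator evaluated at 0: gamma * f'(0) + sigma^2 / 2 * f''(0).
generator_at_zero = gamma * 1.0 + 0.5 * sigma ** 2 * (-1.0)

for t in (1e-1, 1e-2, 1e-3, 1e-4):
    print(t, difference_quotient(t), generator_at_zero)
```

As `t` decreases the difference quotient approaches the value of the operator, with an error of order `t`.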

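Next suppose that $X_t$ is a compound Poisson distribution with Lévy measure $\nu$, $\nu(\mathbb{R})<\infty$ and $\lambda=\nu(\mathbb{R})$. Let J be a variable with distribution $\nu/\lambda$, so, in actuarial terms, J is the severity. The number of jumps of $X_t$ follows a Poisson distribution with mean $\lambda t$. If t is very small then the axioms characterizing the Poisson distribution imply that in the time interval $[0,t]$ there is a single jump with probability $\lambda t$ and no jump with probability $1-\lambda t$. Conditioning on the occurrence of a jump, $E(f(X_t))=(1-\lambda t)f(0)+\lambda tE(f(J))$ and so

$$\langle f,\dot\mu_0\rangle=\lim_{t\downarrow 0}\frac{E(f(X_t))-f(0)}{t}=\lambda\big(E(f(J))-f(0)\big)=\int_{\mathbb{R}}\big(f(y)-f(0)\big)\,\nu(dy). \tag{33}$$

This analysis side-steps some technicalities by assuming that $\nu(\mathbb{R})<\infty$. For both the Brownian motion and the compound Poisson if we are interested in tangent vectors at $\delta_x$ for $x\neq 0$ then we replace 0 with x because $X_0=x$. Thus Equation (33) becomes

$$\langle f,\dot\mu_0\rangle=\int_{\mathbb{R}}\big(f(x+y)-f(x)\big)\,\nu(dy)$$

for example. Combining these two results makes the following theorem plausible.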
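Before turning to the theorem, the compound Poisson limit can also be checked numerically. The sketch below is an illustration under stated assumptions: the jump intensity `lam`, the integer-valued severity `sev` and the test function `f` are hypothetical. It computes $E(f(X_t))$ exactly (up to truncation of the claim count) by conditioning on the number of jumps, and compares the difference quotient with the jump intensity times $E(f(J))-f(0)$.

```python
import math
import numpy as np

lam = 2.0                               # hypothetical jump intensity, nu(R)
sev = np.array([0.0, 0.5, 0.3, 0.2])    # hypothetical severity pmf on 0, 1, 2, 3

def f(x):
    return np.sqrt(1.0 + x)             # a smooth test function with f(0) = 1

def expected_f(t, max_jumps=12):
    """E f(X_t), conditioning on the Poisson number of jumps in [0, t]."""
    total = 0.0
    n_fold = np.array([1.0])            # pmf of J_1 + ... + J_n, starting at n = 0
    for n in range(max_jumps + 1):
        p_n = math.exp(-lam * t) * (lam * t) ** n / math.factorial(n)
        total += p_n * float(np.dot(n_fold, f(np.arange(len(n_fold)))))
        n_fold = np.convolve(n_fold, sev)
    return total

def difference_quotient(t):
    return (expected_f(t) - float(f(0.0))) / t

# lam * (E f(J) - f(0)), the integral of f(y) - f(0) against the Levy measure.
limit = lam * (float(np.dot(sev, f(np.arange(len(sev))))) - float(f(0.0)))

for t in (1e-1, 1e-2, 1e-3, 1e-4):
    print(t, difference_quotient(t), limit)
```

The truncation at `max_jumps` claims is harmless here because the expected claim count `lam * t` is small for every `t` used.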

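Theorem 2 (Stroock (2003) Thm 2.1.11). There is a one-to-one correspondence between Lévy triples and rays (continuous, additive maps) $\mu:[0,\infty)\to\mathcal{M}(\mathbb{R})$. The Lévy triple $(\sigma,\nu,\gamma)$ corresponds to the infinitely divisible map $t\mapsto\mu_t$ given by the Lévy process $X_t$ with the same Lévy triple. The map $t\mapsto\mu_t$ is differentiable and

$$\langle f,\dot\mu_t\rangle=\langle Lf,\mu_t\rangle$$

where L is a pseudo differential operator, see Applebaum (2004); Jacob (2001, 2002, 2005), given by

$$Lf(x)=\gamma f'(x)+\frac{\sigma^2}{2}f''(x)+\int_{\mathbb{R}}\left(f(x+y)-f(x)-\frac{y}{1+|y|^2}f'(x)\right)\nu(dy).$$

If $t\mapsto\mu_t\in\mathcal{M}(\mathbb{R})$ is a differentiable curve and $\mu_0=\delta_x$ for some $x\in\mathbb{R}$ then there exists a unique Lévy triple $(\sigma,\nu,\gamma)$ such that $\dot\mu_0$ is the linear operator acting on f by

$$\langle f,\dot\mu_0\rangle=Lf(x).$$

Thus $T_{\delta_x}\mathcal{M}(\mathbb{R})$, the tangent space to $\mathcal{M}(\mathbb{R})$ at $\delta_x$, can be identified with the cone of linear functionals of the form $f\mapsto Lf(x)$ where $(\sigma,\nu,\gamma)$ is a Lévy triple.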

Just as in the Lévy-Khintchine theorem, the extra term in the integral is needed for technical convergence reasons when there is an infinite number of very small jumps. Note that $\langle f,\dot\mu_0\rangle$ is a number, $Lf$ is a function and its value at x, $Lf(x)$, is a number. The connection between $\dot\mu_0$ and x is that $\mu_0=\delta_x$ is the measure concentrated at x.

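At this point we have described tangent vectors to $\mathcal{M}(\mathbb{R})$ at degenerate distributions $\delta_x$. To properly illustrate Figure 1 and Figure 2 we need a tangent vector at a more general $\mu$. Again, following (Stroock 2003, sct. 2.11.4), define a tangent vector to $\mathcal{M}(\mathbb{R})$ at a general $\mu$ to be a linear functional of the form

$$f\mapsto\int_{\mathbb{R}}L_xf(x)\,\mu(dx)$$

where $L_x$ is a continuous family of operators L determined by x. We will restrict attention to simpler tangent vectors where $L_x$ does not vary with x. If $X_t$ is the Lévy process corresponding to the triple $(\sigma,\nu,\gamma)$ and f is bounded and has continuous, bounded derivatives, then, by independent and stationary increments

$$\langle f,\dot\mu_t\rangle=\lim_{s\downarrow 0}\frac{E\big(f(X_{t+s})\big)-E\big(f(X_t)\big)}{s}=E\left(\lim_{s\downarrow 0}\frac{E\big(f(X_t+X'_s)\mid X_t\big)-f(X_t)}{s}\right)=E\big(Lf(X_t)\big)=\langle Lf,\mu_t\rangle$$

by dominated convergence, where $X'_s$ is a copy of $X_s$ independent of $X_t$. The tangent vector is an average of the direction at all the locations that $X_t$ can take.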
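The averaging statement can be illustrated with the simplest jump process. The sketch below is an illustration under stated assumptions: it uses a Poisson process with hypothetical intensity `lam`, whose operator is the intensity times $f(x+1)-f(x)$ (jumps of size 1 only), and an arbitrary bounded test function. It compares a finite difference estimate of the time derivative of $E(f(N_t))$ with the average of the operator over the law of $N_t$.

```python
import math
import numpy as np

lam = 1.5                     # hypothetical Poisson intensity
size = 80                     # truncation of the support 0, 1, 2, ...
ks = np.arange(size)

def law(t):
    """Law of a Poisson process N_t with intensity lam on 0..size-1."""
    return np.array([math.exp(-lam * t) * (lam * t) ** k / math.factorial(k)
                     for k in range(size)])

def f(x):
    return np.arctan(x)       # bounded, with bounded derivatives

def Lf(x):
    """Operator of the Poisson process: jumps of size 1 at rate lam."""
    return lam * (f(x + 1.0) - f(x))

t, h = 2.0, 1e-5

# Left side: time derivative of <f, mu_t>, by a central finite difference.
time_derivative = float(np.dot(f(ks), law(t + h) - law(t - h))) / (2.0 * h)

# Right side: <Lf, mu_t>, the operator averaged over the locations of N_t.
averaged_operator = float(np.dot(Lf(ks), law(t)))

print(time_derivative, averaged_operator)
```

The two numbers agree up to the finite difference error, which is the sense in which the tangent vector at a general distribution averages the direction over all locations the process can occupy.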

6.4. Application to Insurance Risk Models IM1-4 and Asset Risk Model AM1

We now compute the difference between the directions implied by each of IM1-4 and AM1 to quantify the difference between

and

in

Figure 1 and

Figure 2. In order to focus on realistic insurance loss models we will assume

and

. Assume the Lévy triple for the subordinator

Z is

. Also assume

,

, and that

C,

X and

Z are all independent.

For each model we can consider the time derivative or the volume derivative. There are obvious symmetries between these two for IM1 and IM3. For IM2 the temporal derivative is the same as the volumetric derivative of IM3 with .

Theorem 2 gives the direction for IM1 as corresponding to the operator Equation (

37) multiplied by

x or

t as appropriate. If we are interested in the temporal derivative then losses evolve according to the process

, which has Lévy triple

. Therefore, if

,

, then the time direction is given by the operator

The temporal derivative of IM2,

, is trickier. Let

K have distribution

, the severity of

Z. For small

t,

with probability

and

with probability

. Thus

where

is the distribution of

. This has the same form as IM1, except the underlying Lévy measure

has been replaced with the mixture

See (Sato 1999, chp. 6, Thm 30.1) for more details and for the case where X or Z includes a deterministic drift.

For IM3, , the direction is the same as for model IM1. This is not a surprise because the effect of C is to select, once and for all, a random speed along the ray; it does not affect its direction. By comparison, in model IM2 the “speed” is proceeding by jumps, but again, the direction is fixed. If then the derivative would be multiplied by .

Finally the volumetric derivative of the asset model is simply

Thus the derivative is the same as for a deterministic drift Lévy process. This should be expected since once

is known it is fixed regardless of volume

x. Comparing with the derivatives for IM1-4 expresses the different directions represented schematically in

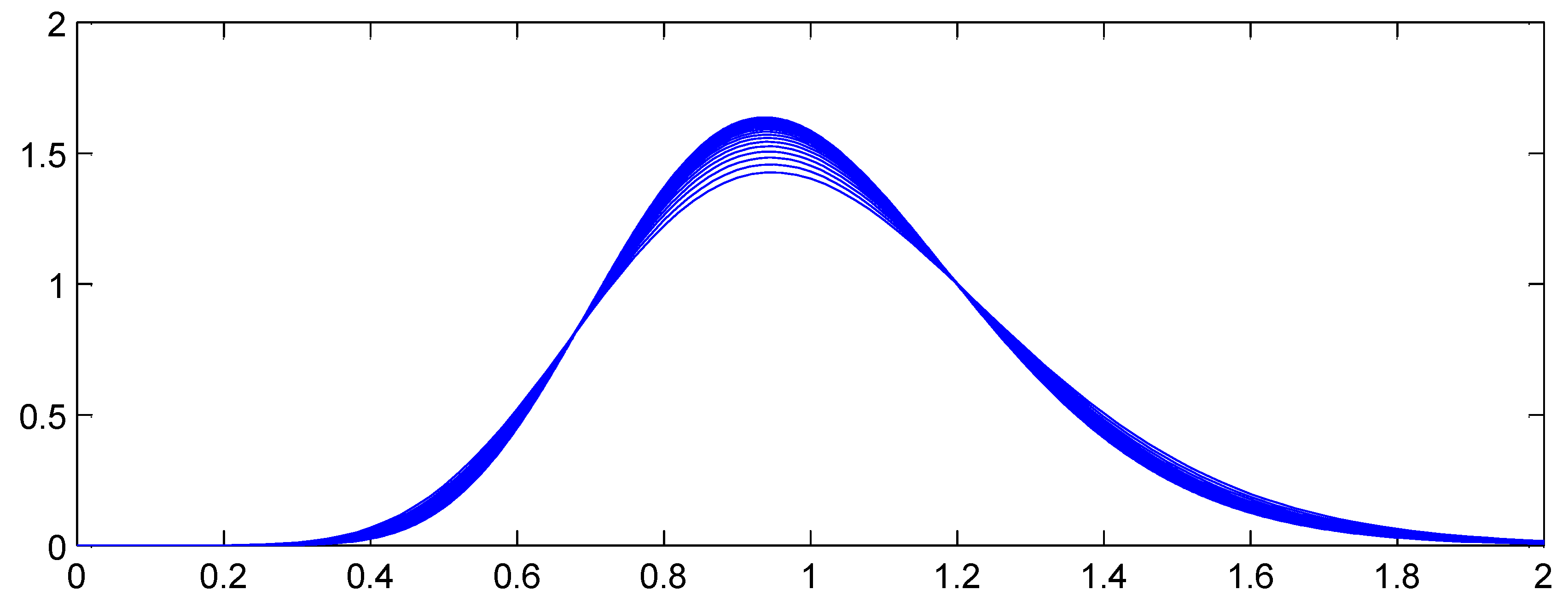

Figure 2 analytically. The result is also reasonable in light of the different shapes of

and

as

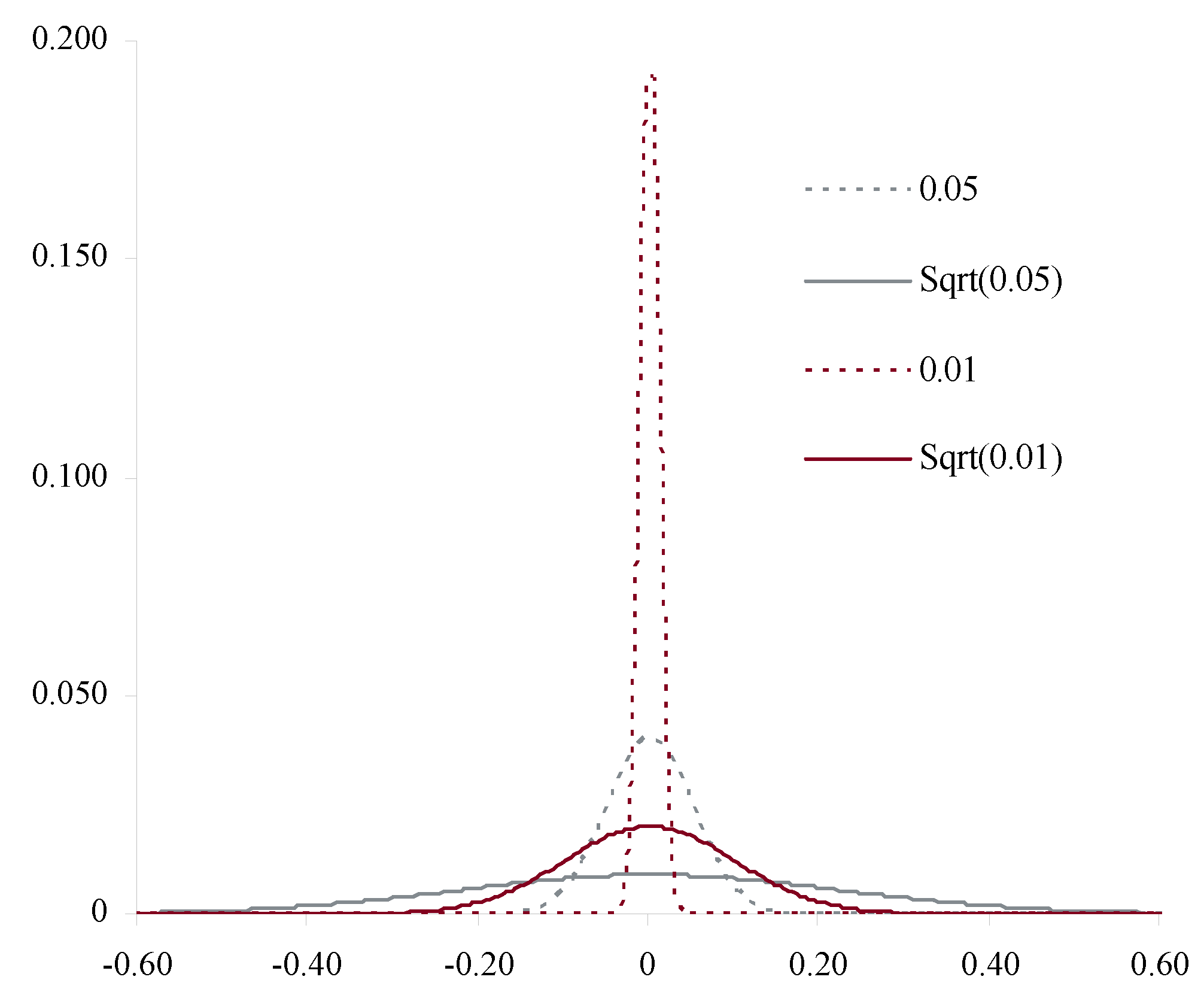

, for a random variable

Z with mean and standard deviation equal to 1. For very small

t,

is essentially the same as a deterministic

, whereas

has a standard deviation

which is much larger than the mean

t. Its coefficient of variation

as

. The relative uncertainty in

grows as

whereas for

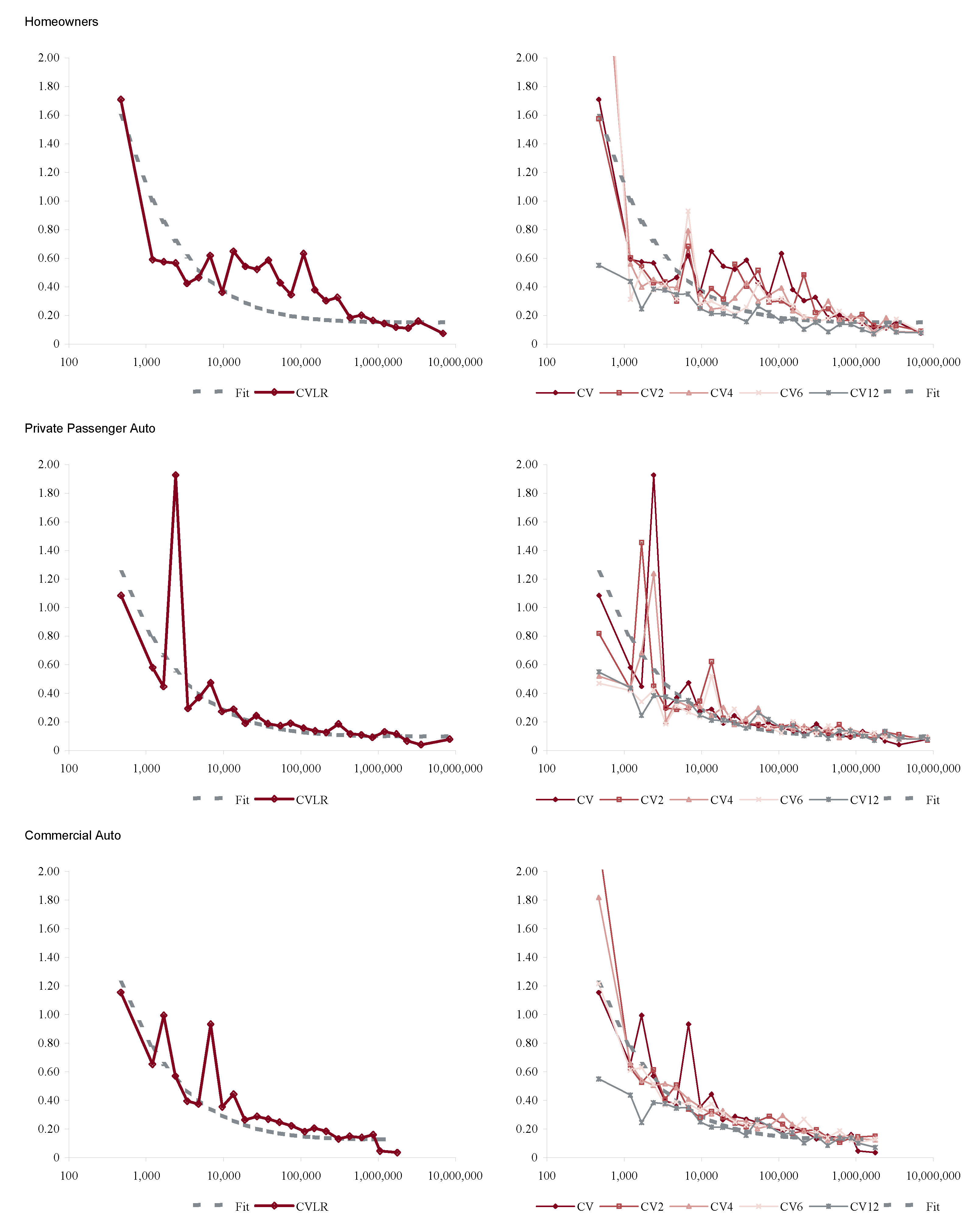

it disappears; see

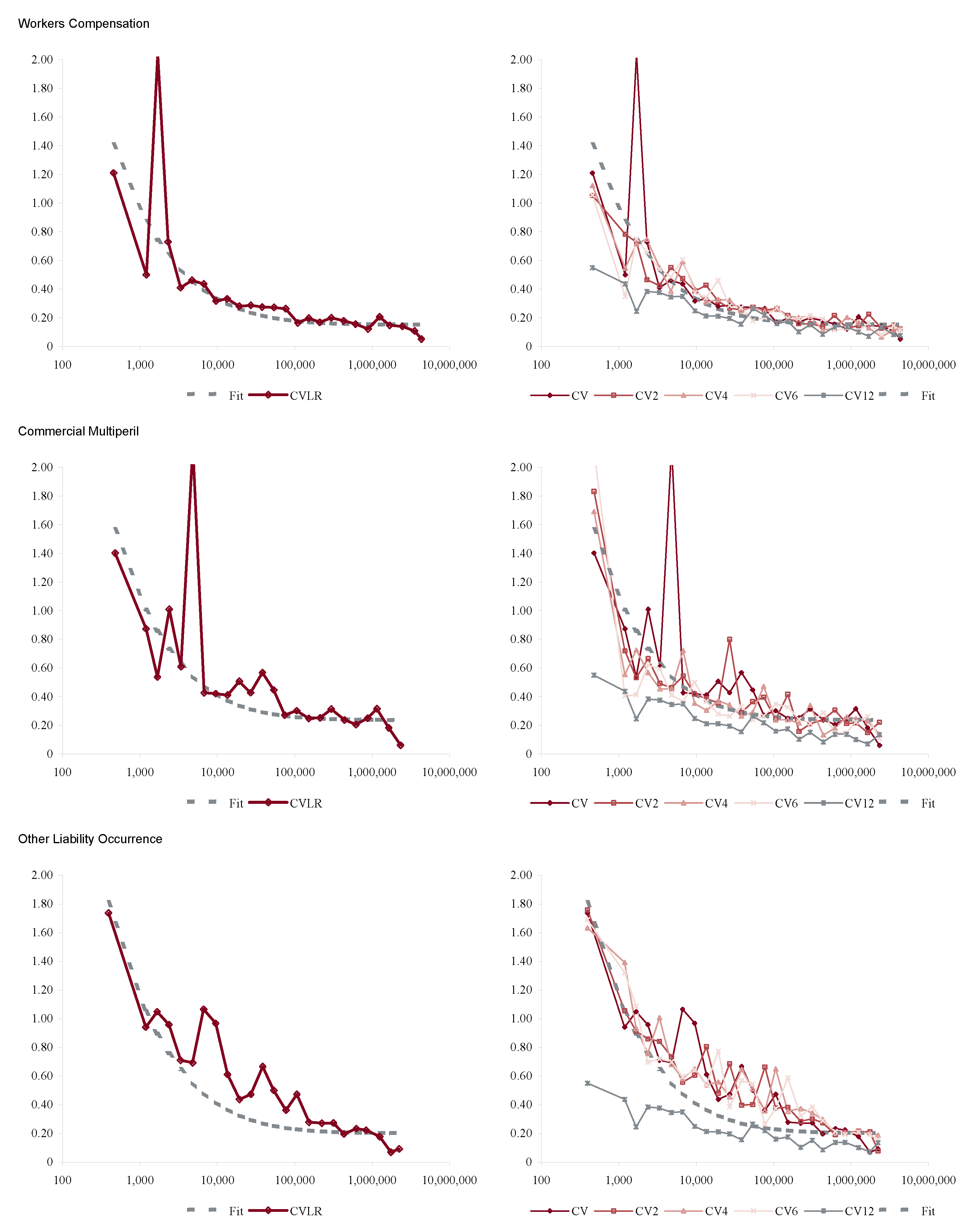

Figure 3.

Severity uncertainty is also interesting. Suppose that claim frequency is still

but that severity is given by a family of measures

for a random

V. Now, in each state, the Lévy process proceeds along a random direction defined by

, so the resulting direction is a mixture

We can interpret these results from the perspective of credibility theory. Credibility is usually associated with repeated observations of a given insured, so t grows but x is fixed. For models IM1-4 severity (direction) is implicitly known. For IM2-4 credibility determines information about the fixed (C) or variable () speed of travel in the given direction. If there is severity uncertainty, V, then repeated observation resolves the direction of travel, rather than the speed. Obviously both direction and speed are uncertain in reality.

Actuaries could model directly with a Lévy measure $\nu$ and hence avoid the artificial distinction between frequency and severity, as Patrik et al. (1999) suggested. Catastrophe models already work in this way. Several aspects of actuarial practice could benefit from avoiding the artificial frequency/severity dichotomy. The dichotomy is artificial in the sense it depends on an arbitrary choice of one year to determine frequency. Explicitly considering the claim count density of losses by size range helps clarify the effect of loss trend. In particular, it allows different trend rates by size of loss. Risk adjustments become more transparent. The theory of risk-adjusted probabilities for compound Poisson distributions (Delbaen and Haezendonck 1989; Meister 1995) is more straightforward if loss rate densities are adjusted without the constraint of adjusting a severity curve and frequency separately. This approach can be used to generate state price densities directly from catastrophe model output. Finally, the Lévy measure is equivalent to the log of the aggregate distribution, so convolution of aggregates corresponds to a pointwise addition of Lévy measures, facilitating combining losses from portfolios with different policy limits. This simplification is clearer when frequency and severity are not split.
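The last point, that convolving aggregate distributions corresponds to adding Lévy measures pointwise, can be illustrated on an integer lattice. The sketch below is an illustration under stated assumptions: the two portfolios, their limits and the function name `aggregate_pmf` are hypothetical, losses are taken to be positive integers, and the aggregate distribution is computed with the Panjer recursion for a compound Poisson distribution written in terms of the Lévy measure rather than a separate frequency and severity.

```python
import numpy as np

def aggregate_pmf(nu, size):
    """Compound Poisson pmf on 0..size-1 from a Levy measure on the positive
    integers, where nu[j] is the expected number of claims of size j and
    nu[0] = 0, using the Panjer recursion."""
    g = np.zeros(size)
    g[0] = np.exp(-nu.sum())
    for k in range(1, size):
        j = np.arange(1, min(k, len(nu) - 1) + 1)
        g[k] = np.dot(j * nu[j], g[k - j]) / k
    return g

size = 60
nu_a = np.array([0.0, 1.2, 0.5, 0.1])              # portfolio A, policy limit 3
nu_b = np.array([0.0, 0.4, 0.3, 0.2, 0.1, 0.05])   # portfolio B, policy limit 5

# Pointwise sum of the two Levy measures (different limits, so pad the shorter).
nu_ab = nu_b.copy()
nu_ab[: len(nu_a)] += nu_a

direct = aggregate_pmf(nu_ab, size)
via_convolution = np.convolve(aggregate_pmf(nu_a, size),
                              aggregate_pmf(nu_b, size))[:size]
max_gap = float(np.max(np.abs(direct - via_convolution)))

print(max_gap)   # of the order of floating point rounding error
```

Combining the portfolios needs no re-derivation of a blended severity curve: the claim count densities by size are simply added.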

6.5. Higher Order Identification of the Differences Between Insurance and Asset Models

We now consider whether

Figure 1 is an exaggeration by computing the difference between the two operators

and

acting on test functions. We first extend

k slightly by introducing the idea of a homogeneous approximation.

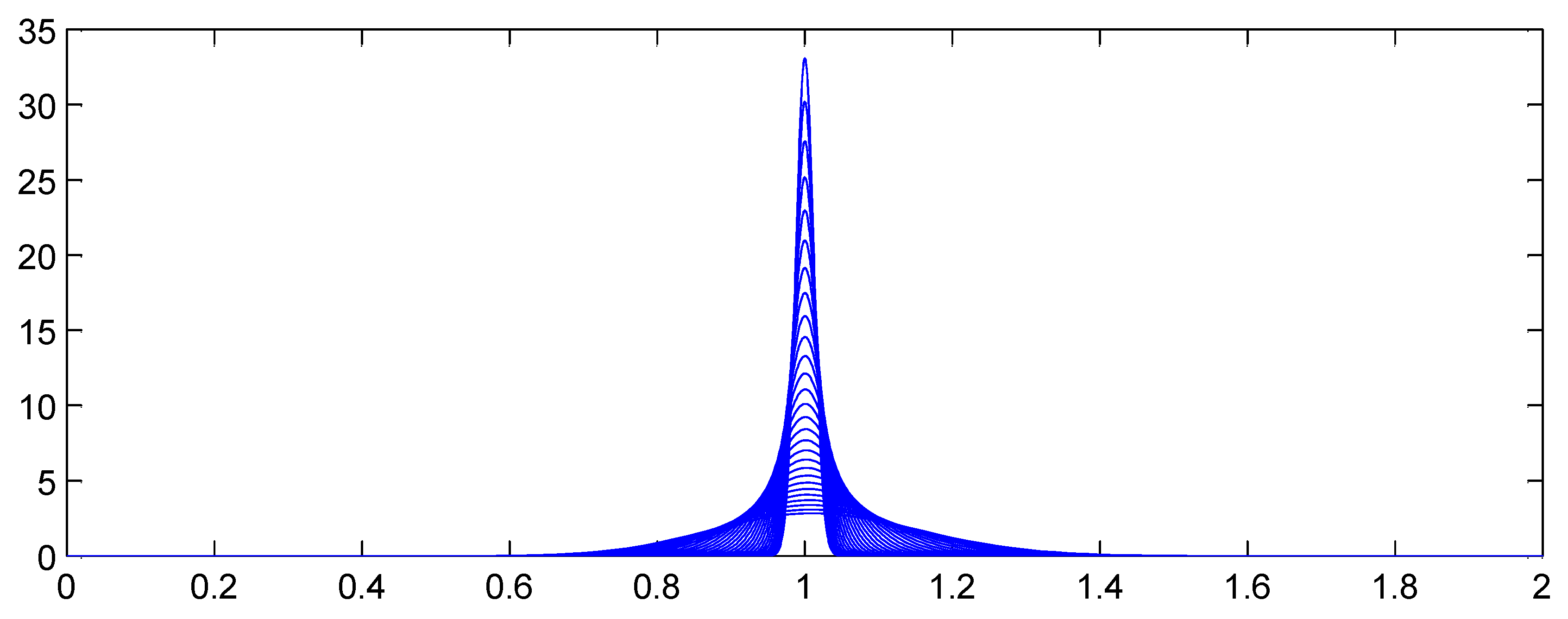

Let X be an infinitely divisible distribution with associated Lévy process $u\mapsto X_u$. As usual, we consider two coordinate maps $\mathbb{R}_+\to\mathcal{L}$: the asset return model $k$, $k(u)=uX_1$, and the insurance model $m$, $m(u)=X_u$. These satisfy $k(1)=m(1)$, but in general $k(u)\neq m(u)$ at $u\neq 1$. We use u rather than t as the argument to avoid giving the impression that the index represents time: remember it represents the combination of time and volume.

Obviously there is no need to restrict k to be an approximation of $m$ at $u=1$. For general $u_0>0$ we can construct a homogeneous approximation to $m$ at $u_0$ by $k(u)=(u/u_0)X_{u_0}$. The name homogeneous approximation is apt because $k(u_0)=m(u_0)$, and k is homogeneous: $k(su)=sk(u)$, for real $s>0$. For a general Lévy process X, $sX_u$ does not have the same distribution as $X_{su}$, so m is not homogeneous. For example if X is stable with index $\alpha$, $0<\alpha\le 2$, then $X_{su}\stackrel{d}{=}s^{1/\alpha}X_u$ (Brownian motion is stable with $\alpha=2$). This section will compare $k$ and $m$ by computing the linear maps corresponding to $\dot{k}$ and $\dot{m}$ and showing they have a different form. We will compute the value of these operators on various functions to quantify how they differ. In the process we will recover the Meyers (2005a) example of “axiomatic capital” vs. “economic capital” from Section 4.

Suppose the Lévy triple defining

is

, where

is the standard deviation of the continuous term and

is the Lévy measure. Let

be the pseudo-differential operator defined by Theorem 2 and let

be the law of

. Using the independent and additive increment properties of a Lévy process and (

Sato 1999, Theorem 31.5) we can write

where

is a doubly differentiable, bounded function with bounded derivatives.

Regarding

k as a deterministic drift at a random (but determined once and for all) speed

, we can apply Equation (62) with

and average over

to get

We can see this equation is consistent with Equation (

23):

where

is the law of

.

Suppose that the Lévy process

is a compound Poisson process with jump intensity

and jump component distribution

J. Suppose the jump distribution has a variance. Then, using Equation (62), and conditioning on the presence of a jump in time

s, which has probability

, gives

Now let . Usually test functions are required to be bounded. We can get around this by considering for fixed n and letting and only working with relatively thin tailed distributions—which we do through our assumption the severity J has a variance. Since , Equations (63) and (69) give and respectively so the homogeneous approximation has the same derivative in this case.

If

then since

we get

The difference is independent of and so the relative difference decreases as increases, corresponding to the fact that changes shape more slowly as increases. If J has a second moment, which we assume, then the relative magnitude of the difference depends on the relative size of compared to , i.e., the variance of J offset by the expected claim rate .

In general, if

,

then

Let

be the

nth cumulant of

and

be the

nth moment. Recall

and the relationship between cumulants and moments

Combining these facts gives

and hence

As for , the term is independent of whereas all the remaining terms grow with . For the difference is .

In the case of the standard deviation risk measure we recover the same results as

Section 4. Let

be the standard deviation risk measure. Using the chain rule, the derivative of

in direction

at

, where

, is

and similarly for direction

. Thus

which is the same as the difference between Equations (

7) and (

10) because here

,

and, since we are considering equality at

where frequency is

, and we are differentiating with respect to

u we pick up the additional

in Equation (

10).
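The standard deviation comparison can be reproduced with a short calculation. The sketch below is an illustration under stated assumptions: it takes the insurance path to be a compound Poisson process with frequency `lam * u`, so its variance is `lam * u * E(J^2)`, takes the homogeneous path to be `u` times the same variable at `u = 1`, and uses hypothetical values for `lam` and the second moment of severity. It is not a transcription of Equations (7) and (10).

```python
import numpy as np

lam = 5.0     # hypothetical claim frequency at u = 1
ej2 = 3.0     # hypothetical second moment E(J^2) of the severity J

def sd_k(u):
    """Standard deviation along the homogeneous path k(u) = u * X_1."""
    return u * np.sqrt(lam * ej2)

def sd_m(u):
    """Standard deviation along the insurance path m(u) = X_u,
    assumed compound Poisson with frequency lam * u."""
    return np.sqrt(lam * u * ej2)

h = 1e-6
d_k = (sd_k(1 + h) - sd_k(1 - h)) / (2 * h)   # derivative in the direction of k
d_m = (sd_m(1 + h) - sd_m(1 - h)) / (2 * h)   # derivative in the direction of m

print(sd_k(1.0), sd_m(1.0))   # the two paths agree at u = 1
print(d_k, d_m)               # but the derivatives differ by a factor of 2
```

Under these assumptions the two paths pass through the same point with the same standard deviation, yet the derivative along the insurance path is half the derivative along the homogeneous path, which is the diversification effect described above.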

This section has shown there are important local differences between the maps k and m. They may agree at a point, but the agreement is not first order: the two maps define different directions. Since capital allocation relies on derivatives (the ubiquitous gradient), it is not surprising that different allocations result. Meyers’ example and the failure of gradient based formulas to add up for diversifying Lévy processes are practical manifestations of these differences.

The commonalities we have found between the homogeneous approximation k and the insurance embedding m are consistent with the findings of Boonen et al. (2017) that, although insurance portfolios are not linearly scalable in exposure, the Euler allocation rule can still be used in an insurance context. Our analysis pinpoints the difference between the two and highlights particular ways it could fail and could be more material in applications. Specifically, it is more material for smaller portfolios and for portfolios where the severity component has a high variance: these are exactly the situations where aggregate losses will be more skewed and will change shape most rapidly.