1. Introduction

The typical task of a controller consists in tracking a user-specified trajectory as closely as possible while observing certain additional specifications, such as stability, the satisfaction of safety limits and the minimization of expensive control action when it is not needed. Mathematically, we may define such a controller by the mapping

, where

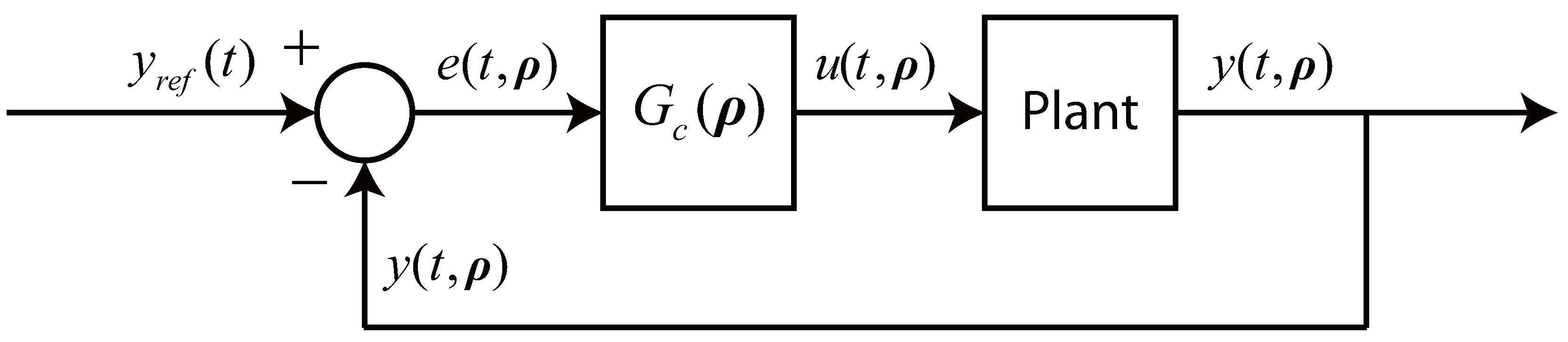

denote the parameters that dictate the controller’s behavior and represent decision variables (the “tuning parameters”) for the engineer intending to implement the controller in practice. In the simplest scenario, this often leads to a closed-loop system that may be described by the schematic in Figure 1. No assumptions are made on the nature of the controller, which may represent such controllers as the classical PID, the general fixed-order controller, or even the more advanced MPC (model-predictive control). To be even more general, it may represent an entire system of such controllers; one would need, in this case, simply to replace the scalar signals of Figure 1 by their vector equivalents.

Figure 1.

Qualitative schematic of a single-input-single-output system with the controller . Elements such as disturbances and sensor dynamics, as well as any controller-specific requirements, are left out for simplicity. We use the notation to mark the (implicit) dependence of the control output on the tuning parameters ρ (likewise for the input and the error).


As with any set of decision variables, it should be clear that there are both good and bad choices of ρ, and in every application, some sort of design phase precedes the actual implementation and acts to choose a set of ρ that is expected to track the reference “well” while meeting any additional specifications. The classic example for PID controllers is the Ziegler-Nichols tuning method [1], with methods such as model-based direct synthesis [2] and virtual reference feedback tuning [3] acting as more advanced alternatives. Though not as developed, both theoretical and heuristic approaches exist for the design of MPC [4] and general fixed-order controllers [5,6] as well.

In the majority of cases, the set of controller parameters obtained by these design methods will not be the best possible with respect to control performance. There are many reasons for this, with some of the common ones being:

assumptions on the plant, such as linearity or time invariance, that are made at the design stage,

modeling errors and simplifications,

conservatism in the case of a robust design,

time constraints and/or deadlines that give preference to a simpler design over an advanced one.

To improve the closed-loop performance of the system, some sort of data-driven adaptation of the parameters from their initial designed values may be done online following the acquisition of new data. These are generally classified as “indirect” and “direct” adaptations [7] depending on what is actually adapted: the model (followed by a model-based re-design of the controller) in the indirect variant, or the controller parameters in the direct one. This paper investigates direct methods that attempt to optimize control performance by establishing a direct link between the observed closed-loop performance and the controller parameters, with the justification that such methods may be forced to converge, at least theoretically, to a locally optimal choice of parameters regardless of the quality or the availability of the model, which cannot be said for indirect schemes [8].

Many of these schemes attempt to minimize a certain user-defined performance metric (e.g., the tracking error) for a given run or batch by adjusting the controller parameters as one would in an iterative optimization scheme, i.e., by changing the parameters between two consecutive runs, trying to discover the effect that this change has on the closed-loop performance (estimating the performance derivatives), and then using the derivative estimates to adapt the parameters further in some gradient-descent manner [9,10,11,12,13]. This is essentially the iterative controller tuning (ICT) problem, whose goal is to bring the initial suboptimal parameter set to a locally optimal one via iterative experimentation on the closed-loop system, all the while preventing the system from becoming dangerously unstable from the adaptation (a qualitative sketch of this idea is given in Figure 2). A notable limitation of such methods, though rarely stated explicitly, is that the control task for which the controller is being adapted must be identical (or very similar) from one run to the next; otherwise, the concept of optimality may simply not exist, since what is optimal for one control task (e.g., the tracking of a step change) need not be so for another (e.g., the tracking of a ramp). A closely related problem where the assumption of a repeated control task is made formally is that of iterative learning control [14], although what is adapted in that case is the open-loop input trajectory, rather than the parameters of a controller dictating the closed-loop system.
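To make the run-to-run idea concrete, the following is a minimal sketch under stated assumptions: a toy first-order plant, a PI controller with ρ = (Kp, Ki), a unit step as the repeated control task, forward-difference derivative estimates, and a fixed descent step. The names `run_closed_loop` and `tune` and all numerical values are hypothetical and are not taken from any of the cited schemes.

```python
import numpy as np

def run_closed_loop(rho, t_end=10.0, dt=0.01):
    """One run: PI control of a toy plant (2*dy/dt = -y + u) for a unit step.
    Returns the integrated squared tracking error (the performance metric)."""
    kp, ki = rho
    y, integ, cost = 0.0, 0.0, 0.0
    for _ in range(int(t_end / dt)):
        e = 1.0 - y                  # tracking error
        integ += e * dt
        u = kp * e + ki * integ      # PI control law
        y += dt * (-y + u) / 2.0     # explicit Euler step of the toy plant
        cost += e * e * dt
    return cost

def tune(rho0, n_iter=20, step=0.2, h=0.05):
    """Repeat the same task, estimate derivatives by perturbation, then descend."""
    rho = np.asarray(rho0, dtype=float)
    history = [run_closed_loop(rho)]
    for _ in range(n_iter):
        base = history[-1]
        grad = np.zeros_like(rho)
        for i in range(rho.size):    # one extra run per tuned parameter
            pert = rho.copy()
            pert[i] += h
            grad[i] = (run_closed_loop(pert) - base) / h
        rho = rho - step * grad      # plain gradient-descent adaptation
        history.append(run_closed_loop(rho))
    return rho, history

rho_final, history = tune([1.0, 0.5])
```

Note that this naive loop has no safeguard against constraint violation or instability, which is precisely the kind of guarantee that the adaptation schemes discussed here are concerned with.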

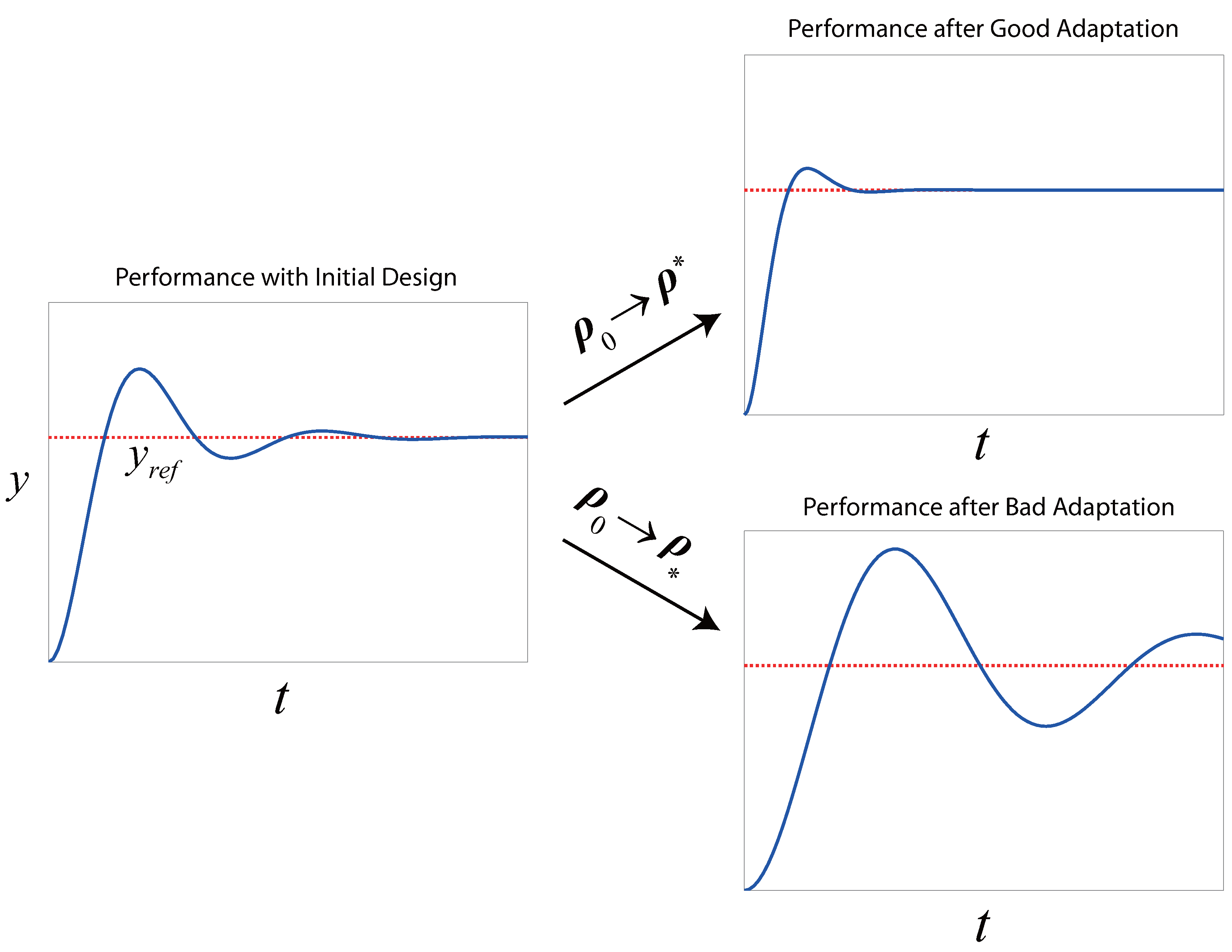

Figure 2.

The basic idea of iterative controller tuning. Here, a step change in the setpoint represents the repetitive control task. We use to denote a sort of “anti-optimum” that might be achieved with a bad adaptation algorithm.


We observe that, as the essence of these tuning methods consists in iteratively minimizing a performance function that is unknown, due to the lack of knowledge of the plant, the ICT problem is actually a real-time optimization (RTO) problem as it must be solved by iterative experimentation. Recent work by the authors [15,16,17] has attempted to unify different RTO approaches and to standardize the RTO problem as any problem having the following canonical form:

where the RTO variables (RTO inputs) are forced to lie in the relevant RTO input space defined by lower and upper limits, the cost function is to be minimized, and the two sets of individual constraints (i.e., safety limitations, performance specifications) are to be respected. We use the symbol ⪯ to denote componentwise inequality.
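As a hedged sketch of the canonical form described in words above, one way of writing it is given below. The symbol names are assumptions introduced here for illustration: u for the RTO inputs, u^L and u^U for the limits, φ_p for the uncertain cost, and g_p and g for the uncertain and certain constraint sets. The original typesetting of Problem (1) may differ.

```latex
% Assumed notation; requires amsmath and amssymb
\begin{aligned}
\underset{u}{\text{minimize}} \quad & \phi_p(u) \\
\text{subject to} \quad & g_p(u) \preceq 0, \\
& g(u) \preceq 0, \\
& u^L \preceq u \preceq u^U .
\end{aligned}
```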

The subscript p (for “plant”) is used to indicate those functions that are unknown, or “uncertain”, and can only be evaluated by applying a particular set of RTO inputs and conducting a single experiment (with k denoting the experiment/iteration counter), from which the corresponding function values may then be measured or estimated with some additive stochastic error, w. Conversely, the absence of p indicates that the function is easily evaluated by algebraic calculation without any error present.

Owing to the generality of Problem (1), casting the ICT problem in this form is fairly straightforward and, as will be shown in this work, has numerous advantages, as it allows for a fairly systematic and flexible approach to controller tuning in a framework where strong theoretical guarantees are available. The main contribution of our work is thus to make this generalization formally and to argue for its advantages, while cautioning the potential user of both its apparent and hypothetical pitfalls.

Our second contribution lies in proposing a concrete method for solving the ICT problem in this manner. Namely, we advocate the use of the recently released open-source SCFO (“sufficient conditions for feasibility and optimality”) solver that has been designed for solving RTO problems with strong theoretical guarantees [17]. While this choice is undoubtedly biased, we put it forward as it is, to the best of our knowledge, the only solver released to date that solves the RTO problem (1) reliably, which is to say that it consistently converges to a local minimum without violating the safety constraints in theoretical settings and that it is fairly robust in doing the same in practical ones. Though quite simple to apply, the SCFO framework and the solver itself need to be properly configured, and so we guide the potential user through how to configure the solver for the ICT problem.

Finally, as the theoretical discussion alone should not be sufficient to convince the reader that there is a strong potential for solving the ICT problem as an RTO one, we finish the paper with a total of four case studies, which are intended to cover a diverse range of experimental and simulated problems and to demonstrate the general effectiveness of the proposed method, the difficulties that are likely to be encountered in application, and any weak points where the methodology still needs to be improved. Specifically, the four studies considered all solve the ICT problem for:

the tracking of a temperature profile in a laboratory-scale stirred tank by an MPC controller,

the tracking of a periodic setpoint for a laboratory-scale torsional system by a general fixed-order controller with a controller stability constraint,

the PID tracking of a setpoint change for various linear systems (previously examined in [13,18]),

the setpoint tracking and disturbance rejection for a five-input, five-output multi-loop PI system with imperfect decoupling and a hard output constraint.

In each case, we do our best to concretize the theory discussed earlier by showing how the resulting ICT problem may be formulated in the RTO framework, followed by the application of the SCFO solver with the proposed configuration.

3. The SCFO Solver and Its Configuration

Having now presented the formulation of the ICT problem as an RTO one, we go on to describe how Problem (1) may be solved. Although (1) is posed like a standard optimization problem, the reader is warned that it is experimental in nature and must be solved by iterative closed-loop experiments on the system, i.e., one cannot simply solve (1) by numerical methods, since evaluations of the uncertain plant functions require experiments. A variety of RTO (or “RTO-like”) methodologies, all of which are appropriate for solving (1), have been proposed over the years and may be characterized as being model-based (see, e.g., [24,25,26,27]), model-free [28,29], or as hybrids of the two [30,31]. In this work, we opt to use the SCFO solver recently proposed and released by the authors [15,16,17], as it is the only tool available to theoretically guarantee that:

the RTO scheme converges arbitrarily close to a Karush-Kuhn-Tucker (KKT) point that is, in the vast majority of practical cases, a local minimum,

the constraints and are never violated,

the objective value is consistently improved, with always,

with these properties enforced approximately in practice.

The basic structure of the solver may be visualized as follows:

where the majority of the configuration is fixed once and for all, while the measurements act as the true iterative components, with the full set of measured data being fed to the solver at each iteration, after which it does all of the necessary computations and outputs the next RTO input to be applied. This is illustrated for the ICT context in Figure 3 (as an extension of Figure 1).

The natural price to pay for such simplicity of implementation is, not surprisingly, the complexity of configuration. Table 1 provides a summary of all of the configuration components, how they are set, and the justifications for these settings. Noting that most of these settings are relatively simple and do not merit further discussion, we now turn our focus to those that do.

Figure 3.

The iterative tuning scheme, where the results obtained after each closed-loop experiment on the plant (denoted by the dashed lines) are sent to the RTO loop (denoted by the dotted box), which then appends these data to previous data and uses the full data set to prompt the SCFO solver, as well as to update any data-driven adaptive settings (we refer the reader to Table 1 for which settings are fixed and which are adaptive).
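The data flow of this scheme (experiment, append to the data set, prompt the solver with the full data set, apply the returned input) can be sketched as follows. This is an illustration only: `rto_loop`, `toy_experiment` and `toy_solver_step` are hypothetical names, and the stand-in solver step is a trivial placeholder that does not reflect the interface or the logic of the actual SCFO solver.

```python
import numpy as np

def rto_loop(run_experiment, solver_step, rho_init, n_iter):
    """Alternate closed-loop experiments and solver calls for n_iter iterations."""
    rho = np.asarray(rho_init, dtype=float)
    inputs, outputs = [], []
    for _ in range(n_iter):
        outputs.append(run_experiment(rho))   # one closed-loop experiment
        inputs.append(rho.copy())             # append to the previous data
        # The solver is prompted with the full data set collected so far.
        rho = np.asarray(solver_step(np.array(inputs), np.array(outputs)),
                         dtype=float)
    return np.array(inputs), np.array(outputs)

# Stand-in callables, for illustration only (NOT the SCFO solver).
seen_sizes = []

def toy_experiment(rho):
    return float(np.sum((rho - 1.0) ** 2))

def toy_solver_step(all_inputs, all_outputs):
    seen_sizes.append(len(all_outputs))       # record how much data was passed
    best = all_inputs[int(np.argmin(all_outputs))]
    return best + 0.1                         # placeholder adaptation rule

inputs, outputs = rto_loop(toy_experiment, toy_solver_step, [0.0, 0.0], n_iter=3)
```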


Table 1.

Summary of SCFO configuration settings for the ICT problem.


| Solver Setting | Chosen As | Justification | Type |
|---|---|---|---|
| Initialization | closed-loop experiments | See Section 3.1 | – |
| Optimization target | Scaled gradient descent | See Section 3.2 | Adaptive |
| Noise statistics | Initial experiments at | See Section 3.3 | Fixed |
| Constraint concavity | None assumed | No reason for assuming this property in ICT context | Fixed |
| Constraint relaxations | None assumed | For simplicity (should be added if some constraints are soft) | Fixed |
| Cost certainty | Cost function is uncertain | The performance metric is an unknown function of ρ | Fixed |
| Structural assumptions | Locally quadratic structure | Recommended choice for general RTO problem [17] | Fixed |
| Minimal-excitation radius | | Recommended choice for general RTO problem [17] | Fixed |
| Lower and upper limits | Controller-dependent or set adaptively | See Section 3.4 | Fixed/Adaptive |
| Lipschitz and quadratic bound constants | Initial data-driven guess followed by adaptive setting | See Section 3.5 | Fixed/Adaptive |
| Scaling bounds | Problem-dependent; easily chosen | See [17] | Fixed |
| Maximal allowable adaptation step | | Recommended choice for general RTO problem [17] | Fixed |

3.1. Solver Initialization

Prior to attempting to solve Problem (1), it is strongly recommended that the problem be well-scaled with respect to both the RTO inputs and outputs. For the former, this means that:

where “≈” may be read as “on the same order of magnitude as”. For the RTO outputs, it is advised that both the cost and constraint functions are such that their values vary on the magnitude of

. Once this is done, one may proceed to initialize the data set.

As the solver needs to compute gradient estimates directly from measured data, it is usually necessary to first generate the measurements (whose corresponding RTO input values should be well-poised for linear regression) required for a rudimentary (linear) gradient estimate (see, e.g., [25,32]). In the case that previous measurements are already available (e.g., from experimental studies carried out prior to optimization), one may be able to avoid this step partially or entirely.

We will, for generality, assume the case where no previous data are available. We will also assume that the initial point has been obtained by some sort of controller design technique. In addition, we require that the initial design satisfy all of the constraints of Problem (1); this is expected to hold intrinsically, since one would not start optimizing performance prior to having at least one design that is known to meet the required constraints with at least some safety margin. The next step is then to generate the additional measurements, i.e., to run further closed-loop experiments on the plant.

A simple initialization method would be to perturb each controller parameter one at a time, as this would produce a well-poised data set with sufficient excitation in all input directions, thereby making the task of estimating the plant gradient possible. However, such a scheme could be wasteful, especially for ICT problems with many parameters to be tuned. One alternative would be to use smart, model-based initializations [25], but this would require having a plant model. In the case of no model, we propose to use a “smart” perturbation scheme that attempts to begin optimizing performance during the initialization phase, and refer the reader to the appendix for the detailed algorithm.
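For reference, the simple one-at-a-time scheme mentioned above (not the “smart” scheme of the appendix) can be sketched as follows, together with a rudimentary linear-regression gradient estimate. The function names and the perturbation size `delta` are hypothetical, and the example assumes noise-free, well-scaled data.

```python
import numpy as np

def one_at_a_time_inputs(u0, delta):
    """Initial point plus one perturbed point per input direction (n + 1 points)."""
    u0 = np.asarray(u0, dtype=float)
    return np.array([u0] + [u0 + delta * e for e in np.eye(u0.size)])

def linear_gradient_estimate(U, y):
    """Fit y ~ c + grad . u by least squares and return the slope vector."""
    A = np.hstack([np.ones((U.shape[0], 1)), U])
    coef, *_ = np.linalg.lstsq(A, np.asarray(y, dtype=float), rcond=None)
    return coef[1:]

# Toy check with a known linear function y = 3 + 2*u1 - u2.
U = one_at_a_time_inputs([0.5, 0.5], delta=0.1)
y = 3.0 + 2.0 * U[:, 0] - 1.0 * U[:, 1]
grad = linear_gradient_estimate(U, y)
```

With n inputs, this data set contains exactly n + 1 points, which is the minimum needed for the regression to be determined; any measurement noise then passes directly into the estimate.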

3.2. The Optimization Target

The target represents a nominal optimum provided by any standard RTO algorithm that is coupled with the SCFO solver and, as such, actually represents the choice of algorithm. This choice is important as it affects performance, with some of the results in [16] suggesting that coupling the SCFO with a “strong” RTO algorithm (e.g., a model-based one) can lead to faster convergence to the optimum. However, the choice is not crucial with respect to the reliability of the overall scheme, and so one does not need to be overly particular about what RTO algorithm to use, but should prefer one that generally guides the adaptations in the right direction.

For the sake of simplicity, the algorithm adopted in this work is the (scaled) gradient descent with a unit step size:

where both

and

are data-driven estimates. We refer the reader to the

appendix for how these estimates are obtained.
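One plausible reading of a “scaled gradient descent with a unit step size” is a step along the negative gradient estimate scaled by the inverse of the Hessian estimate (Section 3.5 refers to a Hessian estimate obtained in this section). The sketch below rests on that assumption; the function name is hypothetical, and the Hessian estimate is assumed to be positive definite.

```python
import numpy as np

def gradient_descent_target(u_k, grad_est, hess_est):
    """Target = current input minus the inverse-Hessian-scaled gradient (unit step).
    Assumes hess_est is symmetric positive definite."""
    u_k = np.asarray(u_k, dtype=float)
    return u_k - np.linalg.solve(np.asarray(hess_est, dtype=float),
                                 np.asarray(grad_est, dtype=float))

# Toy check on a quadratic cost 0.5*(u - c)' H (u - c), whose minimizer is c.
H = np.array([[2.0, 0.5], [0.5, 1.0]])
c = np.array([1.0, -1.0])
u_k = np.array([0.0, 0.0])
target = gradient_descent_target(u_k, H @ (u_k - c), H)
```

For an exactly quadratic cost with exact derivatives, the target coincides with the minimizer in a single step; with data-driven estimates it only serves as a nominal direction for the solver.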

3.3. The Noise Statistics

Obtaining the statistics (i.e., the probability distribution function, or PDF) for the stochastic error terms δ in Equations (3) and (7) is particularly challenging in the ICT context. One reason for this is that these terms do not have an obvious physical meaning, as both Equations (3) and (7), which model the observed performance/constraint values as a sum of a deterministic and stochastic component, are approximations. Furthermore, even if this model were correct, the actual computation of an accurate PDF would likely require a number of closed-loop experiments on the plant that would be judged as excessive in practice.

As will be shown in the first two case studies of Section 4, some level of engineering approximation becomes inevitable in obtaining the PDF for an experimental system. The basic procedure advocated here is to carry out a certain (economically allowable) number of repeated experiments at the initial parameter set prior to the initialization step. In the case where each experiment is expensive (or time consuming) and the total acceptable number is low, one may approximate the δ term by a zero-mean normal distribution with a standard deviation equal to that of the observed data. If the experiments are cheap and a fairly large number (e.g., a hundred or more) is allowed, then the observed data may be offset by their mean and then fed directly into the solver (as the solver builds an approximate PDF directly from the fed noise data).
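The two cases just described can be sketched as a small helper. The function name, the returned dictionary layout and the threshold of 100 are assumptions made for illustration, and the use of the sample (n − 1) standard deviation is a choice made here rather than something stated in the text.

```python
import numpy as np

def noise_model(repeated_values, many=100):
    """Build a noise description from repeated experiments at one parameter set."""
    v = np.asarray(repeated_values, dtype=float)
    if v.size >= many:
        # Many cheap experiments: use the mean-offset data directly.
        return {"kind": "empirical", "samples": v - v.mean()}
    # Few expensive experiments: zero-mean normal with the data's spread.
    return {"kind": "normal", "mean": 0.0, "std": float(v.std(ddof=1))}

few = noise_model([1.0, 2.0, 3.0])
lots = noise_model(np.arange(200.0))
```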

3.4. Lower and Upper Input Limits

Providing proper lower and upper limits

and

can be crucial to solver performance. As already stated, for the ICT problem these are simply

and

, but, as these values may not be obvious for certain controller designs, the user may use adaptive limits that are redefined at each iteration

k:

As the solver can never actually converge to an optimum that touches these limits, the resulting problem is essentially unconstrained with respect to them, thereby allowing us to configure the solver without affecting the optimality properties of the problem. We note that, while one could use very conservative choices and not adapt them (e.g.,

and

), this is not recommended as it would introduce scaling issues into the solver’s subroutines.

3.5. Lipschitz and Quadratic Bound Constants

The solver requires the user to provide the Lipschitz constants (denoted by

κ) for all of the functions

,

and

. These are implicitly defined as:

for all

. Quadratic bound constants (denoted by

M) on the cost function are also required and are implicitly defined as:

For

, which is easily evaluated numerically, we note that the choice is simple since one can, in many cases, compute these values prior to any implementation.
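As a hedged reconstruction of what such implicit definitions typically look like, the Lipschitz constants bound the first partial derivatives of a function and the quadratic bound constants bound the second partial derivatives of the cost over the input space. The notation below is assumed for illustration (u for the RTO inputs, g_{p,j} for a constraint, φ_p for the cost, 𝓘 for the input space) and may differ from the original equations.

```latex
% Assumed notation; requires amsmath
-\kappa_{ji} < \left.\frac{\partial g_{p,j}}{\partial u_i}\right|_{u} < \kappa_{ji},
\qquad
\underline{M}_{ij} < \left.\frac{\partial^2 \phi_p}{\partial u_i \,\partial u_j}\right|_{u} < \overline{M}_{ij},
\qquad \forall u \in \mathcal{I}.
```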

For

,

and

, the choice is a very difficult one. This is especially true for the ICT problem, where such constants have no physical meaning (a physical interpretation being what may make them easier to estimate for some RTO problems [16]). When a model of the plant is available, one may proceed to compute these values numerically for the modeled closed-loop behavior and then make the estimates more conservative (e.g., by applying a safety-factor scaling) to account for plant-model mismatch.

For the pure model-free case, we have no choice but to resort to heuristic approaches. As a choice of

, we thus propose the following (very conservative) estimate based on the gradient estimate for the initial

points (

23):

as we expect these bounds to be valid unless

is small, which, however, would indicate that we are probably close to a zero-gradient stationary point already and would have little to gain by trying to optimize performance further if this point were a minimum.

A similar rule is applied to estimate

, with:

where the estimate

is obtained in the same manner as in Equation (

23). The choice of 2, as opposed to 10, is made for performance reasons, as making

too conservative can lead to very slow progress in improving performance—this is expected to scale linearly,

i.e., if the choice of

leads to a realization that converges in 20 runs, the choice of

may lead to one that converges in 100. Note, however, that this way of defining the Lipschitz constants does not have the same natural safeguard as it does for the cost, and it may happen that

at the initial point even though the gradient may be quite large in the neighborhood of the optimum. When this is so, an alternate heuristic choice is to set:

where

denotes the smallest value that the constraint can take in practice, with

. Combining the two, one may then use the heuristic rule:

However, it may still occur that this choice is not conservative enough. This lack of conservatism may be proven if a given constraint,

, is violated for one of the runs, since sufficiently conservative Lipschitz estimates will usually guarantee that this is not the case (provided that the noise statistics are sufficiently accurate). As such, the following adaptive refinement of the Lipschitz constants is proposed to be done online when/if the constraint is violated with sufficient confidence:

where

represents the estimated standard deviation of the non-repeatability noise term,

δ, for

.
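The first of the heuristics above, namely the factors of 10 (cost) and 2 (constraints) applied to initial gradient estimates, might be sketched as follows. This assumes that the factors multiply the absolute values of the estimated partial derivatives, which is our reading of the text; the function name is hypothetical, and neither the alternate rule based on the smallest attainable constraint value nor the online refinement is covered.

```python
import numpy as np

def initial_lipschitz_guess(grad_cost_est, grad_constraint_est,
                            cost_factor=10.0, constraint_factor=2.0):
    """Heuristic Lipschitz guesses from initial gradient estimates (assumed form)."""
    kappa_cost = cost_factor * np.abs(np.asarray(grad_cost_est, dtype=float))
    kappa_con = constraint_factor * np.abs(
        np.asarray(grad_constraint_est, dtype=float))
    return kappa_cost, kappa_con

# One cost gradient (2 inputs) and one constraint gradient (1 x 2 Jacobian).
kappa_cost, kappa_con = initial_lipschitz_guess([0.5, -0.2], [[1.0, -3.0]])
```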

For the quadratic bound constants

M, which represent lower and upper bounds on the second derivatives of

, we propose to use the estimate of the Hessian,

, as obtained in

Section 3.2 (see

Appendix), together with a safety factor,

η, to define the bounds at each iteration

k as:

with

η initialized as 1. Since such a choice may also suffer from a lack of conservatism, an adaptive algorithm for

η is put into place. Since a common indicator of choosing

M values that are not conservative enough is the failure to decrease the cost between consecutive iterations, the following law is proposed for any iterations where the solver applied the SCFO conditions but increased (with sufficient confidence) the value of the cost [

17]:

If , then set ;

otherwise, set , with ,

where

is the estimated standard deviation for the non-repeatability noise term in the measurement of

. The essence of this update law is to make M more conservative (by increasing η) whenever the cost is statistically likely to have increased in the recent adaptation and to relax the conservatism otherwise, though at only twice the rate that it would be increased. Such a scheme essentially ensures that the M constants become conservative enough to continually guarantee improved performance with an increasing number of iterations.

4. Case Studies

The proposed method was applied to four different problems, of which the first two are of particular interest, as they were carried out on experimental systems and demonstrate the reliability and effectiveness of the proposed approach when applied in settings where neither the plant nor the non-repeatability noise terms are known. Of these two, the first represents a typical batch scenario with fairly slow dynamics and time-consuming, expensive experiments for which an MPC controller is employed (Section 4.1), while the latter represents a much faster mechanical system, where the optimization of the controller parameters for a general fixed-order controller must be carried out quickly due to real-time constraints, but where a single run is inexpensive (Section 4.2).

The last two studies, though lacking the experimental element, are nevertheless of interest as they make a link with similar work carried out by other researchers (Section 4.3) and generalize the method to systems of controllers with an additional challenge in the form of an output constraint (Section 4.4). In both of these cases, we have chosen to simplify things by assuming to know the noise statistics of the relevant δ terms and to let the repeatability assumption hold exactly.

In each of the four studies, we have used the configuration proposed in Section 3 and so will not repeat these details here. However, we will highlight those components of the configuration that are problem-dependent and will explain how we obtained them for each case.

4.1. Batch-to-Batch Temperature Tracking in a Stirred Tank

The plant in question is a jacketed stirred water tank, where a cascade system is used to control the temperature inside the tank by having an MPC controller manipulate the setpoint temperature of the jacket inlet, which is, in turn, tracked by a decoupled system of two PI controllers that manipulate the flow rates of the hot and cold water streams that mix to form the jacket inlet (Figure 4). As this system is essentially identical to what has been previously reported [33], we refer the reader to the previous work for all of the implementation details.

Figure 4.

Schematic of the jacketed stirred tank and the cascade control system used to control the water temperature inside the tank. The reference for the water flow to the jacket was fixed at 2 L/min.


As the task of tracking an “optimal” temperature profile is fairly common in batch processes and the failure to do so well can lead to losses in product quality, a natural ICT problem arises in these contexts as it is desired that the temperature stay as close to the prescribed optimal setpoint trajectory as possible. In this particular case study, the controller that is tasked with this job is the MPC controller, whose tunable parameters include:

the output weight that controls the trade-off between controller aggressiveness and output tracking,

the bias update filter gain, which acts to ensure offset-free tracking,

the control and prediction horizons that dictate how far ahead the MPC attempts to look and control,

all of which act to change the objective function at the heart of the MPC controller [33]. For this problem, we decided to vary the output weight between 0.1 and 10 (i.e., covering two orders of magnitude) and defined its logarithm as the first tunable variable,

. Our reason for choosing the logarithm, instead of the actual value, was due to the sensitivity of the performance being more uniform with respect to the magnitude difference between the priorities given to controller aggressiveness and output tracking (e.g., changing the output weight from 0.1 to 1.0 was expected to have a similar effect as changing it from 1.0 to 10). The bias update filter gain, defined as the second variable

, was forced to vary between 0 and 1 by definition. The control and prediction horizons,

m and

n, were both allowed to vary anywhere between 2 and 50 and, as this variance was on the magnitude of

, were divided by 100 so as to have comparable scaling with the other parameters, with

and

. We note as well that the horizons were constrained to be integers, whereas the solver provided real numbers, and so any answer provided by the solver had to be rounded to the nearest integer to accommodate these constraints.
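The parameterization described above can be sketched as a mapping from the four scaled solver variables back to the MPC settings. The base-10 logarithm, the ordering of the four variables, and all names below are assumptions made for illustration; only the ranges, the division by 100 and the rounding of the horizons come from the text.

```python
def to_mpc_settings(rho):
    """Map scaled tuning variables to MPC settings (assumed ordering and log base)."""
    rho1, rho2, rho3, rho4 = rho
    return {
        "output_weight": 10.0 ** rho1,             # rho1 assumed to be log10 of the weight
        "bias_filter_gain": rho2,                   # between 0 and 1 by definition
        "control_horizon": int(round(100 * rho3)),  # horizons were divided by 100
        "prediction_horizon": int(round(100 * rho4)),
    }

settings = to_mpc_settings([0.0, 0.5, 0.10, 0.30])
```

Under the base-10 assumption, the output weight range of 0.1 to 10 corresponds to the first variable lying between −1 and 1, and the horizon range of 2 to 50 corresponds to scaled values between 0.02 and 0.50. Note that Python's `round` resolves exact ties to the nearest even integer, which may differ from other rounding conventions.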

As this system was fairly slow/stable and controller aggressiveness was not really an issue and as there was no strong preference between using hot or cold water, the performance metric simply consisted of minimizing the tracking error (

i.e., the general metric in Equation (

5) with

and

) over a batch time of

min. The setpoint trajectory to be tracked consisted of maintaining the temperature at 52 °C for 10 min, cooling by 4 °C over 10 min, and then applying a quadratic cooling profile for the remainder of the batch. Each batch was initialized by setting the jacket inlet to 55 °C and starting the batch once the tank temperature rose to 52 °C.

The certain inequality constraint

was enforced as this was needed by definition—see Equation (

9)—thereby contributing to yield the following ICT problem in RTO form:

where we scaled the performance metric by dividing by its initial value (thereby giving us a base performance metric value of 1, which was then to be lowered). We also note that in practice measurements were collected every 3 s, and so the integral of the squared error was evaluated discretely. The initial parameter set was chosen, somewhat arbitrarily, as

.
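The discrete evaluation of the scaled performance metric mentioned above might look as follows. This is a sketch under assumptions: a rectangle-rule approximation of the integral, a 3 s sampling period expressed in seconds, and a hypothetical function name; the units and the exact quadrature used in the study are not stated in the text.

```python
import numpy as np

def tracking_cost(setpoint, measured, dt_s=3.0, scale=1.0):
    """Rectangle-rule integral of the squared tracking error, divided by a scale."""
    e = np.asarray(setpoint, dtype=float) - np.asarray(measured, dtype=float)
    return float(np.sum(e ** 2) * dt_s / scale)

# Toy data: three samples around a 52 degC setpoint.
raw = tracking_cost([52.0, 52.0, 52.0], [51.0, 52.0, 53.0])
scaled = tracking_cost([52.0, 52.0, 52.0], [51.0, 52.0, 53.0], scale=raw)
```

Dividing by the value obtained at the initial parameter set gives a base metric of 1, so that later values read directly as a fraction of the initial tracking error.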

Prior to solving (

17), we first solved an easier problem where

and

were fixed at their initial values and only

and

were optimized over (these two parameters being expected to be the more influential of the four):

In order to approximate the non-repeatability noise term for the performance, a total of 8 batches were run with the initial parameter set, with the (unscaled) performance metric values obtained for those experiments being: 13.45, 13.31, 13.46, 14.25, 13.80, 13.44, 13.72 and 13.98 (their mean then being taken as the scaling term). Rather than run more experiments, which could have improved the accuracy of our approximation but would have required even more time (each batch already requiring 40 min, with an additional 20–30 min of inter-batch preparation), we chose to approximate the statistics of the non-repeatability noise term by a zero-mean normal distribution with the standard deviation of the data, i.e., 0.32.
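The reported statistics can be checked directly from the eight listed values. The short computation below uses only the Python standard library; the variable names are ours. The sample standard deviation (n − 1 divisor) reproduces the reported 0.32, whereas the population form would give roughly 0.30.

```python
import statistics

values = [13.45, 13.31, 13.46, 14.25, 13.80, 13.44, 13.72, 13.98]

mean_value = statistics.mean(values)         # candidate scaling term, about 13.68
sample_std = statistics.stdev(values)        # n - 1 divisor, about 0.32
population_std = statistics.pstdev(values)   # n divisor, about 0.30
```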

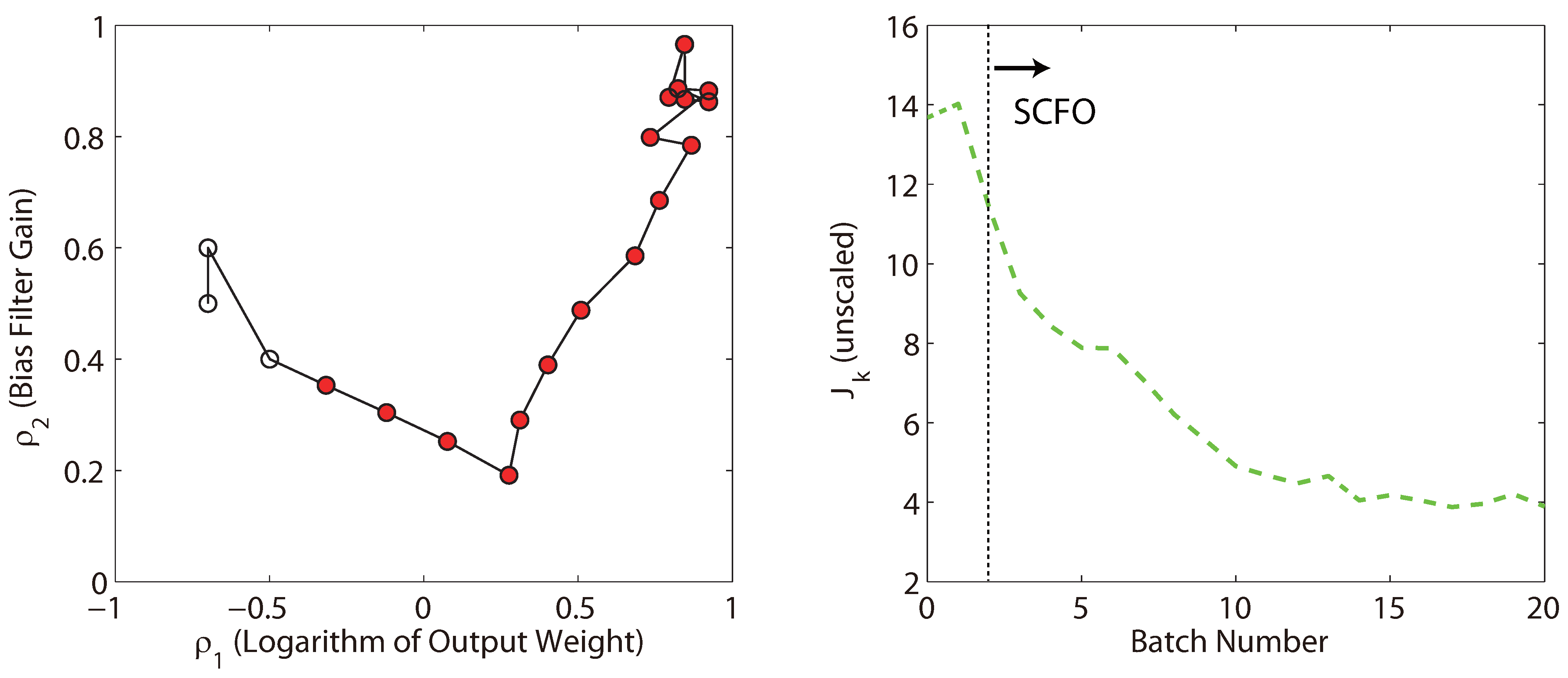

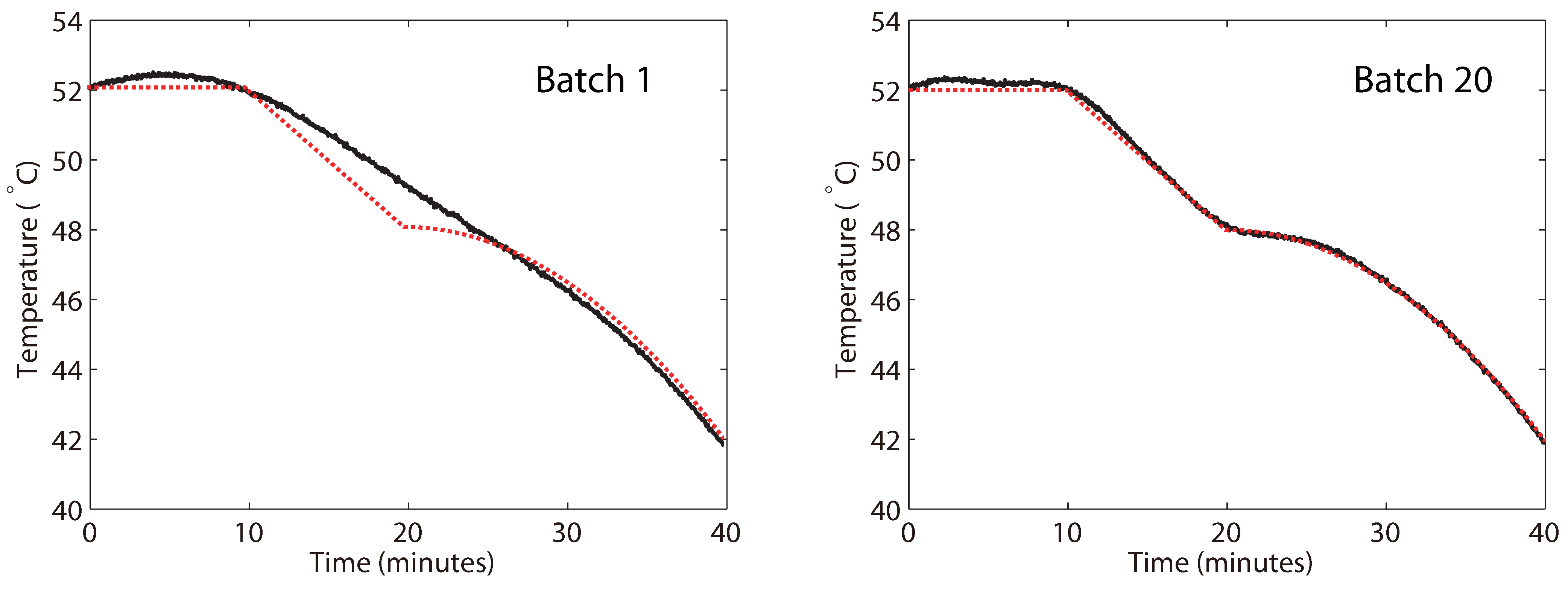

The map of the parameter adaptations and the values of the measured performance metric are given in Figure 5, with a visual comparison of the tracking before and after optimization given in Figure 6. It is seen that the majority of the improvement is obtained by about the tenth batch, with only minor improvements afterwards, and that monotonic improvement of the control performance is more or less observed.

Figure 5.

The parameter adaptation plot (left) and the measured performance metric (right) for the solution of Problem (18). Hollow circles on the left indicate batches that were carried out as part of the initialization (prior to applying the solver). Likewise, the dotted vertical line on the right shows the iteration past which the parameter adaptations were dictated by the SCFO solver.


Figure 6.

The visual improvement in the temperature profile tracking from Batch 1 to Batch 20. The dotted (red) lines denote the setpoint, while the solid (black) lines denote the actual measured temperature.

We also note that the solution obtained by the solver is very much in line with what an engineer would expect for a system with slow dynamics such as this one, in that one should both increase the output weight so as to have better tracking and set the bias update filter gain close to its maximal value (both of these actions could, however, have negative effects for faster, less stable systems). As such, the solution is not really surprising, but it is still encouraging that a method with absolutely no knowledge embedded into it has been able to find the same solution in a relatively low number of experiments. It is also interesting to note that the non-repeatability noise in the measured performance metric originally puts us on the wrong track: increasing the bias update filter gain does not improve the observed performance for Batch 1, though it probably should, and so the solver then spends the first 6 adaptations decreasing the bias filter gain in the belief that doing so should improve performance. However, it is able to recover by Batch 7 and to go in the right direction afterwards. This is likely due to the internal gradient estimation algorithm of the solver having considered all of the batches and having thereby decoupled the effects of the two parameters.

Problem (17) was then solved by similar means, though we used all of the data obtained previously to help “warm start” the solver. As the results were similar to what was obtained for the two-parameter case, we only give the measured performance metric values and the temperature profile at the final batch in Figure 7. From the parameter values at the final batch, we see that, while all four variables were clearly adapted and the solver chose to lower both the control and prediction horizons, any extra performance gains from doing this appear to have been marginal when compared to the simpler two-parameter problem. This is also in line with our intuition (i.e., that the output weight and bias filter gain are more important) and reminds us of a very important RTO concept: just because one has many variables that one can optimize over does not mean that one should, as RTO problems with more optimization variables are generally expected to converge more slowly and, as seen here, may not be worth the effort.

Figure 7.

The measured performance metric for the solution of Problem (17), together with the tracking obtained for the final batch.

4.2. Periodic Setpoint Tracking in a Torsional System

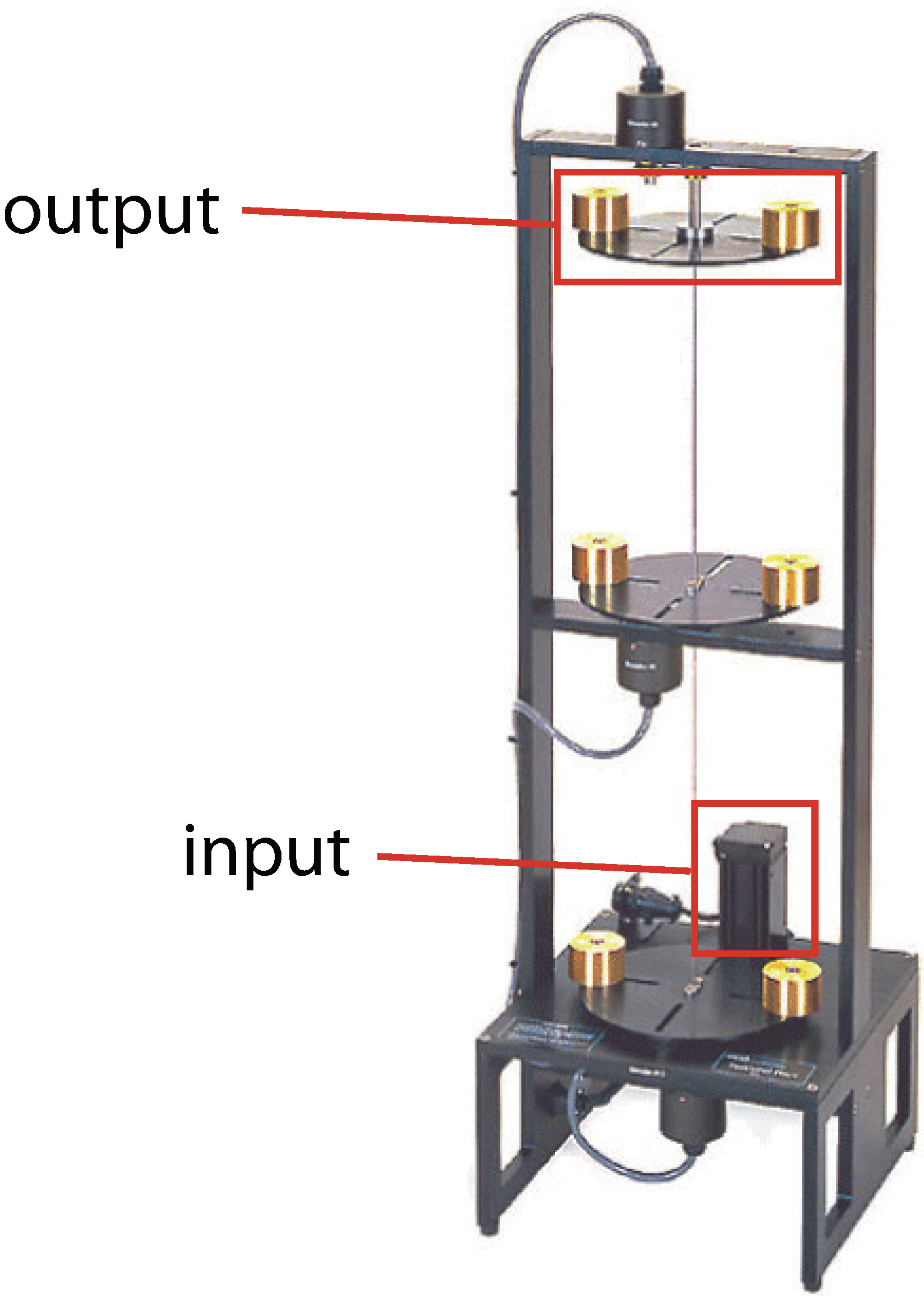

In this study, we consider the three-disk torsional system shown in Figure 8 (the technical details of which may be found in [34]). Here, the control input is defined as the voltage of the motor located near the bottom of the system, with the control output taken as the angular position of the top disk.

Figure 8.

The ECP 205 torsional system.

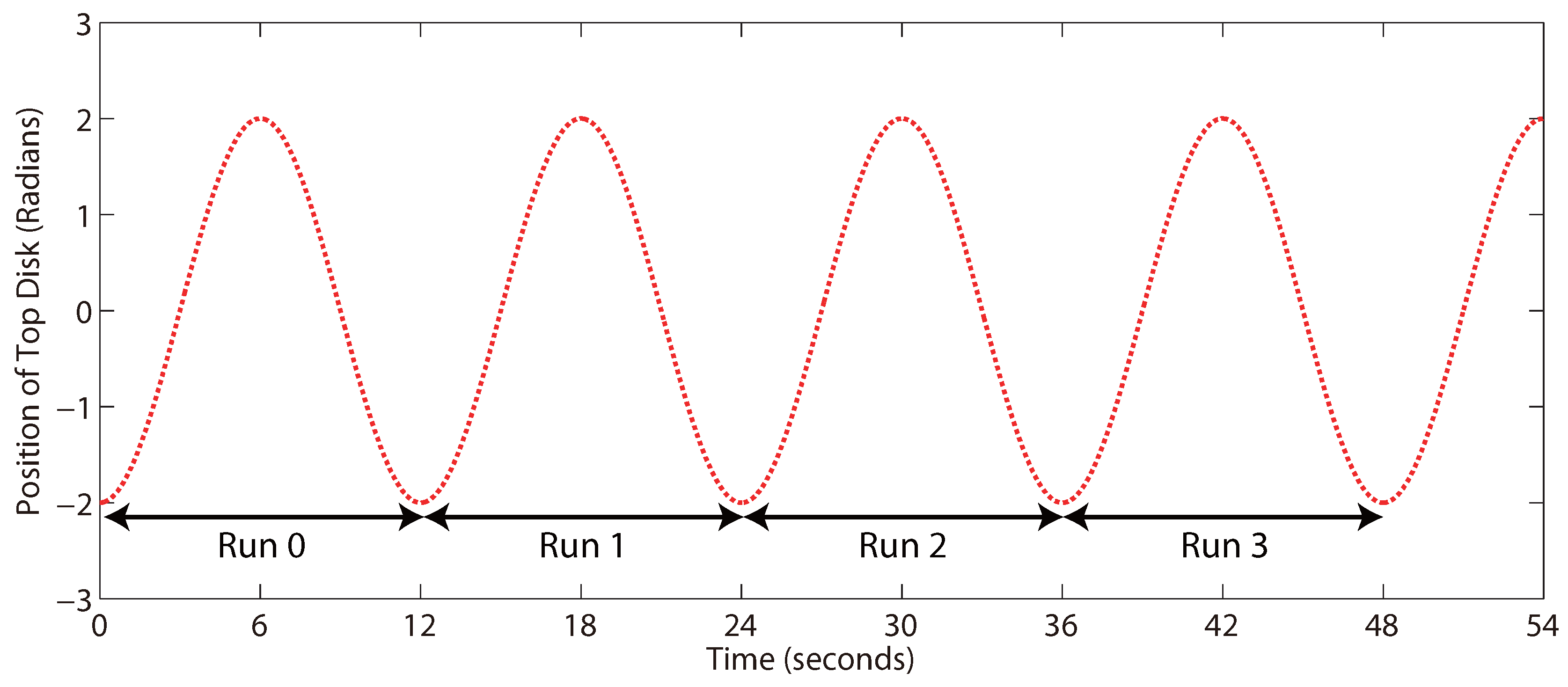

To define an ICT problem, we generalize the idea of a “run” or a “batch” as seen in the previous example and consider, instead, a “window” of a periodic sinusoidal trajectory defined by:

with t given in seconds. As the same trajectory is repeated every 12 s, we can essentially consider each 12-second window as a “run” (or a “batch”), as shown in Figure 9, and adapt the relevant controller parameters in the sampling time period between two consecutive windows.

Figure 9.

The generalization of “run-to-run” tuning to a system with a periodic setpoint trajectory. Only the setpoint is given here.

Not surprisingly, this presents a computational challenge: the sampling time for this system is only 60 ms, which is insufficient for the current version of the solver, as the solver needs at least a few seconds to provide a new choice of parameters. While a much simpler implementation that satisfies this real-time constraint has already been successfully carried out on the same system [20], we choose to apply the methodology presented in this paper by using a wait-and-synchronize approach. Here, the solver takes all of the available data and starts its computations, with no adaptation of the parameters being done until the solver’s computations are finished. Afterwards, the solver waits until these new parameters are applied and the results for the corresponding run are obtained, after which the new data are fed into the solver and the cycle restarts. The noted drawback of this approach is that we have to wait, on average, 2–3 runs (24–36 s) for an adaptation to take place, although the positive side of this is that the resulting data are generally less noisy due to the repeated experiments.
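To make the timing of the wait-and-synchronize approach concrete, the following sketch counts how many 12-second runs elapse per adaptation. It relies on a simplified timing model that is our own assumption rather than a description of the actual implementation: the solver starts computing at a window boundary, new parameters can only be applied at the next window boundary after the computation ends, and one further run is needed to observe their effect. The function name and the solver times used are hypothetical.

```python
import math

WINDOW_S = 12.0  # length of one periodic setpoint window ("run"), from the text


def runs_per_adaptation(solver_time_s: float, window_s: float = WINDOW_S) -> int:
    """Number of runs that elapse per parameter adaptation (simplified model)."""
    if solver_time_s <= 0:
        raise ValueError("solver_time_s must be positive")
    # Runs executed with the old parameters while the solver is computing;
    # new parameters are only applied at a window boundary.
    runs_waiting = math.ceil(solver_time_s / window_s)
    # One more run is needed to observe the performance of the new parameters.
    return runs_waiting + 1


for solver_time in (3.0, 10.0, 15.0):  # hypothetical solver computation times (s)
    runs = runs_per_adaptation(solver_time)
    print(f"solver time {solver_time:4.1f} s -> {runs} runs "
          f"({runs * WINDOW_S:.0f} s) per adaptation")
```

Under this model, a solver time of up to 12 s gives 2 runs (24 s) per adaptation and a solver time between 12 s and 24 s gives 3 runs (36 s), which agrees with the 2–3 runs reported above.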

The controller employed is the discrete fixed-order controller given in Equation (10), with the numerator and denominator coefficients being the (five) tuned parameters. The performance metric used is, again, a case of the general metric (5), but this time equal priority is given to tracking, controller aggressiveness, and the smoothness of the output trajectory, with

and

.

As the poles of the controller are also being adapted (due to the adaptation of the denominator coefficients), controller stability constraints, as already derived in Equations (11) and (12), are added to the ICT problem (with a tolerance of

):

and are recast into differentiable form (as the solver requires

to be differentiable):

where we have used

in the reformulation of the second set.

The adaptive limits of Equation (15) are used to constrain the individual parameters, thereby leading to the (adaptive) ICT-RTO problem:

where we scale the performance metric by

so as to make it vary on the magnitude of

.

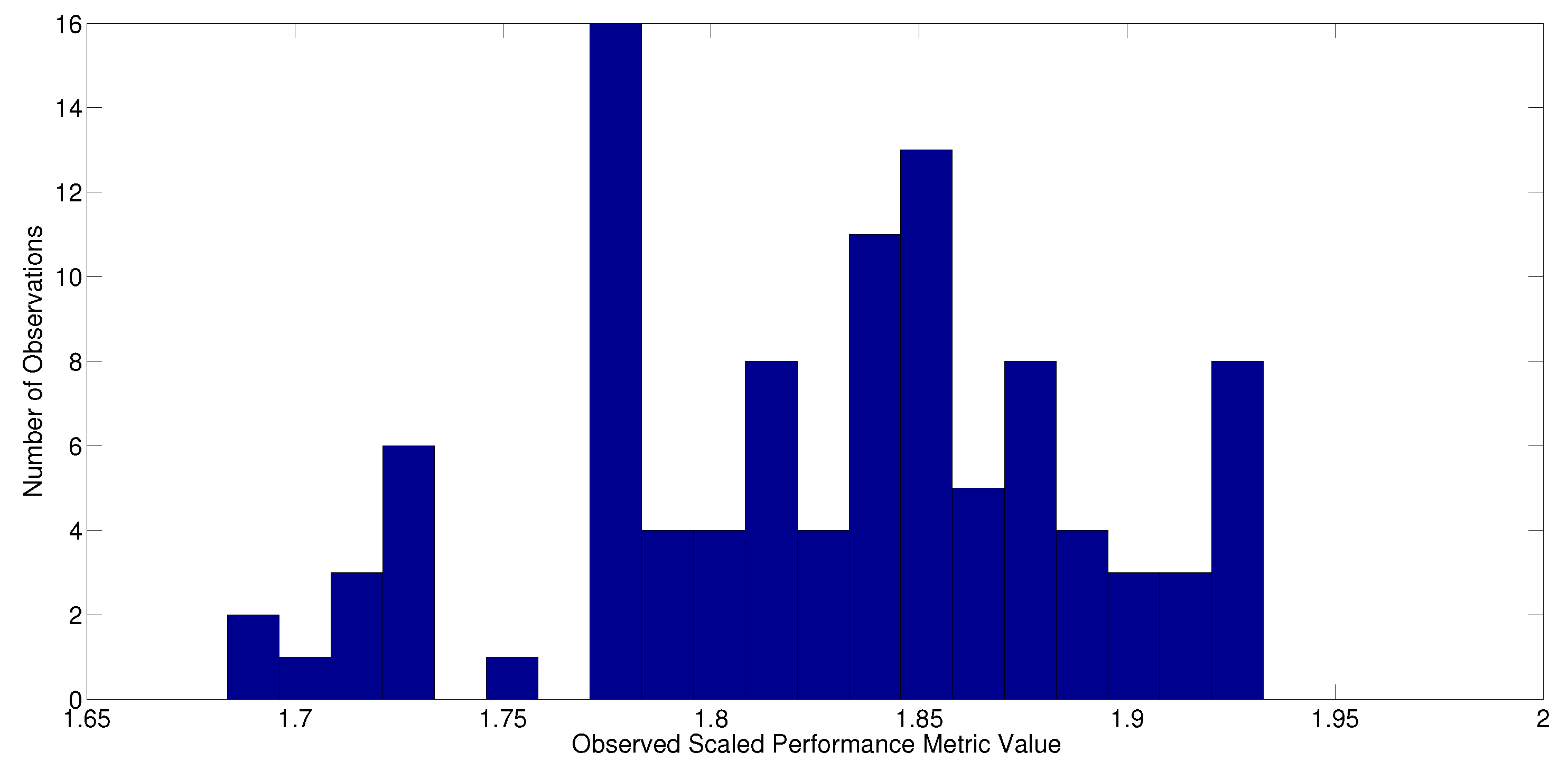

An initial parameter set of

was chosen and corresponds to an ad hoc initial design found by a mix of both simulation and hand tuning. To estimate the noise statistics of the non-repeatability noise term in the performance metric, the system was operated at

for 20 min, which produced a total of 100 performance metric measurements (see Figure 10). These were then offset by their mean to generate the estimated noise samples, with the latter being fed directly into the solver, which would then build an approximate distribution function for them.

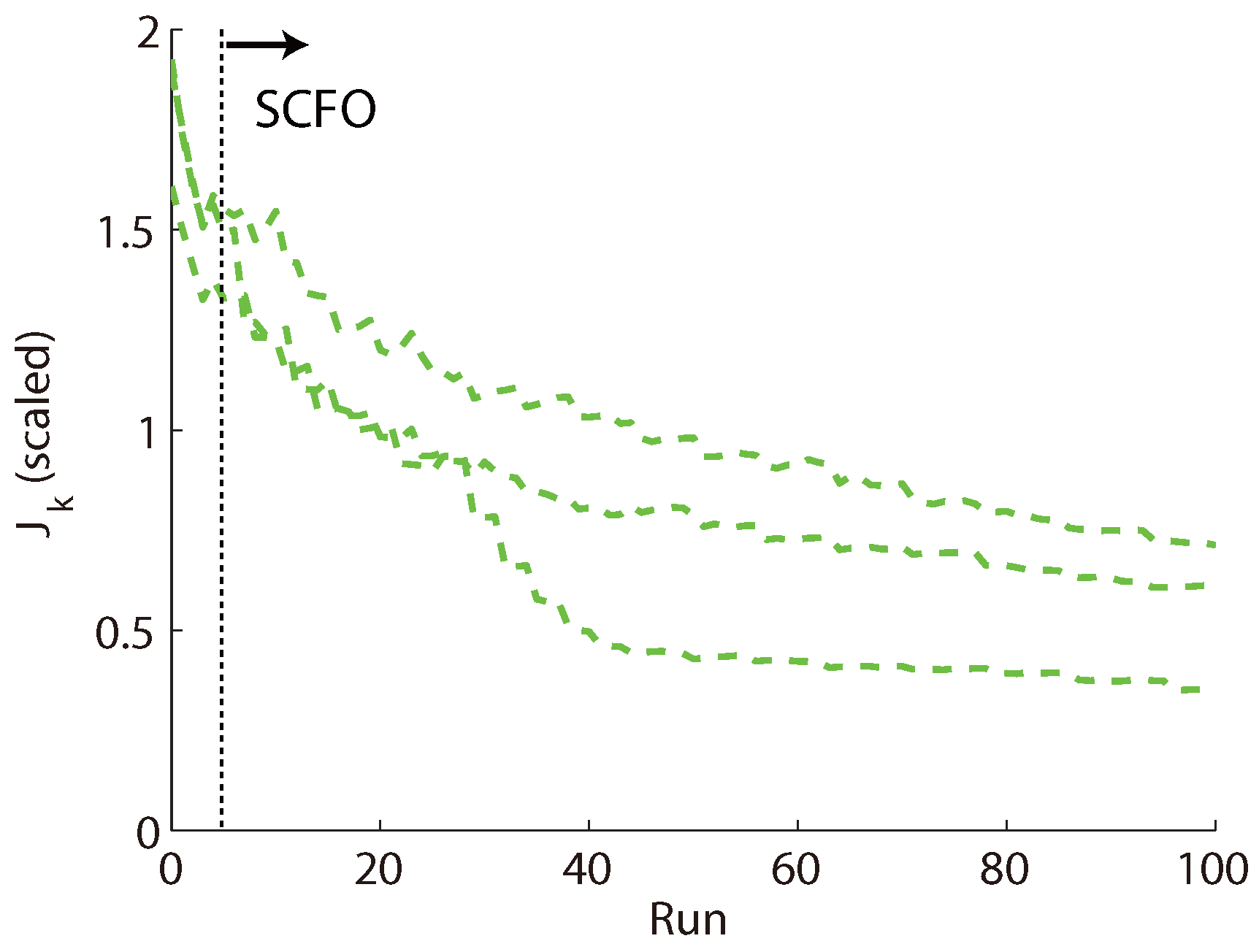

Problem (19) was solved a total of three times for 20 min of operation (100 runs), with the performance improvements for the three trials given in Figure 11 and the visual improvement for the middle case (“middle” with regard to the final performance metric value) given in Figure 12. We note the variability in convergence behavior for the three cases (both in terms of speed and the performance achieved after 100 runs), which was largely caused by the solver converging to different minima, but note as well that all three follow the same “reliable” trend, in that performance is always improved with a fairly consistent decrease in the metric value over the course of operation.

Figure 10.

A twenty-bin histogram representation of the observed scaled performance metric values for a hundred runs with the initial parameter set (Problem (19)).

Figure 11.

Performance improvement over 100 runs of operation for three different trials (dashed lines) of Problem (19).

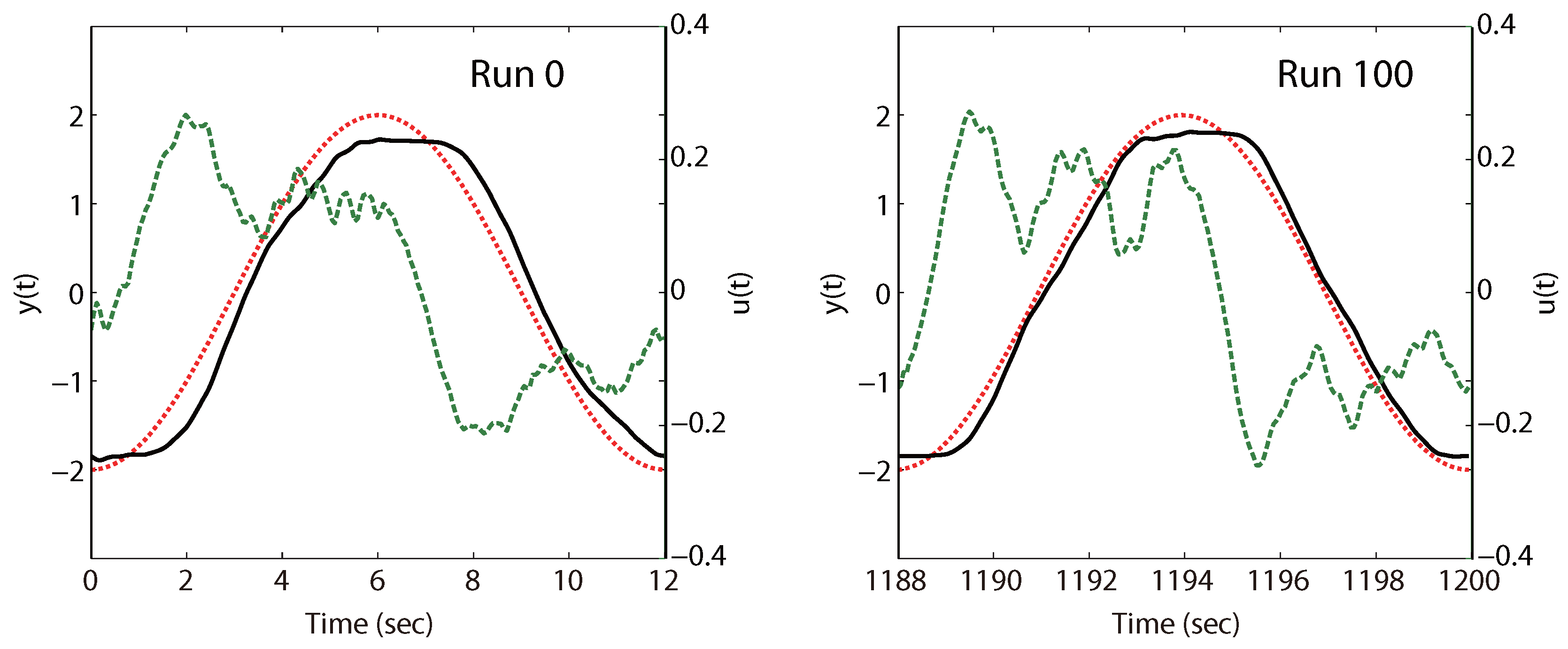

Figure 12.

Difference in control input and output profiles between the first and final runs of Problem (19), with the dashed green line used to denote the input (motor voltage) values.

4.3. PID Tuning for a Step Setpoint Change

We consider the problem previously examined in [13,18], where the parameters of a PID controller are to be tuned for the closed-loop system given by:

with the PID parameters

,

, and

being used to define

and

as:

and

being the plant, whose definition will be varied for study purposes. The case of a setpoint step change (

) is considered, with only the tracking error to be minimized over a “masked” operating length, where a mask of

is applied so as not to penalize for errors on the interval

, as proposed in [18].
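The effect of the mask on the tracking metric can be illustrated with a short sketch. It assumes that the metric is a plain sum of squared tracking errors over the samples lying strictly after the masked interval; the function name, the treatment of the interval boundary, and the sample values are hypothetical and serve only as an illustration.

```python
from typing import Sequence


def masked_squared_error(times: Sequence[float], setpoint: Sequence[float],
                         output: Sequence[float], mask_end: float) -> float:
    """Sum of squared tracking errors over samples with time > mask_end."""
    if not (len(times) == len(setpoint) == len(output)):
        raise ValueError("times, setpoint and output must have equal length")
    total = 0.0
    for t, r, y in zip(times, setpoint, output):
        # Errors inside the masked interval are not penalized.
        if t > mask_end:
            total += (r - y) ** 2
    return total


# Hypothetical step response sampled once per time unit (illustrative values only).
times = [0.0, 1.0, 2.0, 3.0, 4.0]
setpoint = [1.0] * 5
output = [0.0, 0.5, 0.9, 1.1, 1.0]

unmasked = masked_squared_error(times, setpoint, output, mask_end=-1.0)
masked = masked_squared_error(times, setpoint, output, mask_end=1.0)
print(f"unmasked: {unmasked:.2f}, masked: {masked:.2f}")
```

In this toy example the large initial errors, which no controller could remove immediately after the step, dominate the unmasked value, whereas the masked value reflects only the later part of the response.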

Since the controller gain,

, is expected to vary on a magnitude of about

, it does not need scaling and so we define

. For both

and

we assume the possibility of greater variations, on the magnitude of

(as has been suggested in both [13,18]), and thus define the scaled second and third parameters as

and

. Since we do not know a priori what

and

for a PID controller should be, but do realize that both

and

should be positive, the adaptive definition of the lower and upper limits with the positivity constraints respected is chosen to yield the ICT problem in RTO form:

where we scale the cost function by dividing by the performance metric value for the original parameter set.

As done in [13], the original parameter set is chosen as the set found by Ziegler-Nichols tuning. The following three studies are considered here:

So as to study the effect of non-repeatability noise, each observed performance metric value is corrupted with an additive error whose standard deviation is chosen as 5% of the original performance metric value (assumed known for solver configuration). Noiseless scenarios were simulated as well.
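The noise corruption described here can be sketched as follows. The sketch assumes zero-mean Gaussian noise, which is our assumption about the distribution; the function name and the "true" metric value of 0.4 are likewise hypothetical.

```python
import random


def observe_with_noise(true_metric: float, original_metric: float,
                       rng: random.Random, relative_std: float = 0.05) -> float:
    """Return the true metric corrupted by additive zero-mean Gaussian noise."""
    # Standard deviation is 5% of the metric value at the original parameter set.
    noise_std = relative_std * original_metric
    return true_metric + rng.gauss(0.0, noise_std)


rng = random.Random(0)  # fixed seed so that the sketch is repeatable
original_metric = 1.0   # the scaled cost equals 1 at the original parameter set
observations = [observe_with_noise(0.4, original_metric, rng) for _ in range(5)]
print(observations)
```

Because the noise level is fixed relative to the original metric value, its relative impact grows as the tuning progresses and the true metric decreases.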

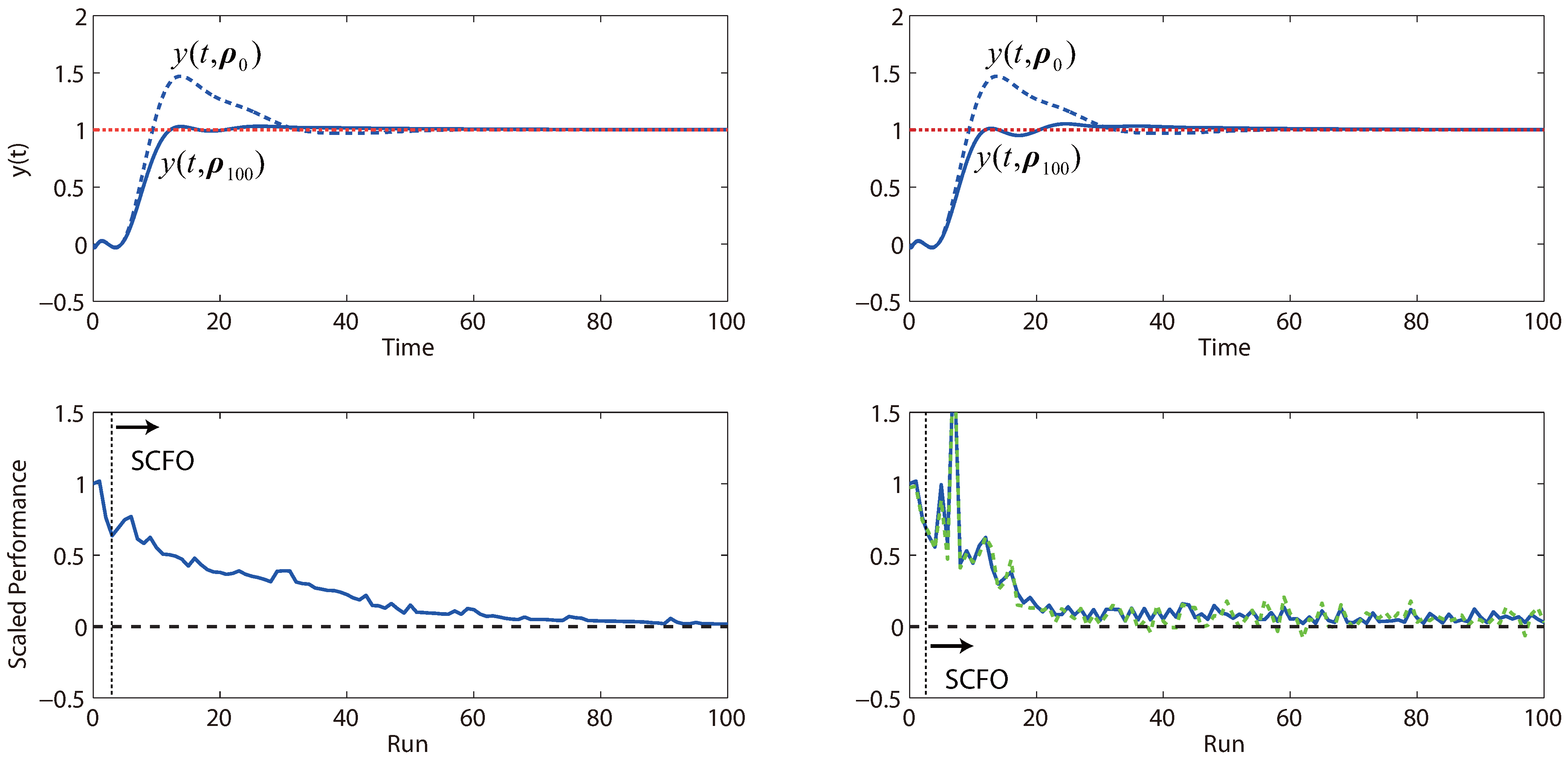

Figure 13.

Performance obtained by iterative tuning for both the noiseless (left) and noisy (right) cases of Study 1 of Problem (20), with the solid blue line used to denote the “true” performance of the closed-loop system and the green dashed line used to denote what is actually observed (and provided to the solver). In both cases, the SCFO solver brings the closed-loop performance metric value close to its global minimum of zero (marked by the black dashed line in the lower plots).

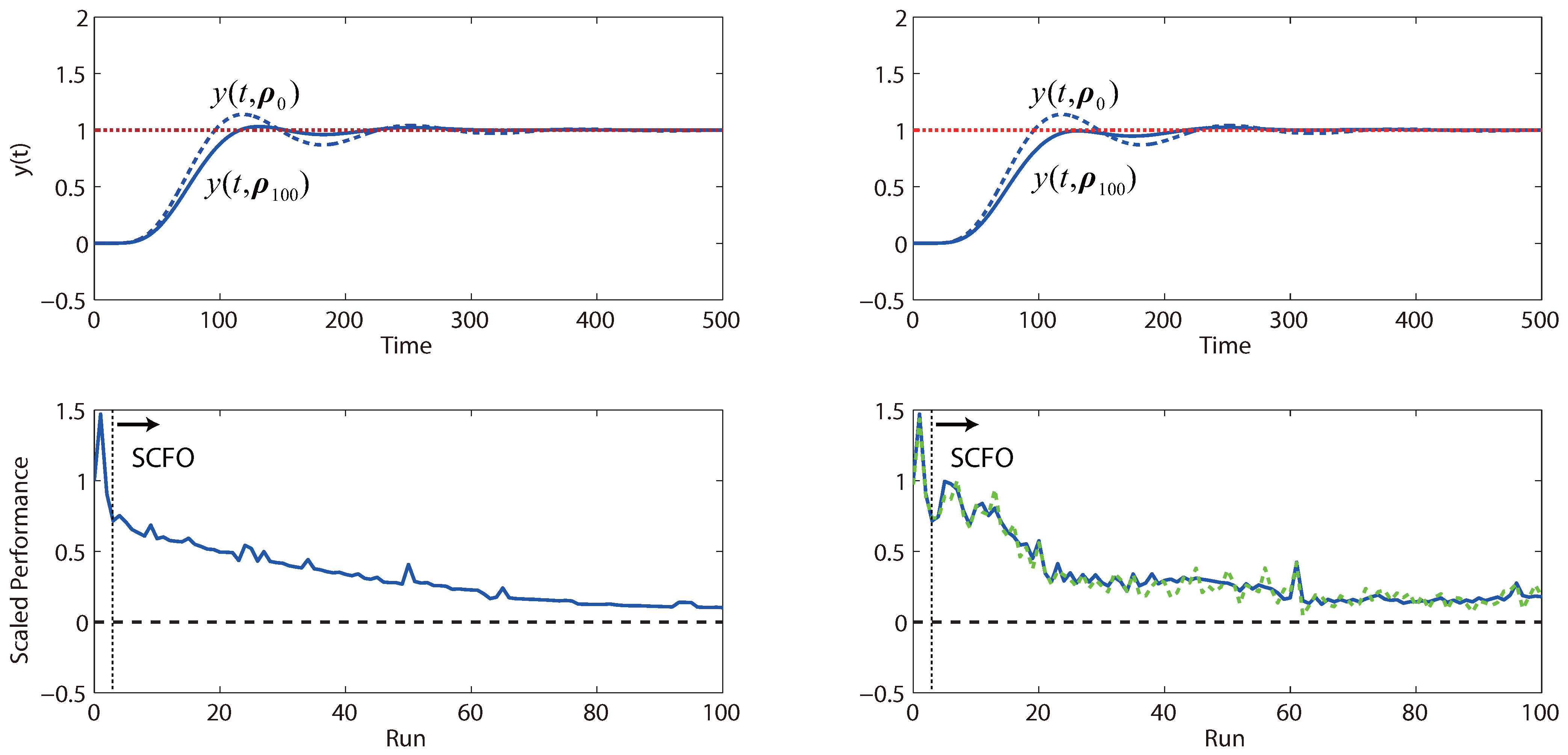

Figure 14.

Performance obtained by iterative tuning for Study 2 of Problem (20).

Figure 15.

Performance obtained by iterative tuning for Study 3 of Problem (20).

The results for the three studies are provided in Figure 13, Figure 14 and Figure 15. On the whole, we see that the solver reliably optimizes control performance in both the noiseless and noisy scenarios, even though we note that the rate of improvement can vary from problem to problem. For the noisy cases, we generally see more “bumps” in the convergence trajectory, which should not be surprising given (a) the added difficulty for the solver in estimating local derivatives and (b) the reduced conservatism in the estimation of the quadratic bound constants M, for which the safety factor η in Equation (16) is generally augmented less frequently when noise is present. However, for the latter point, we see that there is an upside with regard to convergence speed. Because the values of M tend to be less conservative in the presence of noise, the algorithm tends to take larger steps and progresses more quickly towards the optimum, as is witnessed in both Figure 13 and Figure 15. We do note the occasional danger of performance worsening due to tuning, but this is almost always restricted to the earlier runs when the solver is relatively “data-starved”.

4.4. Tuning a System of PI Controllers for Setpoint Tracking and Disturbance Rejection

Here, we consider the following five-input, five-output dynamical system:

While the user cannot be assumed to know the plant (21), we will assume that they have been able to properly decouple the system with the input-output pairings of

(as this is evidently the superior choice if one considers the relative gains). A system of five PI controllers is used for the pairings:

which, of course, is not perfect, since the decoupling is not either, and so what one controller does will inevitably affect the others.

The ICT problem that we define for this system consists of starting with all and defining the setpoints of , and as 1 (which makes this a tracking problem with respect to these outputs) and the setpoints of and as 0 (which makes it a disturbance rejection problem with respect to these two outputs). The total sum of squared tracking errors for all of the outputs is used as the performance metric, with the interval of being considered in the metric computation (a “mask” of 2 time units being employed).

The first five tuning parameters are simply defined as the controller gains, with . As in the previous example, we use a scaled version of the integral times to define the rest, with . Once again, as we do not know a priori what lower and upper limits should be set on these parameters (save the positivity of the ), adaptive inputs with the positivity limitation (as shown in the previous case study) are used.

Furthermore, we suppose the existence of a safety limitation in the form of a maximal value that

is allowed to take, with the constraint

to be met at all times. Using the reformulation shown in Section 2.2, we may proceed to state this problem in RTO form as:

We note that this problem is a bit more challenging than the ones considered in the previous three studies due to the increased number of tuning parameters, and point out that, were the problem perfectly decoupled, we would be able to solve it as five two-parameter RTO problems in parallel. However, seeing as all of the parameters are intertwined, we have no choice but to optimize over all ten simultaneously—the expected price to pay being a slower rate of performance improvement obtained by the solver. Alternate strategies that are based on additional engineering knowledge, such as optimizing only the parameters of specific controllers or optimizing only the controller gains, could of course be proposed and are highly recommended.

As a somewhat arbitrary design, the initial set is chosen as . Like with the previous example, an additive measurement noise of is added to corrupt the performance metric value that is observed for a given choice of tuning parameters. An additive measurement noise of is added to corrupt the observed values of . Both sets of statistics are assumed to be known for the purposes of SCFO solver configuration. As before, the noiseless scenarios are also considered.

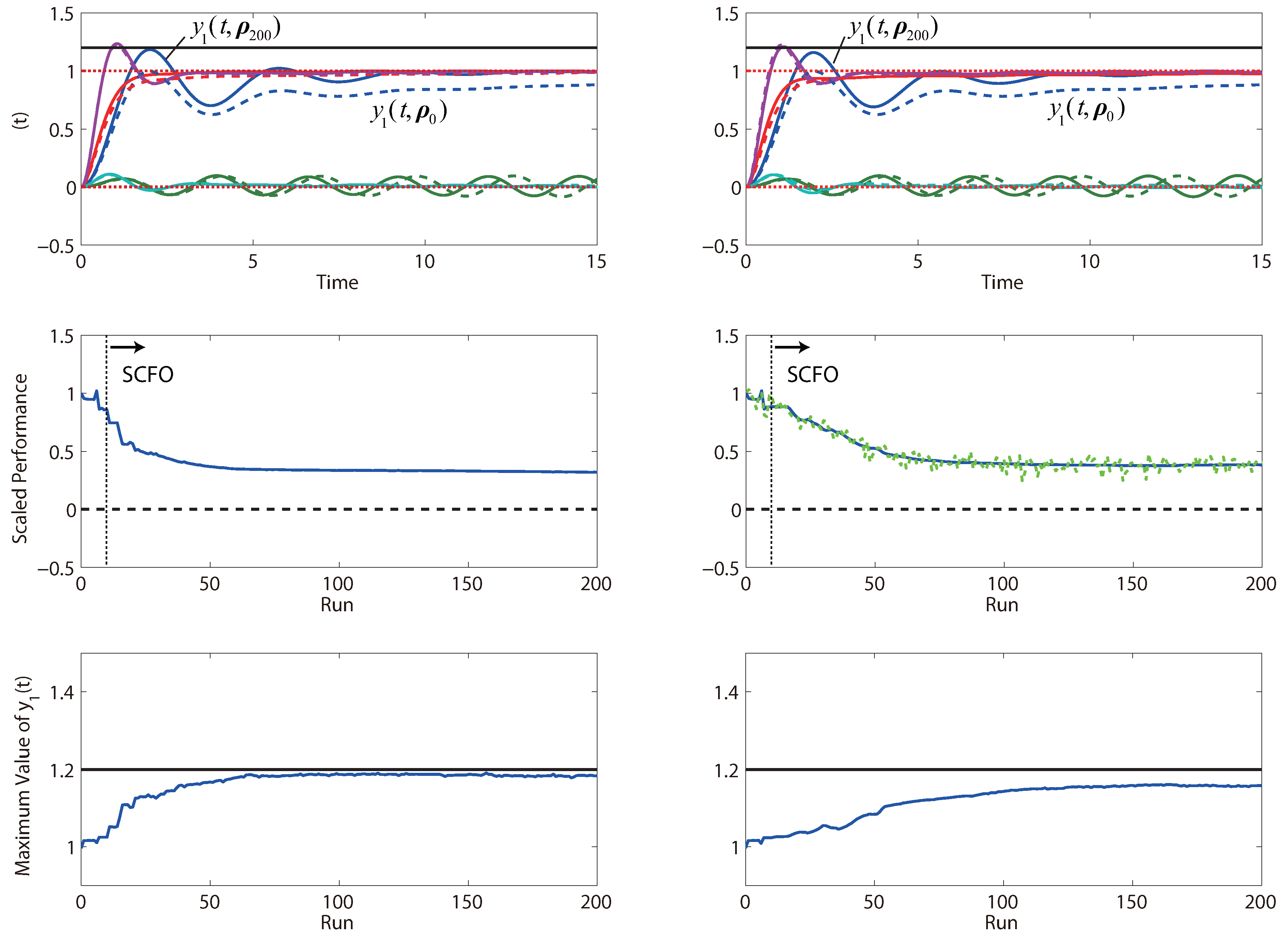

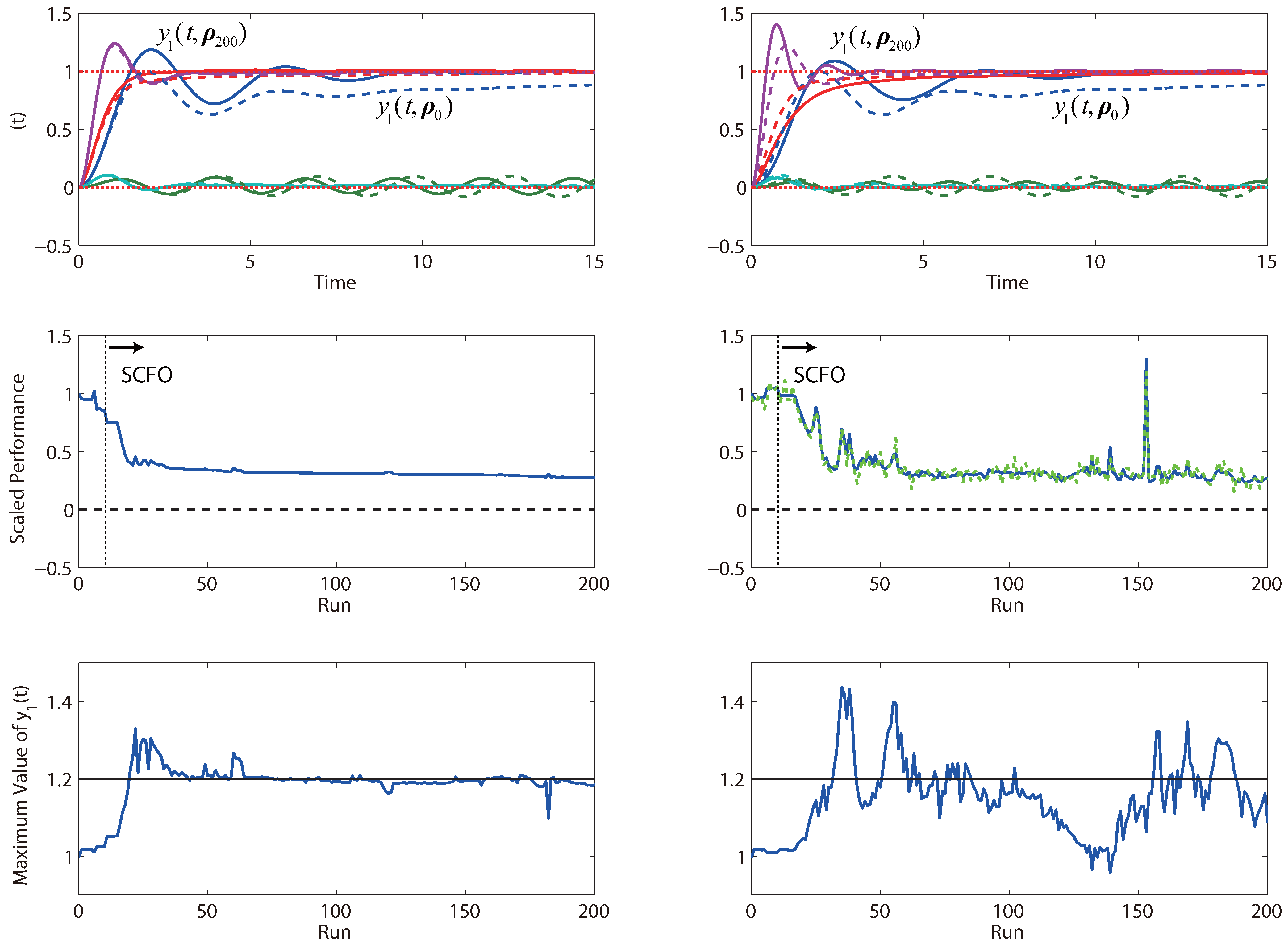

We present the results in Figure 16, which show that the solver is able to obtain significant performance improvements within 50 iterations for both the noiseless and noisy cases without once violating the output constraint on

. In this case, we see that the noise has the effect of slowing down convergence, which may be explained by the fact that the solver must take even more cautious steps so as not to violate the output constraint. Additionally, the performance that is observed after 200 iterations is a bit worse for the noisy case, which may be seen as being due to the back-off from the output constraint being larger (to account for the noise).

Figure 16.

Performance obtained by iterative tuning for the system of PI controllers in Problem (22); the noiseless case is given on the left and the noisy case on the right. For the output profiles, we note that the initial profiles are given as dashed lines, with the final profiles given by solid lines of the same color.

To test the effect of this constraint and to see if it is even necessary, we also run a simulation where the constraint is lifted from the problem statement. The results for this study are given in Figure 17 and show that not having the constraint in place certainly leads to runs where it is violated. This is not surprising, given that a lot of the performance improvement is obtained by tracking the setpoint of

faster, which is easier to do once there is no constraint on its overshoot. It is also seen that the performance obtained after 200 iterations is generally better than what would be obtained with the constraint. This is, again, not surprising, as removing a limiting constraint should allow for greater performance gains. We do note that the noisy case is more bumpy without the constraint, which is expected, as there is less to limit the adaptation steps and more “daring” adaptations become possible. While some of the bumps may be quite undesirable (particularly the one noted just after the 150th run), the algorithm remains, on the whole, reliable, as it keeps the performance metric at low values for the majority of the runs despite significant noise corruption.

Figure 17.

Performance obtained by iterative tuning for the system of PI controllers in Problem (22) (without an output constraint).
