1. Introduction

Modern process industries rely on dependable measurements from instrumentation to achieve efficient, reliable and safe operation. To this end, the concept of the Internet of Things is gaining wider acceptance in the industry. This is resulting in facilities that are massively instrumented with intelligent sensors, actuators, and other smart devices gathering real-time process knowledge at a high frequency. However, in order to take full advantage of such abundant data streams, we need to extract useful information from them, as outlined in the vision for future smart manufacturing platforms [1]. A typical application of processing large data streams is flare monitoring. Industrial flares are safety devices designed to burn waste gas in a safe and controlled manner [2], and they are commonly used in petroleum refining. Flares can be especially useful during non-routine situations such as power outages, emergency conditions or plant maintenance activities. Conventionally, flare monitoring relies on thermal imaging cameras to recognize the difference in heat signature between a flare stack flame and the surrounding background [3]. In this paper, we will demonstrate that plant-wide historical data can also be used to monitor flares effectively.

Raw data from field instrumentation stored in historians are difficult to use directly for modeling until they are cleaned and processed. One of the most important aspects of data cleaning and pre-processing is to remove erroneous values (referred to as outliers in this paper) that are inconsistent with the expected behavior of the measured signals during that timeframe. In chemical processes, outliers can be generated by sensor malfunction, erroneous signal processing by the control system, or human-related errors such as inappropriate specification of data collection strategies. Outliers can negatively affect common statistical analyses such as the t-test and ANOVA by violating the corresponding distribution assumptions, masking the existence of anomalies, and causing swamping effects. Outliers can further negatively impact downstream data mining and processing procedures such as system identification and parameter estimation [4].

Numerous methods have been proposed for outlier detection, and good reviews can be found in the work of experts from different fields such as computer science [5] and chemometrics [6,7]. Generally, based on whether a process model is known a priori, these methods can be categorized as model-based or data-driven, and the latter can be further divided into four subcategories. First, from the perspective of regression theory, removing outliers from data sets is equivalent to estimating the underlying model of the “meaningful” values. Several regression-based estimators that are robust in the presence of outliers have been proposed, including L-estimators [8], R-estimators [9], M-estimators [10], S-estimators [11], etc. Although these estimators are simple to implement and take advantage of relations between variables, they do not work well when variables are independent, and the iterative procedure of deleting and refitting significantly increases the computational cost.

Second, if we focus on estimating data location and scale robustly, we can apply several proximity-based methods, including the generalized extreme studentized deviate (GESD) method [12,13], the Hampel identifier [14], the quartile-based identifier and boxplots [15], or the minimum covariance determinant estimator [16]. It is important to point out that a critical assumption of proximity-based methods is that the data follow a well-behaved distribution. However, in the majority of cases, such an assumption does not hold for chemical process data because of the transient dynamics of the measured signals. As a result, discriminating outliers from normal process dynamics poses a challenge for end-users of process data. In the current literature, a moving window technique is often used to account for process dynamics by performing statistical abnormality detection within a smaller local window [4]. However, such an approach does not always give satisfactory results when the time scales of variations in the datasets are non-uniform (fast and slow dynamics occurring in the same dataset).
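
The moving window idea can be illustrated with the Hampel identifier mentioned above. The following is only a minimal sketch under stated assumptions, not the procedure used in [4]: the function name `hampel_outliers`, the window half-width of 5 samples, the threshold of three scaled median absolute deviations (MADs), and the ramp test signal are all hypothetical choices made for this example.

```python
from statistics import median

def hampel_outliers(values, half_window=5, n_sigmas=3.0):
    """Return indices flagged by a moving-window Hampel identifier."""
    scale = 1.4826  # makes the MAD a consistent estimate of sigma for Gaussian data
    flagged = []
    for i, x in enumerate(values):
        # Local window centered on sample i, truncated at the signal edges
        lo = max(0, i - half_window)
        hi = min(len(values), i + half_window + 1)
        window = values[lo:hi]
        center = median(window)
        mad = median([abs(v - center) for v in window])
        if abs(x - center) > n_sigmas * scale * mad:
            flagged.append(i)
    return flagged

# Hypothetical signal: a slow ramp (normal dynamics) with one spike at index 20
signal = [0.1 * i for i in range(50)]
signal[20] = 10.0
flagged = hampel_outliers(signal)
print(flagged)  # prints [20]
```

Because the median and MAD are computed locally, the ramp itself is not flagged. The fixed `half_window` is also where the limitation noted above appears: a single window length cannot suit fast and slow dynamics at the same time.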

Third, machine learning methods have recently become increasingly popular in outlier detection. Typical examples include k-means clustering [17], k-nearest neighbor (kNN) [18], support vector machine (SVM) [19], principal component analysis (PCA) [20,21], and isolation forest [22]. The general advantage of machine learning algorithms lies in their computational efficiency and their capability to explore interactions between variables. In this paper, we use isolation forest as the reference method. It isolates abnormal observations by randomly selecting a split value between the maximum and minimum values of a feature, partitioning the data recursively, and constructing a series of trees. The path length from the root node to the leaf node containing an observation, averaged over the trees in the forest, is used to measure the normality of that observation; outliers usually have shorter paths.
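
The path-length idea can be sketched in a few lines. This is a simplified univariate illustration and not the full algorithm of [22]: it omits subsampling and the normalized anomaly score, and the function names `isolation_depth` and `average_depth`, the number of trees, and the synthetic readings are assumptions made for this example. Tracing one point through successive random splits is equivalent to following it down one randomly built tree.

```python
import random

def isolation_depth(x, values, rng, max_depth=64):
    """Number of random splits needed to isolate x within values."""
    subset = values
    depth = 0
    while len(subset) > 1 and depth < max_depth:
        lo, hi = min(subset), max(subset)
        if lo == hi:  # identical values cannot be separated further
            break
        split = rng.uniform(lo, hi)  # random value between the minimum and maximum
        # Keep only the side of the partition that contains x
        if x < split:
            subset = [v for v in subset if v < split]
        else:
            subset = [v for v in subset if v >= split]
        depth += 1
    return depth

def average_depth(x, values, n_trees=200, seed=0):
    """Average isolation depth of x over n_trees random partitionings."""
    rng = random.Random(seed)
    return sum(isolation_depth(x, values, rng) for _ in range(n_trees)) / n_trees

# Hypothetical data: 200 Gaussian readings plus one injected outlier
data_rng = random.Random(42)
readings = [data_rng.gauss(0.0, 1.0) for _ in range(200)]
readings.append(12.0)
typical = sorted(readings)[len(readings) // 2]  # a point in the dense region

outlier_depth = average_depth(12.0, readings)
typical_depth = average_depth(typical, readings)
print(outlier_depth, typical_depth)
```

The injected value is separated after far fewer splits than a point in the dense center of the data, which is the property the isolation forest uses to rank observations.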

The final class of outlier detection methods is time series based including the time series Kalman filter (TSKF) [

23]. The TSKF approximates normal process variations in the signal by univariate time-series models such as an autoregressive model (AR) and then identifies observations that are inconsistent with the expected time-series model behavior. The advantages of the TSKF method include robustness against ill-conditioned auto-covariance matrices (by using Burg’s model estimation method [

24]) while maintaining the original dataset integrity. Based on case studies in [

23], the TSKF method obtains superior performance with stationary time series; however, it does not perform well on non-stationary process data with distribution shifts like product grade transitions. In addition, considering the fact that continuous operations of many chemical plants, especially petrochemical ones, are scattered along different operating regions with frequent mean shifts for process variables [

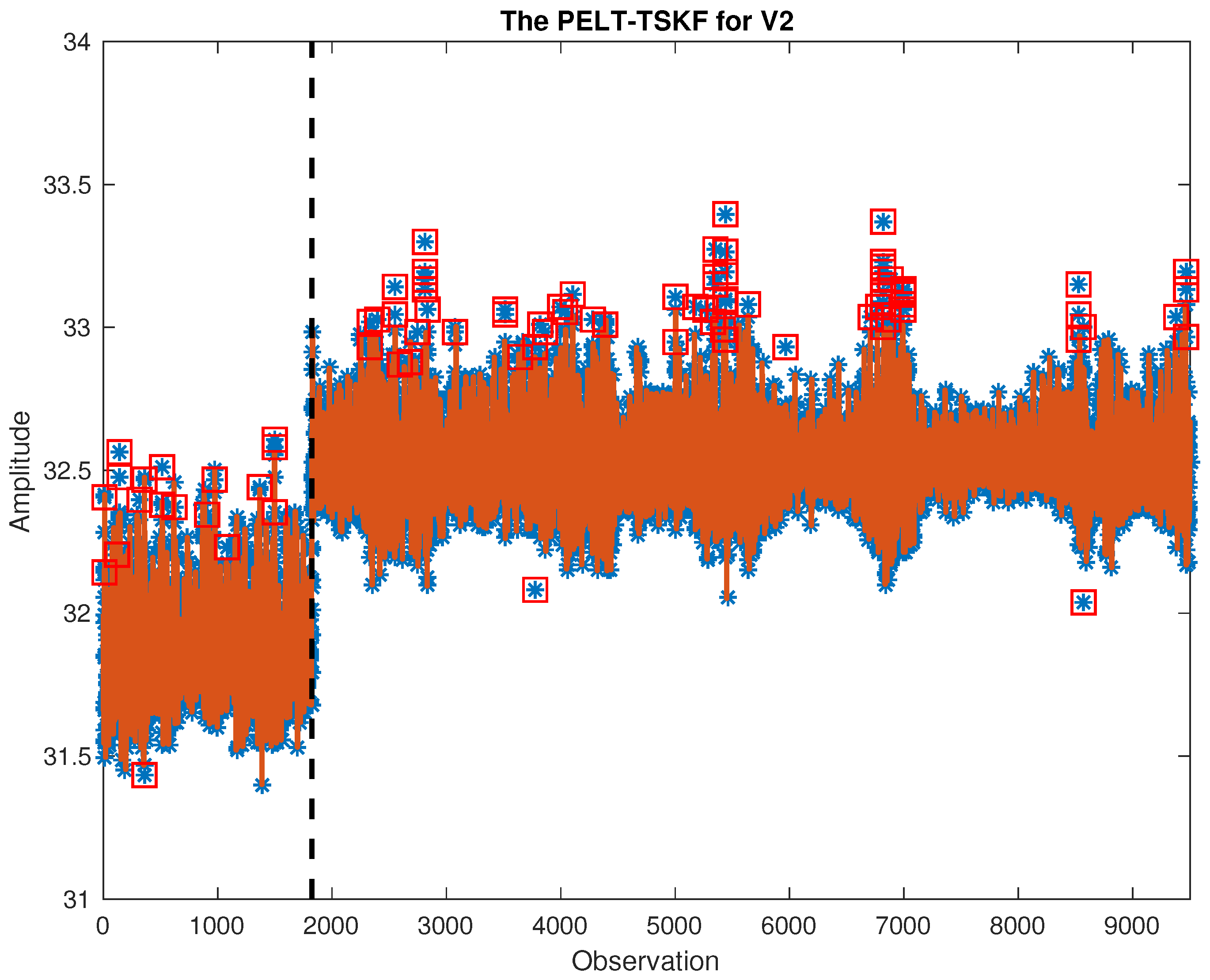

25], detecting outliers in such situations becomes quite challenging because data no longer follow a single well-behaved distribution. As a result, we have to find ways to help us quickly pinpoint the mean shift locations of each variable to improve the overall performance of univariate outlier detection within each operating region bracketed by changepoints.
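
The benefit of detecting outliers within each operating region can be seen in a small sketch. Two assumptions are made loudly here: the changepoint location is taken as known, and a simple median/MAD rule is swapped in for the TSKF purely for illustration. The function names `mad_outliers` and `segmented_outliers` and the synthetic series are hypothetical.

```python
from statistics import median

def mad_outliers(values, n_sigmas=3.0):
    """Indices whose distance from the median exceeds n_sigmas scaled MADs."""
    center = median(values)
    mad = median([abs(v - center) for v in values])
    limit = n_sigmas * 1.4826 * mad
    return [i for i, v in enumerate(values) if abs(v - center) > limit]

def segmented_outliers(values, changepoints):
    """Apply mad_outliers separately inside each region bracketed by changepoints."""
    bounds = [0] + list(changepoints) + [len(values)]
    flagged = []
    for start, end in zip(bounds[:-1], bounds[1:]):
        flagged += [start + i for i in mad_outliers(values[start:end])]
    return flagged

# Hypothetical series: two operating regions (means 0 and 10) with small noise,
# and one erroneous value of 3.0 inside the first region
noise = [0.1 if i % 2 == 0 else -0.1 for i in range(30)]
series = [0.0 + e for e in noise] + [10.0 + e for e in noise]
series[10] = 3.0

global_flags = mad_outliers(series)                             # whole series at once
regional_flags = segmented_outliers(series, changepoints=[30])  # per operating region
print(global_flags, regional_flags)  # prints [] [10]
```

Applied to the whole series, the mean shift inflates the scale estimate and the erroneous value goes undetected; applied per region, the same rule flags it.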

Generally speaking, changepoint analysis focuses on identifying break points within a dataset where the statistical properties such as mean or variance show dramatic differences from previous periods [

26]. Assuming we have a sequence of time series data,

, and the data set includes

m changepoints at

, where

. We define

and

, and assume that

are ordered, i.e.,

if and only if

. As a result, the data

are divided into

segments with the

ith segment containing

.

Changepoints can be identified by minimizing:

where

ℓ represents the cost function for a segment and

is a penalty to reduce over-fitting. The cost function is usually the negative log-likelihood given the density function

, as shown in Equation (

2):

where

can be estimated by maximum likelihood given data within a single stage. The penalty is commonly chosen to be proportional to the number of changepoints, for example,

, and it grows with an increasing number of change points. For data

, if we use

to represent the optimal solution of Equation (

1), and define

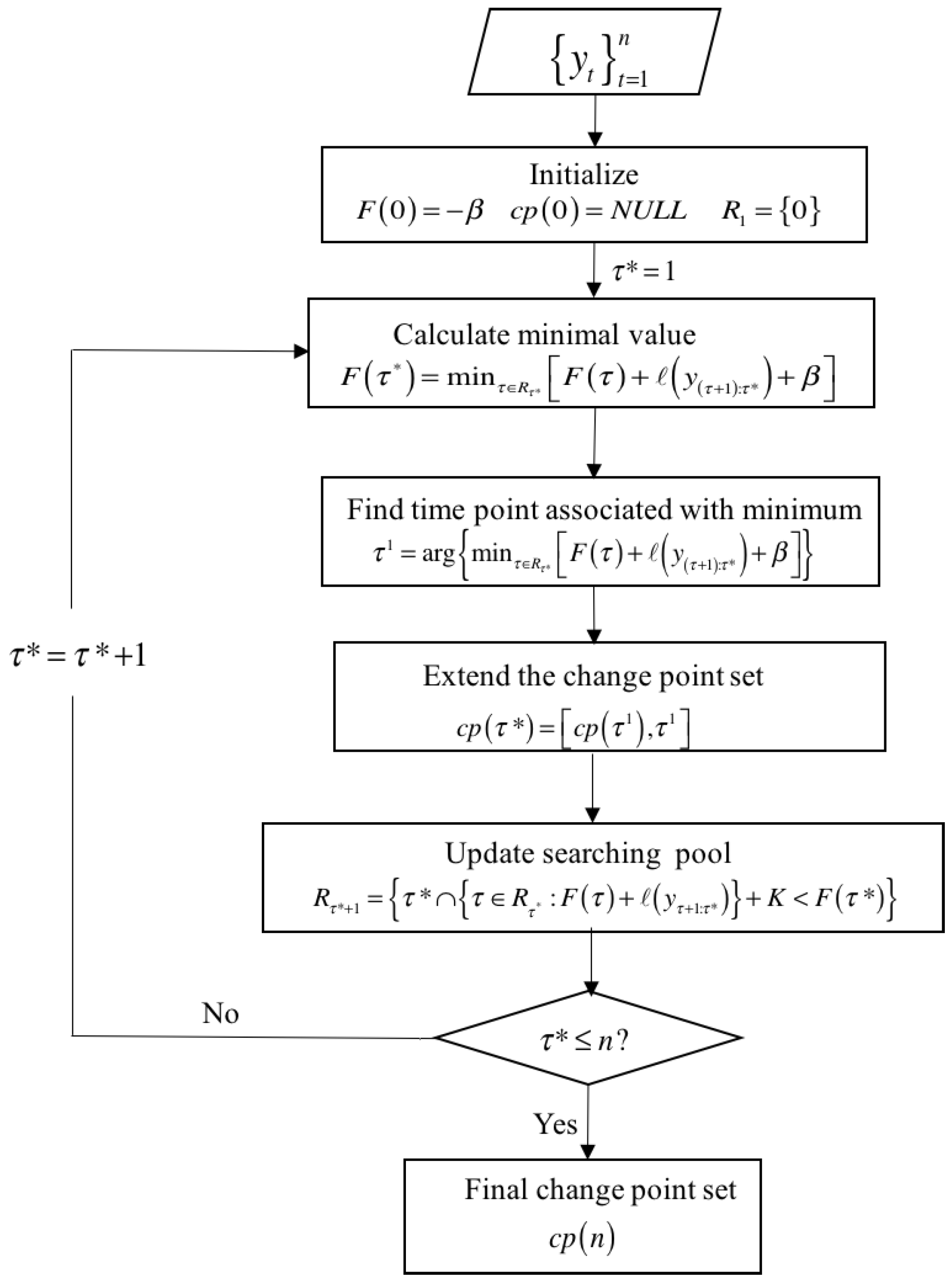

to be the set of possible changepoints, the following recursion from optimal partitioning (OP) [

27] can be used to give the minimal cost for data

with

:

where

. The recursion can be solved in turn for

with a linear cost in

s; as a result, the overall computational cost of finding

using an optimal partitioning (OP) approach is

.
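
The OP recursion can be sketched as follows. This is a minimal illustration under stated assumptions rather than the implementation of [27]: the segment cost is taken to be the sum of squared deviations from the segment mean (proportional to the Gaussian negative log-likelihood for a change in mean with fixed variance, constants dropped), and the function name `optimal_partitioning`, the penalty value, and the test signal are hypothetical.

```python
def optimal_partitioning(y, beta):
    """Return (changepoints, minimal penalized cost) for a change-in-mean model."""
    n = len(y)
    # Prefix sums of y and y^2 give any segment cost in O(1)
    s1, s2 = [0.0], [0.0]
    for v in y:
        s1.append(s1[-1] + v)
        s2.append(s2[-1] + v * v)

    def cost(s, t):
        """Sum of squared deviations from the mean of y[s:t], i.e. y_(s+1):t."""
        total = s1[t] - s1[s]
        return (s2[t] - s2[s]) - total * total / (t - s)

    F = [-beta] + [0.0] * n   # F(0) = -beta
    prev = [0] * (n + 1)      # prev[t] = last changepoint before t in the optimum
    for t in range(1, n + 1):
        # Recursion: minimize F(s) + cost of the final segment + penalty over all s < t
        F[t], prev[t] = min((F[s] + cost(s, t) + beta, s) for s in range(t))

    # Backtrack through prev to recover the changepoint locations
    changepoints = []
    t = n
    while prev[t] > 0:
        changepoints.append(prev[t])
        t = prev[t]
    return sorted(changepoints), F[n]

# Hypothetical signal: three operating levels of 20 samples each, small alternating noise
y = [level + (0.1 if i % 2 == 0 else -0.1)
     for level in (0.0, 5.0, 1.0) for i in range(20)]
changepoints, total_cost = optimal_partitioning(y, beta=3.0)
print(changepoints)  # prints [20, 40]
```

The inner `min` scans every earlier index for each `t`, which is where the quadratic cost comes from.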

Other widely used changepoint search methods include binary segmentation (BS) [28] and the segment neighborhood (SN) method [29], with $O(n \log n)$ and $O(Qn^2)$ computational costs ($Q$ is the maximum number of changepoints), respectively. Although the BS method is computationally efficient, it does not necessarily find the global minimum of Equation (1) [26]. As for the SN method, since the number of changepoints $Q$ increases linearly with $n$, the overall computational cost becomes high at $O(n^3)$. Bayesian approaches have also been reported [30,31] for changepoint detection, but their heavy computational cost cannot be overlooked. In this paper, because we deal with an enormous amount of high-frequency industrial data, the pruned exact linear time (PELT) algorithm [26] with $O(n)$ computational cost is adopted.
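
To indicate how pruning reduces the search, the following sketch adds a PELT-style pruning step to an optimal partitioning loop. It is an illustration under assumptions, not the reference implementation of [26]: the segment cost is the sum of squared deviations from the segment mean (change in mean only), the pruning constant is taken as zero for that cost, and the function name `pelt`, the penalty value, and the test signal are hypothetical.

```python
def pelt(y, beta):
    """Return (changepoints, number of cost evaluations) for a change-in-mean model."""
    n = len(y)
    s1, s2 = [0.0], [0.0]
    for v in y:
        s1.append(s1[-1] + v)
        s2.append(s2[-1] + v * v)

    def cost(s, t):
        """Sum of squared deviations from the mean of y[s:t]."""
        total = s1[t] - s1[s]
        return (s2[t] - s2[s]) - total * total / (t - s)

    F = [-beta] + [0.0] * n
    prev = [0] * (n + 1)
    candidates = [0]   # changepoint candidates that survived pruning
    evaluations = 0
    for t in range(1, n + 1):
        scores = [(F[s] + cost(s, t), s) for s in candidates]
        evaluations += len(scores)
        best, prev[t] = min(scores)
        F[t] = best + beta
        # Pruning (assumed constant K = 0): drop s when F(s) + cost(s, t) > F(t),
        # because such an s cannot be the last changepoint at any later time
        candidates = [s for value, s in scores if value <= F[t]]
        candidates.append(t)

    changepoints = []
    t = n
    while prev[t] > 0:
        changepoints.append(prev[t])
        t = prev[t]
    return sorted(changepoints), evaluations

# Hypothetical signal: six operating levels of 50 samples each, small alternating noise
levels = (0.0, 5.0, 1.0, 6.0, 2.0, 7.0)
y = [level + (0.1 if i % 2 == 0 else -0.1) for level in levels for i in range(50)]
changepoints, evaluations = pelt(y, beta=3.0)
full_search = len(y) * (len(y) + 1) // 2  # evaluations without pruning
print(changepoints, evaluations, full_search)
```

In this sketch candidates are discarded only once a changepoint has been passed, so the savings depend on how frequently the operating level changes.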

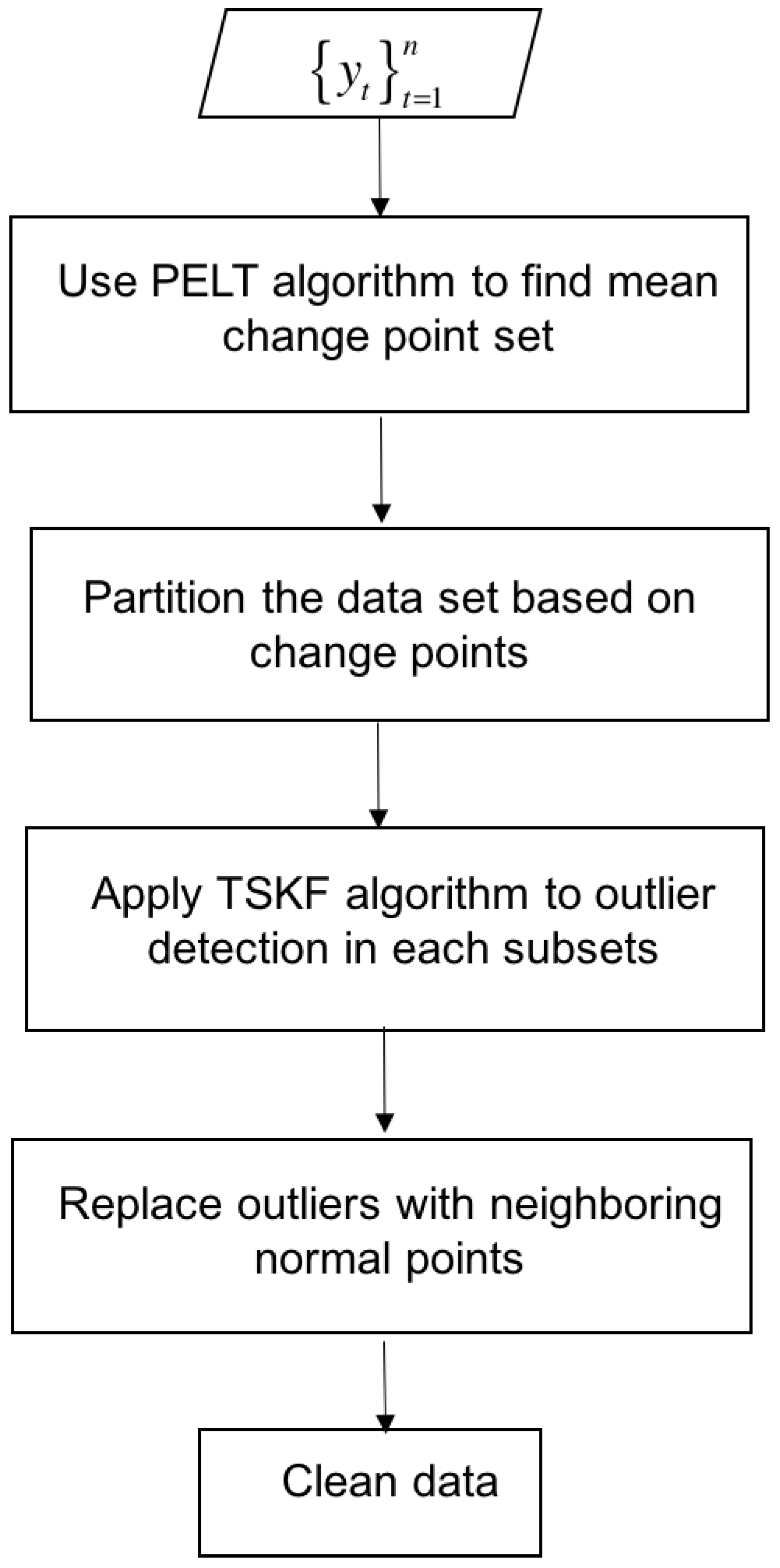

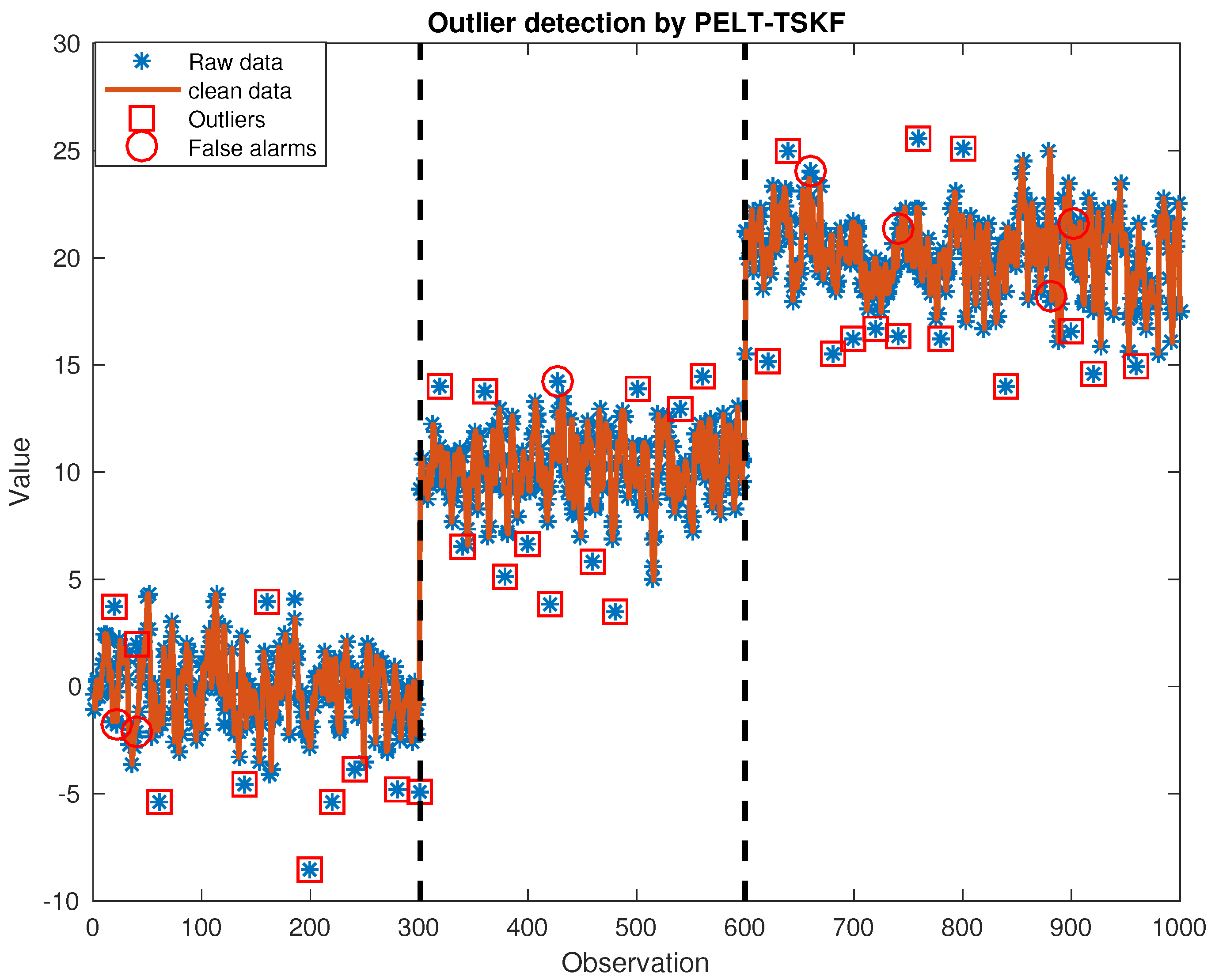

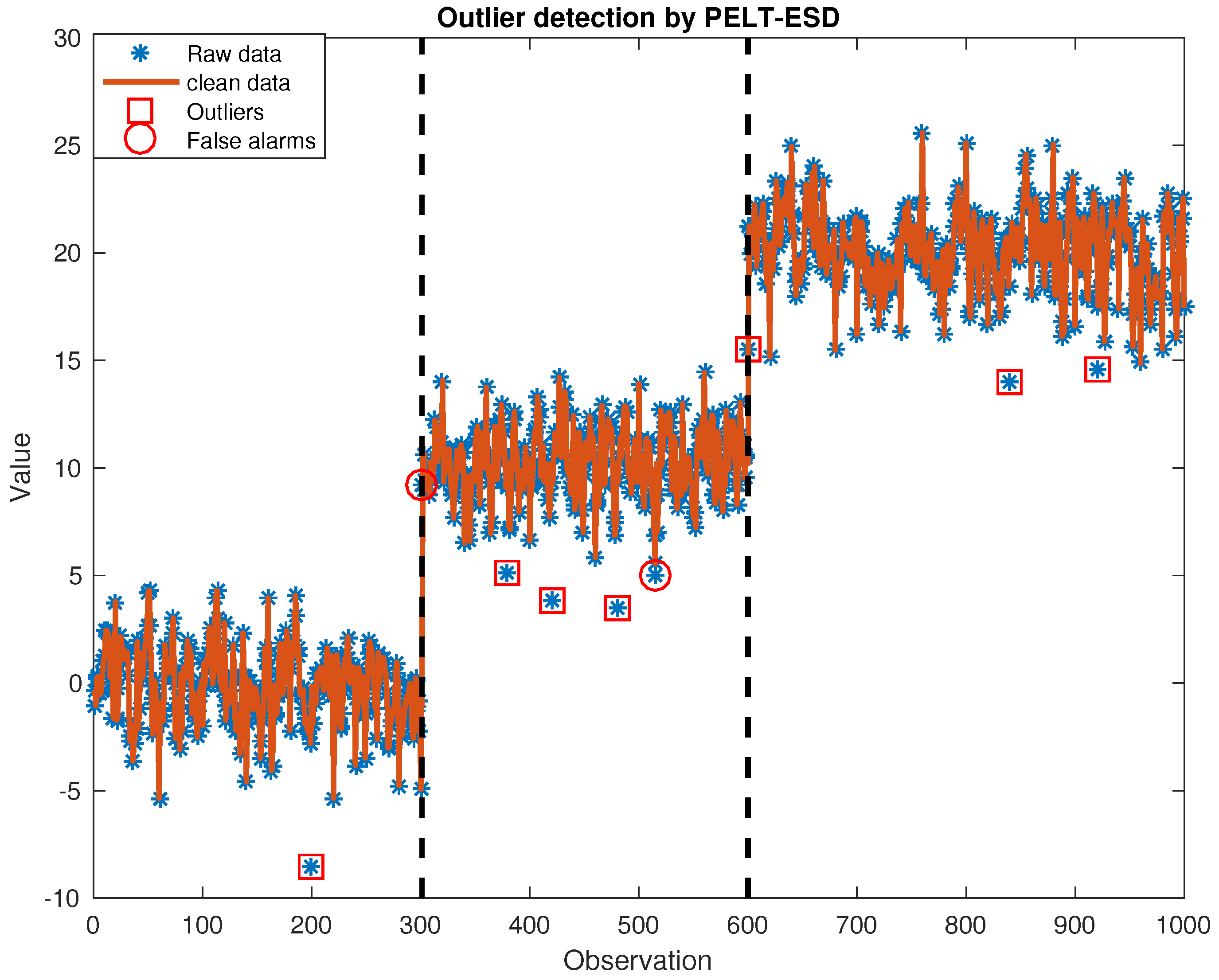

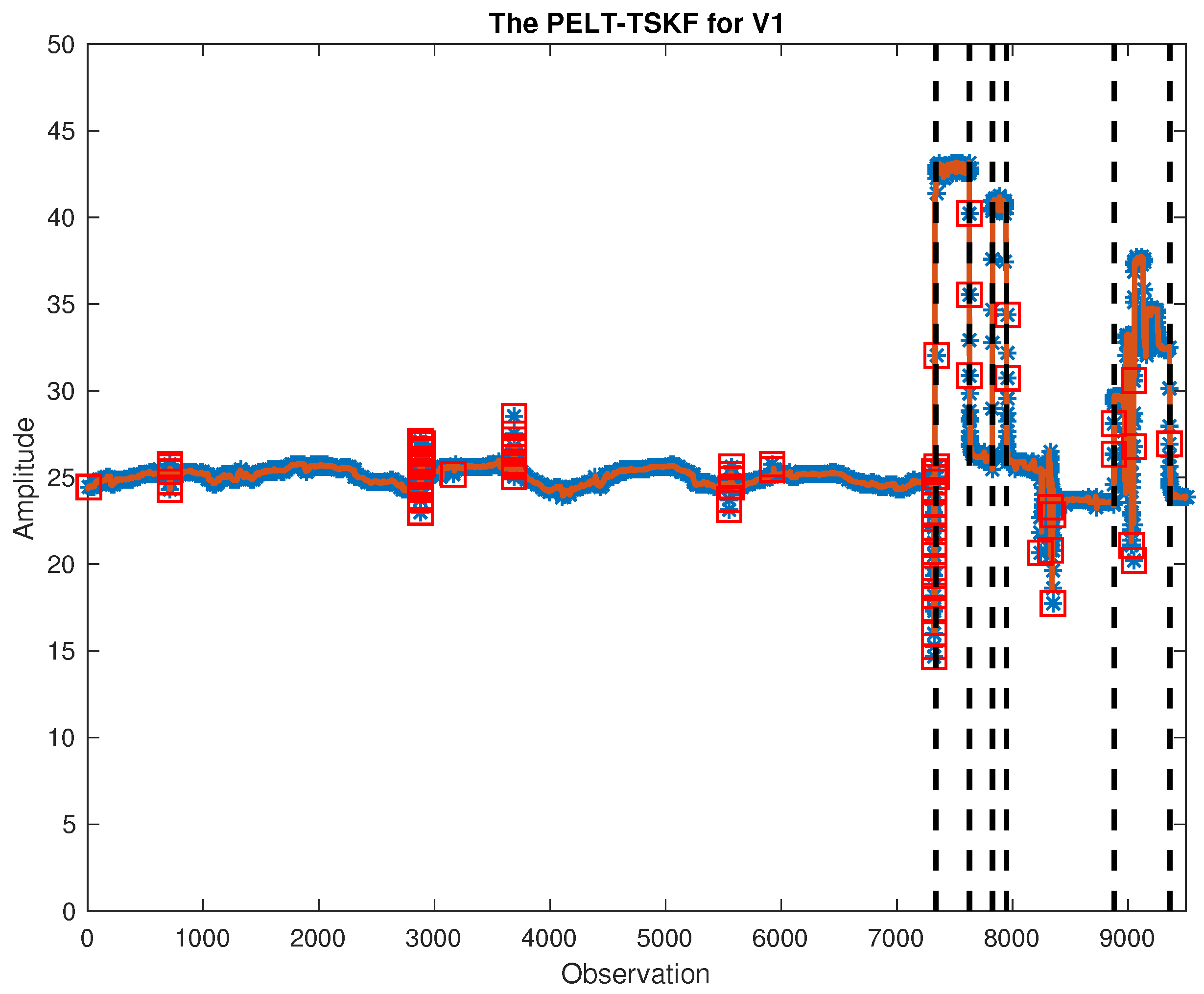

The article is organized as follows. In

Section 2.1 to

Section 2.3, after an introduction to both methods, we propose a strategy to integrate TSKF with PELT to improve TSKF’s performance in handling outlier detection in a dynamic data set with multiple operating points for each variable. In

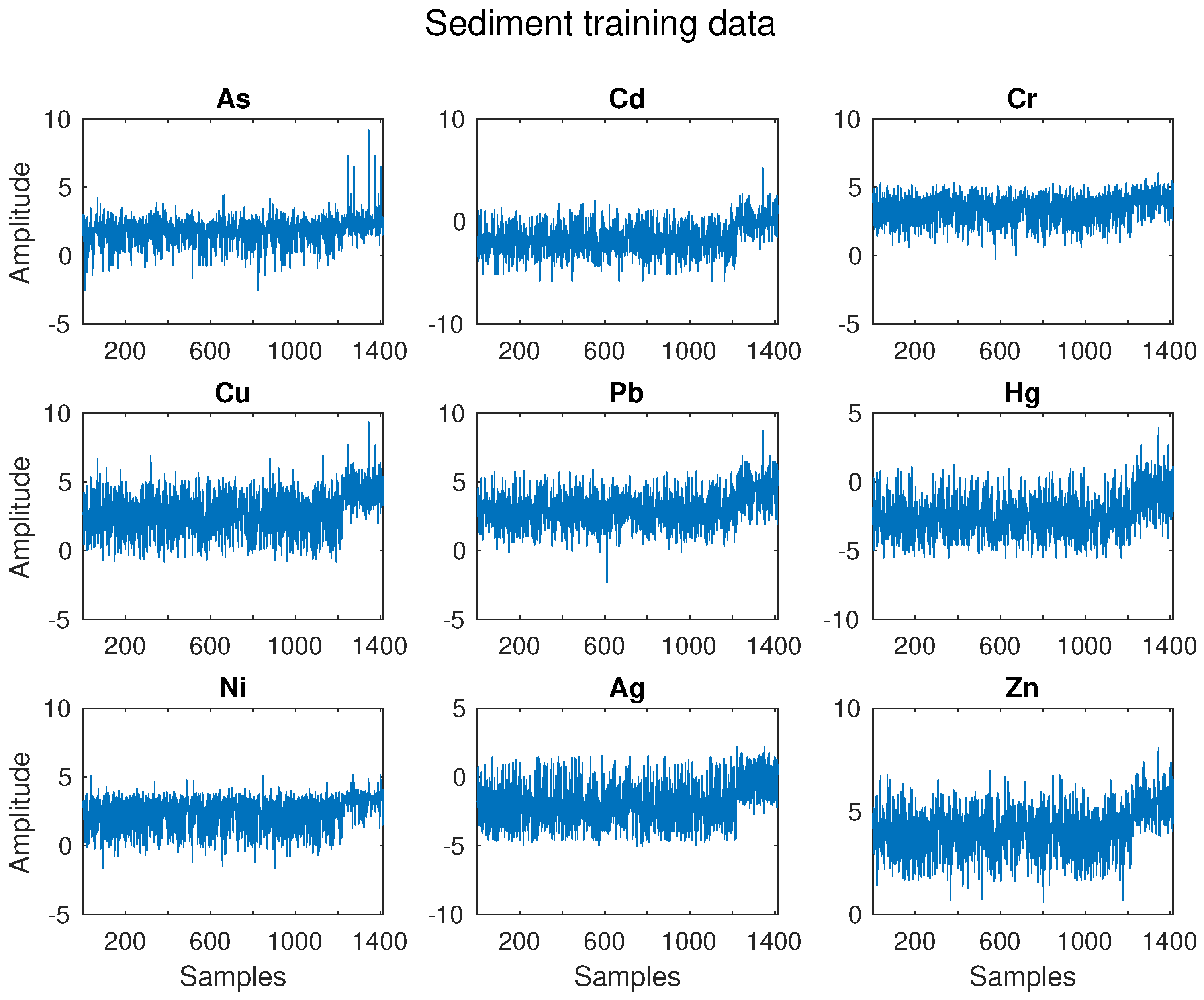

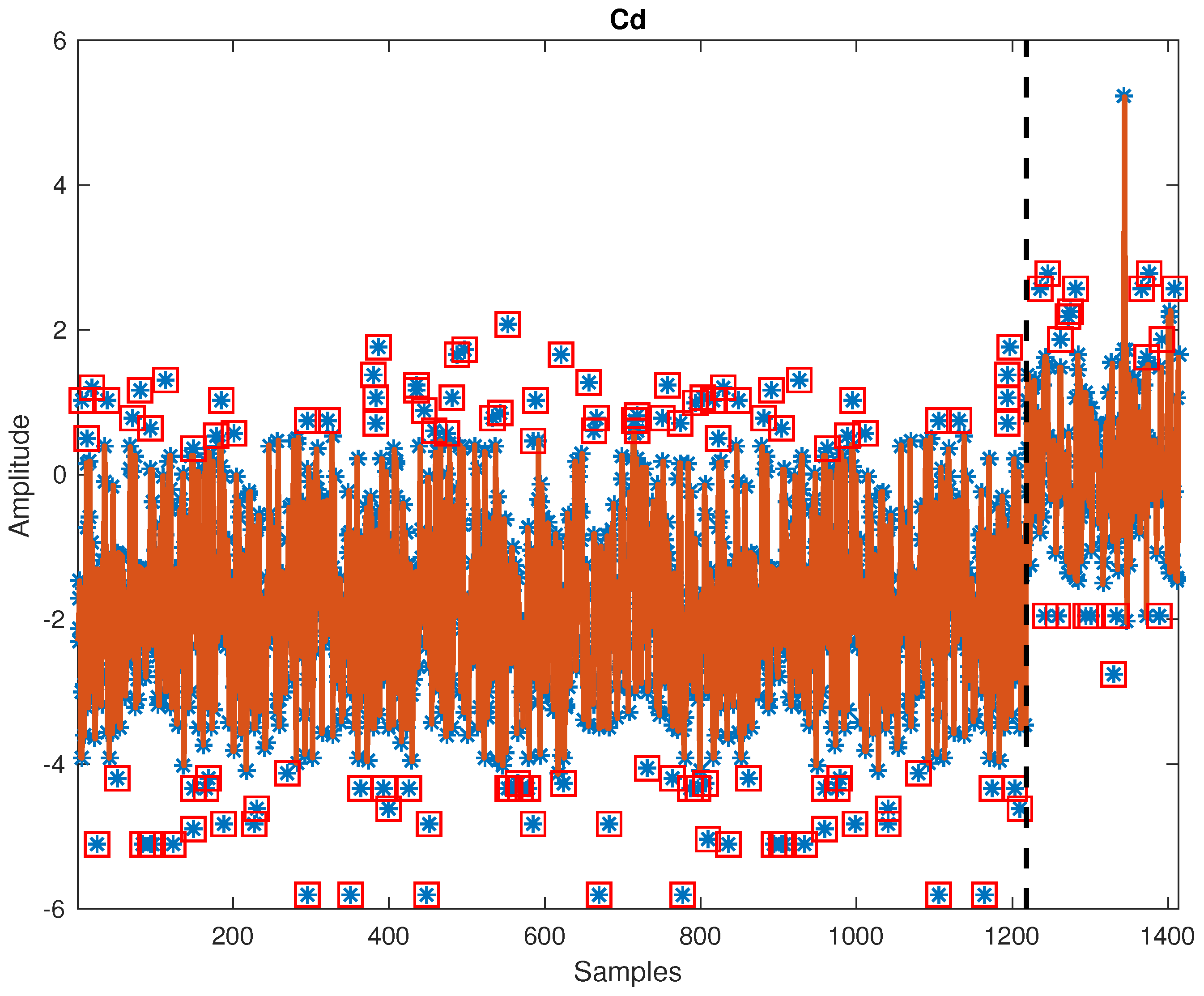

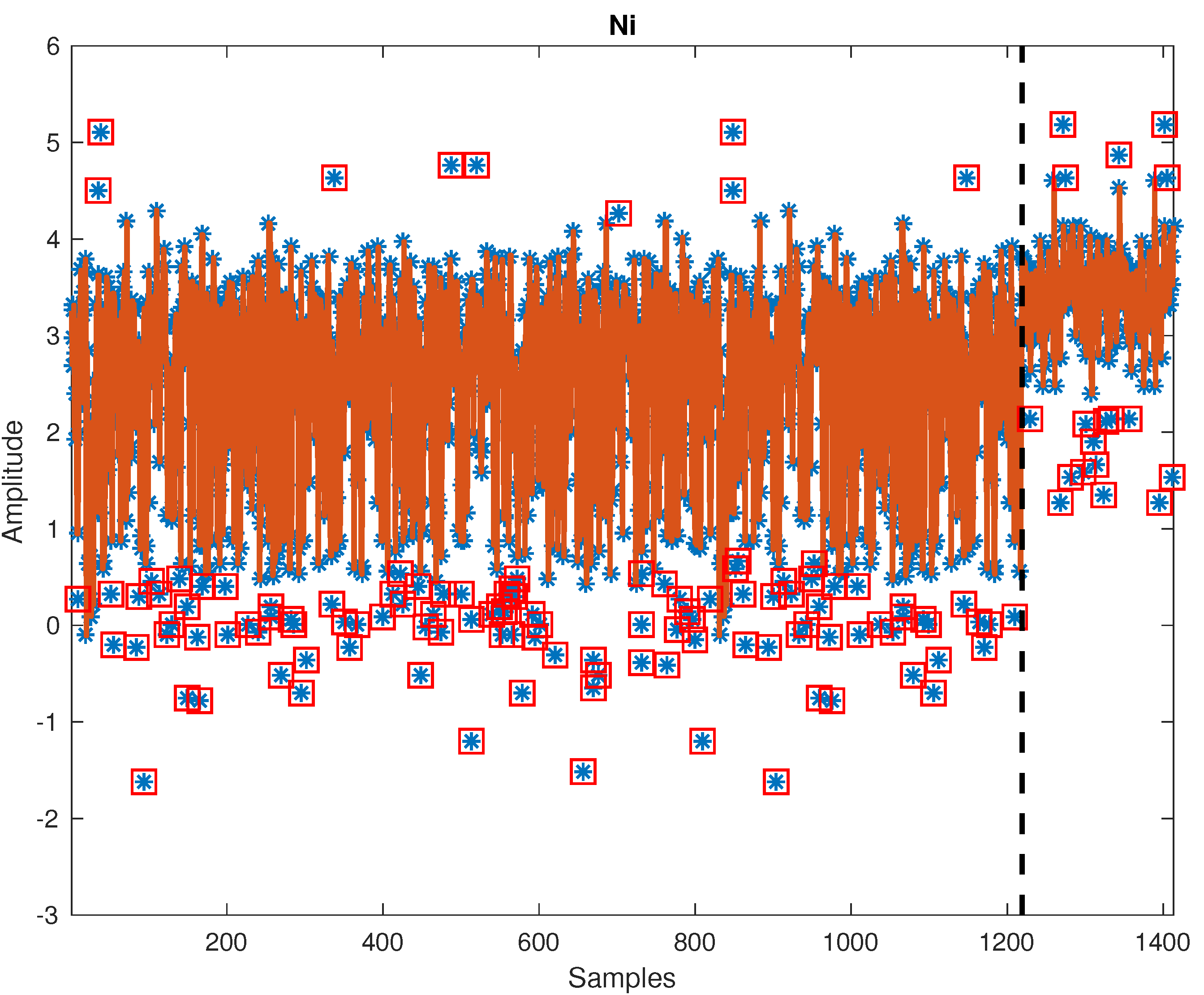

Section 2.4, the partial least squares discriminant analysis (PLS-DA) is briefly described to facilitate the understanding of how such a data-driven approach is applied in practice. In

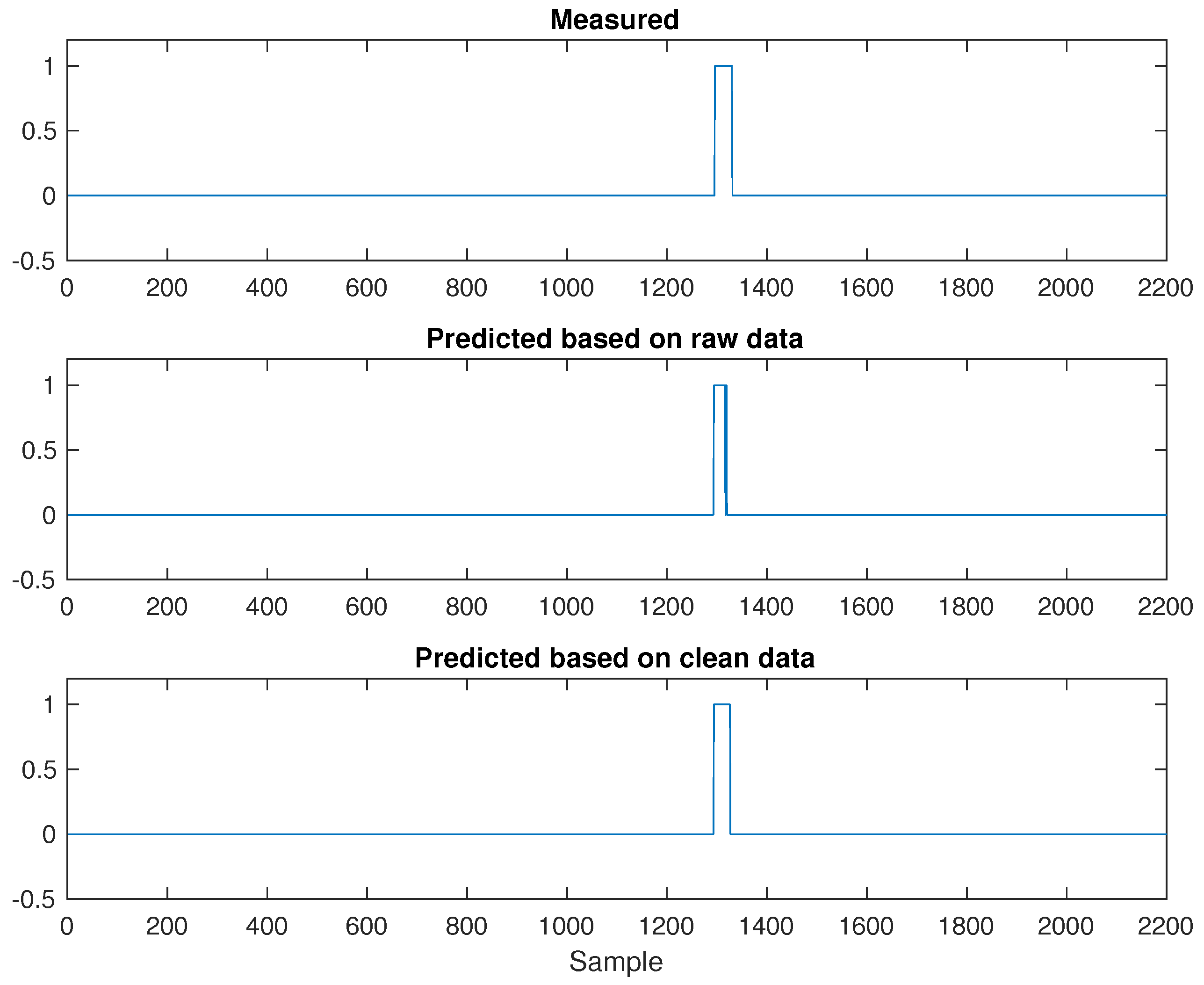

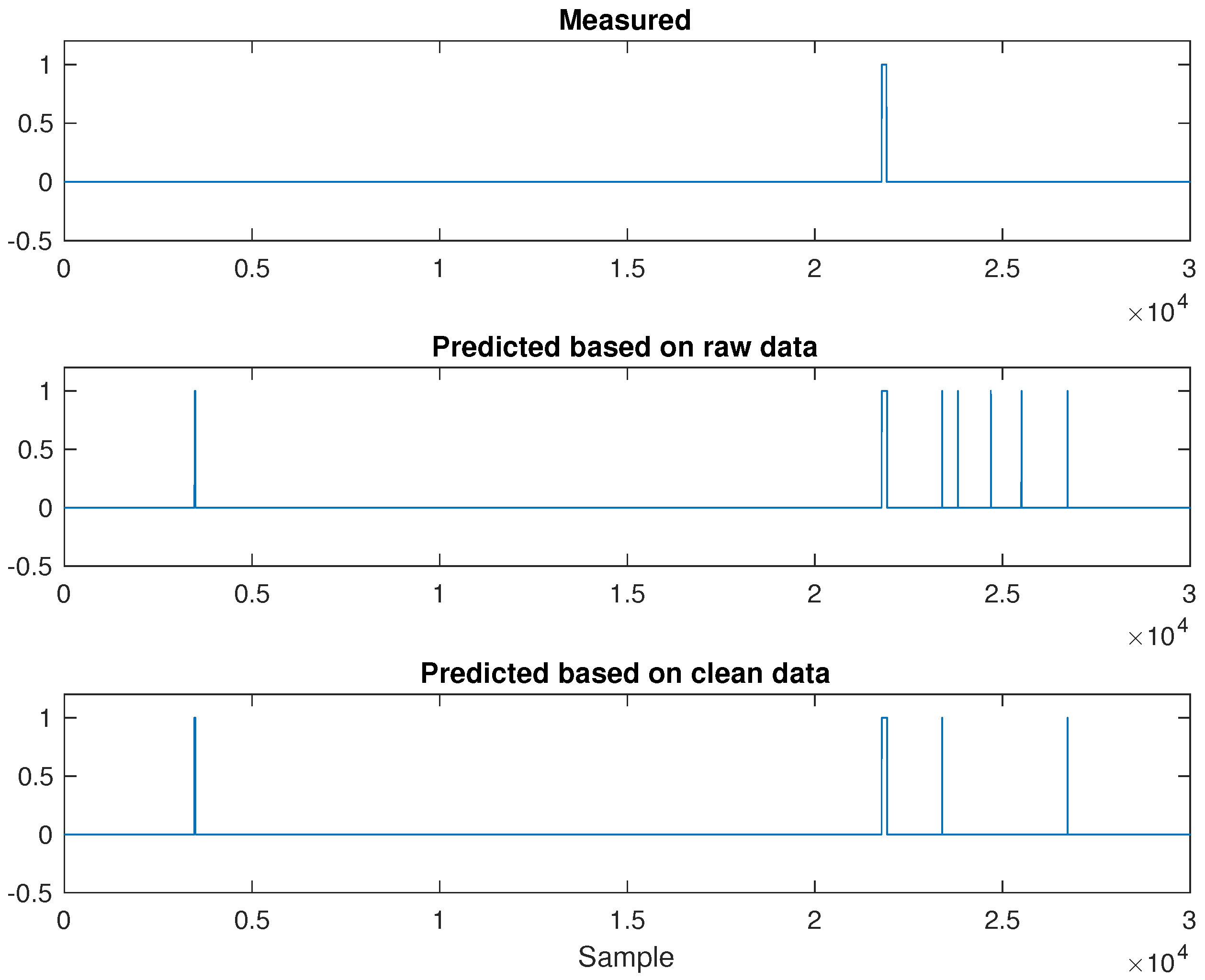

Section 3.1, the new outlier detection methodology framework is tested using simulated data set and compared with the conventional general extreme studentized deviate (GESD) and the latest isolation forest methods. Furthermore, The PELT-TSKF is applied to pre-processing both sediment and industrial flare data sets, and its efficacy in improving PLS-DA results are demonstrated in

Section 3.2. Although this paper is mainly focused on building data-driven models for industrial flare monitoring, the sediment toxicity detection work is shown as an additional case study to demonstrate the proposed methodology.Discussion on the results of case studies is provided in

Section 4. Finally, conclusions and future directions are given in

Section 5.