1. Introduction

Equipment throughput (ETH), the basis of capacity management, is essential information for manufacturing practitioners because its timeliness and reliability significantly affect the performance of production systems. For example, ETH is a key parameter for production planning and scheduling, so an inaccurate or out-of-date ETH usually leads to unreliable plans, which may hurt customer commitments or expected profit. In addition, in capital-intensive industries such as semiconductor manufacturing, any capacity loss caused by ineffective ETH management may sharply increase production costs. Therefore, a systematic and reliable solution for ETH management is a prerequisite for the success of advanced semiconductor wafer fabrication plants (Fabs). Unfortunately, estimating and maintaining reliable ETH is not an easy task in large, complex manufacturing systems such as Fabs.

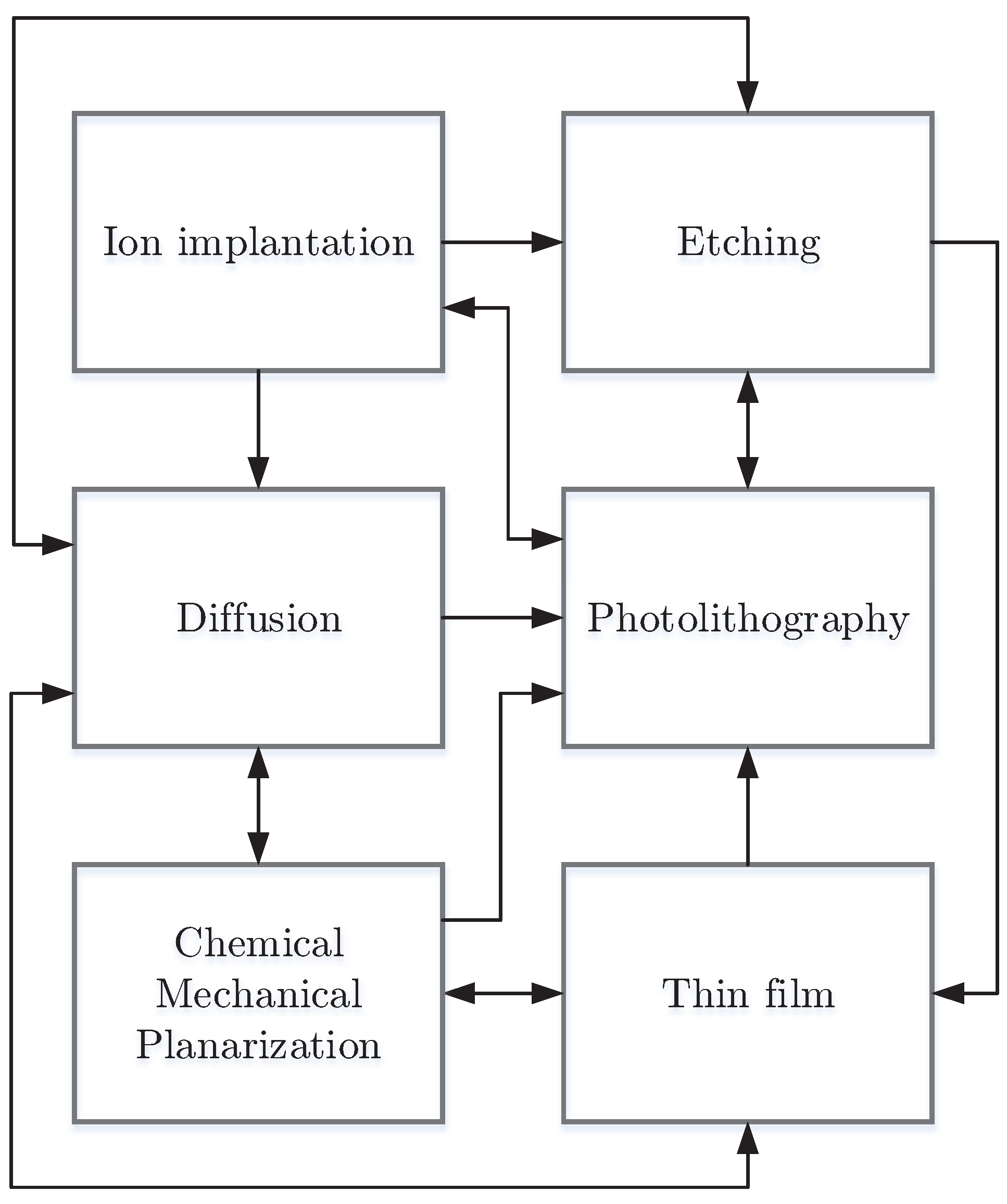

The manufacturing process of semiconductor chips often involves hundreds of processing steps executed layer by layer on a bare wafer. The whole process is composed of a few repeating unit processes: thin film, photolithography, chemical mechanical planarization, diffusion, ion implantation and etching. This nature of semiconductor manufacturing leads to long cycle times and has driven the move to larger wafer sizes, which not only enhances throughput but also reduces the production cost per chip, because the same number of process steps produces more chips. Highly automated and integrated processing tools (IPT) are adopted in advanced manufacturing systems such as Fabs to meet such complicated manufacturing requirements. However, this kind of complex production system also brings new challenges to practitioners.

Work measurement has been widely used as a method for establishing the time for qualified labor to complete a particular operational task at a satisfactory level of performance [

1]. For fully-automatic IPT equipment, this kind of method is applied for estimating and determining the ETH. The results of work measurement are the foundation for manufacturing industries in order to establish the capacity model and to develop further production planning and scheduling [

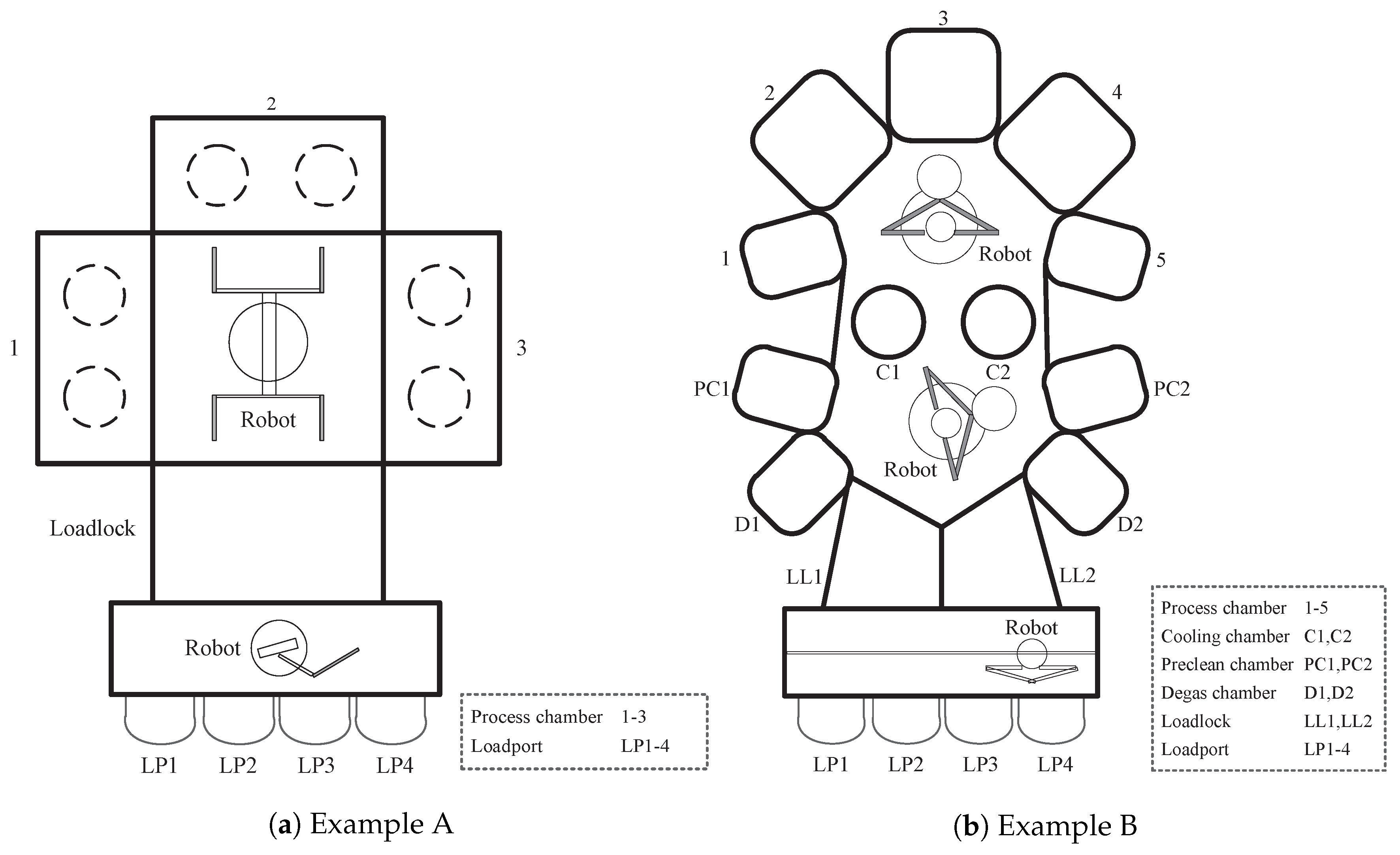

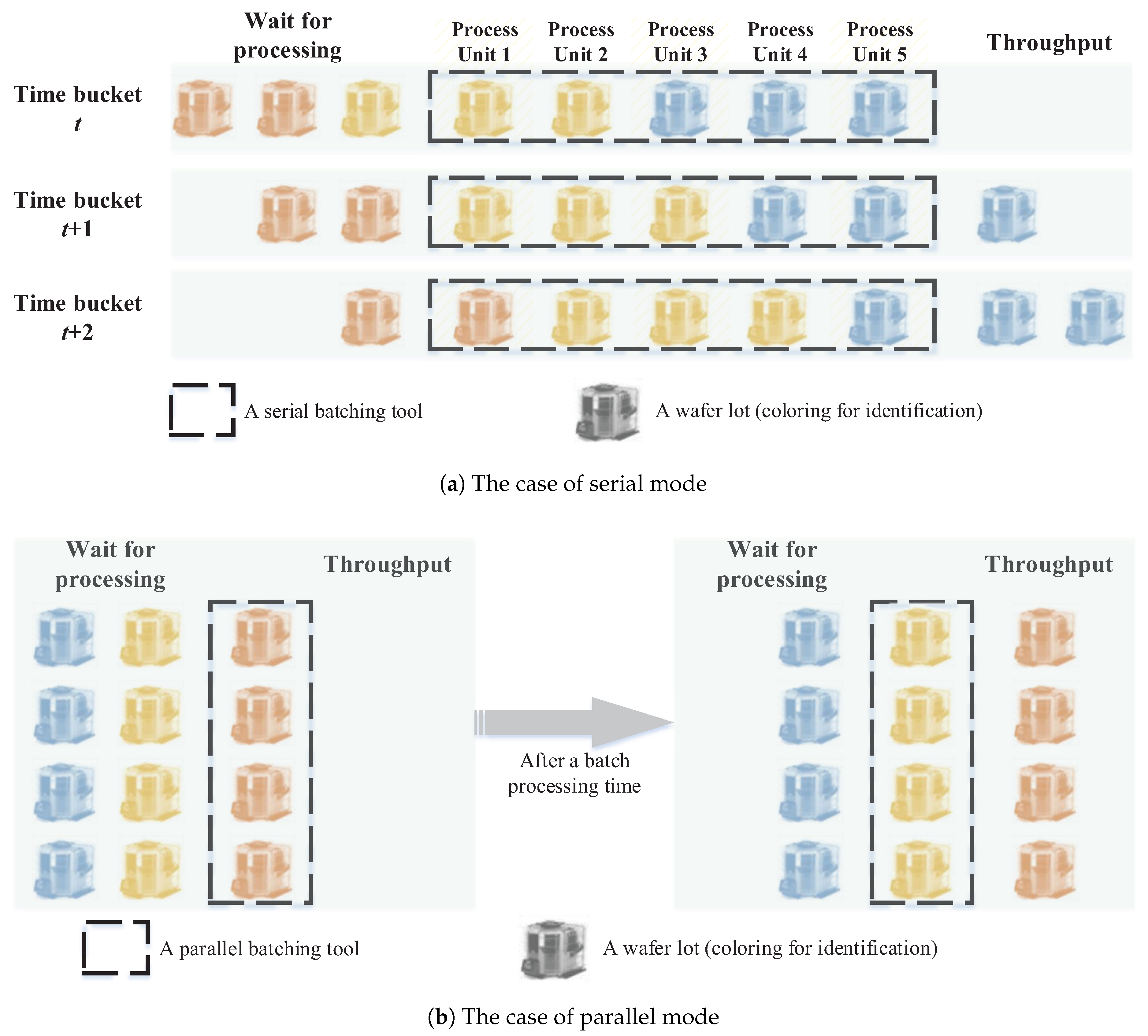

2]. Due to the increasing complexity of production equipment in Fab, the difficulty is increasing. In modern Fabs, connecting several process modules around a central handler, IPT is capable of processing multiple wafers from the same lot simultaneously. This kind of equipment has been common for decades because of its lower floor-space requirement, lower capital cost, shorter cycle time, higher yield, better process control and less operator handling [

3,

4,

5,

6]. Logically, many IPT equipment units can be seen as a job-shop production line or even a small factory. Therefore, modeling and performance analysis for IPT has never been easy and is always a research focus in semiconductor manufacturing, for example [

7,

8,

9,

10,

11].

In semiconductor manufacturing, measuring overall equipment effectiveness (OEE) [12,13] is a well-established manufacturing best practice [14]. Since OEE was recognized as a standard efficiency index for production equipment in the late 1980s, many studies have proposed further performance indices that better represent overall equipment efficiency or that extend beyond the equipment level; for example, overall input equipment efficiency (OIE) for measuring the resource usage efficiency of a machine and total equipment efficiency (TEE) for measuring the usage efficiency of a machine [15], overall throughput effectiveness (OTE) for measuring factory-level performance [16], overall tool group efficiency (OGE) for measuring equipment performance at the tool group level [17] and overall wafer effectiveness (OWE) for measuring wafer productivity [18]. In every case, ETH is a key input not only for OEE but also for each of these other indices.
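To make the role of ETH concrete, the sketch below uses the common textbook decomposition OEE = availability × performance efficiency × quality rate and takes peak WPH as the theoretical throughput. This decomposition, the function name and the example numbers are assumptions for illustration; the text does not state the exact OEE definition used in the case Fab.

```python
def oee(planned_hours, uptime_hours, wafers_out, good_wafers, peak_wph):
    """OEE = availability x performance efficiency x quality rate (illustrative)."""
    availability = uptime_hours / planned_hours
    # ETH enters here: actual output is compared with what peak WPH allows during uptime.
    performance = wafers_out / (peak_wph * uptime_hours)
    quality = good_wafers / wafers_out
    return availability * performance * quality


# Hypothetical day: 24 planned hours, 20 h uptime, peak WPH 50, 800 wafers out, 784 good.
print(round(oee(24, 20, 800, 784, 50), 4))  # prints 0.6533
```

Under this decomposition the factors telescope, so OEE equals good wafers divided by the output that peak WPH would allow over the planned time, 784 / (50 × 24); an overstated peak WPH therefore directly deflates OEE.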

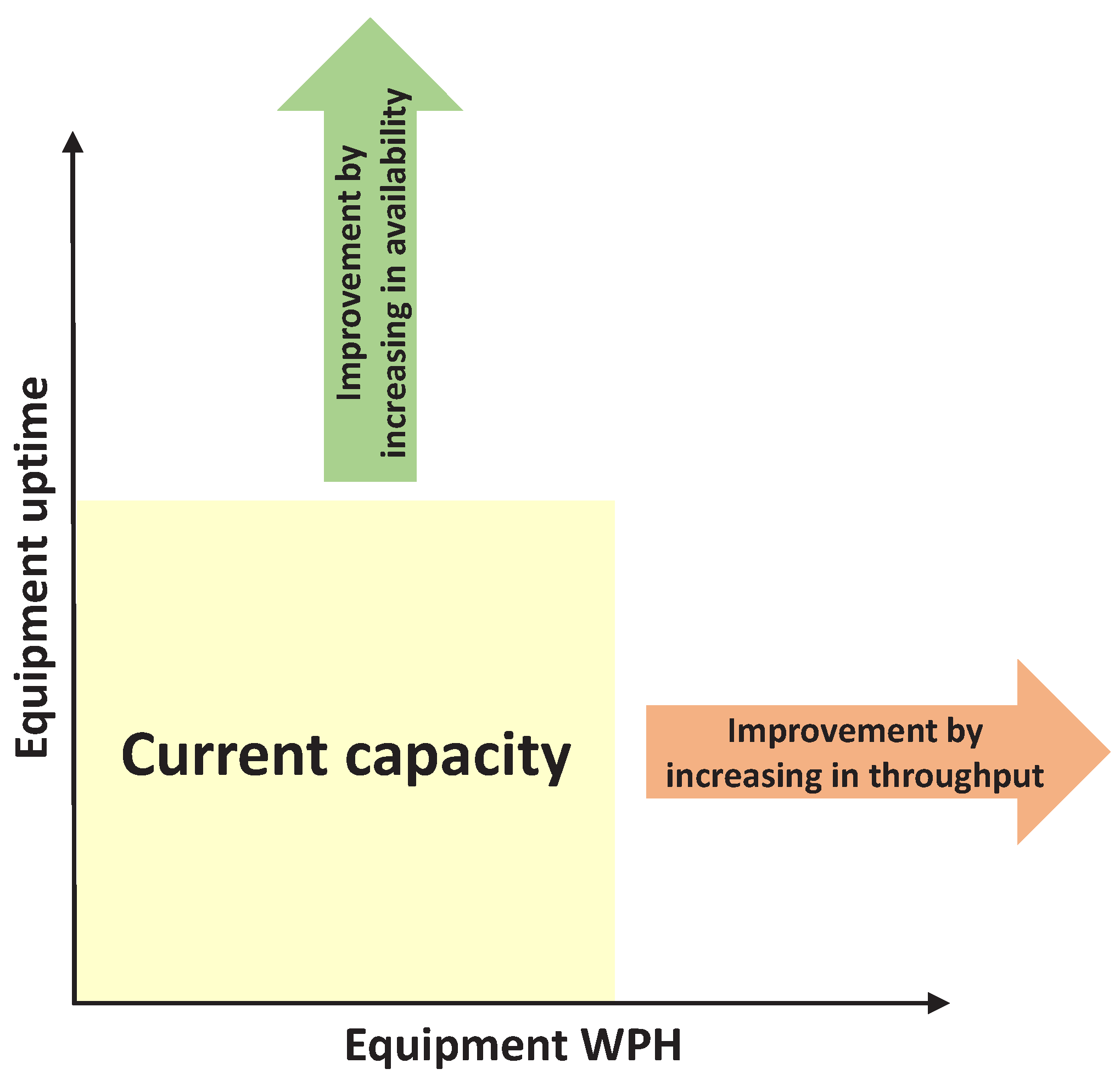

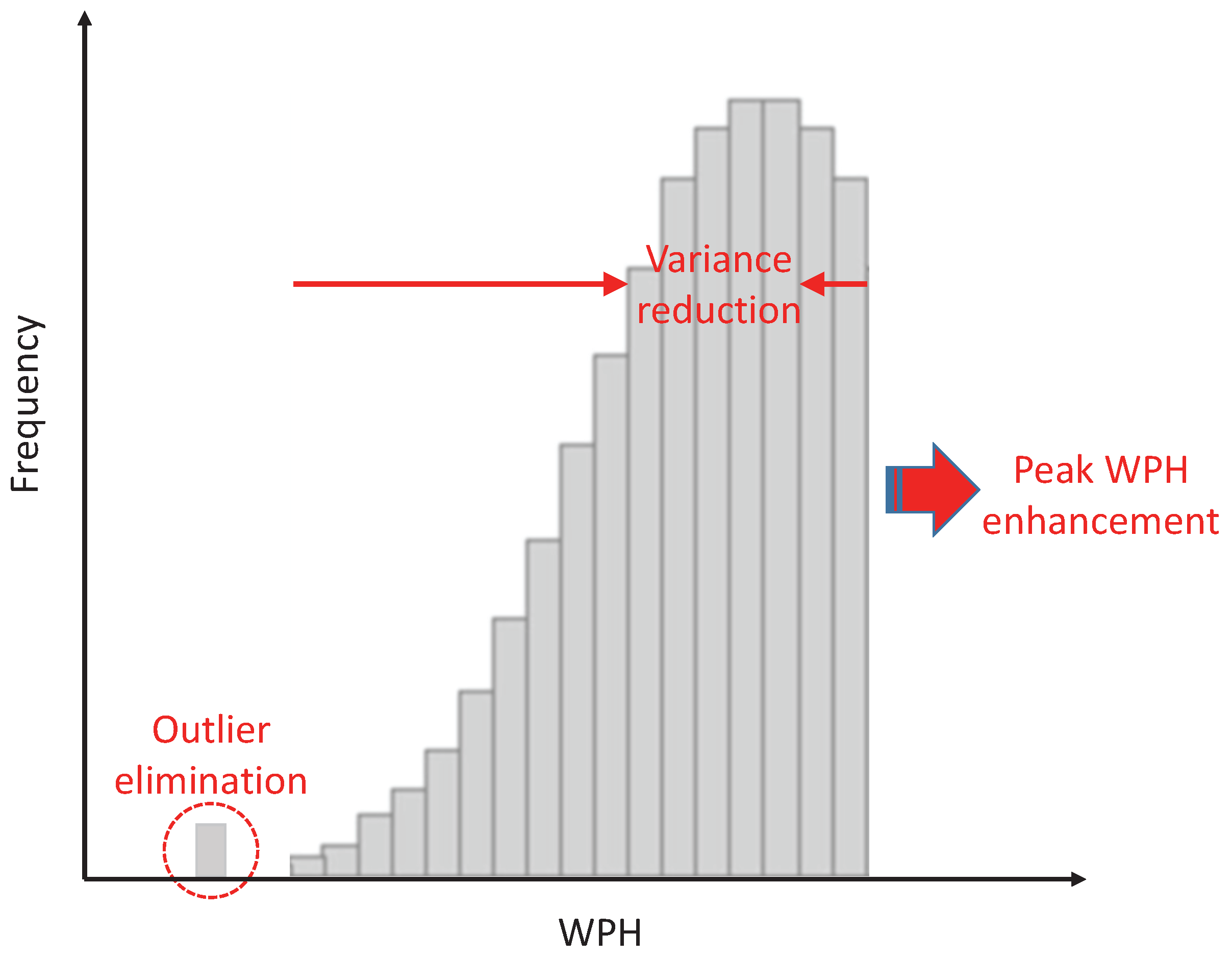

Wafers per hour (WPH) is the most common performance index for measuring ETH in Fabs worldwide. In general, there are two kinds of WPH: actual WPH and peak WPH; the former is the real performance of ETH, and the latter is its theoretical maximum. Actual WPH is often obtained by direct measurement, which treats the equipment as a black box and records how many wafers complete processing during an estimation period. Repeated sampling is necessary because processing is subject to random noise. This method often relies on substantial manual effort to remain reliable; even in a highly automated Fab with an advanced manufacturing execution system, it can still be time consuming because of complex data screening and pre-treatment.
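The black-box measurement of actual WPH can be sketched as follows: count the wafers whose track-out time falls in a window, divide by the window length, and repeat over several windows to average out random noise. Timestamps expressed in hours and the function names are assumptions; in practice the data would first need the screening and pre-treatment mentioned above.

```python
from statistics import mean, stdev


def window_wph(track_out_hours, start, end):
    """Black-box WPH: wafers whose track-out falls in [start, end) per hour."""
    if end <= start:
        raise ValueError("window end must be after its start")
    completed = sum(1 for t in track_out_hours if start <= t < end)
    return completed / (end - start)


def sampled_actual_wph(track_out_hours, windows):
    """Repeat the measurement over several (start, end) windows.

    Returns the mean WPH and the sample standard deviation across windows.
    """
    samples = [window_wph(track_out_hours, s, e) for s, e in windows]
    spread = stdev(samples) if len(samples) > 1 else 0.0
    return mean(samples), spread


# Hypothetical log: one wafer tracked out every 30 minutes for 20 hours.
track_out = [0.5 * k for k in range(40)]
print(sampled_actual_wph(track_out, [(0, 4), (4, 8), (8, 12)]))
```

With real logs, a large spread across windows is itself a signal that the windows contain unscheduled events and need screening.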

As for peak WPH, parameterized models are formulated for its estimation. A set of mathematical formulas represents different types of equipment; each formula consists of a few parameters, e.g., the time for cleaning the chamber or breaking the vacuum, and any ECN (engineering change notice) may trigger an update of these parameter values [19]. Some mandatory activities occur during wafer processing and also reduce peak WPH, but their impact cannot be reflected by adjusting the planned uptime. Therefore, a revised peak WPH, called plan WPH, is obtained by relaxing peak WPH with a tolerance derived from these activities, so that production planning functions such as resource requirements planning, rough-cut capacity planning and capacity requirements planning yield reasonable results. However, determining the tolerance is not straightforward [20], and the calculation of actual WPH cannot completely exclude unscheduled events hidden in the data. Thus, there is often a gap between plan WPH and actual WPH. Either way, continuously tracking these indices and analyzing their trends provides the input that keeps the plan-do-check-act (PDCA) cycle [21] turning for Fab performance enhancement.

To monitor all kinds of WPH in a sustainable manner, a WPH management system is needed that automatically and periodically estimates and calculates every kind of WPH for the equipment units in the Fab. Because of the high complexity of production equipment and the large number of equipment units, performance monitoring of production equipment in Fabs is challenging and has been an attractive research topic for years [22,23,24,25,26,27]. In addition, because wafer lots in modern Fabs have become much heavier, automated material handling systems (AMHS) have long been mainstream on the shop floor. To control and execute complex handling operations precisely, advanced intelligent lot scheduling and dispatching are built around the AMHS, so an automated Fab is supported by many manufacturing execution-related systems (MES). However, system integration and data synchronization then become another challenge in developing a WPH management system. A systematic mechanism for monitoring WPH is key to raising capacity management from the machine level to the whole-Fab level. Building on this foundation, further applications, such as a generic visualization of wafer process flow for troubleshooting [28] or predictive analysis for abnormality prevention [29], could be developed to further improve the performance of production systems.

In this paper, a WPH management system consisting of four modules that effectively support capacity management is introduced and discussed. The system estimates and monitors equipment WPH by recipe in a sustainable manner; in addition, supporting tools are included to assist further performance improvement and troubleshooting. The main advantages of the proposed solution are: (1) a generic framework applicable to almost every Fab; (2) high flexibility in WPH calculation and estimation; and (3) data-driven decision support tools for abnormality review and troubleshooting. An industry project implementing the proposed system is also introduced, and the results of a performance comparison are presented to show the benefits for capacity management.

This article not only introduces the system architecture and related methods, but also discusses implementation details such as challenges, considerations, data requirements and project control. In the rest of the paper, preliminary information on semiconductor manufacturing and the case Fab is introduced first. Then, the proposed solution is discussed in more detail. Next, the implementation of the industry project is introduced, and the performance of the existing system and the proposed system is compared. Finally, I conclude this study with some perspectives. Please note that although I must keep the company’s name confidential, the information needed to comprehend the concept of the proposed system and the key points of its implementation is covered.

3. WPH Management System

In this section, I present a WPH management system designed to maintain and further improve the effectiveness and efficiency of operations management through careful capacity management. The system includes four primary modules: filter, calculator, monitor and analytics. The filter ensures that the data used for calculating WPH are valid and free of noise; the calculator, the core of the system, periodically computes WPH by process recipe for all equipment units in the Fab; the monitor is a platform for monitoring equipment performance and detecting process malfunctions; and the analytics module classifies abnormal recipes into several categories and provides various visual tools for reviewing the corresponding historical processing logs. Together, these modules help prevent capacity loss and ensure the quality of capacity management. Before introducing the modules individually, I describe the system architecture, both for readers interested in system design and to clarify how the modules interrelate.

3.1. Systems Architecture

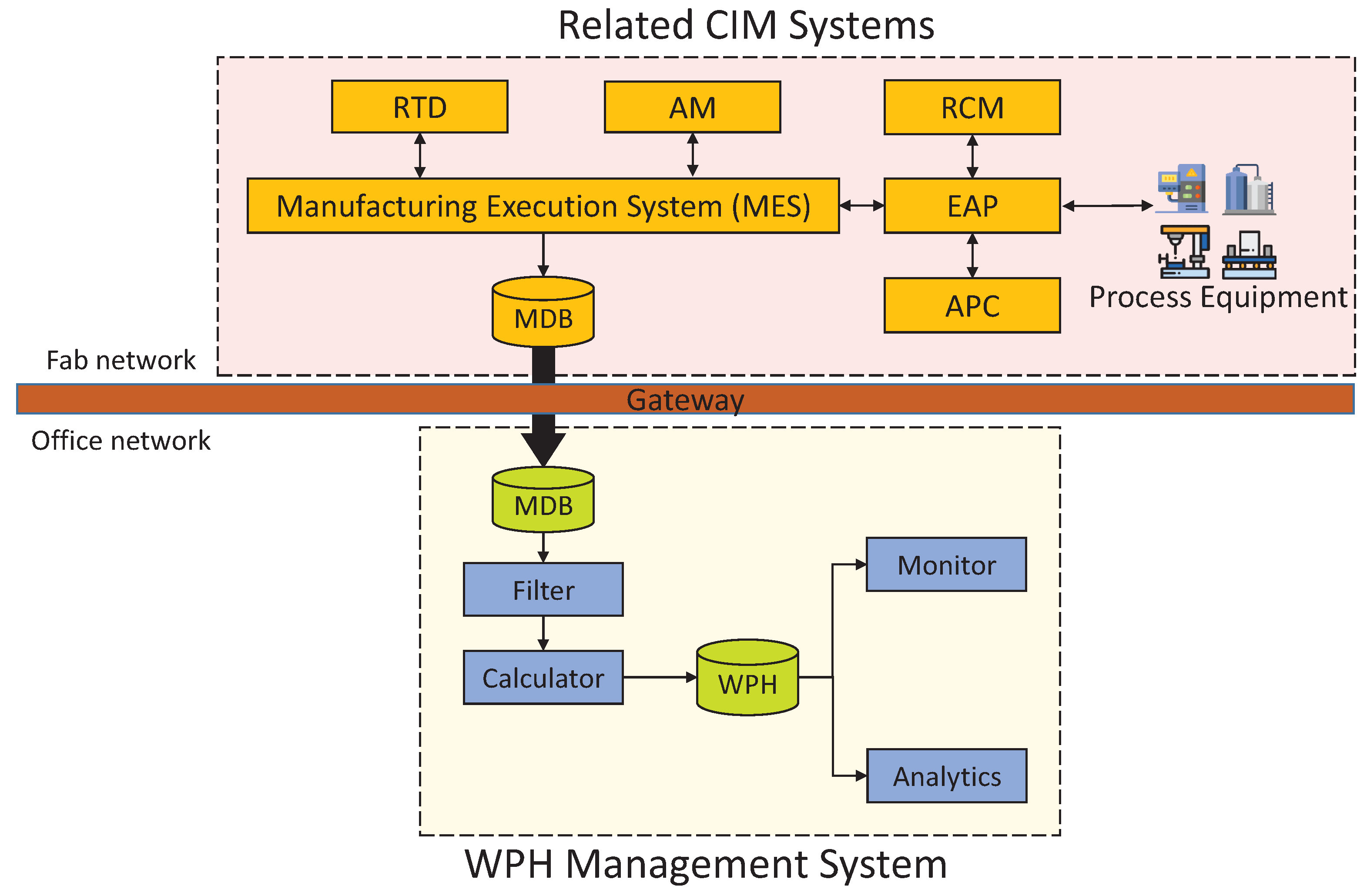

WPH calculation is the basis of the WPH management system and requires a very large volume of processing logs and data. As the control center of modern manufacturing systems, the manufacturing execution system (MES) plays a critical role in shop floor control, and its master database (MDB) can fulfill the basic data requests of WPH calculation.

Figure 7 illustrates the systems architecture, indicating the relationship between the primary modules of the WPH management system and the related CIM (computer-integrated manufacturing) systems. MES coordinates and cooperates with many supporting systems: RTD (real-time dispatcher) determines the next step for each lot when it completes a process step; AM (activity manager) manages and controls resources and equipment; EAP (equipment automation program) controls equipment processing to avoid misoperations caused by operator error and collects processing data from the equipment; RCM (recipe control management) provides a central repository and verification of process recipes for systematic management; and APC (advanced process control) collects product measurements and recipe set points to enhance overall product yields.

Access to the MDB is strictly controlled to keep its execution efficiency high; moreover, because the MDB is connected to all equipment units, cybersecurity requirements on the Fab network make MDB access even more restrictive. Since WPH calculation does not need the entire MDB, only partial datasets are periodically replicated from the MDB to a database dump in the office network. The data required for WPH calculation are detailed processing logs that record the wafer track-in and track-out times of all traceable internal units (e.g., buffer chambers) of every process equipment unit.
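To make the data requirement concrete, the sketch below models one processing-log row as a Python dataclass and derives how long wafers stay in each internal unit. The class, its field names and the helper function are hypothetical; the actual MDB schema is not given in the text.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime
from statistics import mean


@dataclass(frozen=True)
class ProcessingLogRecord:
    """One wafer's stay in one internal unit of an equipment unit (hypothetical schema)."""
    equipment: str
    recipe: str
    lot: str
    wafer: str
    unit: str            # traceable internal unit, e.g. a buffer chamber
    track_in: datetime
    track_out: datetime

    @property
    def residence_seconds(self):
        return (self.track_out - self.track_in).total_seconds()


def mean_residence_by_unit(records):
    """Average time (in seconds) that wafers stay in each internal unit."""
    by_unit = defaultdict(list)
    for rec in records:
        by_unit[rec.unit].append(rec.residence_seconds)
    return {unit: mean(times) for unit, times in by_unit.items()}
```

Per-unit residence times of this kind are the raw material for the variables used later in the WPH formulas.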

3.2. Filter

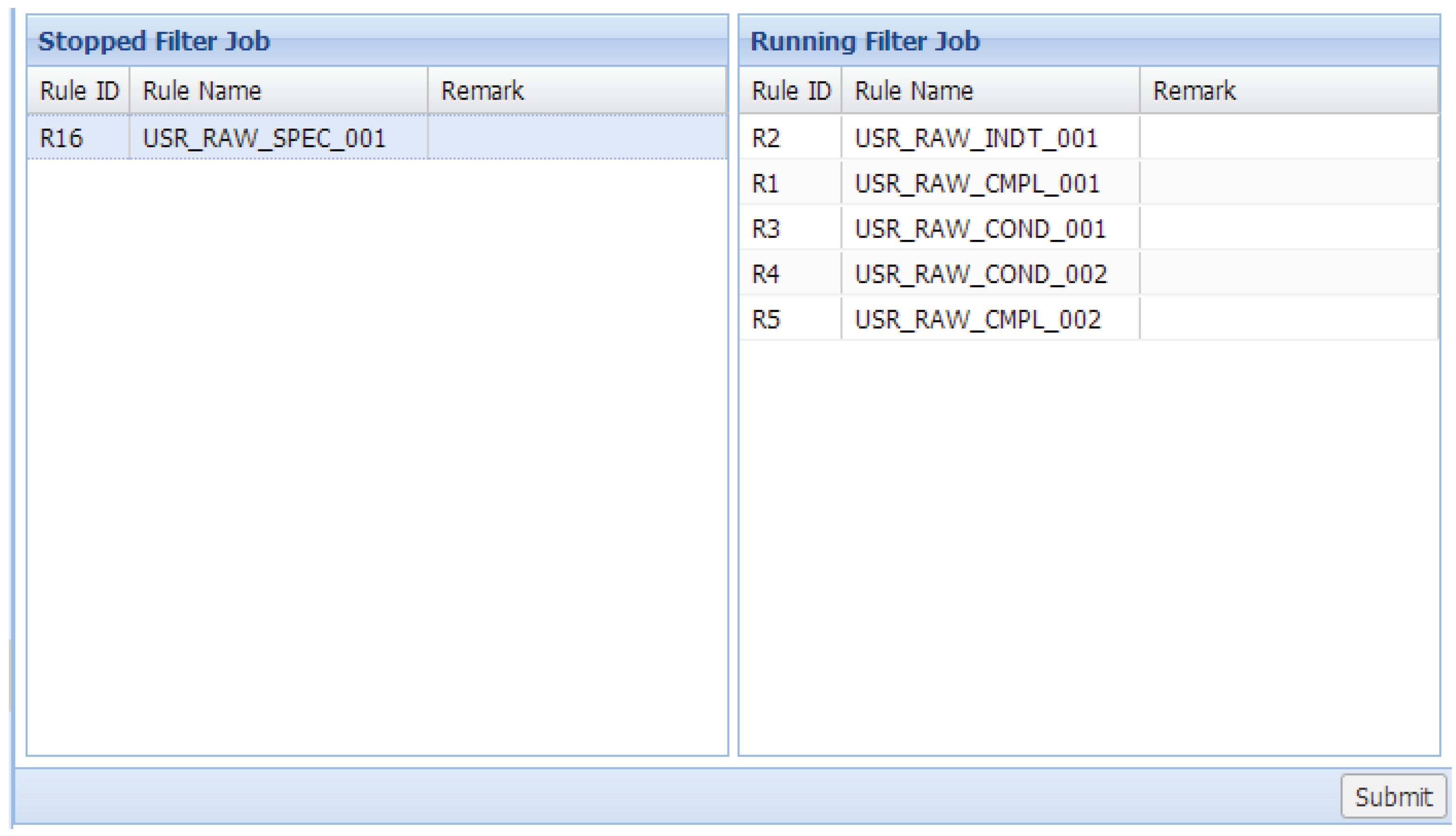

Based on a few prescribed filtering rules, the filter reviews the raw data from the MDB before WPH calculation in order to screen out incomplete and unrepresentative data. These rules are implemented in PL/SQL as stored procedures and can be applied to the entire dataset or only to the datasets corresponding to a particular equipment type, unit or recipe. Users can adjust the priority of each activated rule to determine which rule executes before another.
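The rule mechanism can be sketched as follows. The described system implements each rule as a PL/SQL stored procedure; this Python version only illustrates the idea of scoped, prioritized rules that can be activated or stopped, and the rule names and row fields are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class FilterRule:
    """A hypothetical screening rule; in the described system each rule is a stored procedure."""
    name: str
    priority: int                                   # lower value runs first
    keep: Callable[[dict], bool]                    # False means "screen this row out"
    scope: Optional[Callable[[dict], bool]] = None  # None means the rule covers the whole dataset
    active: bool = True                             # mirrors "Running" vs "Stopped" filter jobs


def apply_filters(rows, rules):
    """Run the active rules in priority order; a rule only judges rows inside its scope."""
    for rule in sorted((r for r in rules if r.active), key=lambda r: r.priority):
        rows = [row for row in rows
                if (rule.scope is not None and not rule.scope(row)) or rule.keep(row)]
    return rows


rules = [
    FilterRule("complete_timestamps", 1,
               keep=lambda r: r["track_in"] is not None and r["track_out"] is not None),
    FilterRule("etch_min_lot_size", 2, keep=lambda r: r["wafers"] >= 5,
               scope=lambda r: r["equipment_type"] == "ETCH"),
]
```

Because these sketch rules only drop rows, their order does not change the final result here; priority would matter if a rule also modified rows or depended on an earlier rule's output.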

Figure 8 is a screen capture from the prototype of the proposed system, demonstrating the user interface for rule management. Each rule corresponds to a stored procedure. Users can reorder the rules in the block “Running Filter Job” by drag-and-drop to adjust their priorities. In addition, users can drag a filter rule from the block “Stopped Filter Job” and drop it into the block “Running Filter Job” to activate it, and vice versa to deactivate it.

3.3. Calculator

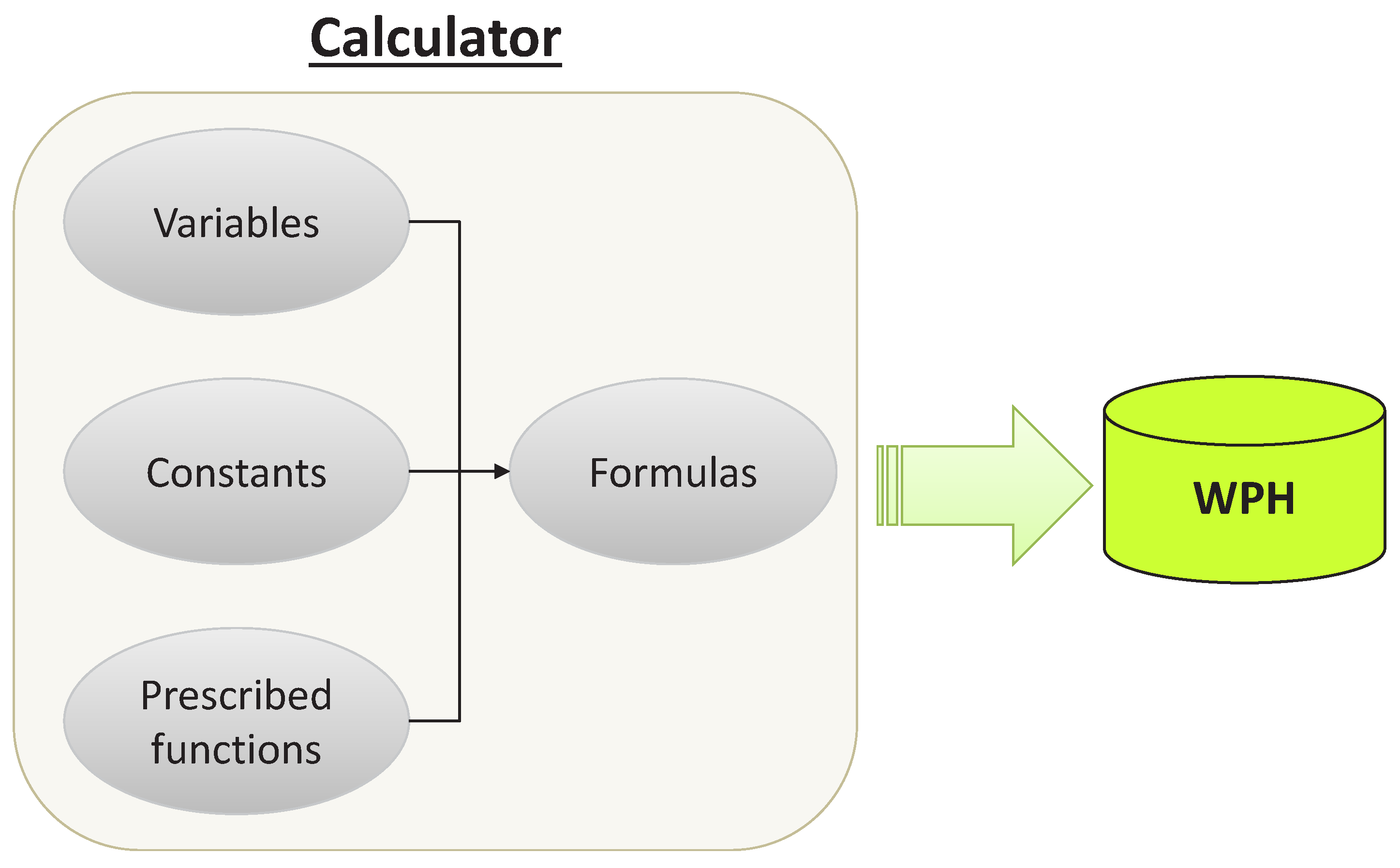

As the core module of the WPH management system, the calculator computes WPH for each recipe weekly. The computation is based on user-maintained WPH formulas, using information collected from the filtered processing data and from users. The system allows users to define dedicated formulas as symbolic expressions with a number of prescribed functions and to maintain these formulas by string editing. Each formula is designed for a particular equipment type and consists of three parts: prescribed functions, constants and variables.

Figure 9 demonstrates the basic structure of the calculator module.

Constants, such as batch size, are predefined by users to keep the formulas flexible. A prescribed function is a predefined procedure that performs a particular mathematical, statistical or logical operation; the system provides a set of essential prescribed functions, such as average() for computing the average value and min() for returning the smallest value. Variables are values gathered from processing data, such as the process start time on a particular process unit in a machine. The computed results are stored in the WPH database, which users can access through a query interface. To illustrate the WPH calculation, an example for a photolithography machine is given; the notation for this example is listed first, as follows.

| Category | Description |
|---|---|
| Constant | batch size (how many wafers in a batch) |
| Constant | maximum number of lots for a continued run |
| Constant | maximum lot size (how many wafers in a lot) |
| Variable | process start time |
| Variable | process end time |
| Variable | average lot size (how many wafers in a lot on average) |
| Variable | number of parallel units in process unit group i |
| Variable | average number of wafers passing through process unit group i |
| Variable | average time for a wafer to stay in process unit group i |
| Prescribed function | returns the largest value among the input values |

The formula for peak WPH is defined as:

where the takt time is:

The formula for plan WPH is defined as:

where the slack time is:

Takt time has commonly been used to plan operators’ work content in manufacturing [41]. For semiconductor production equipment, the most general definition of takt time is the desired time between two consecutive lots output by a machine, i.e., the pace of its processing. Takt time is used to calculate peak WPH directly, whereas calculating plan WPH requires extra information, because the characteristics of integrated processing tools and batch-processing equipment must be considered.
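The exact takt-time and WPH formulas of the case Fab are not reproduced in this text. As a hedged illustration only, the sketch below assumes that the slowest process unit group sets the pace (which is where a max() prescribed function would come in) and then converts a per-lot takt time into wafers per hour. The bottleneck assumption and the function names are hypothetical, and plan WPH, which also involves slack time, is not modeled.

```python
def takt_time_seconds(groups):
    """Hypothetical bottleneck takt time for one lot.

    groups: iterable of (parallel_units, wafers_per_lot, seconds_per_wafer), one tuple
    per process unit group. A group needs wafers * seconds / units seconds per lot,
    and the slowest group sets the pace of the whole machine.
    """
    return max(wafers * seconds / units for units, wafers, seconds in groups)


def peak_wph(avg_lot_size, takt_seconds):
    """If one lot of avg_lot_size wafers leaves every takt_seconds, wafers per hour."""
    return avg_lot_size * 3600.0 / takt_seconds


# Hypothetical photolithography cell with three process unit groups.
takt = takt_time_seconds([(2, 25, 120.0), (4, 25, 200.0), (1, 25, 60.0)])
print(takt, peak_wph(25, takt))  # prints 1500.0 60.0
```

The second step is pure arithmetic and holds regardless of how takt time is estimated; the first step is only one plausible estimator among many.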

The estimation of takt time can vary between equipment types, and it may change when the corresponding recipes are revised. In practice, practitioners derive these formulas empirically from their experience and the results of work measurement. That is why the system must allow users to define and modify dedicated formulas as symbolic expressions; this flexibility is not only an operational convenience but also a necessary requirement for a rapidly changing production system.
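The text does not describe how formula strings are parsed. One safe way to support user-edited symbolic formulas, sketched here in Python as an assumption, is to parse the string with the standard `ast` module and accept only arithmetic, numeric literals, whitelisted prescribed functions, constants and variables. The whitelist and all names below are hypothetical.

```python
import ast
from statistics import mean

# Hypothetical whitelist of prescribed functions (average, min and max are mentioned in the text).
PRESCRIBED = {"average": lambda *xs: mean(xs), "min": min, "max": max}
_ALLOWED = (ast.Expression, ast.BinOp, ast.UnaryOp, ast.Call, ast.Name, ast.Load,
            ast.Constant, ast.Add, ast.Sub, ast.Mult, ast.Div, ast.Pow,
            ast.USub, ast.UAdd)


def evaluate_formula(formula, constants, variables):
    """Evaluate a user-maintained formula string that may only use arithmetic,
    prescribed functions, numeric literals, constants and variables."""
    tree = ast.parse(formula, mode="eval")
    names = {**PRESCRIBED, **constants, **variables}
    for node in ast.walk(tree):
        if not isinstance(node, _ALLOWED):
            raise ValueError(f"disallowed syntax: {type(node).__name__}")
        if isinstance(node, ast.Constant) and not isinstance(node.value, (int, float)):
            raise ValueError("only numeric literals are allowed")
        if isinstance(node, ast.Call) and not (
                isinstance(node.func, ast.Name) and node.func.id in PRESCRIBED):
            raise ValueError("only prescribed functions may be called")
        if isinstance(node, ast.Name) and node.id not in names:
            raise NameError(f"unknown symbol: {node.id}")
    # Every node has been checked, so only whitelisted names are reachable here.
    return eval(compile(tree, "<formula>", "eval"), {"__builtins__": {}}, names)


print(evaluate_formula("lot * 3600 / max(a, b)", {"lot": 25}, {"a": 1500.0, "b": 1200.0}))
```

Rejecting unknown symbols at parse time also catches typos in user-edited formulas before a weekly calculation run.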

3.4. Monitor

Since the calculator continuously generates WPH values and stores them in the WPH database over time, the monitor can track WPH. The monitor module is powered by control charts based on statistical process control (SPC), which monitor equipment WPH by recipe in order to enhance manufacturing productivity and the reliability of capacity planning results. Control charts are very popular in industry, and Montgomery [42] pointed out at least five reasons for this:

Proven techniques for improving productivity.

Effectiveness in defect prevention.

Preventing unnecessary process adjustment.

Providing diagnostic information.

Providing information about process capability.

I followed the SPC principles and concepts in Hopp and Spearman [34] to determine the information required to create control charts, such as the upper control limit (UCL) and the lower control limit (LCL), in order to examine the mean performance of equipment WPH by recipe. Denote

as the average actual WPH of recipe r in time bucket p; the information required for a throughput control chart of the average WPH of recipe r is listed as follows:

Regarding the parameter

for the control limits, limits three sigma away from the centerline are usually found in the literature, but corresponding managerial targets are also often used to determine them.
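The exact control-chart expressions for each recipe are not reproduced in this text. Under common Shewhart assumptions, a sketch might estimate the centerline as the mean of the recipe’s historical bucket averages and sigma as their sample standard deviation, with k = 3 by default and an optional managerial target replacing the centerline. All of these choices, and the function name, are assumptions.

```python
from statistics import mean, stdev


def throughput_control_limits(bucket_wph, k=3.0, target=None):
    """Centerline and k-sigma limits for the average WPH of one recipe.

    bucket_wph: historical average actual WPH of the recipe, one value per time bucket.
    target: optional managerial target used as the centerline instead of the mean.
    """
    if len(bucket_wph) < 2:
        raise ValueError("need at least two time buckets to estimate sigma")
    center = mean(bucket_wph) if target is None else target
    sigma = stdev(bucket_wph)
    return {"CL": center, "UCL": center + k * sigma, "LCL": center - k * sigma, "sigma": sigma}


print(throughput_control_limits([48.0, 50.0, 52.0, 50.0]))
```

Estimating sigma from bucket averages is the simplest choice; a chart built from within-bucket variation would give tighter limits.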

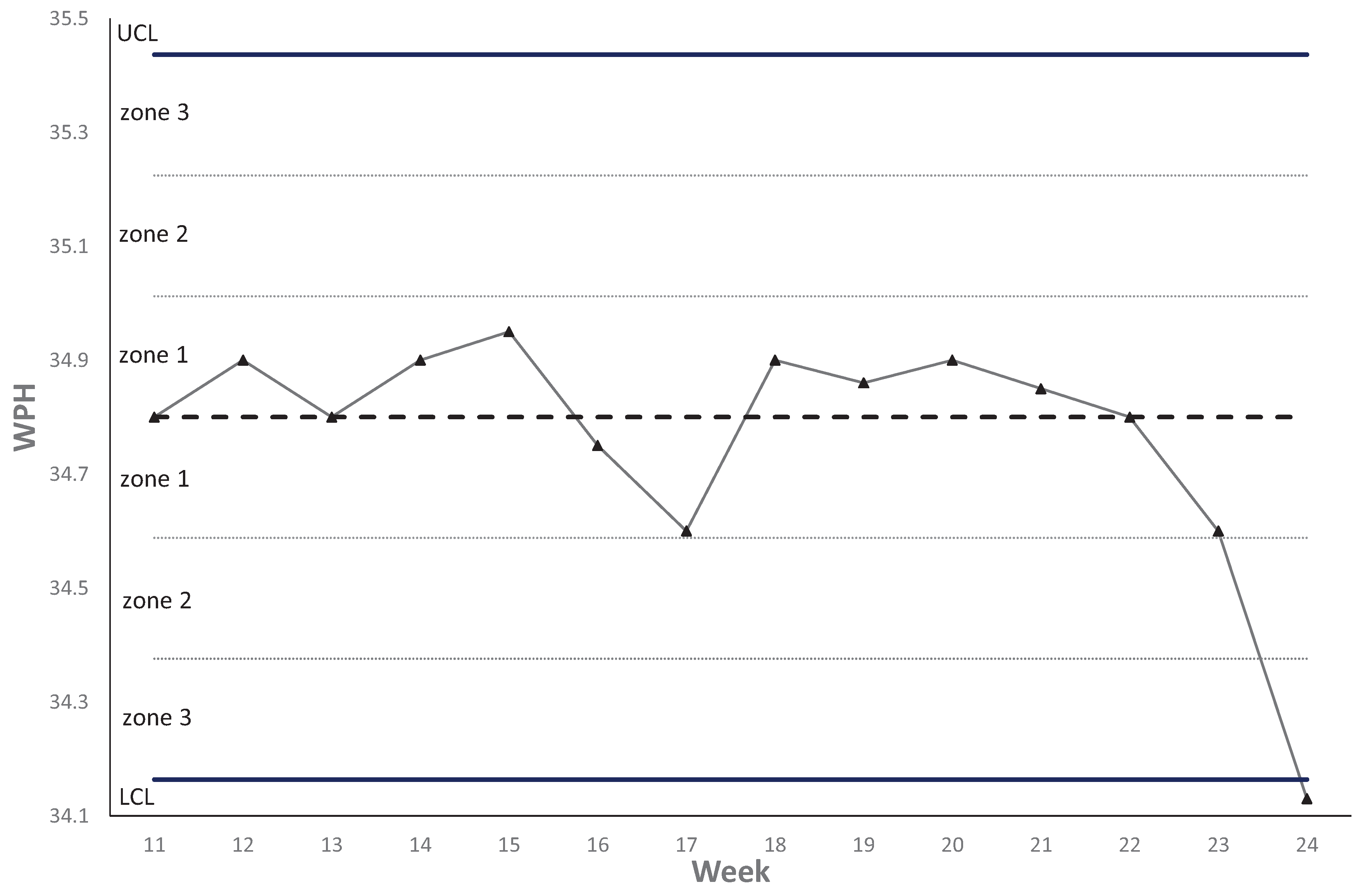

Figure 10 is an example of a throughput control chart for average WPH. Control charts make trends observable by recipe, acting as a kind of pattern recognition for identifying the causes of nonrandom behavior. Therefore, tests for special-cause variation are conducted by checking a few rules to determine whether further investigation of a particular recipe is necessary. In the monitor module, these rules are based on the suggestions in the book by Montgomery [42], combined with domain knowledge from practitioners.

Table 1 lists only the most basic test rules, because a few stricter rules may apply only to recipes for bottleneck tools. Zone 1 extends from the centerline to one sigma above or below it; Zone 2 extends from one sigma to two sigma above or below the centerline; and Zone 3 extends from two sigma to three sigma above or below the centerline.
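Table 1 itself is not reproduced in this text. As a hedged stand-in, the sketch below implements the four classic Western Electric run rules described by Montgomery, expressed with this section’s zone naming; the rule set actually used in the monitor module may differ, and the function name is hypothetical.

```python
def zone_test_violations(values, center, sigma):
    """Flag special-cause patterns using the classic Western Electric run rules.

    Zone naming follows the text: Zone 1 lies within one sigma of the centerline,
    Zone 2 between one and two sigma, Zone 3 between two and three sigma.
    Returns a list of (rule, index) pairs, where index is the last point of the pattern.
    """
    z = [(v - center) / sigma for v in values]
    # (rule name, window length, points needed, threshold in sigma), same side of the centerline
    run_rules = [
        ("2 of 3 in Zone 3 or beyond", 3, 2, 2.0),
        ("4 of 5 in Zone 2 or beyond", 5, 4, 1.0),
        ("8 in a row on one side", 8, 8, 0.0),
    ]
    hits = []
    for i, zi in enumerate(z):
        if abs(zi) > 3.0:
            hits.append(("beyond the control limits", i))
        for name, length, needed, threshold in run_rules:
            if i + 1 < length:
                continue
            window = z[i + 1 - length:i + 1]
            # Check the upper side (+1) and the lower side (-1) separately.
            if any(sum(side * x > threshold for x in window) >= needed for side in (1.0, -1.0)):
                hits.append((name, i))
    return hits
```

Any recipe with a non-empty result would be passed on for the root cause analysis described next.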

Recipes that fail any of the above tests require further root cause analysis and identification. The monitor module is therefore a useful screening tool: the capacity of analytical tools, e.g., an engineering data analysis system, can be reserved for investigating the highest-ranked abnormalities in equipment productivity. The analytical tools in the analytics module are introduced in the next section.

3.5. Analytics

The analytics module classifies abnormal data into several categories and provides various visual tools for reviewing the corresponding historical processing logs. Since the proposed system records recipe WPH continuously, applying data mining techniques to the resulting time series can reveal more information that benefits capacity management. Moreover, each equipment unit may have multiple recipes, so data mining at the equipment level becomes a high-dimensional problem.

To classify abnormal data effectively, a novel symbolic representation of time series called symbolic aggregate approximation (SAX) is adopted. SAX is a transformation technique that discretizes data in a two-step procedure: (1) convert the continuous data into a piecewise aggregate approximation (PAA) representation; and (2) map the PAA result into a discrete string representation. The primary advantages of SAX are: (1) it allows dimensionality/numerosity reduction; (2) it requires less storage space; and (3) it can take advantage of the wealth of existing data mining algorithms. For more detail on SAX, please refer to [43,44]. A classifier based on the K-nearest neighbor method [45], one of the most fundamental and simple classification methods, is then applied to classify abnormal recipes; the distance measure selected for this classifier is Euclidean distance.
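The SAX-plus-KNN pipeline described above can be sketched in Python as follows. This is a minimal illustration, not the implementation used in the case Fab: the segment count, alphabet size, the mapping of letters to integer indices before computing Euclidean distance, and the category labels are all assumptions.

```python
import math
from bisect import bisect_right
from collections import Counter
from statistics import NormalDist, mean, pstdev


def paa(series, segments):
    """Piecewise aggregate approximation: the mean of each equal-length frame."""
    if len(series) % segments:
        raise ValueError("series length must be a multiple of the segment count")
    step = len(series) // segments
    return [mean(series[i:i + step]) for i in range(0, len(series), step)]


def sax_word(series, segments, alphabet_size):
    """SAX: z-normalize, apply PAA, then map each frame mean to a letter using
    breakpoints that cut N(0, 1) into equiprobable regions."""
    mu, sd = mean(series), pstdev(series)
    normalized = [(x - mu) / sd if sd > 0 else 0.0 for x in series]
    breakpoints = [NormalDist().inv_cdf(k / alphabet_size) for k in range(1, alphabet_size)]
    return "".join(chr(ord("a") + bisect_right(breakpoints, v))
                   for v in paa(normalized, segments))


def knn_label(query_word, labeled_words, k=3):
    """Majority vote among the k labeled words nearest in Euclidean distance,
    treating each letter as its alphabet index (an assumption of this sketch)."""
    def as_vector(word):
        return [ord(ch) - ord("a") for ch in word]

    q = as_vector(query_word)
    nearest = sorted(labeled_words, key=lambda item: math.dist(q, as_vector(item[0])))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


# Hypothetical weekly WPH of one recipe and a tiny labeled history.
weekly_wph = [62, 61, 63, 60, 55, 52, 50, 49]
word = sax_word(weekly_wph, segments=4, alphabet_size=4)
training = [("abcd", "rising"), ("abcc", "rising"), ("dcba", "declining"), ("dcbb", "declining")]
label = knn_label(word, training, k=3)
```

Eight weekly values collapse into a four-letter word, which is the dimensionality reduction that makes equipment-level mining over many recipes tractable.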

Since the abnormality classifier periodically recommends a few high-risk recipes for manual review, four different tools are provided to confirm the abnormality and help practitioners find the root causes. These tools systematically carry out the traditional time-motion study for equipment in Fabs and shorten the time needed for data collection. In addition, data visualization increases job efficiency and efficacy. A brief description of these tools follows.

3.5.1. What-If Analysis

This tool is developed to answer questions such as: “How would a change in a recipe’s WPH change the result of the overall capacity planning?” Therefore, this tool not only indicates the potential for WPH improvement of a recipe, but also estimates the capacity loss caused by an abnormality in WPH.
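A what-if question of this kind can be sketched for a single recipe under simplifying assumptions: the plan for the recipe is fixed, the machine hours needed equal planned wafers divided by WPH, and the available hours do not change. The function and its parameters are hypothetical; a full what-if analysis would re-run the capacity plan across all recipes and machines.

```python
def what_if(planned_wafers, available_hours, baseline_wph, new_wph):
    """Effect of changing one recipe's WPH on a fixed plan (simplified, one recipe)."""
    if baseline_wph <= 0 or new_wph <= 0:
        raise ValueError("WPH values must be positive")
    hours_before = planned_wafers / baseline_wph   # machine hours the plan needs now
    hours_after = planned_wafers / new_wph         # machine hours at the changed WPH
    return {
        "extra_hours_needed": hours_after - hours_before,  # > 0 means capacity loss
        "capacity_wafers_before": available_hours * baseline_wph,
        "capacity_wafers_after": available_hours * new_wph,
        # Planned wafers that no longer fit in the available hours.
        "shortfall_wafers": max(0, planned_wafers - available_hours * new_wph),
    }


# Hypothetical week: 3000 wafers planned, 60 machine hours, WPH drops from 60 to 45.
print(what_if(3000, 60, 60, 45))
```

Read in reverse (a higher new WPH), the same function gives the potential gain from a WPH improvement.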

3.5.2. Timeline Motion Chart

This chart has two different viewpoints: wafer based and lot based. The former shows how wafers are processed as they pass through the process units in a machine. Bars of different positions and lengths are placed on a time axis to illustrate each wafer’s arrival time at the process units and its staying duration, and different colors identify the process units. The latter is simpler: it only illustrates when a wafer lot arrived at a machine and how long it stayed.

3.5.3. Gantt Chart

Compared with the timeline motion chart, the Gantt chart focuses more on how each process unit is occupied while handling consecutive wafers.
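The data behind such a Gantt chart can be derived from the processing logs. The sketch assumes log rows of the form (wafer, unit, track_in, track_out) with times in hours; it clips each visit to a reporting horizon, merges overlapping visits per unit, and reports each unit’s busy intervals and busy fraction. The function name is hypothetical, and plotting is left out.

```python
from collections import defaultdict


def unit_occupancy(records, horizon_start, horizon_end):
    """Busy intervals and busy fraction per process unit, the data behind a Gantt chart.

    records: iterable of (wafer, unit, track_in, track_out) with times in hours.
    Overlapping visits on the same unit are merged so busy time is not double-counted.
    """
    visits = defaultdict(list)
    for _wafer, unit, start, end in records:
        # Clip each visit to the reporting horizon.
        s, e = max(start, horizon_start), min(end, horizon_end)
        if e > s:
            visits[unit].append((s, e))
    span = horizon_end - horizon_start
    result = {}
    for unit, spans in visits.items():
        merged = []
        for s, e in sorted(spans):
            if merged and s <= merged[-1][1]:
                merged[-1] = (merged[-1][0], max(merged[-1][1], e))
            else:
                merged.append((s, e))
        busy = sum(e - s for s, e in merged)
        result[unit] = {"intervals": merged, "busy_fraction": busy / span}
    return result
```

Gaps between the merged intervals of a unit are exactly the idle periods a practitioner would look for on the chart.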

4. Industry Project for Implementing the WPH Management System

4.1. Working Procedure

The timescale of this industry project was about two years. Although most of this article focuses on systems design and development, a brief review of the project’s overall progress is worthwhile.

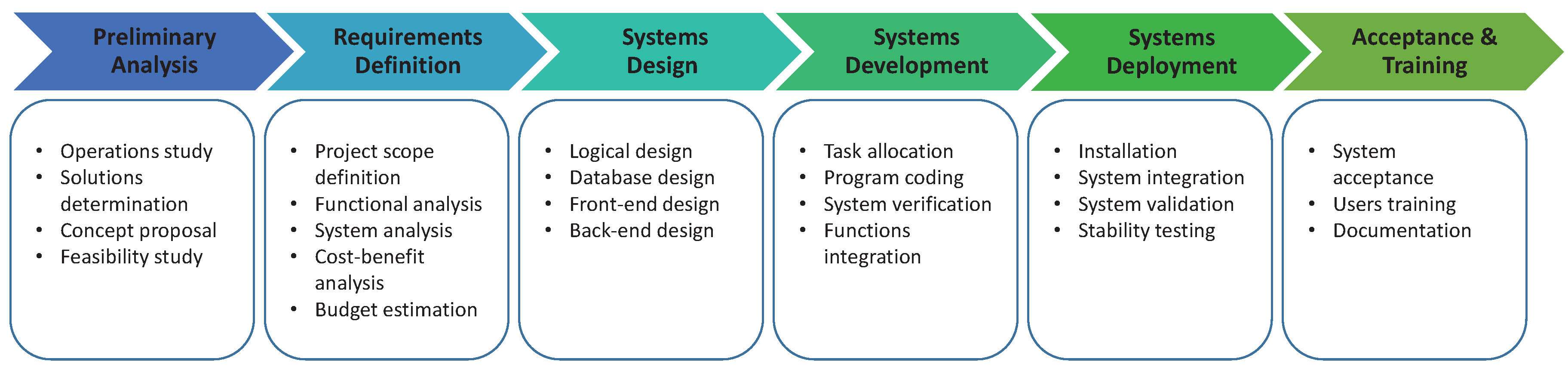

Figure 11 shows the working procedure of this WPH project, which consists of six stages: preliminary analysis, requirements definition, systems design, systems development, system deployment, and acceptance and training.

First, a preliminary analysis is necessary to find out the objectives of the case Fab by interviewing employees after investigating the operations. Alternative solutions may be discussed, and eventually, the concept proposal with the feasibility study is generated. Second, the requirements definition ensures that the project scope is defined. Based on the previous concept proposal, the in-depth functions are analyzed. The system analysis is also conducted for further cost-benefit analysis and budget estimation. After that, an initial timetable for the follow-on jobs is created.

The third stage, systems design, breaks the intended functions and features down into detailed designs, covering both invisible parts, such as operational logic design, and visible parts, such as user interface design. Once the overall designs are ready, the project moves to the systems development stage. First, the tasks are allocated to a few developers for program coding; then, a series of verifications is conducted to confirm consistency between the designs and the functional programs; finally, a system verification is performed after the functions are integrated. If no problems are found at this stage, it is time to leave the development environment and move on to the next stage.

The fifth stage begins with the system installation and then integrates this system with the current information technology environment. Next, system validation is mandatory to ensure the actual system performance, and the stability testing is also crucial to confirm that the proposed system is a sustainable solution. In the last stage, the acceptance criteria are reviewed, and related documents, e.g., database specifications and operational guidebooks, are created and transferred to customers. The whole project will end by completing the user training. In the following sections, the selected details of this project are discussed.

4.2. System Deployment

System deployment is entirely different from mere software installation; it is the conversion process that phases out the previous related operations and switches to the developed system. In general, a period of parallel running is necessary to evaluate the stability of the developed system in practice. That is, the case company still relies on its existing practices for WPH management while the developed system is put into operation. Once the system validation and stability testing are passed, users depend solely on the new system.

Although this kind of dual-system mechanism ensures a reliable environment for system validation, it is very costly to run for an extended period. Next, a few suggestions are provided to avoid an overly long period of parallel running.

4.2.1. Effective System Verification

In the systems development stage, system verification is applied to check the consistency between the designs and the functional programs. In practice, however, some errors are found only during system deployment, which increases the difficulty of system validation: if any such error is found during deployment, system validation must start over. If several errors are found one after another, the repeated validation consumes a great deal of project time. In sum, effective system verification is required before system deployment starts.

4.2.2. Preparation of Job Arrangement

During the period of parallel running, the practitioners’ workload is expected to increase; thus, the feasibility study in the preliminary analysis stage should take this issue into account, and the budget estimation in the requirements definition stage should also consider this additional future workload. However, because of the long cycle time of a large-scale system development project, the original job arrangement plan and schedule may become out of date. Therefore, continuously updating the job arrangement is key to preventing the available time of experienced employees from becoming the bottleneck of system deployment.

4.2.3. Data Validation and Exception Handling

Since the data requirements of the WPH management system are fulfilled by existing CIM-related systems such as the MES, the output data formats of these systems are considered when determining the input data specification in the system design stage. Unfortunately, many spurious errors are found during system validation, because input in an undefined data format leads to meaningless, ridiculous or misleading computing results; sometimes this situation also occurs after the system has gone live.

To prevent this kind of mistake from delaying the project, first, the input data specification should be based on the database specifications of those CIM-related systems rather than only on observed output data samples; if any of those systems lacks a database specification that guarantees its output data format matches the input data specification of the developed WPH management system, extra effort is required to fix this problem. Second, even when input/output data validation between those CIM systems and the proposed system has been carried out, it is better to build exception handling into the WPH management system. The fundamental principle is that practitioners would rather troubleshoot after receiving system notifications than receive misleading data and reports.
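The second recommendation can be sketched as an input check that turns format mismatches into notifications rather than passing them on to the calculator. The column names and expected types below form a hypothetical input specification, not the case Fab’s.

```python
from dataclasses import dataclass, field
from datetime import datetime

# Hypothetical input specification: column name -> expected Python type.
INPUT_SPEC = {"equipment": str, "recipe": str, "wafer": str,
              "track_in": datetime, "track_out": datetime}


@dataclass
class ValidationReport:
    accepted: list = field(default_factory=list)
    notifications: list = field(default_factory=list)  # (row number, list of problems)


def validate_rows(rows):
    """Accept rows that match the spec; turn every mismatch into a notification
    instead of letting it flow into the WPH calculation."""
    report = ValidationReport()
    for n, row in enumerate(rows):
        problems = [f"missing {col}" for col in INPUT_SPEC if row.get(col) is None]
        problems += [f"{col} is {type(row[col]).__name__}, expected {typ.__name__}"
                     for col, typ in INPUT_SPEC.items()
                     if row.get(col) is not None and not isinstance(row[col], typ)]
        if not problems and row["track_out"] < row["track_in"]:
            problems.append("track_out precedes track_in")
        if problems:
            report.notifications.append((n, problems))
        else:
            report.accepted.append(row)
    return report
```

Only `accepted` rows would reach the filter and calculator; `notifications` would be routed to practitioners for troubleshooting.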

4.3. System Maintenance

Consider a product with wafer starts of 10,000 pieces per month, a product cycle time of 60 days and 700 processing steps in total. For this product alone, over seven million new records are written into the WPH database each month after filtering. Therefore, to retain satisfactory operational efficiency of the proposed WPH management system, a suitable data scrubbing and backup schedule is necessary.
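The record count follows from simple steady-state arithmetic. The sketch assumes one filtered record per wafer per processing step and a 30-day month; it uses Little’s law (WIP = throughput × cycle time) to show that about 20,000 wafers are in process, and that the cycle time cancels out, so monthly records equal wafer starts times steps. Records for individual internal units would push the count higher, which is consistent with “over seven million”. The function name is hypothetical.

```python
def monthly_records(wafer_starts_per_month, steps, cycle_time_days, days_per_month=30):
    """Steady-state estimate, assuming one filtered record per wafer per step."""
    starts_per_day = wafer_starts_per_month / days_per_month
    wip = starts_per_day * cycle_time_days            # Little's law: WIP = TH x CT
    records_per_day = wip * steps / cycle_time_days   # each wafer advances steps/CT per day
    return wip, records_per_day * days_per_month


wip, records = monthly_records(10_000, 700, 60)
print(round(wip), round(records))  # prints 20000 7000000
```

A longer cycle time raises WIP but not the monthly record rate, so storage planning can be driven by wafer starts and route length alone.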

4.4. System Impact, Efficiency and Performance

In semiconductor wafer Fabs, maintaining the WPH dataset at the recipe level is very time consuming, but necessary. For better capacity management, up-to-date and accurate throughput estimates, i.e., WPH, are critical for all kinds of capacity planning and scheduling. This project implemented the proposed WPH management system, which effectively and efficiently relieves experienced employees of routine WPH maintenance so that they can focus on troubleshooting and WPH enhancement with the help of the monitor and analytics modules.

Since the system deployment adopted parallel running for system validation, the author also had the opportunity to evaluate the performance of the proposed system by comparing it with the old one. To do so, a conceptual capacity allocation model is introduced for the performance comparison; the indices, parameters and decision variables used in this optimization model are introduced first:

| Category | Description |
|---|---|
| Index | product i |
| Index | process step s of product i |
| Index | machine r |
| Index | time bucket b |
| Parameter | demand of product i in bucket b |
| Parameter | minimum fulfillment of product i in bucket b |
| Parameter | available capacity of machine r in bucket b (expressed in time) |
| Parameter | unit processing time of machine r serving step s of product i |
| Parameter | unit selling price of product i in bucket b |
| Parameter | unit cost of product i in bucket b |
| Decision variable | allocated quantity of product i in bucket b |
| Decision variable | planned production quantity of product i at step s using machine r in bucket b |

Now, the capacity allocation problem can be formulated as follows:

The objective function (1) maximizes the sum of the allocated production quantity over the planning horizon. Ideally, the available capacity of each machine should be transformed into the corresponding planned production quantity. Constraints (2) formulate the capacity restriction of each machine in each time bucket. Constraint (3) ensures that the planned profit is positive. Constraints (4) and (5) keep the allocated quantity between the minimum requirement and the demand. Constraint (6) forces the result of job assignment to equal the allocated quantity.

The goal of this capacity allocation model is to maximize the total allocation quantity with limited capacity while satisfying the minimum requirement from customers’ demand.
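The symbols of the original model are not preserved in this text. One possible algebraic reading of the verbal description of (1) to (6) is sketched below, using notation assumed here: $x_{ib}$ allocated quantity, $y_{isrb}$ planned production quantity, $D_{ib}$ demand, $M_{ib}$ minimum fulfillment, $C_{rb}$ available capacity, $p_{isr}$ unit processing time, $P_{ib}$ unit selling price, $c_{ib}$ unit cost, and $R_{is}$ the (assumed) set of machines qualified for step $s$ of product $i$. Whether constraint (3) applies per bucket or over the whole horizon is not stated; this sketch assumes the whole horizon and writes "positive" as $\ge 0$, as a linear program requires.

$$
\begin{aligned}
\max \quad & \sum_{b}\sum_{i} x_{ib} && (1)\\
\text{s.t.} \quad & \sum_{i}\sum_{s} p_{isr}\, y_{isrb} \le C_{rb} && \forall r, b \quad (2)\\
& \sum_{b}\sum_{i} \left(P_{ib} - c_{ib}\right) x_{ib} \ge 0 && (3)\\
& x_{ib} \ge M_{ib} && \forall i, b \quad (4)\\
& x_{ib} \le D_{ib} && \forall i, b \quad (5)\\
& \sum_{r \in R_{is}} y_{isrb} = x_{ib} && \forall i, s, b \quad (6)\\
& x_{ib} \ge 0,\; y_{isrb} \ge 0 && \forall i, s, r, b
\end{aligned}
$$

Under this reading, $p_{isr}$ is the reciprocal of the corresponding recipe WPH, which is how WPH estimates from the old and new systems enter the plan through constraint (2).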

Based on the planning result, the overall utilization of each machine group f can be computed as follows:

where

. Generally, machines belonging to the same machine group have the same process functionality. In addition, when the unit processing time is defined as the number of hours required to process one wafer, its reciprocal is the WPH, i.e., WPH = 1 / (unit processing time). Since WPH estimated by the old system and by the new system may lead to different planning results, the correlation coefficient between the planned and the actual overall utilization of the machine groups is selected as the performance measure for the comparison.
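The utilization formula itself is not preserved in this text. Assuming that group utilization is total planned machine hours divided by total available hours over the machines in the group, and using WPH = 1 / unit processing time, the comparison measure can be sketched as follows. The function names are hypothetical, and the Pearson coefficient is implemented directly rather than taken from a library.

```python
import math


def planned_hours(assignments):
    """Machine hours implied by a plan: sum of quantity / WPH over (quantity, wph) pairs,
    since the unit processing time in hours per wafer is 1 / WPH."""
    return sum(quantity / wph for quantity, wph in assignments)


def group_utilization(planned_by_machine, available_by_machine):
    """Overall utilization of a machine group: total planned hours / total available hours."""
    return sum(planned_by_machine) / sum(available_by_machine)


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)
```

In the comparison, one list holds the planned utilization of each machine group and the other the actual utilization of the same groups; an inflated WPH shrinks planned hours and pulls the two lists apart.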

During the period of parallel running, the product demand and the minimum fulfillment are fixed. Two different periods are selected for performance evaluation, and the corresponding warm-up period is applied to each of them to eliminate the impacts from different wafer start controls.

Figure 12 illustrates the period of parallel running and the purpose of each time slot. When the WPH used for capacity allocation planning is provided by the old system in Time Slot 2, the Pearson correlation coefficient r between planned utilization and actual utilization is 0.6548. When the planning instead adopts the WPH provided by the new system, the corresponding r is 0.9587. The value of r, which represents the degree of linear correlation, lies in [−1, 1]; 0 indicates no linear correlation, while 1 and −1 indicate total positive and total negative linear correlation, respectively. Clearly, the inaccurate WPH provided by the old system led to a poor planning result, whereas the WPH from the new system substantially improved the performance of capacity allocation planning.

Regarding production quantity, both natural randomness in the wafer Fab and the limited capability of the static capacity planning model introduce estimation errors, because it is almost impossible to account for all information. Nevertheless, comparing planning results with observed results still provides insight into the performance of the proposed solution. To evaluate the difference between the planning results, i.e., planned production quantity, and the observed results, i.e., actual production quantity, the mean squared error (MSE) of production quantity is adopted. Please note that since each planning result is a predicted value here, it is denoted as

, and

represents the observed result collected from historical data. The MSE of production quantity is computed as:

and the MSEs of the old and new systems are 4614.96 and 2834.88, respectively. The new system clearly narrows the gap between planning and observed results. In addition, the author would like to mention the planning result for allocated quantity: on average, the sum of allocated quantity during Time Slot 4 is 4% greater than that during Time Slot 2. This shows that the new system has the potential to benefit the case Fab by increasing wafer-out quantity.
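For reference, the MSE measure and the relative improvement implied by the two reported values can be computed as below. The sketch assumes the MSE averages squared errors over all compared pairs of planned and actual quantities; the exact indexing of the original equation is not preserved in this text, and the function name is hypothetical.

```python
def mse(planned, observed):
    """Mean squared error between planned (predicted) and actual production quantities."""
    if len(planned) != len(observed) or not planned:
        raise ValueError("need two non-empty sequences of equal length")
    return sum((p - o) ** 2 for p, o in zip(planned, observed)) / len(planned)


# Relative reduction implied by the reported MSE values (old 4614.96, new 2834.88).
reduction = (4614.96 - 2834.88) / 4614.96
print(f"{reduction:.1%}")  # prints 38.6%
```

Because MSE squares the errors, this reduction weights large planning misses more heavily than small ones.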