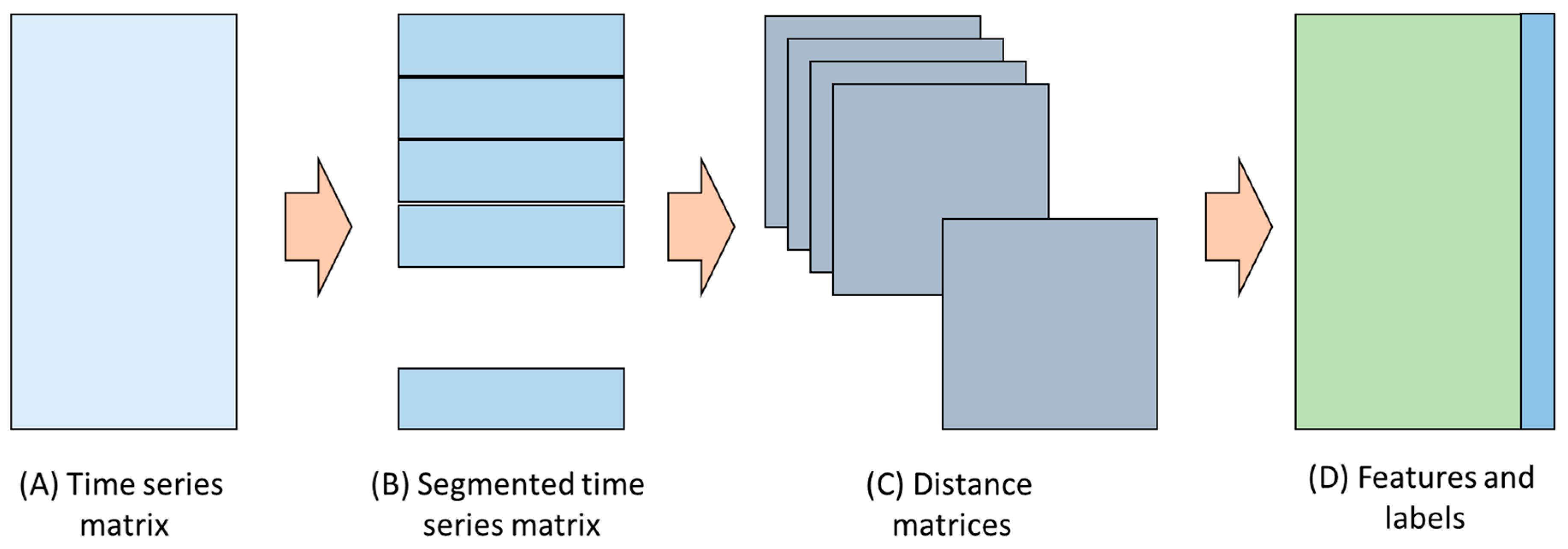

Figure 1.

Schematic workflow of the study, comprising the time series data (A), segmentation of the data (B), calculation of a distance matrix for each time series segment (C), and extraction of texture features from each matrix (D), from which models can be built to relate the features to the descriptive labels associated with the time series data.

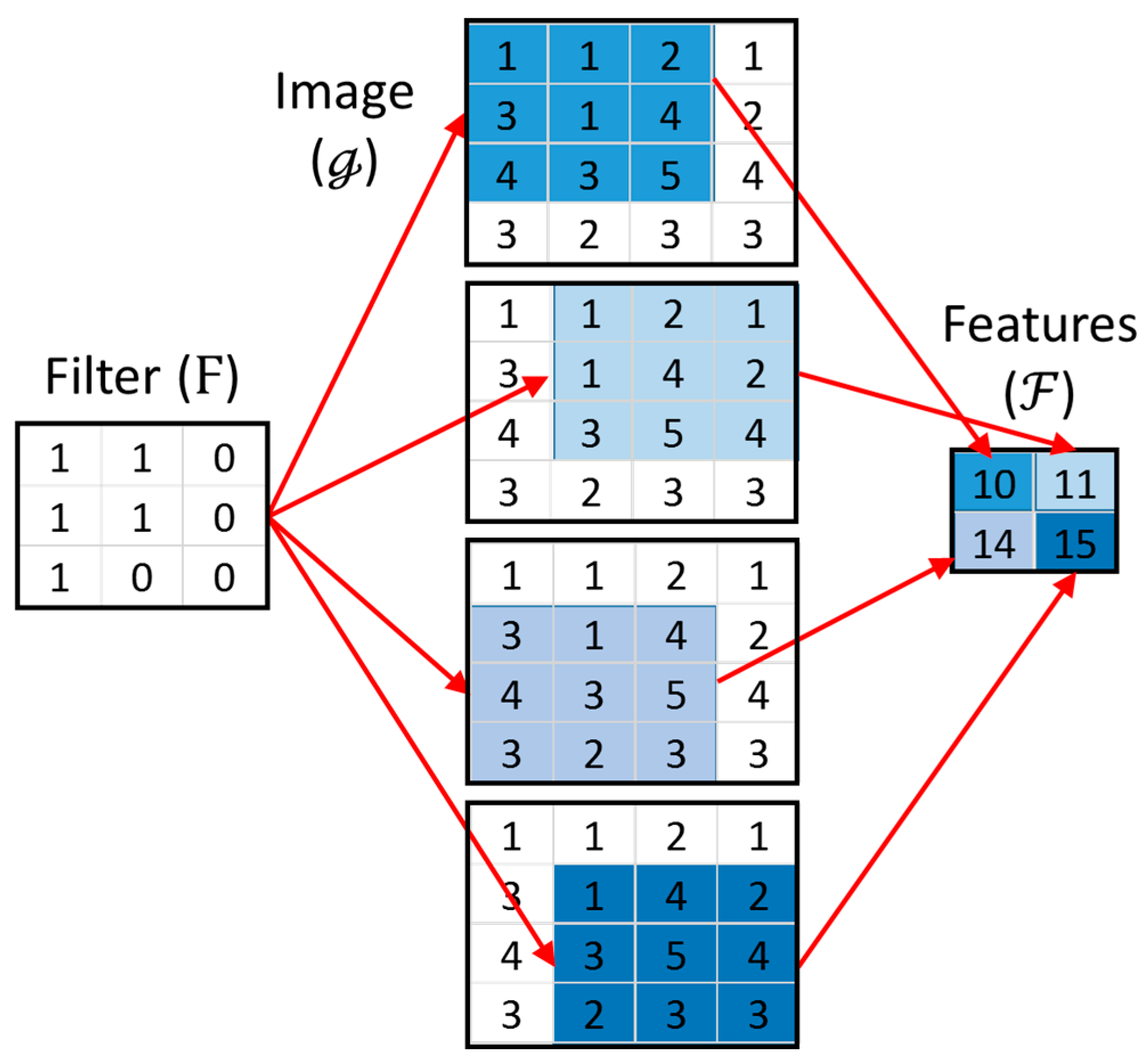

Figure 2.

Convolution of a 4 × 4 image with a 3 × 3 filter, yielding 4 features.
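
The feature count in Figure 2 can be checked with a short sketch. It assumes a stride of 1 and no padding, so the output has (4 − 3 + 1) × (4 − 3 + 1) = 4 entries; the image and filter values are illustrative only, and the operation is the unflipped (cross-correlation) form commonly used in convolutional networks.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (stride 1, no padding) and sum the products."""
    ih, iw = image.shape
    kh, kw = kernel.shape
    out = np.empty((ih - kh + 1, iw - kw + 1))
    for r in range(out.shape[0]):
        for c in range(out.shape[1]):
            out[r, c] = np.sum(image[r:r + kh, c:c + kw] * kernel)
    return out

image = np.arange(16, dtype=float).reshape(4, 4)  # illustrative 4 x 4 image
kernel = np.ones((3, 3)) / 9.0                    # illustrative 3 x 3 averaging filter
features = conv2d_valid(image, kernel)            # 2 x 2 map, i.e., 4 features
```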

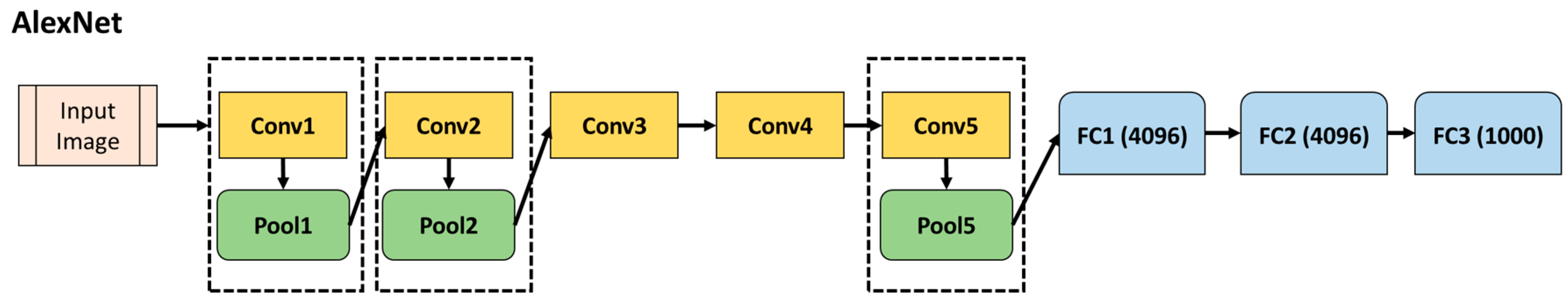

Figure 3.

A simplified version of the architecture of AlexNet showing convolutional (Conv), pooling (Pool), and fully connected (FC) layers.

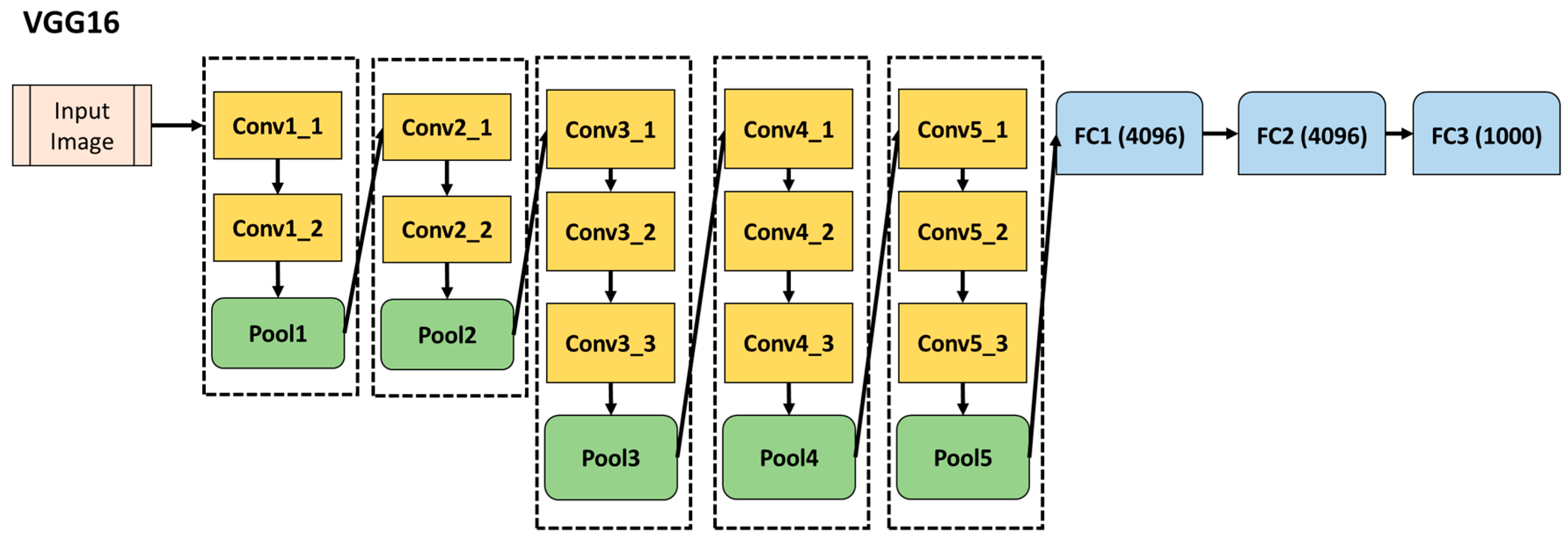

Figure 4.

A simplified version of the architecture of the VGG16 neural network showing the different convolutional (Conv), pooling (Pool), and fully connected (FC) layers.

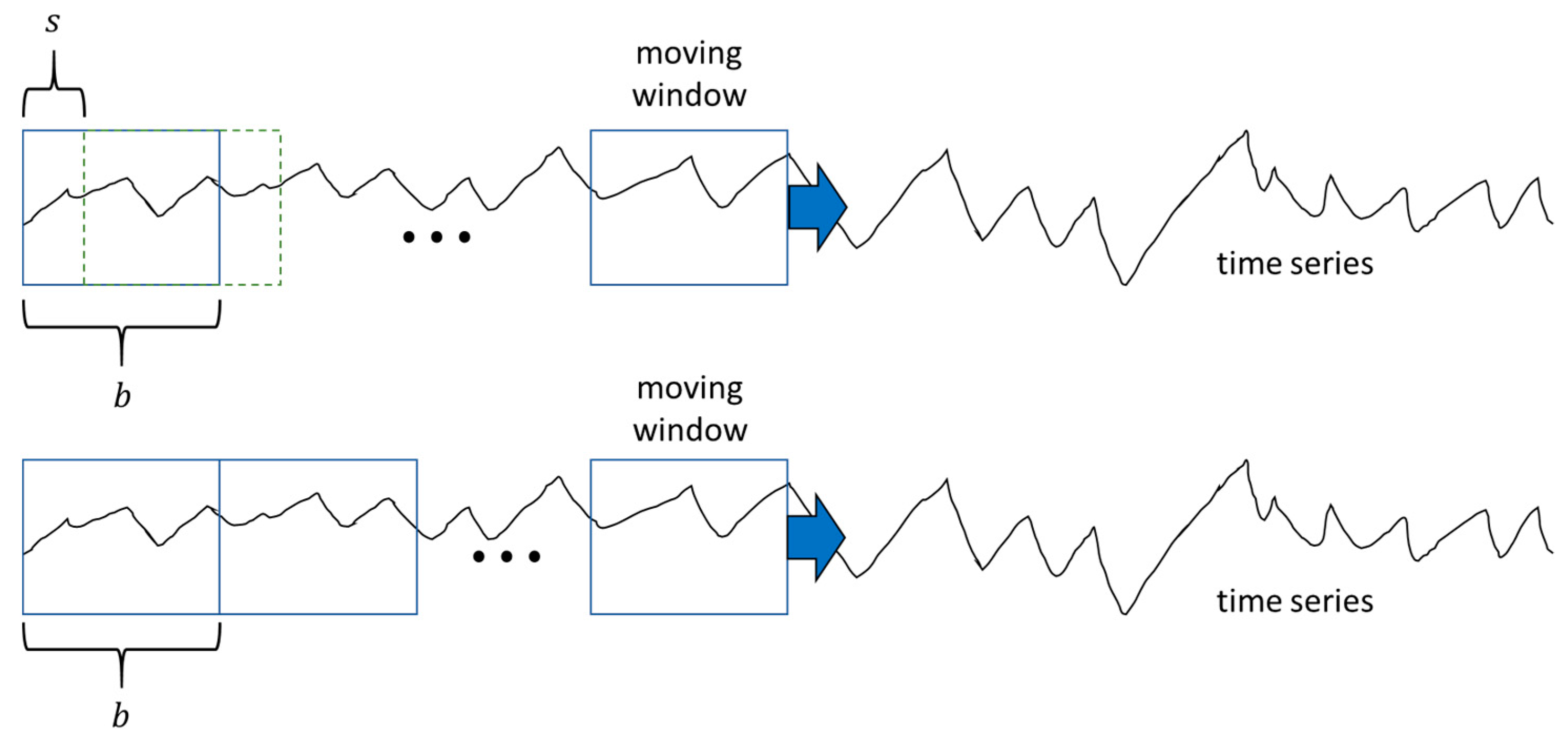

Figure 5.

Online application of the model, whereby a window of length b is moved along the time series with one of two step sizes (top and bottom).
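
The moving-window scheme in Figure 5 can be sketched as follows. The function name and the step sizes used in the example (1 and b) are illustrative assumptions, not values taken from the figure.

```python
def sliding_windows(series, b, step):
    """Yield (start, window) pairs of length b, advancing `step` samples each time."""
    for start in range(0, len(series) - b + 1, step):
        yield start, series[start:start + b]

series = list(range(10))  # toy series standing in for the process measurements
overlapping = [s for s, _ in sliding_windows(series, b=4, step=1)]  # heavy overlap
disjoint = [s for s, _ in sliding_windows(series, b=4, step=4)]     # no overlap
```

A small step gives a prediction at almost every time index at the cost of more windows to process, whereas a step equal to the window length gives one prediction per non-overlapping segment.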

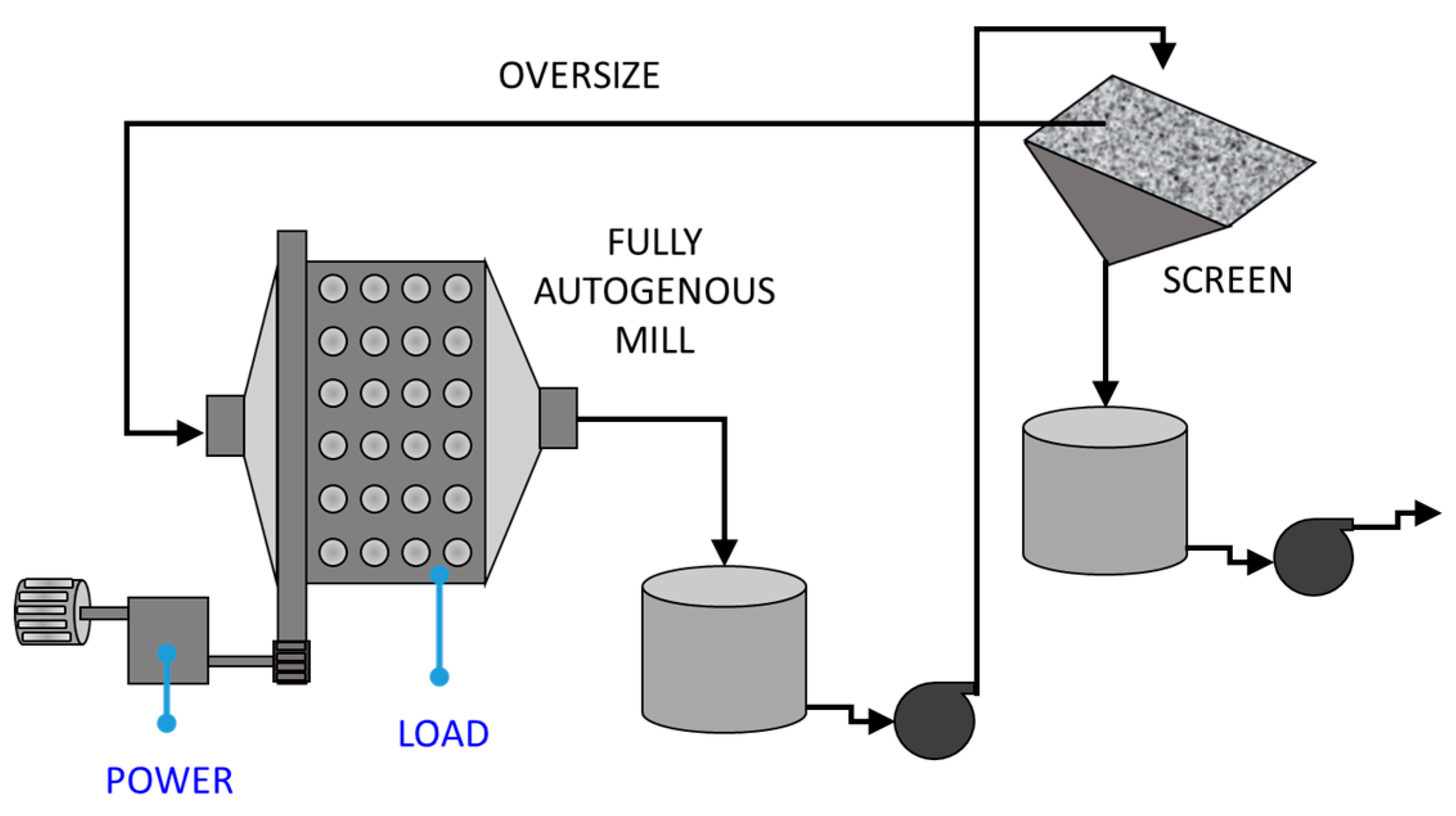

Figure 6.

Basic diagram of a fully autogenous grinding circuit (after Aldrich et al. [35]).

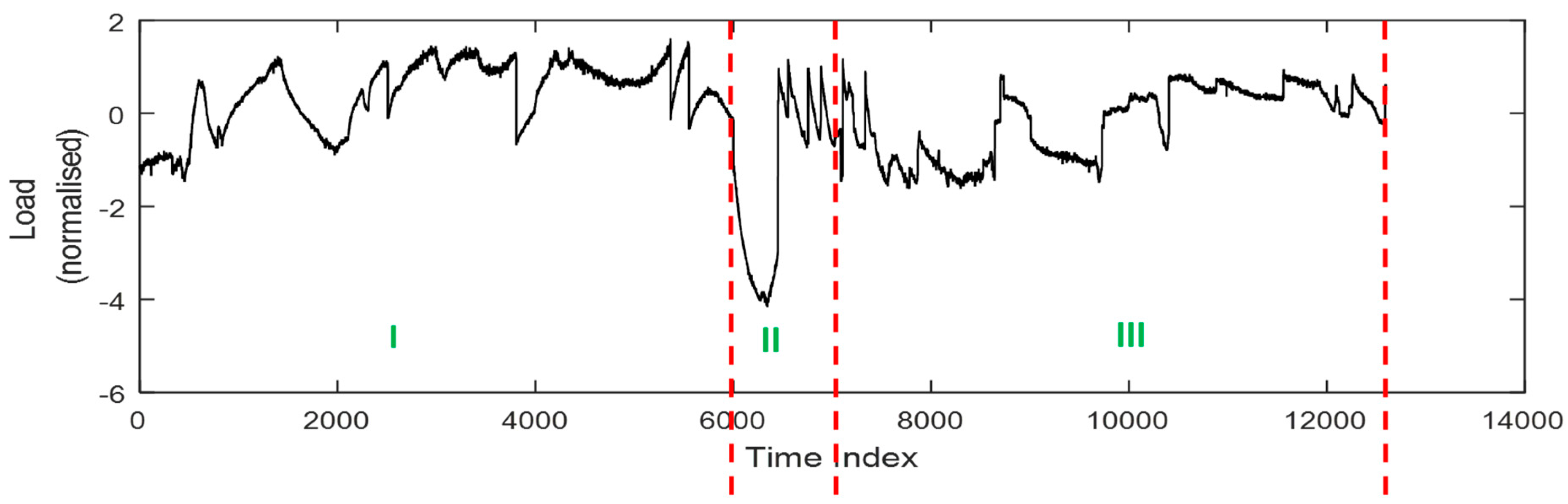

Figure 7.

The normalized mill load with labelled states: (I) normal operating condition (NOC), (II) feed disturbance, and (III) feed limited.

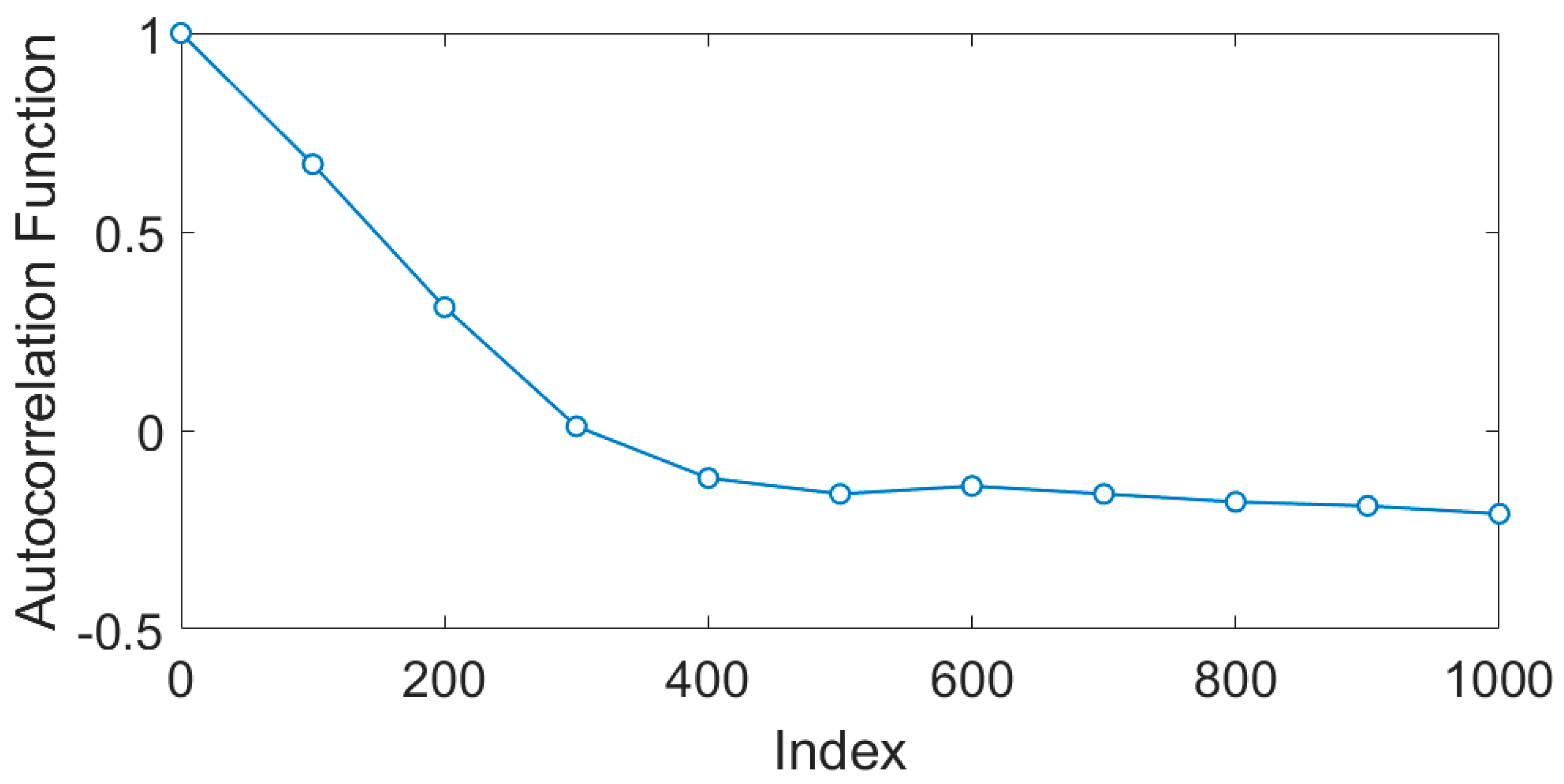

Figure 8.

The autocorrelation function (ACF) of the mill load time series, showing low to negligible values at lags exceeding the window length of b = 250.
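
A minimal sketch of how a sample ACF such as the one in Figure 8 could be computed is given below. The mill load data are not reproduced here, so a synthetic AR(1) series (an assumption, with an arbitrary coefficient of 0.95) stands in for them.

```python
import numpy as np

def acf(x, max_lag):
    """Sample autocorrelation of x for lags 0..max_lag (biased estimator)."""
    x = np.asarray(x, dtype=float)
    x = x - x.mean()
    denom = np.dot(x, x)
    return np.array([np.dot(x[:len(x) - k], x[k:]) / denom
                     for k in range(max_lag + 1)])

rng = np.random.default_rng(0)
# Hypothetical stand-in for the mill load signal: an AR(1) process.
x = np.zeros(5000)
for t in range(1, len(x)):
    x[t] = 0.95 * x[t - 1] + rng.normal()

rho = acf(x, max_lag=300)
# A window length b can then be chosen as a lag beyond which rho stays near zero.
```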

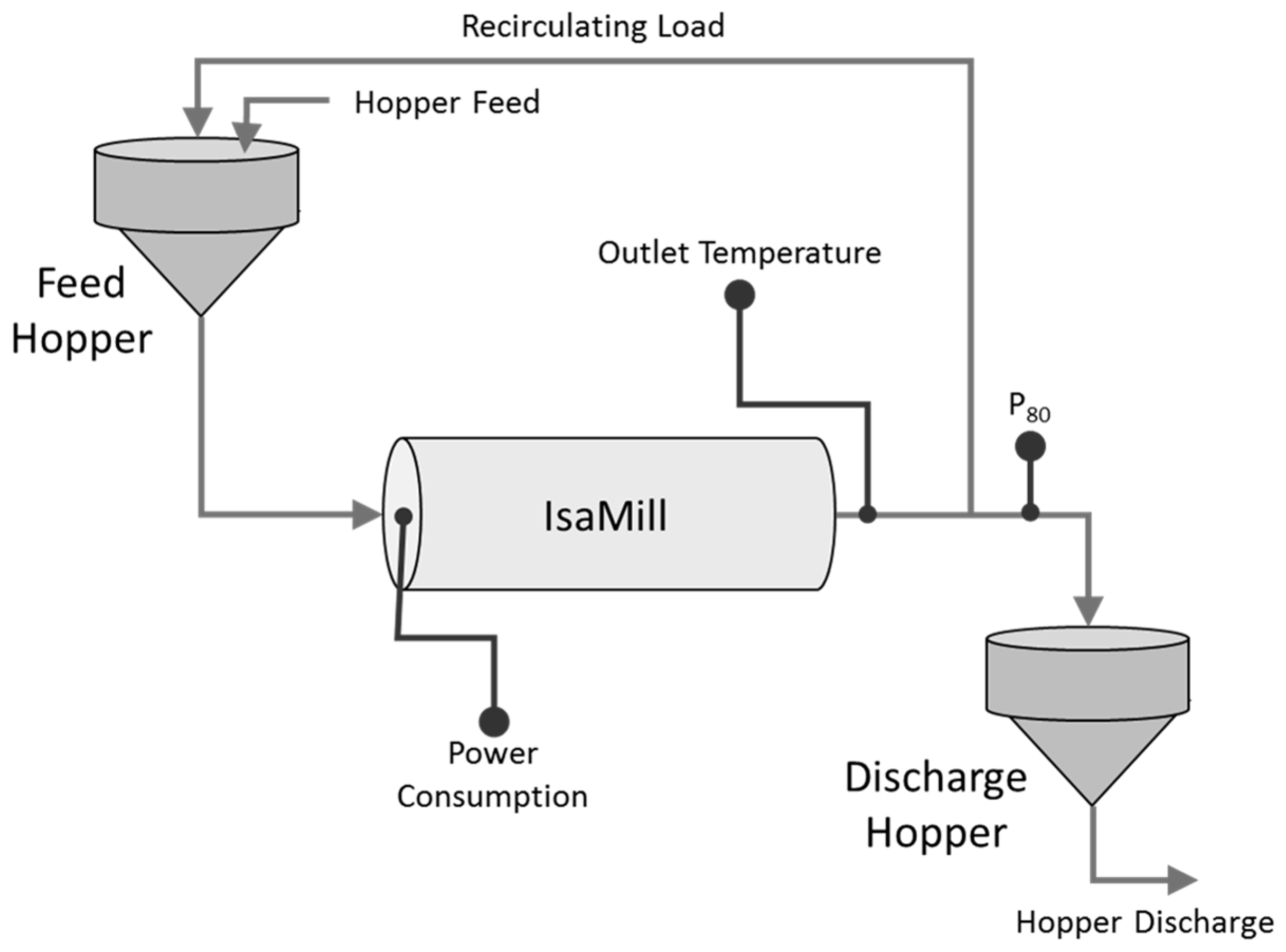

Figure 9.

Flow diagram of the IsaMill grinding circuit, showing the measuring points of the power, temperature, and P80 particle size (after Aldrich and Napier [37]).

Figure 10.

The raw time series of P80 particle size (top), scaled power consumption (middle), and scaled temperature (bottom) of the IsaMill.

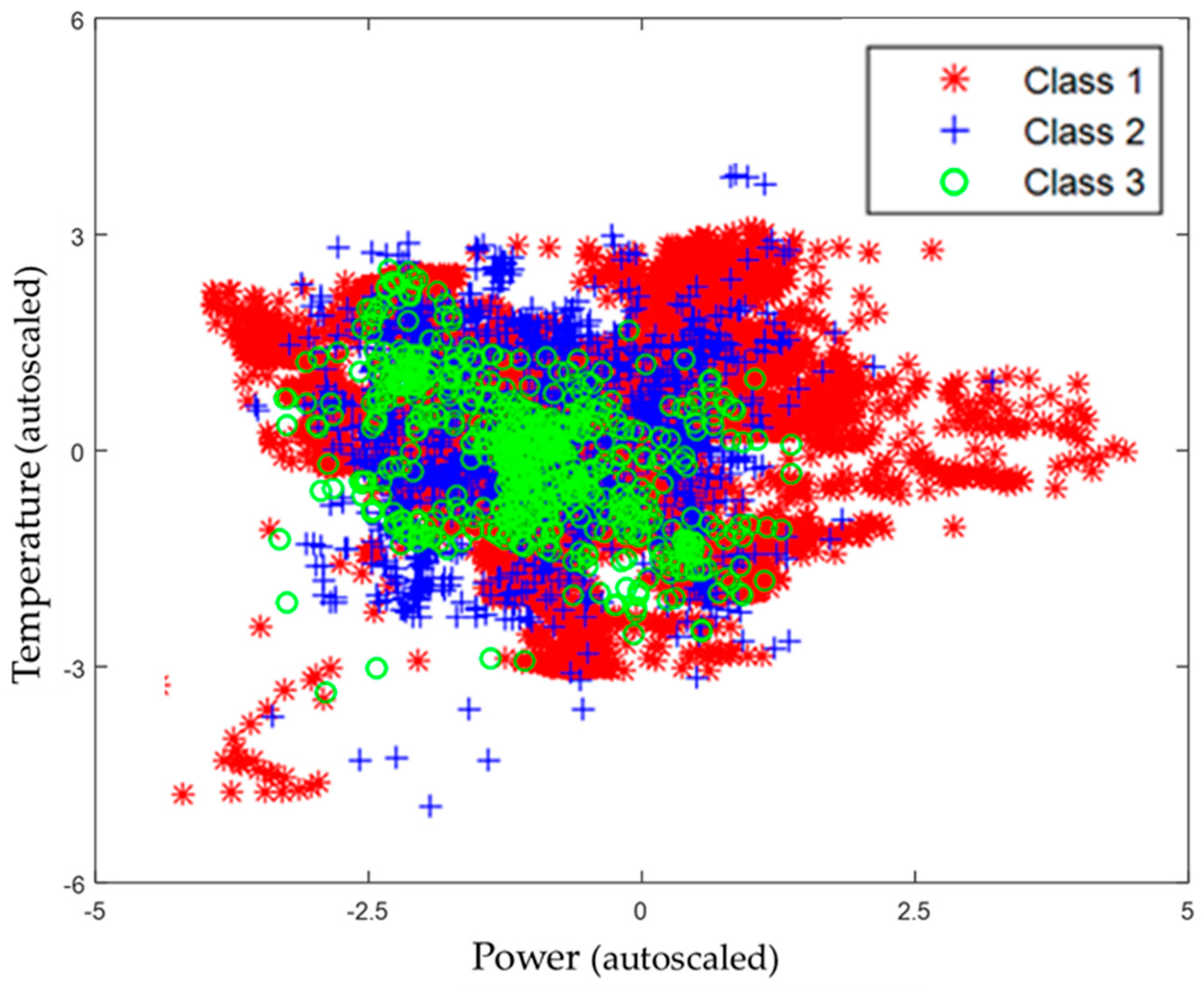

Figure 11.

Distribution of particle size classes as a function of scaled temperature and power measurements.

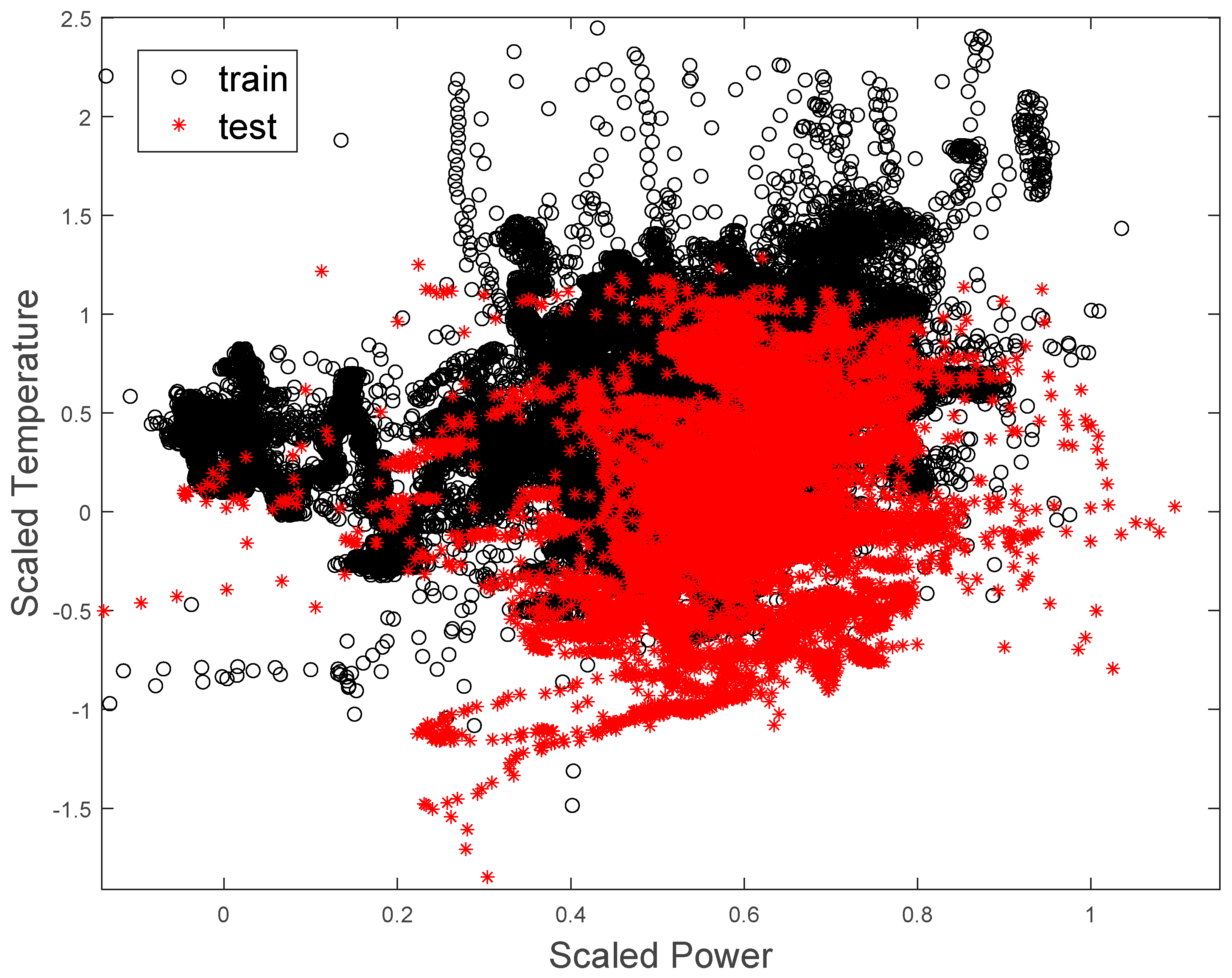

Figure 12.

Distribution of training (black ‘o’) and test (red ‘*’) data sets.

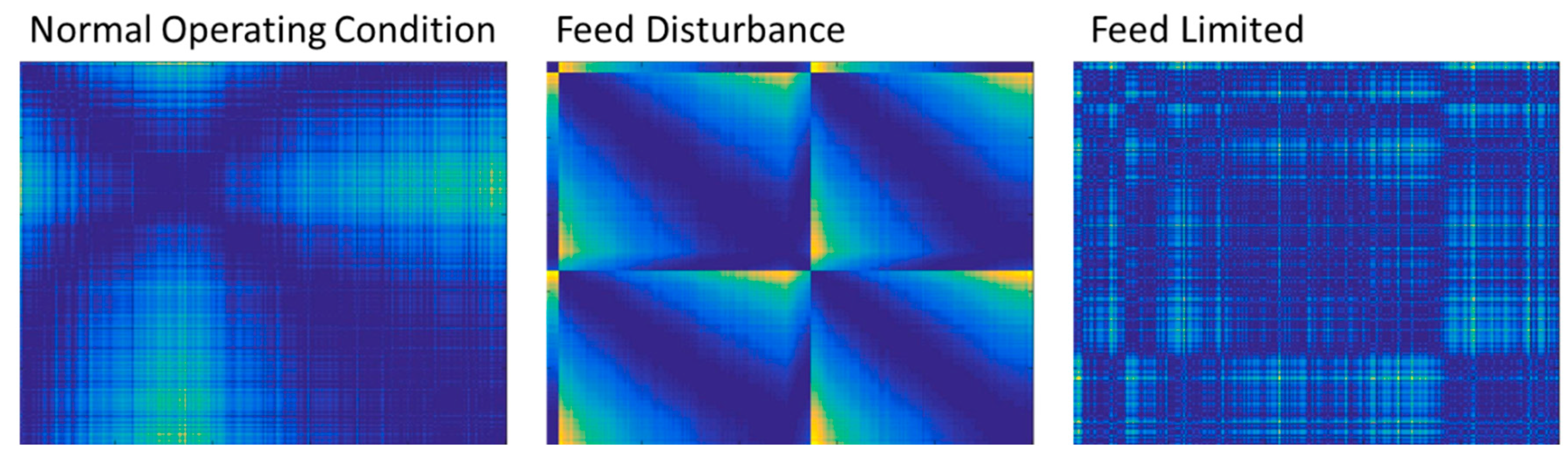

Figure 13.

Representative plots of the Euclidean distance matrices of the three control states of the autogenous mill, i.e., normal operating conditions (left), feed disturbance (middle), and a feed limited state (right). Small distances are shown in dark blue and large distances in bright yellow.
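
A distance matrix of the kind plotted in Figure 13 can be sketched as below, assuming each point in a segment is a single scalar observation (no embedding), in which case the Euclidean distance reduces to an absolute difference. The sine-wave segment is a placeholder for real mill load data.

```python
import numpy as np

def distance_matrix(segment):
    """Pairwise Euclidean distances between all samples of a 1-D segment."""
    x = np.asarray(segment, dtype=float)
    return np.abs(x[:, None] - x[None, :])  # broadcasting builds the full b x b matrix

segment = np.sin(np.linspace(0.0, 4.0 * np.pi, 250))  # placeholder segment, b = 250
D = distance_matrix(segment)
```

The resulting matrix is symmetric with a zero diagonal, so it can be rendered directly as an image for texture feature extraction.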

Figure 14.

Visualization of the texton feature set of the mill load data (Case Study 1) as projected onto 2D space using linear discriminant scores, LDA1 and LDA2.

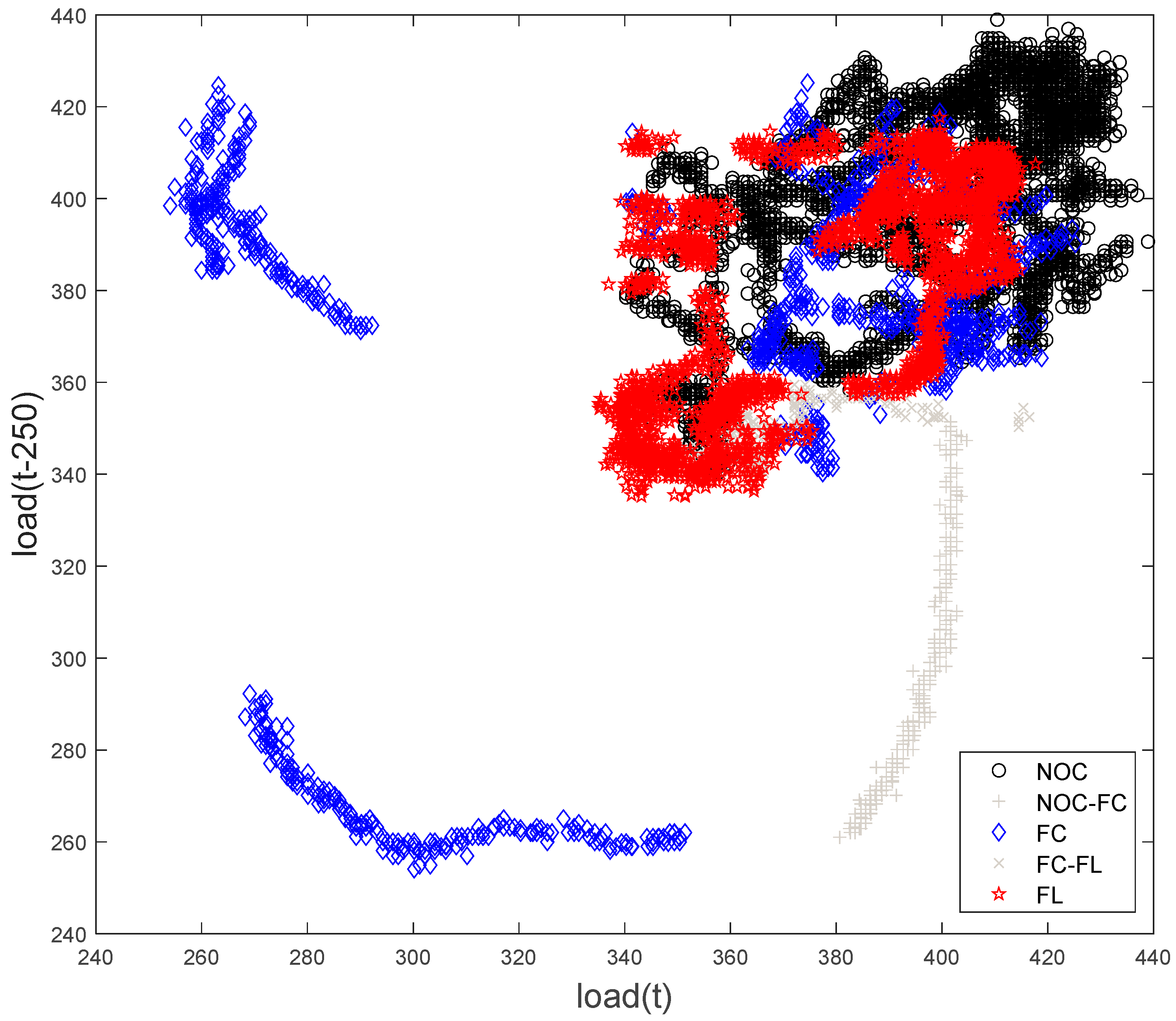

Figure 15.

Visualization of the LTM feature set of the mill load data (Case Study 1), showing the NOC, FC, and FL control states. The scores labelled ‘NOC–FC’ and ‘FC–FL’ mark transitions between the NOC and FC states and between the FC and FL states, respectively.

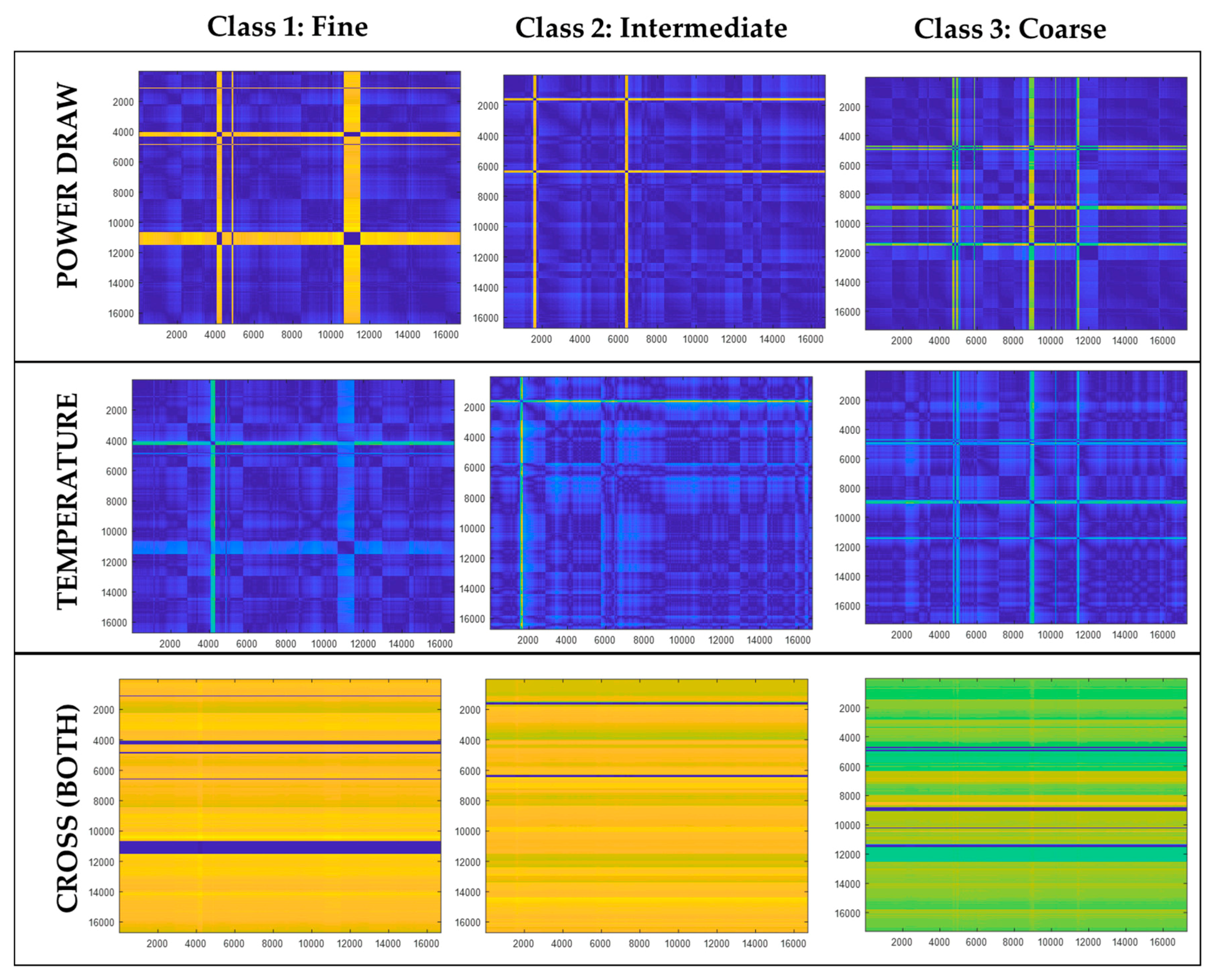

Figure 16.

Distance matrix plots of the three classes, fine (left), intermediate (middle), and coarse (right), using time series of power draw (top), outlet temperature (middle), and the cross distance of these variables (bottom). Small distances are shown in dark blue and large distances in bright yellow.
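
One plausible reading of the cross distance in the bottom row of Figure 16 is the absolute difference between every sample of one scaled variable and every sample of the other. Both this definition and the min-max scaling below are assumptions made for illustration; the numeric values are made up.

```python
import numpy as np

def minmax_scale(x):
    """Scale a 1-D array linearly onto [0, 1] (assumed scaling)."""
    x = np.asarray(x, dtype=float)
    return (x - x.min()) / (x.max() - x.min())

def cross_distance_matrix(x, y):
    """Entry (i, j) is |x_i - y_j| for two scaled series x and y."""
    return np.abs(x[:, None] - y[None, :])

power = minmax_scale([10.0, 20.0, 30.0])              # illustrative power values
temperature = minmax_scale([50.0, 60.0, 70.0, 80.0])  # illustrative temperatures
C = cross_distance_matrix(power, temperature)
```

Unlike the distance matrix of a single series, this matrix is generally not symmetric and its diagonal need not be zero.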

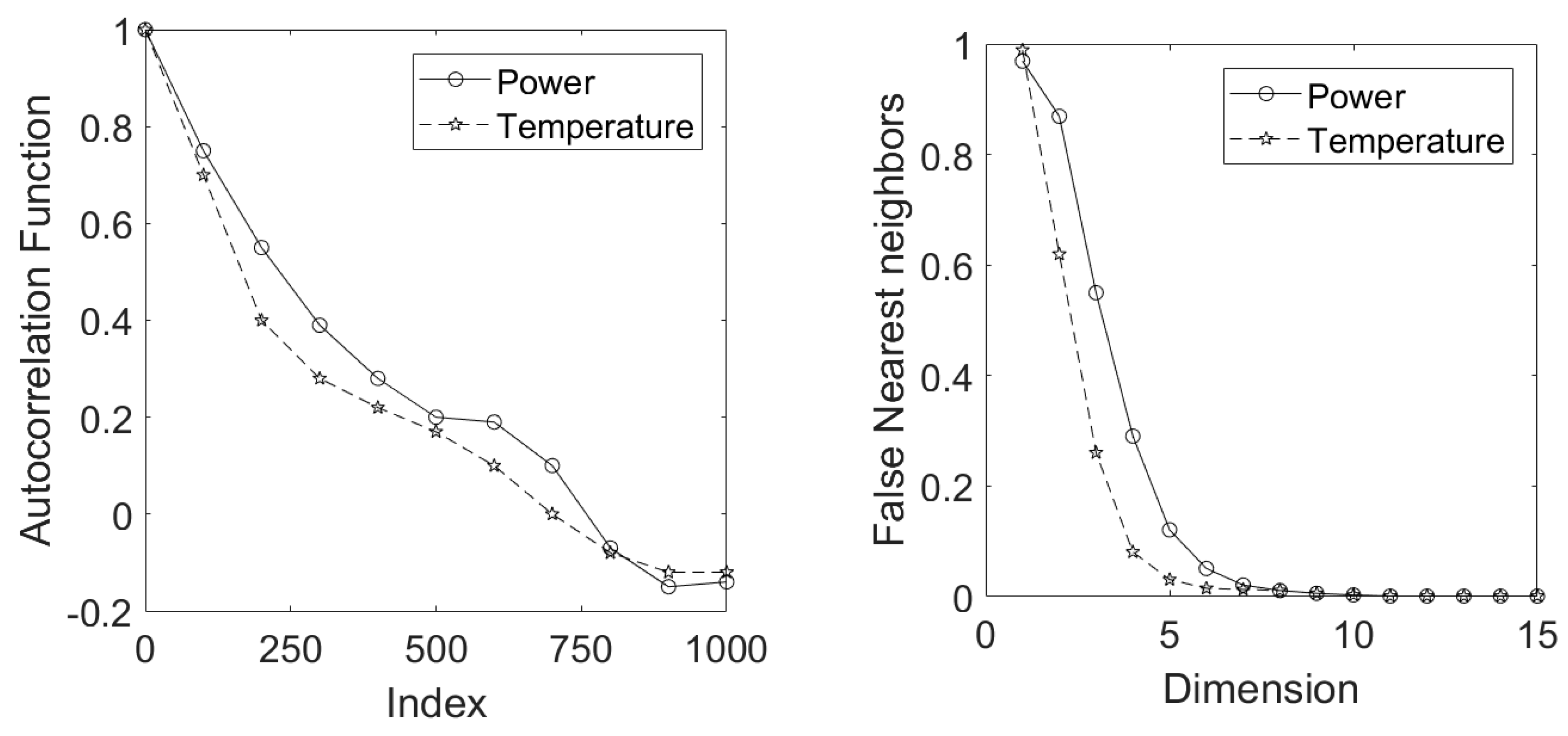

Figure 17.

The autocorrelation function (left) and false nearest neighbor (right) plots of power draw (solid lines) and outlet temperature (broken lines) time series.

Figure 18.

The reconstructed attractors of power draw (left) and outlet temperature (right) time series data with legend: fine (red ‘*’), intermediate (blue ‘+’), and coarse (green ‘o’).
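
Attractors such as those in Figure 18 are typically reconstructed by time-delay embedding, with the lag guided by the autocorrelation function and the dimension by the false nearest neighbor criterion. The sketch below shows the embedding step only; the lag and dimension values are hypothetical and are not those used in the study.

```python
import numpy as np

def delay_embed(x, dim, lag):
    """Return rows [x[t], x[t + lag], ..., x[t + (dim - 1) * lag]]."""
    x = np.asarray(x, dtype=float)
    n = len(x) - (dim - 1) * lag
    if n <= 0:
        raise ValueError("series too short for this dim and lag")
    return np.column_stack([x[i * lag:i * lag + n] for i in range(dim)])

x = np.arange(10.0)                  # toy series
emb = delay_embed(x, dim=3, lag=2)   # hypothetical embedding parameters
```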

Table 1.

Mill load measurements and number of time series segments for b = 250.

| Mill States | Number of Observations | Number of Segments |
|---|---|---|
| Normal Operating Condition (NOC) | 6000 | 24 |
| Feed Disturbance (FD) | 1500 | 6 |
| Feed Limited (FL) | 5100 | 20 |
| | 12,600 | 50 |
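
The segment counts in Table 1 are consistent with cutting each state into complete, non-overlapping windows of length b = 250 and discarding any remainder. That interpretation is an assumption; under it, the counts can be reproduced as follows.

```python
def count_segments(n_observations, b=250):
    """Number of complete, non-overlapping windows of length b."""
    return n_observations // b

observations = {"NOC": 6000, "FD": 1500, "FL": 5100}  # from Table 1
counts = {state: count_segments(n) for state, n in observations.items()}
total_segments = sum(counts.values())
```

Under this assumption, the FL state leaves 100 observations unused, since 5100 is not a multiple of 250.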

Table 2.

Distribution of P80 particle size classes in the IsaMill.

| Particle Size (μm) | Class | Description | No. of Observations | No. of Segments * |
|---|---|---|---|---|
| 13 ≤ P80 < 15 | 1 | Fine (F) | 16,712 | 160 |
| 15 ≤ P80 < 18 | 2 | Intermediate (I) | 40,330 | 397 |
| P80 ≥ 18 | 3 | Coarse (C) | 17,280 | 166 |
| | | | 74,312 | 723 |
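
The class labels in Table 2 can be assigned from a P80 measurement with a simple threshold lookup. The sketch assumes that the boundaries at 15 and 18 μm belong to the coarser class, and it does not enforce the lower limit of 13 μm; the function name is illustrative.

```python
from bisect import bisect_right

BOUNDS = [15.0, 18.0]  # class boundaries in micrometres (Table 2)
LABELS = {1: "Fine", 2: "Intermediate", 3: "Coarse"}

def p80_class(p80):
    """Map a P80 value (in micrometres) to class 1, 2, or 3."""
    return bisect_right(BOUNDS, p80) + 1

example = [(v, LABELS[p80_class(v)]) for v in (14.2, 15.0, 17.9, 18.0)]
```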

Table 3.

Results of classification (% correct) using a cubic-kernel support vector machine with predictor sets derived from the mill power data.

| Run | TEXTONS (Train) | TEXTONS (Test) | ALEXNET (Train) | ALEXNET (Test) | VGG16 (Train) | VGG16 (Test) | LTM (Train) | LTM (Test) |
|---|---|---|---|---|---|---|---|---|
| 1 | 83.3 | 60.0 | 71.3 | 62.7 | 76.6 | 63.4 | 59.5 | 60.9 |
| 2 | 86.5 | 41.9 | 76.7 | 47.3 | 77.9 | 46.5 | 65.5 | 37.1 |
| 3 | 85.0 | 44.7 | 74.2 | 57.5 | 78.3 | 48.1 | 62.7 | 53.6 |
| 4 | 83.7 | 64.9 | 71.6 | 65.0 | 73.6 | 66.4 | 65.4 | 55.3 |
| 5 | 84.3 | 54.2 | 72.0 | 58.8 | 78.1 | 62.6 | 62.9 | 54.6 |
| AVG | 84.6 | 53.1 | 73.2 | 56.3 | 76.9 | 57.4 | 63.2 | 52.3 |
| STD | 1.26 | 9.80 | 2.28 | 8.39 | 1.96 | 9.35 | 2.46 | 8.96 |
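
The AVG and STD rows appear to be the arithmetic mean and the sample standard deviation (n − 1 denominator) over the five runs. This can be checked against the textons columns of Table 3 with a short sketch using the Python standard library:

```python
from statistics import mean, stdev

# Textons results for the five runs, copied from Table 3.
textons_train = [83.3, 86.5, 85.0, 83.7, 84.3]
textons_test = [60.0, 41.9, 44.7, 64.9, 54.2]

avg_train, std_train = mean(textons_train), stdev(textons_train)
avg_test, std_test = mean(textons_test), stdev(textons_test)
```

The much larger spread of the test scores relative to the training scores indicates that performance depends strongly on how the data are split between runs.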

Table 4.

Results of classification (% correct) using a cubic-kernel support vector machine with predictor sets derived from the mill temperature data.

| Run | TEXTONS (Train) | TEXTONS (Test) | ALEXNET (Train) | ALEXNET (Test) | VGG16 (Train) | VGG16 (Test) | LTM (Train) | LTM (Test) |
|---|---|---|---|---|---|---|---|---|
| 1 | 79.1 | 57.0 | 72.1 | 59.2 | 75.6 | 59.9 | 66.3 | 68.9 |
| 2 | 84.6 | 36.6 | 75.5 | 46.5 | 77.9 | 47.3 | 69.6 | 55.4 |
| 3 | 79.5 | 48.8 | 73.9 | 47.3 | 76.3 | 49.6 | 70.8 | 58.3 |
| 4 | 80.3 | 58.0 | 78.6 | 58.0 | 75.9 | 62.6 | 71.3 | 61.1 |
| 5 | 79.1 | 63.4 | 74.8 | 60.3 | 76.0 | 64.1 | 68.5 | 63.5 |
| AVG | 80.5 | 52.8 | 75.0 | 54.3 | 76.3 | 56.7 | 69.3 | 61.4 |
| STD | 2.33 | 10.43 | 2.39 | 6.77 | 0.91 | 7.72 | 2.00 | 5.16 |

Table 5.

Results of classification (% correct) using a cubic-kernel support vector machine with predictor sets derived from the cross recurrence combination of mill temperature and power data.

| Run | TEXTONS (Train) | TEXTONS (Test) | ALEXNET (Train) | ALEXNET (Test) | VGG16 (Train) | VGG16 (Test) | LTM (Train) | LTM (Test) |
|---|---|---|---|---|---|---|---|---|
| 1 | 69.9 | 59.2 | 59.0 | 57.8 | 69.2 | 63.1 | NA | NA |
| 2 | 76.4 | 50.3 | 65.2 | 45.0 | 74.2 | 46.5 | NA | NA |
| 3 | 74.6 | 46.5 | 67.1 | 46.5 | 74.6 | 48.0 | NA | NA |
| 4 | 72.8 | 64.1 | 59.8 | 67.9 | 73.1 | 66.4 | NA | NA |
| 5 | 72.4 | 58.8 | 63.0 | 61.8 | 72.2 | 60.3 | NA | NA |
| AVG | 73.2 | 55.8 | 62.8 | 55.8 | 72.7 | 56.9 | NA | NA |
| STD | 2.44 | 7.18 | 3.45 | 9.87 | 2.15 | 9.05 | NA | NA |

Table 6.

Results of classification (% correct) using a cubic-kernel support vector machine with predictor sets derived from the combination of the mill temperature and power data.

| Run | TEXTONS (Train) | TEXTONS (Test) | ALEXNET (Train) | ALEXNET (Test) | VGG16 (Train) | VGG16 (Test) | LTM (Train) | LTM (Test) |
|---|---|---|---|---|---|---|---|---|
| 1 | 86.1 | 78.9 | 80.9 | 77.5 | 85.2 | 78.2 | 60.5 | 61.3 |
| 2 | 89.7 | 45.0 | 80.6 | 47.3 | 86.3 | 47.3 | 77.4 | 41.9 |
| 3 | 91.1 | 43.1 | 80.4 | 51.9 | 85.7 | 52.6 | 75.3 | 55.9 |
| 4 | 89.0 | 65.0 | 80.7 | 59.8 | 83.4 | 66.4 | 72.6 | 61.2 |
| 5 | 88.8 | 74.1 | 78.9 | 69.2 | 86.2 | 71.8 | 73.4 | 51.2 |
| AVG | 88.9 | 61.2 | 80.3 | 61.1 | 85.4 | 63.3 | 71.8 | 54.3 |
| STD | 1.83 | 16.5 | 0.80 | 12.4 | 1.18 | 13.0 | 6.60 | 8.10 |