3D Reconstruction of Plant/Tree Canopy Using Monocular and Binocular Vision

Abstract

1. Introduction

- Provide a new method for estimating the camera calibration matrix at the metric level.

- Apply the fast software VisualSFM to complex objects, e.g., plants and trees, to generate full-view 3D reconstructions.

- Generate a metric 3D reconstruction from the projective reconstruction, achieving real-size 3D reconstruction of complex agricultural plant scenes.
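The third contribution can be illustrated with a minimal sketch. It assumes that a 4×4 upgrading homography `H` has already been estimated (for example from calibrated camera intrinsics) and that real size is fixed by a known distance between the two stereo camera centres. The function names `apply_homography` and `metric_upgrade` and the `baseline_mm` parameter are hypothetical and are not taken from this paper; the sketch only shows the generic steps of mapping homogeneous projective points through `H` and then rescaling the result.

```python
import math


def apply_homography(H, X):
    """Map a homogeneous 4-vector X through the 4x4 matrix H and dehomogenize."""
    Y = [sum(H[r][c] * X[c] for c in range(4)) for r in range(4)]
    if abs(Y[3]) < 1e-12:
        raise ValueError("point maps to (or near) the plane at infinity")
    return tuple(Y[i] / Y[3] for i in range(3))


def metric_upgrade(points_h, H, center_a_h, center_b_h, baseline_mm):
    """Upgrade projective points with H, then fix the global scale.

    points_h: homogeneous projective points (x, y, z, w).
    H: 4x4 upgrading homography (assumed to be already estimated).
    center_a_h, center_b_h: homogeneous projective centres of the two cameras.
    baseline_mm: known distance between the two camera centres (assumed input).
    Returns Euclidean 3D points in mm.
    """
    ca = apply_homography(H, center_a_h)
    cb = apply_homography(H, center_b_h)
    d = math.dist(ca, cb)
    if d == 0:
        raise ValueError("camera centres coincide after the upgrade")
    s = baseline_mm / d  # reconstruction units -> mm
    upgraded = []
    for X in points_h:
        p = apply_homography(H, X)
        # Scale about camera A so that camera A keeps its position.
        upgraded.append(tuple(ca[i] + s * (p[i] - ca[i]) for i in range(3)))
    return upgraded
```

A metric reconstruction is only defined up to a similarity transform, so the final rescaling is the step that turns it into a real-size model; scaling about the first camera centre simply keeps that camera fixed.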

2. Materials and Methods

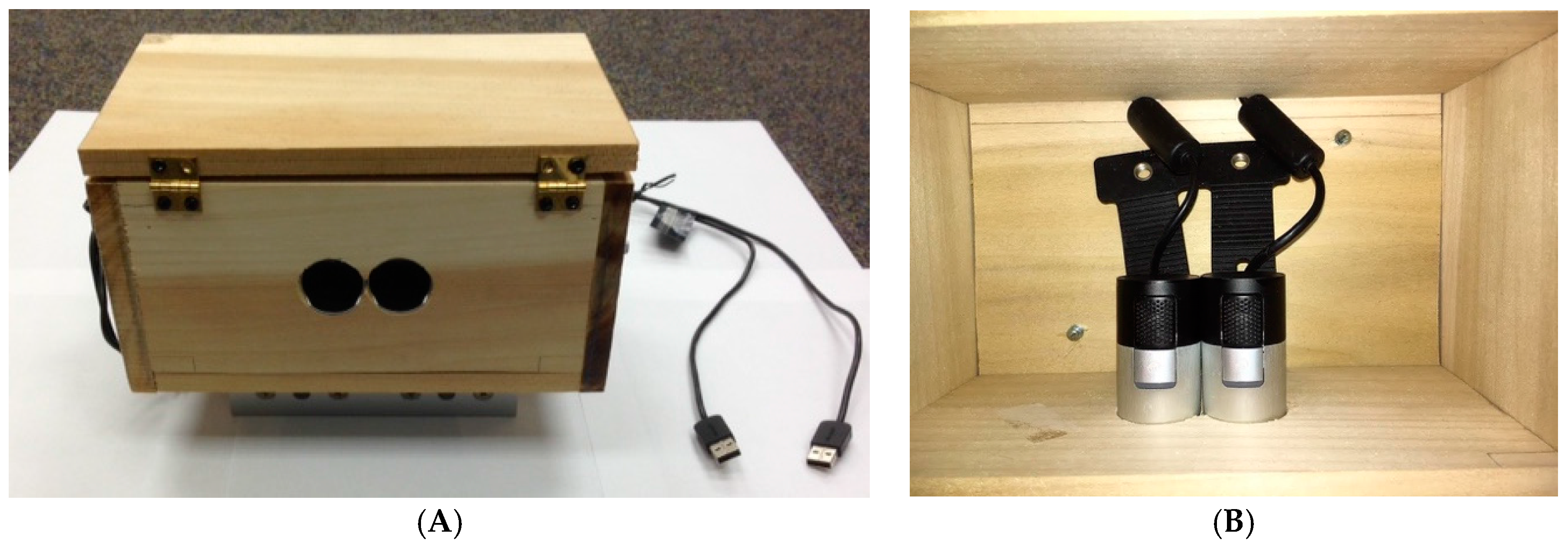

2.1. Hardware

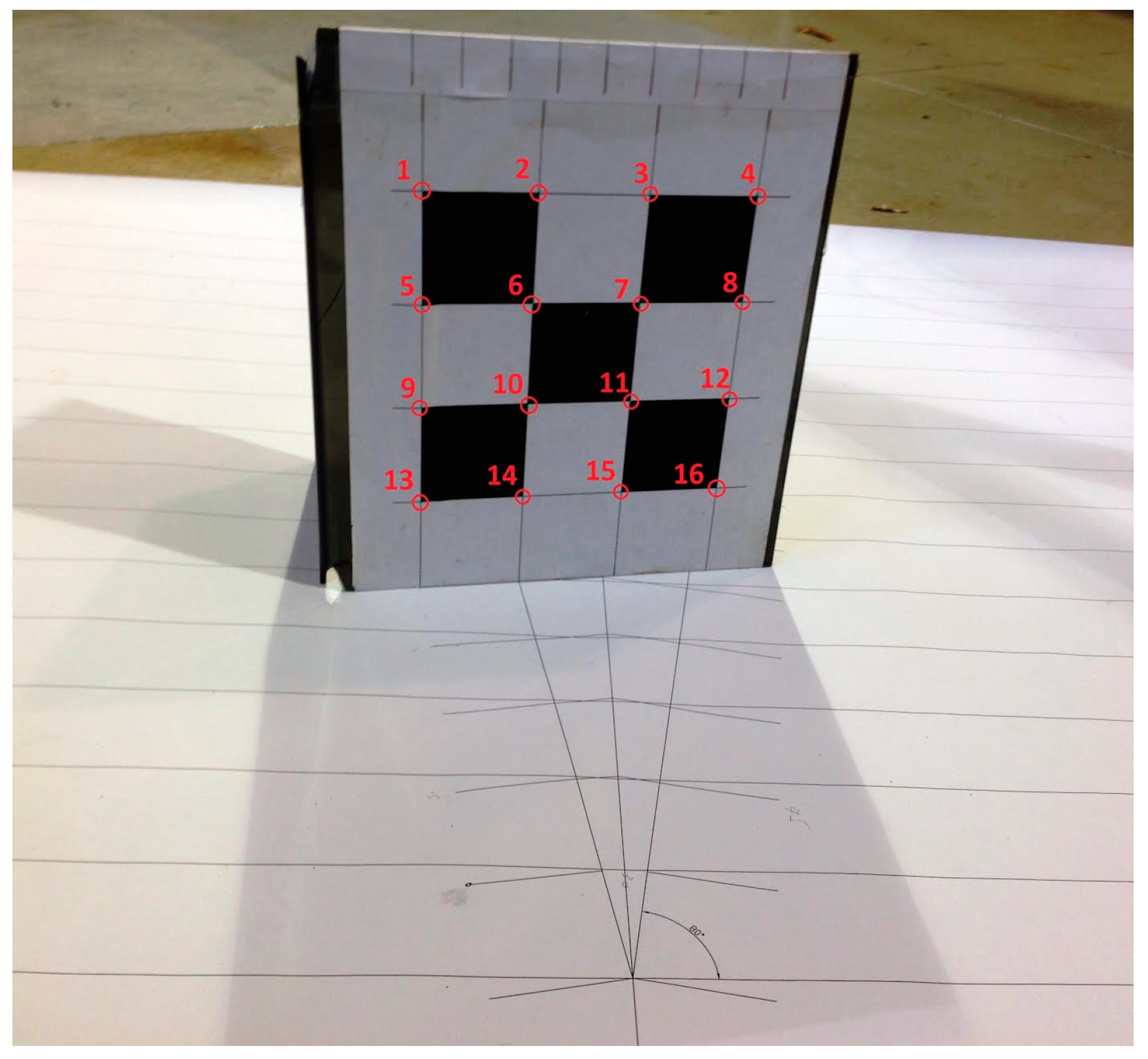

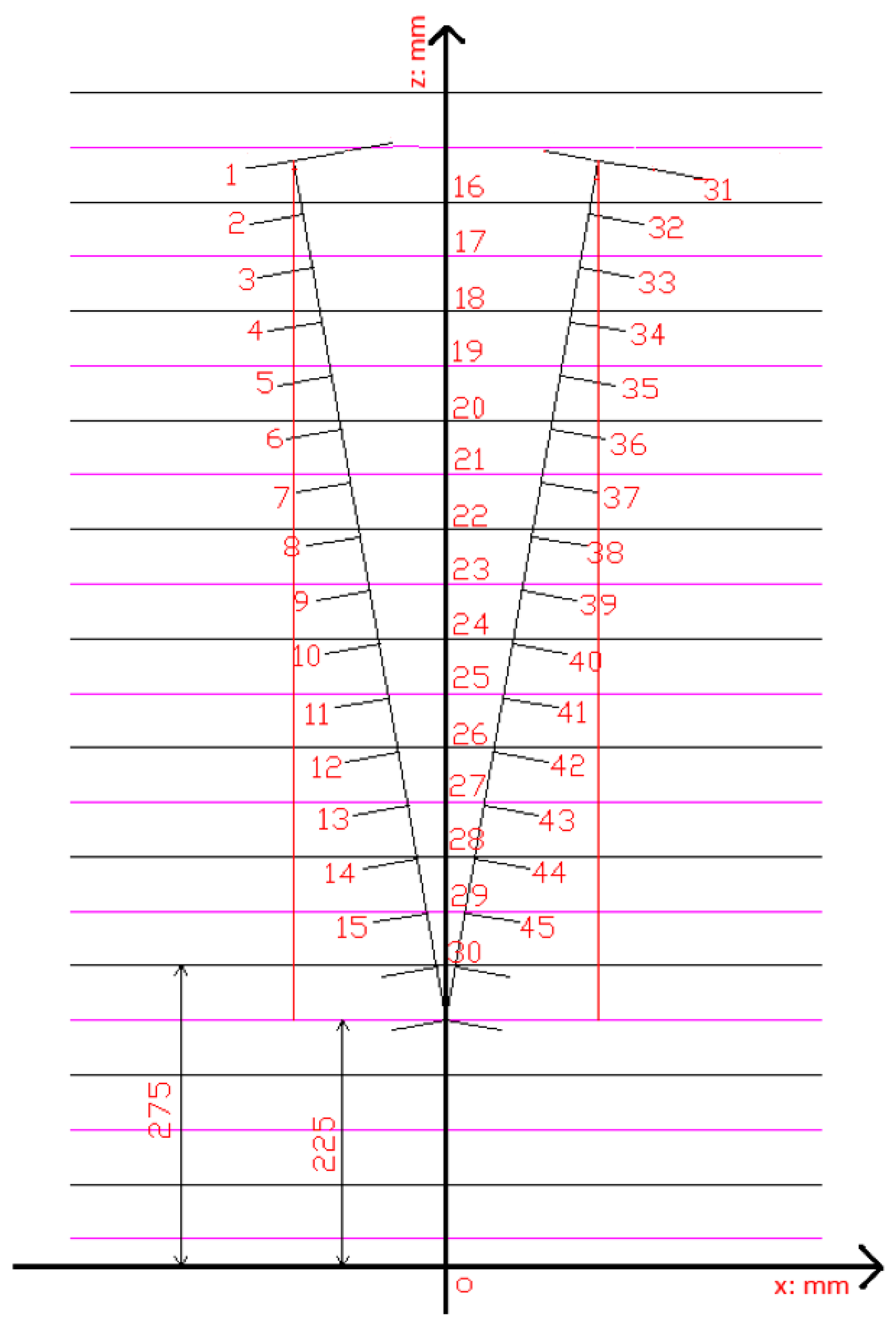

2.2. Stereo Camera Calibration

2.3. Image Acquisition

2.4. Feature Point Detection and Matching

2.5. Sparse Bundle Adjustment

2.6. Dense 3D Reconstruction Using CMVS and PMVS

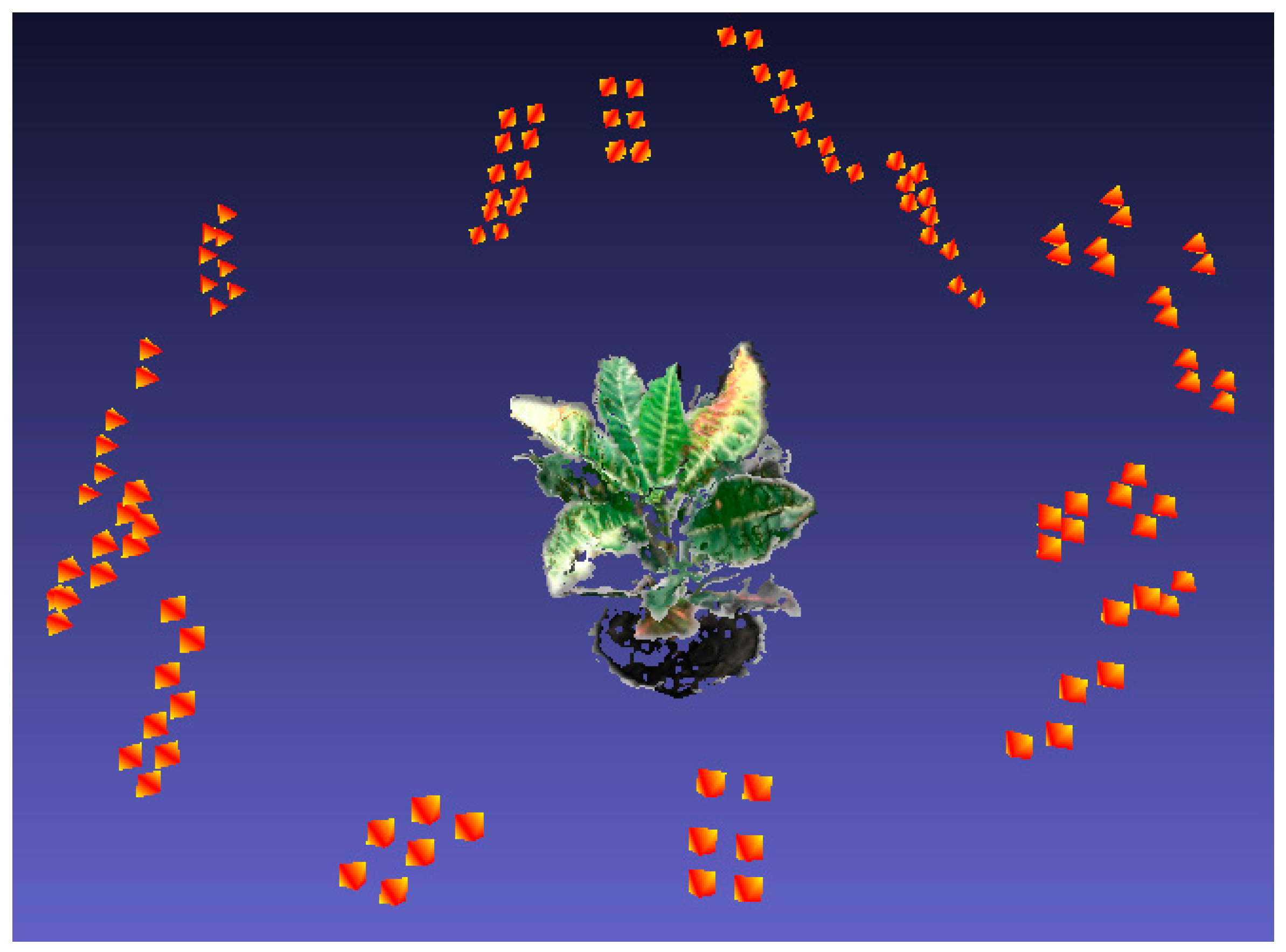

2.7. Stereo Reconstruction Using VisualSFM

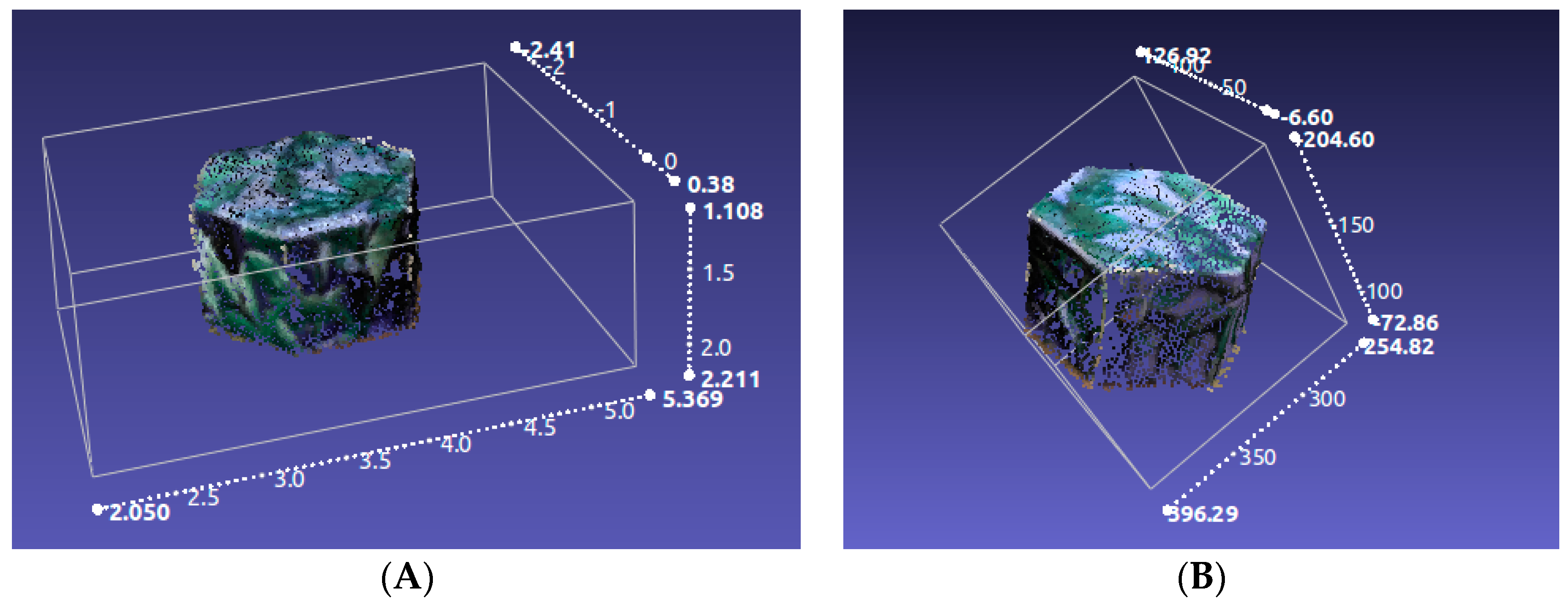

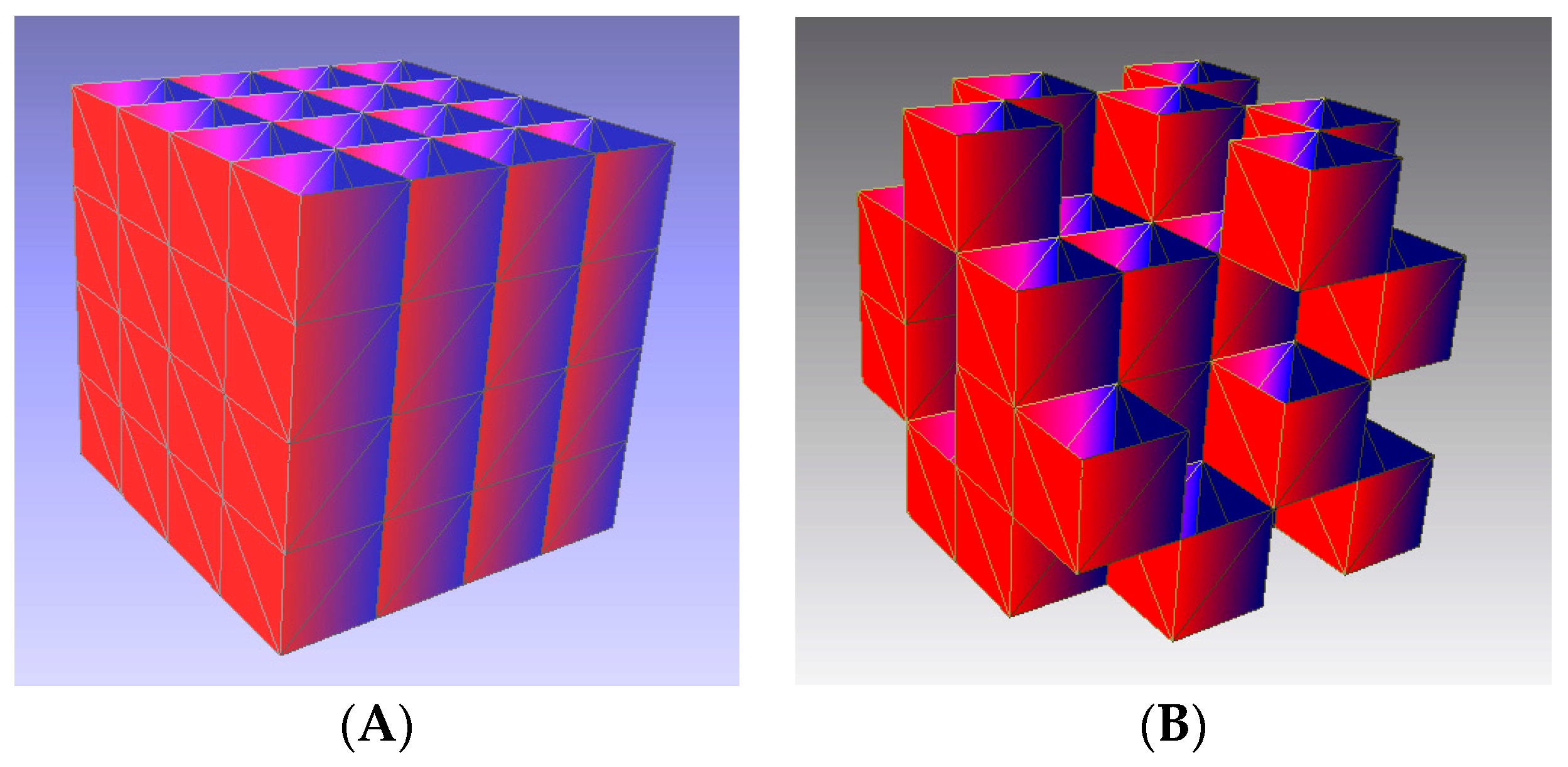

2.8. Metric Reconstruction

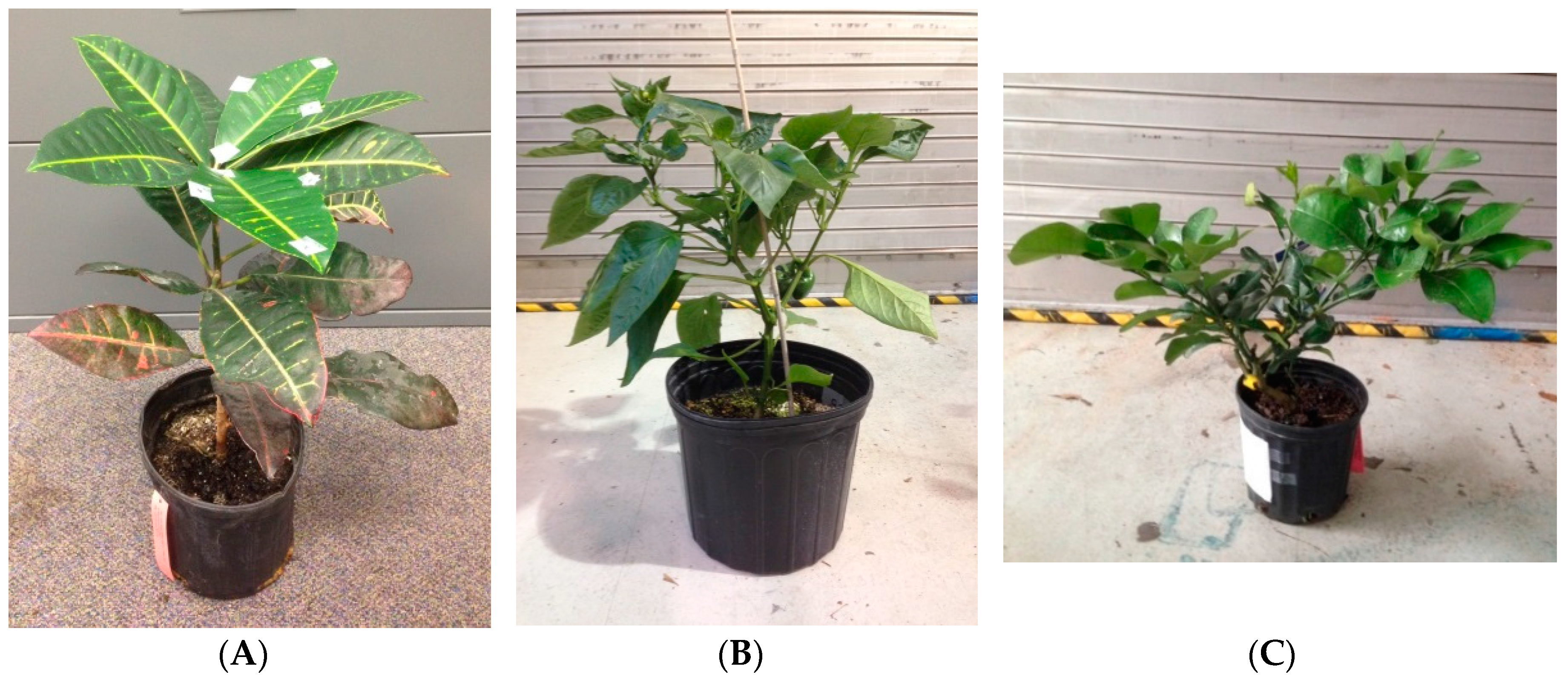

3. Experimental Results and Discussion

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Axis | Mean Absolute Error (mm) | Standard Deviation (mm) |
|---|---|---|
| X | 0.42 | 0.35 |
| Y | 0.36 | 0.31 |
| Z | 2.78 | 1.74 |
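The per-axis summary in the table above can be computed from matched estimated and reference coordinates. This is a minimal sketch: it assumes the standard deviation is taken over the absolute errors (the table does not say whether it summarizes signed or absolute errors) and uses the sample standard deviation. The function name `per_axis_error_stats` and the sample points are hypothetical.

```python
import statistics


def per_axis_error_stats(estimated, reference):
    """Per-axis mean absolute error and standard deviation for matched 3D points.

    estimated, reference: equal-length sequences of (x, y, z) in mm.
    Returns {"X": (mae, sd), "Y": (mae, sd), "Z": (mae, sd)}.
    The SD is the sample standard deviation of the absolute errors (assumption).
    """
    if len(estimated) != len(reference):
        raise ValueError("point lists must have the same length")
    if len(estimated) < 2:
        raise ValueError("need at least two matched points for a standard deviation")
    result = {}
    for i, axis in enumerate("XYZ"):
        abs_err = [abs(e[i] - r[i]) for e, r in zip(estimated, reference)]
        result[axis] = (statistics.mean(abs_err), statistics.stdev(abs_err))
    return result


# Hypothetical matched points (mm), not data from this paper.
reference = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0), (0.0, 10.0, 0.0)]
estimated = [(0.5, 0.0, 1.0), (10.2, 0.1, -2.0), (0.0, 9.7, 3.0)]
stats = per_axis_error_stats(estimated, reference)
print(stats)
```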

| Length | L1 | L2 | L3 | L4 | L5 | L6 |
|---|---|---|---|---|---|---|
| Estimated length (mm) | 64.19 | 63.47 | 68.82 | 65.59 | 63.00 | 61.99 |
| Actual length (mm) | 64.00 | 64.00 | 64.00 | 64.00 | 64.00 | 64.00 |
| Error (mm) | 0.19 | −0.53 | 4.82 | 1.59 | −1.00 | −2.01 |
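In the length table, each error is the estimated length minus the actual length, so a positive value means the reconstruction overestimates the segment. The short check below reproduces that arithmetic from the table values; the two summary statistics (mean signed error and mean absolute error) are computed here for illustration only and are not reported in the table.

```python
# Values copied from the length table (mm).
estimated = [64.19, 63.47, 68.82, 65.59, 63.00, 61.99]
actual = 64.00

# Signed error: estimated minus actual, rounded to the table's precision.
errors = [round(e - actual, 2) for e in estimated]

mean_error = sum(errors) / len(errors)
mean_abs_error = sum(abs(e) for e in errors) / len(errors)

print(errors)
print(f"mean error = {mean_error:.2f} mm, MAE = {mean_abs_error:.2f} mm")
```

For L1 to L6 this gives a mean signed error of about 0.51 mm and a mean absolute error of about 1.69 mm, with L3 contributing most of the error.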

| Height | H1 | H2 | H3 | H4 | H5 | H6 |
|---|---|---|---|---|---|---|
| Estimated height (mm) | 70.45 | 68.53 | 71.10 | 68.13 | 70.68 | 69.03 |
| Actual height (mm) | 70.00 | 70.00 | 70.00 | 70.00 | 70.00 | 70.00 |
| Error (mm) | 0.45 | −1.47 | 1.10 | −1.83 | 0.68 | −0.97 |

| Experimental Target | No. of Voxel Hits / Total No. of 3D Points | Voxel Size (mm³) | Volume (cm³) |
|---|---|---|---|
| Croton | 16,156/19,579 | 28.46 | 1.23 × 10³ |
| Jalapeno pepper | 28,591/38,773 | 12.61 | 3.61 × 10² |
| Lemon tree | 48,609/96,680 | 3.76 | 1.83 × 10² |
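For the jalapeno pepper and lemon tree rows, the volume column matches the number of voxel hits multiplied by the voxel volume: 28,591 × 12.61 mm³ ≈ 3.61 × 10⁵ mm³ (3.61 × 10² cm³) and 48,609 × 3.76 mm³ ≈ 1.83 × 10⁵ mm³ (1.83 × 10² cm³). A minimal voxel-occupancy sketch follows. It assumes that a "voxel hit" is a voxel containing at least one reconstructed 3D point and that the grid is axis-aligned with its origin at (0, 0, 0). The function name `voxel_volume` and its `edge_mm` parameter are hypothetical. The table reports voxel size as a volume, which corresponds to `edge_mm ** 3` here.

```python
import math


def voxel_volume(points, edge_mm):
    """Estimate the volume of a point cloud by counting occupied voxels.

    points: iterable of (x, y, z) coordinates in mm (metric reconstruction).
    edge_mm: voxel edge length in mm; each voxel has volume edge_mm ** 3 mm^3.
    Returns (voxel_hits, total_points, volume_cm3).
    """
    if edge_mm <= 0:
        raise ValueError("edge_mm must be positive")
    occupied = set()
    total = 0
    for x, y, z in points:
        total += 1
        # Integer index of the grid cell that contains this point.
        occupied.add((math.floor(x / edge_mm),
                      math.floor(y / edge_mm),
                      math.floor(z / edge_mm)))
    volume_mm3 = len(occupied) * edge_mm ** 3
    return len(occupied), total, volume_mm3 / 1000.0  # 1 cm^3 = 1000 mm^3
```

The estimate depends strongly on the voxel size: larger voxels fill the gaps between sparse points and inflate the volume, while very small voxels leave holes.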

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).


Ni, Z.; Burks, T.F.; Lee, W.S. 3D Reconstruction of Plant/Tree Canopy Using Monocular and Binocular Vision. J. Imaging 2016, 2, 28. https://doi.org/10.3390/jimaging2040028
