3D Imaging with a Sonar Sensor and an Automated 3-Axes Frame for Selective Spraying in Controlled Conditions

Abstract

1. Introduction

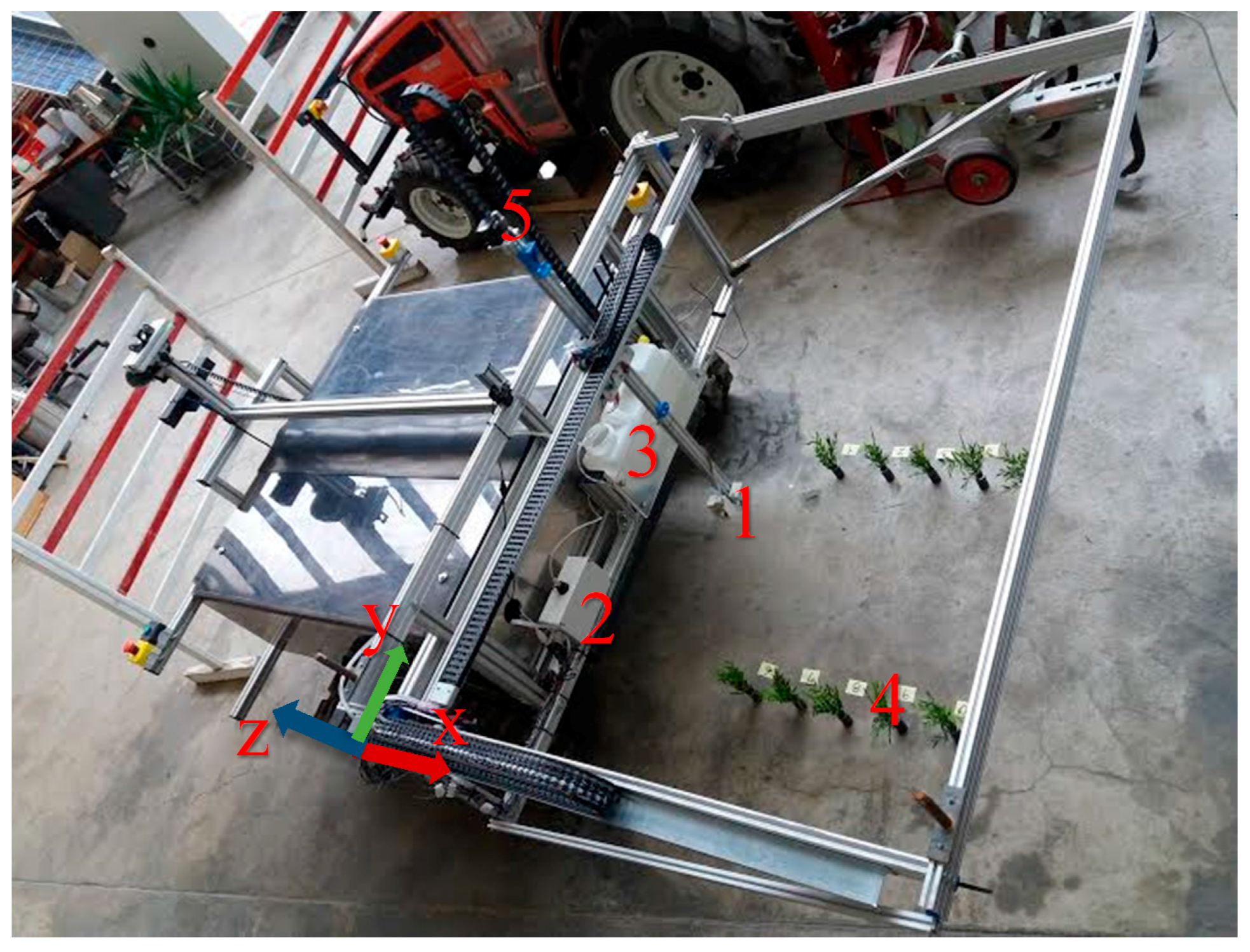

2. Materials and Methods

2.1. Hardware and Sensor Setup

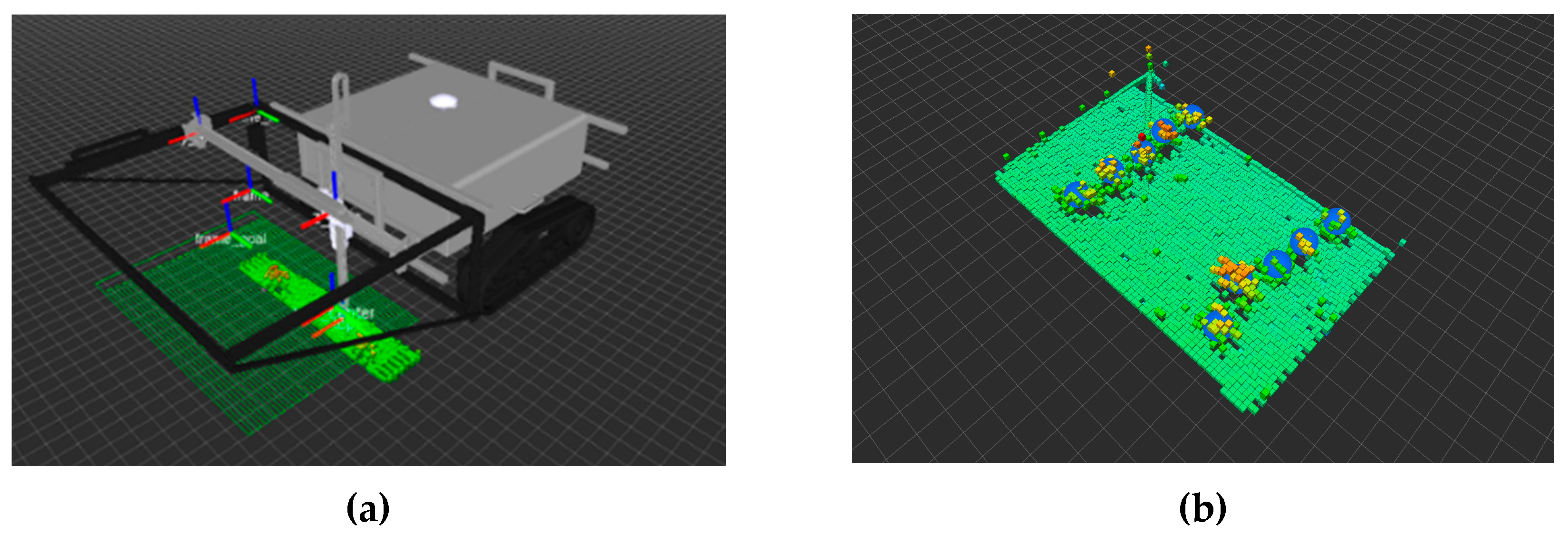

2.2. Software Setup

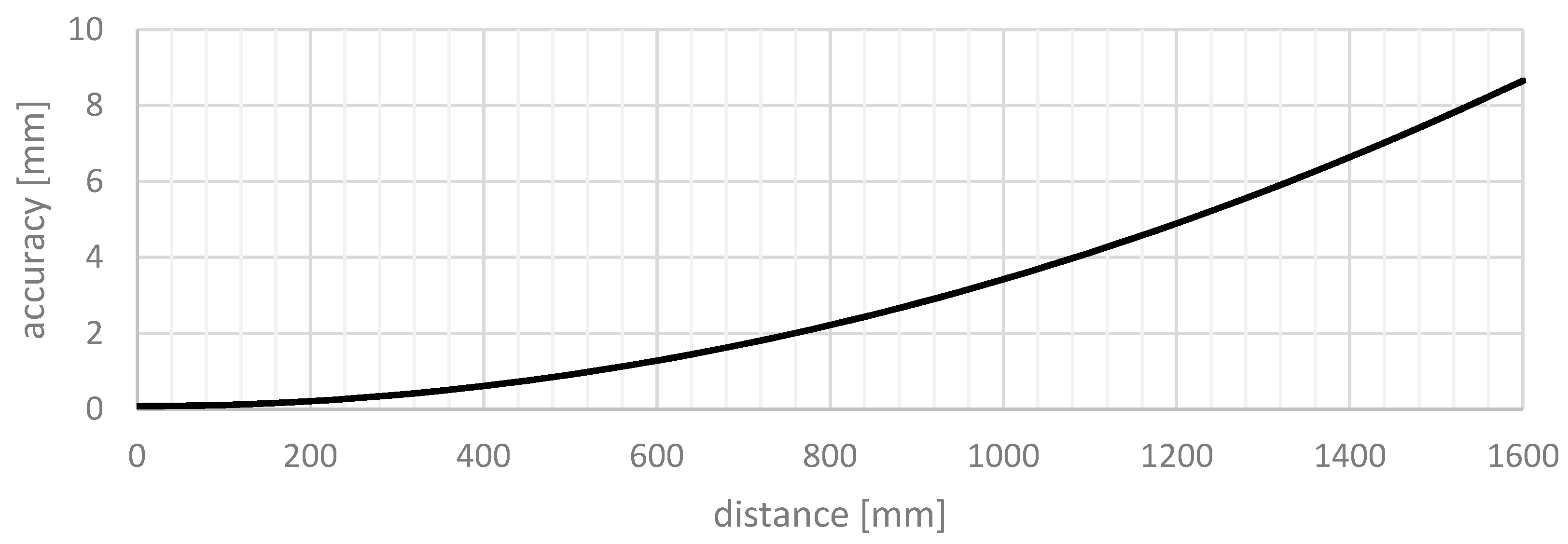

2.3. Calibration and System Test

2.4. Experiment Description

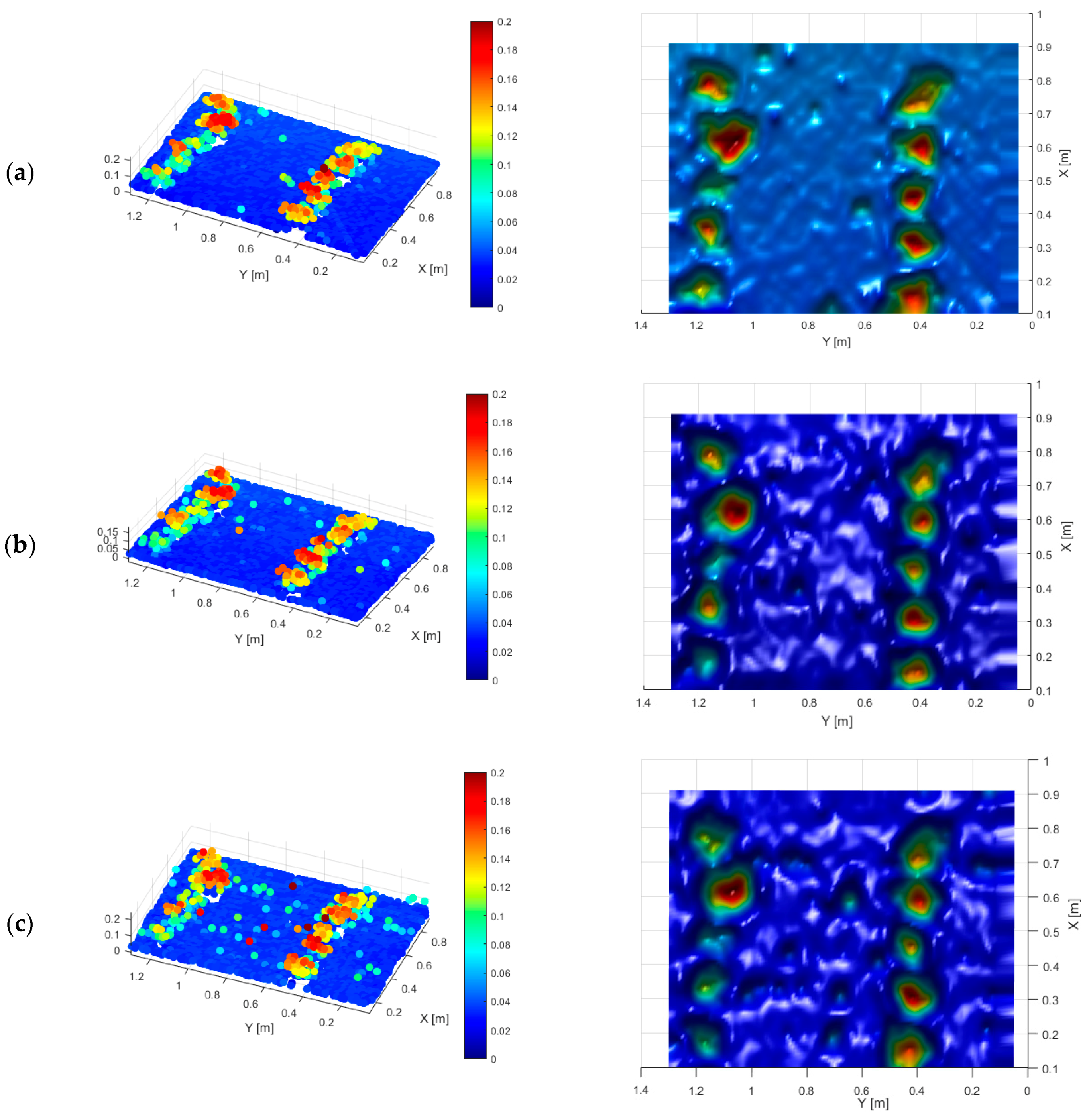

2.5. Point Cloud Assembling and Processing

2.6. Precision Spraying

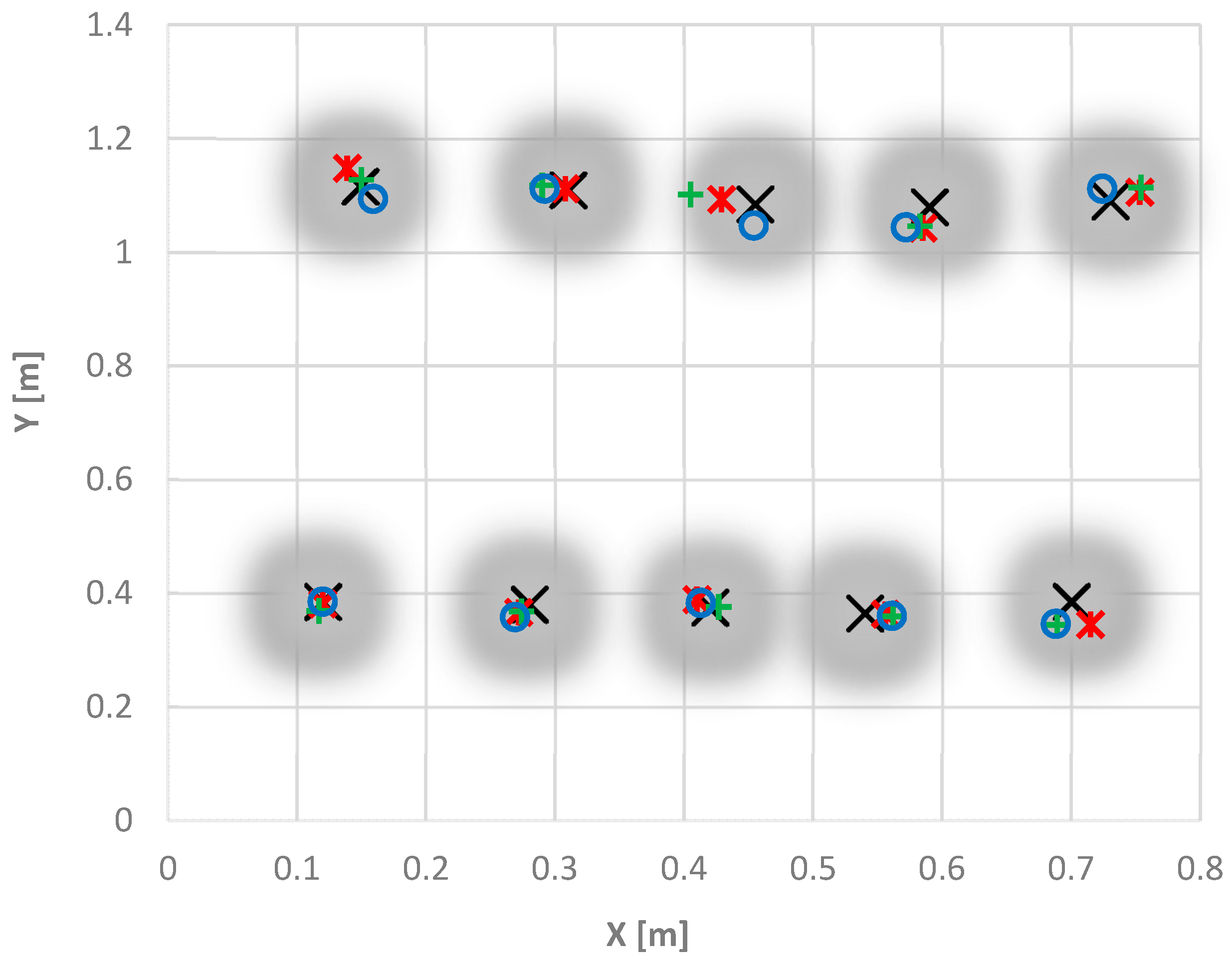

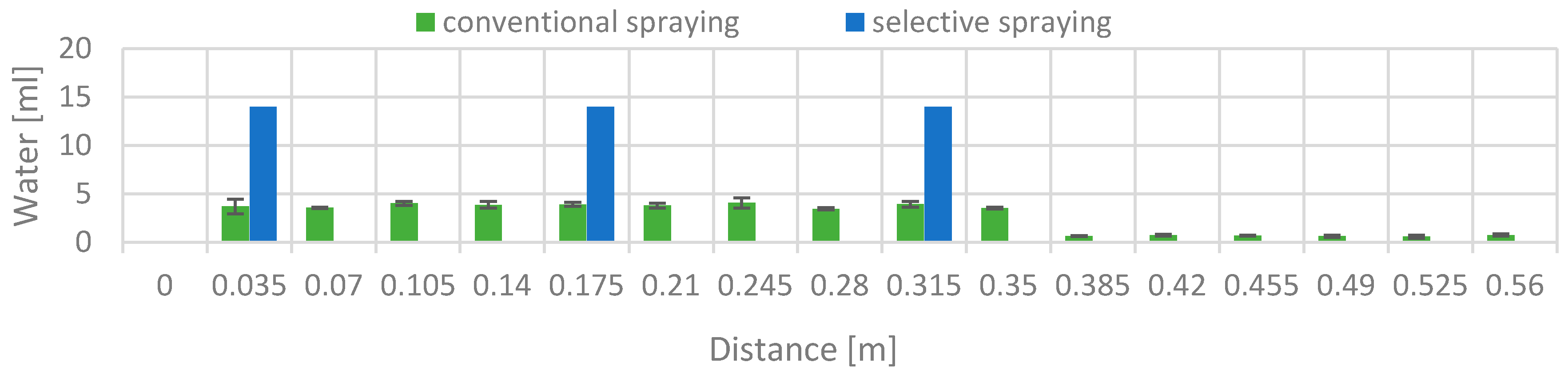

3. Results and Discussion

- The ground surface must be approximately planar so that it can be detected with a RANSAC (random sample consensus) plane-fitting algorithm, and soil irregularities must be smaller than the plant height.

- Plant leaves should not cover the gaps between plants, so that the height differences at these gaps remain detectable.

- No other sonar sources may interfere with the sensor system.

- The plant height must be below approximately 0.5 m; otherwise, the TCP (tool center point) or the mobile robot could touch the leaves, producing incorrect measurements or damaging the plants.
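The ground-detection condition in the first item can be illustrated with a minimal RANSAC plane fit. The sketch below is plain Python for self-containment and is not the authors' processing pipeline; the function names, the 0.01 m inlier threshold, the 0.02 m minimum plant height, the iteration count, and the assumption that the z axis points upwards are all illustrative choices. It repeatedly fits a plane through three random points, keeps the plane with the most inliers as the ground, and labels points clearly above that plane as plant candidates. Requiring `min_height` to exceed `threshold` mirrors the condition that soil irregularities must be smaller than the plants.

```python
import math
import random
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float, float]
# Plane stored as (a, b, c, d) with a*x + b*y + c*z + d = 0 and unit normal (a, b, c).
Plane = Tuple[float, float, float, float]


def plane_from_points(p1: Point, p2: Point, p3: Point) -> Optional[Plane]:
    """Plane through three points, or None if they are (nearly) collinear."""
    ux, uy, uz = p2[0] - p1[0], p2[1] - p1[1], p2[2] - p1[2]
    vx, vy, vz = p3[0] - p1[0], p3[1] - p1[1], p3[2] - p1[2]
    # Normal = u x v (cross product of two edge vectors of the triangle).
    a = uy * vz - uz * vy
    b = uz * vx - ux * vz
    c = ux * vy - uy * vx
    norm = math.sqrt(a * a + b * b + c * c)
    if norm < 1e-12:
        return None
    a, b, c = a / norm, b / norm, c / norm
    return (a, b, c, -(a * p1[0] + b * p1[1] + c * p1[2]))


def signed_distance(plane: Plane, p: Point) -> float:
    """Signed point-to-plane distance (valid because the normal is unit length)."""
    a, b, c, d = plane
    return a * p[0] + b * p[1] + c * p[2] + d


def ransac_plane(points: List[Point], threshold: float, iterations: int,
                 rng: random.Random) -> Tuple[Optional[Plane], List[Point]]:
    """Return the candidate plane with the largest inlier set, plus its inliers."""
    best_plane: Optional[Plane] = None
    best_inliers: List[Point] = []
    for _ in range(iterations):
        plane = plane_from_points(*rng.sample(points, 3))
        if plane is None:
            continue  # degenerate sample, try again
        inliers = [p for p in points if abs(signed_distance(plane, p)) <= threshold]
        if len(inliers) > len(best_inliers):
            best_plane, best_inliers = plane, inliers
    return best_plane, best_inliers


def split_ground_and_plants(points: Sequence[Point], threshold: float = 0.01,
                            min_height: float = 0.02, iterations: int = 200,
                            seed: int = 0):
    """Fit the ground plane, then label points above min_height as plant candidates."""
    pts = list(points)
    plane, ground = ransac_plane(pts, threshold, iterations, random.Random(seed))
    if plane is None:
        raise ValueError("no non-degenerate plane found")
    a, b, c, d = plane
    if c < 0:  # assume +z is up; flip the normal so heights above ground are positive
        plane = (-a, -b, -c, -d)
    plants = [p for p in pts if signed_distance(plane, p) > min_height]
    return plane, ground, plants
```

On a real sonar point cloud the threshold would have to be tuned to the sensor noise and to the soil roughness; a library implementation such as the RANSAC plane segmentation in the Point Cloud Library would normally replace this hand-written loop.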

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| No. | Total Station x (m) | Total Station y (m) | Software Position x (m) | Software Position y (m) |
|---|---|---|---|---|
| 1 | 0.000 | 0.000 | 0.000 | 0.000 |
| 2 | 0.000 | 1.001 | 0.000 | 1.000 |
| 3 | 0.001 | 1.459 | 0.000 | 1.460 |
| 4 | 0.499 | 0.496 | 0.500 | 0.500 |
| 5 | 0.500 | 1.456 | 0.500 | 1.460 |
| 6 | 1.000 | 0.005 | 1.000 | 0.000 |
| 7 | 1.000 | 1.455 | 1.000 | 1.460 |
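The agreement between the total station reference and the software position in the table above can be summarized per point. The sketch below copies the seven coordinate pairs from the table and computes the Euclidean error of each point and the overall root-mean-square error (RMSE); the variable and function names are hypothetical, and the summary values are derived here for illustration rather than reported by the authors.

```python
import math

# ((total station x, y), (software x, y)) in metres, copied from the table above.
CALIBRATION_POINTS = [
    ((0.000, 0.000), (0.000, 0.000)),
    ((0.000, 1.001), (0.000, 1.000)),
    ((0.001, 1.459), (0.000, 1.460)),
    ((0.499, 0.496), (0.500, 0.500)),
    ((0.500, 1.456), (0.500, 1.460)),
    ((1.000, 0.005), (1.000, 0.000)),
    ((1.000, 1.455), (1.000, 1.460)),
]


def position_errors(pairs):
    """Euclidean distance between reference and software position for each point."""
    return [math.dist(ref, sw) for ref, sw in pairs]


def rmse(errors):
    """Root-mean-square of a list of errors."""
    return math.sqrt(sum(e * e for e in errors) / len(errors))


errors = position_errors(CALIBRATION_POINTS)
print(f"max error: {max(errors):.4f} m")
print(f"RMSE:      {rmse(errors):.4f} m")
```

For the listed points this gives a maximum deviation of about 5 mm (points 6 and 7) and an RMSE of about 3.5 mm.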

| Estimated Pose (m) | Measured Sonar Value (m) | Stereo Camera (m) | | |
|---|---|---|---|---|
| RMSE | Accuracy | | | |
| 0.1000 | 0.1037 | 0.0041 | 0.0055 | 0.00011 |
| 0.2000 | 0.2037 | 0.0019 | 0.0041 | 0.00021 |
| 0.3000 | 0.3001 | 0.0002 | 0.0002 | 0.00038 |

© 2017 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Reiser, D.; Martín-López, J.M.; Memic, E.; Vázquez-Arellano, M.; Brandner, S.; Griepentrog, H.W. 3D Imaging with a Sonar Sensor and an Automated 3-Axes Frame for Selective Spraying in Controlled Conditions. J. Imaging 2017, 3, 9. https://doi.org/10.3390/jimaging3010009
