1. Introduction

Motion detection, one of the fundamental and most important problem of computer vision, plays a very important role in the realization of a complete vision based automated video surveillance system for automatic scene analysis, monitoring, and generation of security alerts based on relevant motion in a video scene. Motion detection in a video scene provides significant and important information related to movement and presence of objects in a scene. This information helps in achieving higher level objectives such as target detection and segmentation, target tracking, object recognition, scene analysis, etc. Furthermore, real-time motion detection allows more efficient hard disk storage by only archiving video frames where a motion threshold is crossed. By selecting the frames of relevant motion, it also reduces the communication and processing overheads (which are key implementation issues for an automated video surveillance system) in a remote video surveillance scenario.

The problem of motion detection can be stated as “given a set of images of the same scene taken at several different times, the goal of motion detection is to identify the set of pixels that are significantly different between the last image of the sequence and the previous images” [

1]. The identified pixels comprise the motion mask. One of the key issues is that the motion mask should not contain “unimportant” or “nuisance” forms of motion.

The importance of motion detection for designing an automated video surveillance system can be gauged from the availability of a large number of robust and complex algorithms that have been developed to-date, and the even larger number of articles that have been published on this topic so far. The simplest approach for motion detection is the frame differencing method in which motion detection can be achieved by finding the difference of the pixels between two adjacent frames. If the difference is higher than a threshold, the pixel is identified as foreground otherwise background. The threshold is chosen empirically. Different methods and criteria for choosing the threshold have been surveyed and their comparative results have been reported in the literature [

2,

3,

4]. Researchers have reported several motion detection methods that are closely related to simple differencing, e.g., change vector analysis [

5,

6,

7], image rationing [

8], and frame differencing using sub-sampled gradient images [

9]. The simplicity of frame differencing based approaches comes at the cost of motion detection quality. For a chosen threshold, simple differencing based approaches are unlikely to outperform the more advanced algorithms proposed for real-world surveillance applications. There are several other motion detection techniques such as predictive models [

10,

11,

12,

13,

14], adaptive neural network [

15], and shading models [

16,

17,

18]. A comprehensive description and comparative analysis of these methods has been presented by Radke et al. [

1].

Practical real-world video surveillance applications demand a continuous updating of the background frame to incorporate any permanent scene change, i.e., if a pixel has remained stationary for a sufficient number of frames, it must be copied into the background frame, for example light intensity changes in day time must be a part of the background. For this purpose, several researchers [

19,

20] have described adaptive background subtraction techniques for motion detection. They have used single Gaussian Density Function to model the background. These algorithms succeed in learning and refining the single background model. They are capable of handling illumination changes in a scene and are well suited for stationary background scenarios.

Due to pseudo-stationary nature of the background in real-world scenes, assuming that background is perfectly stationary for surveillance applications is a serious flaw. For example, in a real-world video scene, there may be swaying branches of trees, moving tree leaves in windows of rooms, moving clouds, the ripples of water on a lake, or moving fan in the room. These are small repetitive motions (typically not important) and so should be incorporated into background. The single background model based approaches mentioned above are incapable of correctly modeling such pseudo-stationary backgrounds. Stauffer and Grimson [

21] recognized that these kinds of pseudo-stationary backgrounds are inherently multi-model and hence they developed the technique of an Adaptive Background Mixture Models, which models each pixel by a mixture of Gaussians. According to this method, every incoming pixel value is compared against the existing set of models at that location to find a match. If there is a match, the parameters of matched model are updated and the incoming pixel is classified as background pixel. If there is no match, the incoming pixel is motion pixel and the least-likely model (model having minimum weighted Gaussian) is discarded and replaced by a new one with incoming pixel as its mean value and a high initial variance value. However, maintaining these mixtures for every pixel is an enormous computational burden and results in low frame rates when compared to previous approaches. Butler et al. [

22] proposed a new approach, similar to that of Stauffer and Grimson [

21], but with a reduced computational complexity. The processing, in this approach, is performed on YCrCb video data format, but it still requires many floating point computations and needs large amounts of memory for storing background models.

Within computer vision community, a myriad of algorithms exist for motion detection, unfortunately, most of these are not suitable for real-time processing and at the same time require a huge amount of storage space (memory) for storing multiple background models. Because of this, the objective of performing motion detection in real-time on limited computational resources for developing standalone automated video surveillance system proves to be a major bottleneck.

Furthermore, it is important to note that motion detection is one component of a potentially complex automated video surveillance system, intended to be used as a standalone system. Therefore, in addition to being accurate and robust, a successful motion detection technique must also be economical in the use of computational resources on FPGA development platform. This is because many other complex algorithms of an automated video surveillance system also run on the same FPGA platform. In order to address this problem of reducing the computational complexity, Chutani and Chaudhury [

23] proposed a block-based clustering scheme with a very low complexity for motion detection. On the one hand, this scheme is robust enough for handling pseudo-stationary nature of background, and on the other it significantly lowers the computational complexity. To achieve the real-time performance, a dedicated VLSI architecture has been designed for this clustering based motion detection scheme by Singh et al. [

24]. After analyzing the synthesis results, it is found that proposed architecture for clustering based motion detection scheme utilizes 168 36Kb Block RAMs out of the total 298 (approximately 56%) Block RAMs are on a Xilinx ML510 (Virtex-5 FX130) FPGA board. This implies that a large amount of on-chip memory (Block RAMs) is utilized by motion detection system, which is only one of the potentially complex and important components of an automated video surveillance system. This is a major concern for designing of a complete standalone automated video surveillance system which requires the implementation of multiple hardware architectures on the same FPGA platform—as not much FPGA Block RAMs are left for other complex operations such as focused region extraction, object tracking, and video history generation. For this reason, further emphasis needs to be given to the minimization of memory requirements of clustering-based motion detection algorithm and architecture without compromising on accuracy and robustness of motion detection.

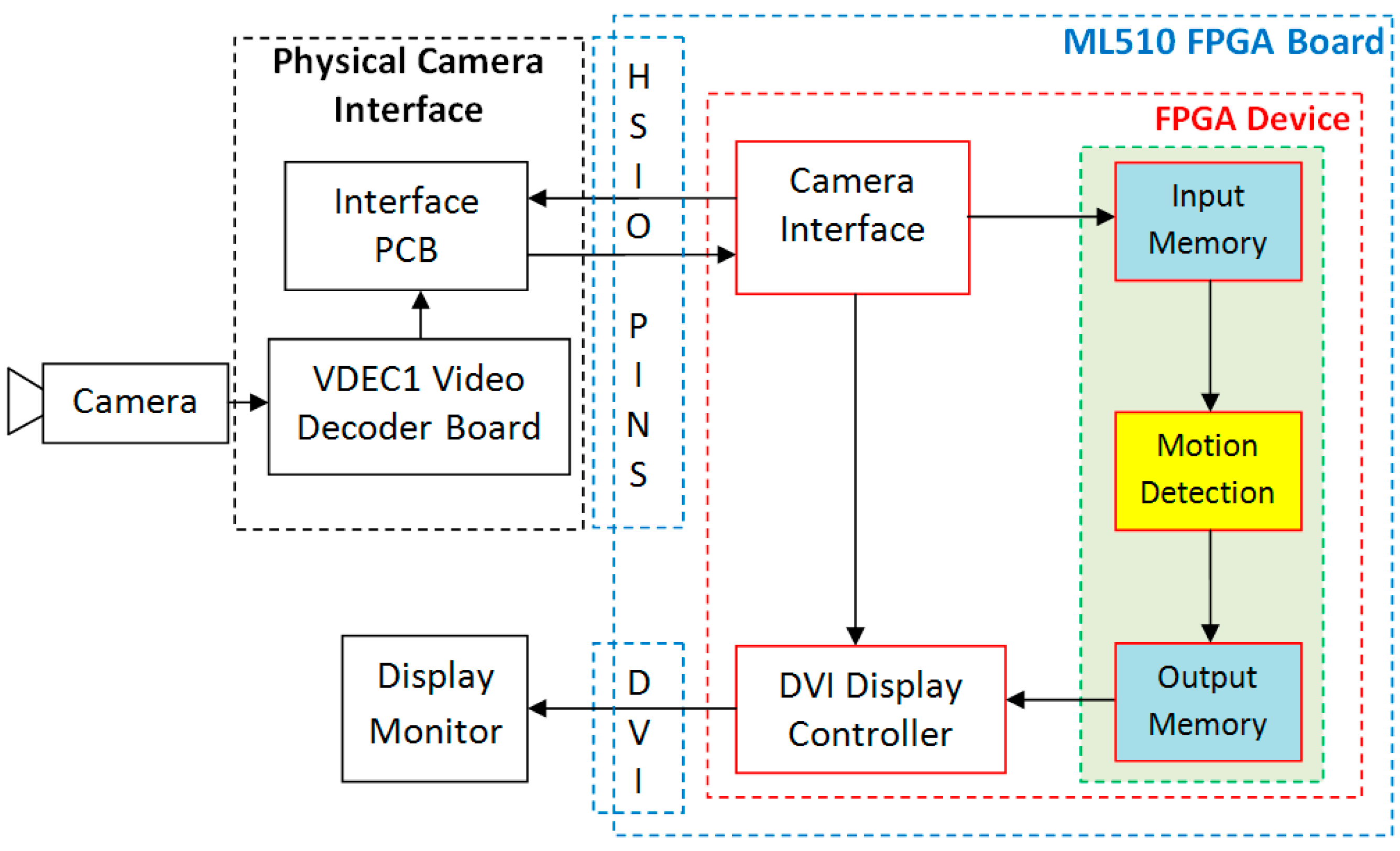

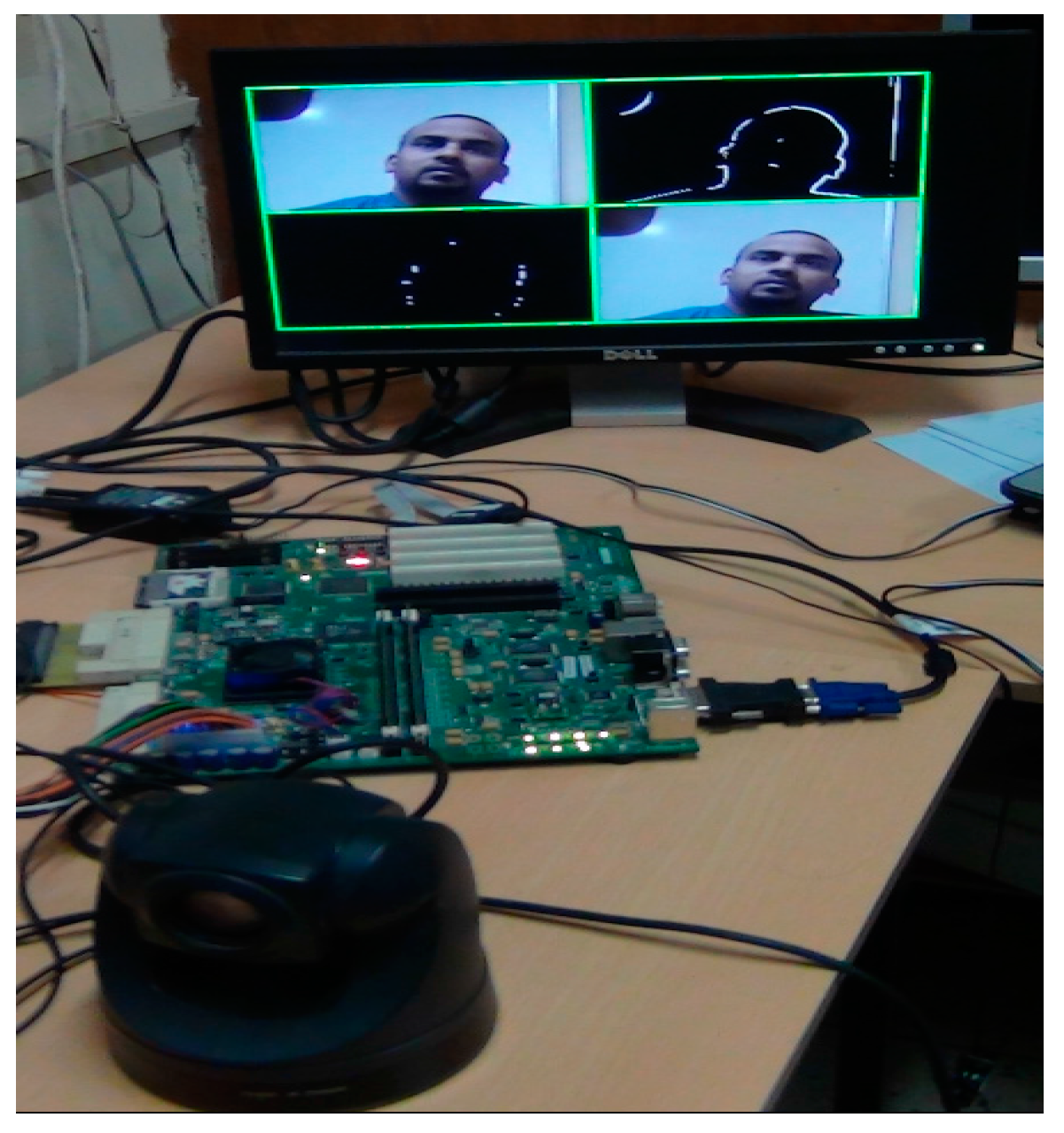

To overcome this problem, based on certain key observation and associated modification in original clustering-based motion detection algorithm, we have proposed a memory-efficient motion detection scheme and designed its dedicated memory-efficient VLSI architecture. Memory requirement of the proposed architecture is reduced by 41% compared to standard clustering based motion detection architecture [

24]. We have integrated the implemented memory efficient VLSI architectural modules with the camera interface module and DVI display controller and a working prototype system has been developed using Xilinx ML510 (Virtex-5 FX130T) FPGA development platform for real-time motion detection in a video scene. The system so developed is capable of detecting relevant motion in real-time and can filter the frames of interest for remote surveillance scenarios based on visual motion in a scene. The implemented memory-efficient system can be used as a component of a complete standalone automated video surveillance system.

In short, the contributions of this work over the existing literature are threefold. First, we have proposed and designed a memory efficient hardware implementation friendly motion detection algorithm. Further, its functionality has been verified through software implementation using C/C++ programming language. Second, for the proposed algorithm, we have designed and synthesized a VLSI architecture capable of providing real-time performance. Finally, we have integrated the implemented architectural modules with the camera interface module and DVI display controller and a working prototype system has been developed for real-time motion detection in a video scene.

2. Proposed Motion Detection Algorithm

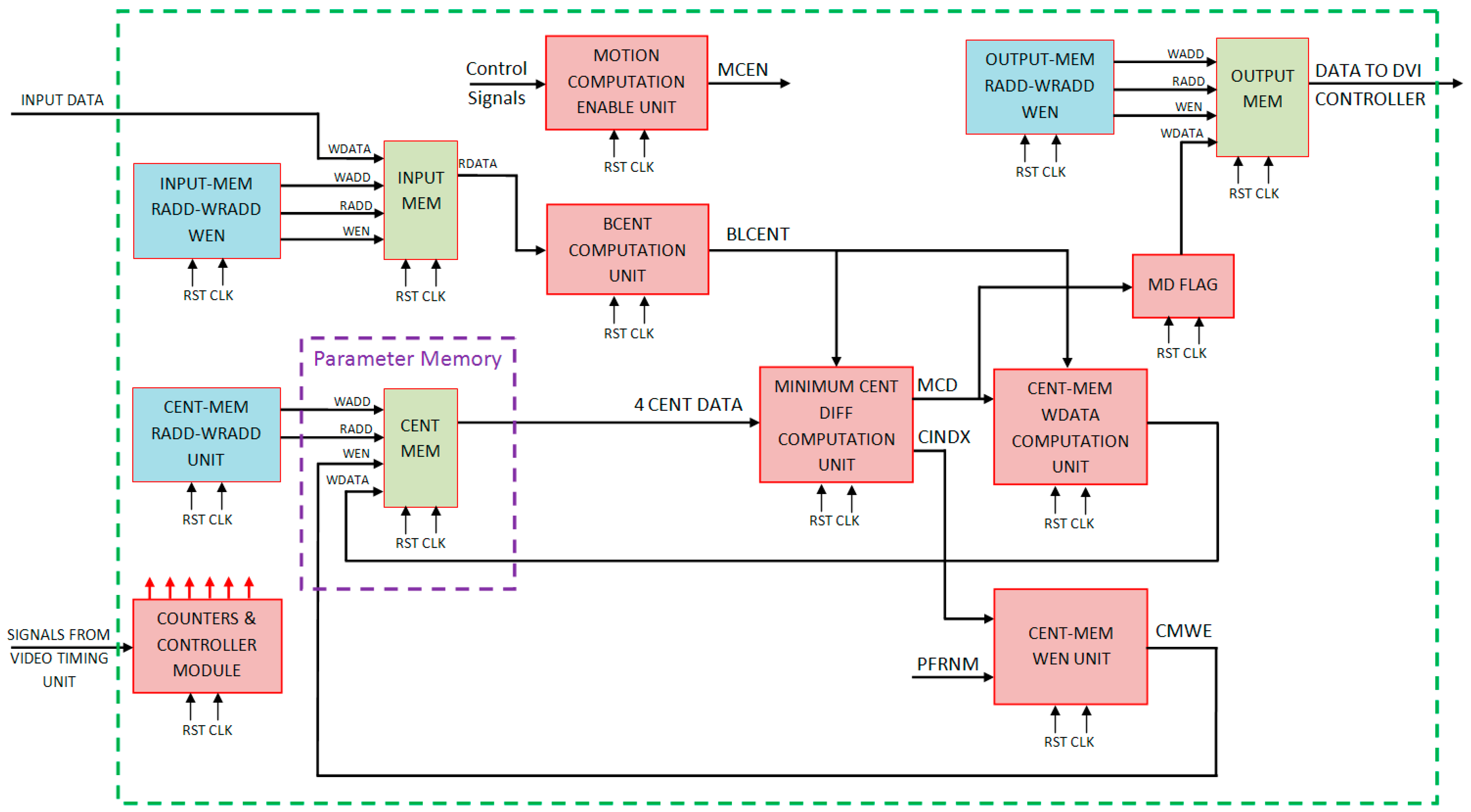

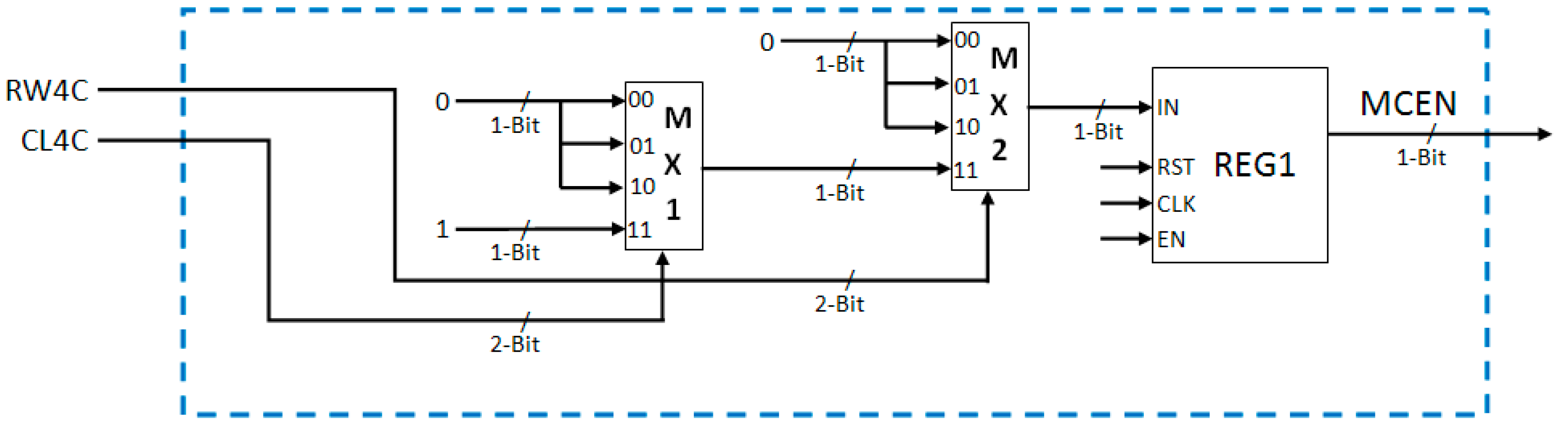

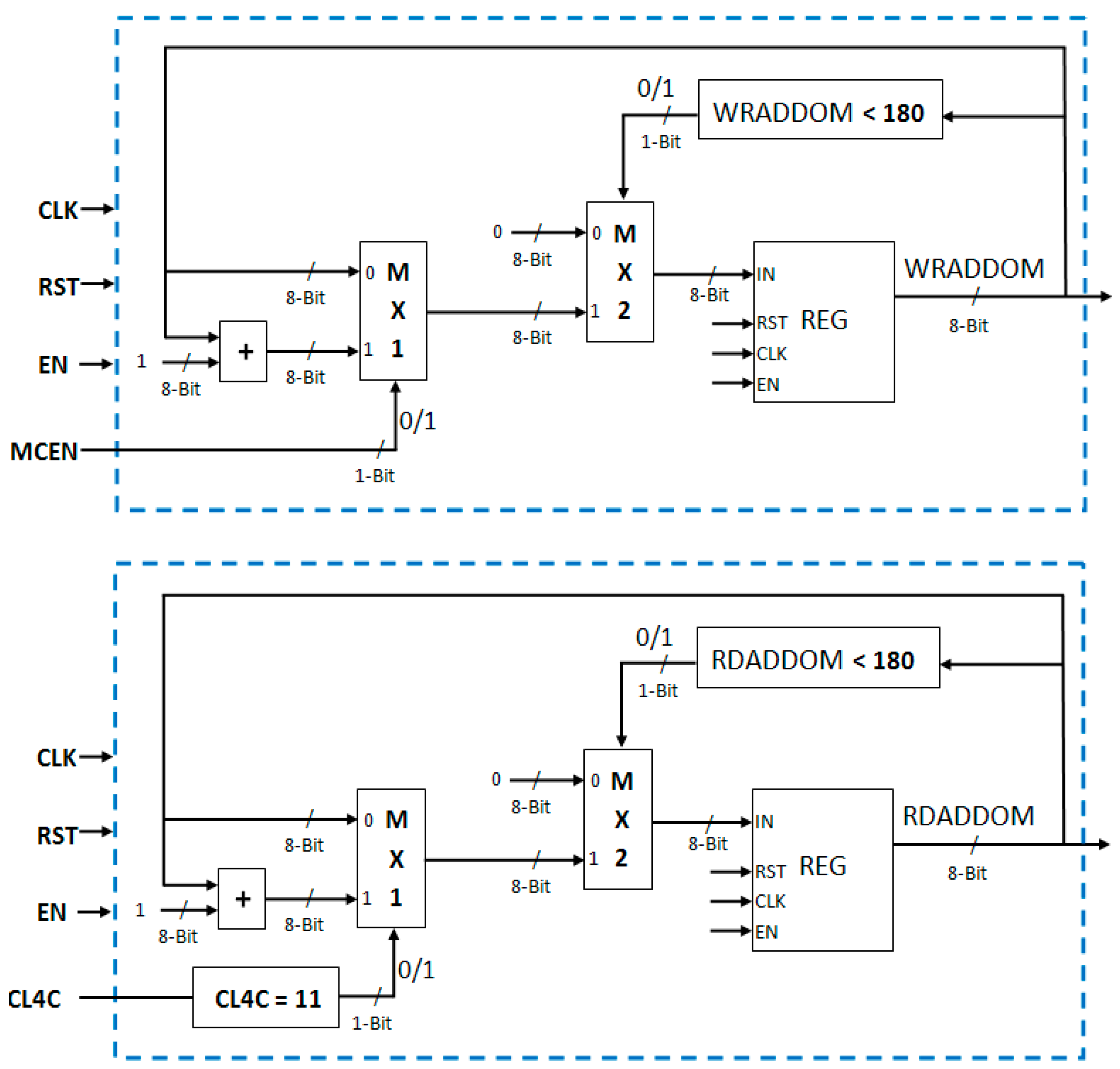

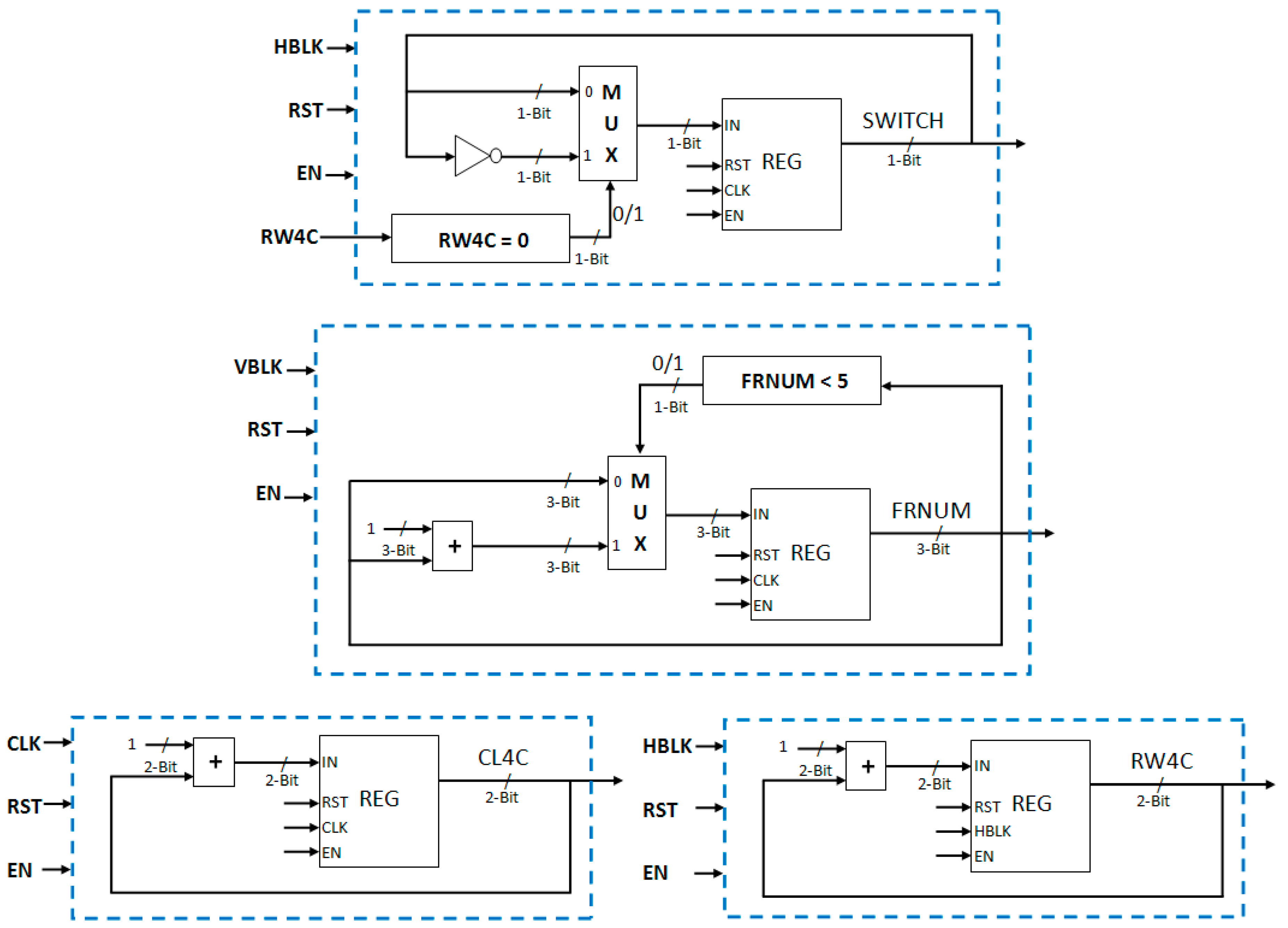

The original clustering-based motion detection algorithm [

23] and its VLSI architecture [

24] were re-looked and the memory analysis of the implemented architecture was carried out. There are three memory components in the proposed architecture: Input Buffer Memory, Output Buffer Memory, and Parameter Memory (Centroid Memory and Frame Number Memory). Each of the two output buffer memory modules stores one-bit output of 4 × 4 pixel block for 4 rows. This requires only 180 bits for each memory. Therefore, both the output memory modules are implemented using LUTs instead of Block RAMs and thus utilizes FPGA slices and not the on-chip memory. The input buffer memory requires eight 36-Kb Block RAMs. The Parameter memory requires 160 36-Kb Block RAMs (96 36-Kb Block RAMs for Centroid Parameter memory and 64 36-Kb Block RAMs for Frame Number Parameter Memory). As output buffer memory is implemented using FPGA slice LUTs so there is no scope of Block RAM reduction for this memory component. Therefore, we need to focus on minimizing the memory requirements for Input Buffer Memory and Parameter Memory. As Input Buffer Memory, requires only eight 36-Kb Block RAMs, effect of optimizations/improvements in Input Buffer Memory will be very small on overall memory requirements. On the other hand, the optimizations/reduction in Parameter Memory will significantly affect overall memory requirements.

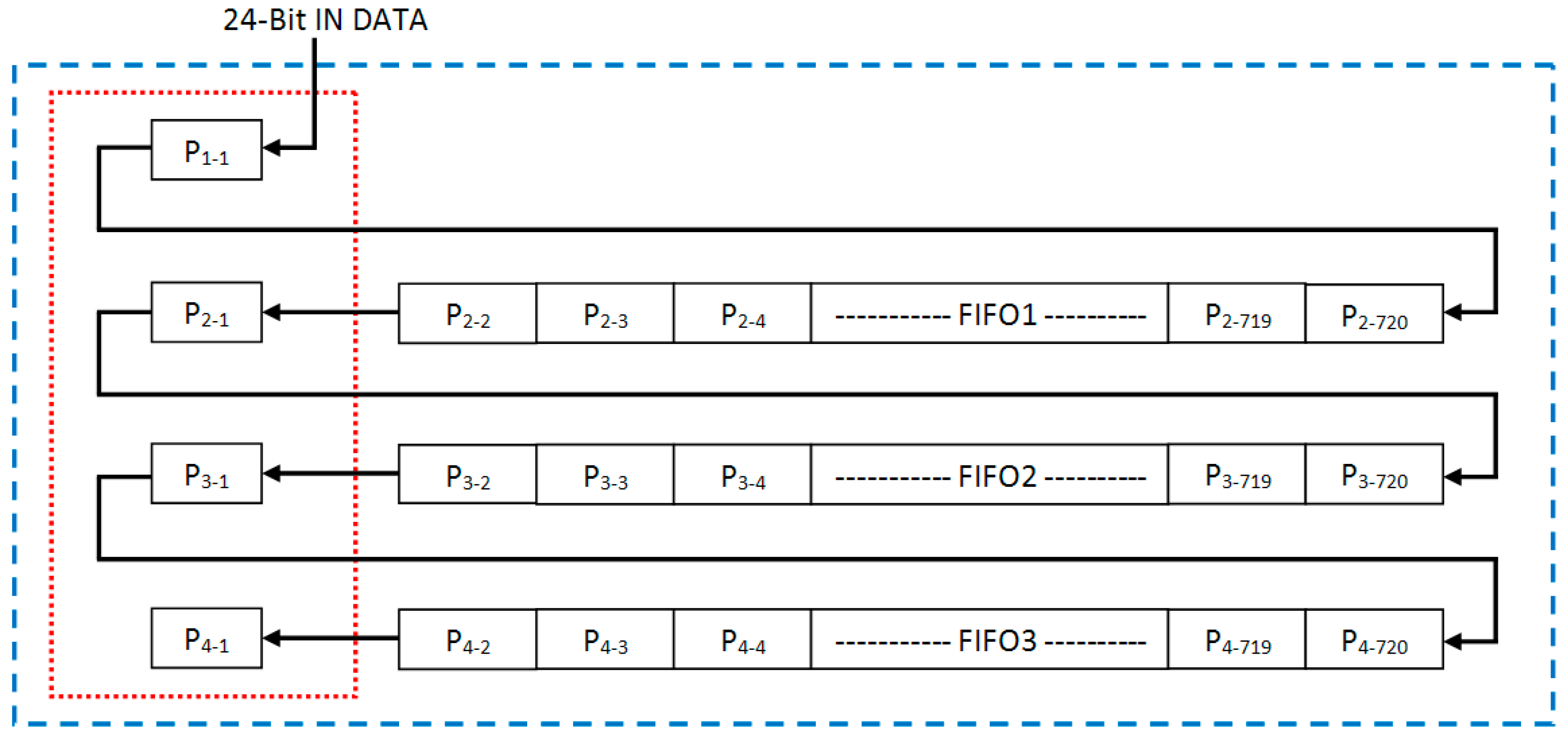

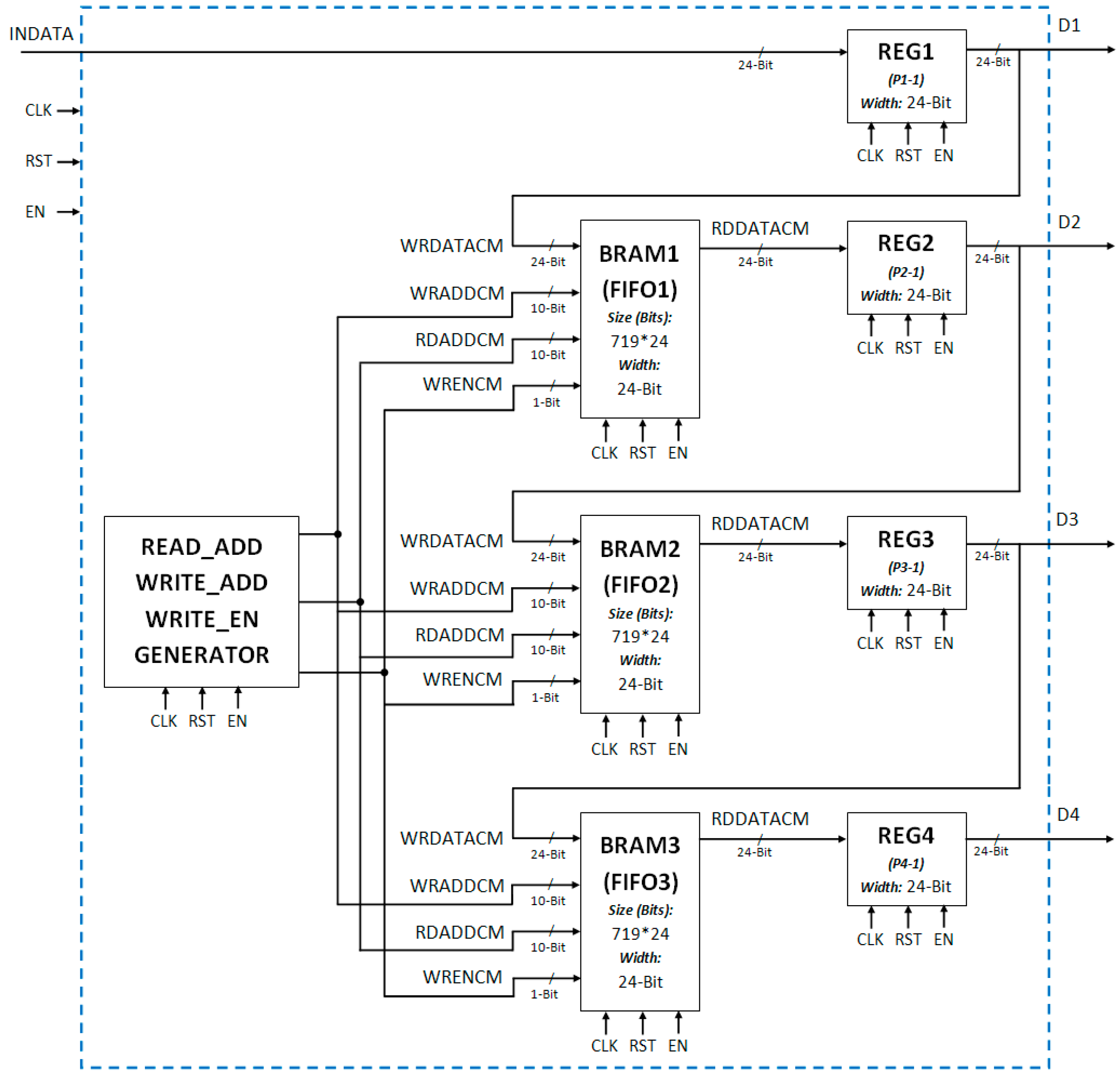

Furthermore, based on prior VLSI design experience and computer vision algorithm knowledge, we observed that the optimizations of Input Buffer Memory will come through architectural modifications, while the optimizations/reduction in Parameter Memory will come through algorithmic modifications. A new architecture has also been proposed for Input Buffer Memory for enabling streaming video processing which utilizes only three 36-Kb Block RAMs and four registers (each of 24-bit width) as against the earlier requirement of eight 36-Kb Block RAMs for Input Buffer Memory.

Therefore, in order to reduce the parameter memory size we proposed a new algorithm based on certain observations and modifications in the original clustering-based motion detection scheme [

23]. It has been found that the proposed modifications have resulted in 40% reduction in Parameter Memory requirements. The algorithm is proposed and designed aiming at reduction in the memory requirements without compromising on the robustness and accuracy of motion detection.

By proposing these architectural and algorithmic modifications, overall 41% reduction has been achieved in total on-chip memory requirements as compared to the architecture designed and implemented for the original clustering-based motion detection scheme. This reduction in memory requirements is very significant.

In the hardware architecture implemented and discussed in [

24] for original clustering based motion detection scheme, almost 95% of the total utilized Block RAMs (160 36-Kb Block RAMs out of 168 utilized 36-Kb Block RAMs) are used by parameter memory for storing Centroid values and Frame Number values of four clusters (used for modeling the pseudo-stationary backgrounds). The parameter memory size is directly proportional to the size of the cluster group, block size, and video frame size. Therefore, for given standard PAL (720 × 576) size color video streams, parameter memory size can be reduced either by reducing the number of clusters from four to three or by increasing the 4 × 4 pixel block size to a larger block size. However, Chutani and Chaudhury [

23] had chosen to select a cluster size of four clusters and block size of 4 × 4 pixels because empirically they had found that these values result in a good balance between accuracy and computational complexity of their algorithm. Therefore, reducing cluster size or increasing block size will result in the degradation of accuracy and robustness of the clustering based motion detection scheme. In the first case, if the number of clusters is reduced to three then the algorithm’s background model used to capture pseudo-stationary changes/movements becomes weak and the algorithm becomes more sensitive to pseudo-stationary background changes, resulting in false relevant motion detection outputs for pseudo-stationary background changes. In the second case, for larger block sizes, the system becomes less sensitive to relevant motions in smaller areas in a video scene. Therefore, none of the above two techniques can be used to reduce parameter memory size as the objective is to reduce parameter memory size without compromising on the accuracy and the robustness of motion detection. For this reason, we re-analyzed the original clustering based motion detection algorithm [

23] and the following observations resulted.

The background related information in the clustering-based motion detection algorithm is stored and updated in parameter memory having two components viz. Centroid Memory and Frame Number Memory. Each Centroid Memory location contains four Centroid values (corresponding to four clusters), which contain the background color and intensity related information. Each Frame Number Memory location stores four Frame Number values (corresponding to four clusters) which are used to keep the record of update or replacement history of the Centroid value, i.e., for the particular 4 × 4 pixel block when (at what time or for what Frame Number) the cluster Centroid value is updated or replaced. Now, the important observation is that during the cluster update (in the case a matching cluster is found) or cluster replacement (in the case no matching cluster is found) the actual time or frame number when the cluster is updated or replaced is not necessarily required. During cluster update, the matching cluster number is required (i.e., first or second or third or fourth), not the actual value of the Frame Number. In the case of cluster replacement, the oldest cluster number (which has not been updated for the longest period of time) is required. This implies that there is no need of storing the complete Frame Number value. An index value is sufficient to maintain the update or replacement history of a cluster, which implies that it is the newest cluster (most recently updated or replaced), the second newest cluster, the second oldest cluster, or the oldest cluster (which has not been updated for the longest period of time). It further implies that a two-bit index value is sufficient to record this information for four clusters. This reduces the 16-bit wide Frame Number Memory to two-bit wide memory. This saves 56 36-Kb Block RAMs for PAL resolution color videos.

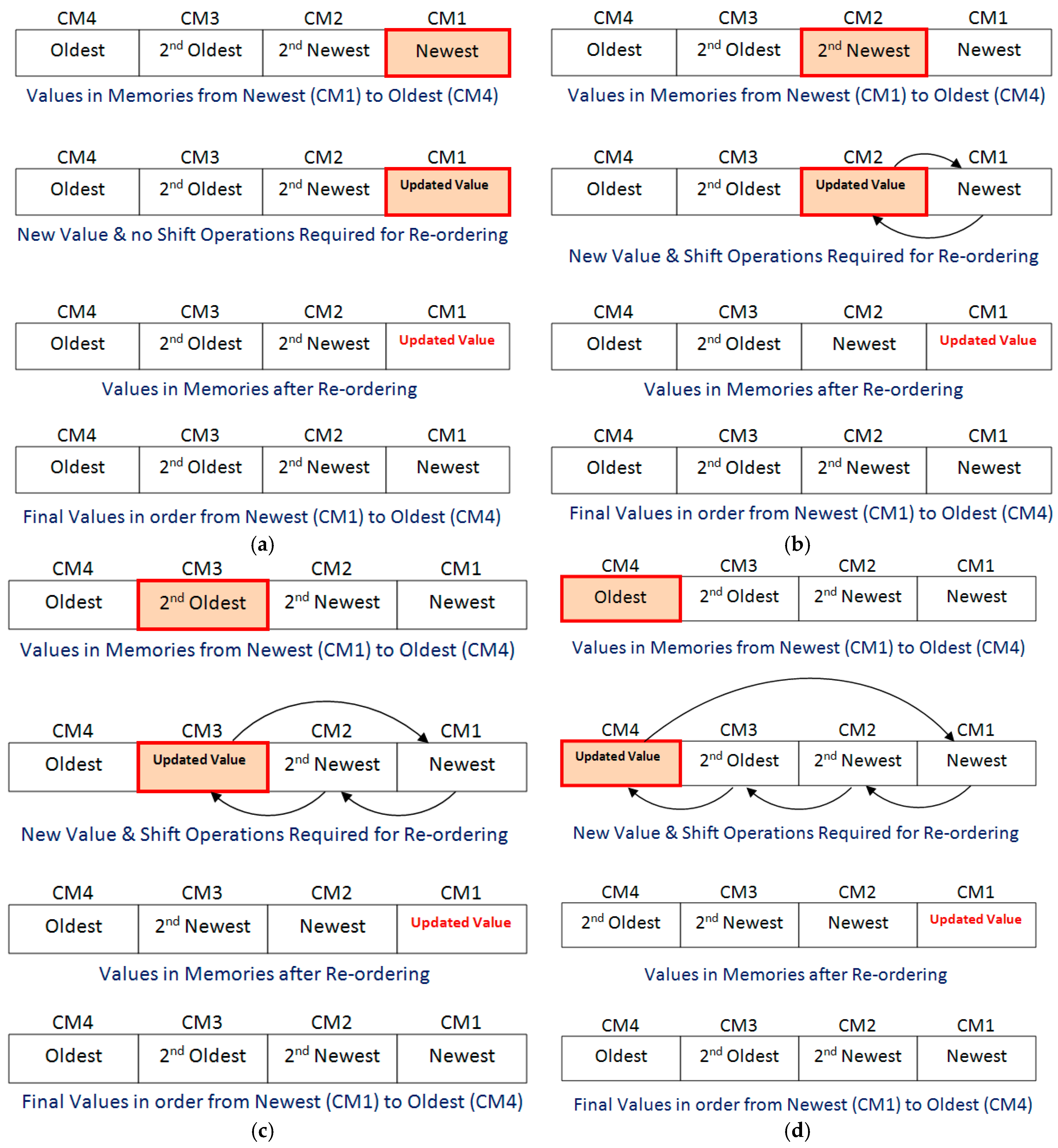

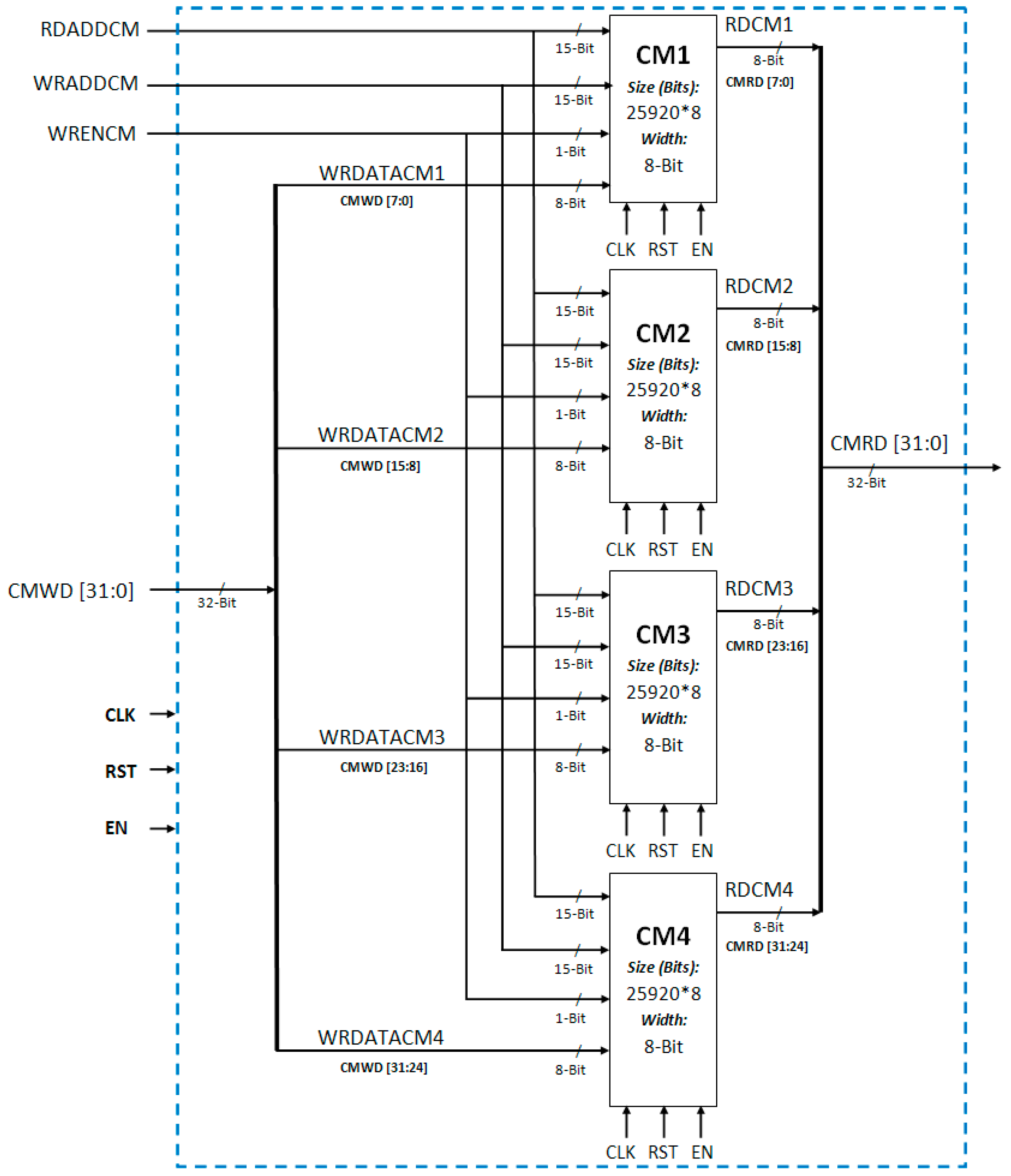

It is further observed that the record of cluster update or cluster replacement history can be maintained by using appropriate logic without even using two-bit index value. In order to store Centroid value of four clusters, there are four Centroid memories, i.e., CM1, CM2, CM3, and CM4. Consider that, the first memory CM1 always contains the newest Centroid value, i.e., the Centroid value of the cluster which is most recently updated (it is considered as Cluster 1), the second memory CM2 always contains the second newest Centroid value, i.e., Centroid value of the cluster which has been updated just before most recently updated cluster (it is considered as Cluster 2), the third memory CM3 always contains the second oldest Centroid value, i.e., the Centroid value of the cluster which has been updated just after oldest cluster (it is considered as Cluster 3), and the fourth memory CM4 always contains the oldest Centroid value, i.e., the Centroid value of the cluster which has not been updated for the longest period of time (it is considered as Cluster 4). These considerations are shown in

Figure 1a. The algorithmic steps for performing motion detection based on these assumptions are discussed next.

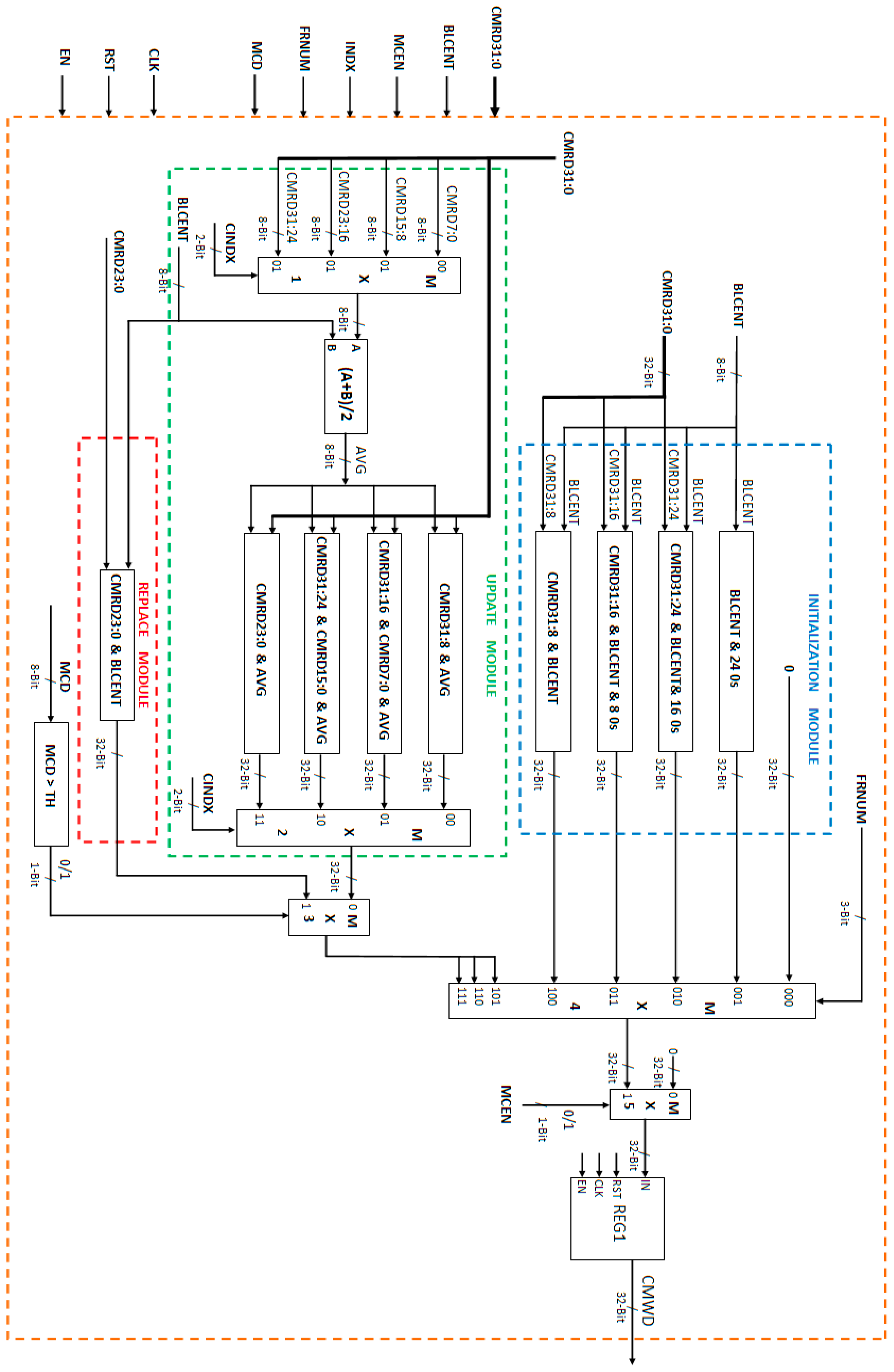

The main steps of the proposed memory efficient clustering based motion detection scheme are Block Centroid Computation, Cluster Group Initialization, Cluster Matching, Cluster Updating, Cluster Replacement, and Classification. Three steps of the proposed algorithm, i.e., Block Centroid Computation, Cluster matching, and Classification are the same as that in the case of original clustering based scheme. The remaining three steps of the proposed memory efficient motion detection algorithm, i.e., Cluster Group Initialization, Cluster Updating, and Cluster Replacement are explained below.

Cluster Group Initialization is performed during first four frames. In the first frame, the fourth cluster of each 4 × 4 pixel block is initialized with its Centroid set to Block Centroid of the corresponding block of the first frame and therefore, it is stored in Centroid Memory CM4, as this is going to be the oldest value at the end of the Cluster Group Initialization process. In the second frame, the third cluster of each 4 × 4 pixel block is initialized with its Centroid set to Block Centroid of the corresponding block of the second frame and therefore, it is stored in Centroid Memory CM3, as this is going to be the second oldest value at the end of the Cluster Group Initialization process. In the third frame, the second cluster of each 4 × 4 pixel block is initialized with its Centroid set to Block Centroid of the corresponding block of the third frame and therefore, it is stored in Centroid Memory CM2, as this is going to be the second newest value at the end of the Cluster Group Initialization process. In the fourth frame, the first cluster of each 4 × 4 pixel block is initialized with its Centroid set to Block Centroid of the corresponding block of the fourth frame and, therefore, it is stored in Centroid Memory CM1, as this is going to be the newest value at the end of the Cluster Group Initialization process. At the end of initialization process, the Centroid Memories (CM1, CM2, CM3, and CM4) contains the Centroid values in order from the newest (CM1) to the oldest (CM4). The complete process is shown in

Figure 1b.

After initialization (in initial four frames), for all subsequent frames, the motion detection is performed. During motion detection either of the two processes (i.e., Cluster Updating process or Cluster Replacement process) is performed. Therefore, the objective is either to update the Centroid value or to replace the Centroid value in the Centroid memories and maintain the order of newest value, second newest value, second oldest value, and oldest value in CM1, CM2, CM3, and CM4 respectively.

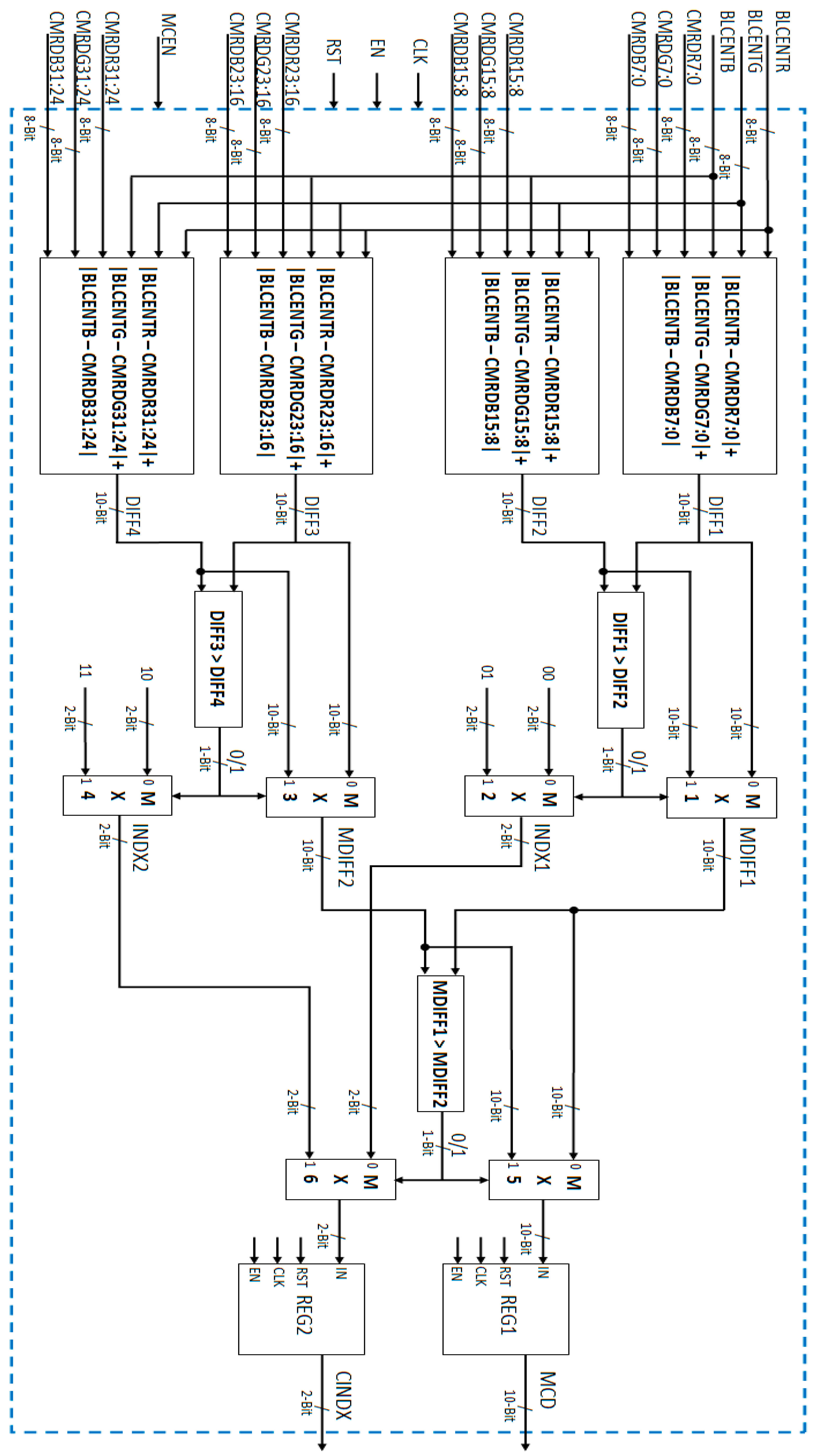

If a matching cluster is found (i.e., the Minimum Centroid Difference is less than the threshold) within the cluster group then the matching cluster is updated. For this purpose, the Centroid value of the matching cluster is updated with the average value of the matching cluster Centroid value and incoming current Block Centroid value. The matching cluster can be any one of the four clusters, i.e., first or second or third or fourth.

Consider the first case of Cluster Updating, i.e., matching cluster is the first cluster. In this case, Centroid memory CM1 is updated with the average value of the matching cluster Centroid value and incoming current Block Centroid value. The updated value in CM1 is the newest value. Therefore, no re-ordering of other memories values is required for arraigning the values in order of newest value, second newest value, second oldest value, and oldest value in CM1, CM2, CM3, and CM4 respectively. The process is explained in

Figure 2a.

Consider the second case of Cluster Updating, i.e., matching cluster is the second cluster. In this case, Centroid memory CM2 is updated with the average value of the matching cluster Centroid value and incoming current Block Centroid value. The updated value in CM2 is the newest value. Therefore, the re-ordering of other memories values is required for arraigning the values in order of newest value, second newest value, second oldest value, and oldest value in CM1, CM2, CM3, and CM4, respectively. For this purpose, the value of CM1 and updated value in CM2 are interchanged. During interchanging, the new updated value in CM2 (which is latest updated value) is moved to CM1 and the old value of CM1 is moved to CM2. The values of CM3 and CM4 remain unchanged. The process is explained in

Figure 2b. At the end of cluster updating process, the values are in the order of newest (CM1) to oldest (CM4).

Consider the third case of Cluster Updating, i.e., matching cluster is the third cluster. In this case, Centroid memory CM3 is updated with the average value of the matching cluster Centroid value and incoming current Block Centroid value. The updated value in CM3 is the newest value. Therefore, the re-ordering of other memories values is required for arraigning the values in order of newest value, second newest value, second oldest value, and oldest value in CM1, CM2, CM3, and CM4, respectively. For this purpose, the cluster Centroid values in CM1 and CM2 are shifted to CM2 and CM3, respectively, and these become older by one step. The latest updated value of CM3 is moved to CM1. The value of CM4 remains unchanged. The process is explained in

Figure 2c. At the end of cluster updating process, the values are in the order of newest (CM1) to oldest (CM4).

Consider the last/fourth case of Cluster Updating, i.e., matching cluster is the fourth cluster. In this case, Centroid memory CM4 is updated with the average value of matching cluster Centroid value and incoming current Block Centroid value. The updated value in CM4 is the newest value. Therefore, the re-ordering of other memories values is required for arraigning the values in order of newest value, second newest value, second oldest value, and oldest value in CM1, CM2, CM3, and CM4 respectively. For this purpose, the cluster Centroid values in CM1, CM2, and CM3 are shifted to CM2, CM3, and CM4, respectively and these become older by one step. The latest updated value of CM4 is moved to CM1. The process is explained in

Figure 2d. At the end of cluster updating process, the values are in the order of newest (CM1) to oldest (CM4).

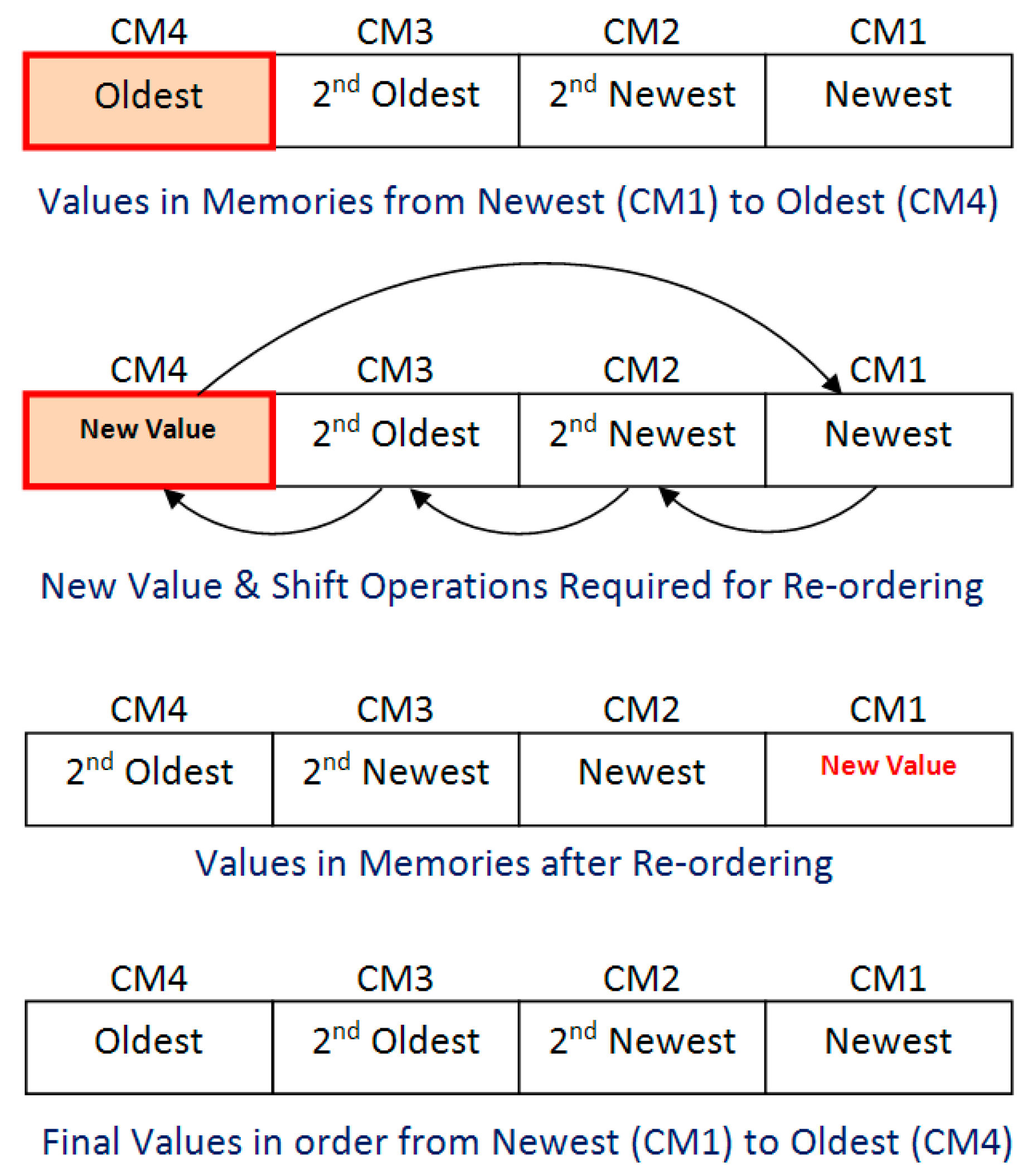

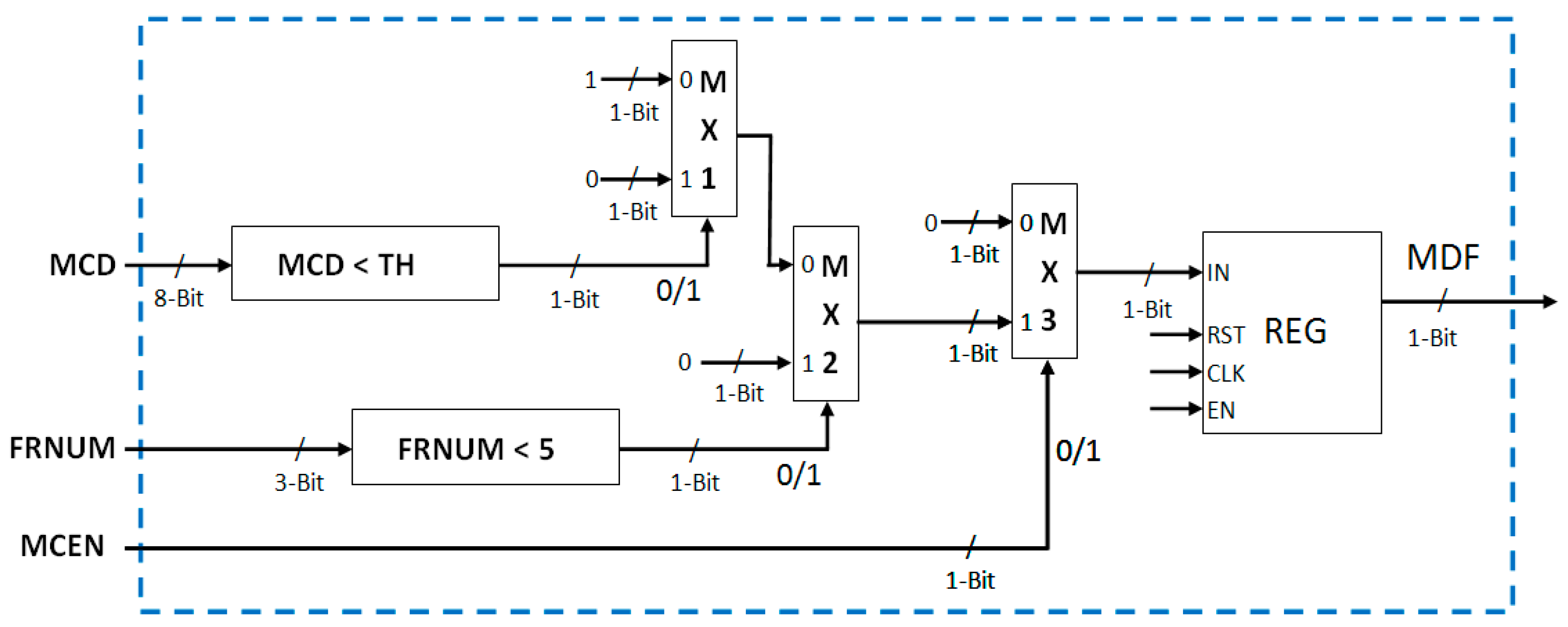

If no matching cluster is found (i.e., the Minimum Centroid Difference is greater than the threshold) within the cluster group then oldest cluster is replaced. For this purpose, the oldest cluster which has not been updated for the longest period of time, i.e., fourth cluster (Centroid value in Centroid memory CM4) is replaced with a cluster having Centroid value set to incoming current Block Centroid value. The new value in CM4 is the newest value as it is replaced most recently. Therefore, the re-ordering of other memories values is required for arraigning the values in order of newest value, second newest value, second oldest value, and oldest value in CM1, CM2, CM3, and CM4, respectively. For this purpose, the cluster Centroid values in CM1, CM2, and CM3 are shifted to CM2, CM3, and CM4, respectively, and these become older by one step. The latest (new) value of CM4 is moved to CM1. The process is explained in

Figure 3. At the end of the cluster replacement process, the values are in the order of newest (CM1) to oldest (CM4).

Thus, at the end of any of the processes, i.e., Cluster Group Initialization, Cluster Updating, or Cluster Replacing, the four Centroid memories CM1, CM2, CM3, and CM4 contain values in the order of newest value, second newest value, second oldest value, and oldest value in CM1, CM2, CM3, and CM4, respectively. This process is performed for all the blocks in every incoming frame and thus maintains the record of history of clusters without storing the frame number or the index value. In other words, it keeps track of newest cluster (most recently updated or replaced), second newest cluster, second oldest cluster, or oldest cluster (which has not been updated for the longest period of the time) and does not require frame number or index value. Only this much information about the history of the clusters (i.e., newest cluster, second newest cluster, second oldest cluster, and oldest cluster) is required for performing clustering based motion detection. The proposed novel scheme is thus successful in substantially reducing the memory requirement of clustering based motion detection scheme, without any loss of accuracy in motion detection. The resulting reduction in Parameter memory size is 40% as compared to the original clustering based motion detection scheme [

23] with no loss of accuracy or robustness of the system.

In order to reduce the memory requirement of motion detection algorithm, without negatively impacting the quality of processed videos, it is important to accurately evaluate the proposed algorithm against original clustering-based motion detection algorithm on video streams of different real-world scenarios.

For this purpose, the standard clustering based algorithm and proposed memory efficient algorithm have been programmed in C/C++ programming language. For running the code, a Dell Precision T3400 workstation (with Windows XP operating system, quad-core Intel® CoreTM2 Duo Processor with 2.93 GHz Operating Frequency, and 4 GB RAM) was used. Open Computer Vision (OpenCV) libraries were used in the code for reading video streams (either stored or coming from camera) and displaying motion detection results. Effect of removing the frame number from parameter memory was evaluated on test bench videos taken from surveillance cameras. The selected video streams have a resolution of 720 × 576 pixels (PAL size) and five-minute duration.

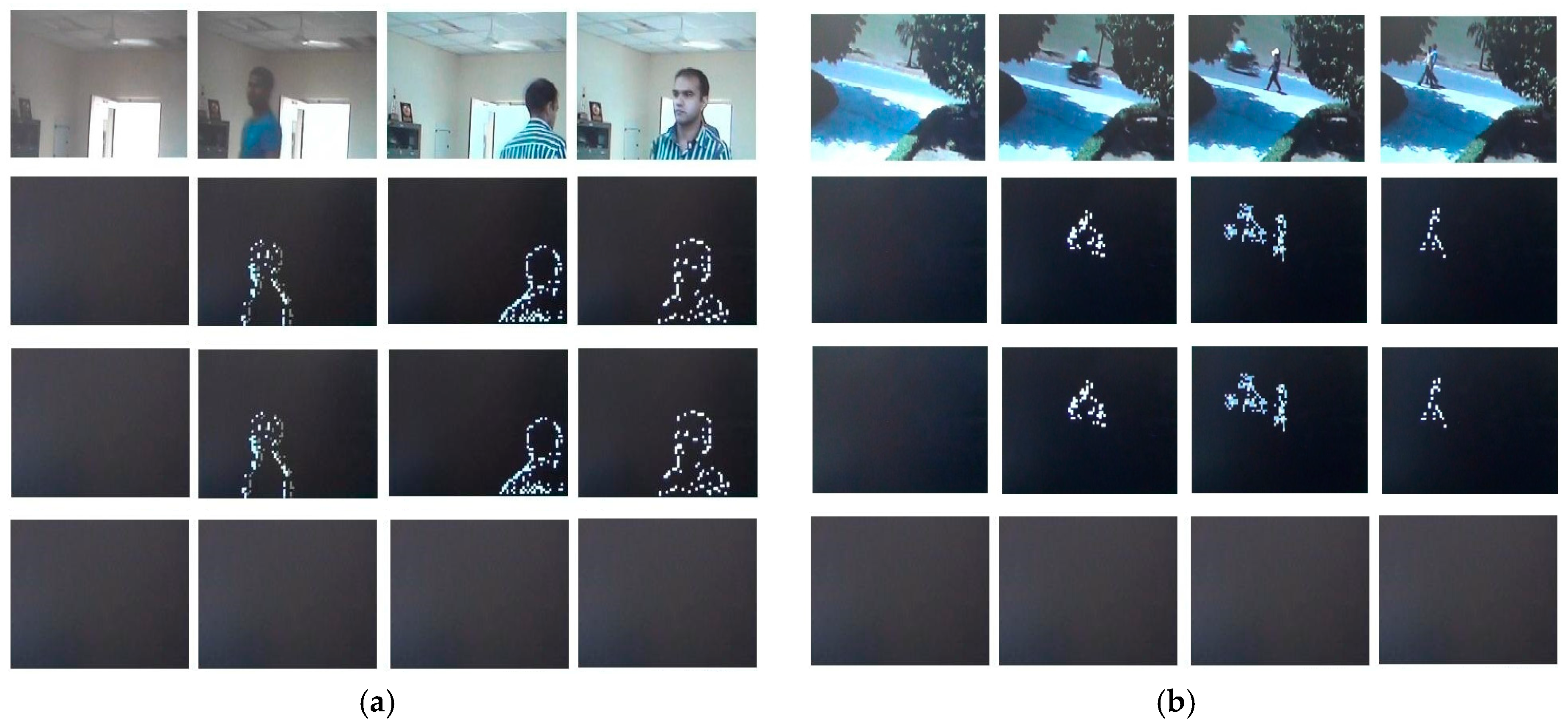

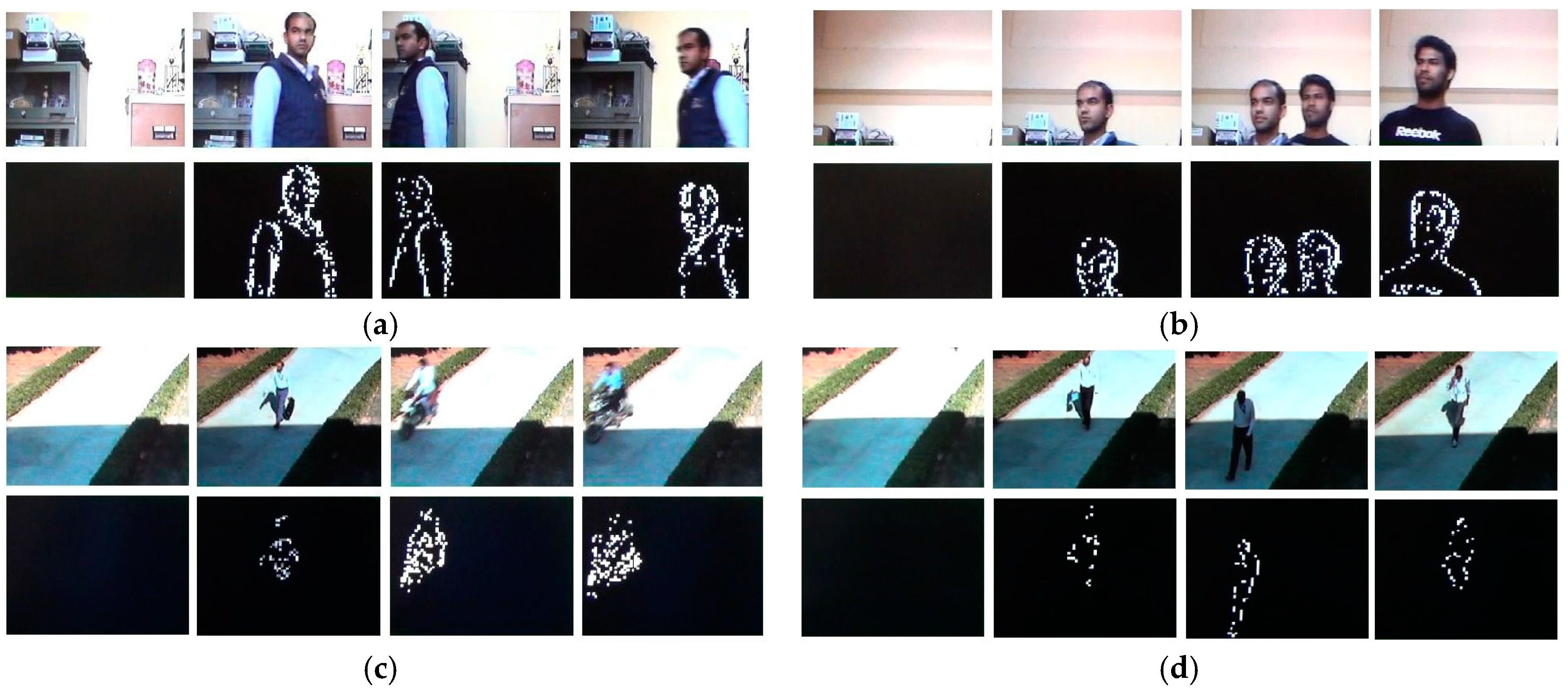

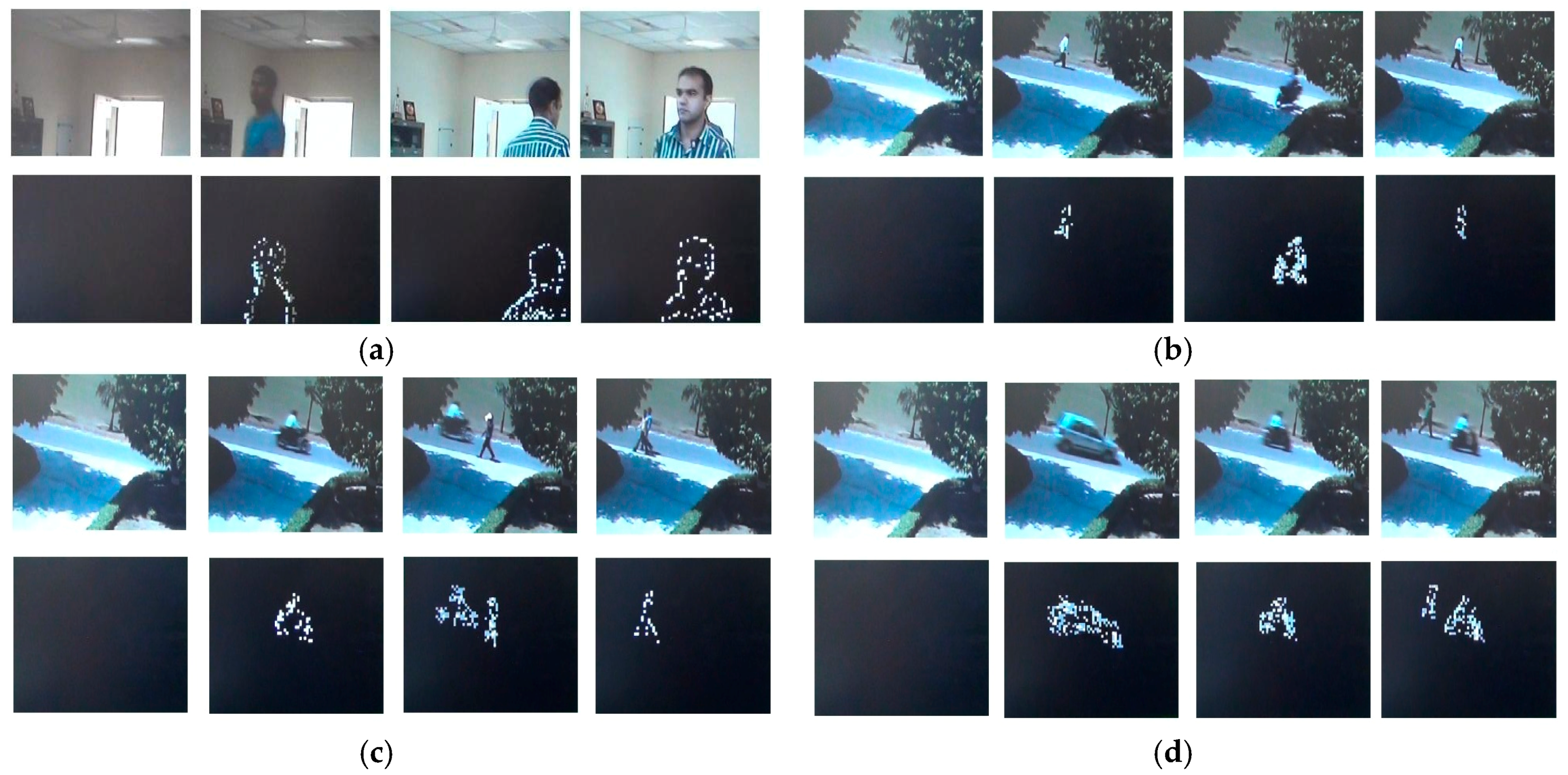

Figure 4 visually compares the results for both the motion detection algorithms (proposed and original) for different indoor and outdoor conditions with pseudo-stationary backgrounds. In

Figure 4a, moving fan in background is present, while, in

Figure 4b, moving leaves of trees are present. Top row shows the original frames extracted from video streams. Second row shows the motion detection results obtained from the original clustering based algorithm and the third row shows the motion detection results obtained from the proposed memory efficient clustering based algorithm for respective frames. To compare the results, pixel-by-pixel difference of second and third row images has been taken and it gives black images (fourth row). This indicates that the proposed memory efficient algorithm produces same motion detection results as original clustering based motion detection algorithm without any loss of accuracy and robustness but with 40% reduction in memory requirement. The frame rate for software-based implementation of memory efficient proposed motion detection algorithm is five frames per second (fps) for PAL (720 × 576) resolution color videos.

For a mathematical proof, for every frame of each video stream, the mean square error (MSE) is calculated. MSE is a common measure of quality of video and is equivalent to other commonly used measures of quality. For example, the peak signal-to-noise ratio (PSNR) is equivalent to MSE [

25]. Some researchers measure the number of false positives (FP) and false negatives (FN) whose sum is equivalent to the MSE [

26]. MSE is defined as

In the above equation, IORIGINAL (m, n) is the motion detected binary output image produced by running of the software (C/C++) implementation of original clustering based algorithm, while IPROPOSED (m, n) is the motion detected binary output image produced by running of the software (C/C++) implementation of proposed memory efficient clustering based algorithm. M is the number of rows in a video frame, i.e., 576 and N is the number of columns in a video frame, i.e., 720.

As the motion detection outputs are binary images, therefore, the square of the difference between IORIGINAL (m, n) and IPROPOSED (m, n) has only two possible values: “1” if the pixel has different values in IORIGINAL and IPROPOSED and “0” if the pixel has same values. As a result of this MSE is equivalent to the ratio of the number of pixels which are different in IPROPOSED with respect to IORIGINAL to the total number of pixels in a video frame.

The computed MSE for every frame of all the test bench videos is zero and it confirms that the proposed memory efficient motion detection scheme produces the same motion detection results as the original clustering based motion detection scheme without negatively affecting the quality of processed videos, but with 40% reduction in memory requirement.