1. Introduction

As one part of research related to Intelligent Transport Systems (ITS), in Japan, much research has been conducted regarding Advanced cruise-assist Highway Systems (AHS), aiming to ensure the safety and smoothness of automobile driving [1,2,3,4,5,6,7,8,9]. AHS is composed of the following sections: information collection, support for driver operations, and fully automated driving. The means of information collection include the use of radar to detect obstacles in front, and camera images for detecting traffic signs and signals. Driving support systems using radar have already been commercialized and are installed in many vehicles. However, driving support systems using cameras remain in the research phase both for use on expressways and ordinary roads. Commercialization of these systems will require the following for accurate and stable provision of information about the vehicle surroundings to the driver: high processing speed, high recognition accuracy, detection of all detectable objects without omission, and robustness in response to changes in the surrounding environment.

It is said that when driving, a driver relies 80–90% on visual information for awareness of the surrounding conditions and for operating the vehicle. According to the results of investigations, large numbers of accidents involving multiple vehicles occur due to driver inattention which results in the driver not looking ahead while driving, distracted driving, or failure to stop at an intersection. Of such accidents, those that result in death are primarily due to excessive speed. Therefore, from the perspective of constructing a driver support system, it will be important to automatically detect the speed limit and provide a warning display or voice warning to the driver urging him/her to observe the posted speed limit when the vehicle is travelling too fast.

Traffic restriction signs such as “vehicle passage prohibited” and “speed limits” have a round external shape and are distinguished by a red ring around the outside edge. There have been many proposals regarding methods of using this distinguishing feature to extract traffic signs from an image [10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30]. Frejlichowski [10] proposed the Polar-Fourier greyscale descriptor, which applies information about the silhouette and intensity of an object, for extracting an object from a digital image. Gao et al. [11] proposed a novel local histogram feature representation to improve traffic sign recognition performance. Sun et al. [12] proposed an effective and efficient algorithm based on a relatively new artificial neural network, the extreme learning machine, for designing a feasible recognition approach. Liu et al. [13] proposed a new approach to tackle the traffic sign recognition problem by using group sparse coding. Zaklouta et al. [14] proposed a real-time traffic sign recognition system using an efficient linear SVM with HOG features. Stallkamp et al. [15] compared the traffic sign recognition performance of humans to that of a state-of-the-art machine learning algorithm. Berger et al. [16] proposed a new approach for traffic sign recognition based on virtual generalizing random access memory weightless neural networks (VG-RAM WNN). Gu et al. [18] proposed a dual-focal active camera system to obtain high-resolution images of traffic signs. Zaklouta et al. [19] proposed a real-time traffic sign recognition system for bad weather conditions and poor illumination. Ruta et al. [20] proposed a novel image representation and discriminative feature selection algorithm in a three-stage framework involving detection, tracking, and recognition. Chen et al. [22] proposed a reversible image watermarking approach that works on quantized Discrete Cosine Transform (DCT) coefficients. Zhang [23] proposed a method to pre-pure feature points by clustering to improve the efficiency of feature matching. Zhang et al. [24] proposed a multiple description method based on fractal image coding in image transformation. Zin et al. [25] proposed a robust road sign recognition system that works under various illumination conditions for various types of circular and pentagonal road signs. Kohashi et al. [26] proposed a high-speed, high-accuracy road sign recognition method that emits near-infrared rays to cope with poor lighting environments. Yamauchi et al. [27] proposed a traffic sign recognition method that uses a string matching technique to match signatures to template signatures. Matsuura, Uchimura et al. [28,30] proposed an algorithm for estimating the internal area of circular road signs from a color scene image. Yabuki et al. [29] proposed an active net for region detection and shape extraction. Many recognition methods have been proposed using means such as template matching and improved template matching, or using applied genetic algorithms [30] and neural networks [29]. A method using shape features [21] and an Eigen space method based on the KL transform [17] have also been proposed. These have generally delivered good results. However, before commercialization of these methods is possible, a number of problems arising during the actual processing will have to be resolved. These problems include the need to convert an extracted image of unknown size to an image that is approximately the same size as the template, the need to make corrections for rotation and displacement, and the long time that is required for these processing calculations.

The method for traffic sign recognition [21] previously proposed by the authors of this study was focused on extracting traffic signs in real time from a moving image as a step toward system commercialization. As a result, the recognition method proposed was a relatively simple method that was based on the geometric features of the signs and that was able to shorten the processing time. Because it extracted the geometric shape on the sign and then conducted recognition based on its aspect ratio, the method was not able to identify the numbers on a speed-limit sign, all of which have identical aspect ratios. However, in consideration of the fact that excessive speed is a major cause of fatal accidents, and because recognition of the speed-limit signs that play a major role in preventing fatal accidents and other serious accidents is more important, a speed-limit sign recognition method utilizing an Eigen space method based on the KL transform was proposed [17]. This method yielded a fast processing speed, was able to detect the targets without fail, and was robust in response to geometrical deformation of the sign image resulting from changes in the surrounding environment or the shortening distance between the sign and vehicle. However, because this method used only color information for detection of speed-limit signs, precise color information settings and processing to exclude everything other than the signs are necessary in an environment where many colors similar to the speed-limit signs exist, and further study of the method for sign detection is needed.

Aiming to resolve the problems with the method previously proposed, this study focuses its consideration on the following three points, and proposes a new method for recognition of the speed limits on speed-limit signs. (1) Make it possible to detect only the speed-limit sign in an image of scenery using a single process focusing on the local patterns of speed-limit signs. (2) Make it possible to separate and extract the two-digit numbers on a speed-limit sign in cases when the two-digit numbers are incorrectly extracted as a single area due to the light environment. (3) Make it possible to identify the numbers using a neural network without performing precise analysis related to the geometrical shapes of the numbers.

Section 2 describes a speed-limit sign detection method using the AdaBoost classifier based on image LBP feature quantities.

Section 3 describes a method of reliably extracting individual speed numbers using an image in a HSV color space, and a method of classifying the indicated speed number image using a neural network that learned the probabilistic relationship between the feature vectors of the numbers and the classifications.

Section 4 discusses the results of this study and the remaining issues.

2. Detection of Speed-Limit Signs

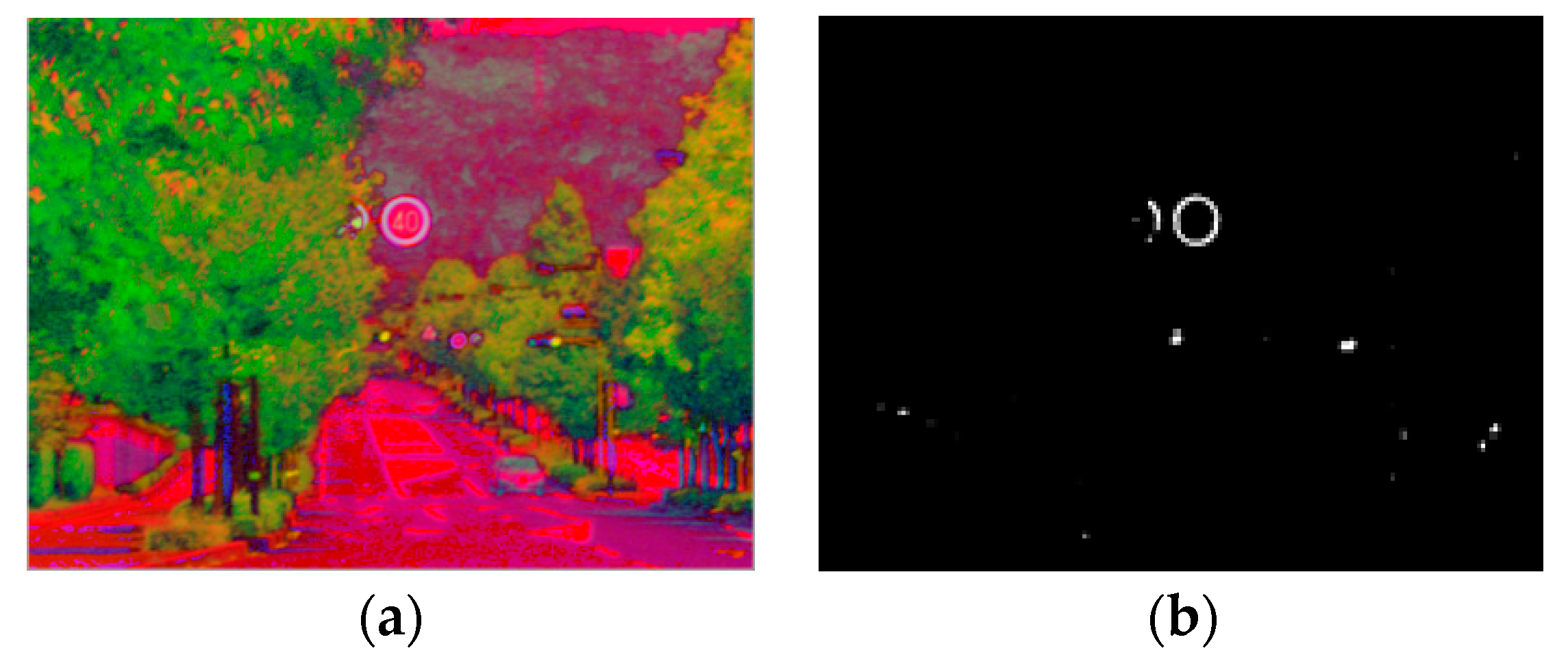

Figure 1 shows a landscape image which includes 40 km/h speed-limit signs installed above a road as seen looking forward from the driver’s position during driving. Because one sign is at a distance in the image, it is too small and is difficult to recognize. However, there is another speed-limit sign in a position close to the center of the image. The two red shining objects in the center are traffic signals. The speed-limit sign is round in shape, with a red ring around the outer edge and blue numbers on a white background indicating the speed limit.

The image acquired from the camera is in the widely used RGB format. In order to obtain the hue information, the image is converted to the Hue, Saturation, Value (HSV) color space [31,32,33]. The resulting image is expressed as independent Hue, Saturation, and Value parameters, providing color information that is highly robust to changes in brightness. When the threshold value with respect to Hue H expressed at an angle of 0–2π is set to

, this yields the HSV image shown in Figure 2a. As shown in Figure 2b, when the image is converted to a binary image where only the regions where the Hue is within the set threshold value appear white and all other regions appear black, we can see that parts other than the sign have also been extracted.
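The hue-based binarization described above can be sketched as follows. This is only an illustration using the Python standard library: the function name `hue_mask`, the hue bounds, and the minimum Saturation and Value are assumptions rather than the settings used in this study, and `colorsys` expresses hue on a 0–1 scale rather than as an angle of 0–2π.

```python
import colorsys

def hue_mask(rgb_image, hue_lo, hue_hi, min_sat=0.3, min_val=0.2):
    """Return a binary mask: 255 where the hue is inside the range, else 0.

    rgb_image is a list of rows of (r, g, b) tuples in 0..255.
    Hue bounds are on colorsys's 0..1 scale. If hue_lo > hue_hi the range
    wraps around 0, which is what a red range needs.
    All threshold values here are illustrative assumptions.
    """
    mask = []
    for row in rgb_image:
        out = []
        for r, g, b in row:
            h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
            if hue_lo <= hue_hi:
                in_range = hue_lo <= h <= hue_hi
            else:
                in_range = h >= hue_lo or h <= hue_hi
            # Low-saturation or dark pixels have unreliable hue, so reject them.
            keep = in_range and s >= min_sat and v >= min_val
            out.append(255 if keep else 0)
        mask.append(out)
    return mask

# Tiny 2 x 2 test image: red, white, blue, crimson.
image = [[(200, 20, 30), (255, 255, 255)],
         [(30, 40, 200), (180, 10, 60)]]
mask = hue_mask(image, hue_lo=0.92, hue_hi=0.05)
```

In this toy image only the two reddish pixels survive. In a real scene, other red objects such as traffic signals would also pass the test, which matches the observation that parts other than the sign are extracted.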

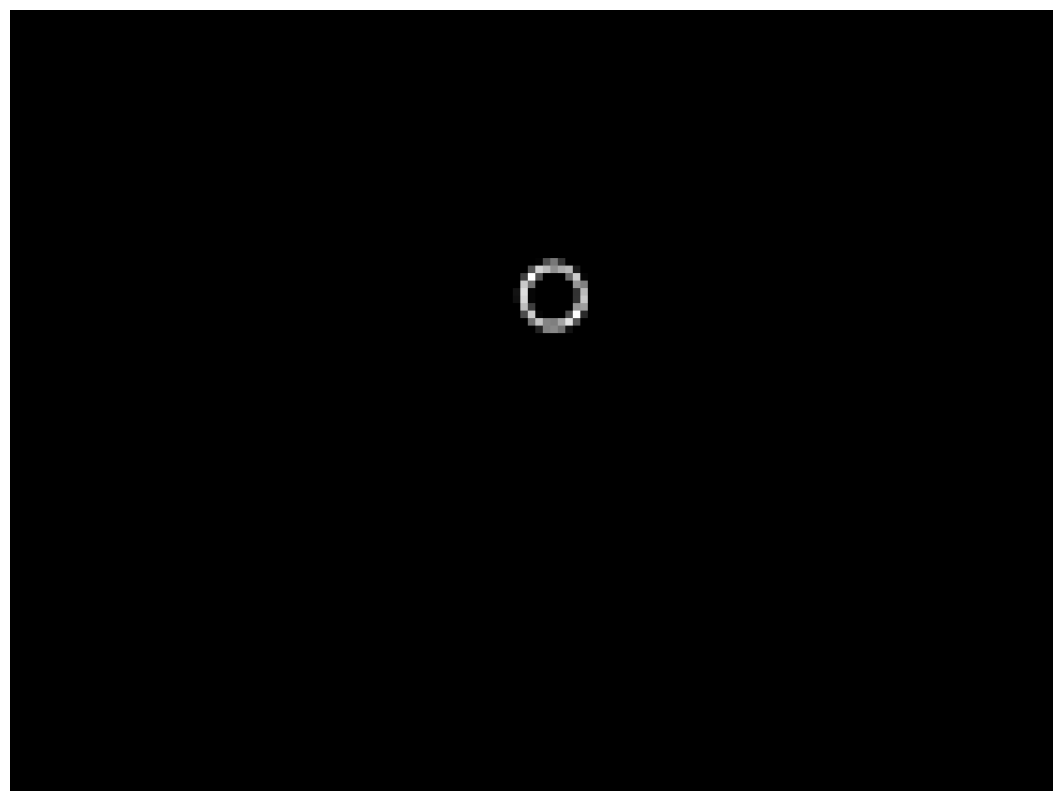

Because regions other than the sign are included in the binary image as shown in Figure 2b, first, in the labeling process for the extracted regions, we remove the white regions that have small areas, and next we perform contour processing for the labeled regions. The roundness of each region is calculated from the area and peripheral length, which are obtained as the contour information, and only the regions considered to be circular are extracted. The results are as shown in Figure 3.
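The text does not state the roundness formula. A common choice, assumed here, is 4πA/P², which equals 1 for a perfect circle and becomes smaller for elongated or irregular regions. The acceptance threshold of 0.8 is also an assumption for illustration.

```python
import math

def roundness(area, perimeter):
    """Roundness 4*pi*A / P^2 (1.0 for an ideal circle). Assumed definition."""
    if perimeter <= 0:
        raise ValueError("perimeter must be positive")
    return 4.0 * math.pi * area / (perimeter ** 2)

def is_circular(area, perimeter, min_roundness=0.8):
    """Keep a region only if its roundness reaches the (assumed) threshold."""
    return roundness(area, perimeter) >= min_roundness

# A circle of radius 10 versus a 10 x 10 square and a thin 2 x 50 strip.
circle = roundness(math.pi * 10 ** 2, 2 * math.pi * 10)
square = roundness(10 * 10, 4 * 10)
strip = roundness(2 * 50, 2 * (2 + 50))
```

With these definitions the square scores π/4 (about 0.79) and the strip about 0.12, so both are rejected at the assumed threshold while the circle is kept.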

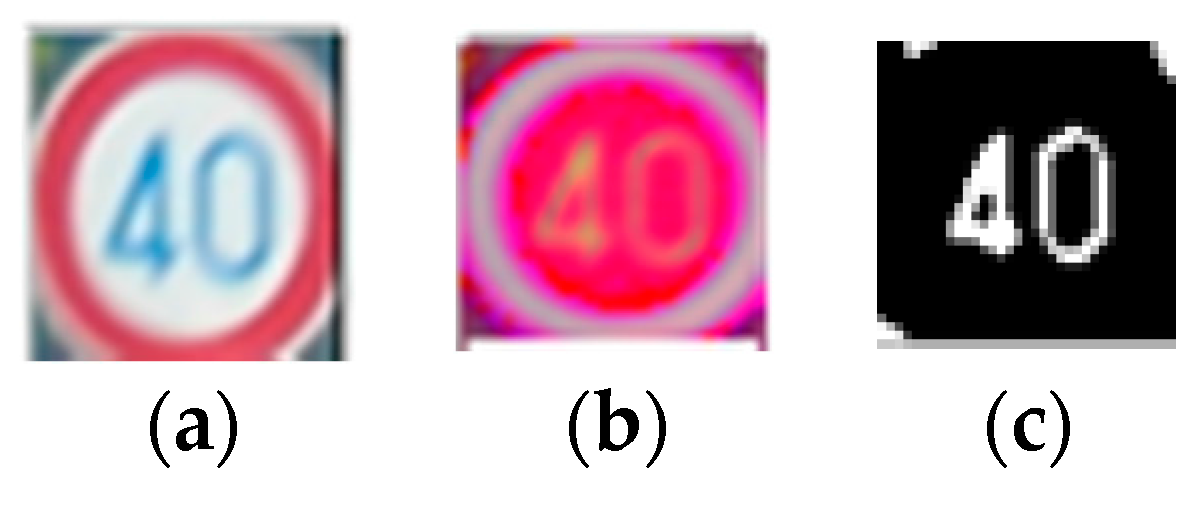

When the bounding box around the red circle is added based on the binary image of the extracted traffic sign (Figure 3), we obtain the coordinates of the top left and bottom right corners of the speed-limit sign. Based on these corners, we are able to extract only the part corresponding to the traffic sign from the landscape image (Figure 1). The extracted image is shown in Figure 4a. After converting this image to a HSV image (Figure 4b), and performing a binarization process by setting the threshold for the blue, the result is the extracted speed limit of 40 km/h, as can be seen in Figure 4c.

Outdoors, even when imaging the same scene, Hue, Saturation, and Value vary depending on the time, such as day or night, and when imaging the scene from different directions. As a result, it is necessary to use a processing method which considers these differences when extracting the signs. However, this requires making precise settings in advance to handle all of the various situations that may occur.

This study attempted to find a method of determining the speed-limit sign pattern (feature quantities) in the image by converting the image to a grayscale image so that the color differences are converted to differences in grayscale levels, instead of using the color information directly. The AdaBoost classifier [34] is used to detect the speed-limit signs based on feature quantities extracted from the image. The AdaBoost classifier is a machine learning algorithm that combines a number of simple classifiers (weak classifiers) with detection accuracy that is not particularly high to create a single advanced classifier (strong classifier).
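To illustrate how weak classifiers are combined into a strong one, the following is a minimal AdaBoost sketch with one-feature threshold classifiers (decision stumps) on a toy data set. It is not the detector used in this study: the data, the stump form, and all function names are assumptions made for illustration.

```python
import math

def stump_predict(stump, x):
    """A stump predicts `polarity` when x[feature] >= threshold, else -polarity."""
    _, feature, threshold, polarity = stump
    return polarity if x[feature] >= threshold else -polarity

def train_stump(X, y, w):
    """Exhaustively pick the stump with the lowest weighted error."""
    best = None
    for feature in range(len(X[0])):
        for threshold in sorted(set(row[feature] for row in X)):
            for polarity in (1, -1):
                stump = (0.0, feature, threshold, polarity)
                err = sum(wi for xi, yi, wi in zip(X, y, w)
                          if stump_predict(stump, xi) != yi)
                if best is None or err < best[0]:
                    best = (err, feature, threshold, polarity)
    return best

def adaboost_train(X, y, rounds):
    """Return a list of (alpha, stump) pairs; labels must be +1 or -1."""
    n = len(X)
    w = [1.0 / n] * n
    model = []
    for _ in range(rounds):
        stump = train_stump(X, y, w)
        err = min(max(stump[0], 1e-10), 1.0 - 1e-10)  # avoid log(0)
        alpha = 0.5 * math.log((1.0 - err) / err)
        model.append((alpha, stump))
        # Increase the weight of misclassified samples, then renormalize.
        w = [wi * math.exp(-alpha * yi * stump_predict(stump, xi))
             for xi, yi, wi in zip(X, y, w)]
        total = sum(w)
        w = [wi / total for wi in w]
    return model

def adaboost_predict(model, x):
    """Strong classifier: sign of the alpha-weighted vote of all stumps."""
    score = sum(alpha * stump_predict(stump, x) for alpha, stump in model)
    return 1 if score >= 0 else -1

# Toy problem: the negative class sits in the middle, so no single
# threshold can separate it, but three boosted stumps can.
X = [[1], [2], [3], [4], [5], [6]]
y = [1, 1, -1, -1, 1, 1]
weak = adaboost_train(X, y, rounds=1)
strong = adaboost_train(X, y, rounds=3)
```

On this toy set the single stump misclassifies two samples, while the three-round combination classifies all six correctly, which is the behavior the paragraph above describes.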

Local binary pattern (LBP) feature quantities [35,36,37] are used as the feature quantities. LBP finds the differences between the target pixel and the eight neighboring pixels, assigns a value of one if the difference is positive and zero if it is negative, and expresses the eight neighboring pixels as a binary number to produce a 256-grade grayscale image. These feature quantities have a number of characteristics, including the fact that they can be extracted locally from the image, are resistant to the effects of image lighting changes, can be used to identify the relationships with the surrounding pixels, and require little calculation cost.
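A minimal sketch of the basic 3 × 3 LBP operator is given below. The neighbor ordering (clockwise from the top-left pixel) and the treatment of equal values as one are assumptions, since conventions differ between implementations.

```python
def lbp_image(gray):
    """Compute 8-bit LBP codes for the interior pixels of a grayscale image.

    gray is a list of rows of integers. Each neighbor contributes a 1 bit
    when it is >= the center pixel (assumed convention), read clockwise
    from the top-left neighbor, most significant bit first.
    """
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
               (1, 1), (1, 0), (1, -1), (0, -1)]
    height, width = len(gray), len(gray[0])
    out = [[0] * (width - 2) for _ in range(height - 2)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            center = gray[y][x]
            code = 0
            for dy, dx in offsets:
                bit = 1 if gray[y + dy][x + dx] >= center else 0
                code = (code << 1) | bit
            out[y - 1][x - 1] = code
    return out

patch = [[9, 0, 9],
         [0, 5, 0],
         [9, 0, 9]]
# The same patch under uniformly brighter lighting (+50 on every pixel).
brighter = [[p + 50 for p in row] for row in patch]

code = lbp_image(patch)          # corners are brighter than the center
code_bright = lbp_image(brighter)
```

Because only the sign of each difference is kept, adding a constant brightness offset leaves the code unchanged, which illustrates the resistance to lighting changes mentioned above.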

A total of 200 images which contained speed-limit signs (positive images) and 1000 images which did not contain speed-limit signs (negative images) were used as the training set. A testing set of 191 images was prepared separately from the training set. The positive images covered a variety of conditions, including images with clear sign colors, images with faded sign colors, and images of signs photographed at an angle. Examples of the positive and negative images are shown in Figure 5 and Figure 6.

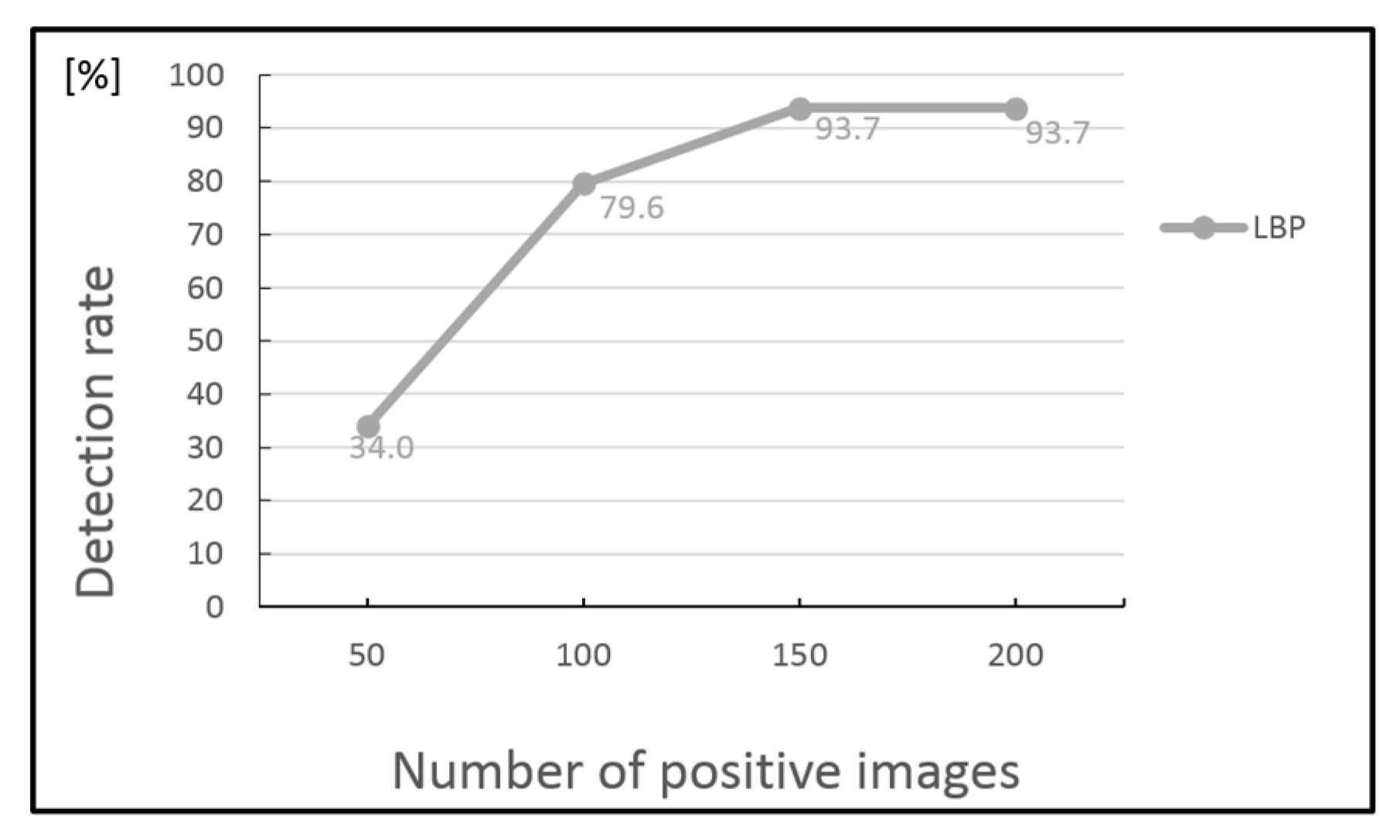

Figure 7 shows the changes in the detection rate when the number of positive images was increased from 50 to 100, 150, and 200 for the same 1000 negative images. It shows that increasing the number of positive images increases the detection rate.

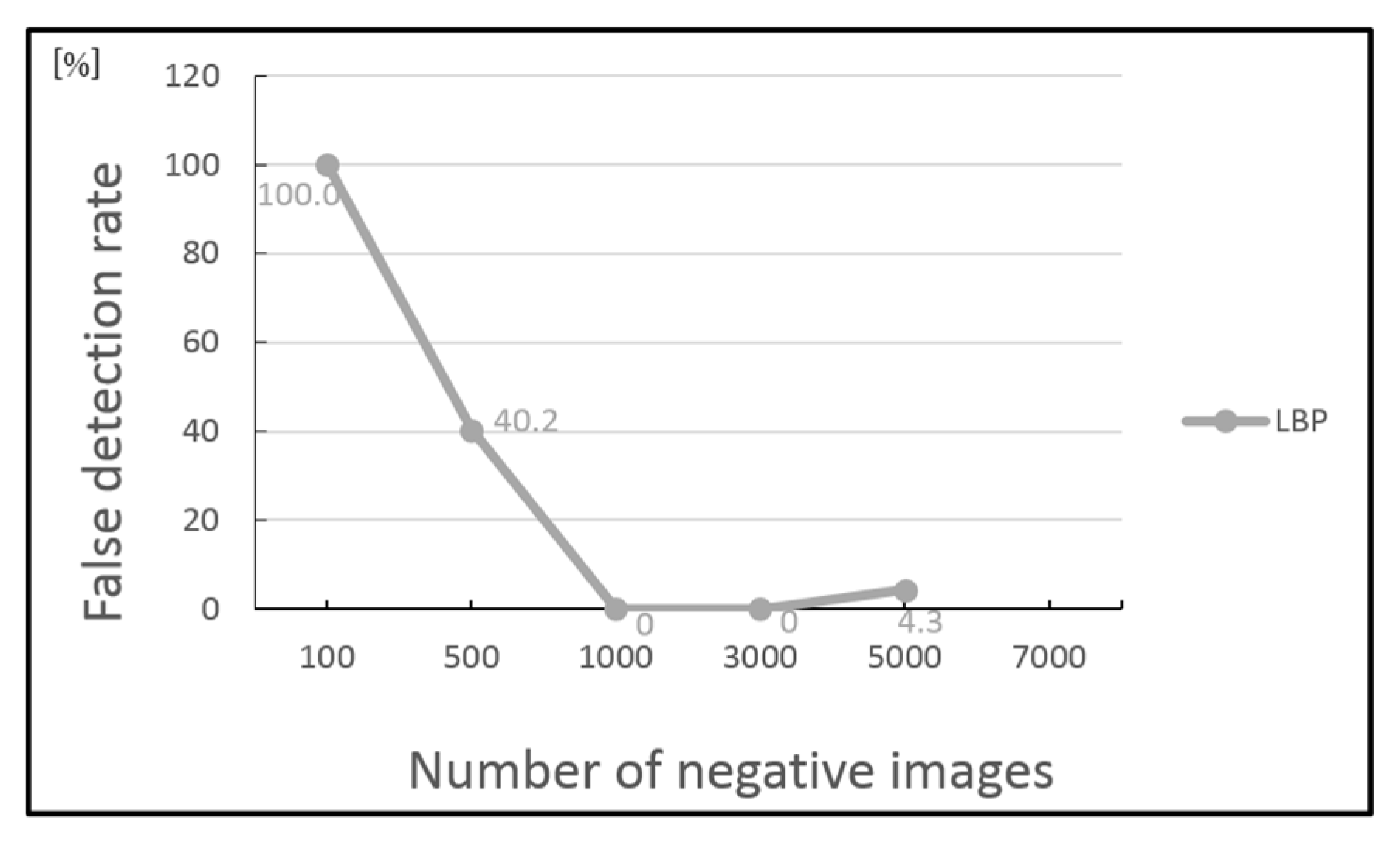

Figure 8 shows the changes in the false detection rate (= (number of detected areas that are not speed-limit signs)/(number of detected speed-limit signs)) when the number of negative images was increased from 100 to 7000. It shows that although the false detection rate dropped to 0% with 1000 and 3000 negative images, a small number of false detections occurred when the number of negative images was 5000 or more. It is believed that using too many negative images results in excessive learning (overfitting) or conditions where learning cannot proceed correctly.

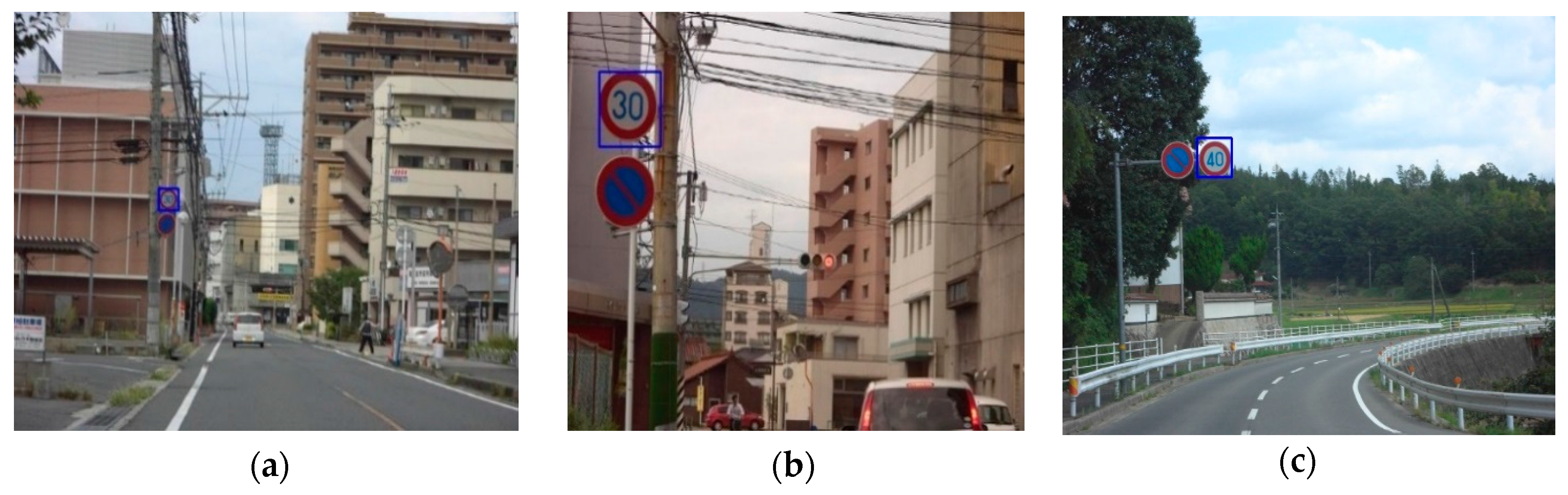

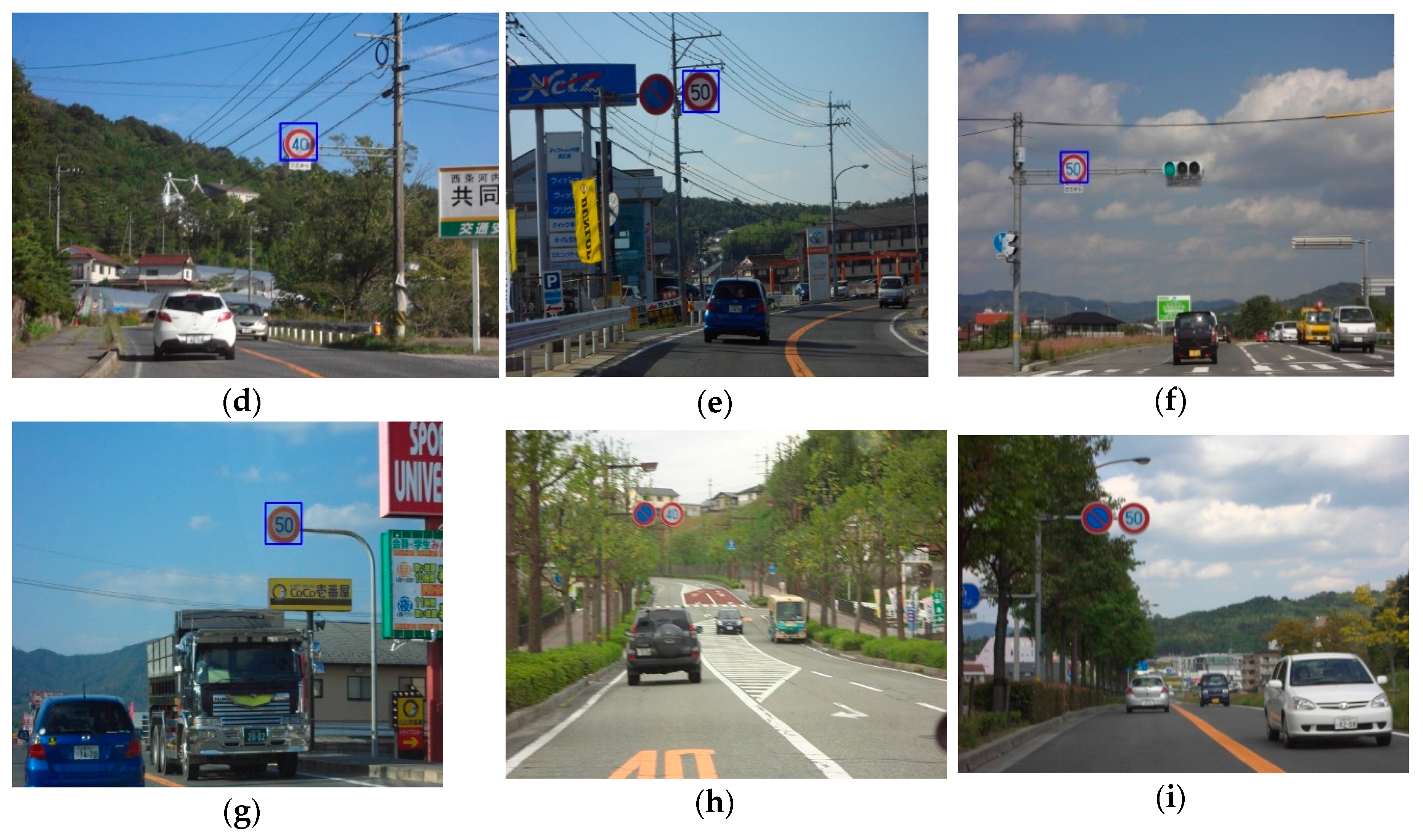

Figure 9 shows the detection results when an AdaBoost classifier that was created using 200 positive images and 1000 negative images was used with a testing set of 191 images that were different from the training set. The detection rate was 93.7% and the false detection rate was 0%. This means that out of the 191 images that this method was used with, there were 12 images in which the speed-limit sign was not detected; however, all 179 detected areas were actually speed-limit signs. Images (a)–(g) in Figure 9 show cases when the speed-limit sign was detected, and images (h) and (i) in Figure 9 show cases when the speed-limit sign was not detected.
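The two rates reported above can be checked with a few lines of arithmetic. The function names are hypothetical, and the false detection rate follows the definition given with Figure 8.

```python
def detection_rate(detected_signs, test_images):
    """Percentage of test images in which the speed-limit sign was detected."""
    return 100.0 * detected_signs / test_images

def false_detection_rate(false_areas, detected_signs):
    """Percentage of non-sign detections relative to detected signs."""
    return 100.0 * false_areas / detected_signs

# Counts taken from the text: 191 test images, 12 misses, no false areas.
test_images = 191
missed = 12
detected = test_images - missed
rate = detection_rate(detected, test_images)
false_rate = false_detection_rate(0, detected)
```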

3. Recognition of Speed Limits on Speed-Limit Signs

The speed limits shown on signs on ordinary roads in Japan are mostly 30 km/h, 40 km/h, and 50 km/h. This section describes the method of recognizing the numbers on the speed-limit signs that were extracted using the method in Section 2.

The methods for identifying numbers and letters include a statistical approach and a parsing approach. The statistical approach evaluates which class the observed pattern most resembles based on the statistical quantities (average, variance, etc.) of feature quantities from a large quantity of data. The parsing approach considers the pattern of each class to be generated in accordance with rules, and identifies the class of the observed pattern by judging which of the class rules were used to generate it. Recently, the use of neural networks as a method of machine learning based on statistical processing has made it relatively simple to configure a classifier with good classifying performance based on learning data [38,39]. As a result, this method is frequently used for character recognition, voice recognition, and other pattern recognition tasks. It is said that a neural network makes it possible to acquire a non-linear identification boundary through learning, and delivers pattern recognition capabilities that are superior to previous technologies. Therefore, for the method in this study, a neural network was used to learn the probabilistic relationship between the number feature vectors and classes based on learning data, and to judge which class a target belonged to based on the unknown feature vectors acquired by measurement.

In this study, a 3-layer neural network was created, with the input layer, intermediate layer, and output layer each composed of four neurons.
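As a rough illustration of such a network, the sketch below runs a forward pass through a 4-4-4 network. The activation functions (sigmoid in the intermediate layer, softmax at the output), the random untrained weights, the input values, and the mapping of the four outputs to the numbers 0, 3, 4, and 5 are all assumptions; the text does not specify them.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def softmax(zs):
    """Convert raw scores to probabilities that sum to 1."""
    m = max(zs)
    exps = [math.exp(z - m) for z in zs]
    total = sum(exps)
    return [e / total for e in exps]

def dense(inputs, weights, biases):
    """One fully connected layer: weights is a list of rows, one per neuron."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def random_params(n_in=4, n_hidden=4, n_out=4, seed=0):
    """Hypothetical untrained weights, used only to make the sketch runnable."""
    rng = random.Random(seed)

    def matrix(rows, cols):
        return [[rng.uniform(-1.0, 1.0) for _ in range(cols)]
                for _ in range(rows)]

    return {"W1": matrix(n_hidden, n_in), "b1": [0.0] * n_hidden,
            "W2": matrix(n_out, n_hidden), "b2": [0.0] * n_out}

def forward(x, params):
    """Input layer -> intermediate layer (sigmoid) -> output layer (softmax)."""
    hidden = [sigmoid(z) for z in dense(x, params["W1"], params["b1"])]
    return softmax(dense(hidden, params["W2"], params["b2"]))

CLASSES = [0, 3, 4, 5]  # assumed meaning of the four output neurons
params = random_params()
probs = forward([0.2, 0.5, 0.1, 0.9], params)
predicted = CLASSES[probs.index(max(probs))]
```

With trained weights, the output with the highest probability would be taken as the recognized number; here the weights are random, so the prediction itself carries no meaning.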

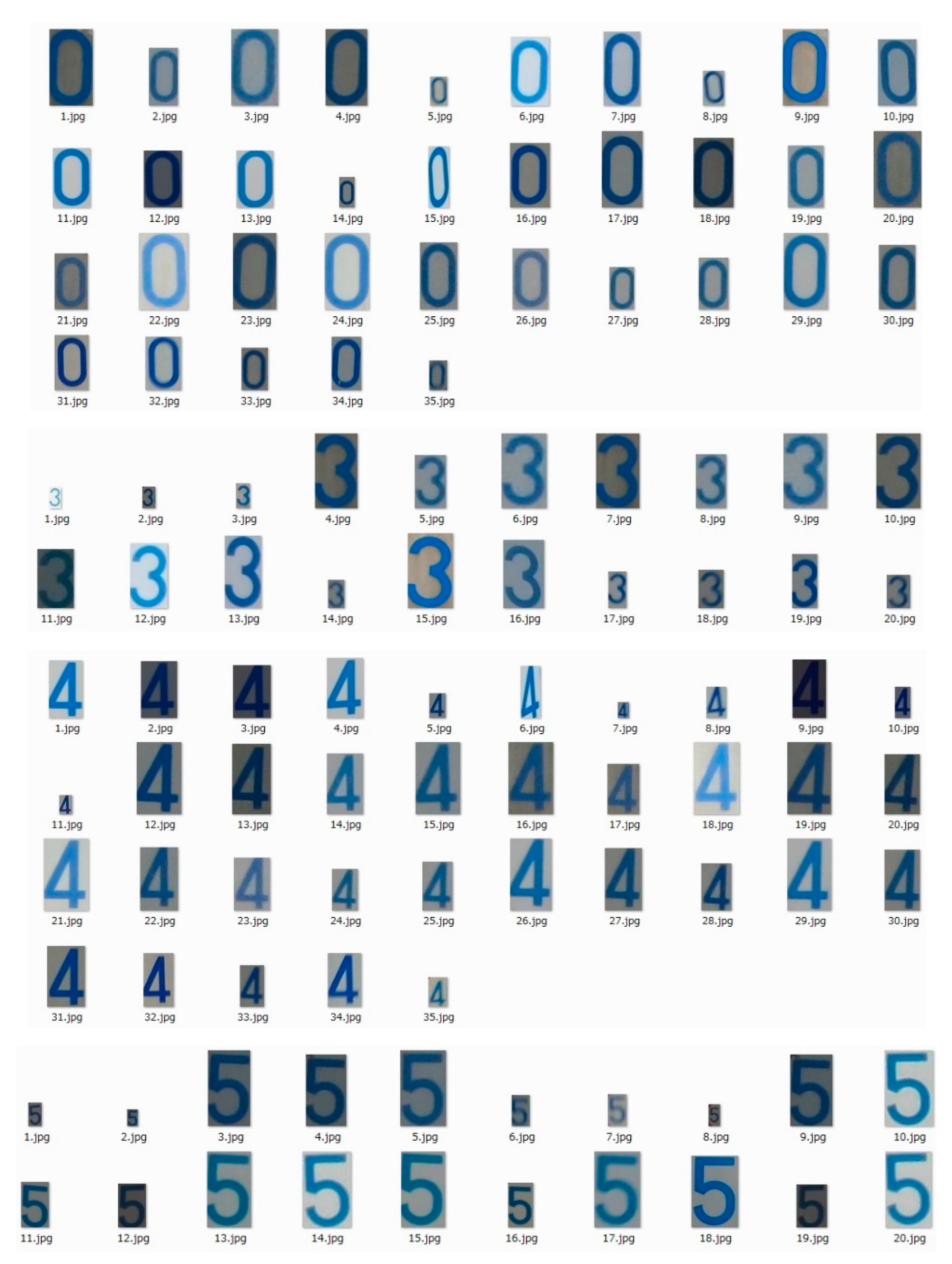

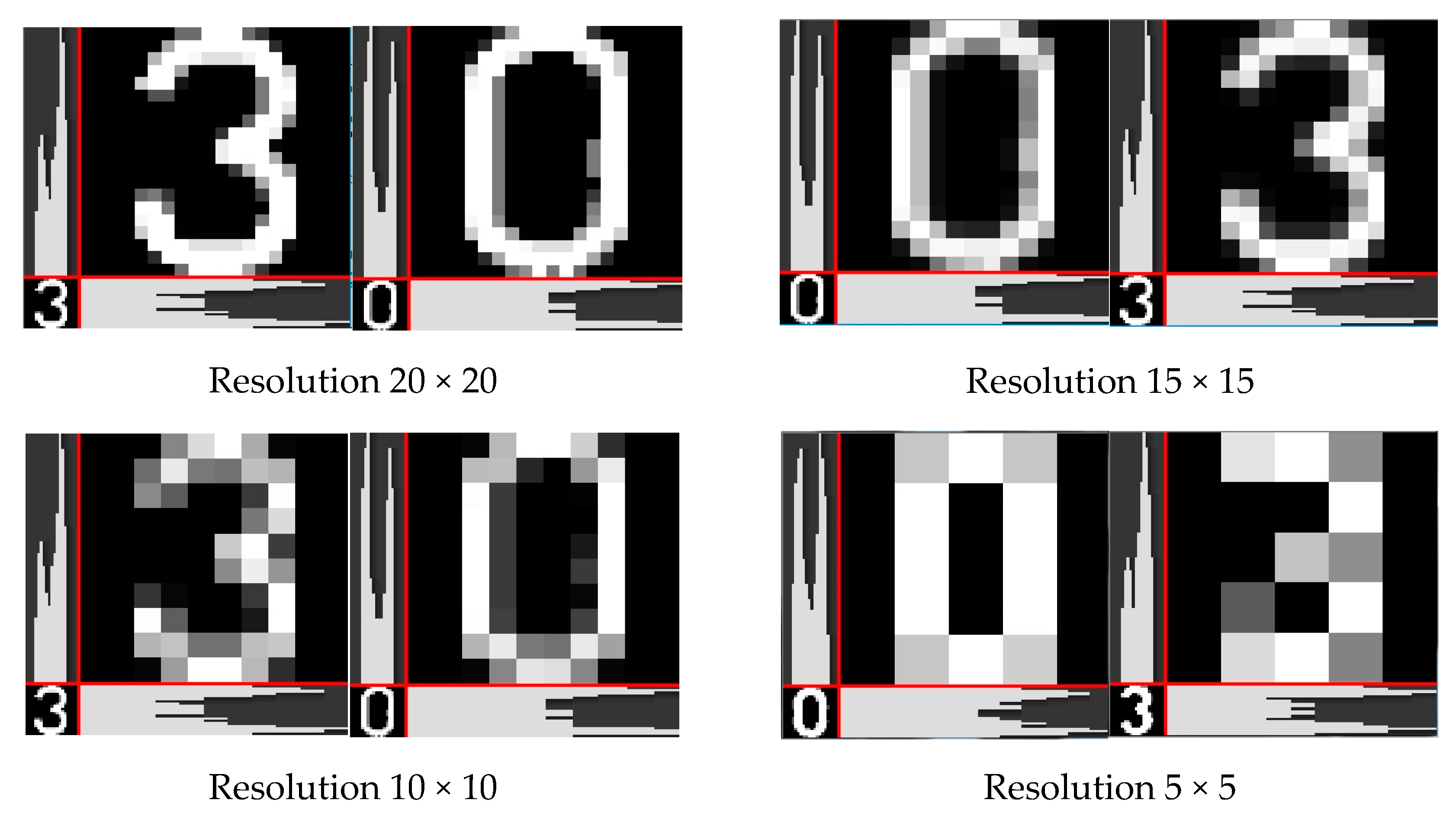

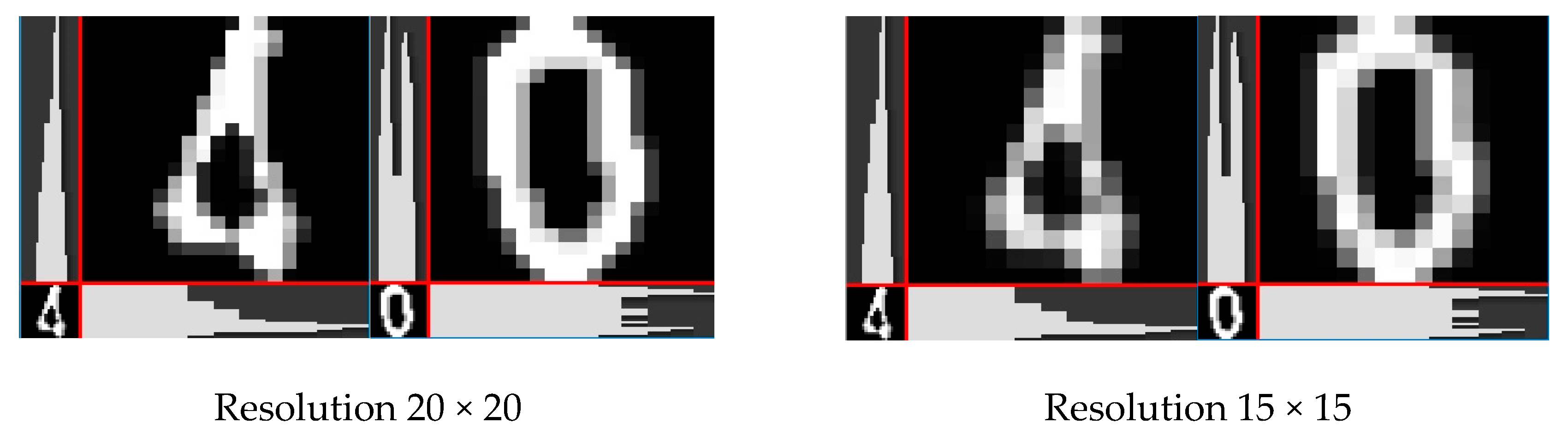

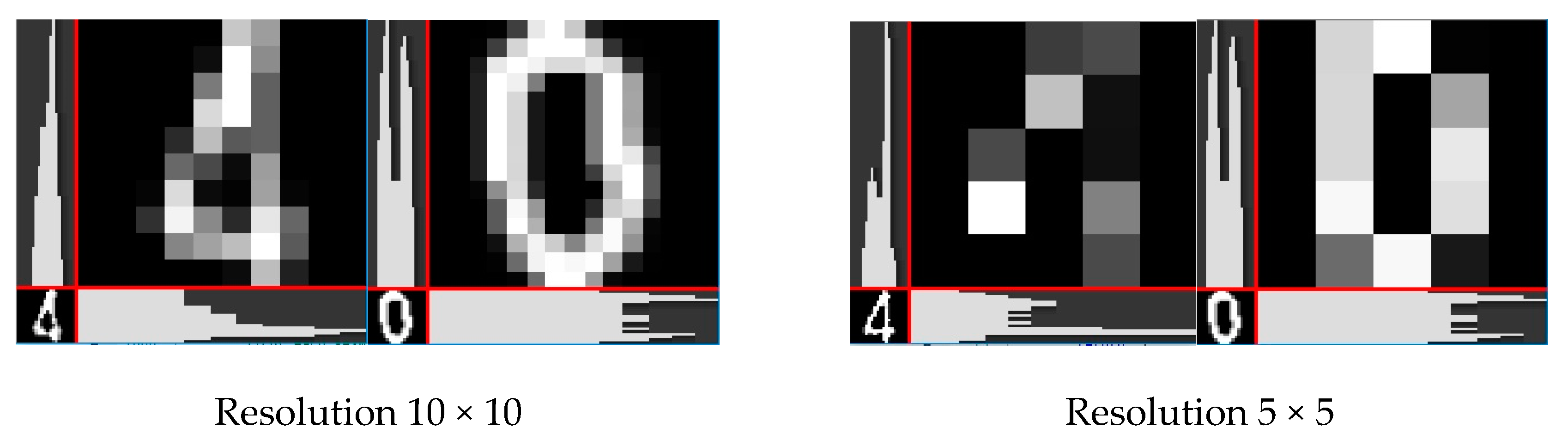

The neural network learning data was prepared as follows. Individual numbers were extracted from images of speed-limit signs captured under a variety of conditions as shown in Figure 10, and were converted to grayscale images. The images were then resized to create images at four resolutions: 5 × 5, 10 × 10, 15 × 15, and 20 × 20. In total, 35 images of the number 0, 20 images of the number 3, 35 images of the number 4, and 20 images of the number 5 were prepared.

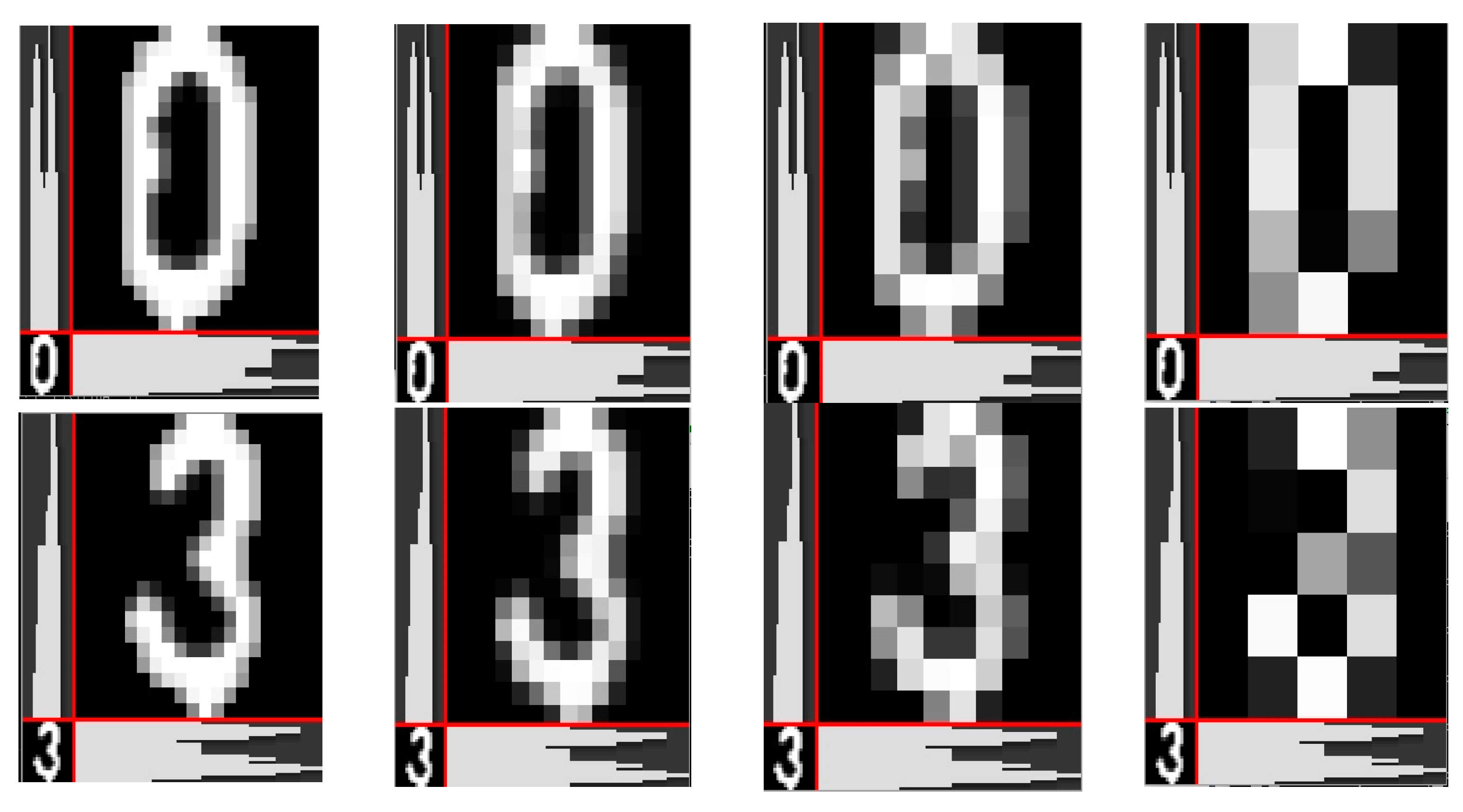

For each image, the following three feature quantities were calculated to create the feature quantity file: brightness, horizontal projection, and vertical projection. Examples of feature quantities for each number are shown in Figure 11.
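One plausible reading of these three feature quantities is sketched below: brightness as the normalized pixel values, horizontal projection as the mean of each row, and vertical projection as the mean of each column. These definitions and the function name `feature_vector` are assumptions, since the text does not define the features precisely.

```python
def feature_vector(gray):
    """Concatenate brightness, horizontal and vertical projections.

    gray is a list of rows of 0..255 integers. All values are scaled
    to 0..1. The exact feature definitions are assumed for illustration.
    """
    height, width = len(gray), len(gray[0])
    brightness = [p / 255.0 for row in gray for p in row]
    horizontal = [sum(row) / (255.0 * width) for row in gray]
    vertical = [sum(gray[y][x] for y in range(height)) / (255.0 * height)
                for x in range(width)]
    return brightness + horizontal + vertical

# A 5 x 5 image containing a single bright vertical bar in the middle column.
bar = [[255 if x == 2 else 0 for x in range(5)] for _ in range(5)]
features = feature_vector(bar)
horizontal_part = features[25:30]
vertical_part = features[30:35]
```

For a 5 × 5 image this gives 25 + 5 + 5 = 35 values; the vertical projection peaks at the bar's column while the horizontal projection is flat.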

Using the fact that the numbers are printed on speed-limit signs in blue, the number areas are extracted based on color information. Because the Hue, Saturation, Value (HSV) color specification system is closer to human color perception than the red, green, blue (RGB) color specification system, the image is converted from a RGB image to a HSV image, and the Hue, Saturation, and Value parameters are adjusted to extract only the blue number. As shown in

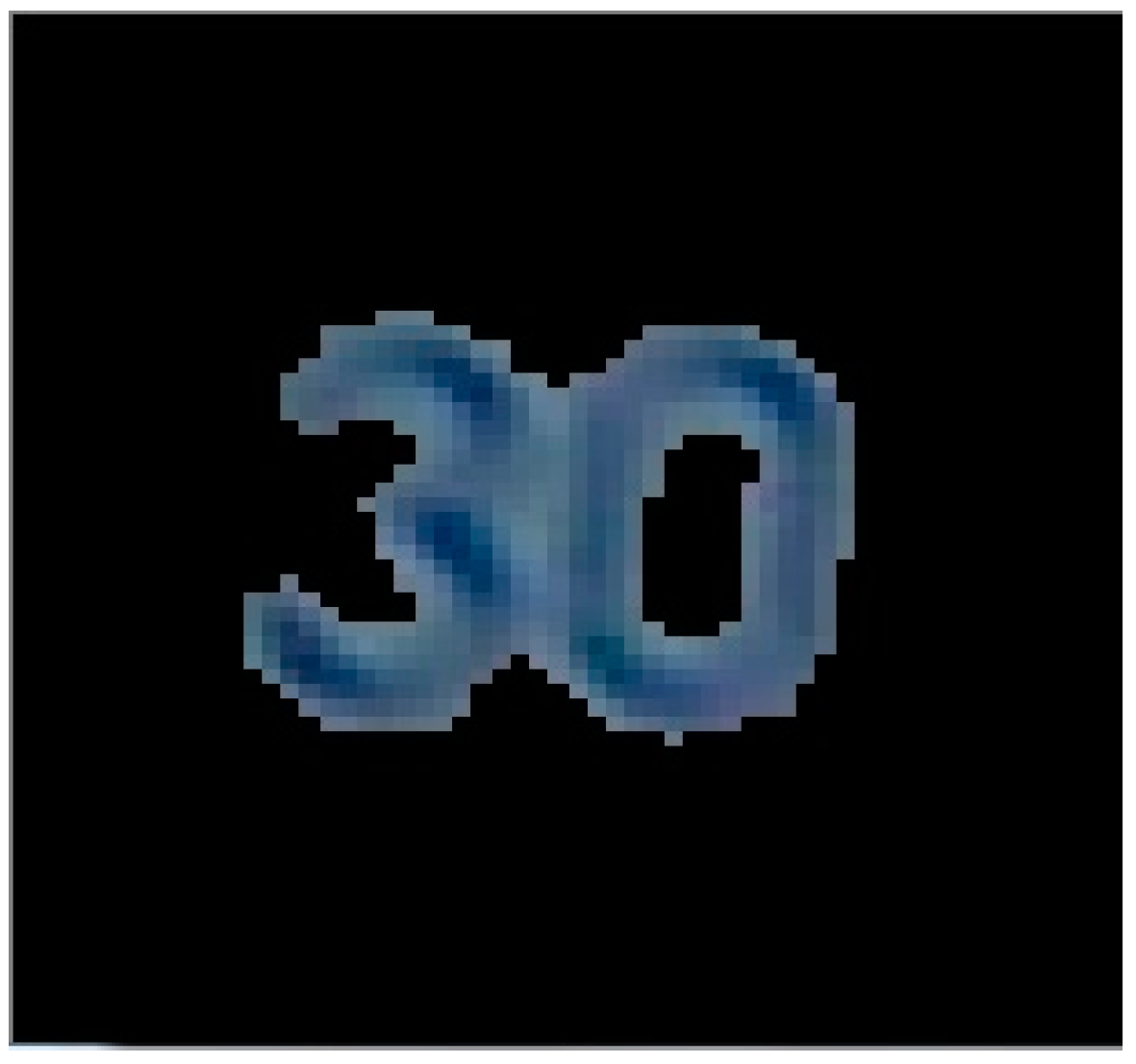

Figure 12, there are cases when the two-digit numbers cannot be separated, such as when the same parameters are used to process images acquired in different light environments, or when the image is too small. This study attempted to resolve this problem by using a variable average binarization process. If the image was acquired at a distance of 60–70 m from the sign, because the size of the image was 40 pixels or less, this problem was resolved by automatically doubling the image size before the number extraction process was performed. When the distance to the sign is approximately 70 m or more, the sign image itself is too small and it is not possible to extract the numbers.
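Assuming that "variable average binarization" refers to thresholding each pixel against the mean of its local neighborhood (often called adaptive mean thresholding), the idea can be sketched as follows. The window size and offset are illustrative assumptions.

```python
def adaptive_mean_binarize(gray, block=3, offset=5):
    """Binarize each pixel against the mean of its block x block neighborhood.

    A pixel becomes 255 when it is brighter than (local mean - offset),
    otherwise 0. Windows are clipped at the image border.
    """
    height, width = len(gray), len(gray[0])
    radius = block // 2
    out = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            window = [gray[j][i]
                      for j in range(max(0, y - radius), min(height, y + radius + 1))
                      for i in range(max(0, x - radius), min(width, x + radius + 1))]
            local_mean = sum(window) / len(window)
            out[y][x] = 255 if gray[y][x] > local_mean - offset else 0
    return out

def global_binarize(gray, threshold=128):
    """Fixed-threshold binarization, shown for comparison."""
    return [[255 if p > threshold else 0 for p in row] for row in gray]

# The same dark stroke on a dim background and on a bright background.
dim = [[100, 100, 40, 100, 100]]
bright = [[220, 220, 160, 220, 220]]
stroke = [[255, 255, 0, 255, 255]]

adaptive_dim = adaptive_mean_binarize(dim)
adaptive_bright = adaptive_mean_binarize(bright)
```

The local threshold recovers the stroke in both lighting conditions, whereas a fixed threshold of 128 turns the dim image entirely black and the bright image entirely white.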

The following is a detailed description of the processing used to extract each of the numbers on speed-limit signs.

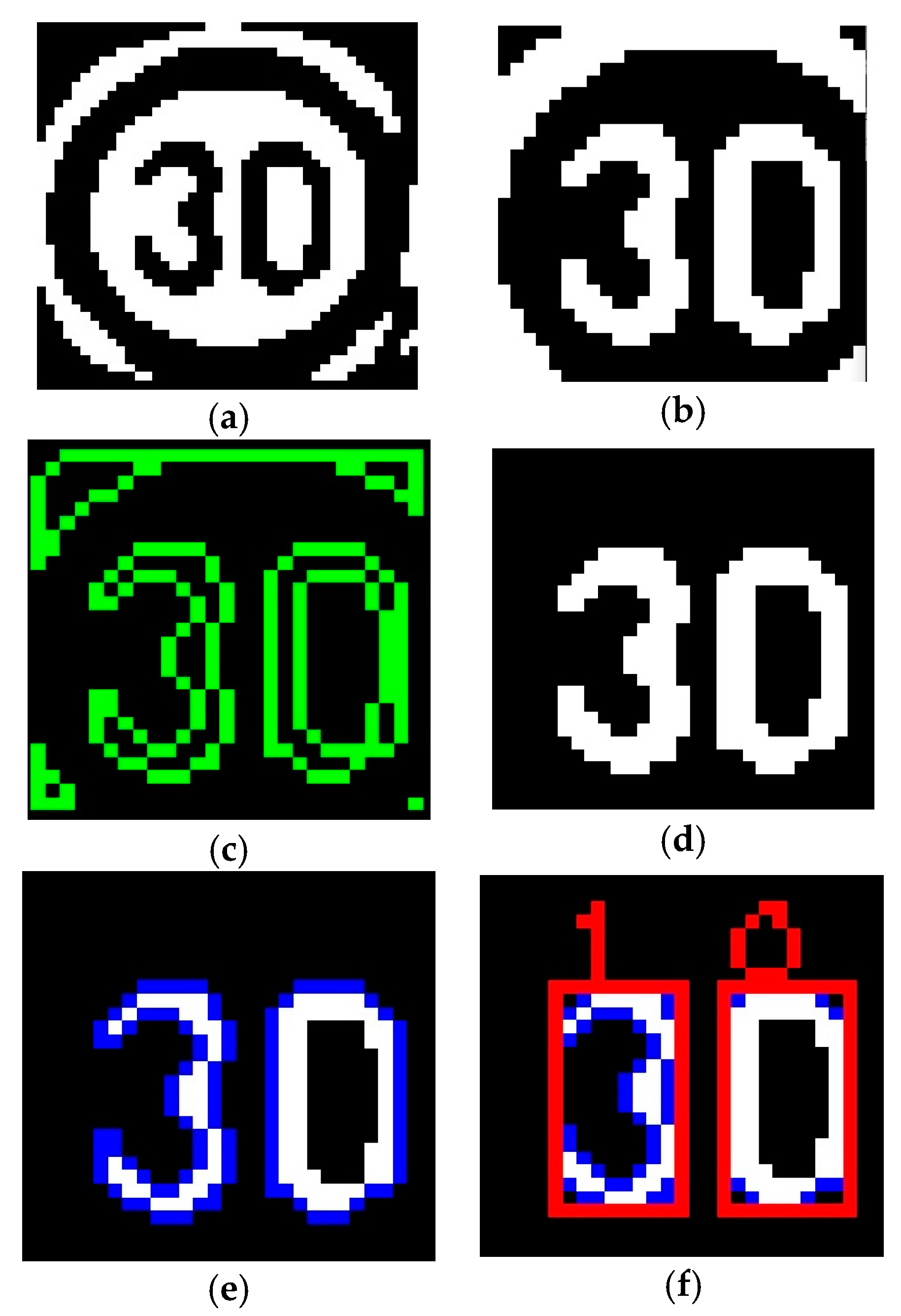

As shown in Figure 4a, a speed limit sign consists of a red circle on the periphery with the numbers printed in blue on a white background inside the circle. Rather than directly extracting the numbers from the blue area, the speed limit sign extracted from the color image is converted to a grayscale image, and that image is subjected to variable average binarization to produce the image shown in Figure 13a. The parts that are higher than a threshold for the Value inside and outside the red circle are converted to white, and all other parts are converted to black.

Because the area of the speed-limit sign detected in the image is a square that contacts the outer edges of the red circle, the numbers are located approximately in the center of the square. In consideration of this, processing is focused only on the square area at the center. Since the surrounding areas, which contain large amounts of white area (noise), are excluded in this way, the process for removing the noise can be omitted. Extracting the area near the center and performing reverse processing yields the image shown in Figure 13b.

Figure 13c shows the contours extracted from the borders between the white areas and black areas in Figure 13b. The areas inside the multiple extracted contours are calculated. For the number 0 part, there are both inner and outer contours. Therefore, with both the number 3 contour and the two number 0 contours, there are a total of three contours in the number candidate images, and they occupy a large area in the image. Acquiring the contours with the three largest areas and filling them in yields two filled-in areas. For these two areas, the centroid of each area is found and the vertical coordinate value (Y coordinate) of each is calculated. The number 3 and number 0 on the speed-limit sign are located at approximately the same vertical position. Therefore, when the Y coordinates of the filled-in areas described above are approximately the same, the number 3 and number 0 areas are used as the mask image for the image in Figure 13b. This mask image is used to perform mask processing of Figure 13b to produce the number candidate areas shown in Figure 13d.
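The centroid comparison can be sketched as below, with each filled-in area represented as a list of (x, y) pixel coordinates. The tolerance value and the function names are assumptions for illustration.

```python
def centroid(pixels):
    """Centroid (mean x, mean y) of a region given as (x, y) pixel pairs."""
    n = len(pixels)
    return (sum(x for x, _ in pixels) / n, sum(y for _, y in pixels) / n)

def same_vertical_position(region_a, region_b, tolerance=2.0):
    """True when the centroid Y coordinates differ by at most `tolerance`."""
    return abs(centroid(region_a)[1] - centroid(region_b)[1]) <= tolerance

def filled_rect(x0, y0, width, height):
    """Helper that builds a filled rectangular region for the example."""
    return [(x, y) for y in range(y0, y0 + height)
            for x in range(x0, x0 + width)]

left_digit = filled_rect(2, 5, 6, 10)    # stands in for the number 3
right_digit = filled_rect(10, 5, 6, 10)  # stands in for the number 0
noise_blob = filled_rect(4, 0, 8, 2)     # stray area near the top edge

digits_aligned = same_vertical_position(left_digit, right_digit)
noise_aligned = same_vertical_position(left_digit, noise_blob)
```

Two regions at the same height pass the check, while a region near the top edge does not and would therefore not be used in the mask.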

In addition, dilation-erosion processing was performed to eliminate any small black areas that exist inside white objects in Figure 13d, and then contour processing was performed to separate the areas again, producing Figure 13e. From this contour image (in this case, two contours), bounding rectangles with no inclination are found. Only the areas with aspect ratios close to those of the number 3 and number 0 are surrounded with the red bounding rectangles as shown in Figure 13f and are delivered to the recognition processing. The numbers 0 and 1 are assigned to indicate the delivery order to the recognition processing.
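A minimal sketch of the aspect-ratio filter follows. The accepted width-to-height range and the left-to-right delivery order are assumptions; the text only states that areas with aspect ratios close to those of the numbers are kept and numbered 0 and 1.

```python
def bounding_rect(pixels):
    """Upright bounding rectangle (x, y, width, height) of (x, y) pixels."""
    xs = [x for x, _ in pixels]
    ys = [y for _, y in pixels]
    return (min(xs), min(ys), max(xs) - min(xs) + 1, max(ys) - min(ys) + 1)

def is_digit_like(rect, min_ratio=0.4, max_ratio=0.8):
    """Keep rectangles whose width/height ratio is in the assumed digit range."""
    _, _, width, height = rect
    return min_ratio <= width / height <= max_ratio

def select_digit_rects(regions):
    """Filter regions by aspect ratio and order them left to right (assumed)."""
    rects = [bounding_rect(region) for region in regions]
    return sorted((r for r in rects if is_digit_like(r)), key=lambda r: r[0])

def filled_rect(x0, y0, width, height):
    return [(x, y) for y in range(y0, y0 + height)
            for x in range(x0, x0 + width)]

regions = [filled_rect(10, 5, 6, 10),  # right digit
           filled_rect(0, 0, 12, 3),   # wide noise area
           filled_rect(2, 5, 6, 10)]   # left digit
ordered = select_digit_rects(regions)
```

The position of each rectangle in `ordered` plays the role of the delivery numbers 0 and 1 in the text.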

The area is divided using the rectangles contacting the peripheries of the number candidate areas as shown in Figure 13f. Each of these rectangular areas is separately identified as a number area. Therefore, the coordinate information for the number areas inside the speed limit sign is not used during the processing.

The following is an explanation of the application of this method to video. When image acquisition begins 150–180 m in front of the speed limit sign, detection begins in the distance range of 100–110 m. The distance at which recognition of the sign numbers begins is 70–90 m. Sufficient recognition was confirmed at 60–70 m. In this test, the processing time was on average 1.1 s/frame. The video camera image size was 1920 × 1080. The PC specs consisted of an Intel(R) Core(TM) i7-3700 CPU @ 3.4 GHz and main memory of 8.00 GB.

At a vehicle speed of 50 km/h, a distance of 70 m is equivalent to the distance travelled in 5 s. Because the processing time for this method at the current stage is approximately 1 s, it is possible to inform the driver of the speed limit during that time.
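The timing argument can be verified with simple arithmetic. The function name is hypothetical, and the per-frame time of 1.1 s is the average reported above.

```python
import math

def seconds_to_reach(distance_m, speed_kmh):
    """Travel time in seconds to cover distance_m at a constant speed."""
    speed_ms = speed_kmh / 3.6  # convert km/h to m/s
    return distance_m / speed_ms

time_available = seconds_to_reach(70.0, 50.0)
frame_time = 1.1
# Number of complete frames that can be processed before reaching the sign.
frames_available = math.floor(time_available / frame_time)
```

At 50 km/h the vehicle needs about 5.0 s to cover 70 m, which leaves time for roughly four frames at 1.1 s per frame.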

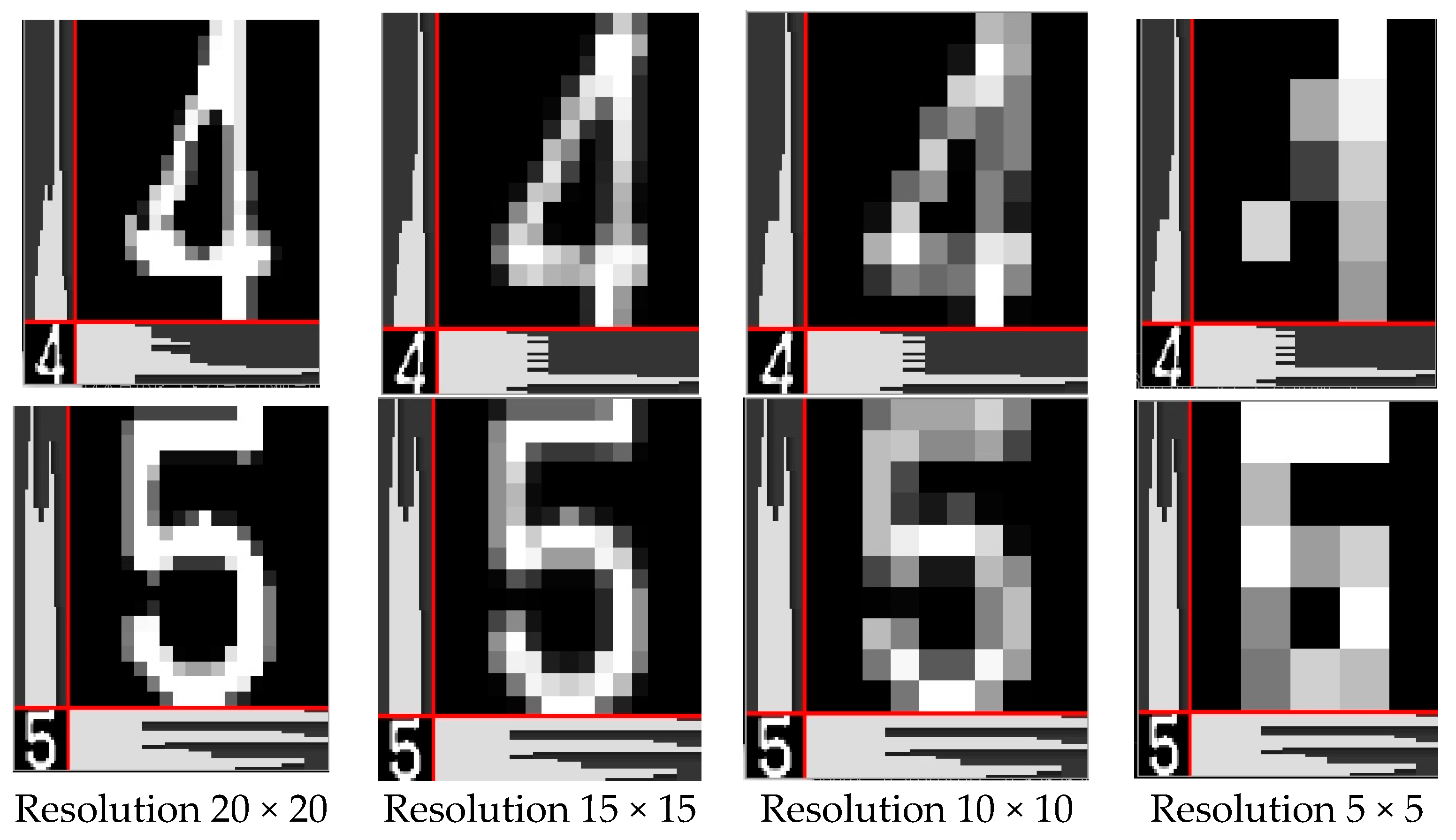

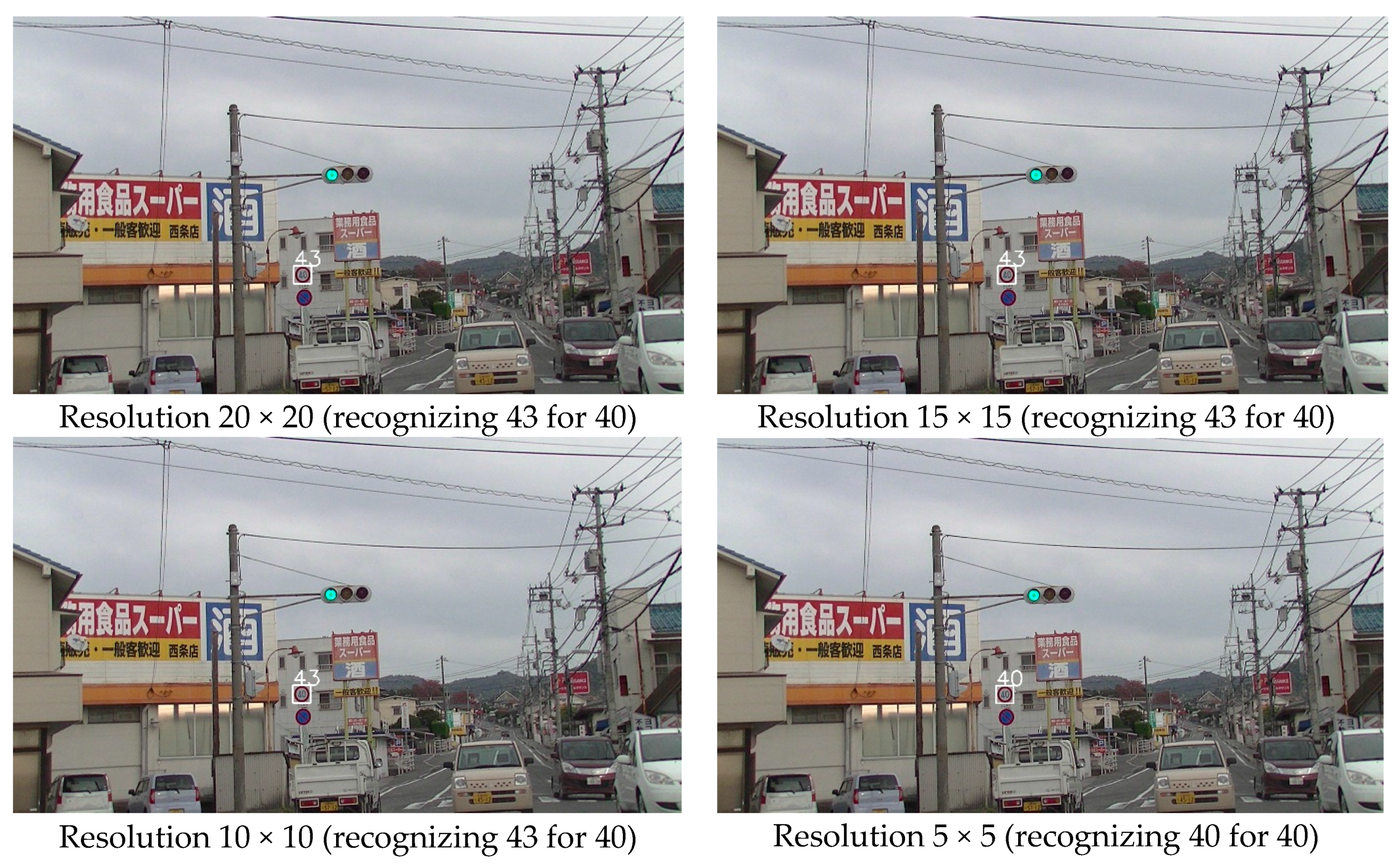

In order to identify what number each input image corresponds to, the three feature quantities of brightness, vertical projection, and horizontal projection were calculated for each separated and extracted number image. These were then input into a neural network that had completed learning. Number recognition was then performed at each of the image resolutions (5 × 5, 10 × 10, 15 × 15, 20 × 20). Images of the feature quantities at each resolution for the extracted numbers 30, 40, and 50 are shown in Figure 14, Figure 15 and Figure 16. Identification results for the 30 km/h, 40 km/h, and 50 km/h speed limits are shown in Figure 17, Figure 18 and Figure 19.

Figure 17 shows the recognition results for the 30 km/h speed limit. When the resolution was 20 × 20, the 30 km/h speed limit was falsely recognized as 00 km/h. At all other resolutions, it was recognized correctly as 30 km/h.

Figure 18 shows the recognition results for the 40 km/h speed limit. It was recognized correctly as 40 km/h only at the 5 × 5 resolution. At other resolutions, it was falsely recognized as 43 km/h.

Figure 19 shows the recognition results for the 50 km/h speed limit. It was correctly recognized as 50 km/h at the 5 × 5 resolution. It was falsely recognized as 55 km/h at the 20 × 20 resolution, and as 53 km/h at the 15 × 15 and 10 × 10 resolutions.

When the recognition conditions of 191 speed-limit signs were investigated, although there was almost no false recognition of 4 and 5 as other numbers, there were many cases of the numbers 0 and 3 each being falsely recognized as the other. Using the feature quantities in Figure 11, it was separately confirmed that when the Value of certain pixels in the number 3 is deliberately changed, it may be falsely recognized as 0. Conversely, when the same is done with the number 0 it may be falsely recognized as 3. Changing the Value of certain pixels means that depending on the threshold settings for Value, Saturation, and Hue when the sign numbers are extracted during actual processing, the grayscale Value of those pixels may vary. It is thought that this is the reason why the numbers 0 and 3 are falsely recognized as each other. When the lower limit values for Value and Saturation were set to 60 for the 38 images shown in Figure 20, it was not possible to correctly extract the blue area numbers and false recognition occurred in the resolution 5 × 5 recognition results for 2.jpg, 10.jpg, 20.jpg, 26.jpg, and 36.jpg. For the 5 images where false recognition occurred, when the Saturation lower limit was set to 55 and the Value lower limit was set to 30, false recognition occurred in only 1 image (20.jpg). This shows that a slight difference in the threshold settings for Value, Saturation, and Hue will result in a small difference in the number feature quantities, and this has an effect on neural network recognition.

The speed recognition rate results for 191 extracted speed limit signs at each of the 4 resolutions (5 × 5, 10 × 10, 15 × 15, 20 × 20) are shown in Table 1.

The methodology of this study involved identifying the four numbers 0, 3, 4, and 5 individually for speed limits 30 km/h, 40 km/h, and 50 km/h. Therefore, the recognition rate for a two-digit number was considered to be the recognition rate when both of the numbers were correctly identified, and this recognition rate was presented for each resolution. Because the numbers were recognized individually, there remained the problem of false recognition due to the effects of the extraction process for the number 0 and number 3. As a result, as shown in Figure 17, Figure 18, and Figure 19, speed limits which do not actually exist (00 km/h, 43 km/h, and 53 km/h) were indicated. When such speed limits that do not exist are present in the recognition results, they can be easily identified as false recognition. Finally, it will be necessary to improve the methodology by performing a repeated recognition process when such results occur.
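The plausibility check suggested above can be sketched as follows. The set of valid speed limits is limited to the three values considered in this study, and the function name is hypothetical; how a repeated recognition would be carried out is left open in the text and is not implemented here.

```python
VALID_SPEED_LIMITS = {30, 40, 50}  # the speed limits considered in this study

def validate_speed(digits):
    """Return the speed if the recognized digit pair is a valid limit, else None.

    digits is a pair such as (4, 3) holding the individually recognized
    numbers in left-to-right order.
    """
    value = int("".join(str(d) for d in digits))
    return value if value in VALID_SPEED_LIMITS else None

# Recognition results of the kind reported in Figures 17 to 19.
results = {(3, 0): validate_speed((3, 0)),
           (0, 0): validate_speed((0, 0)),
           (4, 3): validate_speed((4, 3)),
           (5, 3): validate_speed((5, 3))}
needs_retry = [digits for digits, speed in results.items() if speed is None]
```

A result of `None` marks a reading such as 00, 43, or 53 km/h as a false recognition, which could then trigger another recognition attempt.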

Table 1 shows that the recognition rate was highest with the 5 × 5 resolution image, and decreased at higher resolutions. This is believed to be because when the resolution is higher, the discrimination ability increases and numbers can only be recognized when the feature quantities are a nearly perfect match. Conversely, when the resolution is lower, the allowable range for each feature quantity increases, increasing the invariability of the feature quantities. This means that identification at low resolutions is not largely affected by some degree of difference in feature quantities between the evaluation image and learning images.

4. Discussion

The recognition method previously proposed by the author and others had a number of problems that needed to be resolved, including the inability to recognize the speed printed on the signs, and the need for a process to eliminate all parts other than the detected speed sign because the method uses only color information. This study proposed a method which converts the color image to a grayscale image in order to convert color differences to differences in grayscale levels, and uses the resulting pattern information during the speed limit sign detection process. During the process for recognizing the speed printed on a speed limit sign, first the RGB color space is converted to a HSV color space, and a variable average binarization process is applied to that image, allowing the individual speed numbers printed in blue to be reliably extracted. A neural network is then used to recognize the speed numbers in the extracted number areas.

Detection of the speed limit signs was performed using an AdaBoost classifier based on image LBP feature quantities. Feature quantities that can be used include Haar-like feature quantities, HOG feature quantities, and others. However, LBP feature quantities are the most suitable for speed limit sign detection because the LBP feature quantity learning time is shortest and because the number of false detection target types is smaller than with other feature quantities.

For number recognition, four image resolutions (5 × 5, 10 × 10, 15 × 15, 20 × 20) were prepared, and of these the 5 × 5 resolution showed the highest recognition rate at 97.1%. Although the 5 × 5 resolution is lower than the 20 × 20 resolution, it is thought that false detections were fewer because the feature quantities for each number have a certain invariability at lower resolutions. The percentage in which the speed limit sign was detected and the number part of the sign was correctly identified was 85%. Based on this, it will be necessary to improve the speed limit sign detection accuracy in order to improve the overall recognition rate.

The remaining issues include cases when the speed limit sign is not detected using only the feature quantities used in this study, and cases when number extraction was not successful due to signs with faded colors, signs that were backlit, or signs that were partially hidden by trees or other objects. In the future, it will be necessary to improve detection and recognition for the issues above. Because the processing time at the current stage is 1.1 s, which is too long for appropriately informing the driver, steps to shorten the processing time will also be necessary. It is possible that the use of a convolutional neural network or similar means may result in further improvement, and it will be necessary to perform comparison studies to determine the relative superiority compared to the method in this study. It is thought that improvements to these issues will be of increasing utility in driver support when automated driving is used.