Robust Parameter Design of Derivative Optimization Methods for Image Acquisition Using a Color Mixer

Abstract

1. Introduction

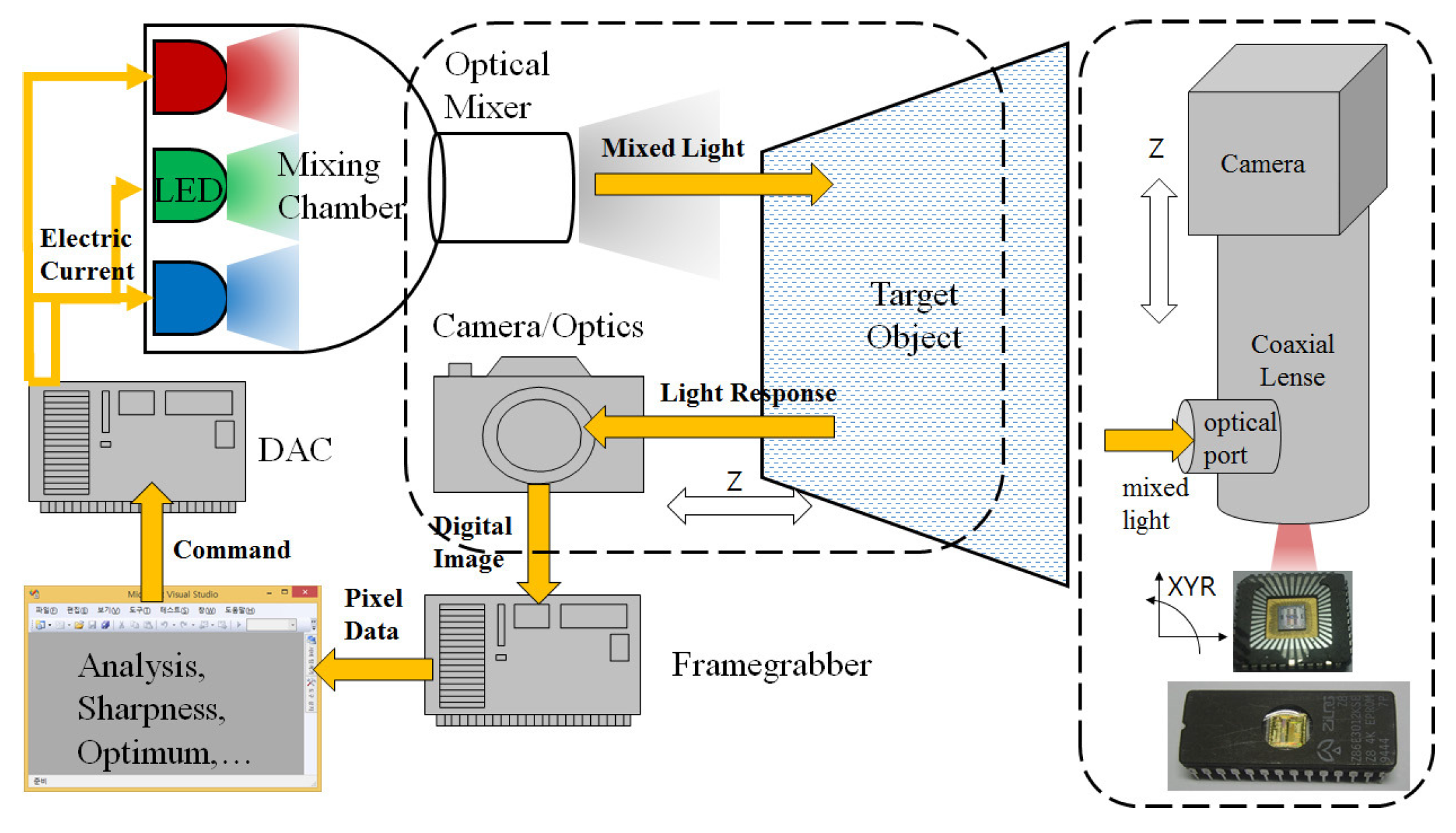

2. Derivative Optimum for Image Quality

2.1. Index for Image Quality

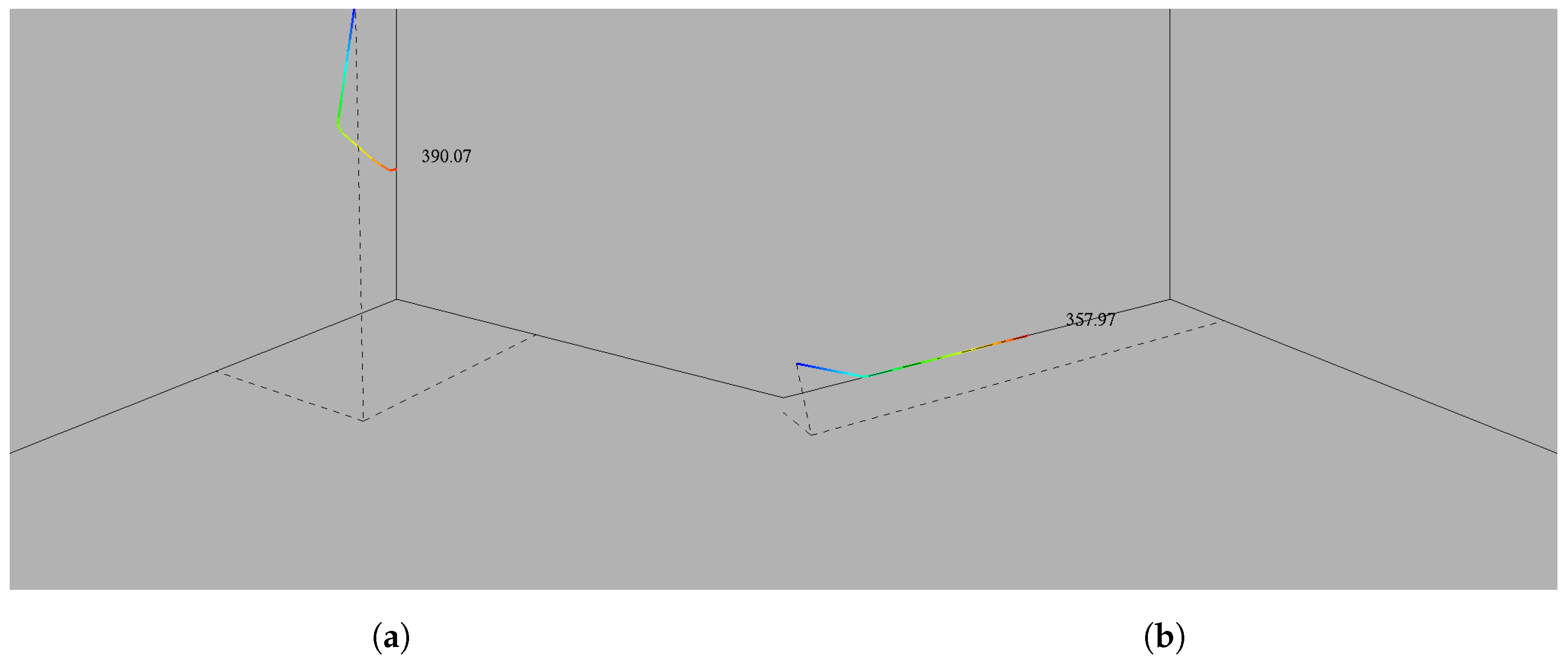

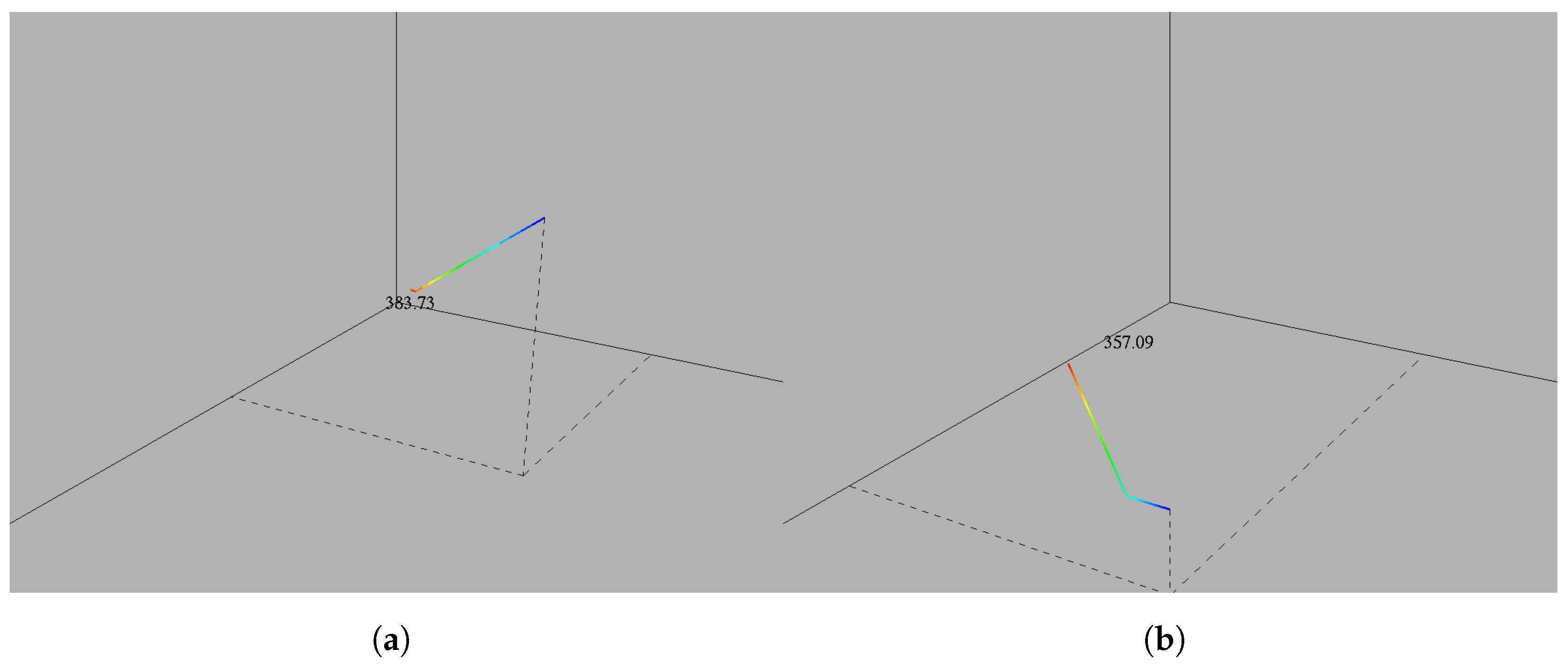

2.2. Derivative Optimum Methods

3. Robust Parameter Design

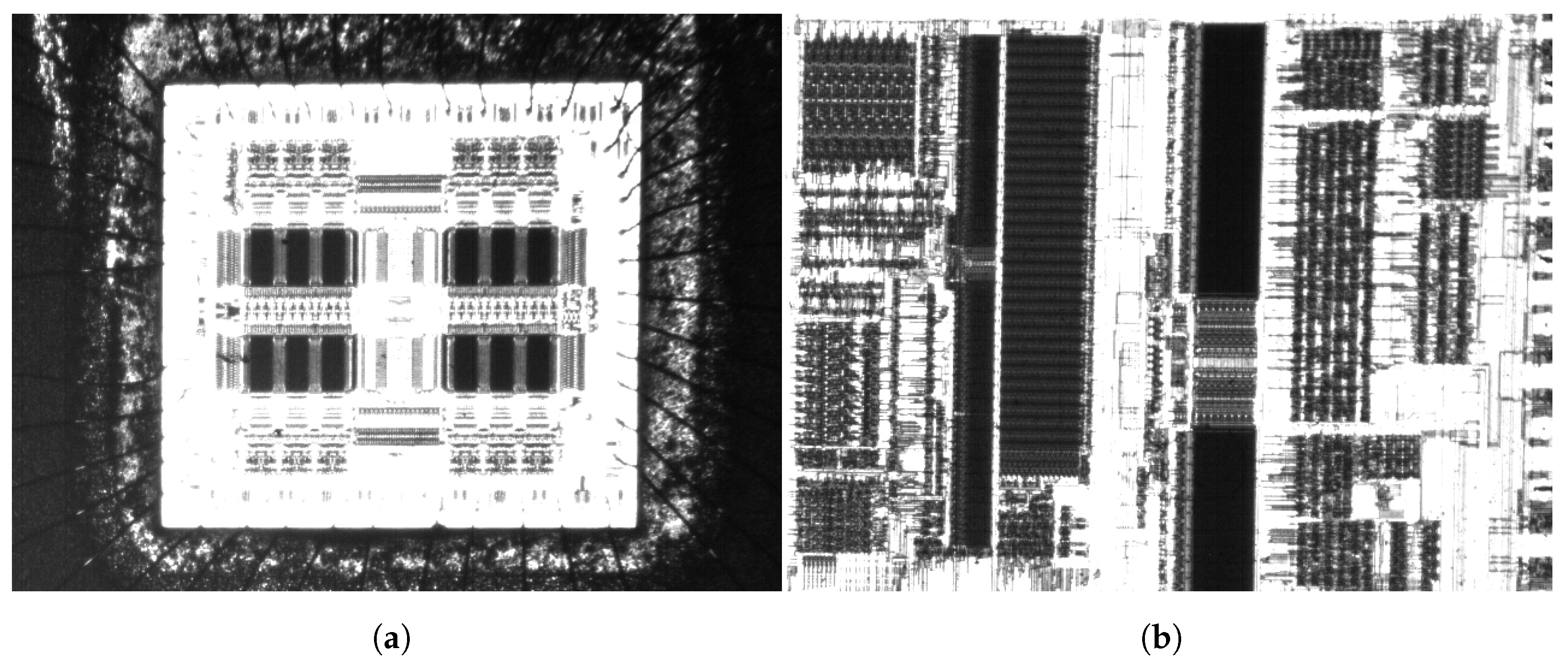

3.1. System for Experiment

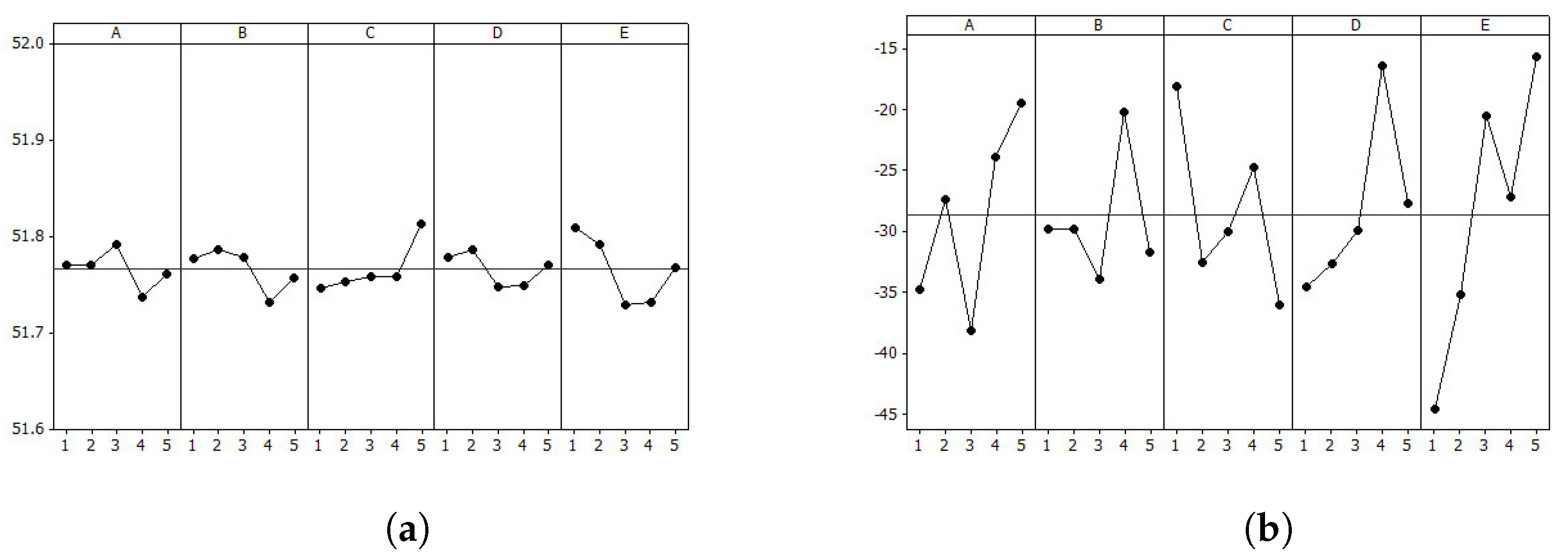

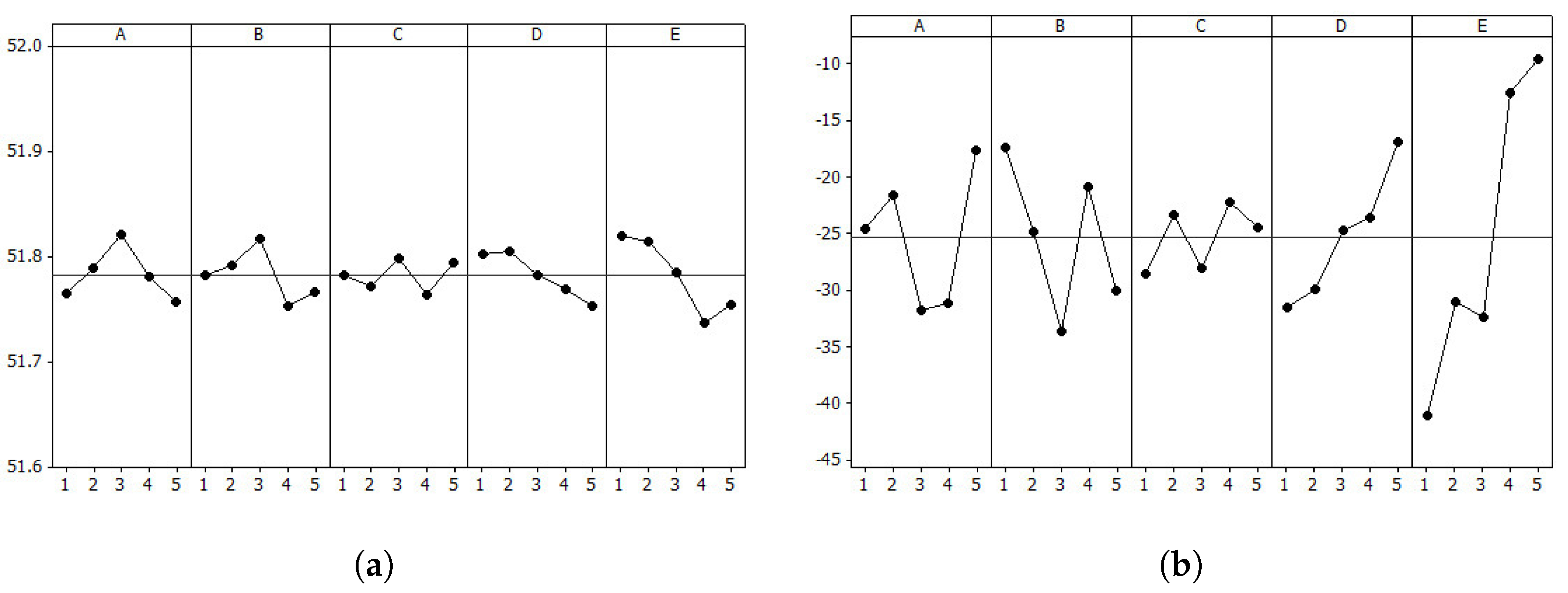

3.2. Taguchi Method

3.3. Experiment Design

4. Results

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Abbreviations

| Symbol | Description |
|---|---|
| STD | Steepest descent method |
| CJG | Conjugate gradient method |
| LED | Light emitting diode |
| RGB | Red, green and blue |
| sRGB | Standard red, green and blue |
| ESS | Equal step search |
| TAE | Trial-and-error |
| SN | Signal-to-noise |
| | Grey level of an image pixel |
| | Brightness, average grey level of an image |
| k | Current iteration |
| m | Horizontal pixel number of an image |
| N | Number of voltage inputs for a color mixer |
| n | Vertical pixel number of an image |
| u | Performance index |
| V | Vector of voltage inputs for a color mixer |
| v | Individual voltage input for an LED |
| w | Number of experiments |
| x | Horizontal coordinate of an image |
| y | Vertical coordinate of an image |
| | Convergence coefficient |
| | Terminal condition |
| | Convergence coefficient for limited range |
| | Negative sharpness, cost function |
| | Sharpness, image quality |
| | Threshold |
| | Index of update for conjugate gradient method |


| Factors | Code | Level 1 | Level 2 | Level 3 | Level 4 | Level 5 |
|---|---|---|---|---|---|---|
| : Initial | A | 0.5 | 1.0 | 1.5 | 2.0 | 2.5 |
| : Initial | B | 0.5 | 1.0 | 1.5 | 2.0 | 2.5 |
| : Initial | C | 0.5 | 1.0 | 1.5 | 2.0 | 2.5 |
| : Threshold | D | 0.2 | 0.4 | 0.6 | 0.8 | 1.0 |
| : Convergence Constant | E | 0.2 | 0.4 | 0.6 | 0.8 | 1.0 |

| Run # | Control Factors | | | | | Pattern A | | | | | Pattern B | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | A | B | C | D | E | | | | | | | | | | |
| 1 | 1 | 1 | 1 | 1 | 1 | 389.43 | 117 | 0.98 | 0.00 | 0.41 | 353.88 | 153 | 0.53 | 0.30 | 0.00 |
| 2 | 1 | 2 | 2 | 2 | 2 | 390.50 | 255 | 0.00 | 0.00 | 1.25 | - | - | - | - | - |
| 3 | 1 | 3 | 3 | 3 | 3 | - | - | - | - | - | 340.47 | 3 | 0.00 | 0.42 | 0.42 |
| 4 | 1 | 4 | 4 | 4 | 4 | 382.4 | 2 | 0.00 | 0.72 | 0.72 | - | - | - | - | - |
| 5 | 1 | 5 | 5 | 5 | 5 | 390.43 | 152 | 0.00 | 0.00 | 1.30 | 317.63 | 2 | 0.00 | 0.53 | 0.82 |
| 6 | 2 | 1 | 2 | 3 | 4 | 386.09 | 189 | 0.52 | 0.02 | 0.52 | 344.02 | 1 | 0.52 | 0.02 | 0.52 |
| 7 | 2 | 2 | 3 | 4 | 5 | 387.22 | 1 | 0.20 | 0.20 | 0.70 | 337.22 | 1 | 0.20 | 0.20 | 0.70 |
| 8 | 2 | 3 | 4 | 5 | 1 | 390.40 | 123 | 0.00 | 0.00 | 1.26 | 333.35 | 6 | 0.00 | 0.30 | 0.80 |
| 9 | 2 | 4 | 5 | 1 | 2 | 390.36 | 49 | 0.00 | 0.00 | 1.27 | 335.65 | 22 | 0.00 | 0.24 | 0.74 |
| 10 | 2 | 5 | 1 | 2 | 3 | 384.64 | 6 | 0.00 | 1.06 | 0.00 | 346.93 | 108 | 0.00 | 0.58 | 0.00 |
| 11 | 3 | 1 | 3 | 5 | 2 | 389.01 | 109 | 0.75 | 0.02 | 0.57 | - | - | - | - | - |
| 12 | 3 | 2 | 4 | 1 | 3 | 388.87 | 263 | 0.78 | 0.00 | 0.51 | 340.46 | 11 | 0.42 | 0.00 | 0.68 |
| 13 | 3 | 3 | 5 | 2 | 4 | 390.36 | 164 | 0.00 | 0.00 | 1.29 | 324.30 | 107 | 0.00 | 0.00 | 0.90 |
| 14 | 3 | 4 | 1 | 3 | 5 | 385.88 | 3 | 0.41 | 0.70 | 0.00 | 331.85 | 2 | 0.30 | 0.80 | 0.00 |
| 15 | 3 | 5 | 2 | 4 | 1 | 389.21 | 237 | 0.99 | 0.00 | 0.38 | 346.91 | 192 | 0.00 | 0.58 | 0.00 |
| 16 | 4 | 1 | 4 | 2 | 5 | 387.71 | 3 | 0.80 | 0.00 | 0.80 | 338.46 | 18 | 0.40 | 0.00 | 0.40 |
| 17 | 4 | 2 | 5 | 3 | 1 | 389.18 | 206 | 1.04 | 0.00 | 0.36 | 338.25 | 15 | 0.30 | 0.00 | 0.70 |
| 18 | 4 | 3 | 1 | 4 | 2 | - | - | - | - | - | 354.62 | 4 | 0.72 | 0.22 | 0.00 |
| 19 | 4 | 4 | 2 | 5 | 3 | 382.32 | 2 | 0.80 | 0.80 | 0.00 | 303.61 | 2 | 0.82 | 0.80 | 0.00 |
| 20 | 4 | 5 | 3 | 1 | 4 | 384.95 | 49 | 0.24 | 0.74 | 0.00 | 349.91 | 151 | 0.24 | 0.42 | 0.00 |
| 21 | 5 | 1 | 5 | 4 | 3 | 387.90 | 4 | 0.58 | 0.00 | 0.60 | 341.62 | 4 | 0.58 | 0.00 | 0.58 |
| 22 | 5 | 2 | 1 | 5 | 4 | 386.36 | 2 | 1.28 | 0.00 | 0.00 | 358.14 | 2 | 0.90 | 0.00 | 0.00 |
| 23 | 5 | 3 | 2 | 1 | 5 | 386.78 | 6 | 1.30 | 0.30 | 0.00 | 358.26 | 82 | 0.90 | 0.00 | 0.00 |
| 24 | 5 | 4 | 3 | 2 | 1 | 389.05 | 191 | 1.08 | 0.00 | 0.32 | 355.32 | 23 | 0.66 | 0.16 | 0.00 |
| 25 | 5 | 5 | 4 | 3 | 2 | 386.50 | 8 | 0.58 | 0.58 | 0.08 | 350.51 | 137 | 0.34 | 0.34 | 0.00 |
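The level assignments in columns A to E of the 25-run table follow a regular modular pattern, which is characteristic of an L25 orthogonal array with five levels per factor. The sketch below regenerates those columns and checks that every pair of columns is balanced. The function names are illustrative, and the closed-form rule is inferred from the tabulated runs; it is not a construction stated by the authors.

```python
def l25_orthogonal_array():
    """Return 25 runs as tuples of 1-based levels (A, B, C, D, E).

    The modular rule below is inferred from the tabulated runs
    (an assumption, not a formula given in the source).
    """
    runs = []
    for i in range(5):          # zero-based level index of factor A
        for j in range(5):      # zero-based level index of factor B
            runs.append((
                i + 1,                    # A
                j + 1,                    # B
                (i + j) % 5 + 1,          # C
                (2 * i + j) % 5 + 1,      # D
                (3 * i + j) % 5 + 1,      # E
            ))
    return runs


def is_pairwise_balanced(runs, levels=5):
    """True if every pair of columns contains each level pair exactly once."""
    n_cols = len(runs[0])
    for a in range(n_cols):
        for b in range(a + 1, n_cols):
            pairs = {(run[a], run[b]) for run in runs}
            if len(pairs) != levels * levels:
                return False
    return True


if __name__ == "__main__":
    array = l25_orthogonal_array()
    for run_number, levels in enumerate(array, start=1):
        print(run_number, *levels)
    print("pairwise balanced:", is_pairwise_balanced(array))
```

Because each pair of columns covers all 25 level combinations exactly once, the main effect of one factor can be averaged over the levels of the others using only 25 runs instead of the 3125 of a full factorial.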

| Run # | Control Factors | | | | | Pattern A | | | | | Pattern B | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | A | B | C | D | E | | | | | | | | | | |
| 1 | 1 | 1 | 1 | 1 | 1 | 389.28 | 59 | 0.99 | 0.00 | 0.43 | 358.49 | 143 | 0.95 | 0.00 | 0.00 |
| 2 | 1 | 2 | 2 | 2 | 2 | 390.58 | 41 | 0.00 | 0.00 | 1.25 | 343.00 | 24 | 0.48 | 0.16 | 0.43 |
| 3 | 1 | 3 | 3 | 3 | 3 | 390.43 | 139 | 0.00 | 0.00 | 1.20 | 340.21 | 3 | 0.00 | 0.42 | 0.42 |
| 4 | 1 | 4 | 4 | 4 | 4 | 382.42 | 2 | 0.00 | 0.72 | 0.72 | 354.05 | 9 | 0.80 | 0.00 | 0.00 |
| 5 | 1 | 5 | 5 | 5 | 5 | 384.66 | 2 | 0.00 | 0.57 | 0.78 | 316.46 | 2 | 0.00 | 0.50 | 0.88 |
| 6 | 2 | 1 | 2 | 3 | 4 | 386.07 | 1 | 0.52 | 0.02 | 0.52 | 343.92 | 1 | 0.52 | 0.02 | 0.52 |
| 7 | 2 | 2 | 3 | 4 | 5 | 387.16 | 1 | 0.20 | 0.20 | 0.70 | 337.14 | 1 | 0.20 | 0.20 | 0.70 |
| 8 | 2 | 3 | 4 | 5 | 1 | 390.40 | 50 | 0.00 | 0.00 | 1.30 | 358.43 | 168 | 0.98 | 0.00 | 0.00 |
| 9 | 2 | 4 | 5 | 1 | 2 | 390.54 | 28 | 0.00 | 0.00 | 1.27 | 338.89 | 29 | 0.24 | 0.16 | 0.66 |
| 10 | 2 | 5 | 1 | 2 | 3 | 388.64 | 178 | 0.74 | 0.02 | 0.71 | 350.01 | 21 | 0.48 | 0.41 | 0.00 |
| 11 | 3 | 1 | 3 | 5 | 2 | 390.42 | 24 | 0.00 | 0.00 | 1.28 | 331.95 | 2 | 0.70 | 0.00 | 0.70 |
| 12 | 3 | 2 | 4 | 1 | 3 | 390.50 | 144 | 0.00 | 0.00 | 1.25 | 352.28 | 16 | 0.58 | 0.24 | 0.08 |
| 13 | 3 | 3 | 5 | 2 | 4 | 390.37 | 17 | 0.00 | 0.00 | 1.31 | 324.42 | 15 | 0.00 | 0.00 | 0.90 |
| 14 | 3 | 4 | 1 | 3 | 5 | 388.16 | 5 | 1.48 | 0.00 | 0.00 | 347.42 | 33 | 0.00 | 0.60 | 0.00 |
| 15 | 3 | 5 | 2 | 4 | 1 | 390.39 | 284 | 0.00 | 0.00 | 1.29 | 348.20 | 26 | 0.11 | 0.63 | 0.00 |
| 16 | 4 | 1 | 4 | 2 | 5 | 387.61 | 3 | 0.80 | 0.00 | 0.80 | 338.48 | 124 | 0.40 | 0.00 | 0.40 |
| 17 | 4 | 2 | 5 | 3 | 1 | 390.43 | 273 | 0.00 | 0.00 | 1.24 | 342.81 | 38 | 0.28 | 0.28 | 0.43 |
| 18 | 4 | 3 | 1 | 4 | 2 | 390.43 | 264 | 0.00 | 0.00 | 1.25 | 354.42 | 4 | 0.72 | 0.22 | 0.00 |
| 19 | 4 | 4 | 2 | 5 | 3 | 387.57 | 7 | 0.95 | 0.60 | 0.00 | 304.10 | 2 | 0.80 | 0.80 | 0.00 |
| 20 | 4 | 5 | 3 | 1 | 4 | 387.84 | 39 | 0.00 | 0.27 | 0.91 | 349.67 | 13 | 0.24 | 0.42 | 0.00 |
| 21 | 5 | 1 | 5 | 4 | 3 | 387.92 | 5 | 0.74 | 0.08 | 0.70 | 338.57 | 4 | 0.58 | 0.00 | 0.65 |
| 22 | 5 | 2 | 1 | 5 | 4 | 384.69 | 1 | 1.70 | 0.20 | 0.00 | 358.02 | 2 | 0.90 | 0.00 | 0.00 |
| 23 | 5 | 3 | 2 | 1 | 5 | 387.46 | 8 | 0.90 | 0.30 | 0.20 | 357.96 | 8 | 0.90 | 0.00 | 0.00 |
| 24 | 5 | 4 | 3 | 2 | 1 | 389.12 | 80 | 0.93 | 0.00 | 0.42 | 358.45 | 118 | 0.95 | 0.00 | 0.00 |
| 25 | 5 | 5 | 4 | 3 | 2 | 386.35 | 8 | 0.58 | 0.58 | 0.08 | 350.36 | 9 | 0.34 | 0.34 | 0.00 |
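The abbreviation list mentions signal-to-noise (SN) ratios, which the Taguchi method uses to score a run across repeated or noisy conditions. The sketch below gives the two standard textbook forms: larger-the-better and smaller-the-better. It assumes, without confirmation from the surviving text, that the first response column under each pattern is a sharpness to be maximized and the second an iteration count to be minimized, and that the two patterns act as replicates. Whether the authors used exactly these forms is also an assumption.

```python
import math


def sn_larger_the_better(values):
    """Taguchi SN ratio (dB) for a response that should be maximized."""
    mean_inverse_square = sum(1.0 / (y * y) for y in values) / len(values)
    return -10.0 * math.log10(mean_inverse_square)


def sn_smaller_the_better(values):
    """Taguchi SN ratio (dB) for a response that should be minimized."""
    mean_square = sum(y * y for y in values) / len(values)
    return -10.0 * math.log10(mean_square)


if __name__ == "__main__":
    # Run 1 of the table above, read as (Pattern A, Pattern B) replicates.
    # Interpreting the columns this way is an assumption.
    sharpness = [389.28, 358.49]
    iterations = [59, 143]
    print("SN, sharpness (larger-the-better):", sn_larger_the_better(sharpness))
    print("SN, iterations (smaller-the-better):", sn_smaller_the_better(iterations))
```

In both forms a higher SN ratio is preferable, so the two responses can be ranked on a common footing even though one is maximized and the other minimized.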

| Control Factors | | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| Source | Parameter | DF | SS | MS | Contribution (%) | SS | MS | Contribution (%) |
| A | Initial | 4 | 19.548 | 4.887 | 14.2 | 71,168 | 17,792 | 24.5 |
| B | Initial | 4 | 18.847 | 4.712 | 13.7 | 36,910 | 9228 | 12.7 |
| C | Initial | 4 | 34.290 | 8.572 | 25.0 | 65,697 | 16,424 | 22.6 |
| D | | 4 | 17.188 | 4.297 | 12.5 | 43,406 | 10,851 | 14.9 |
| E | | 4 | 41.656 | 10.414 | 30.3 | 71,069 | 17,767 | 24.5 |
| Error | | 2 | 5.806 | 2.903 | 4.2 | 2171 | 1085 | 0.7 |
| Total | | 22 | 137.335 | | | 290,421 | | |

| Control Factors | | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| Source | Parameter | DF | SS | MS | Contribution (%) | SS | MS | Contribution (%) |
| A | Initial | 4 | 1094.64 | 273.66 | 24.0 | 4027 | 1007 | 4.5 |
| B | Initial | 4 | 916.54 | 229.13 | 20.1 | 40,860 | 10,215 | 45.6 |
| C | Initial | 4 | 1019.66 | 254.91 | 22.4 | 2807 | 702 | 3.1 |
| D | | 4 | 715.99 | 179 | 15.7 | 17,557 | 4389 | 19.6 |
| E | | 4 | 516.07 | 129.02 | 11.3 | 10,919 | 2730 | 12.2 |
| Error | | 4 | 291.90 | 72.97 | 6.4 | 13,524 | 3381 | 15.1 |
| Total | | 24 | 4554.80 | | | 89,694 | | |
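The Contribution (%) column in the ANOVA table directly above can be reproduced from the sums of squares alone: each source's share is its SS divided by the total SS, and each mean square is SS divided by its degrees of freedom. The following is a minimal sketch using the first SS column of that table; the function and variable names are illustrative and do not come from the source.

```python
def percent_contribution(sum_of_squares):
    """Percent contribution of each source: 100 * SS / total SS, to one decimal."""
    total = sum(sum_of_squares.values())
    return {
        source: round(100.0 * ss / total, 1)
        for source, ss in sum_of_squares.items()
    }


def mean_square(ss, degrees_of_freedom):
    """Mean square of a source: SS divided by its degrees of freedom."""
    return ss / degrees_of_freedom


# First SS column of the ANOVA table directly above.
ss_first_response = {
    "A": 1094.64,
    "B": 916.54,
    "C": 1019.66,
    "D": 715.99,
    "E": 516.07,
    "Error": 291.90,
}

if __name__ == "__main__":
    for source, share in percent_contribution(ss_first_response).items():
        print(f"{source}: {share}%")
    print("MS of A:", mean_square(ss_first_response["A"], 4))
```

Recomputing the column this way is a quick consistency check on a transcribed ANOVA table, since the shares must add up to roughly 100% and each must match its SS.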

| Control Factors | | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| Source | Parameter | DF | SS | MS | Contribution (%) | SS | MS | Contribution (%) |
| A | Initial | 4 | 24.802 | 6.2 | 18.5 | 30,195 | 7549 | 14.8 |
| B | Initial | 4 | 23.749 | 5.937 | 17.7 | 34,288 | 8572 | 16.9 |
| C | Initial | 4 | 8.295 | 2.074 | 6.2 | 9756 | 2439 | 4.8 |
| D | | 4 | 19.159 | 4.8 | 14.3 | 24,720 | 6180 | 12.1 |
| E | | 4 | 51.27 | 12.818 | 38.2 | 72,863 | 18,216 | 35.8 |
| Error | | 4 | 7.054 | 1.763 | 5.3 | 31,656 | 7914 | 15.6 |
| Total | | 24 | 134.329 | | | 203,478 | | |

| Control Factors | | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| Source | Parameter | DF | SS | MS | Contribution (%) | SS | MS | Contribution (%) |
| A | Initial | 4 | 637.8 | 159.5 | 14.3 | 1884.4 | 471.1 | 3.3 |
| B | Initial | 4 | 161.4 | 40.3 | 3.6 | 5903.6 | 1475.9 | 10.3 |
| C | Initial | 4 | 1491.5 | 372.9 | 33.4 | 8974.8 | 2243.7 | 15.6 |
| D | | 4 | 840.4 | 210.1 | 18.8 | 8401.6 | 2100.4 | 14.6 |
| E | | 4 | 794.9 | 198.7 | 17.8 | 29,353.6 | 7338.4 | 51.1 |
| Error | | 4 | 537.4 | 134.3 | 12.0 | 2888 | 722 | 5.0 |
| Total | | 24 | 4463.4 | | | 57,406 | | |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Kim, H.; Cho, K.; Kim, J.; Jin, K.; Kim, S. Robust Parameter Design of Derivative Optimization Methods for Image Acquisition Using a Color Mixer. J. Imaging 2017, 3, 31. https://doi.org/10.3390/jimaging3030031
