Color Consistency and Local Contrast Enhancement for a Mobile Image-Based Change Detection System

Abstract

1. Introduction

2. Methods

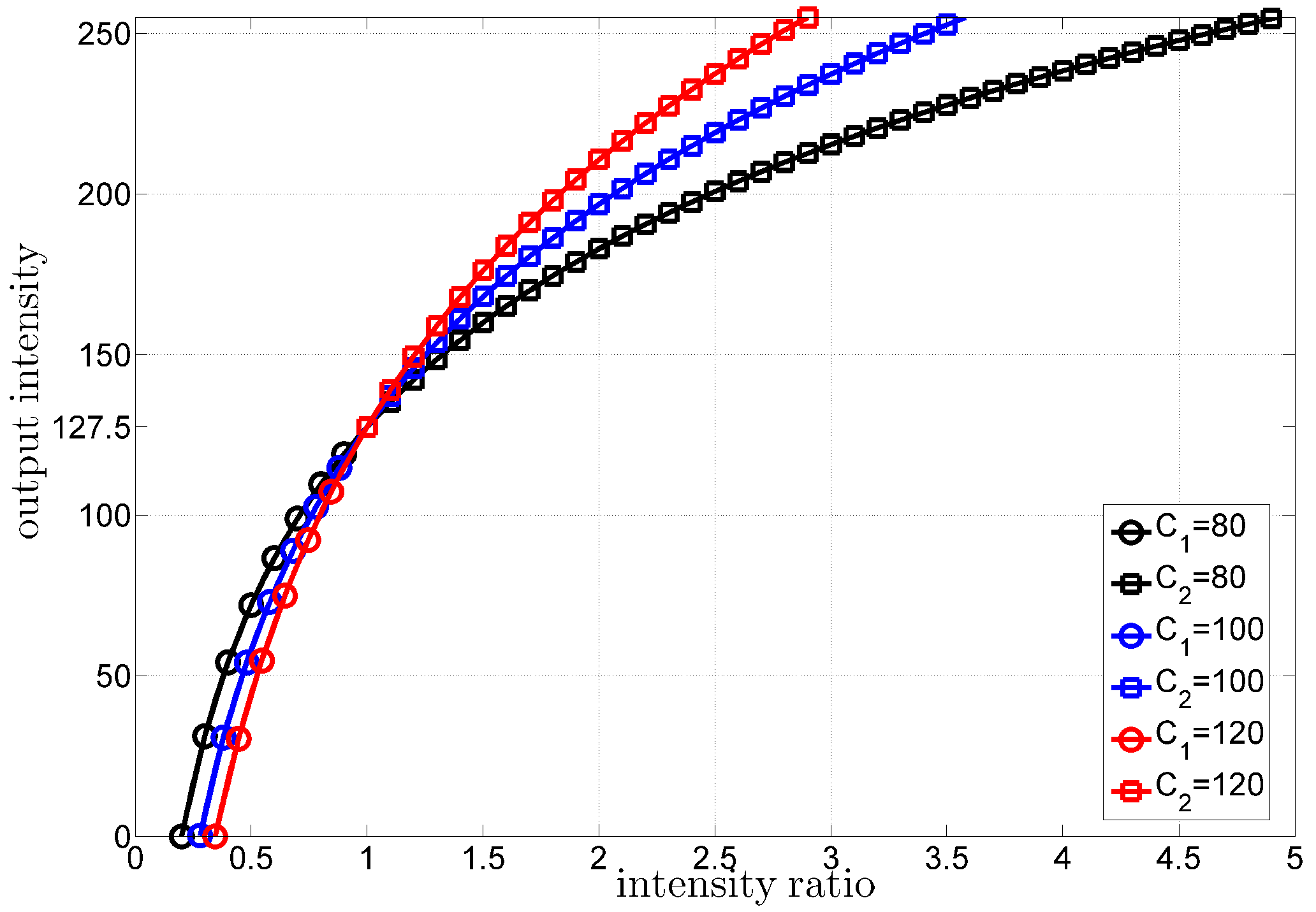

2.1. Center/Surround Retinex Using an Extended Gain/Offset Function

2.2. Gray World
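
As a point of reference for the gray-world assumption named in this heading [15], the following minimal Python sketch scales each channel so that all three channel means coincide. It is the textbook global form, not the extension evaluated in this article. The function name `gray_world` and the list-of-tuples image layout are assumptions made for illustration only.

```python
def gray_world(pixels):
    """Scale each channel so that its mean matches the overall gray mean.

    `pixels` is a list of (r, g, b) tuples holding float values
    (hypothetical layout, chosen only to keep the sketch dependency-free).
    """
    n = len(pixels)
    # Per-channel means over the whole image.
    means = [sum(p[c] for p in pixels) / n for c in range(3)]
    # Target gray level: average of the three channel means.
    gray = sum(means) / 3.0
    # Guard against an all-zero channel to avoid division by zero.
    gains = [gray / m if m > 0 else 1.0 for m in means]
    return [tuple(p[c] * gains[c] for c in range(3)) for p in pixels]


# A reddish two-pixel "image"; after balancing, all channel means are equal.
balanced = gray_world([(200.0, 100.0, 50.0), (100.0, 50.0, 25.0)])
```

Because the gains are computed once per image, this global form corrects a uniform color cast but does not by itself enhance local contrast.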

2.3. Color Processing Function Combining Retinex and Gray World

2.4. Stacked Integral Images (SII)
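
The stacked integral image technique [16] builds on the ordinary integral image (summed-area table), which returns the sum over any axis-aligned box with four lookups. The sketch below shows only that underlying building block in plain Python. The stacking of several weighted boxes described in [16] is not reproduced here, and the names `integral_image` and `box_sum` are hypothetical.

```python
def integral_image(img):
    """Return a summed-area table with one extra leading row and column of zeros."""
    h, w = len(img), len(img[0])
    sat = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            # Sum of everything above plus the running sum of the current row.
            sat[y + 1][x + 1] = sat[y][x + 1] + row_sum
    return sat


def box_sum(sat, y0, x0, y1, x1):
    """Sum of img over rows y0..y1-1 and columns x0..x1-1 using four lookups."""
    return sat[y1][x1] - sat[y0][x1] - sat[y1][x0] + sat[y0][x0]


img = [[1, 2, 3],
       [4, 5, 6],
       [7, 8, 9]]
sat = integral_image(img)
lower_right = box_sum(sat, 1, 1, 3, 3)  # 5 + 6 + 8 + 9
```

The cost of `box_sum` does not depend on the box size, which is what makes box-based approximations of large surround filters attractive for real-time use.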

3. Results

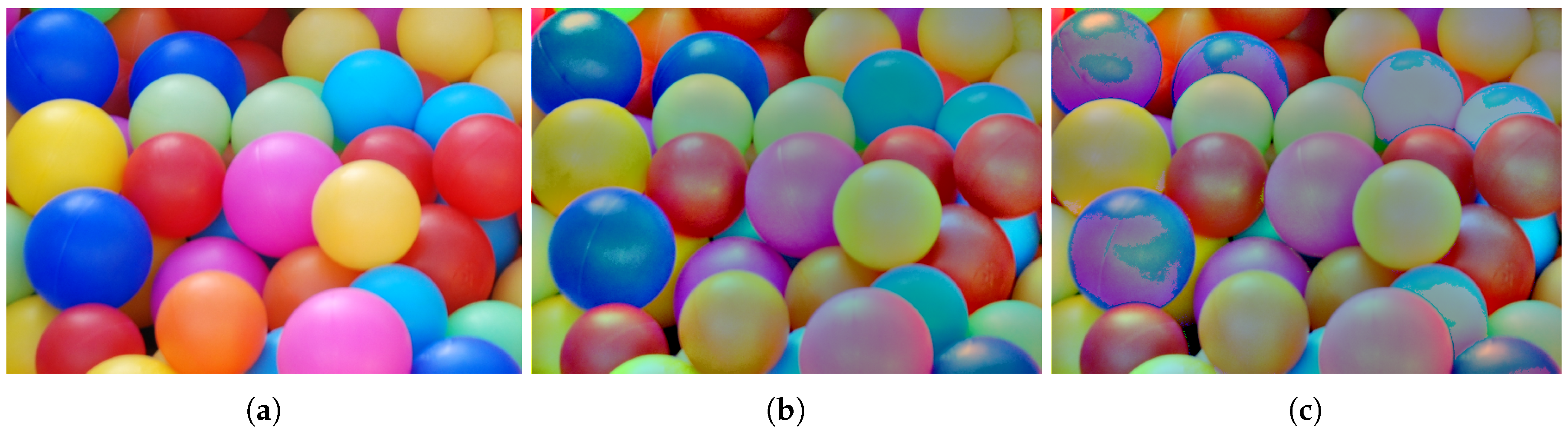

3.1. Color Rendition

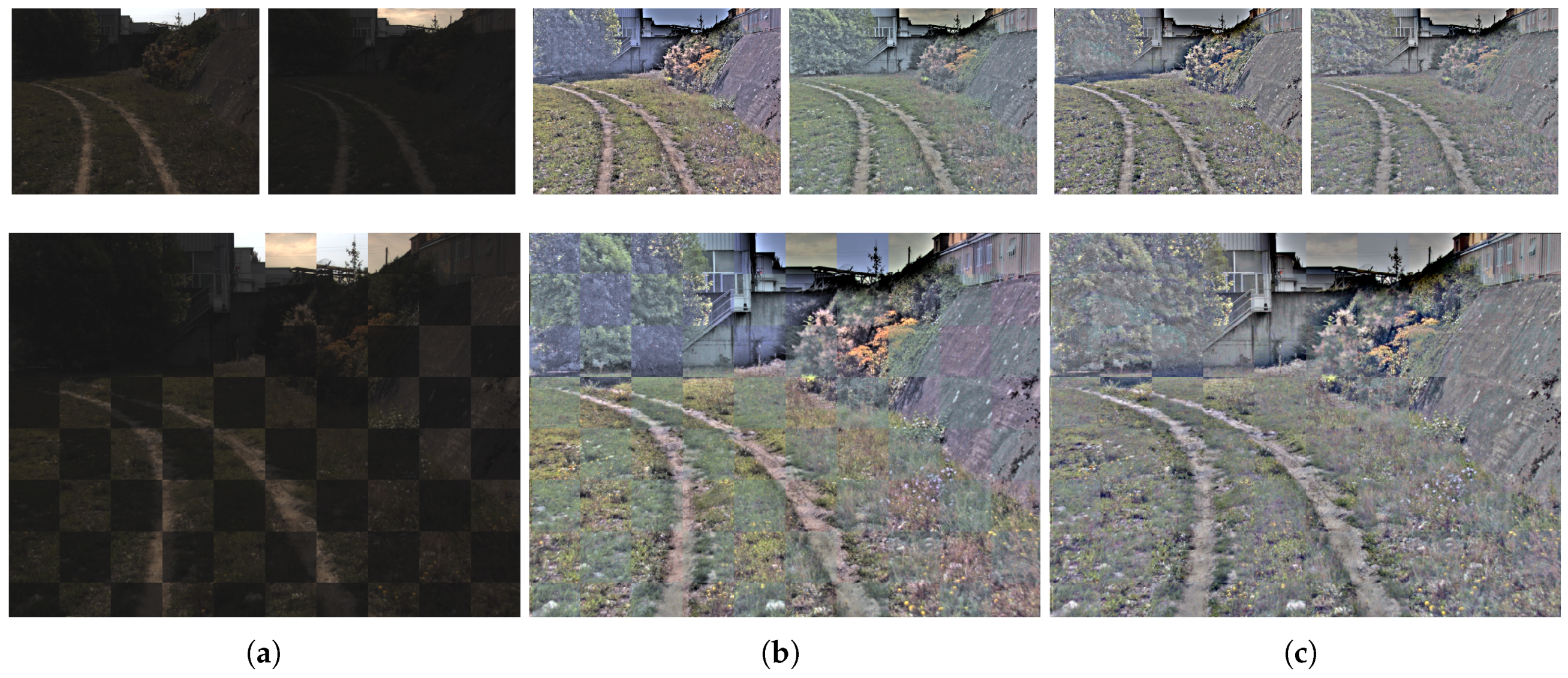

3.2. Inter-Sequence Color Consistency

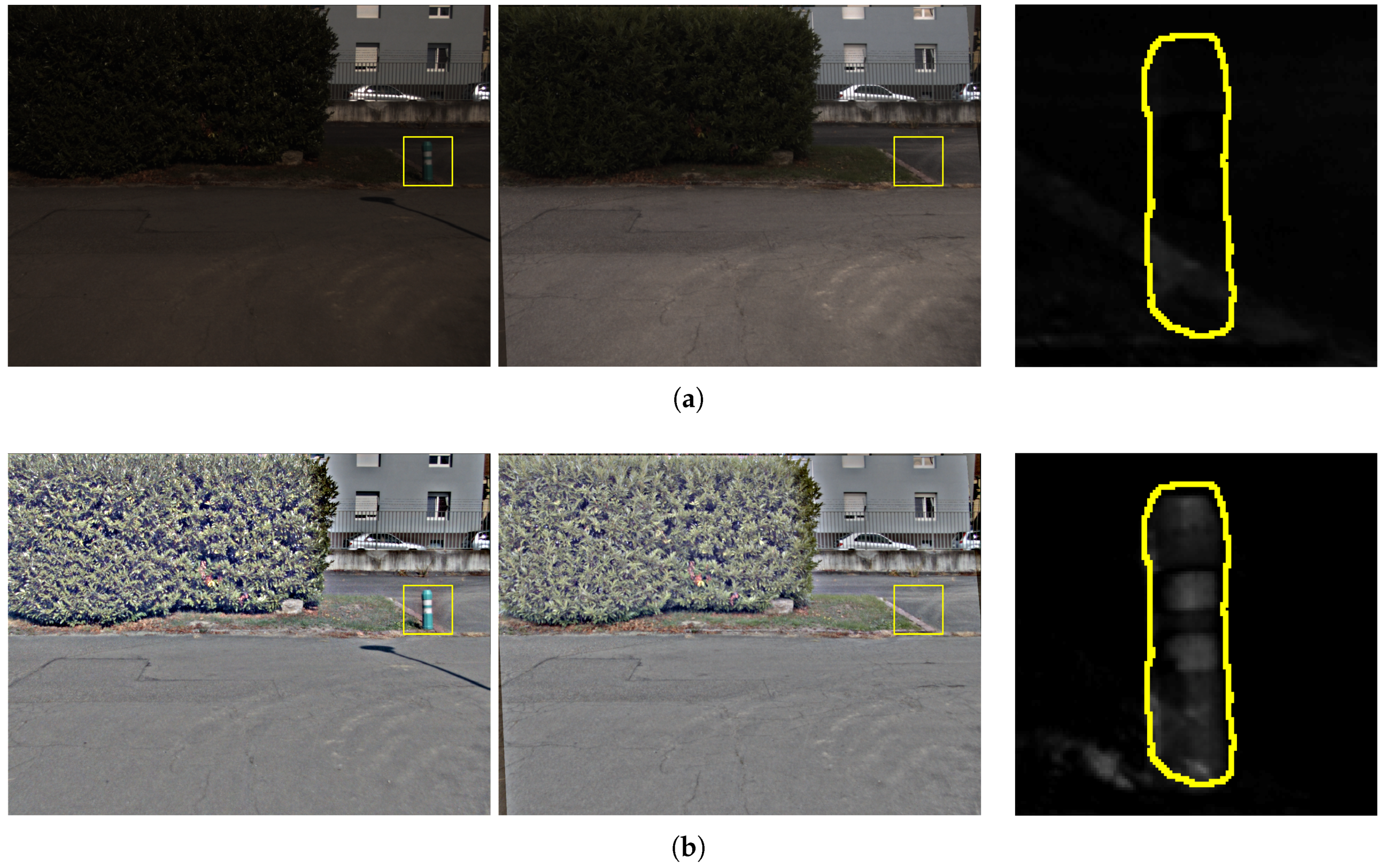

3.3. Change Detection

4. Discussion

5. Conclusions

Author Contributions

Conflicts of Interest

References

- Monnin, D.; Schneider, A.L.; Bieber, E. Detecting suspicious objects along frequently used itineraries. In Proceedings of the SPIE, Security and Defence: Electro-Optical and Infrared Systems: Technology and Applications VII, Toulouse, France, 20 September 2010; Volume 7834, pp. 1–6.

- Jobson, D.; Rahman, Z.; Woodell, G.A. Properties and performance of a center/surround Retinex. IEEE Trans. Image Process. 1997, 6, 451–462.

- Agarwal, V.; Abidi, B.R.; Koschan, A.; Abidi, M.A. An overview of color constancy algorithms. J. Pattern Recognit. Res. 2006, 1, 42–54.

- Land, E.H. The Retinex Theory of Color Vision. Sci. Am. 1977, 237, 108–129.

- Land, E.H.; McCann, J.J. Lightness and retinex theory. J. Opt. Soc. Am. 1971, 61, 1–11.

- Land, E.H. An alternative technique for the computation of the designator in the Retinex theory of color vision. Proc. Natl. Acad. Sci. USA 1986, 83, 3078–3080.

- Jobson, D.; Rahman, Z.; Woodell, G.A. A multiscale Retinex for bridging the gap between color images and the human observation of scenes. IEEE Trans. Image Process. 1997, 6, 965–976.

- Barnard, K.; Funt, B. Investigations into multi-scale Retinex. In Colour Imaging: Vision and Technology; John Wiley & Sons: Hoboken, NJ, USA, 1999; pp. 9–17.

- Pei, S.C.; Shen, C.T. High-dynamic-range parallel multi-scale retinex enhancement with spatially-adaptive prior. In Proceedings of the IEEE International Symposium on Circuits and Systems, Melbourne, Australia, 1–5 June 2014; pp. 2720–2723.

- Liu, H.; Sun, X.; Han, H.; Cao, W. Low-light video image enhancement based on multiscale Retinex-like algorithm. In Proceedings of the IEEE Chinese Control and Decision Conference, Yinchuan, China, 28–30 May 2016; pp. 3712–3715.

- McCann, J.J. Retinex at 50: Color theory and spatial algorithms, a review. J. Electron. Imaging 2017, 26, 1–14.

- Provenzi, E. Similarities and differences in the mathematical formalizations of the Retinex model and its variants. In Proceedings of the International Workshop on Computational Color Imaging, Milan, Italy, 29–31 March 2017; Springer: Berlin, Germany, 2017; pp. 55–67.

- Bertalmío, M.; Caselles, V.; Provenzi, E. Issues about retinex theory and contrast enhancement. Int. J. Comput. Vis. 2009, 83, 101–119.

- Provenzi, E.; Caselles, V. A wavelet perspective on variational perceptually-inspired color enhancement. Int. J. Comput. Vis. 2014, 106, 153–171.

- Buchsbaum, G. A spatial processor model for object colour perception. J. Frankl. Inst. 1980, 310, 1–26.

- Bhatia, A.; Snyder, W.E.; Bilbro, G. Stacked integral image. In Proceedings of the 2010 IEEE International Conference on Robotics and Automation, Anchorage, AK, USA, 3–7 May 2010; pp. 1530–1535.

- Tektonidis, M.; Monnin, D. Image enhancement and color constancy for a vehicle-mounted change detection system. In Proceedings of the SPIE, Security and Defense: Electro-Optical Remote Sensing, Edinburgh, UK, 26 September 2016; Volume 9988, pp. 1–8.

- Finlayson, G.D.; Trezzi, E. Shades of gray and colour constancy. In Proceedings of the Color and Imaging Conference, Scottsdale, AZ, USA, 9 November 2004; Society for Imaging Science and Technology: Springfield, VA, USA, 2004; pp. 37–41.

- Van De Weijer, J.; Gevers, T.; Gijsenij, A. Edge-based color constancy. IEEE Trans. Image Process. 2007, 16, 2207–2214.

- Petro, A.B.; Sbert, C.; Morel, J.M. Multiscale Retinex. Image Process. On Line 2014, 4, 71–88.

- Getreuer, P. A survey of Gaussian convolution algorithms. Image Process. On Line 2013, 3, 286–310.

- Gond, L.; Monnin, D.; Schneider, A. Optimized feature-detection for on-board vision-based surveillance. In Proceedings of the SPIE, Defence and Security: Detection and Sensing of Mines, Explosive Objects, and Obscured Targets XVII, Baltimore, MD, USA, 23 April 2012; Volume 8357, pp. 1–12.

- Funt, B.; Barnard, K.; Brockington, M.; Cardei, V. Luminance-based multi-scale Retinex. In Proceedings of the AIC Color, Kyoto, Japan, 25–30 May 1997; Volume 97, pp. 25–30.

- Tektonidis, M.; Monnin, D.; Christnacher, F. Hue-preserving local contrast enhancement and illumination compensation for outdoor color images. In Proceedings of the SPIE, Security and Defense: Electro-Optical Remote Sensing, Photonic Technologies, and Applications IX, Toulouse, France, 21 September 2015; Volume 9649, pp. 1–13.

- Yang, C.C.; Rodríguez, J.J. Efficient luminance and saturation processing techniques for color images. J. Vis. Commun. Image Represent. 1997, 8, 263–277.

- Barnard, K.; Cardei, V.; Funt, B. A comparison of computational color constancy algorithms—Part I: Methodology and experiments with synthesized data. IEEE Trans. Image Process. 2002, 11, 972–984.

- Radke, R.J.; Andra, S.; Al-Kofahi, O.; Roysam, B. Image change detection algorithms: A systematic survey. IEEE Trans. Image Process. 2005, 14, 294–307.

| A in [3] | , in [4] | in [5] | in [7] | in [11] | K in [11] |
|---|---|---|---|---|---|
| 80, 120 | 125 | 3 | | | |

| Approach | Mean RGB Angular Error | Mean rg Endpoint Error |
|---|---|---|
| Unprocessed | | |
| Retinex [2] | | |
| Gray World [15] extension | | |
| Hue-preserving Retinex [24] | | |
| New combined Retinex/Gray World | | |
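
The two column headings of the table above name error measures that are commonly defined as follows: the angular error is the angle between two RGB vectors, and the rg endpoint error is the Euclidean distance between their rg chromaticities. The Python sketch below uses these standard definitions as an assumption; the exact formulation and averaging used for the reported means are not stated here, and all function names are hypothetical.

```python
import math


def angular_error_deg(a, b):
    """Angle in degrees between two nonzero RGB vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    # Clamp to [-1, 1] so rounding errors cannot break acos.
    cos_angle = max(-1.0, min(1.0, dot / (norm_a * norm_b)))
    return math.degrees(math.acos(cos_angle))


def rg_chromaticity(rgb):
    """Intensity-normalized (r, g) coordinates of an RGB triple with nonzero sum."""
    total = sum(rgb)
    return (rgb[0] / total, rgb[1] / total)


def rg_endpoint_error(a, b):
    """Euclidean distance between the rg chromaticities of two RGB triples."""
    ra, ga = rg_chromaticity(a)
    rb, gb = rg_chromaticity(b)
    return math.hypot(ra - rb, ga - gb)


# Same surface color observed in two sequences (made-up values).
first = (120.0, 100.0, 80.0)
second = (130.0, 100.0, 70.0)
angle = angular_error_deg(first, second)
distance = rg_endpoint_error(first, second)
```

Both measures ignore overall brightness: scaling either color by a positive constant leaves the result unchanged, so they isolate chromatic differences between sequences.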

| | Changed Objects | | | | | | |
|---|---|---|---|---|---|---|---|
| Approach | Traffic Pole | Stone | Stone2 | Barrel | Fake Stone | Car | Mean |
| Unprocessed | | | | | | | |
| Retinex [2] | | | | | | | |
| Gray World [15] extension | | | | | | | |
| Hue-preserving Retinex [24] | | | | | | | |
| New combined Retinex/Gray World | 13 | | | | | | |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Tektonidis, M.; Monnin, D. Color Consistency and Local Contrast Enhancement for a Mobile Image-Based Change Detection System. J. Imaging 2017, 3, 35. https://doi.org/10.3390/jimaging3030035
