DocCreator: A New Software for Creating Synthetic Ground-Truthed Document Images

Abstract

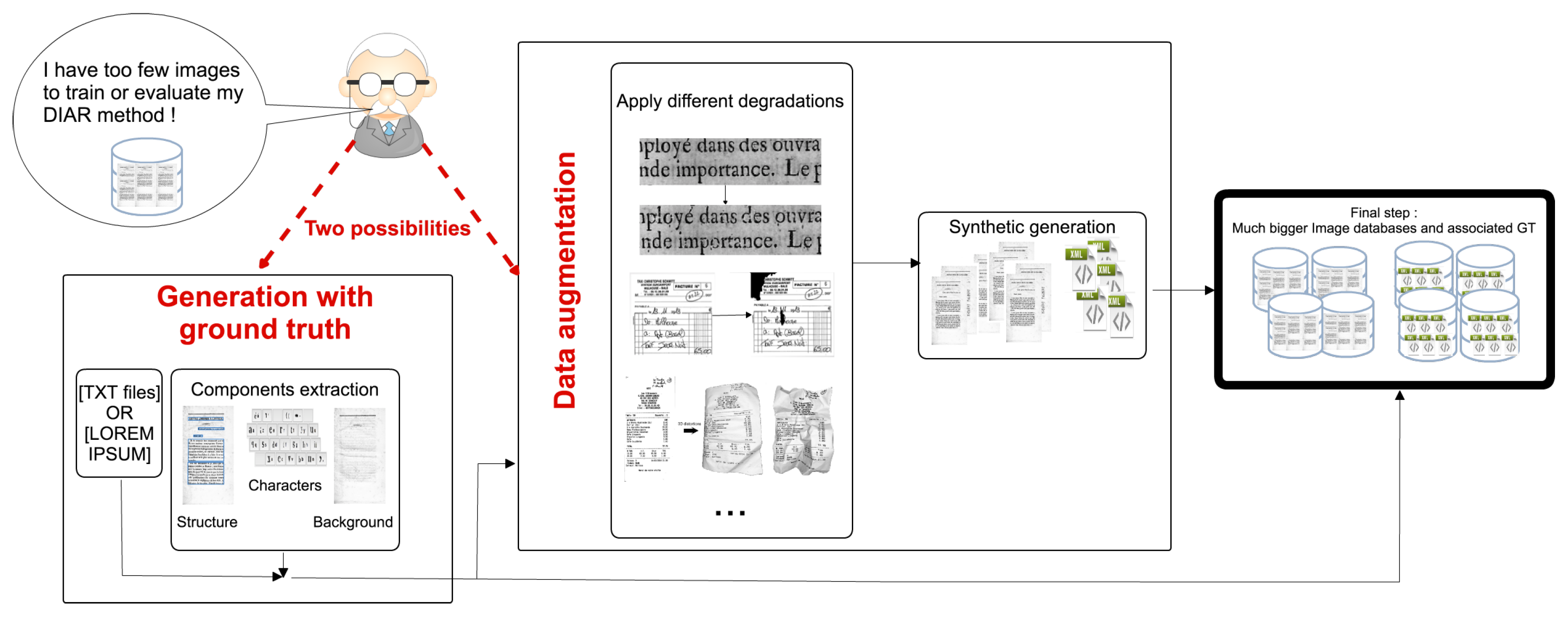

1. Introduction

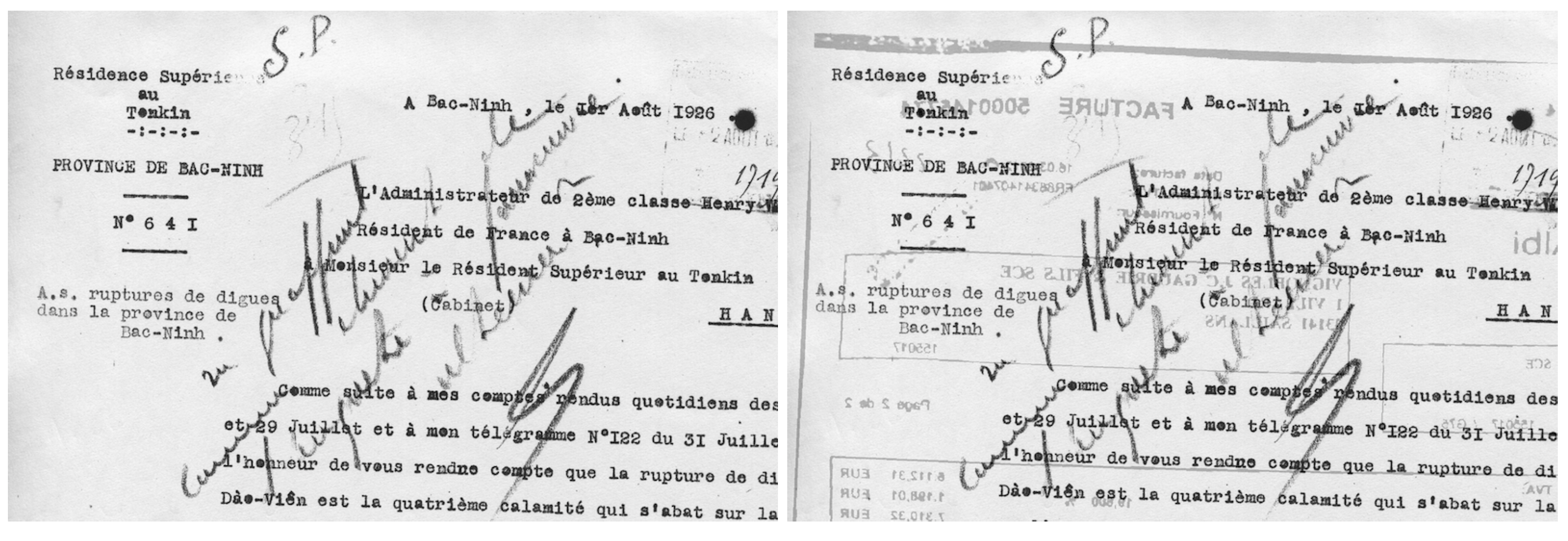

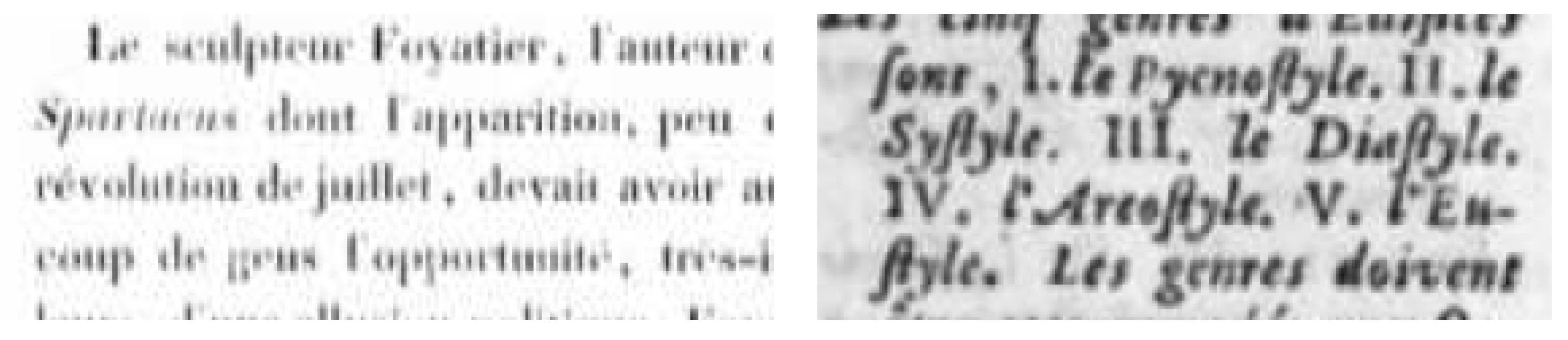

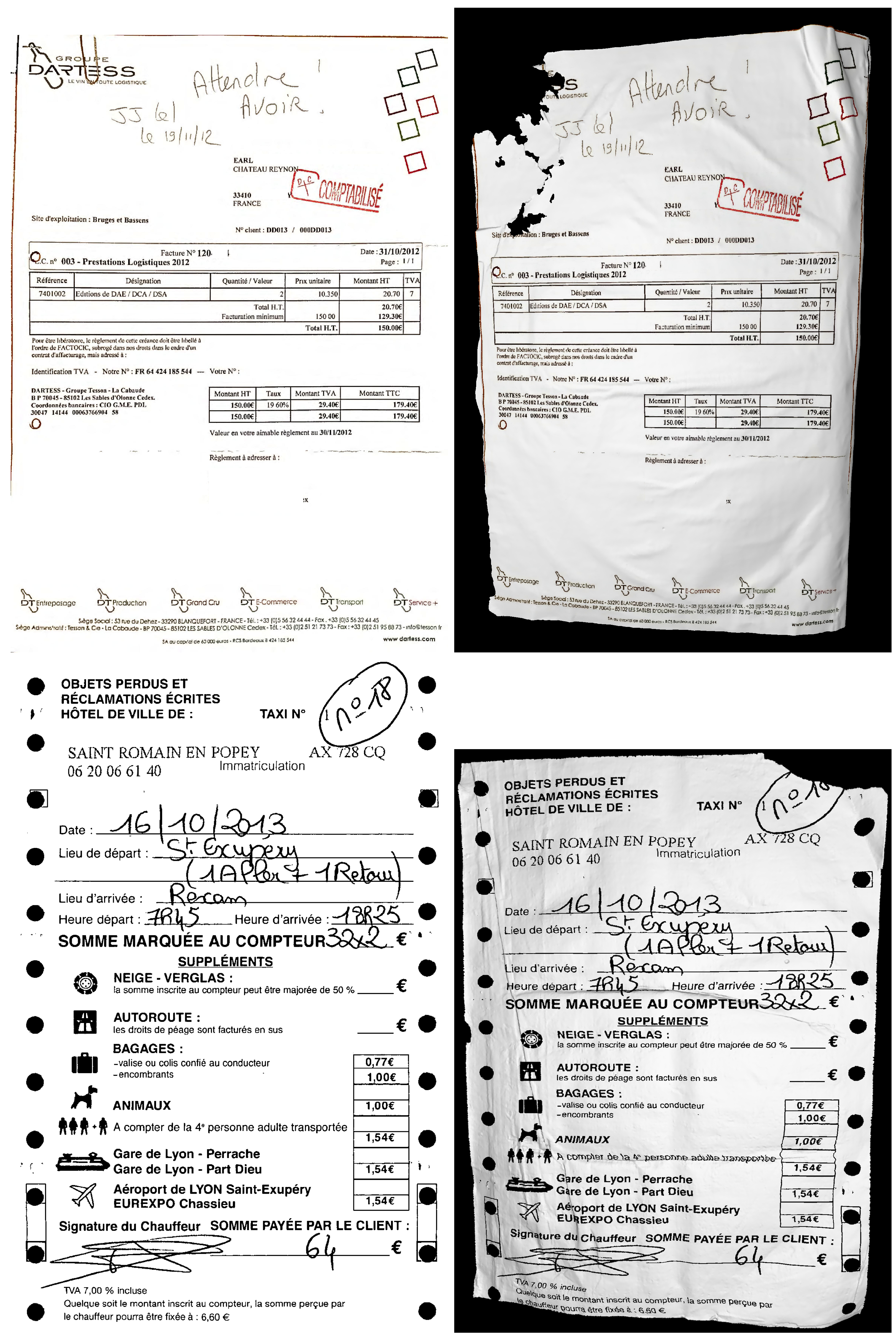

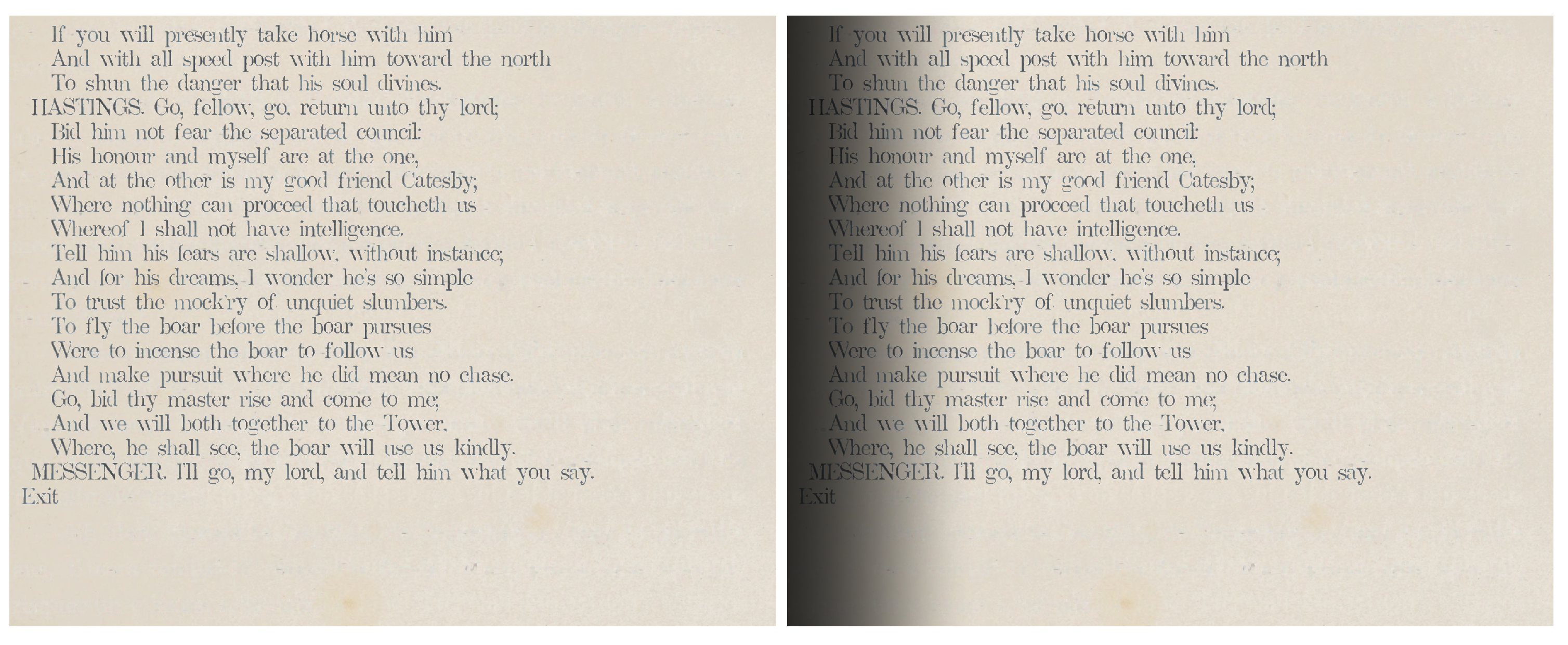

2. How to Create a Synthetic Document (with Ground Truth) That Looks Like a Real One?

3. Document Degradation Models

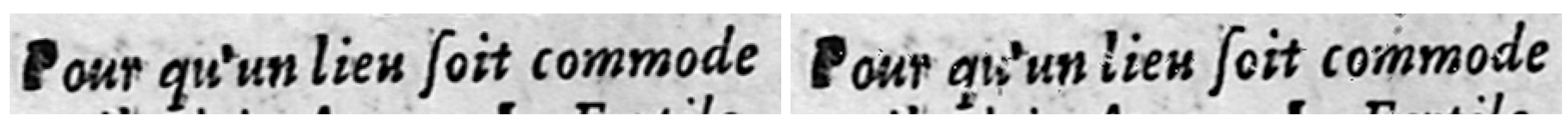

3.1. Ink Degradation

3.2. Phantom Character

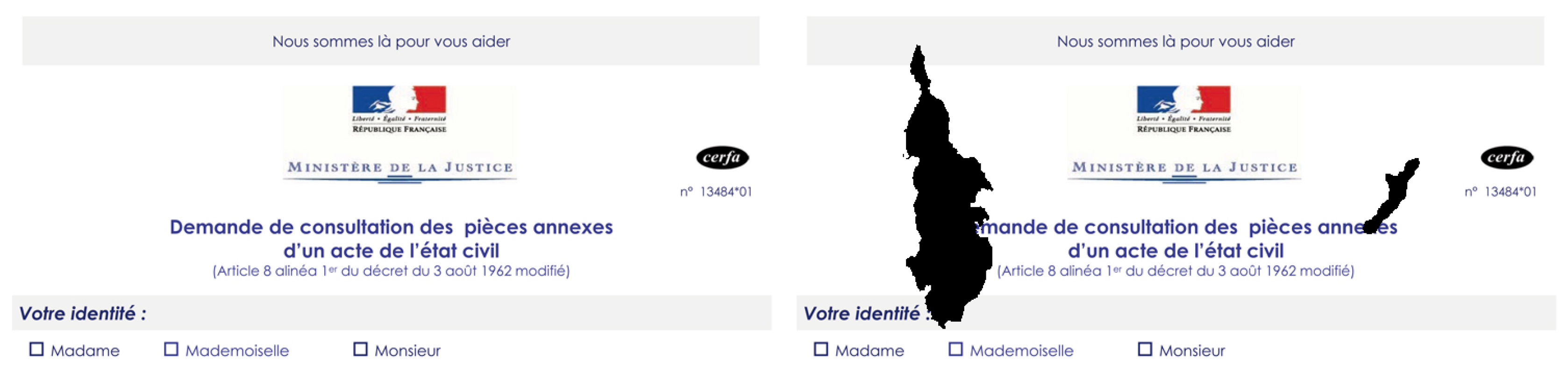

3.3. Paper Holes

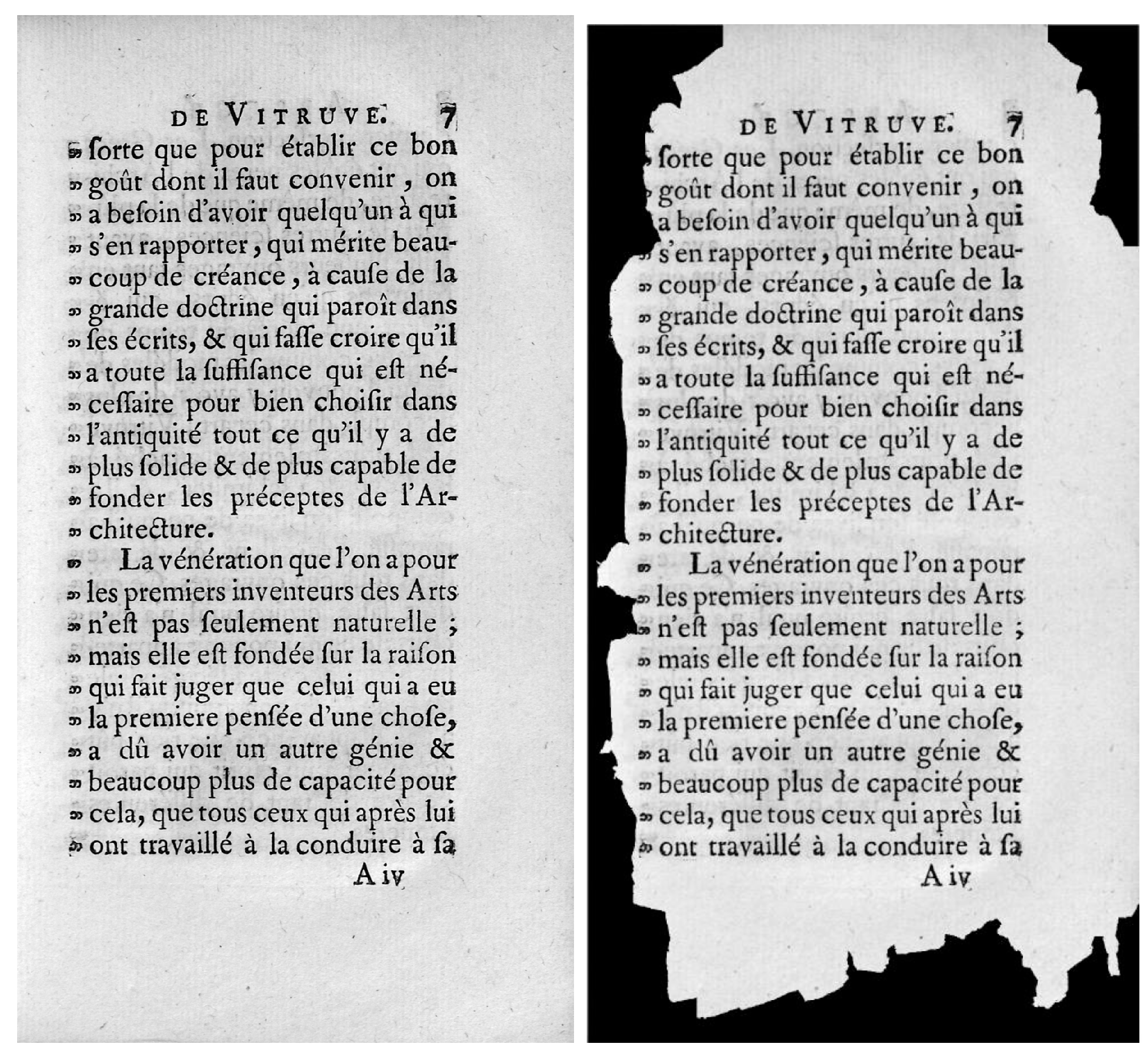

3.4. Bleed-Through

3.5. Adaptive Blur

3.6. 3D Paper Deformation

3.7. Nonlinear Illumination Model

4. Use of DocCreator for Performance Evaluation Tasks or Retraining

4.1. Published Results Using DocCreator

4.1.1. Document Image Generation for Performance Evaluation

4.1.2. Document Image Generation for Retraining Task

4.2. New Results on Performance Prediction Using DocCreator

4.2.1. Increase the Prediction Rate of Predictive Binarization Algorithm

4.2.2. Predict OCR Recognition Rate Using Synthetic Images

5. Conclusions

Author Contributions

Conflicts of Interest

References

- L’Affaire Alexis. Available online: http://gallica.bnf.fr/ark:/12148/bpt6k8630878m/f1.item.texteImage (accessed on 9 December 2017).

- Shahab, A.; Shafait, F.; Kieninger, T.; Dengel, A. An Open Approach Towards the Benchmarking of Table Structure Recognition Systems. In Proceedings of the 9th IAPR International Workshop on Document Analysis Systems, Boston, MA, USA, 9–11 June 2010; ACM: New York, NY, USA, 2010; pp. 113–120.

- Lazzara, G.; Levillain, R.; Géraud, T.; Jacquelet, Y.; Marquegnies, J.; Crépin-Leblond, A. The SCRIBO Module of the Olena Platform: a Free Software Framework for Document Image Analysis. In Proceedings of the 2011 International Conference on Document Analysis and Recognition (ICDAR), Beijing, China, 18–21 September 2011.

- Yalniz, I.; Manmatha, R. A Fast Alignment Scheme for Automatic OCR Evaluation of Books. In Proceedings of the 2011 International Conference on Document Analysis and Recognition (ICDAR), Beijing, China, 18–21 September 2011; pp. 754–758.

- Roy, P.; Ramel, J.; Ragot, N. Word Retrieval in Historical Document Using Character-Primitives. In Proceedings of the 2011 International Conference on Document Analysis and Recognition (ICDAR), Beijing, China, 18–21 September 2011; pp. 678–682.

- IAM Handwriting Database. Available online: http://www.iam.unibe.ch/fki/databases/iam-handwriting-database (accessed on 9 December 2017).

- Grosicki, E.; Carré, M.; Brodin, J.M.; Geoffrois, E. Results of the second RIMES evaluation campaign for handwritten mail processing. In Proceedings of the 2009 10th International Conference on Document Analysis and Recognition (ICDAR), Barcelona, Spain, 26–29 July 2009.

- Perez, D.; Tarazon, L.; Serrano, N.; Castro, F.; Terrades, O.R.; Juan, A. The GERMANA Database. In Proceedings of the 2009 10th International Conference on Document Analysis and Recognition (ICDAR), Barcelona, Spain, 26–29 July 2009; IEEE Computer Society: Washington, DC, USA, 2009; pp. 301–305.

- Nakagawa, K.; Fujiyoshi, A.; Suzuki, M. Ground-truthed Dataset of Chemical Structure Images in Japanese Published Patent Applications. In Proceedings of the 9th IAPR International Workshop on Document Analysis Systems, Boston, MA, USA, 9–11 June 2010; ACM: New York, NY, USA, 2010; pp. 455–462.

- Eurecom. Available online: http://www.eurecom.fr/huet/work.html (accessed on 9 December 2017).

- University of California, San Francisco. The Legacy Tobacco Document Library (LTDL); University of California: San Francisco, CA, USA, 2007.

- Delalandre, M.; Valveny, E.; Pridmore, T.; Karatzas, D. Generation of Synthetic Documents for Performance Evaluation of Symbol Recognition & Spotting Systems. Int. J. Doc. Anal. Recognit. 2010, 13, 187–207.

- Fornés, A.; Dutta, A.; Gordo, A.; Lladós, J. CVC-MUSCIMA: A ground truth of handwritten music score images for writer identification and staff removal. Int. J. Doc. Anal. Recognit. 2012, 15, 243–251.

- Burie, J.C.; Chazalon, J.; Coustaty, M.; Eskenazi, S.; Luqman, M.M.; Mehri, M.; Nayef, N.; Ogier, J.M.; Prum, S.; Rusiñol, M. ICDAR2015 competition on smartphone document capture and OCR (SmartDoc). In Proceedings of the 2015 13th International Conference on Document Analysis and Recognition (ICDAR), Nancy, France, 23–26 August 2015; pp. 1161–1165.

- Nayef, N.; Luqman, M.M.; Prum, S.; Eskenazi, S.; Chazalon, J.; Ogier, J.M. SmartDoc-QA: A dataset for quality assessment of smartphone captured document images-single and multiple distortions. In Proceedings of the 2015 13th International Conference on Document Analysis and Recognition (ICDAR), Nancy, France, 23–26 August 2015; pp. 1231–1235.

- TC11 Online Resources. Available online: http://tc11.cvc.uab.es/datasets/ (accessed on 9 December 2017).

- Yanikoglu, B.; Vincent, L. Pink Panther: A complete environment for ground-truthing and benchmarking document page segmentation. Pattern Recognit. 1998, 31, 1191–1204.

- Ha Lee, C.; Kanungo, T. The architecture of TRUEVIZ: A groundTRUth/metadata Editing and VIsualiZing toolkit. Pattern Recognit. 2003, 36, 811–825.

- Doermann, D.; Zotkina, E.; Li, H. GEDI - A Groundtruthing Environment for Document Images. In Proceedings of the 9th IAPR International Workshop on Document Analysis Systems (DAS 2010), Boston, MA, USA, 9–11 June 2010.

- Clausner, C.; Pletschacher, S.; Antonacopoulos, A. Efficient OCR Training Data Generation with Aletheia. In Proceedings of the International Association for Pattern Recognition (IAPR), Tours, France, 7–10 April 2014.

- Garz, A.; Seuret, M.; Simistira, F.; Fischer, A.; Ingold, R. Creating ground truth for historical manuscripts with document graphs and scribbling interaction. In Proceedings of the 2016 12th IAPR Workshop on Document Analysis Systems (DAS), Santorini, Greece, 11–14 April 2016; pp. 126–131.

- Gatos, B.; Louloudis, G.; Causer, T.; Grint, K.; Romero, V.; Sánchez, J.A.; Toselli, A.H.; Vidal, E. Ground-truth production in the tranScriptorium project. In Proceedings of the 2014 11th IAPR International Workshop on Document Analysis Systems (DAS), Tours, France, 7–10 April 2014; pp. 237–241.

- Wei, H.; Chen, K.; Seuret, M.; Würsch, M.; Liwicki, M.; Ingold, R. DIVADIAWI - A Web-Based Interface for Semi-Automatic Labeling of Historical Document Images; Digital Humanities: Sydney, Australia, 2015.

- Mas, J.; Fornés, A.; Lladós, J. An Interactive Transcription System of Census Records using Word-Spotting based Information Transfer. In Proceedings of the 12th IAPR International Workshop on Document Analysis Systems (DAS 2016), Santorini, Greece, 11–14 April 2016.

- Recital Manuscript Platform. Available online: http://recital.univ-nantes.fr/ (accessed on 9 December 2017).

- Clausner, C.; Pletschacher, S.; Antonacopoulos, A. Aletheia - An Advanced Document Layout and Text Ground-Truthing System for Production Environments. In Proceedings of the International Conference on Document Analysis and Recognition, Beijing, China, 18–21 September 2011; pp. 48–52.

- Baird, H.S. Document Image Defect Models. In Proceedings of the IAPR Workshop on Syntactic and Structural Pattern Recognition, Murray Hill, NJ, USA, 13–15 June 1990; pp. 13–15.

- Jiuzhou, Z. Creation of Synthetic Chart Image Database with Ground Truth; Technical Report; National University of Singapore: Singapore, 2005.

- Ishidera, E.; Nishiwaki, D. A Study on Top-down Word Image Generation for Handwritten Word Recognition. In Proceedings of the 2003 7th International Conference on Document Analysis and Recognition (ICDAR), Edinburgh, UK, 3–6 August 2003; IEEE Computer Society: Washington, DC, USA, 2003.

- Yin, F.; Wang, Q.F.; Liu, C.L. Transcript Mapping for Handwritten Chinese Documents by Integrating Character Recognition Model and Geometric Context. Pattern Recognit. 2013, 46, 2807–2818.

- Opitz, M.; Diem, M.; Fiel, S.; Kleber, F.; Sablatnig, R. End-to-End Text Recognition Using Local Ternary Patterns, MSER and Deep Convolutional Nets. In Proceedings of the 2014 11th IAPR International Workshop on Document Analysis Systems (DAS), Tours, France, 7–10 April 2014; pp. 186–190.

- Yacoub, S.; Saxena, V.; Sami, S. Perfectdoc: A ground truthing environment for complex documents. In Proceedings of the Eighth International Conference on Document Analysis and Recognition, Seoul, Korea, 31 August–1 September 2005; pp. 452–456.

- Saund, E.; Lin, J.; Sarkar, P. Pixlabeler: User interface for pixel-level labeling of elements in document images. In Proceedings of the International Conference on Document Analysis and Recognition, Barcelona, Spain, 26–29 July 2009; pp. 646–650.

- Lamiroy, B.; Lopresti, D. An Open Architecture for End-to-End Document Analysis Benchmarking. In Proceedings of the 2011 International Conference on Document Analysis and Recognition (ICDAR), Beijing, China, 18–21 September 2011; pp. 42–47.

- Seuret, M.; Chen, K.; Eichenberger, N.; Liwicki, M.; Ingold, R. Gradient-domain degradations for improving historical documents images layout analysis. In Proceedings of the 2015 13th International Conference on Document Analysis and Recognition (ICDAR), Tunis, Tunisia, 23–26 August 2015; pp. 1006–1010.

- Mehri, M.; Gomez-Krämer, P.; Héroux, P.; Mullot, R. Old Document Image Segmentation Using the Autocorrelation Function and Multiresolution Analysis. In Proceedings of the IS&T/SPIE Electronic Imaging, Burlingame, CA, USA, 3–7 February 2013.

- Visani, M.; Kieu, V.; Fornés, A.; Journet, N. The ICDAR 2013 Music Scores Competition: Staff Removal. In Proceedings of the 2013 12th International Conference on Document Analysis and Recognition (ICDAR), Washington, DC, USA, 25–28 August 2013; pp. 1407–1411.

- Montagner, I.d.S.; Hirata, R., Jr.; Hirata, N.S.T. A Machine Learning based method for Staff Removal. In Proceedings of the 2014 22nd International Conference on Pattern Recognition (ICPR), Stockholm, Sweden, 24–28 August 2014; pp. 3162–3167.

- Fischer, A.; Visani, M.; Kieu, V.C.; Suen, C.Y. Generation of Learning Samples for Historical Handwriting Recognition Using Image Degradation. In Proceedings of the 2nd International Workshop on Historical Document Imaging and Processing, Washington, DC, USA, 24 August 2013; pp. 73–79.

- Smith, R. An Overview of the Tesseract OCR Engine. In Proceedings of the 2007 9th International Conference on Document Analysis and Recognition (ICDAR), Parana, Brazil, 23–26 September 2007; pp. 629–633.

- Bahaghighat, M.K.; Mohammadi, J. Novel approach for baseline detection and Text line segmentation. Int. J. Comput. Appl. 2012, 51.

- Telea, A. An image inpainting technique based on the fast marching method. J. Graph. Tools 2004, 9, 23–34.

- Mehri, M.; Héroux, P.; Lerouge, J.; Gomez-Krämer, P.; Mullot, R. A structural signature based on texture for digitized historical book page categorization. In Proceedings of the 2015 13th International Conference on Document Analysis and Recognition (ICDAR), Tunis, Tunisia, 23–26 August 2015; pp. 116–120.

- Breuel, T.M. Two geometric algorithms for layout analysis. In International Workshop on Document Analysis Systems; Springer: Berlin, Germany, 2002; pp. 188–199.

- Ramel, J.Y.; Leriche, S.; Demonet, M.; Busson, S. User-driven page layout analysis of historical printed books. Int. J. Doc. Anal. Recognit. 2007, 9, 243–261.

- Garz, A.; Seuret, M.; Fischer, A.; Ingold, R. A User-Centered Segmentation Method for Complex Historical Manuscripts Based on Document Graphs. IEEE Trans. Hum.-Mach. Syst. 2017, 47, 181–193.

- Kanungo, T.; Haralick, R. Automatic generation of character groundtruth for scanned documents: A closed-loop approach. In Proceedings of the 13th International Conference on Pattern Recognition, Vienna, Austria, 25–29 August 1996; Volume 3, pp. 669–675.

- Shakhnarovich, G. Learning Task-Specific Similarity. Ph.D. Thesis, Massachusetts Institute of Technology, Cambridge, MA, USA, 2005.

- Moghaddam, R.F.; Cheriet, M. Low Quality Document Image Modeling and Enhancement. Int. J. Doc. Anal. Recognit. 2009, 11, 183–201.

- Lelégard, L.; Bredif, M.; Vallet, B.; Boldo, D. Motion blur detection in aerial images shot with channel-dependent exposure time. In Proceedings of the ISPRS-Technical-Commission III Symposium on Photogrammetric Computer Vision and Image Analysis (PCV), Saint-Mandé, France, 1–3 September 2010; pp. 180–185.

- Kieu, V.; Journet, N.; Visani, M.; Mullot, R.; Domenger, J. Semi-synthetic Document Image Generation Using Texture Mapping on Scanned 3D Document Shapes. In Proceedings of the 2013 12th International Conference on Document Analysis and Recognition (ICDAR), Washington, DC, USA, 25–28 August 2013; pp. 489–493.

- Kanungo, T.; Haralick, R.M.; Phillips, I. Global and Local Document Degradation Models. In Proceedings of the 1993 2nd International Conference on Document Analysis and Recognition (ICDAR), Tsukuba City, Japan, 20–22 October 1993; pp. 730–734.

- Calvo-Zaragoza, J.; Micó, L.; Oncina, J. Music staff removal with supervised pixel classification. Int. J. Doc. Anal. Recognit. 2016, 19, 1–9.

- Bui, H.N.; Na, I.S.; Kim, S.H. Staff Line Removal Using Line Adjacency Graph and Staff Line Skeleton for Camera Based Printed Music Scores. In Proceedings of the 2014 22nd International Conference on Pattern Recognition (ICPR), Stockholm, Sweden, 24–28 August 2014.

- Géraud, T. A morphological method for music score staff removal. In Proceedings of the 2014 IEEE International Conference on Image Processing (ICIP), Paris, France, 27–30 October 2014; pp. 2599–2603.

- Zaragoza, J.C. Pattern Recognition for Music Notation. Ph.D. Thesis, Universidad de Alicante, Alicante, Spain, 2016.

- Montagner, I.S.; Hirata, N.S.; Hirata, R., Jr.; Canu, S. Kernel approximations for W-operator learning. In Proceedings of the International Conference on Graphics, Patterns and Images (SIBGRAPI), Sao Paulo, Brazil, 4–7 October 2016.

- IAM-HistDB Database. Available online: https://diuf.unifr.ch/main/hisdoc/iam-histdb (accessed on 9 December 2017).

- Wei, H.; Baechler, M.; Slimane, F.; Ingold, R. Evaluation of SVM, MLP, and GMM Classifiers for Layout Analysis of Historical Documents. In Proceedings of the 2013 12th International Conference on Document Analysis and Recognition (ICDAR), Washington, DC, USA, 25–28 August 2013; pp. 1220–1224.

- Varga, T.; Bunke, H. Effects of training set expansion in handwriting recognition using synthetic data. In Proceedings of the 11th Conference of the International Graphonomics Society, Scottsdale, AZ, USA, 2–5 November 2003; pp. 200–203.

- Rabeux, V.; Journet, N.; Vialard, A.; Domenger, J.P. Quality Evaluation of Degraded Document Images for Binarization Result Prediction. Int. J. Doc. Anal. Recognit. 2013, 1–13.

- Pratikakis, I.; Gatos, B.; Ntirogiannis, K. ICDAR 2011 Document Image Binarization Contest (DIBCO 2011). In Proceedings of the 2011 11th International Conference on Document Analysis and Recognition (ICDAR), Washington, DC, USA, 25–28 August 2011; pp. 1506–1510.

- Bhowmik, T.K.; Paquet, T.; Ragot, N. OCR performance prediction using a bag of allographs and support vector regression. In Proceedings of the 2014 11th IAPR International Workshop on Document Analysis Systems (DAS), Tours, France, 7–10 April 2014; pp. 202–206.

- Peng, X.; Cao, H.; Natarajan, P. Document image OCR accuracy prediction via latent Dirichlet allocation. In Proceedings of the 2015 13th International Conference on Document Analysis and Recognition (ICDAR), Tunis, Tunisia, 23–26 August 2015; pp. 771–775.

- Ye, P.; Doermann, D. Document image quality assessment: A brief survey. In Proceedings of the 2013 12th International Conference on Document Analysis and Recognition (ICDAR), Washington, DC, USA, 25–28 August 2013; pp. 723–727.

- Clausner, C.; Pletschacher, S.; Antonacopoulos, A. Quality prediction system for large-scale digitisation workflows. In Proceedings of the 2016 12th IAPR Workshop on Document Analysis Systems (DAS), Santorini, Greece, 11–14 April 2016; pp. 138–143.

| | Export | Open-Source | Desktop/Online | Groundtruthing Assistance | Collaborative | Year |
|---|---|---|---|---|---|---|
| Software for manual ground truth creation | | | | | | |
| Pink Panther [17] | ASCII | n/a | desktop | no | no | 1998 |
| TrueViz [18] | XML | yes | desktop | no | no | 2003 |
| PerfectDoc [32] | XML | yes | desktop | ? | no | 2005 |
| PixLabeler [33] | XML | no | desktop | no | no | 2009 |
| GEDI [19] | XML | yes | desktop | yes | no | 2010 |
| DAE [34] | no | yes | online | yes | yes | 2011 |
| Aletheia [20,26] | XML | no | online/desktop | yes | no | 2011 |
| Transcriptorium [22] | TEI-XML | no | online | yes | yes | 2014 |
| DIVADIAWI [23] | XML | n/a | online | yes | n/a | 2015 |
| Recital [25] | no | yes | online | yes | yes | 2017 |
| Algorithms for synthetic data augmentation | | | | | | |
| Baird et al. [27] | no | n/a | n/a | n/a | no | 1990 |
| Zhao et al. [28] | no | n/a | n/a | n/a | no | 2005 |
| Delalandre et al. [12] | no | n/a | n/a | n/a | no | 2010 |
| Yin et al. [30] | no | n/a | n/a | n/a | no | 2013 |
| Mas et al. [24] | no | n/a | n/a | n/a | yes | 2016 |
| Seuret et al. [35] | no | yes | n/a | n/a | no | 2015 |
| Software for semi-automatic ground truth creation and data augmentation capabilities | | | | | | |
| DocCreator | XML | yes | online/desktop | yes | no | 2017 |

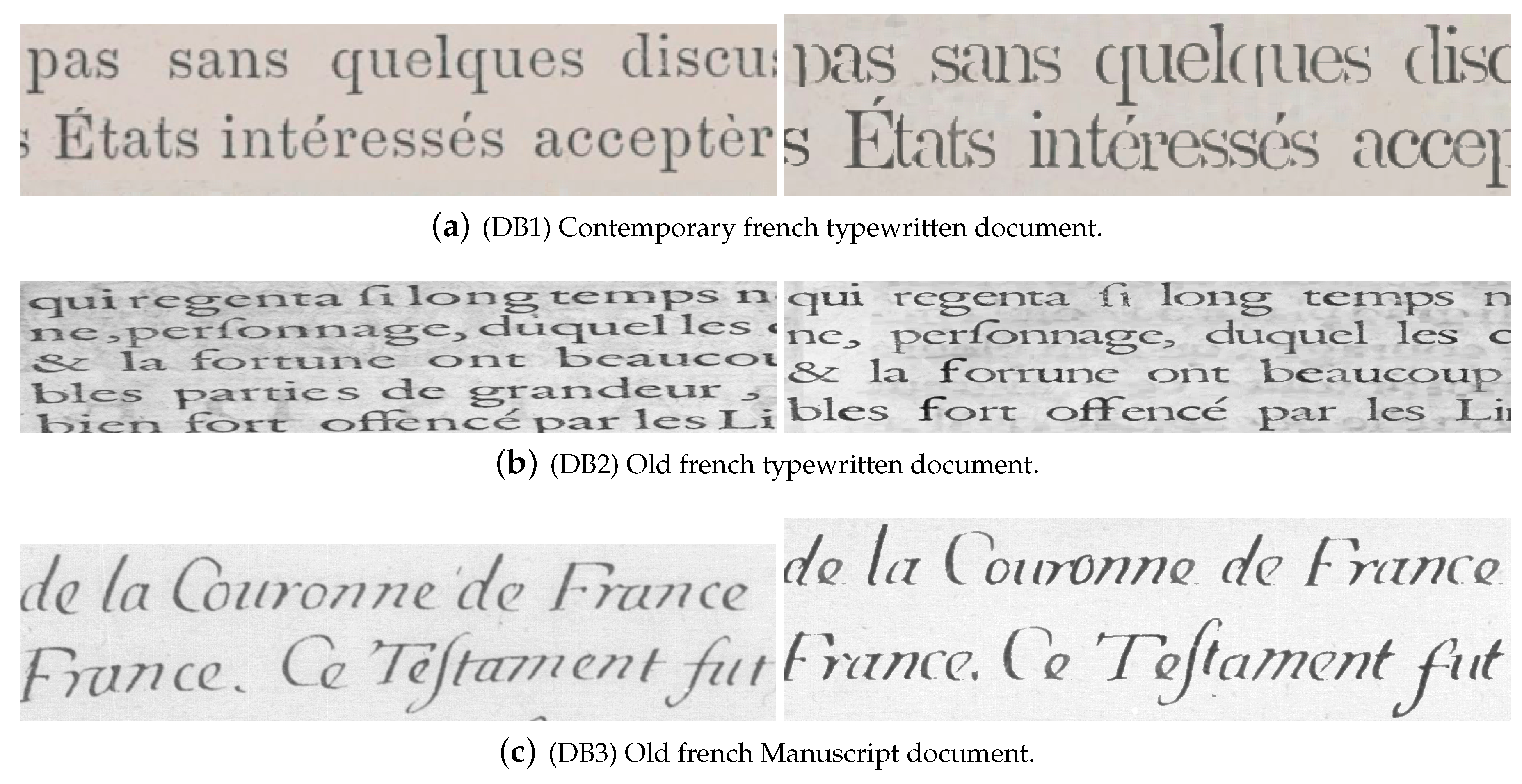

| | Original Image | Font From | Same Text | Lorem Text |
|---|---|---|---|---|
| DB1 | 0.95 | DB1 | 0.94 | 0.88 |
| DB2 | 0.80 | DB2 | 0.85 | 0.84 |
| DB3 | 0.24 | DB3 | 0.21 | 0.23 |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Journet, N.; Visani, M.; Mansencal, B.; Van-Cuong, K.; Billy, A. DocCreator: A New Software for Creating Synthetic Ground-Truthed Document Images. J. Imaging 2017, 3, 62. https://doi.org/10.3390/jimaging3040062
