Range Imaging for Motion Compensation in C-Arm Cone-Beam CT of Knees under Weight-Bearing Conditions

Abstract

1. Introduction

2. Materials and Methods

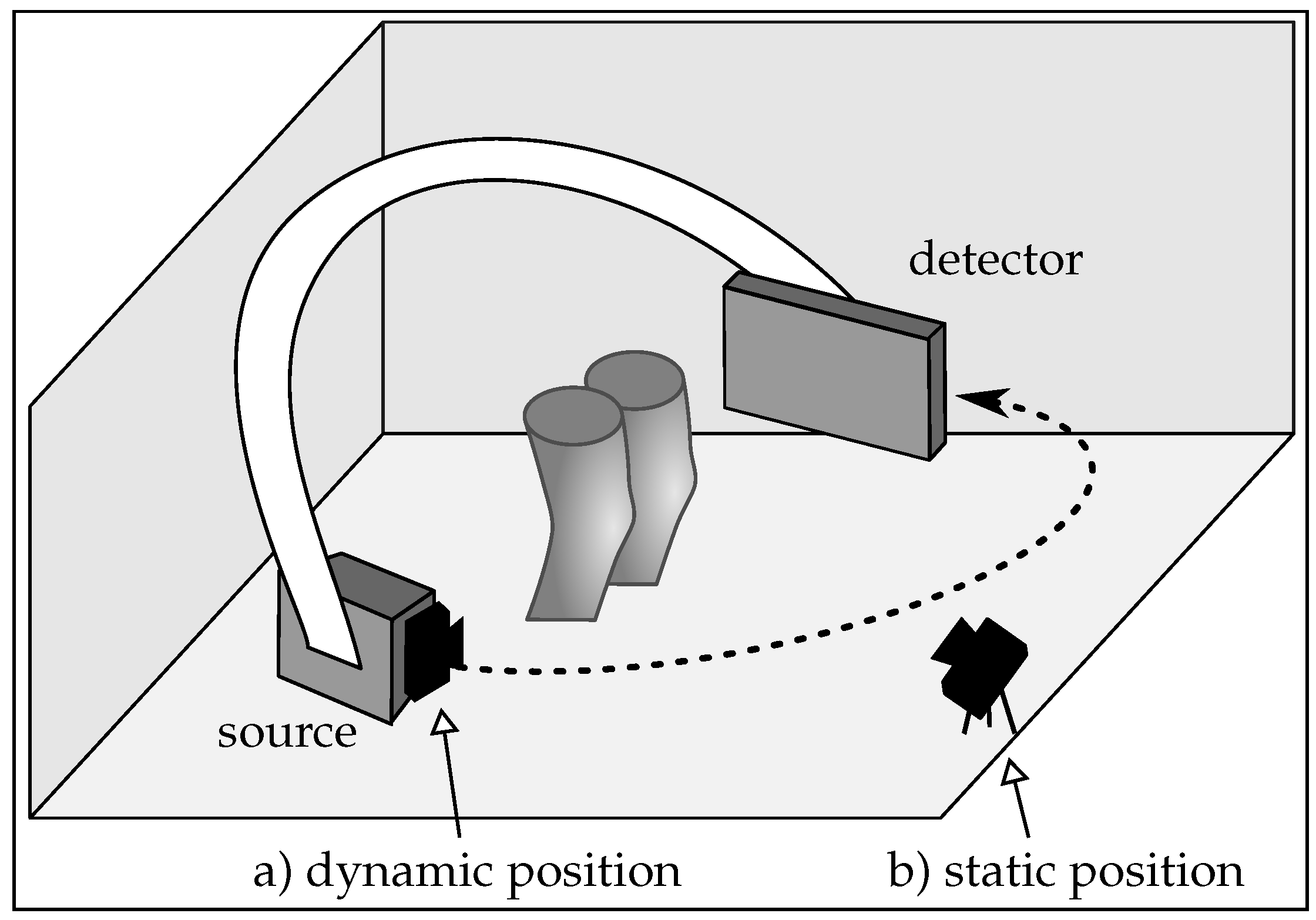

2.1. Imaging Setup

- Motion estimation: in the static case, the same scene is observed in each frame. In the dynamic scenario, the range camera rotates with the C-arm around the object, so the point clouds overlap only partially. Registering partly overlapping point clouds is especially challenging when a smooth, cylindrical object such as the human knee is imaged. Therefore, two point cloud registration methods are compared to investigate their motion estimation capability in both scenarios.

- Calibration: co-calibration of the C-arm and the range camera is required to incorporate the motion estimated by the range camera correctly into the reconstruction. In the dynamic case, this calibration has to be done only once, since the position of the camera relative to the C-arm is constant.

- Object occlusion: in an unpredictable medical environment, staff, patient clothing, or the C-arm might temporarily occlude the field of view of the range camera. Missing or partly missing points can cause large errors in the motion estimation. In the dynamic setup, the trajectory of the C-arm has to be selected carefully so that the object of interest is always visible; otherwise, the knee surface could be occluded by the second knee. In the static setup, however, the rotating C-arm could also partly cover the camera’s field of view.

- Spatial requirements: the limited space in the medical examination room also has to be considered for the camera setup. A camera mounted on the C-arm takes up less space. Furthermore, a static camera is prone to being touched, which would require a new calibration; this is rather unlikely for a camera mounted on the C-arm.
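The role of the co-calibration can be illustrated with a small sketch. It assumes that the calibration is available as a 4×4 homogeneous matrix `T_world_cam` (mapping camera coordinates to C-arm world coordinates) and that registration yields a rigid motion `M_cam` expressed in camera coordinates. Under these assumptions, the motion in world coordinates follows by conjugation, and one common way to compensate is to right-multiply each 3×4 projection matrix by it. All names are hypothetical and not taken from the authors' implementation; in the dynamic case, `T_world_cam` would additionally depend on the C-arm pose of each frame.

```python
import numpy as np

def rigid(rotation_z_deg, translation):
    """Build a 4x4 homogeneous transform: rotation about z, then translation."""
    a = np.deg2rad(rotation_z_deg)
    T = np.eye(4)
    T[:3, :3] = [[np.cos(a), -np.sin(a), 0.0],
                 [np.sin(a),  np.cos(a), 0.0],
                 [0.0,        0.0,       1.0]]
    T[:3, 3] = translation
    return T

def motion_in_world(T_world_cam, M_cam):
    """Express a motion estimated in camera coordinates in world coordinates."""
    return T_world_cam @ M_cam @ np.linalg.inv(T_world_cam)

def compensate_projection(P, M_world):
    """Fold the object motion of one frame into its 3x4 projection matrix."""
    return P @ M_world

# Hypothetical calibration: camera rotated by 90 degrees about z, 750 mm away.
T_world_cam = rigid(90.0, [750.0, 0.0, 0.0])
# Hypothetical estimated motion: 2 mm shift along the camera x-axis.
M_cam = rigid(0.0, [2.0, 0.0, 0.0])
M_world = motion_in_world(T_world_cam, M_cam)

# Toy projection matrix (assumption; real ones come from C-arm calibration).
P = np.hstack([np.eye(3), np.zeros((3, 1))])
P_compensated = compensate_projection(P, M_world)
```

Projecting a static point with `P_compensated` gives the same result as projecting the moved point with `P`, which is why the compensated matrices can be used during backprojection.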

2.2. Cone-Beam Computed Tomography and Motion

2.3. Data Generation

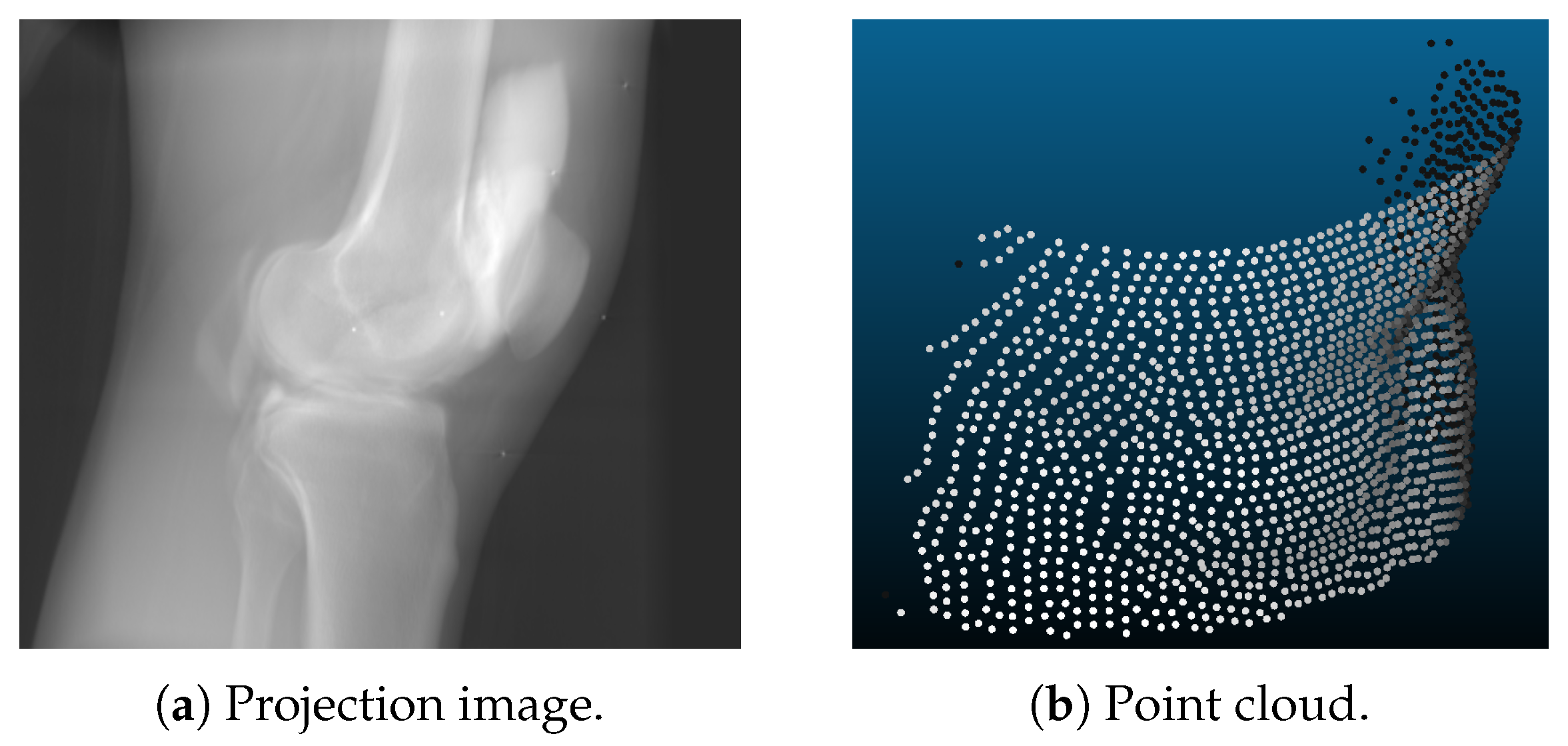

- Point clouds were generated from a voxelized volume of the object. For each discrete time step, the volume was transformed rigidly, and its surface was then sampled using a ray casting approach. The range camera was modeled using projective geometry with properties similar to the Microsoft Kinect One v2 [38,39]: the sampled points lie on a grid-like pattern, as shown in Figure 2b. In the isocenter of the C-arm scanner, which is the area of interest for CBCT scanning, the spacing of the sampled points was approximately 1.4 mm in image space. A depth resolution of 1 mm was applied at a camera distance of 75 cm. These settings were the same for the static and the dynamic camera position; the only difference lay in the trajectories of the two setups. For the experiments, we created point clouds with and without noise. The noise was approximated as Gaussian noise with a standard deviation of 1 mm.

- X-ray projections were created from the same volume used for the point clouds. Given a C-arm trajectory, i.e., a set of projection matrices that describe the C-arm rotation around the object, the volume was forward projected, yielding a stack of 2D projection images. Motion was incorporated using Formula (2). This was done using CONRAD, an open-source software framework dedicated to cone-beam imaging simulation and reconstruction [40].

- The motion used to corrupt the data was realistic knee motion of a standing subject, measured with a VICON (Vicon Motion Systems Ltd, Oxford, UK) motion capture system [1]. The same motion pattern was applied in the simulation of the point clouds and of the projection images.
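The depth resolution and noise settings for the point clouds can be mimicked with a short sketch. It takes the 1 mm depth resolution and the Gaussian noise with 1 mm standard deviation from the description above. It additionally assumes that both act on the distance along each viewing ray and that noise is added before quantization; neither choice is stated in the text. The function name and parameters are hypothetical, and units are millimetres.

```python
import numpy as np

def simulate_range_measurement(points, camera_center, depth_resolution=1.0,
                               noise_std=0.0, rng=None):
    """Perturb and quantize surface points along their viewing rays.

    points:         (N, 3) array of ideal surface points in mm
    camera_center:  (3,) position of the range camera in mm
    """
    rng = np.random.default_rng() if rng is None else rng
    rays = points - camera_center
    depth = np.linalg.norm(rays, axis=1, keepdims=True)
    directions = rays / depth
    if noise_std > 0:
        # Assumption: zero-mean Gaussian noise acts on the measured distance.
        depth = depth + rng.normal(0.0, noise_std, size=depth.shape)
    # Quantize the distance to the sensor's depth resolution.
    depth = np.round(depth / depth_resolution) * depth_resolution
    return camera_center + directions * depth

camera = np.zeros(3)
surface = np.array([[0.0, 0.0, 750.4], [30.0, 40.0, 0.0]])
clean = simulate_range_measurement(surface, camera)
noisy = simulate_range_measurement(surface, camera, noise_std=1.0,
                                   rng=np.random.default_rng(0))
```

Passing a seeded generator keeps the noisy point clouds reproducible across the static and dynamic camera setups.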

2.4. Motion Estimation Using Range Imaging

2.4.1. Iterative Closest Point Algorithm
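As a point of reference, a generic textbook point-to-point ICP can be sketched as follows. This is a minimal illustration using brute-force nearest neighbours and an SVD-based (Kabsch) rigid fit, not necessarily the variant used in this work; in particular, it contains no correspondence rejection, which partly overlapping point clouds would typically call for. Function names are hypothetical.

```python
import numpy as np

def best_rigid_transform(src, dst):
    """Least-squares R, t such that R @ src_i + t approximates dst_i (Kabsch)."""
    src_mean, dst_mean = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_mean).T @ (dst - dst_mean)
    U, _, Vt = np.linalg.svd(H)
    # Guard against reflections so that R is a proper rotation.
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(Vt.T @ U.T))])
    R = Vt.T @ D @ U.T
    t = dst_mean - R @ src_mean
    return R, t

def icp(source, target, iterations=30, tol=1e-8):
    """Point-to-point ICP; returns a 4x4 transform mapping source onto target."""
    T = np.eye(4)
    current = source.copy()
    prev_err = np.inf
    for _ in range(iterations):
        # Brute-force nearest neighbours (adequate for small point clouds).
        d2 = ((current[:, None, :] - target[None, :, :]) ** 2).sum(axis=2)
        nn = d2.argmin(axis=1)
        err = np.sqrt(d2[np.arange(len(current)), nn]).mean()
        # Fit a rigid transform to the current correspondences and apply it.
        R, t = best_rigid_transform(current, target[nn])
        current = current @ R.T + t
        step = np.eye(4)
        step[:3, :3], step[:3, 3] = R, t
        T = step @ T
        # Stop once the mean correspondence distance no longer changes.
        if abs(prev_err - err) < tol:
            break
        prev_err = err
    return T
```

Applied frame by frame against a reference point cloud, the returned transform would serve as the motion estimate for that frame.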

2.4.2. Group-Wise Rigid Registration Using a Hybrid Mixture Model

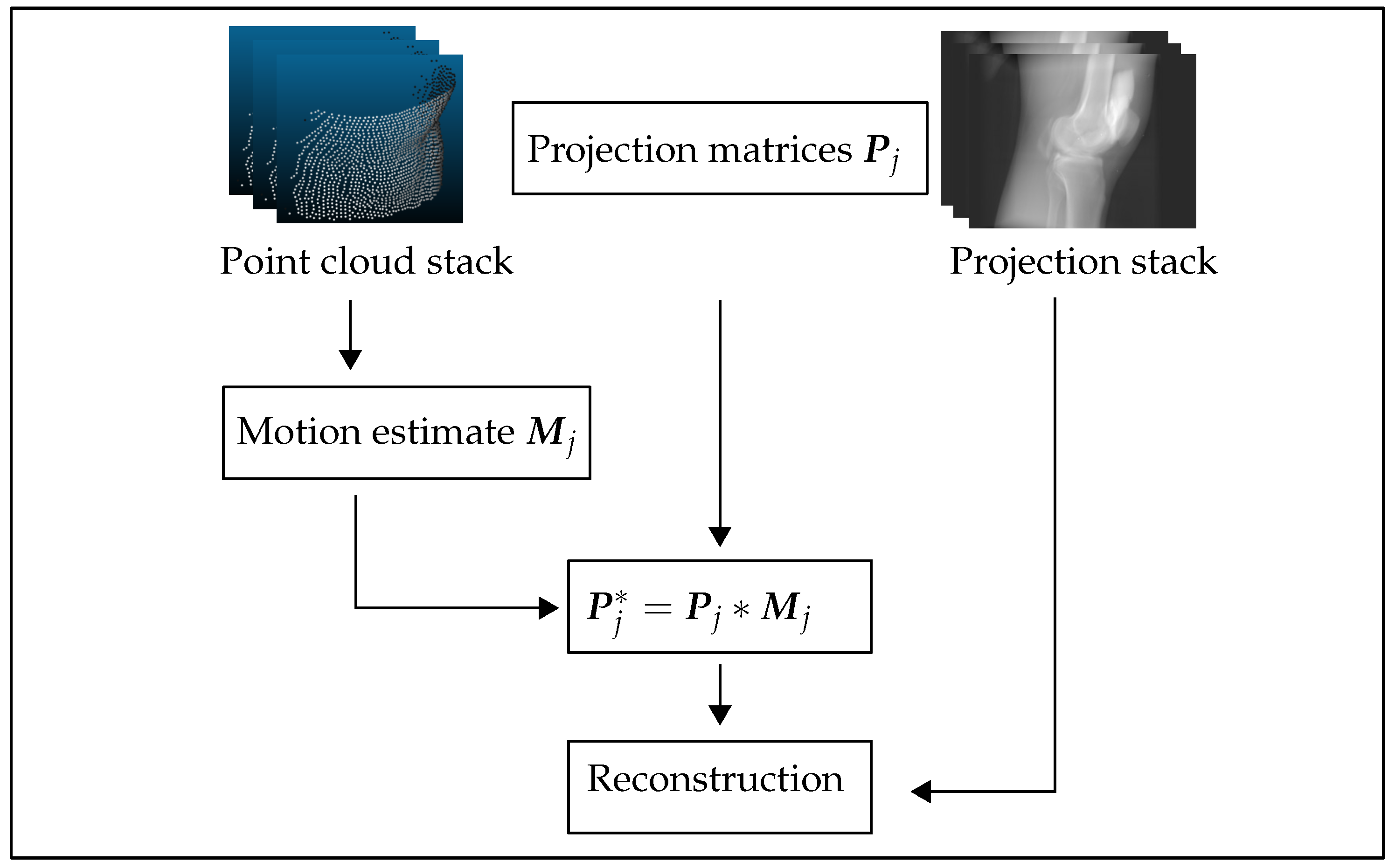

2.4.3. Reconstruction Pipeline

2.4.4. Experiments

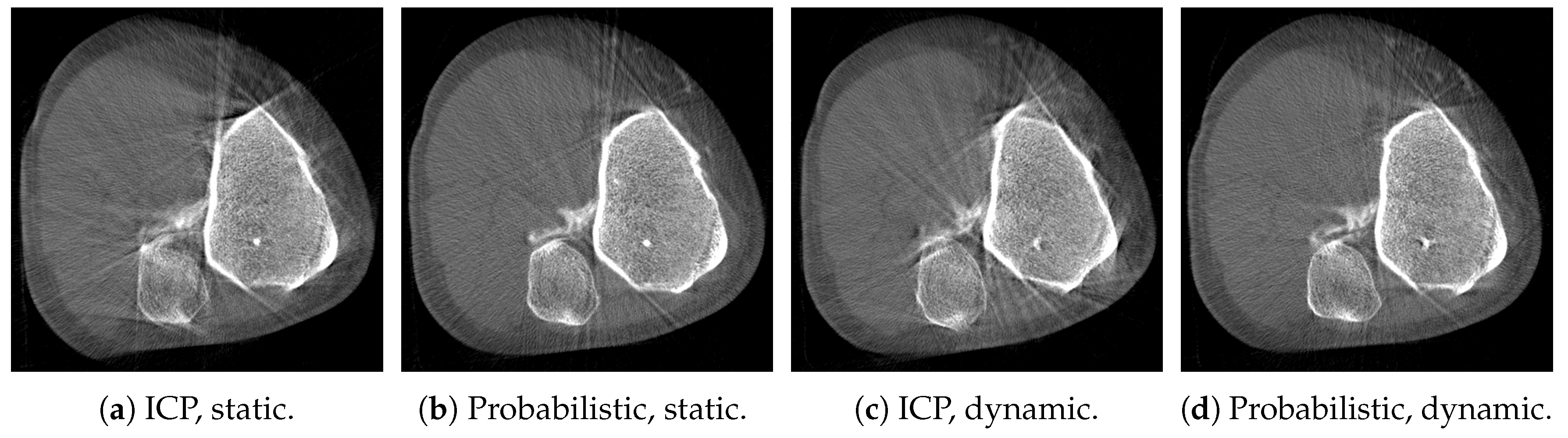

- Dataset 1: one knee was extracted from a high-resolution reconstruction of a supine scan acquired with a clinical C-arm CT system (Artis Zeego, Siemens Healthcare GmbH, Erlangen, Germany). The projection matrices are real calibrated projection matrices from the same clinical C-arm CBCT system, which was operated on a horizontal trajectory [4]. With this dataset, we conducted two experiments: one without and one with simulated noise in the observed point clouds.

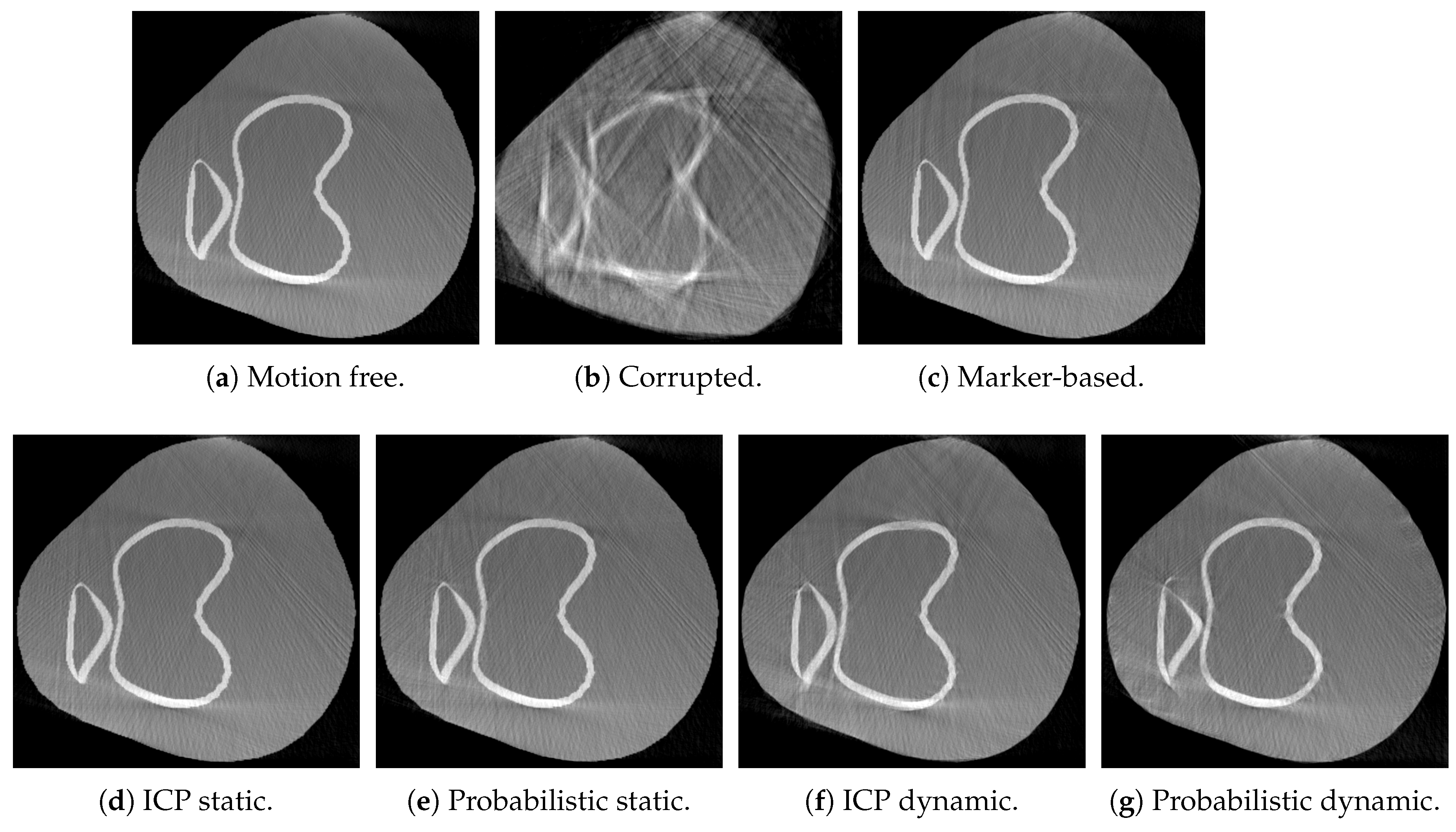

- Dataset 2: the XCAT phantom [43] was used to simulate the legs of a standing patient, squatting with a knee flexion of 40°. The trajectory for this scan was created using CONRAD such that one knee of the XCAT phantom is always present in the field of view of the range camera. In contrast to dataset 1, two legs are in the simulation volume. Note that the point clouds of this dataset were post-processed to retain the points of a single knee only.

2.4.5. Evaluation Methodology

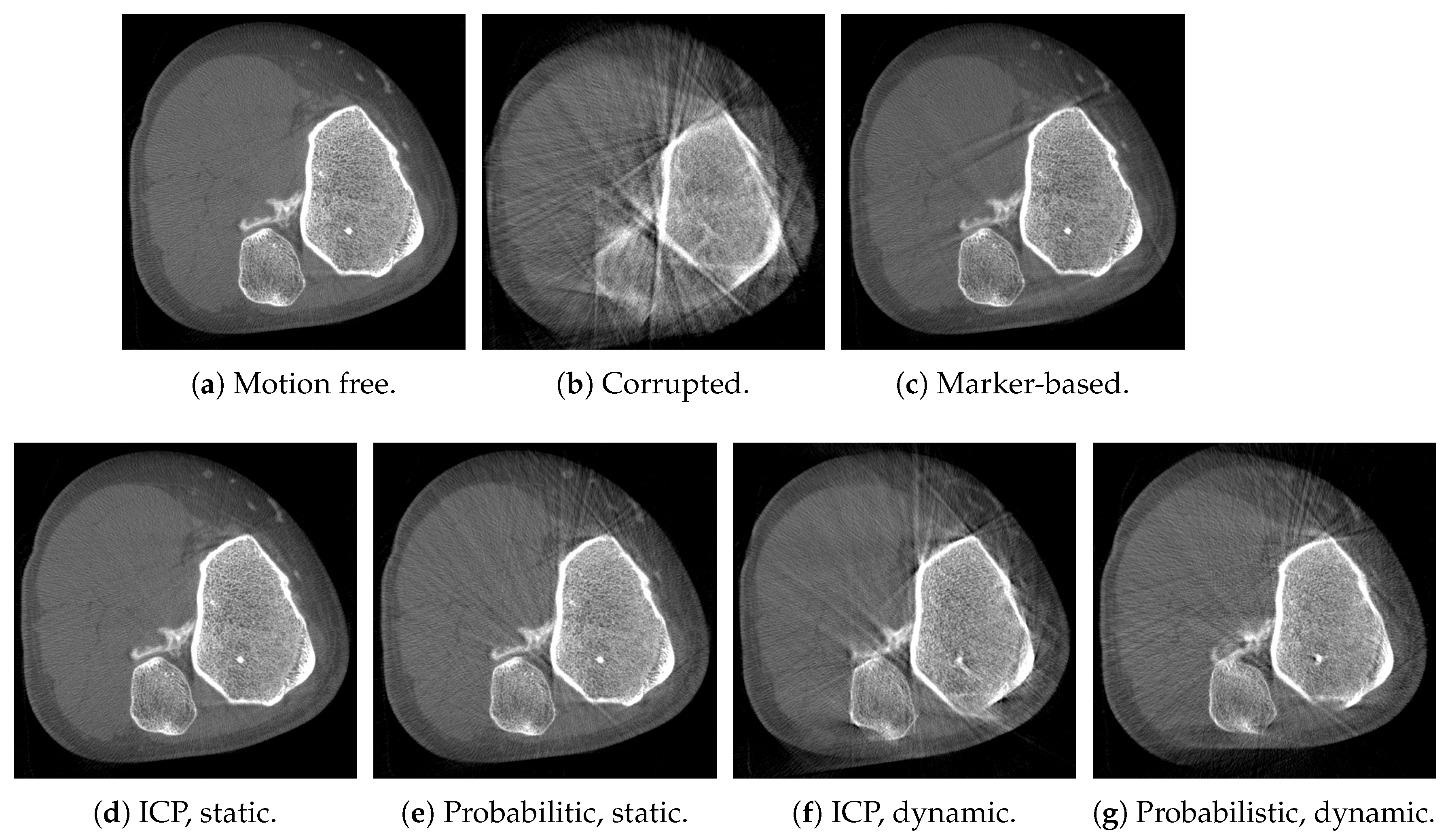

3. Results

4. Discussion

Acknowledgments

Author Contributions

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| CBCT | cone-beam computed tomography |
| CT | computed tomography |
| ICP | iterative closest point |
| SSIM | structural similarity |
| PET | positron emission tomography |
| SPECT | single-photon emission computed tomography |
| HdMM | hybrid mixture model |

References

- Choi, J.H.; Maier, A.; Keil, A.; Pal, S.; McWalter, E.J.; Beaupré, G.S.; Gold, G.E.; Fahrig, R. Fiducial marker-based correction for involuntary motion in weight-bearing C-arm CT scanning of knees. II. Experiment. Med. Phys. 2014, 41, 061902.

- Carrino, J.A.; Al Muhit, A.; Zbijewski, W.; Thawait, G.K.; Stayman, J.W.; Packard, N.; Senn, R.; Yang, D.; Foos, D.H.; Yorkston, J.; et al. Dedicated cone-beam CT system for extremity imaging. Radiology 2014, 270, 816–824.

- Powers, C.M.; Ward, S.R.; Fredericson, M. Knee Extension in Persons With Lateral Subluxation of the Patella: A Preliminary Study. J. Orthop. Sports Phys. Ther. 2013, 33, 677–685.

- Maier, A.; Choi, J.H.; Keil, A.; Niebler, C.; Sarmiento, M.; Fieselmann, A.; Gold, G.; Delp, S.; Fahrig, R. Analysis of vertical and horizontal circular C-arm trajectories. Proc. SPIE Med. Imaging 2011, 7961, 796123.

- Choi, J.H.; Muller, K.; Hsieh, S.; Maier, A.; Gold, G.; Levenston, M.; Fahrig, R. Over-exposure correction in knee cone-beam CT imaging with automatic exposure control using a partial low dose scan. Proc. SPIE Med. Imaging 2016, 9783, 97830L.

- Zbijewski, W.; De Jean, P.; Prakash, P.; Ding, Y.; Stayman, J.W.; Packard, N.; Senn, R.; Yang, D.; Yorkston, J.; Machado, A.; et al. A dedicated cone-beam CT system for musculoskeletal extremities imaging: Design, optimization, and initial performance characterization. Med. Phys. 2011, 38, 4700.

- Herbst, M.; Schebesch, F.; Berger, M.; Choi, J.H.; Fahrig, R.; Hornegger, J.; Maier, A. Dynamic detector offsets for field of view extension in C-arm computed tomography with application to weight-bearing imaging. Med. Phys. 2015, 42, 2718–2729.

- Maier, J.; Black, M.; Bonaretti, S.; Bier, B.; Eskofier, B.; Choi, J.H.; Levenston, M.; Gold, G.; Fahrig, R.; Maier, A. Comparison of Different Approaches for Measuring Tibial Cartilage Thickness. J. Integr. Bioinform. 2017, 14, 1–10.

- Siemens Healthcare GmbH. Siemens SOMATOM Definition Flash Brochure. Available online: https://static.healthcare.siemens.com/siemens_hwem-hwem_ssxa_websites-context-root/wcm/idc/groups/public/@global/@imaging/@ct/documents/download/mdaw/mzcz/~edisp/ct_ws_somatom_definition_flash_international-00292513.pdf (accessed on 5 January 2018).

- Unberath, M.; Aichert, A.; Achenbach, S.; Maier, A. Consistency-based respiratory motion estimation in rotational angiography. Med. Phys. 2017, 44, e113–e124.

- Sonke, J.J.; Zijp, L.; Remeijer, P.; van Herk, M. Respiratory correlated cone beam CT. Med. Phys. 2005, 32, 1176–1186.

- Hansis, E.; Schäfer, D.; Dössel, O.; Grass, M. Projection-based motion compensation for gated coronary artery reconstruction from rotational X-ray angiograms. Phys. Med. Biol. 2008, 53, 3807.

- Ouadah, S.; Stayman, J.W.; Gang, G.J.; Ehtiati, T.; Siewerdsen, J.H. Self-calibration of cone-beam CT geometry using 3D–2D image registration. Phys. Med. Biol. 2016, 61, 2613–2632.

- Choi, J.H.; Fahrig, R.; Keil, A.; Besier, T.F.; Pal, S.; McWalter, E.J.; Beaupré, G.S.; Maier, A. Fiducial marker-based correction for involuntary motion in weight-bearing C-arm CT scanning of knees. Part I. Numerical model-based optimization. Med. Phys. 2014, 41, 061902.

- Berger, M.; Forman, C.; Schwemmer, C.; Choi, J.H.; Müller, K.; Maier, A.; Hornegger, J.; Fahrig, R. Automatic Removal of Externally Attached Fiducial Markers in Cone Beam C-arm CT. In Bildverarbeitung für die Medizin; Springer: Berlin, Germany, 2014; pp. 168–173.

- Müller, K.; Berger, M.; Choi, J.; Maier, A.; Fahrig, R. Automatic Motion Estimation and Compensation Framework for Weight-bearing C-arm CT scans using Fiducial Markers. In Proceedings of the World Congress on Medical Physics and Biomedical Engineering, Toronto, ON, Canada, 7–12 June 2015; pp. 58–61.

- Müller, K.; Berger, M.; Choi, J.H.; Datta, S.; Gehrisch, S.; Moore, T.; Marks, M.P.; Maier, A.K.; Fahrig, R. Fully Automatic Head Motion Correction for Interventional C-arm Systems using Fiducial Markers. In Proceedings of the 13th Fully Three-Dimensional Image Reconstruction in Radiology and Nuclear Medicine, Newport, RI, USA, 1–4 June 2015; pp. 534–537.

- Syben, C.; Bier, B.; Berger, M.; Aichert, A.; Fahrig, R.; Gold, G.; Levenston, M.; Maier, A. Joint Calibration and Motion Estimation in Weight-Bearing Cone-Beam CT of the Knee Joint Using Fiducial Markers. In Proceedings of the 2017 IEEE 14th International Symposium on Biomedical Imaging (ISBI 2017), Melbourne, Australia, 18–21 April 2017; pp. 494–497.

- Unberath, M.; Choi, J.H.; Berger, M.; Maier, A.; Fahrig, R. Image-based compensation for involuntary motion in weight-bearing C-arm cone-beam CT scanning of knees. Proc. SPIE Med. Imaging 2015, 9413, 94130D.

- Sisniega, A.; Stayman, J.; Yorkston, J.; Siewerdsen, J.; Zbijewski, W. Motion compensation in extremity cone-beam CT using a penalized image sharpness criterion. Phys. Med. Biol. 2017, 62, 3712.

- Berger, M.; Müller, K.; Aichert, A.; Unberath, M.; Thies, J.; Choi, J.H.; Fahrig, R.; Maier, A. Marker-free motion correction in weight-bearing cone-beam CT of the knee joint. Med. Phys. 2016, 43, 1235–1248.

- Aichert, A.; Wang, J.; Schaffert, R.; Dörfler, A.; Hornegger, J.; Maier, A. Epipolar Consistency in Fluoroscopy for Image-Based Tracking. Proc. BMVC 2015, 82.1–82.10.

- Berger, M.; Xia, Y.; Aichinger, W.; Mentl, K.; Unberath, M.; Aichert, A.; Riess, C.; Hornegger, J.; Fahrig, R.; Maier, A. Motion compensation for cone-beam CT using Fourier consistency conditions. Phys. Med. Biol. 2017, 62, 7181–7215.

- Bier, B.; Aichert, A.; Felsner, L.; Unberath, M.; Levenston, M.; Gold, G.; Fahrig, R.; Maier, A. Epipolar Consistency Conditions for Motion Correction in Weight-Bearing Imaging. In Bildverarbeitung für die Medizin; Springer: Berlin/Heidelberg, Germany, 2017; pp. 209–214.

- Bauer, S.; Seitel, A.; Hofmann, H.G.; Blum, T.; Wasza, J.; Balda, M.; Meinzer, H.P.; Navab, N.; Hornegger, J.; Maier-Hein, L. Real-Time Range Imaging in Health Care: A Survey. In Time-of-Flight and Depth Imaging. Sensors, Algorithms, and Applications; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2013; Volume 8200, pp. 228–254.

- Fischer, M.; Fuerst, B.; Lee, S.C.; Fotouhi, J.; Habert, S.; Weidert, S.; Euler, E.; Osgood, G.; Navab, N. Preclinical usability study of multiple augmented reality concepts for K-wire placement. Int. J. Comput. Assist. Radiol. Surg. 2016, 11, 1007–1014.

- Navab, N.; Bani-Kashemi, A.; Mitschke, M. Merging visible and invisible: Two Camera-Augmented Mobile C-arm (CAMC) applications. In Proceedings of the 2nd IEEE and ACM International Workshop on Augmented Reality, San Francisco, CA, USA, 20–21 October 1999; pp. 134–141.

- McNamara, J.E.; Pretorius, P.H.; Johnson, K.; Mukherjee, J.M.; Dey, J.; Gennert, M.A.; King, M.A. A flexible multicamera visual-tracking system for detecting and correcting motion-induced artifacts in cardiac SPECT slices. Med. Phys. 2009, 36, 1913–1923.

- Geimer, T.; Unberath, M.; Taubmann, O.; Bert, C.; Maier, A. Combination of Markerless Surrogates for Motion Estimation in Radiation Therapy. In Proceedings of the Computer Assisted Radiology and Surgery (CARS) 2016: 30th International Congress and Exhibition, Heidelberg, Germany, 21–25 June 2016; pp. 59–60.

- Fotouhi, J.; Fuerst, B.; Wein, W.; Navab, N. Can real-time RGBD enhance intraoperative Cone-Beam CT? Int. J. Comput. Assist. Radiol. Surg. 2017, 12, 1211–1219.

- Bier, B.; Unberath, M.; Geimer, T.; Maier, J.; Gold, G.; Levenston, M.; Fahrig, R.; Maier, A. Motion Compensation Using Range Imaging in C-Arm Cone-Beam CT. In Proceedings of the Annual Conference on Medical Image Understanding and Analysis, Edinburgh, UK, 11–13 July 2017; pp. 561–570.

- Bellekens, B.; Spruyt, V.; Weyn, M. A Survey of Rigid 3D Pointcloud Registration Algorithms. In Proceedings of the AMBIENT 2014, The Fourth International Conference on Ambient Computing, Applications, Services and Technologies, Rome, Italy, 24–28 August 2014; pp. 8–13.

- Ravikumar, N.; Gooya, A.; Frangi, A.F.; Taylor, Z.A. Generalised Coherent Point Drift for Group-Wise Registration of Multi-dimensional Point Sets. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Quebec City, QC, Canada, 10–14 September 2017; pp. 309–316.

- Habert, S.; Gardiazabal, J.; Fallavollita, P.; Navab, N. RGBDX: First design and experimental validation of a mirror-based RGBD X-ray imaging system. In Proceedings of the 2015 IEEE International Symposium on Mixed and Augmented Reality, Fukuoka, Japan, 29 September–3 October 2015; pp. 13–18.

- Zeng, G.L. Medical Image Reconstruction: A Conceptual Tutorial; Springer: New York, NY, USA, 2010.

- Strobel, N.K.; Heigl, B.; Brunner, T.M.; Schütz, O.; Mitschke, M.M.; Wiesent, K.; Mertelmeier, T. Improving 3D Image Quality of X-ray C-Arm Imaging Systems by Using Properly Designed Pose Determination Systems for Calibrating the Projection Geometry. Proc. SPIE Med. Imaging 2003, 5030, 943–954.

- Hartley, R.I.; Zisserman, A. Multiple View Geometry in Computer Vision, 2nd ed.; Cambridge University Press: Cambridge, UK, 2004.

- Wasenmüller, O.; Stricker, D. Comparison of Kinect v1 and v2 Depth Images in Terms of Accuracy and Precision. In Proceedings of the Asian Conference on Computer Vision, Taipei, Taiwan, 20–24 November 2016; pp. 34–45.

- Peter, F.; Placht, S.; Balda, M.; Schaller, C.; Hofmann, H.; Maier, A.; Riess, C. A Comparative Error Analysis of Current Time-of-Flight Sensors. IEEE Trans. Comput. Imaging 2015, 2, 27–41.

- Maier, A.; Hofmann, H.G.; Berger, M.; Fischer, P.; Schwemmer, C.; Wu, H.; Müller, K.; Hornegger, J.; Choi, J.H.; Riess, C.; et al. CONRAD—A software framework for cone-beam imaging in radiology. Med. Phys. 2013, 40, 111914.

- Ravikumar, N.; Gooya, A.; Çimen, S.; Frangi, A.F.; Taylor, Z.A. A multi-resolution t-mixture model approach to robust group-wise alignment of shapes. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Quebec City, QC, Canada, 10–14 September 2016; pp. 142–149.

- Feldkamp, L.A.; Davis, L.C.; Kress, J.W. Practical cone-beam algorithm. J. Opt. Soc. Am. A 1984, 1, 612.

- Segars, W.P.; Sturgeon, G.; Mendonca, S.; Grimes, J.; Tsui, B.M.W. 4D XCAT phantom for multimodality imaging research. Med. Phys. 2010, 37, 4902.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Klein, S.; Staring, M.; Murphy, K.; Viergever, M.A.; Pluim, J.P.W. elastix: A toolbox for intensity-based medical image registration. IEEE Trans. Med. Imaging 2010, 29, 196–205.

- Rausch, J.; Maier, A.; Fahrig, R.; Choi, J.H.; Hinshaw, W.; Schebesch, F.; Haase, S.; Wasza, J.; Hornegger, J.; Riess, C. Kinect-based correction of overexposure artifacts in knee imaging with C-Arm CT systems. Int. J. Biomed. Imaging 2016, 2016.

- Chun, S.; Bernhard, L.; Fotouhi, J.; Fischer, M.; Osgood, G.; Navab, N. Calibration of RGBD camera and cone-beam CT for 3D intra-operative mixed reality visualization. Int. J. Comput. Assist. Radiol. Surg. 2016, 11, 967–975.

- Endres, F.; Hess, J.; Engelhard, N.; Sturm, J.; Cremers, D.; Burgard, W. An evaluation of the RGB-D SLAM system. In Proceedings of the 2012 IEEE International Conference on Robotics and Automation, Saint Paul, MN, USA, 14–18 May 2012; pp. 1691–1696.

- Bier, B.; Unberath, M.; Geimer, T.; Ravikumar, N.; Gold, G.; Fahrig, R.; Maier, A. Fusing Motion Estimates for CBCT reconstruction. In Proceedings of the 2017 IEEE Nuclear Science Symposium and Medical Imaging Conference (IEEE NSS/MIC 2017), Atlanta, GA, USA, 21–28 October 2017.

- Willomitzer, F.; Häusler, G. Single-shot 3D motion picture camera with a dense point cloud. Opt. Express 2017, 25, 23451–23464.

| Method | Dataset 1 | Dataset 1 with Noise | Dataset 2 |
|---|---|---|---|
| Uncorrected | 0.93 | - | 0.81 |
| Marker-based [1] | 0.98 | - | 0.98 |
| ICP static | 0.99 | 0.95 | 0.99 |
| Probabilistic static | 0.98 | 0.98 | 0.99 |
| ICP dynamic | 0.94 | 0.94 | 0.95 |
| Probabilistic dynamic | 0.96 | 0.97 | 0.94 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Bier, B.; Ravikumar, N.; Unberath, M.; Levenston, M.; Gold, G.; Fahrig, R.; Maier, A. Range Imaging for Motion Compensation in C-Arm Cone-Beam CT of Knees under Weight-Bearing Conditions. J. Imaging 2018, 4, 13. https://doi.org/10.3390/jimaging4010013
